Organizations typically spend 60–80% of their IT budgets maintaining existing systems, leaving only 20–40% for new growth initiatives. For companies running Informatica PowerCenter, this imbalance is especially acute. Some industry estimates put the average cost of operating and maintaining a single legacy system at around $30 million, which is why Informatica to Databricks migration has become a top priority for data-driven enterprises looking to escape this financial trap.

Legacy ETL setups that once seemed stable now fall behind modern needs. They struggle with rising data volumes, slow real-time processing, and limited support for AI workloads. Teams end up repairing old pipelines instead of building new features, while costs climb each year.

This blog covers the key benefits, common challenges, and a clear step-by-step migration process. It also explains migration tools and automation, testing and validation, cost and ROI, and post-migration operations and governance.


Why Are Enterprises Migrating from Informatica to Databricks?

The shift from Informatica PowerCenter to Databricks isn’t just a technology upgrade; it’s a fundamental business transformation. Organizations are making this move to address critical limitations that prevent them from competing in today’s data-driven economy.

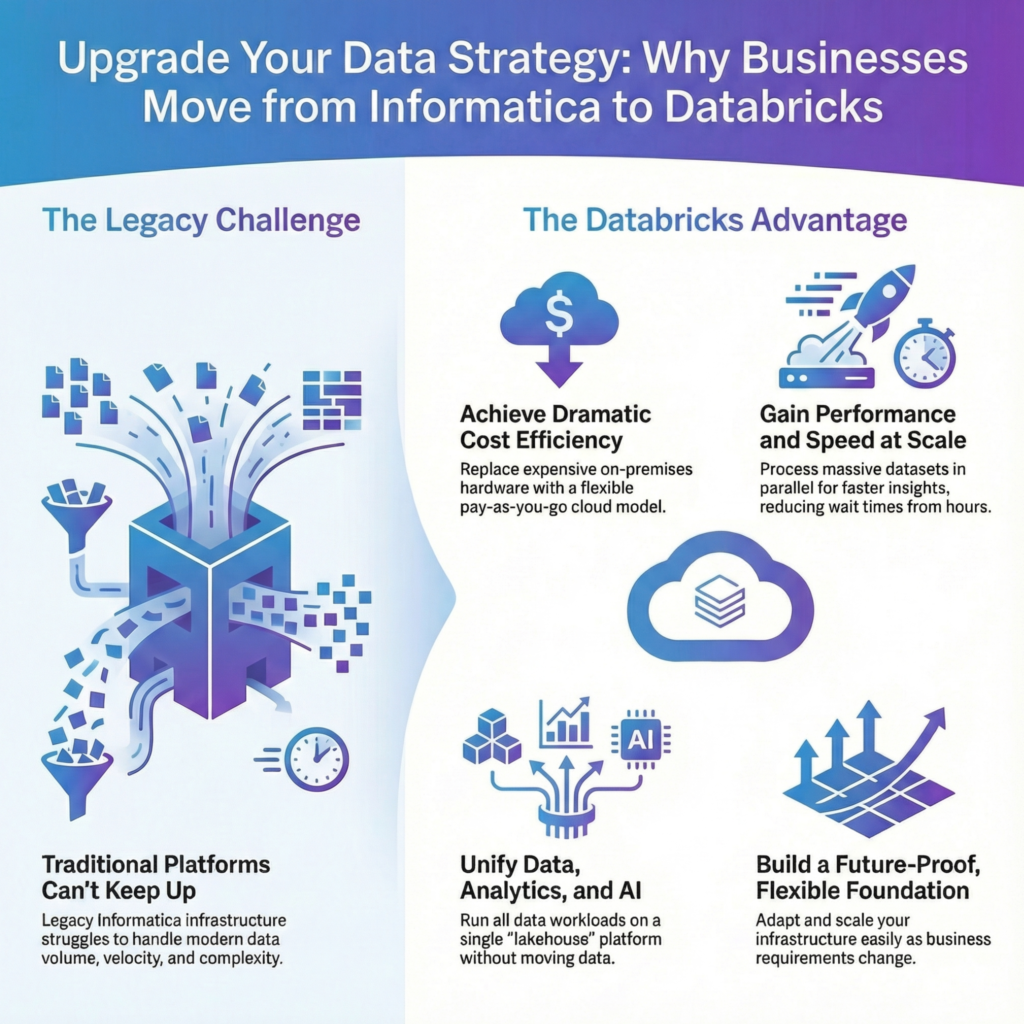

1. The Legacy Infrastructure Challenge

Informatica PowerCenter was built for a different era of data management. While it served organizations well for decades, the platform now struggles with modern requirements:

- On-premises infrastructure demands significant capital investment in hardware

- Maintenance teams spend countless hours on system upkeep instead of innovation

- Scaling to handle growing data volumes becomes prohibitively expensive

- Legacy systems weren’t designed for real-time analytics and AI workloads

These limitations force businesses to choose between maintaining outdated infrastructure and embracing modern cloud platforms built for today’s data demands.

2. Cost Efficiency That Transforms Bottom Lines

One of the most compelling drivers of migration is cost reduction. By moving to Databricks, organizations can retire expensive on-premises infrastructure and shift to a pay-as-you-go cloud model. This approach delivers several financial benefits:

- Only pay for the resources you use

- No hardware procurement or maintenance costs

- Automatic scaling prevents paying for idle infrastructure

- Typical ROI achieved within 12-18 months

Traditional data warehouses must be provisioned for peak capacity, even during periods of low usage. Databricks clusters can autoscale compute up and down with demand. As a result, far less capacity sits idle, and you pay closer to what you actually use.
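One rough way to see the effect is to count billed node-hours. The plain-Python sketch below compares two clusters:

- one sized for the peak hour around the clock;
- one whose size follows hourly demand.

The demand profile is hypothetical. The sketch also assumes cost is proportional to node-hours. Actual Databricks billing (DBUs plus cloud VM charges) and autoscaling behavior are more nuanced.

```python
def fixed_node_hours(hourly_demand: list[int]) -> int:
    """Node-hours billed when the cluster is always sized for the peak hour."""
    return max(hourly_demand) * len(hourly_demand)


def autoscaled_node_hours(hourly_demand: list[int], min_nodes: int = 1) -> int:
    """Node-hours billed when capacity follows demand, never dropping below min_nodes."""
    return sum(max(d, min_nodes) for d in hourly_demand)


# Hypothetical 24-hour profile: quiet overnight, a nightly batch spike,
# steady daytime queries, and a lighter evening load.
demand = [1] * 6 + [8] * 2 + [3] * 10 + [2] * 6

fixed = fixed_node_hours(demand)
scaled = autoscaled_node_hours(demand)
print(f"fixed={fixed} autoscaled={scaled} idle share={1 - scaled / fixed:.0%}")
```

With this made-up profile, roughly two-thirds of the fixed cluster’s node-hours would be idle capacity.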

3. Performance and Speed at Scale

Modern businesses can’t afford to wait hours for data insights. Databricks leverages Apache Spark’s distributed computing to process massive datasets in parallel:

- Data processing can run 5–10x faster than on legacy systems, depending on the workload

- Query response times can drop from hours to seconds

- Real-time streaming data becomes accessible for immediate analysis

- Complex transformations that once ran overnight can complete in minutes

What once required overnight batch processing can now deliver results in near real time, enabling faster decision-making across the organization.

4. Unified Platform for All Data Workloads

Perhaps the most transformative aspect is Databricks’ unified approach. Instead of maintaining separate systems for different functions, everything consolidates into one platform. This means:

- Data warehousing, data lakes, and ETL processing in one place

- Data engineers, analysts, and scientists collaborate seamlessly

- No data movement between systems for different workloads

- Single governance model across all data types

The lakehouse architecture combines the strengths of data lakes and data warehouses. It lets you store structured, semi-structured, and unstructured data together, and run analytics and AI workloads without moving data between systems.

5. Built for AI and Machine Learning

While Informatica excels at traditional ETL, it wasn’t designed for AI-driven enterprises. Databricks, by contrast, removes the barriers between data engineering and data science:

- Native support for machine learning frameworks

- Integrated notebooks for collaborative development

- MLflow for end-to-end ML lifecycle management

- Build, train, and deploy models where data already lives

Organizations pursuing AI initiatives can shorten the path from concept to production from months to weeks, dramatically accelerating innovation cycles.

6. Future-Proof Flexibility and Scalability

Technology landscapes evolve rapidly, and businesses need infrastructure that adapts without forcing complete rebuilds. Databricks provides this flexibility through:

- Support for major cloud providers (AWS, Azure, Google Cloud)

- Open-source foundation (Apache Spark, Delta Lake, MLflow) that reduces vendor lock-in

- Seamless scaling as data volumes and user bases grow

- Access to cutting-edge innovations from the data community

As your requirements expand, Databricks scales without requiring architectural redesigns or expensive infrastructure overhauls.

Top 7 Challenges in Informatica to Databricks Migration

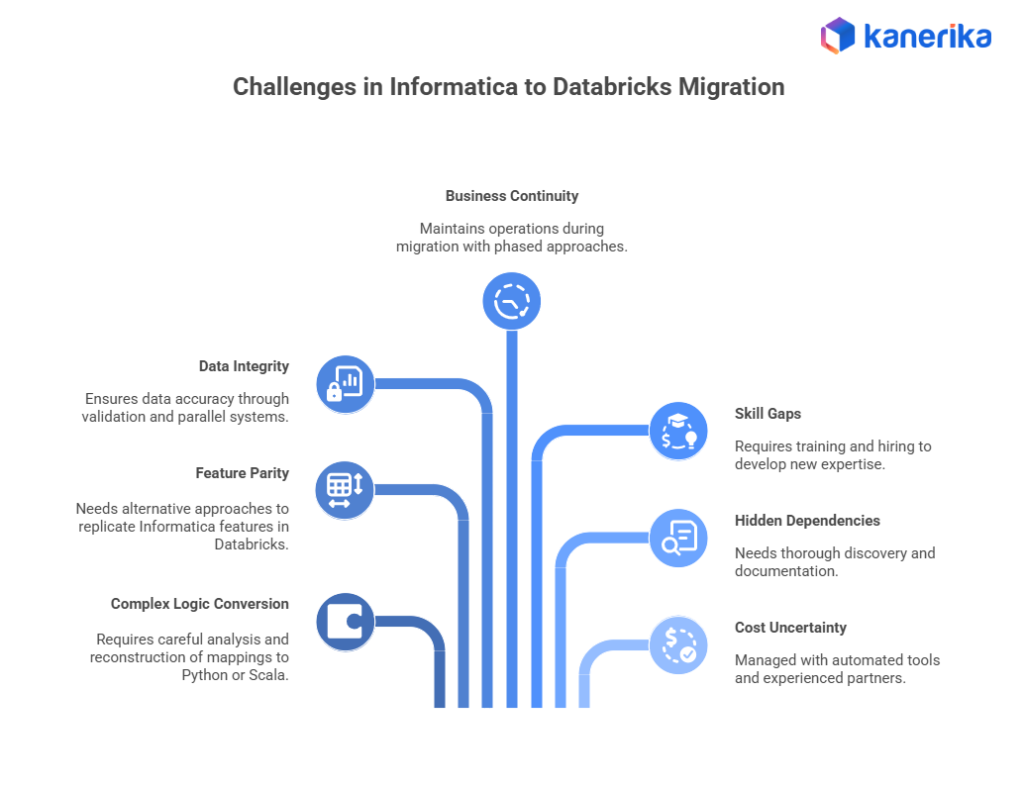

1. Complex Transformation Logic Conversion

Informatica PowerCenter uses a visual, GUI-based approach to building ETL workflows. Converting these into code-based Databricks pipelines requires careful analysis and reconstruction:

- Hundreds or thousands of mappings need translation to Python or Scala

- Complex transformation logic must be rewritten for distributed computing

- Nested mapplets and reusable components require special handling

- Custom transformations may not have direct equivalents in Spark

Moreover, the challenge intensifies when dealing with mappings built over many years by different developers, often with limited documentation.
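To illustrate what "rewriting" a mapping involves, consider a hypothetical Expression transformation with two output ports:

- `NET_PRICE = IIF(ISNULL(DISCOUNT), PRICE, PRICE * (1 - DISCOUNT))`
- `REGION_NAME = DECODE(REGION, 'N', 'North', 'S', 'South', 'Unknown')`

The sketch below expresses the same row-level logic as plain Python functions, so the semantics are easy to test. The port names and rules are invented for illustration. It is not the output of any particular conversion tool.

```python
from typing import Optional

# Hypothetical Informatica ports being translated:
#   NET_PRICE   = IIF(ISNULL(DISCOUNT), PRICE, PRICE * (1 - DISCOUNT))
#   REGION_NAME = DECODE(REGION, 'N', 'North', 'S', 'South', 'Unknown')

REGION_CODES = {"N": "North", "S": "South"}  # DECODE search/result pairs


def net_price(price: float, discount: Optional[float]) -> float:
    # IIF(ISNULL(DISCOUNT), ...) becomes an explicit None check
    return price if discount is None else price * (1 - discount)


def region_name(region: Optional[str]) -> str:
    # DECODE falls through to its default when no search value matches
    return REGION_CODES.get(region, "Unknown")


def apply_expression(row: dict) -> dict:
    """Return the input row plus the two derived output ports."""
    return {
        **row,
        "NET_PRICE": net_price(row["PRICE"], row.get("DISCOUNT")),
        "REGION_NAME": region_name(row.get("REGION")),
    }


print(apply_expression({"PRICE": 100.0, "DISCOUNT": 0.25, "REGION": "N"}))
```

In a Databricks notebook, the same logic would more typically be written with PySpark column expressions rather than a per-row function. For example, `F.when(F.col("DISCOUNT").isNull(), F.col("PRICE")).otherwise(F.col("PRICE") * (1 - F.col("DISCOUNT")))` keeps the work inside Spark’s optimizer.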

2. Ensuring Feature Parity and Functional Equivalence

Not every Informatica feature has a one-to-one match in Databricks. Consequently, organizations must find alternative approaches to replicate functionality:

- Session-level parameters and variables need new implementation strategies

- Lookup transformations require redesign for optimal Spark performance

- Error handling mechanisms work differently in distributed environments

- Workflow orchestration follows different patterns in cloud-native platforms

Furthermore, teams often discover that simply translating code isn’t enough. They must rethink how certain operations work in a distributed computing environment to achieve the same business outcomes effectively.
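The connected Lookup transformation is a typical example:

- In Informatica, it is evaluated row by row against a cached lookup table.
- In Spark, the idiomatic equivalent is usually a left join, often with a broadcast hint when the lookup table is small.

The plain-Python sketch below mirrors those left-join semantics. Unmatched rows get `None` (NULL), which reflects the usual behavior of an unmatched lookup when no port default values are configured.

A few assumptions apply:

- The table and column names are hypothetical.
- The lookup key is assumed unique.
- Informatica’s multiple-match policies would need explicit handling.

```python
def lookup_as_left_join(rows, lookup_rows, key, return_cols):
    """Enrich each input row with return_cols from lookup_rows, like a left join.

    Assumes `key` is unique in lookup_rows (a one-to-one lookup).
    """
    # Build the "lookup cache" once, analogous to a broadcast hash table in Spark.
    cache = {lk[key]: lk for lk in lookup_rows}
    enriched = []
    for row in rows:
        match = cache.get(row[key])
        # Unmatched rows receive None for every returned column.
        extra = {c: (match[c] if match is not None else None) for c in return_cols}
        enriched.append({**row, **extra})
    return enriched


orders = [{"CUST_ID": 1, "AMOUNT": 50}, {"CUST_ID": 3, "AMOUNT": 20}]
customers = [{"CUST_ID": 1, "SEGMENT": "Retail"}, {"CUST_ID": 2, "SEGMENT": "SMB"}]
print(lookup_as_left_join(orders, customers, "CUST_ID", ["SEGMENT"]))
```

In PySpark, the equivalent would be along the lines of `orders.join(F.broadcast(customers), on="CUST_ID", how="left")`.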

3. Data Integrity and Validation Requirements

Maintaining data accuracy throughout migration is non-negotiable. After all, any discrepancies between source and target systems can lead to incorrect business decisions:

- Every record must be validated between old and new systems

- Complex data types may behave differently across platforms

- Aggregations and calculations need verification at multiple levels

- Historical data must remain consistent after migration

As a result, organizations typically run parallel systems during migration to continuously compare outputs. This validation process can be time-consuming but is essential for building stakeholder confidence in the new platform.
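During parallel runs, a lightweight first check is to compare the row count and an order-independent fingerprint of each output. This matters because Spark does not guarantee the row order Informatica produced. The sketch works as follows:

- It hashes a canonical string form of each row.
- It sorts those hashes.
- It hashes the sorted list, so duplicated or missing rows still change the result.

It assumes both outputs have already been normalized to the same Python types (decimals, timestamps, and so on). The sample rows are hypothetical.

```python
import hashlib


def row_digest(row: dict) -> str:
    # Canonical form: columns sorted by name so column order does not matter.
    canonical = "|".join(f"{k}={row[k]!r}" for k in sorted(row))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def table_fingerprint(rows: list[dict]) -> tuple[int, str]:
    """Return (row_count, order-independent fingerprint) for a result set."""
    digests = sorted(row_digest(r) for r in rows)
    combined = hashlib.sha256("".join(digests).encode("utf-8")).hexdigest()
    return len(rows), combined


def outputs_match(legacy_rows: list[dict], migrated_rows: list[dict]) -> bool:
    return table_fingerprint(legacy_rows) == table_fingerprint(migrated_rows)


legacy = [{"id": 1, "amt": 10}, {"id": 2, "amt": 20}]
migrated = [{"amt": 20, "id": 2}, {"amt": 10, "id": 1}]  # same data, different order
print(outputs_match(legacy, migrated))
```

A fingerprint only says whether the outputs differ, not where they differ, so it works best as a fast gate before more detailed comparisons.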


4. Managing Business Continuity During Transition

One of the biggest concerns for enterprises is maintaining operations while migrating critical data pipelines. Indeed, production systems can’t simply shut down for weeks or months:

- Critical reports and dashboards must continue delivering results

- Downstream systems depending on data feeds need uninterrupted service

- Users require access to data throughout the migration period

- Any failures could impact revenue and customer satisfaction

Therefore, most organizations adopt a phased migration approach, moving workflows in waves while keeping legacy systems operational. Moreover, this requires careful coordination and planning to manage dependencies effectively.
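Dependency-aware wave planning can be sketched with a topological sort. Each wave contains only workflows whose upstream dependencies were migrated in earlier waves. The workflow names and dependency map are hypothetical inputs that would come from your assessment. The sketch uses Python’s standard `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter


def migration_waves(depends_on: dict[str, set[str]]) -> list[list[str]]:
    """Group workflows into waves; each wave depends only on earlier waves."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # raises graphlib.CycleError if dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything whose upstreams are done
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical workflow -> upstream workflows it consumes data from.
deps = {
    "wf_load_customers": set(),
    "wf_load_orders": set(),
    "wf_order_facts": {"wf_load_customers", "wf_load_orders"},
    "wf_sales_dashboard_feed": {"wf_order_facts"},
}
for number, wave in enumerate(migration_waves(deps), start=1):
    print(number, wave)
```

Within each wave, the legacy workflows keep running until the migrated versions have been validated in parallel.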

5. Skill Gaps and Learning Curves

Databricks operates fundamentally differently from Informatica, which means teams must develop entirely new expertise:

- Developers accustomed to GUI tools must learn Spark programming

- Understanding distributed computing concepts becomes essential

- Cloud infrastructure knowledge replaces on-premises expertise

- DevOps practices for continuous integration and deployment are new for many teams

Additionally, training existing staff takes time and resources. Meanwhile, hiring experienced Databricks developers can be competitive and expensive, creating resource constraints during the critical migration period.

6. Hidden Dependencies and Undocumented Logic

Legacy systems often contain surprises that emerge only during migration. In fact, business rules may be hidden in unexpected places:

- Transformations embedded in SQL stored procedures outside Informatica

- Manual processes that supplement automated workflows

- Undocumented assumptions about data quality or formats

- Integration points with other systems not captured in formal documentation

Unfortunately, these hidden dependencies can derail migration timelines when discovered late in the process. That’s why thorough discovery and documentation during the assessment phase are absolutely critical.
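Part of this discovery can be automated by scanning repository exports for logic that lives outside the visual mapping. Examples include SQL overrides and calls to stored procedures.

The element names (`MAPPING`, `TRANSFORMATION`, `TABLEATTRIBUTE`) and attribute names such as `Sql Query` and `Lookup Sql Override` are assumptions based on typical PowerCenter XML exports. Check them against your own files. The sample export is hypothetical, and the sketch does not scan mapplets.

```python
import xml.etree.ElementTree as ET

# Attribute names assumed from typical PowerCenter XML exports; verify on your own files.
SQL_ATTRIBUTES = {"Sql Query", "Lookup Sql Override", "Pre SQL", "Post SQL"}


def find_hidden_sql(export_xml: str) -> list[tuple[str, str, str]]:
    """Return (mapping, transformation, finding) triples for SQL overrides and stored procedures."""
    root = ET.fromstring(export_xml)
    findings = []
    for mapping in root.iter("MAPPING"):
        for tx in mapping.iter("TRANSFORMATION"):
            if tx.get("TYPE") == "Stored Procedure":
                findings.append((mapping.get("NAME"), tx.get("NAME"), "Stored Procedure"))
            for attr in tx.iter("TABLEATTRIBUTE"):
                # Only report attributes that actually carry SQL text.
                if attr.get("NAME") in SQL_ATTRIBUTES and attr.get("VALUE", "").strip():
                    findings.append((mapping.get("NAME"), tx.get("NAME"), attr.get("NAME")))
    return findings


sample = """<POWERMART><REPOSITORY><FOLDER NAME="SALES">
<MAPPING NAME="m_load_orders">
  <TRANSFORMATION NAME="SQ_ORDERS" TYPE="Source Qualifier">
    <TABLEATTRIBUTE NAME="Sql Query" VALUE="SELECT * FROM ORDERS WHERE STATUS = 'OPEN'"/>
  </TRANSFORMATION>
  <TRANSFORMATION NAME="SP_REFRESH_DIM" TYPE="Stored Procedure"/>
  <TRANSFORMATION NAME="EXP_CLEAN" TYPE="Expression"/>
</MAPPING>
</FOLDER></REPOSITORY></POWERMART>"""
print(find_hidden_sql(sample))
```

Each finding points to business rules that have to be read in the database, not the mapping, and carried over explicitly.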

7. Cost and Timeline Uncertainty

Without proper planning and tooling, migration projects can easily exceed budgets and schedules:

- Manual code conversion is labor-intensive and slow

- Testing and validation require significant time investment

- Unexpected complexity emerges as migration progresses

- Resource requirements often exceed initial estimates

How Kanerika’s FLIP Accelerates Informatica to Databricks Migration

Migration doesn’t have to be a months-long struggle with unpredictable costs and delays. Kanerika’s FLIP platform transforms the traditionally complex, manual migration process into a streamlined, automated journey that delivers faster and more reliable results.

The FLIP Migration Advantage

FLIP specifically addresses the unique challenges of Informatica to Databricks transitions through intelligent automation. The platform delivers several key advantages:

- 80% automation of transformation effort – Your team spends less time on tedious code conversion

- Business logic preservation – All transformation rules and data quality checks migrate intact

- Optimized for Spark architecture – Code is restructured to leverage distributed computing

- Reduced migration risk – Automated validation ensures accuracy throughout the process

Unlike generic migration tools, FLIP understands PowerCenter’s nuances and converts assets into production-ready Databricks pipelines while maintaining functional equivalence.

Phase 1: FIRE – Intelligent Repository Extraction

Before migration begins, you need comprehensive access to your PowerCenter repository. FIRE serves as Kanerika’s extraction foundation:

1. Secure Connection to PowerCenter: FIRE connects to your Informatica environment through pmrep, PowerCenter’s repository command-line utility. This provides secure access to your repository without disrupting existing operations.

2. Preview and Selection: Instead of manually exporting components one by one, FIRE allows you to preview your entire repository and select specific mappings, workflows, and business logic you want to migrate.

3. Complete Dependency Packaging: FIRE identifies not just the mappings you select but also all related dependencies:

- Mapplets and reusable transformations

- Sessions and workflow configurations

- Parameters and variables

- Connection objects and metadata

Everything gets organized into a structured ZIP format, maintaining all relationships between components to ensure nothing gets lost in the migration process.
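The general idea behind dependency packaging can be sketched as a transitive closure over an object graph:

1. Start from the selected objects.
2. Follow references until nothing new is found.
3. Write the full set into an archive alongside a manifest.

This is not FIRE’s implementation or file format. The object names, the `refs` graph, and the manifest layout are hypothetical.

```python
import io
import json
import zipfile


def collect_dependencies(selected: list[str], refs: dict[str, list[str]]) -> set[str]:
    """Return the selected objects plus everything they reference, transitively."""
    seen: set[str] = set()
    stack = list(selected)
    while stack:
        obj = stack.pop()
        if obj in seen:
            continue
        seen.add(obj)
        stack.extend(refs.get(obj, []))
    return seen


def build_package(selected: list[str], refs: dict[str, list[str]],
                  definitions: dict[str, str]) -> bytes:
    """Write each object's definition plus a manifest into an in-memory ZIP."""
    objects = sorted(collect_dependencies(selected, refs))
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as zf:
        manifest = {"selected": selected, "objects": objects}
        zf.writestr("manifest.json", json.dumps(manifest, indent=2))
        for name in objects:
            zf.writestr(f"objects/{name}.xml", definitions[name])
    return buffer.getvalue()


# Hypothetical object graph: each object lists what must travel with it.
refs = {
    "wf_daily_sales": ["s_m_load_sales"],
    "s_m_load_sales": ["m_load_sales", "conn_oracle_src"],
    "m_load_sales": ["mplt_clean_address", "lkp_customer"],
}
definitions = {n: f"<object name='{n}'/>" for n in collect_dependencies(["wf_daily_sales"], refs)}
package = build_package(["wf_daily_sales"], refs, definitions)
with zipfile.ZipFile(io.BytesIO(package)) as zf:
    print(zf.namelist())
```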

Phase 2: FLIP – Automated Code Transformation

Once your Informatica assets are extracted, FLIP’s intelligent conversion engine takes over the heavy lifting.

1. Simple Upload and Configuration: You upload your FIRE package to the FLIP platform, select your target Databricks workspace, and choose your preferred output language—Python Spark or Scala Spark.

2. Intelligent Analysis and Conversion: FLIP analyzes your Informatica objects and performs sophisticated transformations:

- Mappings convert to optimized Databricks notebooks

- Complex transformations become efficient Spark code

- Workflow dependencies are preserved and restructured

- Business logic maintains accuracy while gaining performance benefits

3. Optimization for Distributed Architecture: The conversion goes beyond simple translation. FLIP restructures your code to take advantage of Spark’s distributed computing capabilities, ensuring better performance than a direct lift-and-shift approach.

Phase 3: Deployment and Validation

FLIP ensures your migrated code is ready for production with comprehensive deployment support.

1. Organized Deployment Packages: Access your migrated scripts immediately through structured packages that include:

- Converted Databricks notebooks

- Detailed migration logs and reports

- Documented source code

- Test templates for validation

2. Built-in Validation Framework: FLIP provides extensive validation capabilities to ensure migration accuracy:

- Schema reconciliation between source and target

- Data validation and quality checks

- Error handling verification

- Performance benchmarking

3. Seamless Integration: Deploy validated workflows to your Databricks production environment with confidence, knowing that business logic has been preserved and optimized.
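Independent of any particular tool, schema reconciliation can be approximated in three steps:

1. Translate each legacy column type into its intended Spark type.
2. Compare the resulting column-to-type maps, with column names compared case-insensitively.
3. Report what is missing, unexpected, or mismatched.

The type mapping below is an illustrative assumption, not a recommended standard. Oracle `DATE`, for instance, carries a time component, which is why it maps to `timestamp` here. The table columns are hypothetical.

```python
def reconcile_schemas(source: dict[str, str], target: dict[str, str],
                      type_map: dict[str, str]) -> dict[str, list]:
    """Compare column->type maps after translating source types via type_map."""
    expected = {col.lower(): type_map.get(t.lower(), t.lower()) for col, t in source.items()}
    actual = {col.lower(): t.lower() for col, t in target.items()}
    return {
        "missing_in_target": sorted(expected.keys() - actual.keys()),
        "unexpected_in_target": sorted(actual.keys() - expected.keys()),
        "type_mismatches": sorted(
            (c, expected[c], actual[c])
            for c in expected.keys() & actual.keys()
            if expected[c] != actual[c]
        ),
    }


# Hypothetical legacy table and migrated Delta table.
source = {"ORDER_ID": "NUMBER(10)", "CUSTOMER_NAME": "VARCHAR2(100)",
          "ORDER_DATE": "DATE", "LEGACY_FLAG": "CHAR(1)"}
target = {"order_id": "bigint", "customer_name": "string", "order_date": "date"}
type_map = {"number(10)": "bigint", "varchar2(100)": "string", "date": "timestamp"}
print(reconcile_schemas(source, target, type_map))
```

Here the report flags a dropped column (`legacy_flag`) and a date column that lost its time component.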

Key Benefits of Choosing FLIP

- Dramatic Time Savings: What traditionally takes 6-12 months can be accomplished in weeks, allowing your organization to realize value from Databricks much faster.

- Cost Reduction: Automated migration reduces labor costs by 60-70% compared to manual approaches, while minimizing the risk of expensive errors and rework.

- Minimal Disruption: Phased migration capabilities allow you to continue running critical workloads on Informatica while gradually transitioning to Databricks, keeping downtime to a minimum.

- Full Transparency: Detailed reports and documentation throughout the process give stakeholders complete visibility into migration progress and validation results.

Best Practices for Successful Informatica to Databricks Migration

A successful migration requires more than just converting code—it demands strategic planning, methodical execution, and attention to detail. Following proven best practices ensures your transition is smooth, cost-effective, and delivers the expected business value.

1. Start with Comprehensive Assessment

Before touching any code, conduct a thorough assessment of your PowerCenter landscape. Document every mapping, workflow, and dependency to understand the full scope of what needs to migrate. This inventory should include:

- Total count of mappings, mapplets, and workflows

- Complexity classification (simple, medium, complex)

- Source and target system dependencies

- Data volumes and processing frequencies

- Business criticality of each workflow

Creating this baseline helps you estimate timelines accurately and identify potential challenges before they become problems.
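The complexity classification in the inventory can start as a simple rule-based bucketing. In the sketch below, the profile fields and thresholds are hypothetical and would need tuning against your own estate. They are not an industry standard.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class MappingProfile:
    name: str
    transformations: int   # total transformation count in the mapping
    custom_or_java: int    # custom, Java, or stored-procedure transformations
    has_sql_override: bool


def classify(m: MappingProfile) -> str:
    """Bucket a mapping as simple, medium, or complex (illustrative thresholds)."""
    if m.custom_or_java > 0 or m.transformations > 25:
        return "complex"
    if m.has_sql_override or m.transformations > 10:
        return "medium"
    return "simple"


# Hypothetical inventory rows, e.g. parsed from repository exports.
inventory = [
    MappingProfile("m_stage_customers", 4, 0, False),
    MappingProfile("m_load_orders", 14, 0, True),
    MappingProfile("m_fraud_scoring", 30, 2, True),
]
print(Counter(classify(m) for m in inventory))
```

Counts per bucket, multiplied by an effort estimate per bucket, give a first-cut timeline that can be refined as real conversion data comes in.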

2. Analyze Complexity and Dependencies

Not all workflows are created equal. Some mappings contain straightforward transformations, while others include complex business logic that requires careful handling. Use automated assessment tools to:

- Identify mappings with custom transformations

- Map dependencies between workflows

- Detect hard-coded values that need parameterization

- Find outdated or unused components that can be retired

This analysis reveals which components can be automated and which need manual attention, allowing you to allocate resources appropriately.
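For finding components that can be retired, a first pass is a reachability check. Any mapping not used by a session that belongs to some workflow is a retirement candidate. The object names below are hypothetical. Candidates should still be confirmed against run history and with business owners before anything is dropped.

```python
def find_unused_mappings(mappings: set[str],
                         session_to_mapping: dict[str, str],
                         workflow_sessions: dict[str, list[str]]) -> list[str]:
    """Return mappings not reachable from any workflow via a session."""
    used_sessions = {s for sessions in workflow_sessions.values() for s in sessions}
    used_mappings = {session_to_mapping[s] for s in used_sessions if s in session_to_mapping}
    return sorted(mappings - used_mappings)


mappings = {"m_stage_customers", "m_load_orders_v1", "m_tmp_backfill"}
session_to_mapping = {
    "s_m_stage_customers": "m_stage_customers",
    "s_m_load_orders_v1": "m_load_orders_v1",  # session exists but no workflow runs it
}
workflow_sessions = {"wf_daily": ["s_m_stage_customers"]}
print(find_unused_mappings(mappings, session_to_mapping, workflow_sessions))
```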

3. Adopt a Phased Migration Strategy

Migrating everything at once is risky and overwhelming. Instead, prioritize workflows based on business impact and technical complexity:

- Start with low-risk, high-value workflows to build confidence and demonstrate quick wins

- Move critical production workflows carefully with extensive testing and validation

- Leave complex, tightly coupled systems for later phases after your team gains experience

This approach delivers incremental value while minimizing operational disruption.
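The prioritization above can be made explicit with a simple scoring rule. In the sketch below, the 1–5 scales and the phase cut-offs are hypothetical choices, and the candidate workflows are invented. In practice, the resulting order must also respect the workflow dependencies identified during assessment.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    business_value: int  # 1 (low) .. 5 (high), from stakeholder input
    complexity: int      # 1 (simple) .. 5 (complex), from the assessment


def phase_for(c: Candidate) -> int:
    if c.complexity >= 4:
        return 3  # complex, tightly coupled: later, once the team has experience
    if c.complexity <= 2 and c.business_value >= 3:
        return 1  # low-risk, high-value quick wins
    return 2      # everything else, migrated with extensive parallel testing


def plan_phases(candidates: list[Candidate]) -> list[Candidate]:
    """Order by phase, then higher value first, then lower complexity first."""
    return sorted(candidates, key=lambda c: (phase_for(c), -c.business_value, c.complexity))


candidates = [
    Candidate("wf_finance_close", 5, 5),
    Candidate("wf_marketing_leads", 4, 1),
    Candidate("wf_ops_report", 2, 2),
    Candidate("wf_inventory", 4, 3),
]
for c in plan_phases(candidates):
    print(phase_for(c), c.name)
```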

4. Choose the Right Conversion Approach

Balance Automation with Manual Refinement: Automated migration tools handle the bulk of code conversion, but strategic manual refinement optimizes the results. The best approach combines both:

- Use automation for standard transformations to save time and reduce errors

- Manually optimize complex workflows to leverage Databricks-specific features

- Refactor inefficient legacy patterns rather than simply replicating them

- Document custom changes for future maintenance and troubleshooting

Remember that migration is an opportunity to improve, not just replicate what exists.

Select Appropriate Target Language: Databricks supports both Python and Scala for Spark development. Choose based on your team’s skills and organizational standards:

- Python offers easier learning curves and broader data science integration

- Scala provides better type safety and slightly better performance for some operations

- Consider mixing languages strategically based on use case requirements

Consistency matters more than the specific choice—pick one primary language and stick with it for maintainability.

5. Implement Rigorous Testing and Validation

Establish Multiple Validation Layers: Data accuracy is non-negotiable. Implement comprehensive validation at every stage:

Schema Validation

- Verify table structures match between source and target

- Confirm data types convert correctly

- Check column mappings and naming conventions

Data Validation

- Compare row counts between Informatica and Databricks outputs

- Validate aggregations and calculations at summary levels

- Perform cell-by-cell comparisons for critical datasets

- Test edge cases and boundary conditions
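For critical datasets, a cell-by-cell comparison matches rows on a primary key and reports every differing value. Floating-point columns are compared with a tolerance, because distributed aggregation can change summation order.

This plain-Python sketch suits small or sampled datasets. On large Databricks tables, the same idea would usually be expressed as a full outer join in Spark. The key column, columns, and tolerance are hypothetical.

```python
import math


def cell_diffs(legacy: list[dict], migrated: list[dict], key: str,
               rel_tol: float = 1e-9) -> list[tuple]:
    """Compare two result sets cell by cell, matching rows on a primary key."""
    left = {r[key]: r for r in legacy}
    right = {r[key]: r for r in migrated}
    diffs = []
    for k in sorted(left.keys() | right.keys()):
        if k not in right or k not in left:
            diffs.append((k, None, "missing in migrated" if k not in right else "only in migrated"))
            continue
        for col in sorted(left[k].keys() | right[k].keys()):
            a, b = left[k].get(col), right[k].get(col)
            if isinstance(a, float) and isinstance(b, float):
                # Tolerate tiny floating-point differences from reordered arithmetic.
                if not math.isclose(a, b, rel_tol=rel_tol):
                    diffs.append((k, col, (a, b)))
            elif a != b:
                diffs.append((k, col, (a, b)))
    return diffs


legacy = [{"id": 1, "total": 0.1 + 0.2, "status": "OPEN"},
          {"id": 2, "total": 5.0, "status": "CLOSED"},
          {"id": 3, "total": 1.0, "status": "OPEN"}]
migrated = [{"id": 1, "total": 0.3, "status": "OPEN"},
            {"id": 2, "total": 5.0, "status": "Closed"},
            {"id": 4, "total": 2.0, "status": "OPEN"}]
for diff in cell_diffs(legacy, migrated, "id"):
    print(diff)
```

In this example, the case change in `status` is exactly the kind of edge case a row-count check would miss.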

Performance Validation

- Benchmark processing times against expectations

- Monitor resource utilization and costs

- Identify and optimize bottlenecks early

Automated validation tools accelerate this process while ensuring consistency across all workflows.

6. Plan for Post-Migration Optimization

Successfully migrating code is just the beginning. Plan for continuous improvement:

- Monitor performance metrics to identify optimization opportunities

- Leverage Databricks-native features that weren’t possible in Informatica

- Implement medallion architecture for better data quality management

- Explore AI/ML capabilities now that your data is in Databricks

The real value comes from modernizing workflows to take full advantage of what Databricks offers, not just replicating legacy patterns.

Why Choose Kanerika for Informatica to Databricks Migration Services

Kanerika brings specialized expertise and proven automation to make your Informatica to Databricks migration faster, safer, and more cost-effective. As a Microsoft Solutions Partner for Data and AI with deep Databricks experience, we combine technical excellence with strategic insight to deliver migrations that exceed expectations. Moreover, our proprietary FLIP platform automates up to 80% of the migration effort, dramatically reducing timelines from months to weeks while preserving business logic and ensuring zero data loss. Unlike generic consulting firms, we’ve successfully completed numerous enterprise migrations across industries, giving us the battle-tested methodologies and automated accelerators that turn complex transitions into predictable, low-risk projects.

What sets Kanerika apart is our end-to-end approach that goes beyond simple code conversion. We start with comprehensive assessment and planning, execute migrations using intelligent automation, provide rigorous validation at every stage, and deliver hands-on training to ensure your team can maintain and optimize the new platform independently.


FAQs

1. What is Informatica to Databricks migration?

Informatica to Databricks migration involves converting legacy PowerCenter ETL workflows into modern cloud-native data pipelines on the Databricks platform. This transformation enables organizations to leverage distributed computing, real-time processing, and unified analytics while eliminating expensive on-premises infrastructure and accelerating data engineering workflows.

2. How long does Informatica PowerCenter to Databricks migration take?

Migration timelines range from weeks to months depending on workflow complexity and volume. Simple mappings can migrate in days, while enterprise implementations take longer. Automated migration accelerators can reduce migration time by 60–80%, making them significantly faster than manual rewriting approaches, which can take 6–12 months.

3. Can we migrate Informatica to Databricks in phases?

Yes, phased migration approaches are highly recommended. Organizations can select specific mappings, workflows, or business domains to migrate incrementally. Critical workloads migrate first for validation, followed by additional components when ready. This strategy minimizes operational disruption and enables teams to adapt gradually to the new platform.

4. What is the cost of Informatica to Databricks migration?

Migration costs depend on workflow complexity, transformation volume, data source diversity, and customization requirements. Automated accelerators reduce expenses by 60-70% compared to manual approaches. Most organizations achieve positive ROI within 12-18 months through combined infrastructure savings, eliminated PowerCenter licensing fees, and productivity improvements.

5. Does Databricks support all Informatica PowerCenter features?

Databricks provides equivalent or superior capabilities for most PowerCenter features, including complex transformations, workflow orchestration, error handling, and data quality operations. Mappings, workflows, sessions, parameters, variables, and connection objects can all be migrated. Some proprietary Informatica functions may require custom implementation using Spark APIs or user-defined functions.

6. Will Informatica to Databricks migration disrupt our operations?

Not if the migration is properly planned. Organizations run Informatica and Databricks in parallel during the transition, validating outputs before fully switching over, which keeps downtime to a minimum. Phased approaches allow critical workloads to continue running on legacy systems while new workflows are tested and validated in Databricks.

7. How does automated Informatica migration work with tools like FLIP?

Automated migration tools extract Informatica metadata from PowerCenter repositories, analyze mapping logic and workflow dependencies, then automatically convert them into optimized Databricks notebooks. These platforms preserve business logic while transforming proprietary code into Python or Scala Spark scripts ready for deployment, automating up to 80% of the conversion effort.

8. What training is required for teams after Informatica to Databricks migration?

Teams need training on Databricks platform fundamentals, Spark programming concepts (Python or Scala), Delta Lake features, workflow orchestration, and performance optimization techniques. Most organizations invest in hands-on workshops where team members work with actual migrated workflows under expert guidance. Training typically takes 2-4 weeks depending on team size and existing cloud experience.