Ever wondered how Netflix recommends shows that perfectly match your taste? An intricate ETL pipeline plays an important role in making this possible. It seamlessly gathers and processes user data, transforming it into insights that fuel personalized recommendations.

Wouldn’t it be great if your data analysis weren’t affected by inconsistencies, errors, and scattered sources? What if you could effortlessly transform unorganized or fragmented data into a clean, unified stream, ready for analysis? An ETL pipeline makes this a reality.

From financial institutions handling millions of transactions every day to large e-commerce companies customizing your shopping experience, ETL pipelines play a crucial role in modern data architecture.

Table of Contents

- What is an ETL Pipeline?

- Importance of ETL Pipelines in Data Integration

- Key Phases in the ETL Pipeline

- A Guide to Building a Robust ETL Pipeline

- Best Practices for Designing an Effective ETL Pipeline

- Top ETL Tools and Technologies

- Applications of Successful ETL Pipelines

- Experience Next-Level Data Integration with Kanerika

- FAQs

What is an ETL Pipeline?

An ETL pipeline is a data integration procedure that gathers information from several sources, unifies it into a standard format, and loads it into a target database or data warehouse for analysis.

The following example illustrates how an ETL pipeline works. Consider an online retailer that gathers customer information from several sources. The ETL pipeline extracts this data, cleans and formats it, and loads it into a central database. Once organized, the data can be used for reporting, analytics, and data-driven business decisions. ETL pipelines improve the consistency and quality of data while streamlining data processing workflows.
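To make the retailer example concrete, here is a minimal Python sketch of the three steps. It assumes two hypothetical in-memory sources whose field names differ, and it uses an in-memory SQLite database as a stand-in for the central database; a real pipeline would read from live systems and write to a warehouse.

```python
import sqlite3

# Hypothetical raw customer records from two sources (e.g., a web store export
# and an in-store point-of-sale system). Field names and formatting differ.
web_customers = [
    {"email": " Alice@Example.com ", "name": "alice smith"},
    {"email": "bob@example.com", "name": "Bob Jones"},
]
pos_customers = [
    {"customer_email": "alice@example.com", "full_name": "Alice Smith"},
    {"customer_email": "carol@example.com", "full_name": "carol white"},
]

def extract():
    """Extract: pull records from each source and map them to common field names."""
    rows = [{"email": r["email"], "name": r["name"]} for r in web_customers]
    rows += [{"email": r["customer_email"], "name": r["full_name"]} for r in pos_customers]
    return rows

def transform(rows):
    """Transform: normalize formatting and drop duplicate customers (keyed on email)."""
    cleaned = {}
    for r in rows:
        email = r["email"].strip().lower()
        name = r["name"].strip().title()
        cleaned.setdefault(email, name)  # keep the first record seen for each email
    return sorted(cleaned.items())

def load(records, conn):
    """Load: write the unified records into a central table."""
    conn.execute("CREATE TABLE IF NOT EXISTS customers (email TEXT PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for the central database
load(transform(extract()), conn)
print(conn.execute("SELECT * FROM customers ORDER BY email").fetchall())
```

Alice appears in both sources but ends up as a single row, because the transform step normalizes her email before deduplicating.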

Importance of ETL Pipelines in Data Integration

ETL (Extract, Transform, Load) pipelines play a crucial role in data integration for several reasons:

1. Data Consistency

ETL pipelines convert data from various sources, such as databases, applications, and files, into a standard format before loading it into a target destination. This uniformity enhances the quality and precision of data across the enterprise.

2. Data Efficiency

These pipelines automate the extraction, transformation, and loading processes, thus making data integration workflows more efficient. Automation minimizes manual errors, saves time, and enables faster delivery of information to end users.

3. Data Warehousing

They are widely used for loading structured, well-organized data into data warehouses. When information is centralized this way, businesses can perform complex analytics, generate insights, and make informed decisions based on a unified dataset.

4. Scalability

As data volumes grow, ETL pipelines can scale to process them effectively. Depending on its requirements, an organization may need batch or real-time processing, and ETL pipelines can be designed for either, helping you manage data at different scales and processing requirements without sacrificing performance.

5. Data Transformation

An ETL pipeline can integrate many kinds of sources, including relational databases, cloud services, APIs, and streaming platforms. Its transformation step reconciles their differing formats and structures so that the resulting data is consistent, meaningful, and ready for analysis.

A Quick Glimpse of Our Successful Data Integration Project

See How Kanerika Helped Improve Operational Efficiency of a Renowned Media Company with Real-time Data Integration

Key Phases in the ETL Pipeline

The ETL pipeline operates in three distinct phases, each playing a vital role in turning raw data into actionable insights. Let’s look at each stage in more detail:

1. Extraction Phase

This is the first step, where the ETL pipeline acts as a data collector. Its job is to identify and access data in various sources. This data can reside in relational databases like MySQL or Oracle, or be retrieved programmatically through APIs offered by external applications or services. Flat files (CSV, TXT) and social media platforms like Twitter can also be valuable sources, although they might require additional parsing to become usable.
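As a rough illustration of extracting from two different source types, the sketch below reads from a relational table and from a CSV file using only Python’s standard library. SQLite stands in for MySQL or Oracle, and the `orders` table and sample values are hypothetical.

```python
import csv
import io
import sqlite3

def extract_from_database(conn):
    """Read rows from a relational source (SQLite stands in for MySQL/Oracle here)."""
    cursor = conn.execute("SELECT order_id, amount FROM orders ORDER BY order_id")
    columns = [d[0] for d in cursor.description]  # column names from the query
    return [dict(zip(columns, row)) for row in cursor.fetchall()]

def extract_from_csv(text):
    """Parse a flat file; every value arrives as a string and needs later typing."""
    return list(csv.DictReader(io.StringIO(text)))

# Hypothetical sample sources for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 19.99), (2, 5.00)])
csv_text = "order_id,amount\n3,12.50\n"

db_rows = extract_from_database(conn)
file_rows = extract_from_csv(csv_text)
print(db_rows)    # [{'order_id': 1, 'amount': 19.99}, {'order_id': 2, 'amount': 5.0}]
print(file_rows)  # [{'order_id': '3', 'amount': '12.50'}]
```

Note that the CSV values arrive as strings, which is one reason flat files often need extra parsing before they are usable.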

The choice of extraction technique depends on the nature of the data and its update frequency. Full extraction pulls all data from the source at a specific point in time, offering a complete snapshot. However, this method can be resource-intensive for large datasets. Incremental extraction, on the other hand, focuses on retrieving only new or updated data since the last extraction. This approach proves more efficient for frequently changing data streams.
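The difference between full and incremental extraction can be sketched with a timestamp watermark. This assumes the hypothetical `products` table has a reliable `updated_at` column stored as ISO 8601 text (which sorts chronologically as a string); the pipeline saves the returned watermark and passes it to the next run.

```python
import sqlite3

def full_extract(conn):
    """Full extraction: pull every row, regardless of when it changed."""
    return conn.execute("SELECT id, updated_at FROM products ORDER BY id").fetchall()

def incremental_extract(conn, last_watermark):
    """Incremental extraction: pull only rows changed after the previous run.

    Returns the new rows and the watermark to store for the next run.
    """
    rows = conn.execute(
        "SELECT id, updated_at FROM products WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    new_watermark = rows[-1][1] if rows else last_watermark
    return rows, new_watermark

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER, updated_at TEXT)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?)",
    [(1, "2024-01-01T09:00"), (2, "2024-01-02T10:30"), (3, "2024-01-03T08:15")],
)

# The previous run finished at the end of Jan 1, so only rows 2 and 3 are new.
rows, watermark = incremental_extract(conn, "2024-01-01T23:59")
```

A strict `>` comparison can miss rows that share the last timestamp but are committed after the run, so production pipelines often overlap the window slightly and deduplicate, or use change data capture (CDC) to read changes from the database’s transaction log instead.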

2. Transformation Phase

This is where the real magic happens! The raw data extracted from various sources is far from perfect. It might contain inconsistencies, errors, and missing values. The transformation phase acts as a data cleaning and shaping workshop, meticulously preparing the information for analysis.

Data cleaning starts with addressing missing values, using techniques like imputation (filling in missing values with a default or estimated value) or deleting incomplete records. Inconsistent data formats (e.g., dates, currencies) are standardized to ensure seamless analysis across different sources. Additionally, data validation checks identify records that break expected rules so they can be corrected or removed, improving the accuracy and consistency of the data.
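The cleaning steps above might look like this in a minimal Python sketch. The records, the two accepted date formats (the second is assumed to be day-first), the `UNKNOWN` placeholder, and the rule that amounts must be non-negative are all hypothetical choices for illustration.

```python
from datetime import datetime

# Hypothetical raw records: mixed date formats, a missing region, a negative amount.
raw = [
    {"order_id": 1, "date": "2024-03-05", "amount": "19.99", "region": "EU"},
    {"order_id": 2, "date": "05/03/2024", "amount": "5.00", "region": None},
    {"order_id": 3, "date": "2024-03-06", "amount": "-4.00", "region": "US"},
]

DATE_FORMATS = ["%Y-%m-%d", "%d/%m/%Y"]  # assumed source formats; adjust per source

def standardize_date(value):
    """Convert any known date format to ISO 8601 (YYYY-MM-DD), or None if unparseable."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            pass
    return None

def clean(records, default_region="UNKNOWN"):
    valid, rejected = [], []
    for r in records:
        row = dict(r)
        row["date"] = standardize_date(row["date"])   # standardization
        row["amount"] = float(row["amount"])
        if row["region"] is None:                     # imputation
            row["region"] = default_region
        if row["date"] is None or row["amount"] < 0:  # validation
            rejected.append(row)
        else:
            valid.append(row)
    return valid, rejected

valid, rejected = clean(raw)
```

Routing invalid rows to a separate `rejected` list, rather than silently dropping them, keeps a record of what was removed and why.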

Once the data is clean, it’s further refined through aggregation and consolidation. Aggregation involves summarizing data by grouping it based on specific criteria. For example, you might want to sum sales figures by product category. Consolidation brings data from multiple sources together, creating a single, unified dataset that paints a holistic picture.
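A minimal sketch of consolidation followed by aggregation, assuming hypothetical `(category, amount)` rows from an online source and an in-store source:

```python
from collections import defaultdict

# Hypothetical sales rows from two sources.
online_sales = [("Books", 12.0), ("Games", 30.0), ("Books", 8.0)]
store_sales = [("Games", 20.0), ("Toys", 15.0)]

def consolidate(*sources):
    """Consolidation: combine rows from several sources into one dataset."""
    return [row for source in sources for row in source]

def sum_by_category(rows):
    """Aggregation: group rows by category and sum the sales amounts."""
    totals = defaultdict(float)
    for category, amount in rows:
        totals[category] += amount
    return dict(sorted(totals.items()))

totals = sum_by_category(consolidate(online_sales, store_sales))
print(totals)  # {'Books': 20.0, 'Games': 50.0, 'Toys': 15.0}
```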

This phase can also involve data enrichment, where additional information from external sources is added to the existing data, providing deeper insights. Finally, data standardization ensures all the information adheres to a consistent format (e.g., units of measurement, date format) across all sources, facilitating seamless analysis.
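Enrichment and unit standardization can be sketched in a few lines. The country-to-region lookup stands in for an external reference source and is hypothetical, as are the shipment records; only the pound-to-kilogram factor (0.45359237) is a real, exact constant.

```python
# Hypothetical reference data used for enrichment. In practice this might come
# from an external lookup service or a reference table.
COUNTRY_REGION = {"DE": "Europe", "FR": "Europe", "US": "North America"}

# Conversion factors to the standard unit (kilograms); 1 lb = 0.45359237 kg exactly.
TO_KG = {"kg": 1.0, "lb": 0.45359237}

def enrich_and_standardize(shipments):
    out = []
    for s in shipments:
        # A KeyError here flags an unexpected unit instead of silently passing it through.
        weight_kg = s["weight"] * TO_KG[s["unit"]]
        out.append({
            "id": s["id"],
            "country": s["country"],
            # Enrichment: add a field the source system did not have.
            "region": COUNTRY_REGION.get(s["country"], "Unknown"),
            # Standardization: every weight is expressed in kilograms.
            "weight_kg": round(weight_kg, 3),
        })
    return out

shipments = [
    {"id": 1, "country": "DE", "weight": 2.0, "unit": "kg"},
    {"id": 2, "country": "US", "weight": 10.0, "unit": "lb"},
    {"id": 3, "country": "BR", "weight": 1.5, "unit": "kg"},
]
result = enrich_and_standardize(shipments)
```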

3. Loading Phase

The final stage delivers the transformed data to its designated destination, where it can be readily accessed and analyzed. The right destination depends on your organization’s needs. Data warehouses store structured, historical data in a form optimized for complex analytical queries, which makes them well suited to tracking historical trends and in-depth exploration.

Alternatively, data lakes serve as central repositories for storing all types of data, both structured and unstructured. This allows for flexible exploration and accommodates future analysis needs that might not be readily defined yet.

The loading process itself can be implemented in two ways: batch loading or real-time loading. Batch loading transfers data periodically in large chunks. This approach is efficient for static or slowly changing data sets. However, for fast-moving data streams where immediate insights are crucial, real-time loading becomes the preferred choice. This method continuously transfers data as it becomes available, ensuring the most up-to-date information is readily accessible for analysis.
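The two loading styles can be sketched against SQLite, which here stands in for a warehouse. `INSERT OR REPLACE` is SQLite-specific upsert syntax (other databases offer their own, such as `MERGE` or `INSERT ... ON CONFLICT`), and the `sales` table is hypothetical.

```python
import sqlite3
from itertools import islice

def chunked(iterable, size):
    """Yield successive lists of at most `size` items."""
    it = iter(iterable)
    while chunk := list(islice(it, size)):
        yield chunk

def batch_load(conn, rows, batch_size=1000):
    """Batch loading: write rows in large chunks, committing once per chunk."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (id INTEGER PRIMARY KEY, amount REAL)")
    for chunk in chunked(rows, batch_size):
        # INSERT OR REPLACE makes reruns idempotent: reloading a row overwrites it.
        conn.executemany("INSERT OR REPLACE INTO sales VALUES (?, ?)", chunk)
        conn.commit()

def stream_load(conn, row):
    """Real-time style loading: write each record as soon as it arrives."""
    conn.execute("INSERT OR REPLACE INTO sales VALUES (?, ?)", row)
    conn.commit()

conn = sqlite3.connect(":memory:")
batch_load(conn, ((i, i * 1.5) for i in range(2500)), batch_size=1000)  # 3 chunks
stream_load(conn, (2500, 99.0))  # a single late-arriving record
```

Keying the upsert on a primary key makes the load idempotent: if a batch fails midway and is rerun, rows that were already written are overwritten rather than duplicated.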

A Guide to Building a Robust ETL Pipeline

1. Define Your Business Requirements

Before you start designing and building an ETL pipeline, think about what kind of data you need to integrate and what insights you hope to glean. Understanding your goals will guide your ETL design and tool selection.

2. Identify Your Data Sources

Where is your data located? Databases, APIs, flat files, social media – map out all the locations you’ll need to extract data from. Consider the format of the data in each source – structured, semi-structured, or unstructured.

3. Choose Your ETL Tools

With your data sources identified, explore ETL tools. Open-source options like Apache Airflow (an orchestrator for scheduling and managing pipelines) or Pentaho are popular choices, while commercial solutions offer additional features and support. Consider factors like scalability, ease of use, and security when making your selection.

4. Design Your Pipeline

Now comes the blueprint. Sketch out the flow of your ETL pipeline, outlining the specific steps for each stage – extraction, transformation, and loading. Define how data will be extracted from each source, the transformations needed for cleaning and shaping, and the destination for the final, transformed data.
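One way to capture the blueprint in code is to describe each pipeline as data: its sources, an ordered list of transformations, and a destination. The `PipelineSpec` class below is a hypothetical sketch of that idea, not the API of any particular ETL tool.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List, Optional

Record = Dict[str, object]

@dataclass
class PipelineSpec:
    """Hypothetical blueprint: where data comes from, how it changes, where it goes."""
    name: str
    extractors: List[Callable[[], Iterable[Record]]]
    loader: Callable[[List[Record]], None]
    transforms: List[Callable[[Record], Optional[Record]]] = field(default_factory=list)

    def run(self) -> int:
        """Extract from every source, apply each transform in order, then load once."""
        output: List[Record] = []
        for extract in self.extractors:
            for record in extract():
                current: Optional[Record] = record
                for transform in self.transforms:
                    current = transform(current)
                    if current is None:  # a transform returned None: drop this record
                        break
                else:
                    output.append(current)
        self.loader(output)
        return len(output)

warehouse: List[Record] = []  # stand-in destination
spec = PipelineSpec(
    name="daily_orders",
    extractors=[lambda: [{"id": 1, "amount": "10"}, {"id": 2, "amount": ""}]],
    loader=warehouse.extend,
    transforms=[
        lambda r: r if r["amount"] else None,           # drop rows with no amount
        lambda r: {**r, "amount": float(r["amount"])},  # cast to a number
    ],
)
count = spec.run()
```

Keeping each transformation as a small, separate function makes the design easy to document, test, and reorder.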

5. Implement Data Extraction

This is where your chosen ETL tool comes into play. Build the logic for extracting data from each source. Leverage connectors or APIs provided by your ETL tool to simplify the process.

6. Craft Your Data Transformations

This is where the magic happens! Design the transformations needed to clean and shape your data. Address missing values, standardize formats, and apply any necessary calculations or aggregations. Ensure your transformations are well-documented and easy to understand.

7. Load the Transformed Data

Delivery time! Configure your ETL tool to load the transformed data into its final destination – a data warehouse, data lake, or another designated storage location. Choose between batch loading for periodic updates or real-time loading for continuous data streams.

8. Test and Monitor

No pipeline is perfect. Build in thorough testing mechanisms to ensure your ETL process is running smoothly and delivering accurate data. Regularly monitor the pipeline for errors or performance issues.
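Testing usually happens at two levels: unit tests for individual transformations, and data-quality checks on each batch before it is loaded. The sketch below shows both; the `normalize_email` transform and the required `id` and `amount` columns are hypothetical.

```python
def normalize_email(value: str) -> str:
    """Hypothetical transform under test: trim whitespace and lowercase an email."""
    return value.strip().lower()

def test_normalize_email():
    # Unit test: run with pytest, or call it directly.
    assert normalize_email("  Bob@Example.COM ") == "bob@example.com"

def check_quality(rows, required=("id", "amount")):
    """Return a list of problems found in a batch; an empty list means it passed."""
    problems = []
    if not rows:
        problems.append("empty batch")
    ids = [r.get("id") for r in rows]
    if len(ids) != len(set(ids)):
        problems.append("duplicate ids")
    for i, r in enumerate(rows):
        for col in required:
            if r.get(col) is None:
                problems.append(f"row {i}: missing {col}")
    return problems

test_normalize_email()
print(check_quality([{"id": 1, "amount": None}, {"id": 1, "amount": 3.0}]))
```

If `check_quality` returns any problems, the pipeline can stop before loading and raise an alert instead of writing bad data downstream.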

9. Schedule and Automate

Once confident in your pipeline’s functionality, schedule it to run automatically at designated intervals. This ensures your data is consistently refreshed and reflects the latest information.

10. Maintain and Refine

ETL pipelines are living organisms. As your data sources or requirements evolve, your pipeline might need adjustments. Regularly review and update the pipeline to maintain its effectiveness and ensure it continues to deliver valuable insights.

Case Study Video: Our Data Integration Triumph

Learn how Kanerika enhanced the decision-making capabilities of a leading logistics and spend management solution provider with data integration and visualization.

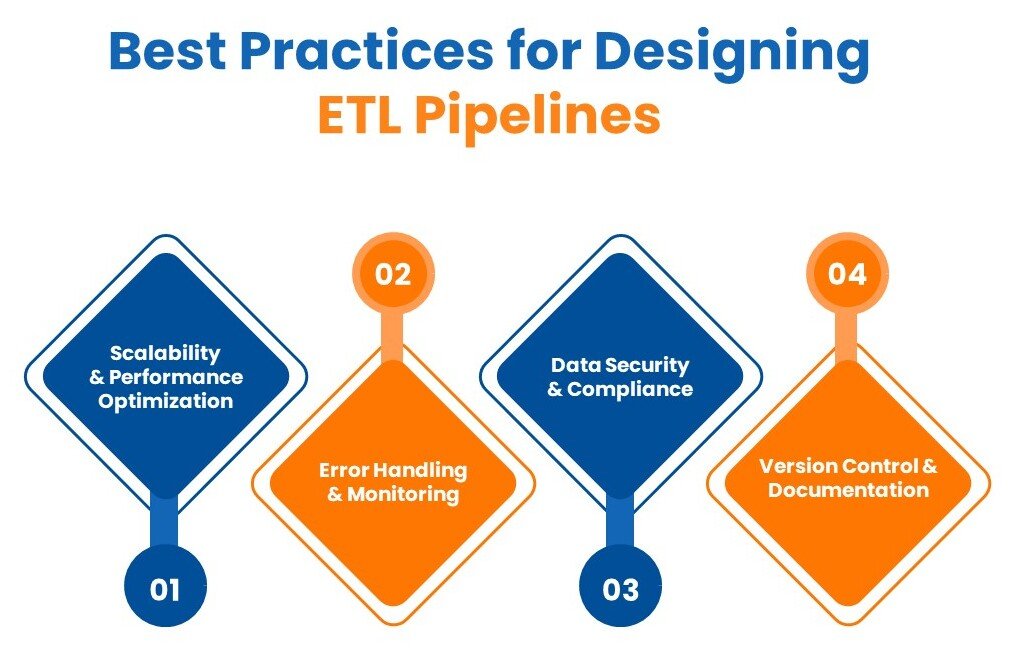

Best Practices for Designing an Effective ETL Pipeline

Building a robust ETL pipeline goes beyond just the technical steps. Here are some key practices to ensure your pipeline operates efficiently, delivers high-quality data, and remains secure:

1. Scalability and Performance Optimization

Choose Scalable Tools and Infrastructure: As your data volume grows, your ETL pipeline needs to keep pace. Select tools and infrastructure that can scale horizontally to handle increasing data loads without compromising performance.

Optimize Data Extraction and Transformation: Streamline your code! Avoid unnecessary processing or complex transformations that can slow down the pipeline. Utilize efficient data structures and algorithms.

Partition Large Datasets: Break down massive datasets into manageable chunks for processing. This improves processing speeds and reduces memory usage.

Utilize Parallel Processing: When possible, leverage parallel processing capabilities to execute multiple ETL tasks simultaneously, significantly reducing overall processing time.
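Partitioning and parallel processing often go together: once a dataset is split into independent partitions, each one can be processed concurrently. This sketch assumes hypothetical sales rows partitioned by month and uses Python’s `concurrent.futures`.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

def partition_by(rows, key):
    """Split a dataset into partitions that can be processed independently."""
    parts = defaultdict(list)
    for row in rows:
        parts[row[key]].append(row)
    return dict(parts)

def process_partition(item):
    """Hypothetical per-partition transform: total sales for one month."""
    month, rows = item
    return month, sum(r["amount"] for r in rows)

rows = [
    {"month": "2024-01", "amount": 10.0},
    {"month": "2024-02", "amount": 4.0},
    {"month": "2024-01", "amount": 6.0},
]

partitions = partition_by(rows, "month")
# Partitions share no state, so they can be handed to a pool of workers.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = dict(pool.map(process_partition, partitions.items()))
print(results)  # {'2024-01': 16.0, '2024-02': 4.0}
```

A thread pool suits I/O-bound work such as database queries or API calls. For CPU-heavy transformations in pure Python, `ProcessPoolExecutor` is usually the better choice, because threads share a single interpreter lock.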

2. Error Handling and Monitoring

Implement Robust Error Handling: Anticipate potential errors during data extraction, transformation, or loading. Design mechanisms to handle these errors gracefully, log them for analysis, and trigger notifications or retries as needed.

Monitor Pipeline Health Continuously: Don’t let errors lurk undetected! Set up monitoring tools to track the pipeline’s performance, identify potential issues, and ensure it’s running smoothly.

Alert on Critical Issues: Configure alerts to notify you of critical errors or performance bottlenecks requiring immediate attention. This allows for proactive troubleshooting and minimizes data quality risks.
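Retries, logging, and alerting can be combined in a small wrapper. This is a minimal sketch: `send_alert` is a hypothetical hook that only logs here, and the retry count and backoff delays are illustrative defaults, not recommendations.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("etl")

def send_alert(message):
    """Hypothetical alert hook; in practice this might post to email, chat, or a pager."""
    log.critical("ALERT: %s", message)

def with_retries(task, attempts=3, base_delay=1.0):
    """Run `task`, retrying with exponential backoff; alert and re-raise if all attempts fail."""
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception as exc:  # narrow this to the errors your sources actually raise
            log.warning("attempt %d/%d failed: %s", attempt, attempts, exc)
            if attempt == attempts:
                send_alert(f"{getattr(task, '__name__', 'task')} failed after {attempts} attempts")
                raise
            time.sleep(base_delay * 2 ** (attempt - 1))  # 1x, 2x, 4x, ... the base delay

calls = {"n": 0}

def flaky_extract():
    """Simulated source that fails twice before succeeding."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return ["row1", "row2"]

data = with_retries(flaky_extract, attempts=3, base_delay=0.01)
```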

3. Data Security and Compliance

Secure Data Access: Implement access controls to restrict access to sensitive data sources and the ETL pipeline itself. Utilize role-based access control (RBAC) to grant permissions based on user roles and responsibilities.

Data Encryption: Encrypt data at rest and in transit to safeguard it from unauthorized access. This is especially crucial when dealing with sensitive data.

Compliance with Regulations: Ensure your ETL pipeline adheres to relevant data privacy regulations like GDPR or CCPA. This might involve implementing specific data anonymization or retention policies.
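One common technique here is pseudonymization with a keyed hash, sketched below with Python’s `hmac` module. The key handling and the `email`/`phone` field names are assumptions. Keyed hashing is pseudonymization rather than full anonymization, so the output may still count as personal data under regulations like GDPR.

```python
import hashlib
import hmac

# Assumption: in a real pipeline the key comes from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-key-from-your-secrets-manager"

def pseudonymize(value: str) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    The same input always maps to the same token, so joins across tables still
    work, but the original value cannot feasibly be recovered from the token,
    and computing tokens requires the key.
    """
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_record(record, pii_fields=("email", "phone")):
    """Pseudonymize the listed fields and leave everything else unchanged."""
    return {
        k: pseudonymize(v) if k in pii_fields and v is not None else v
        for k, v in record.items()
    }

row = {"customer_id": 42, "email": "alice@example.com", "phone": None, "total": 99.5}
masked = mask_record(row)
```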

4. Version Control and Documentation

Version Control Your Code: Maintain a clear version history of your ETL code using version control systems like Git. This allows for easy rollback in case of issues and facilitates collaboration among developers.

Document Your Pipeline Thoroughly: Document your ETL pipeline comprehensively, including its data sources, the transformations applied and the logic behind them, and its data destinations. Clear documentation ensures a smooth handoff and simplifies future maintenance.

Top ETL Tools and Technologies

When it comes to ETL (Extract, Transform, Load) tools and technologies, various options cater to different needs, ranging from data integration to business intelligence. Below are some popular ETL tools and technologies:

1. Informatica PowerCenter

Features: Highly scalable, offers robust data integration capabilities, and is widely used in large enterprises.

Use Cases: Complex data migration projects, integration of heterogeneous data sources, large-scale data warehousing.

2. Microsoft SQL Server Integration Services (SSIS)

Features: Closely integrated with Microsoft SQL Server and other Microsoft products; offers a wide range of data transformation capabilities.

Use Cases: Data warehousing, data integration for Microsoft environments, business intelligence applications.

3. Talend

Features: Open source with a commercial version available, provides broad connectivity with various data sources.

Use Cases: Data integration, real-time data processing, cloud data integration.

4. Oracle Data Integrator (ODI)

Features: High-performance ETL tool, well integrated with Oracle databases and applications.

Use Cases: Oracle environments, data warehousing, and business intelligence.

5. IBM DataStage

Features: Strong parallel processing capabilities, suitable for high-volume, complex data integration tasks.

Use Cases: Large enterprise data migration, integration with IBM systems, business analytics.

6. AWS Glue

Features: Serverless data integration service that makes it easy to prepare and load data for analytics.

Use Cases: Cloud-native ETL processes, integrating with AWS ecosystem services, serverless data processing.

7. Apache NiFi

Features: Open-source tool designed for automated data flow between software systems.

Use Cases: Data routing, transformation, and system mediation logic.

8. Fivetran

Features: Cloud-native tool that emphasizes simplicity and integration with many cloud data services.

Use Cases: Automating data integration into data warehouses, business intelligence.

9. Stitch

Features: Simple, powerful ETL service for businesses of all sizes that automates data collection and storage.

Use Cases: Quick setup for ETL processes, integration with numerous SaaS tools and databases.

10. Google Cloud Dataflow

Features: Fully managed service for stream and batch data processing, integrated with Google Cloud services.

Use Cases: Real-time analytics, cloud-based data integration, and processing pipelines.

Applications of Successful ETL Pipelines

1. Business Intelligence & Analytics

ETL pipelines are the backbone of BI and analytics. They provide clean, consistent data for reports, dashboards, trend analysis, and advanced analytics like machine learning.

2. Customer Relationship Management (CRM)

ETL pipelines create a unified customer view by integrating data from sales, marketing, and support. This enables personalized marketing, improved customer service, and segmentation for targeted campaigns.

3. Marketing Automation & Campaign Management

ETL pipelines enrich marketing data by integrating it with website activity and social media data. This allows for measuring campaign performance, personalization of messages, and optimization of future initiatives.

4. Risk Management & Fraud Detection

Real-time data integration through ETL pipelines facilitates transaction analysis and suspicious pattern identification, helping prevent fraud and manage risk exposure in financial institutions and other organizations.

5. Product Development & Innovation

ETL pipelines empower product development by providing insights from consolidated customer feedback data and user behavior patterns. This informs product roadmap decisions, feature development, and A/B testing for data-driven optimization.

6. Regulatory Compliance

ETL pipelines help ensure the data accuracy and completeness needed to comply with industry regulations. They also help organizations track and manage sensitive data efficiently.

Experience Next-Level Data Integration with Kanerika

Kanerika is a global consulting firm that specializes in providing innovative and effective data integration services. We offer expertise in data integration, analytics, and AI/ML, focusing on enhancing operational efficiency through cutting-edge technologies. Our services aim to empower businesses worldwide by driving growth, efficiency, and intelligent operations through hyper-automated processes and well-integrated systems.

Our commitment to customer success, passion for innovation, and hunger for continuous learning are reflected in the solutions we build for clients, ensuring faster responsiveness, connected human experiences, and enhanced decision-making capabilities.

Our focus on automated data integration and advanced technologies makes Kanerika a reliable partner for organizations seeking efficient and scalable data integration solutions.

Frequently Asked Questions

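What is an ETL pipeline?

An ETL pipeline is a data integration process that extracts data from multiple sources, transforms it into a consistent format, and loads it into a target database or data warehouse for analysis.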

What are the stages of an ETL pipeline?

- Extract: Data is collected from multiple source systems.

- Transform: Data is cleansed, enriched, and converted to match the target schema.

- Load: The processed data is loaded into a data warehouse or another database.

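What is the difference between ETL and ELT pipelines?

In an ETL pipeline, data is transformed before it is loaded into the target system. In an ELT (Extract, Load, Transform) pipeline, raw data is loaded into the target first, typically a cloud data warehouse or data lake, and transformed there using the target’s own processing power.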

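How do you automate an ETL pipeline?

Once the pipeline has been tested, use your ETL tool or an orchestrator such as Apache Airflow to schedule it to run automatically at designated intervals, and set up monitoring and alerts so that failures are caught without manual checks.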

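What are the uses of ETL pipelines?

Common uses include business intelligence and analytics, building a unified customer view for CRM, marketing automation and campaign management, risk management and fraud detection, product development, and regulatory compliance.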

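What is an example of an ETL pipeline?

An online retailer might extract customer data from several sources, clean and standardize it, and load it into a central database, where it can be used for reporting, analytics, and data-driven decisions.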

What are some of the best ETL tools?

- Informatica PowerCenter: Well-suited for enterprise-level data integration.

- Microsoft SQL Server Integration Services (SSIS): A comprehensive and enterprise-ready tool integrated with Microsoft SQL Server.

- Talend: Known for its open-source model that also offers cloud and enterprise editions.

- AWS Glue: A fully managed ETL service that makes it easy to prepare and load data for analytics.

- Apache NiFi: An open-source tool designed for automating data flow between systems.