Many enterprises grapple with disconnected systems, data silos, and sprawling analytics stacks. The Databricks Lakehouse Architecture addresses these problems with a unified, open, and scalable platform that brings together the best of data lakes and data warehouses in a single system.

Modern organisations face an explosion of data: structured tables, semi-structured logs, real-time streams and global multi-cloud workloads. Traditional lake + warehouse approaches struggle to keep up with cost, governance and performance demands. Databricks positions its Lakehouse as “one architecture for integration, storage, processing, governance, sharing, analytics and AI.”

By combining the agility and scale of a data lake with the reliability and performance of a data warehouse, the Lakehouse offers businesses a streamlined, future-ready analytics foundation.

In this blog, we’ll dive deep into what Databricks Lakehouse Architecture really is, why it matters, and how you can design and implement it within your enterprise. We’ll unpack core components, explore architecture and governance, and share best practices for successful adoption.

Key Takeaways

- Databricks Lakehouse Architecture unifies the best of data lakes and data warehouses into a single, open, and scalable platform for analytics and AI.

- It eliminates data silos by combining storage, processing, governance, and machine learning under one architecture.

- The Lakehouse enables organizations to handle structured, semi-structured, and unstructured data efficiently with real-time analytics capabilities.

- Databricks reports record-setting performance and faster time-to-insight for both BI and AI workloads.

- Core components include Delta Lake for storage, Unity Catalog for governance, and Databricks SQL for analytics.

- Successful migration requires assessment, pilot runs, validation, and cost monitoring, supported by Databricks’ migration services and partner ecosystem.

- Real-world success stories like AT&T and Viessmann show measurable improvements in cost efficiency, speed, and governance after adopting the Lakehouse model.

What is Databricks Lakehouse Architecture?

The Databricks Lakehouse Architecture is a modern data management framework that unifies the best features of data warehouses and data lakes into a single, cohesive platform. Unlike traditional architectures that separate analytical and storage systems, the lakehouse model allows organizations to manage, analyze, and govern all types of data (structured, semi-structured, and unstructured) within one environment.

Databricks defines its Lakehouse as an architecture that “unifies your data, analytics, and AI,” bringing together data integration, storage, processing, governance, sharing, analytics, and machine learning on a single platform (Databricks). This unified approach eliminates the complexity and duplication that come with maintaining separate data lakes and warehouses.

Built on open-source technologies such as Apache Spark, Delta Lake, and MLflow, the Databricks Lakehouse runs on the major cloud providers, including AWS, Azure, and Google Cloud. These foundations provide the scalability, flexibility, and interoperability that enterprises need for hybrid and multi-cloud data strategies.

For enterprise teams, the Lakehouse simplifies data management by reducing silos, converging batch and real-time processing, and supporting a wide variety of data types. Most importantly, it delivers unified governance and security, which helps keep data trusted, consistent, and high-quality across the entire organization.

Why It Matters for Modern Enterprises

Enterprises today are generating and consuming data at an unprecedented scale. With the explosion of structured, semi-structured, and streaming data, the need for a unified data architecture has become critical. Traditional systems that rely on separate data lakes for raw storage and data warehouses for analytics often create silos, duplicate data, and increase operational complexity. These fragmented architectures lead to higher costs, inconsistent governance, and slower time-to-insight.

The Databricks Lakehouse Architecture addresses these challenges by combining the scalability of a data lake with the performance and reliability of a warehouse, all within a single platform. This unified model simplifies data management, eliminates the need for multiple systems, and provides one source of truth across the organization.

Databricks reports that its Lakehouse platform delivers “world-record-setting performance” for both data warehousing and AI workloads (Databricks). By consolidating analytics, data science, and machine learning on one platform, enterprises gain faster, more reliable insights while reducing maintenance overhead.

The result is a simplified data stack that improves operational efficiency, strengthens data governance, and prepares organizations for AI and advanced analytics, which in turn supports smarter, faster, and more data-driven decision-making.

Core Components of the Databricks Lakehouse Architecture

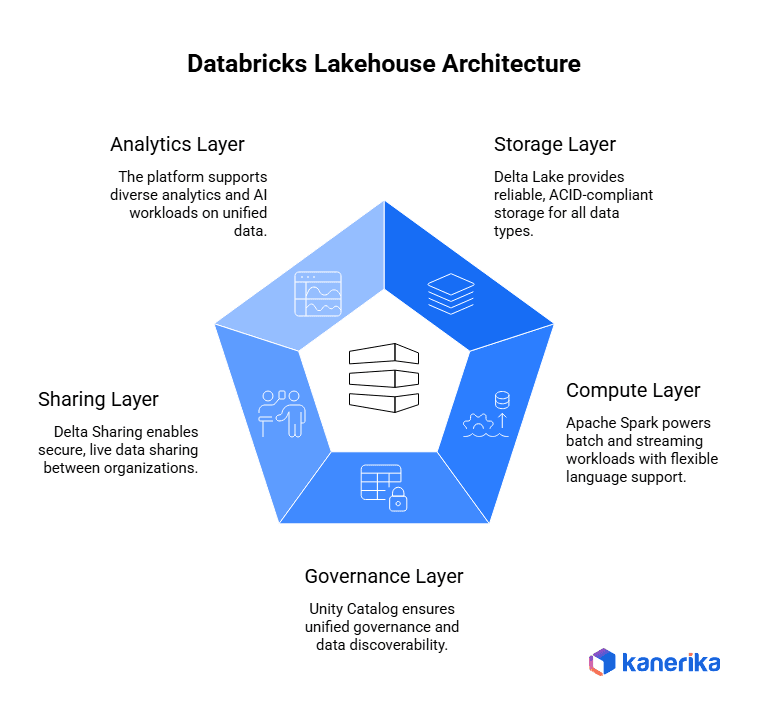

The Databricks Lakehouse combines five core components working together to deliver unified data, analytics, and AI capabilities on a single platform.

1. Storage Layer (Delta Lake)

Delta Lake provides the foundation for lakehouse storage with enterprise-grade reliability. It delivers ACID transactions, which keep data consistent even during concurrent writes. Built-in versioning records every change to a table over time. Schema enforcement rejects writes that do not match the table’s schema, which keeps bad data out. Time travel lets users query a table as it existed at an earlier version or timestamp, within the table’s retention settings, which supports audit compliance and recovery from mistakes.

Delta Lake supports unified storage for both structured data like customer records and unstructured data like documents or images. This eliminates the traditional separation between data warehouses handling structured data and data lakes managing unstructured content. Organizations store all data types in one location while maintaining quality and governance.
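
To make the ideas of versioning, schema enforcement, and time travel more concrete, here is a minimal plain-Python sketch. It is a conceptual toy, not the Delta Lake API: the `VersionedTable` class and its methods are hypothetical names invented for this illustration, and a real Delta table stores versions in a transaction log rather than in memory.

```python
from dataclasses import dataclass, field


@dataclass
class VersionedTable:
    """Toy append-only table that keeps every committed version (illustration only)."""
    schema: dict                                            # column name -> Python type
    versions: list = field(default_factory=lambda: [[]])    # version 0 is the empty table

    def append(self, rows):
        # Schema enforcement: validate the whole batch before committing any of it.
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"columns {sorted(row)} do not match the schema")
            for col, expected in self.schema.items():
                if not isinstance(row[col], expected):
                    raise TypeError(f"{col!r} expects {expected.__name__}")
        # All-or-nothing commit: a new version appears only if every row passed.
        self.versions.append(self.versions[-1] + list(rows))
        return len(self.versions) - 1                       # the new version number

    def read(self, version=None):
        # "Time travel": read any earlier committed version by its number.
        snapshot = self.versions[-1] if version is None else self.versions[version]
        return list(snapshot)


customers = VersionedTable(schema={"id": int, "name": str})
v1 = customers.append([{"id": 1, "name": "Ada"}])
v2 = customers.append([{"id": 2, "name": "Grace"}])

try:
    # The second row has a string id, so the whole batch is rejected.
    customers.append([{"id": 3, "name": "Linus"}, {"id": "4", "name": "Bad"}])
except TypeError:
    pass
```

After this runs, `customers.read()` returns both valid rows, while `customers.read(version=1)` still returns only the first one. The rejected batch leaves no trace, which is the behaviour the ACID and schema-enforcement guarantees are meant to provide.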

2. Compute & Processing Layer

Apache Spark powers the Databricks processing engine, handling both batch and streaming workloads. The platform supports SQL for analysts, Python for data scientists, R for statisticians, and Scala for engineers. This flexibility lets different teams work in their preferred languages on the same platform.

Storage is decoupled from compute, so each can scale independently. Auto-scaling adjusts compute resources automatically based on workload demands, reducing costs during quiet periods and preventing performance problems during peak usage. Built-in concurrency management allows multiple users and jobs to run simultaneously without conflicts.

3. Governance & Metadata (Unity Catalog)

Unity Catalog provides unified governance across data, analytics, and AI assets in one place. The system manages metadata showing what data exists, where it lives, and what it means. Data lineage tracking reveals how data flows through pipelines and transforms over time. Access controls enforce who can view or modify specific datasets. Centralized cataloging makes data discoverable across the organization.

This unified approach simplifies governance compared to managing separate systems for different data types or workloads. Security policies apply consistently whether data supports reporting, machine learning, or application development.
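
Lineage is easiest to reason about as a graph. The sketch below is a plain-Python illustration of the kind of question lineage tracking answers ("what feeds this table?" and "what breaks if this source changes?"). The table names and the `UPSTREAM` mapping are hypothetical, and this is not how Unity Catalog stores or exposes lineage.

```python
# Hypothetical lineage edges: each table maps to the tables it is built from.
UPSTREAM = {
    "gold.revenue_by_region": ["silver.orders", "silver.customers"],
    "silver.orders": ["bronze.orders_raw"],
    "silver.customers": ["bronze.crm_export"],
}


def all_upstream(table, edges):
    """Every table that feeds `table`, directly or indirectly."""
    found, stack = set(), [table]
    while stack:
        for parent in edges.get(stack.pop(), []):
            if parent not in found:        # also guards against cycles
                found.add(parent)
                stack.append(parent)
    return sorted(found)


def impacted_by(source, edges):
    """Every derived table that would be affected if `source` changed."""
    return sorted(t for t in edges if source in all_upstream(t, edges))
```

For example, `impacted_by("bronze.orders_raw", UPSTREAM)` returns `["gold.revenue_by_region", "silver.orders"]`, which is the impact analysis an engineer would want before changing a raw feed.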

4. Data Sharing & Ecosystem

Delta Sharing implements an open protocol for secure live data sharing between organizations and platforms. Companies share data with partners, customers, or other business units without copying files or managing complex APIs. Recipients access current data directly, seeing updates as they occur.

The platform integrates with partner ecosystems and networks, connecting to existing tools and extending capabilities. Organizations leverage their current investments while gaining lakehouse benefits.

5. Analytics & AI Capabilities

The platform supports diverse workloads on unified architecture. Business intelligence teams run SQL queries for reporting and dashboards. Data scientists build and deploy machine learning models. Engineers develop generative AI applications. Data engineering teams build and maintain pipelines.

Running everything on one platform eliminates data movement between specialized systems. The same data serves BI reports, machine learning models, and AI applications without copying or synchronization. This unified approach reduces complexity, improves data freshness, and accelerates time to value for analytics and AI initiatives.

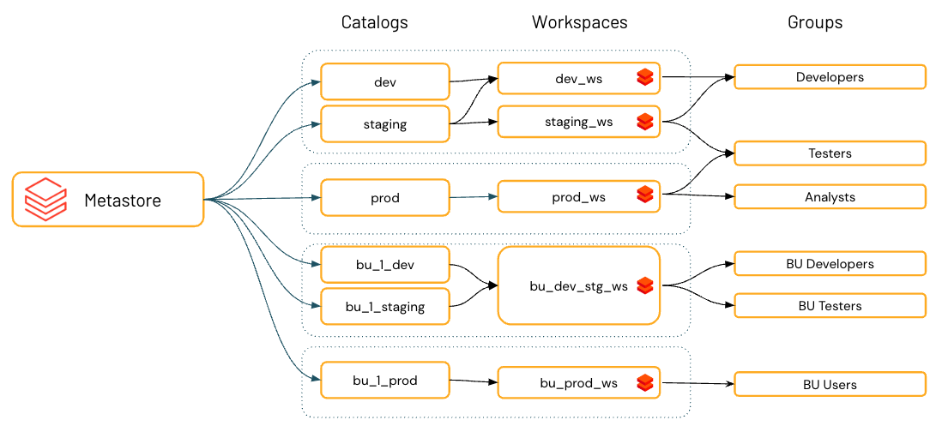

Designing a Lakehouse Architecture for Enterprises

Source – https://docs.databricks.com/aws/en/data-governance/unity-catalog/

1. Start with Business Requirements

Understand what your organization needs before picking technology.

- Data sources – Identify where data comes from: databases, applications, IoT devices, files, APIs, streaming sources.

- Data volume – Measure how much data you handle: gigabytes, terabytes, or petabytes.

- Data velocity – Determine if you need batch processing (hourly, daily) or streaming (real-time, near real-time).

- User personas – Know who will use the system: business analysts running reports, data scientists building models, executives viewing dashboards, engineers maintaining pipelines.

2. Define Zones and Layers

Lakehouse architecture uses multiple layers to organize and improve data quality.

- Ingestion layer – Brings data from sources into the system using connectors and streaming tools.

- Bronze zone (raw data) – Stores data exactly as it arrives without changes. This preserves the original for audit and replay purposes.

- Silver zone (validated data) – Cleans and standardizes data. Removes duplicates, fixes errors, enforces data types.

- Gold zone (curated data) – Refines data for specific business needs. Creates star schemas and aggregations optimized for reporting.

- Sandbox – Provides space for data scientists and analysts to experiment without affecting production data.
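
The bronze, silver, and gold zones above can be sketched as three small transformation steps. This is a plain-Python illustration under assumed sample data. The field names and the `to_silver` / `to_gold` helpers are hypothetical, and on Databricks these steps would normally be written with Spark or SQL against Delta tables.

```python
from collections import defaultdict

# Bronze: records exactly as they arrived, including a duplicate and a bad row.
bronze = [
    {"order_id": "1001", "region": "EU", "amount": "25.50"},
    {"order_id": "1001", "region": "EU", "amount": "25.50"},   # duplicate
    {"order_id": "1002", "region": "us", "amount": "10.00"},
    {"order_id": "1003", "region": "US", "amount": "n/a"},     # invalid amount
]


def to_silver(raw_rows):
    """Deduplicate, enforce data types, and standardize values."""
    seen, clean = set(), []
    for row in raw_rows:
        if row["order_id"] in seen:
            continue                       # drop duplicates by business key
        try:
            amount = float(row["amount"])  # enforce a numeric type
        except ValueError:
            continue                       # a real pipeline would quarantine this row
        seen.add(row["order_id"])
        clean.append({
            "order_id": int(row["order_id"]),
            "region": row["region"].upper(),
            "amount": amount,
        })
    return clean


def to_gold(silver_rows):
    """Aggregate revenue per region for reporting."""
    totals = defaultdict(float)
    for row in silver_rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
```

Here `gold` ends up as `{"EU": 25.5, "US": 10.0}`, while `bronze` still holds all four original records, so the pipeline can be replayed if the cleaning rules change.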

3. Choose Cloud Platform

Select infrastructure that fits your needs and existing investments.

- AWS – Use S3 for storage, Glue for ETL, Redshift Spectrum for queries, EMR for Spark processing.

- Azure – Use Azure Data Lake Storage, Synapse Analytics, Databricks, Data Factory for orchestration.

- GCP – Use Cloud Storage, BigQuery, Dataproc for Spark, Dataflow for streaming.

- Multi-cloud or hybrid – Some organizations need data across multiple clouds or keep sensitive data on-premises while using clouds for processing.

4. Schema Design

Pick the right data model for your use cases.

- Star schema – Central fact table surrounded by dimension tables. Best for business intelligence and reporting. Easy for analysts to understand and query.

- Snowflake schema – Normalized version of star schema. Saves storage but adds complexity.

- Data lake style – Flexible schema-on-read approach. Store raw data without predefined structure. Good for data science and exploration.

Most lakehouses combine these approaches: flexible storage in the bronze and silver zones, and structured star schemas in the gold zone for reporting.

5. Performance Optimization

Make queries run faster and reduce costs.

- Caching – Store frequently accessed data in memory for quick retrieval.

- Indexing – Create indexes on columns used in filters and joins to speed up queries.

- Partitioning – Divide tables by date, region, or category so queries only read relevant data.

- Table clustering – Group related data physically close together on disk.

- Query optimization – Write efficient SQL, avoid SELECT *, use appropriate joins, filter early in queries.

- File formats – Use Parquet or ORC for columnar storage and compression.
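
Of the techniques above, partitioning is the easiest to show in a few lines. The sketch below is a conceptual plain-Python model with made-up event data. It only illustrates why a partition filter reduces the rows a query has to read, and it does not reflect how any particular engine lays out files.

```python
from collections import defaultdict

events = [
    {"event_date": "2024-01-01", "user": "a", "value": 3},
    {"event_date": "2024-01-01", "user": "b", "value": 5},
    {"event_date": "2024-01-02", "user": "a", "value": 7},
    {"event_date": "2024-01-03", "user": "c", "value": 1},
]

# "Write" the table partitioned by date: one bucket per partition value.
partitions = defaultdict(list)
for row in events:
    partitions[row["event_date"]].append(row)


def total_for_date(date):
    """Sum values for one date and report how many rows were scanned."""
    scanned = partitions.get(date, [])     # only the matching partition is read
    return sum(r["value"] for r in scanned), len(scanned)


def total_full_scan(date):
    """Same answer without partitioning: every row must be inspected."""
    return sum(r["value"] for r in events if r["event_date"] == date), len(events)
```

Both functions return the same total for `"2024-01-01"`, but the partitioned version scans 2 rows instead of 4. At production volumes that difference is what turns into lower query time and cost.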

6. Scalability and Elasticity

Design systems that grow with your needs without wasting money.

- Separate compute clusters – Create different clusters for different workloads: one for ETL, another for BI, another for data science.

- Auto-pause when idle – Automatically shut down compute resources when not in use to save costs.

- Auto-scaling – Add resources automatically when workload increases, remove them when demand drops.

- Resource isolation – Prevent one team’s heavy queries from slowing down another team’s work.
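
The auto-scaling and auto-pause rules above boil down to simple policies. The following sketch shows one possible form of that logic in plain Python. The function names and the "tasks per worker" sizing rule are assumptions made for illustration. In practice these behaviours are set through cluster configuration rather than hand-written code.

```python
import math


def target_workers(pending_tasks, tasks_per_worker, min_workers, max_workers):
    """Return the worker count for the current backlog, clamped to the limits."""
    if tasks_per_worker <= 0:
        raise ValueError("tasks_per_worker must be positive")
    needed = math.ceil(pending_tasks / tasks_per_worker)
    return max(min_workers, min(max_workers, needed))


def should_auto_pause(idle_minutes, pause_after_minutes):
    """Shut the cluster down once it has been idle long enough."""
    return idle_minutes >= pause_after_minutes
```

With limits of 1 to 8 workers and 10 tasks per worker, a backlog of 45 tasks yields 5 workers, an empty queue falls back to the minimum of 1, and a backlog of 500 is capped at 8. The cap is what keeps a runaway workload from producing a runaway bill.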

7. Monitoring and Operations

Track system health and costs to prevent problems.

- Alerting – Set up notifications for failures, slow queries, data quality issues, or security events.

- Resource usage monitoring – Track CPU, memory, storage, and network usage to identify bottlenecks.

- Cost monitoring – Watch spending on compute, storage, and data transfer to stay within budget.

- Query performance tracking – Identify slow queries and optimize them.

- Data quality monitoring – Check for missing data, schema changes, or anomalies.

- Audit logging – Record who accessed what data and when for security and compliance.

8. Key Design Principles

- Start simple – Begin with basic bronze-silver-gold zones. Add complexity only when needed.

- Plan for growth – Choose technologies that scale as data volume and users increase.

- Automate operations – Build automated monitoring, testing, and deployment to reduce manual work.

- Document decisions – Record why you chose specific tools, schemas, and patterns.

- Test thoroughly – Validate data quality, performance, and security before production use.

Successful lakehouse designs balance immediate needs with future requirements, avoid over-engineering, and focus on delivering value to business users quickly.

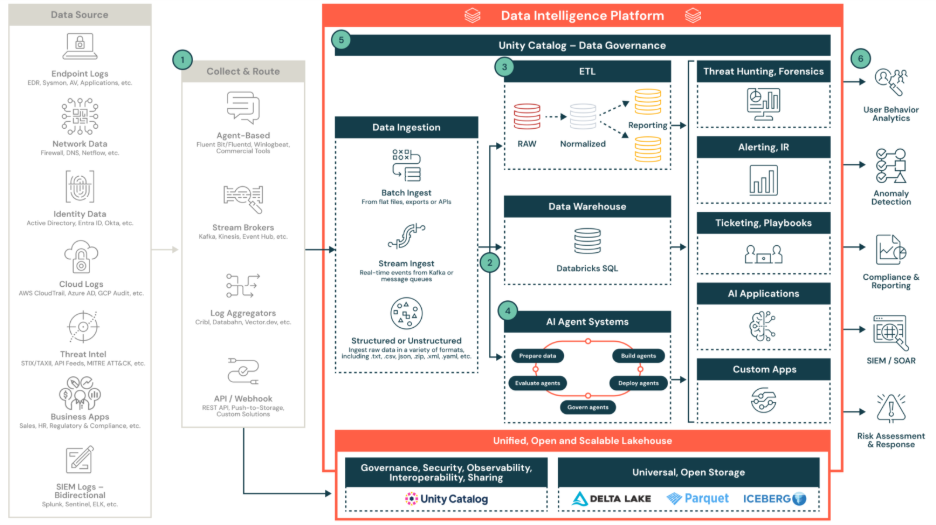

Governance, Security & Compliance in the Databricks Lakehouse Platform

Source – https://www.databricks.com/resources/architectures/reference-architecture-for-security-lakehouse

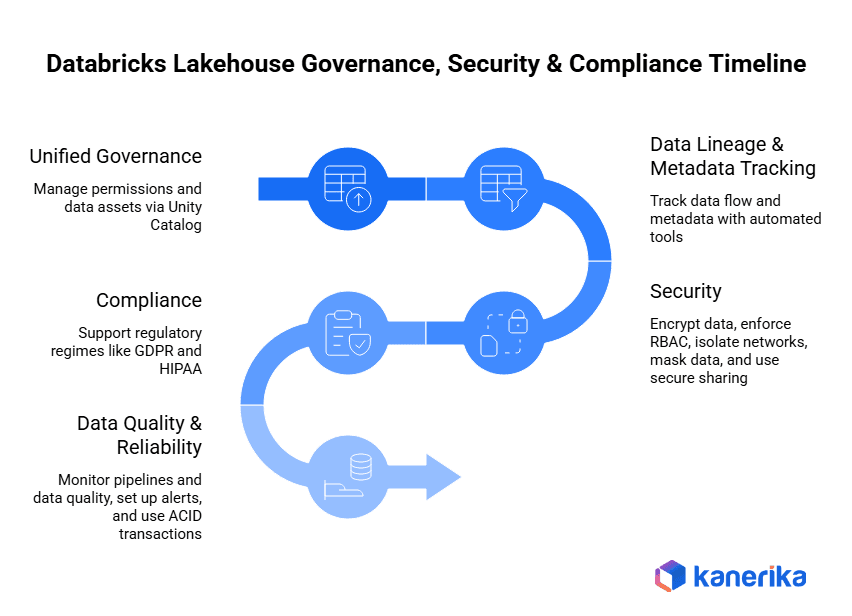

1. Unified Governance

Use a single interface to manage permissions, data assets, and analytics products via Unity Catalog. Centralised governance helps reduce data silos and keeps policies consistent across all data domains.

2. Data Lineage & Metadata Tracking

Track how data flows through ingestion, transformation, and consumption. Record metadata and lineage with automated tools so you can audit usage, track version changes, and build trust in your data platform.

3. Security

- Encrypt data at rest and in transit with cloud provider keys or customer-managed keys.

- Enforce role-based access control (RBAC) to restrict data and tool access by job role and team.

- Isolate networks with dedicated VPC configurations and private endpoints.

- Apply data-masking or anonymisation for sensitive records.

- Use secure sharing protocols (for example, live sharing of Delta tables) across domains.

These practices align with how Databricks describes its security-compliance framework.
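
Two of the practices above, role-based access control and data masking, can be illustrated together. The sketch below is plain Python with hypothetical roles, grants, and table names. It models the logic only and is not the Unity Catalog permission model or its API.

```python
# Hypothetical roles and grants, for illustration only.
GRANTS = {
    "analyst": {"sales.orders": {"SELECT"}},
    "engineer": {"sales.orders": {"SELECT", "MODIFY"}},
}
UNMASKED_ROLES = {"engineer"}          # roles allowed to see raw email addresses


def is_allowed(role, table, privilege):
    """Role-based check: deny unless the privilege was explicitly granted."""
    return privilege in GRANTS.get(role, {}).get(table, set())


def mask_email(email):
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def read_orders(role, rows):
    """Return rows for a role, masking sensitive columns where required."""
    if not is_allowed(role, "sales.orders", "SELECT"):
        raise PermissionError(f"{role} may not read sales.orders")
    if role in UNMASKED_ROLES:
        return [dict(r) for r in rows]
    return [{**r, "email": mask_email(r["email"])} for r in rows]


orders = [{"order_id": 1, "email": "ada@example.com"}]
```

An analyst calling `read_orders("analyst", orders)` sees `a***@example.com`, an engineer sees the full address, and an unknown role is refused outright. The deny-by-default rule in `is_allowed` is the important design choice: access exists only where it was granted.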

4. Compliance

Support regulatory regimes such as GDPR and HIPAA, as well as region-based data-residency rules. Choose multi-region or region-locked deployment options, and be clear about the shared-responsibility model: which controls the cloud provider, the platform, and your own teams are each accountable for.

5. Data Quality & Reliability

Monitor pipelines and data quality for accuracy, timeliness and completeness. Set up alerting when metrics fall outside defined thresholds. Maintain reliability by using ACID transactions (via Delta Lake), versioning and rollback capabilities in the lakehouse.
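
A threshold-based quality check of the kind described above might look like the following. This is a minimal sketch in plain Python. The metric definitions, the `loaded_at` field, and the threshold values are assumptions chosen for the example, not a Databricks feature.

```python
from datetime import datetime, timedelta


def quality_report(rows, required, now, max_age):
    """Compute completeness and timeliness for a batch of records."""
    total = len(rows)
    complete = sum(all(r.get(c) is not None for c in required) for r in rows)
    fresh = sum(now - r["loaded_at"] <= max_age for r in rows)
    return {
        "completeness": complete / total if total else 0.0,
        "timeliness": fresh / total if total else 0.0,
    }


def breaches(report, thresholds):
    """Return the metrics that fell below their minimum acceptable value."""
    return sorted(m for m, minimum in thresholds.items() if report[m] < minimum)


now = datetime(2024, 1, 2, 12, 0)
batch = [
    {"id": 1, "amount": 10.0, "loaded_at": now - timedelta(hours=1)},
    {"id": 2, "amount": None, "loaded_at": now - timedelta(hours=2)},   # incomplete
    {"id": 3, "amount": 7.5, "loaded_at": now - timedelta(days=3)},     # stale
    {"id": 4, "amount": 3.0, "loaded_at": now - timedelta(minutes=5)},
]
report = quality_report(batch, required=["id", "amount"], now=now, max_age=timedelta(days=1))
alerts = breaches(report, {"completeness": 0.9, "timeliness": 0.7})
```

In this batch both metrics come out at 0.75, so only `completeness` breaches its 0.9 threshold and would trigger an alert. Accuracy checks usually need a trusted reference to compare against, which is why they are left out of this sketch.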

Migrating to the Databricks Lakehouse

Organizations should consider migrating to the Databricks Lakehouse when they run legacy data warehouses, maintain separate lake and warehouse systems, or operate fragmented data environments. These setups tend to raise costs, delay insights, and limit scalability.

Migration starts with a detailed assessment of the current data landscape. Identify data sources, transformation jobs, data structures, and data requirements. Define performance objectives, map dependencies, and measure data quality. Then design the target Lakehouse architecture with Delta Lake as the storage layer and Databricks SQL as the analytics engine.

A pilot migration validates design decisions and surfaces problems early. Begin with a low-risk domain, run the old and new systems in parallel, and then proceed to full cut-over with verification. Many businesses use a staged rollout to minimize downtime and operational risk.

Databricks offers migration services, an extensive partner ecosystem, and accelerators to ease the process. Schema conversion, data ingestion, and ETL re-platforming are supported by open-source tools, which helps maintain compatibility between systems.

Risk management is crucial. Conduct data validation, reconciliation, and performance benchmarking at every stage. Implement governance controls early using Unity Catalog for metadata and access management.
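
Reconciliation is the most mechanical of these checks, so it is a good candidate for automation. The sketch below compares a legacy extract with a migrated one by key and by a per-row hash. It is plain Python over in-memory lists with made-up data. The function names are hypothetical, and a real run would read both sides from the actual systems.

```python
import hashlib


def row_fingerprint(row, columns):
    """Hash the selected columns in a fixed order so both systems hash alike."""
    payload = "|".join(str(row[c]) for c in columns)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key, columns):
    """Compare two extracts by key and report missing, extra, and changed rows."""
    src = {r[key]: row_fingerprint(r, columns) for r in source_rows}
    tgt = {r[key]: row_fingerprint(r, columns) for r in target_rows}
    return {
        "source_count": len(src),
        "target_count": len(tgt),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


legacy = [{"id": 1, "amount": 10}, {"id": 2, "amount": 20}, {"id": 3, "amount": 30}]
migrated = [{"id": 1, "amount": 10}, {"id": 2, "amount": 25}, {"id": 4, "amount": 40}]
result = reconcile(legacy, migrated, key="id", columns=["id", "amount"])
```

Note that the row counts match here (3 and 3) even though one row is missing, one is unexpected, and one has a changed amount. That is why a count comparison alone is not enough. One caveat: values must be normalized the same way on both sides before hashing, since `10` and `10.0` would produce different fingerprints.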

After migration, focus on optimization. Right-size clusters, fine-tune SQL queries, and decommission legacy systems. Enable advanced analytics and AI workloads through Databricks’ integrated platform.

An effective migration delivers scalable performance, consistent governance, and a unified platform for enterprise analytics and AI.

A proven strategy for low-risk migration

Migration is complex, but it does not have to be risky. Databricks offers a five-step approach, already followed by hundreds of customers, to make the transition smooth.

Best Practices & Pitfalls to Avoid in Databricks Lakehouse Architecture

Best Practices

Implementing the Databricks Lakehouse architecture successfully means following proven best practices and avoiding the mistakes that cause projects to fail. Companies that take a strategic approach see faster and better results.

1. Establish Clear Business Goals First

Identify the business outcomes you are targeting before choosing technology. Decide whether you need faster reporting, lower infrastructure spending, better data quality, or stronger AI capabilities. Quantify the expected benefits with measurable targets such as query performance goals or cost-reduction percentages. Clear goals guide architecture decisions and help teams prioritize features. Implementations without specific goals tend to drift toward technical complexity instead of business value.

2. Define Your Data Strategy Early

Align your lakehouse design with existing governance policies, cloud infrastructure, and planned AI projects before implementation. Map data flows from sources through transformations to consumption. Define naming, security, and quality standards in advance. Up-front strategy work avoids costly rework. Organizations that skip strategic planning tend to run into integration difficulties and governance gaps once the platform is in use.

3. Start with a Pilot Project

Starting with a small, low-risk project works better than moving everything at once. Select one department, data domain, or use case to begin with. This approach lets teams test performance assumptions, validate governance controls, and build expertise safely. Pilot projects also surface unforeseen problems before they affect critical systems. Successful pilots build confidence within the organization and provide tangible evidence to justify larger investment.

4. Involve Key Stakeholders Throughout

Involve IT infrastructure teams, data engineers, analytics users, and business leaders in every project phase. Each group brings a vital perspective that improves design decisions: data engineers know the technical requirements, while business users define reporting needs. Early stakeholder engagement also prevents misalignment between what is built and what users actually need. Regular communication keeps everyone aware of progress and upcoming changes.

5. Implement Governance from Day One

Set up metadata tracking, data lineage, and access controls with Unity Catalog from the start instead of treating governance as an afterthought. Before loading data, define data ownership, data classification levels, and data retention policies. Also establish approval processes for schema changes and access requests. Early governance prevents data swamps and compliance breaches. Companies that add governance retroactively face costly cleanup efforts and possible regulatory consequences.

6. Monitor Costs and Performance Continuously

Use Databricks cost management tools to monitor spending trends and find optimization opportunities. Set budget alerts to avoid unexpected charges from runaway queries or forgotten clusters. Track query performance metrics to identify slow operations that need optimization. Size compute clusters according to actual usage rather than initial estimates. Continuous monitoring enables proactive adjustments that keep costs predictable and performance acceptable.

7. Iterate and Optimize Continuously

Treat lakehouse implementation as a continuous process rather than a one-time project. Periodically review workload trends, adjust cluster configurations, and refine data models based on real usage. Collect user feedback on pain points and areas for improvement. Keep up with new Databricks features that might improve functionality or lower costs. Companies committed to continuous improvement get the most from their lakehouse investment over the long term.

Common Pitfalls to Avoid

1. Treating Lakehouse as Just Another Data Lake

The mistake: Implementing Databricks without governance, quality checks, or proper organization, which produces another data swamp rather than a controlled lakehouse.

The effect: Teams cannot find trustworthy data, duplicate datasets emerge, and users lose confidence in the platform. Organizations end up re-creating the very problems they were trying to escape.

The solution: Apply Unity Catalog governance, enforce data quality standards, and establish clear data organization patterns from the very beginning. Treat the lakehouse as a managed business platform.

2. Ignoring Governance and Security Requirements

The mistake: Postponing governance configuration until after data has been migrated, treating security as optional, or granting overly permissive access.

The effect: Data leaks expose confidential information, compliance failures lead to regulatory fines, and audit failures harm the organization’s reputation. Retrofitting security costs far more than building it in from the start.

The solution: Define security policies, access controls, and compliance requirements during architecture design. Apply row-level security, column masking, and audit logging before loading production data.

3. Poor Cost Planning and Monitoring

The mistake: Not monitoring cloud resource consumption, leaving idle clusters running, or operating without budget guardrails.

The effect: Cloud charges can run two to three times higher than expected, causing budget crises. When costs get out of control, executives lose faith in the platform, and projects may be cancelled even when they succeed technically.

The solution: Add cost allocation tags, automatic cluster termination, and spending alerts, and review usage patterns weekly. Consider serverless options for unpredictable workloads.

4. Skipping Data Quality Validation

The mistake: Moving data without profiling its quality, assuming that source systems hold correct information, or failing to reconcile the old and new systems.

The effect: Business decisions are made on wrong information, which causes costly errors. Contradictory reports destroy user trust. Data quality problems discovered after migration require expensive cleanup projects.

The solution: Profile source data for quality problems before migration. Introduce automated quality checks that confirm completeness, accuracy, and consistency. Reconcile migrated data against source systems to prove it is correct.

5. Neglecting User Training and Change Management

The mistake: Introducing a new platform without proper user training, assuming that teams will figure it out on their own, or underestimating the learning curve.

The effect: Users keep working around the new system with spreadsheets and old tools. Adoption stays low despite heavy investment. Teams resist the change instead of embracing the new capabilities.

The solution: Design comprehensive training programs for each user type. Offer hands-on training, documentation, and ongoing support. Identify champions within teams who can teach others the platform.

6. Over-Engineering the Initial Implementation

The mistake: Designing overly complicated architectures in an attempt to address every future need on day one.

The effect: Projects take too long to deliver first value. Complexity overwhelms teams, making even simple tasks feel difficult. Maintenance costs climb as well.

The solution: Begin with the minimum architecture that meets present requirements. Introduce complexity gradually as real needs become known. Simpler designs are easier to understand, maintain, and extend.

7. Insufficient Performance Testing

The mistake: Testing only on small datasets, skipping peak-load tests, or testing queries one at a time instead of under realistic concurrent load.

The effect: Production systems slow down drastically under real workloads. Month-end processing causes business-critical reports to time out. Users abandon the platform and return to legacy tools.

The solution: Before going live, test with production-scale data volumes. Simulate concurrent user loads that match expected usage patterns. Benchmark before launch and tune performance against agreed SLAs.
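
A concurrent load test does not need special tooling to get started. The sketch below uses Python’s standard `concurrent.futures` module to run many queries in parallel and compute a 95th-percentile latency. The `fake_query` function is a stand-in that only sleeps. You would replace it with a call to your own SQL client, and the one-second SLA is a hypothetical figure.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_query(query_id):
    """Stand-in for a real query call; replace with your own client code."""
    time.sleep(0.01)
    return query_id


def timed(run_query, query_id):
    """Run one query and return how long it took in seconds."""
    start = time.perf_counter()
    run_query(query_id)
    return time.perf_counter() - start


def load_test(run_query, total_queries, concurrency):
    """Run queries in parallel and return sorted per-query latencies in seconds."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(lambda q: timed(run_query, q), range(total_queries)))
    return sorted(latencies)


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted list."""
    rank = max(1, -(-len(sorted_values) * pct // 100))   # ceiling division
    return sorted_values[rank - 1]


latencies = load_test(fake_query, total_queries=40, concurrency=8)
p95 = percentile(latencies, 95)
meets_sla = p95 <= 1.0          # hypothetical SLA: 95% of queries within 1 second
```

Reporting a high percentile rather than the average matters here: a month-end report that times out shows up in the tail of the distribution, not in the mean. Raising `concurrency` toward the expected number of simultaneous users shows how that tail behaves under realistic load.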

Lakehouse Implementation Checklist

1. Strategic Planning

- Business objectives documented with measurable success criteria.

- Data strategy aligned with enterprise architecture.

- Stakeholder engagement plan with effective communication channels.

2. Governance Foundation

- Unity Catalog configured with appropriate access controls.

- Data classification and retention policies defined.

- Lineage tracking enabled for all data flows.

3. Technical Implementation

- Pilot project completed successfully.

- Performance tested at scale.

- Cost monitoring and alerting configured.

4. User Enablement

- Training programs developed for each user persona.

- Documentation written and accessible.

- Support processes in place.

5. Operational Readiness

- Monitoring dashboards for key metrics.

- Incident response procedures documented.

- Continuous improvement process defined.

Following these best practices and avoiding the common pitfalls significantly improves the chances of a successful Databricks Lakehouse adoption. Organizations that invest time in proper planning, governance, and user enablement achieve faster time-to-value and greater ROI on their lakehouse investment.

Case Study: Improving Sales Intelligence with Databricks-Driven Data Workflows

The client is a fast-growing AI-based sales intelligence platform that gives go-to-market teams real-time insights about companies and industries. Their system collected large amounts of unstructured data from the web and documents, but their existing tools could not keep up with the growing volume. They used a mix of MongoDB, Postgres, and older JavaScript processing, which made it hard to scale and deliver fast results.

Client’s Challenges

The company faced several problems with its data workflows:

- Old document processing logic in JavaScript made updates hard and slow.

- Data was stored in different systems that did not work well together, which made it hard to get reliable insights quickly.

- Handling unstructured PDFs and metadata required a lot of manual work and took a long time.

Kanerika’s Solution

To fix these issues, Kanerika:

- Rebuilt the document processing workflows in Python using Databricks to make them faster and easier to manage.

- Connected all data sources into Databricks so teams could get one clear view of data.

- Cleaned up the PDF, metadata, and classification processes so the system worked more smoothly and delivered results faster.

Key Outcomes

- 80% faster processing of documents.

- 95% improvement in metadata accuracy.

- 45% quicker time to get insights for users.

Kanerika: Driving Business Growth with Smarter Data and AI Solutions

Kanerika helps businesses make sense of their data using cutting-edge AI, machine learning, and strong data governance practices. With deep expertise in agentic AI and advanced AI/ML data analytics, we work with organizations to build smarter systems that adapt, learn, and drive decisions with precision.

We support a wide range of industries like manufacturing, retail, finance, and healthcare in boosting productivity, reducing costs, and making better use of their resources. Whether it’s automating complex processes, improving supply chain visibility, or streamlining customer insights, Kanerika helps clients stay ahead.

Our partnership with Databricks strengthens our offerings by giving clients access to powerful data intelligence tools. Together, we help enterprises handle large data workloads, ensure data quality, and get faster, more actionable insights.

At Kanerika, we believe innovation starts with the right data. Our solutions are built not just to solve today’s problems but to prepare your business for what’s next.

FAQs

1. What is Databricks Lakehouse Architecture?

Databricks Lakehouse Architecture is a unified data management platform that combines the best features of data lakes and data warehouses. It allows organizations to store, process, analyze, and govern all types of data (structured, semi-structured, and unstructured) on a single, scalable system.

2. How does a Lakehouse differ from traditional data warehouses or data lakes?

Traditional data warehouses focus on structured data and BI, while data lakes handle raw, unstructured data. The Lakehouse merges both, offering the flexibility of a data lake with the performance and governance of a warehouse, which reduces complexity and cost.

3. What are the core components of the Databricks Lakehouse?

The key components include Delta Lake (for reliable storage), Unity Catalog (for governance and metadata management), Databricks SQL (for analytics), and MLflow (for machine learning lifecycle management).

4. Why is the Lakehouse becoming central to enterprise data strategy?

The Lakehouse unifies data, analytics, and AI on a single platform. It reduces complexity, improves collaboration, and accelerates innovation. In 2026, enterprises see the Lakehouse as a foundation for long-term data modernization and AI readiness.

5. How does the Lakehouse help enterprises scale securely?

The Lakehouse architecture scales across large data volumes while enforcing security through centralized policies. Fine-grained access control, encryption, and monitoring protect sensitive data. This enables growth without compromising compliance or operational stability.

6. What best practices should enterprises follow when adopting the Lakehouse?

Start with clear goals, define a data governance framework, run pilot projects, monitor cost and performance, and train teams early. View Lakehouse adoption as both a technical and cultural transformation.