Artificial Intelligence and Natural Language Processing (NLP) have become an integral part of many technological applications today, and for good reason. From powering intelligent chatbots to providing critical insights through sentiment analysis, they have transformed how we interact with machines.

However, the real game-changer in the NLP landscape has been the advent of Generative Pre-trained Transformers, commonly known as GPT models. These models have not only enhanced the capabilities of existing applications but have also opened doors to new possibilities in the realm of artificial intelligence. This comprehensive guide aims to provide a deep dive into the intricacies of GPT models, covering everything from their basic architecture to advanced optimization techniques. Whether you are a seasoned data scientist or a curious enthusiast, this guide will equip you with the knowledge you need to harness the power of GPT models effectively.

What is the GPT Model?

Definition and Origin

At its core, the Generative Pre-trained Transformer or GPT model is built upon the Transformer architecture, initially designed for translation tasks but later adapted for a broader range of NLP applications. Unlike traditional models that require task-specific training data, these models are pre-trained on a massive corpus of text data and then fine-tuned to perform specific tasks. This two-step process of pre-training and fine-tuning has made GPT models a go-to solution for a multitude of NLP challenges.

Evolution of GPT Models

The GPT series traces a remarkable arc in the evolution of natural language processing. It began with GPT-1, which had roughly 117 million parameters and could perform foundational tasks like text completion with a new level of proficiency.

Then came GPT-2, marking a significant leap with its 1.5 billion parameters. This enhancement broadened the model’s capabilities, improving the quality and diversity of text generation. It began to handle more complex tasks with greater contextual understanding, setting a new standard in AI language models.

GPT-3 further revolutionized the landscape with a staggering 175 billion parameters. This leap forward expanded the scope of what’s possible in NLP to an unprecedented degree. GPT-3’s prowess in tasks such as summarization, translation, question-answering, and code generation demonstrated its versatility and power. Notably, GPT-3’s advanced capabilities, vast parameter count, and sophisticated context window management redefined benchmarks in NLP, making it a highly sought-after model for a multitude of applications.

GPT-4 introduced significant improvements over GPT-3, although OpenAI has not publicly disclosed its parameter count. These refinements led to enhanced performance, notably in its understanding of nuanced instructions, multi-modal capabilities (accepting both text and images as input), and a larger context window that allows for more coherent and contextually aware responses. GPT-4 marked another milestone in the journey of AI, delivering more precise and contextually relevant outputs.

GPT-4o, released by OpenAI in May 2024, takes language interaction to a new level. It offers a significant upgrade in understanding complex language, allowing for more natural and nuanced conversations. But that’s not all! It can also process and generate audio and images, opening doors for creative tasks. The speed improvements make interactions smoother, while its availability on both free and paid tiers makes this powerful tool accessible to a wider audience.

Overview of GPT Models

Architectural Components

Built on the Transformer architecture, these models consist of multiple layers of transformer blocks, each containing self-attention mechanisms and feed-forward neural networks. This design enables GPT models to capture long-range dependencies in the text, making them highly effective for a variety of NLP tasks.

Features and Capabilities

One of the standout features of GPT models is their ability to generate human-like text. This is achieved through a mechanism known as “autoregression,” where the model generates one token at a time and uses its previous outputs as inputs for future predictions. This sequential generation process allows for the creation of coherent and contextually relevant text, setting GPT models apart from many other machine learning models in the NLP domain.
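
To make the idea of autoregression concrete, here is a minimal sketch in plain Python. The lookup table `NEXT_TOKEN_PROBS` is a hypothetical, hand-written stand-in for a trained model, and the sketch conditions only on the last token, whereas a real GPT model attends to the whole prefix.

```python
# Hypothetical next-token probabilities standing in for a trained model.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 0.9, "a": 0.1},
    "the": {"model": 0.7, "text": 0.3},
    "model": {"generates": 0.8, "<end>": 0.2},
    "generates": {"text": 0.9, "<end>": 0.1},
    "text": {"<end>": 1.0},
}


def generate(max_tokens=10):
    tokens = ["<start>"]
    for _ in range(max_tokens):
        # Condition on what has been produced so far (here: only the last token).
        candidates = NEXT_TOKEN_PROBS[tokens[-1]]
        # Greedy choice: pick the most probable next token.
        next_token = max(candidates, key=candidates.get)
        if next_token == "<end>":
            break
        # The new output becomes part of the input for the next step.
        tokens.append(next_token)
    return tokens[1:]


generated = generate()
print(" ".join(generated))
```

Each pass through the loop produces exactly one token and feeds it back in, which is the essence of sequential generation.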

Another remarkable capability of GPT models is their versatility. Due to their pre-training on diverse and extensive text corpora, these models can be fine-tuned to excel in specific tasks or industries. Whether it’s generating creative writing, answering questions in a customer service chatbot, or even composing music, the possibilities are virtually endless.

The evolution from GPT-1 to the latest versions has also seen significant improvements in efficiency and performance. Advanced optimization techniques and hardware accelerators like GPUs have made it feasible to train your own GPT model and deploy it, opening up new avenues for research and application.

Working Mechanism of GPT Models

Information Processing Flow

Understanding the working mechanism of GPT models is crucial for anyone looking to harness their capabilities effectively. At the heart of these models is the process of information flow, which begins with the input data. The data, usually in the form of text, is first tokenized into smaller pieces, often words or subwords. These tokens are then converted into vectors using embeddings, which capture the semantic meaning of each token.
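
The tokenize-then-embed flow can be sketched as follows. This is a simplified illustration: the whitespace tokenizer and the randomly initialized embedding table are assumptions made for the example, while production GPT models use subword tokenizers (such as byte-pair encoding) and learn their embeddings during training.

```python
import random


def tokenize(text):
    # Naive whitespace tokenizer, used here only for illustration.
    return text.lower().split()


def build_vocab(tokens):
    # Map each distinct token to an integer id, in order of first appearance.
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def init_embeddings(vocab_size, dim, seed=0):
    # One small random vector per vocabulary entry; a real model learns these.
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(vocab_size)]


tokens = tokenize("The model reads the text")
vocab = build_vocab(tokens)
token_ids = [vocab[t] for t in tokens]
embeddings = init_embeddings(len(vocab), dim=4)
vectors = [embeddings[i] for i in token_ids]

print(token_ids)  # [0, 1, 2, 0, 3]
```

Note that both occurrences of "the" map to the same id and therefore to the same vector; it is the later attention layers that make the representation context-dependent.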

Decoding Phase

Once the data is prepared, it is fed into the GPT model, which consists of multiple layers of transformer blocks. Each block contains self-attention mechanisms and feed-forward neural networks. The self-attention mechanism allows the model to weigh the importance of different parts of the input text, enabling it to capture long-range dependencies and relationships between words or phrases. This is particularly useful for tasks that require understanding the context, such as question-answering or summarization.
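
As a rough sketch of what self-attention computes, the snippet below implements scaled dot-product attention with a causal mask in plain Python. It is a simplification under stated assumptions: a single attention head, and the same vectors used as queries, keys, and values, whereas a real transformer block applies learned projections first.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position i attends only to positions <= i."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Similarity between the current token and every token up to and including it.
        scores = [
            sum(qc * kc for qc, kc in zip(q, keys[j])) / math.sqrt(d_k)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [
            sum(w * values[j][c] for j, w in enumerate(weights))
            for c in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights sums to one and tells you how much the token at that position draws on each earlier token, which is how the model relates words that are far apart.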

The feed-forward neural networks in each transformer block further process the data, applying nonlinear transformations that help the model make complex decisions. As the data passes through each layer, the model learns to generate more accurate and contextually relevant outputs.

One of the key steps in the working of GPT models is the decoding phase, where the model generates output text. This is done using an autoregressive mechanism, where the model produces one token at a time, using its previous outputs as context for generating future tokens. This ensures that the generated text is coherent and contextually appropriate.

How to Choose the Right GPT Model for Your Needs

Factors to Consider

Choosing the right GPT model for your specific needs involves a careful evaluation of several factors. The first and perhaps most obvious is the size of the model, usually indicated by the number of parameters it contains. Larger models like GPT-3 offer stronger performance but come with increased computational requirements and costs. If your task is relatively simple or you’re constrained by resources, smaller versions like GPT-2 or even DistilGPT2 may suffice.

Fun Fact – The “Distil” in DistilGPT2 refers to “knowledge distillation,” where the model plays the role of a “student” learning from a larger “teacher” model. Even though it’s like a mini-me version of GPT-2, DistilGPT2 can still deliver impressively accurate results while being faster and more efficient!

Comparative Analysis of Different Versions

It’s also beneficial to compare different versions of GPT models to understand their strengths and limitations. For instance, while GPT-3 excels in tasks that require a deep understanding of context, GPT-2 might be more than adequate for simpler tasks like text generation or summarization. Some specialized versions are optimized for specific tasks, offering a middle ground between performance and computational efficiency.

What Makes a Good GPT Model?

The quality of a GPT model can be evaluated based on several criteria. Accuracy is often the most straightforward metric, but it’s not the only one. Other factors like computational efficiency, scalability, and the ability to generalize across different tasks are also crucial. Moreover, the interpretability of the model’s predictions can be vital in applications where understanding the reasoning behind decisions is important.

Factors that Contribute

- Model Size: A larger model with more parameters often means better performance, as it can capture more complex patterns and dependencies in the data. However, there is a trade-off with computational efficiency.

- Training Data: The quality and diversity of the training data play a crucial role. Data should be well-curated, diverse, and representative of the tasks the model can perform.

- Architecture: The underlying architecture, like the Transformer structure in the case of GPT, should be capable of handling the complexities involved in natural language understanding and generation.

- Self-Attention Mechanism: The self-attention mechanisms should effectively weigh the importance of the input, capturing long-range dependencies and relationships between words or phrases.

- Training Algorithms: Effective optimization algorithms and training procedures are essential for the model to learn efficiently.

- Fine-Tuning Capabilities: The ability to fine-tune the model on specific tasks or datasets can enhance its performance and applicability.

- Low Latency & High Throughput: For real-world applications, the model should be able to provide results quickly and handle multiple requests simultaneously.

- Robustness: A good GPT model should be robust to variations in input, including handling ambiguous queries or coping with grammatical errors in the text.

- Coherence and Contextuality: The model should generate outputs that are coherent and contextually appropriate, especially in tasks that require generating long-form text.

- Ethical and Fair: It should minimize biases and not generate harmful or misleading information.

- Resource Efficiency: While larger models may offer better performance, resource efficiency is also a key factor, especially for deployment in constrained environments.

Interpretability of Model Predictions

Interpretability is another crucial factor, especially for applications where understanding the model’s decision-making process is important. Techniques like LIME (Local Interpretable Model-agnostic Explanations) or SHAP (SHapley Additive exPlanations) can be used to understand how the model is making its predictions, providing valuable insights into its behavior.

Beyond interpretability, quantitative metrics help you judge the quality of the model’s output:

- Perplexity: It is a measure of how well a language model predicts a given sequence of words. It captures the uncertainty of the model’s predictions and is computed as the exponential of the average negative log-probability that the model assigns to each token in the test set. A lower perplexity score indicates that the model is better at predicting the next word in the sequence.

- BLEU: Bilingual Evaluation Understudy or BLEU is a metric commonly used in machine translation to evaluate the quality of generated text. It measures the similarity between the generated text and a reference text, based on n-gram overlap. A higher BLEU score indicates that the generated text is more similar to the reference text.

Evaluating a GPT model’s performance is not just about looking at its accuracy or loss metrics. Depending on the specific NLP task, different metrics might be more appropriate. For instance, for translation tasks, BLEU scores are often used, while for text summarization, ROUGE scores might be more relevant.
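
The two metrics described above can be illustrated with a short sketch. The probabilities and sentences are made-up inputs, and `ngram_precision` covers only the clipped n-gram overlap at the heart of BLEU; the full metric also combines several n-gram orders and applies a brevity penalty.

```python
import math
from collections import Counter


def perplexity(token_probs):
    """Perplexity from the probability the model assigned to each actual next token."""
    avg_neg_log_likelihood = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_likelihood)


def ngram_precision(candidate, reference, n):
    """Fraction of candidate n-grams that also occur in the reference (clipped counts)."""
    cand = Counter(tuple(candidate[i:i + n]) for i in range(len(candidate) - n + 1))
    ref = Counter(tuple(reference[i:i + n]) for i in range(len(reference) - n + 1))
    overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
    total = sum(cand.values())
    return overlap / total if total else 0.0


confident = perplexity([0.5, 0.5, 0.5, 0.5])   # about 2: like choosing between 2 options
uncertain = perplexity([0.1, 0.1, 0.1, 0.1])   # about 10: like choosing between 10 options

candidate = "the cat sat on the mat".split()
reference = "the cat is on the mat".split()
unigram = ngram_precision(candidate, reference, 1)
bigram = ngram_precision(candidate, reference, 2)
print(confident, uncertain, unigram, bigram)
```

A handy way to read perplexity is as the effective number of equally likely choices the model is weighing at each step.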

Importance of Domain-Specific Knowledge in Training a GPT Model

While GPT models are designed to be versatile, incorporating domain-specific knowledge can significantly enhance their performance in specialized tasks. For instance, a GPT model trained on medical literature is likely to be more effective at supporting diagnostic tasks than a generic model. Similarly, a model trained on legal documents will be better suited for tasks like contract analysis or legal research.

Prerequisites to Build a GPT Model

Hardware and Software Requirements

Before you can start building a GPT model, you’ll need to ensure that you have the necessary hardware and software. High-performance GPUs are generally recommended for training these models, as they can significantly speed up the process. Some of the popular choices include NVIDIA’s Tesla, Titan, or Quadro series, along with newer data-center GPUs such as the A100 and H100. Alternatively, cloud-based solutions offer scalable computing resources and are a viable option for those who do not wish to invest in dedicated hardware.

On the software side, you’ll need a machine-learning framework that supports the Transformer architecture. TensorFlow and PyTorch are the most commonly used frameworks for this purpose. Additionally, you’ll need various libraries and tools for tasks like data preprocessing, model evaluation, and deployment.

Introduction to Datasets

The quality of your dataset is a critical factor that can make or break your GPT model. For pre-training, a large and diverse text corpus is ideal. This could range from Wikipedia articles and news stories to books and scientific papers. The goal is to expose the model to a wide variety of language constructs and topics.

For fine-tuning, the dataset should be highly specific to the task you want the model to perform. For instance, if you’re building a chatbot, transcripts of customer service interactions would be valuable. If it’s a medical diagnosis tool, then clinical notes and medical records would be more appropriate.

How to Create a GPT Model

Steps to Initialize a Model

Creating a GPT model from scratch is a complex but rewarding process. The first step is to initialize your GPT model architecture. This involves specifying the number of layers, the size of the hidden units, and other hyperparameters like learning rate and batch size. These settings can significantly impact the model’s performance, so it’s crucial to choose them carefully based on your specific needs and the resources available to you.
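
One way to pin down these choices is a small configuration object. The sketch below is illustrative: the class name `GPTConfig` is hypothetical, the architecture defaults mirror the published shape of the smallest GPT-2 model, and the learning rate and batch size are placeholder values. The parameter estimate ignores biases and layer-normalization weights, so treat it as a rough guide.

```python
from dataclasses import dataclass


@dataclass
class GPTConfig:
    n_layer: int = 12            # number of transformer blocks
    d_model: int = 768           # size of the hidden units
    n_head: int = 12             # attention heads per block
    vocab_size: int = 50257
    context_length: int = 1024
    learning_rate: float = 3e-4  # placeholder value
    batch_size: int = 32         # placeholder value

    def approx_parameters(self):
        # Per block: about 4*d^2 for attention plus 8*d^2 for the feed-forward network.
        per_block = 12 * self.d_model ** 2
        # Token embeddings plus learned position embeddings.
        embeddings = (self.vocab_size + self.context_length) * self.d_model
        return self.n_layer * per_block + embeddings


config = GPTConfig()
# The hidden size must split evenly across the attention heads.
assert config.d_model % config.n_head == 0
print(f"{config.approx_parameters():,}")  # 124,318,464
```

Doubling `d_model` roughly quadruples the per-block cost, which is why hidden size and layer count dominate both memory and training time.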

It’s not just about the architecture; it’s also about planning and domain-specific data. Before you even start initializing your model, you need to have a clear plan and a dataset that aligns with your specific needs. This planning stage is often overlooked but is crucial for the success of your model.

Setting Up the Environment and Required Tools

Before you start the training process, you’ll need to set up your development environment. This involves installing the necessary machine learning frameworks and libraries, such as TensorFlow or PyTorch, and setting up your hardware or cloud resources. You’ll also need various data preprocessing tools to prepare your dataset for training. These could include text tokenizers, data augmentation libraries, and batch generators.

Setting up the environment is not just about installing the right tools; it’s also about ensuring you have the computational resources to handle the training process. Whether it’s robust computing infrastructure or cloud-based solutions, make sure you have the resources to meet the computational demands of training and fine-tuning your model.

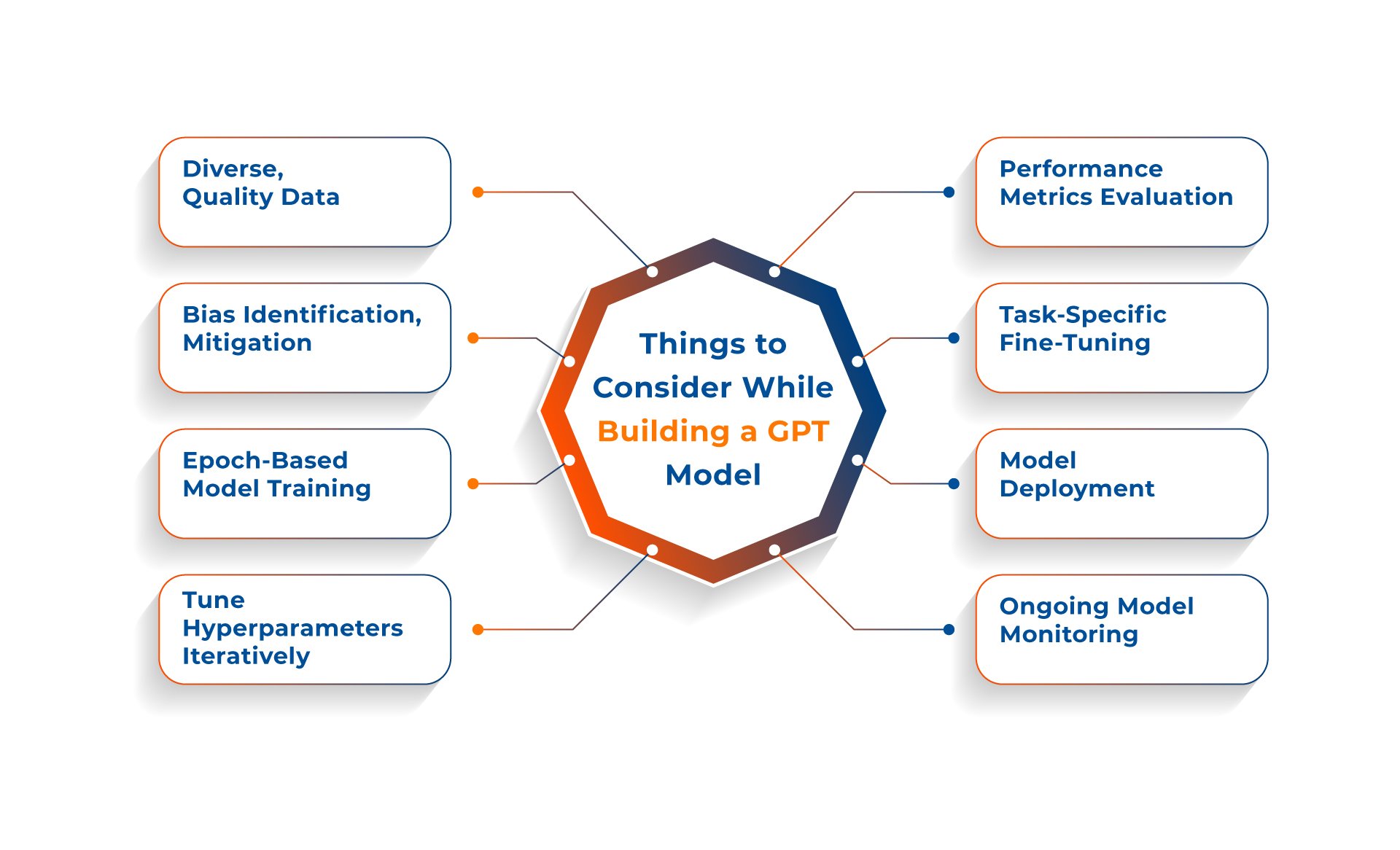

Things to Consider While Building a GPT Model

Data Quality and Diversity

The quality and diversity of your training data can significantly impact your model’s performance. It’s crucial to use a dataset that is both large and diverse enough to capture the various nuances and complexities of the language. This is especially important for tasks that require a deep understanding of context or specialized knowledge.

Handling Biases in the Data

Another critical consideration is the potential for biases in your training data. Biases can manifest in many forms, such as gender bias, racial bias, or even bias toward certain topics or viewpoints. It’s essential to identify and mitigate these biases during the data preprocessing stage to ensure that your model performs fairly and accurately across diverse inputs.

Training the Model

Once your environment is set up, and you have your domain-specific data ready, the next step is to train your model. Training involves feeding your prepared dataset into the initialized model architecture and allowing the model to adjust its internal parameters based on the data. This is usually done in epochs, where one epoch represents one forward and backward pass of all the training examples.

Hyperparameter Tuning

As you iterate on training runs, you may need to adjust hyperparameters like learning rate, dropout rate, or weight decay to improve performance. This process can be iterative and may involve running multiple training sessions with different settings to find the optimal combination of hyperparameters.

Model Evaluation

After training is complete, you should evaluate your model’s performance using metrics such as accuracy, precision, recall, or F1-score, depending on your specific application. It’s also crucial to run your model on a held-out set that it has not seen during training to gauge its generalization capability.
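
For classification-style tasks, these metrics are straightforward to compute by hand. Here is a minimal sketch with made-up labels; the function name and the sample data are illustrative only.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = [1, 0, 1, 1, 0, 1]  # labels from a held-out set
y_pred = [1, 0, 0, 1, 1, 1]  # model predictions on the same examples
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)
```

Precision tells you how many of the predicted positives were right, recall how many of the actual positives were found, and F1 balances the two.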

Fine-Tuning for Specific Tasks

If you’ve built a generic GPT model, you may need to fine-tune it on a more specialized dataset to perform specific tasks effectively. Fine-tuning involves running additional training cycles on a smaller, domain-specific dataset.

Deployment

The final step is deploying your trained and fine-tuned GPT model into a real-world application. This could range from integrating it into a chatbot to using it for data analysis or natural language processing tasks. Ensure that your deployment environment meets the computational demands of your model.

Monitoring and Maintenance

After deployment, continuously monitor the model’s performance and make adjustments as needed. Data drift, where the distribution of the data changes over time, may require you to retrain or fine-tune your model periodically.

Pre-Training Your GPT Model

Pre-training serves as the foundational phase for GPT models, where the model is trained on a large, general-purpose dataset. This allows the model to learn the basic structures of the language, such as syntax, semantics, and even some world knowledge. The significance of pre-training cannot be overstated; it sets the stage for the model’s later specialization through fine-tuning. Essentially, pre-training imbues the model with a broad understanding of language, making it capable of generating and understanding text in a coherent manner.

The quality of the dataset used for pre-training is crucial. It must be diverse and extensive enough to cover various aspects of language. The data should also be clean and well-preprocessed to ensure that the model learns effectively.

Methods and Strategies for Effective Pre-Training

Effective pre-training is not just about feeding data into the model; it’s about doing so in a way that maximizes the model’s ability to learn and generalize. Here are some strategies to make pre-training more effective:

Data Augmentation

Data augmentation involves modifying the existing data to create new examples. This can be done through techniques like back translation, synonym replacement, or sentence shuffling. Augmenting the data can enhance the model’s ability to generalize to new, unseen data.
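
Synonym replacement, for instance, can be sketched in a few lines. The `SYNONYMS` dictionary here is a tiny hypothetical lookup table; in practice you would draw on a thesaurus resource or a model, and you would check that replacements preserve the meaning.

```python
import random

# Hypothetical synonym table for illustration only.
SYNONYMS = {
    "quick": ["fast", "rapid"],
    "happy": ["glad", "cheerful"],
}


def synonym_replace(sentence, rng, prob=1.0):
    """Replace each word that has known synonyms with probability `prob`."""
    words = []
    for word in sentence.split():
        options = SYNONYMS.get(word.lower())
        if options and rng.random() < prob:
            words.append(rng.choice(options))
        else:
            words.append(word)
    return " ".join(words)


original = "the quick customer was happy"
augmented = synonym_replace(original, random.Random(0))
print(augmented)
```

Lowering `prob` keeps more of the original wording, which lets you control how far the augmented examples stray from the source sentence.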

Layer Normalization and Gradient Clipping

These are techniques used to stabilize the training process. Layer normalization involves normalizing the inputs in each layer to have a mean of zero and a standard deviation of one, which can speed up training and improve generalization. Gradient clipping involves limiting the value of gradients during backpropagation to prevent “exploding gradients,” which can destabilize the training process.
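
Both techniques are simple to express in code. The sketch below is a plain-Python illustration: it omits the learned scale and shift parameters that layer normalization normally includes, and it clips by the overall gradient norm, which is one common variant of gradient clipping.

```python
import math


def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and (approximately) unit standard deviation."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def clip_by_global_norm(grads, max_norm):
    """Rescale gradients so their overall L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]


normalized = layer_norm([2.0, 4.0, 6.0, 8.0])
clipped = clip_by_global_norm([3.0, 4.0], max_norm=1.0)  # norm 5 is scaled down to 1
print(normalized)
print(clipped)
```

Clipping by norm preserves the direction of the gradient and only shortens it, so a single bad batch cannot throw the parameters far off course.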

Use of Attention Mechanisms

Attention mechanisms, particularly the self-attention at the core of the Transformer architecture, have been pivotal in the success of GPT models. They allow the model to weigh different parts of the input text differently, enabling it to capture long-range dependencies and relationships in the data.

Monitoring Metrics

Just like in the fine-tuning phase, monitoring metrics like loss and accuracy during pre-training is crucial. This helps in understanding how well the GPT model is learning and whether any adjustments to the training process are needed.

By employing these methods and strategies, you can ensure that the pre-training phase is as effective as possible, setting a strong foundation for subsequent fine-tuning and application-specific training.

Steps to Train a GPT Model

Detailed Walkthrough of the Training Process

Training a GPT model is not a trivial task; it requires a well-thought-out approach and meticulous execution. Once you’ve preprocessed your data and configured your model architecture, you’re ready to begin the training phase. Typically, the data is divided into batches, which are fed into the model one iteration at a time; a full pass over all the batches is called an epoch. After each iteration, the model’s parameters are updated based on a loss function, which quantifies how well the model’s predictions align with the actual data.
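
The structure of such a loop is the same whether you are fitting a one-parameter toy model or a large transformer. The sketch below deliberately uses a toy: it learns the single weight in y = w * x with mini-batch gradient descent. The data, learning rate, and epoch count are illustrative assumptions.

```python
import random


def train(data, epochs=50, batch_size=2, learning_rate=0.05, seed=0):
    rng = random.Random(seed)
    w = 0.0
    history = []
    for _ in range(epochs):
        rng.shuffle(data)  # new batch composition every epoch
        epoch_loss = 0.0
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Forward pass: prediction errors and mean squared error for this batch.
            errors = [w * x - y for x, y in batch]
            loss = sum(e * e for e in errors) / len(batch)
            # Backward pass: gradient of the loss with respect to w.
            grad = sum(2 * e * x for e, (x, _) in zip(errors, batch)) / len(batch)
            # Parameter update.
            w -= learning_rate * grad
            epoch_loss += loss * len(batch)
        history.append(epoch_loss / len(data))
    return w, history


data = [(x, 2.0 * x) for x in [0.5, 1.0, 1.5, 2.0]]
w, history = train(list(data))
print(round(w, 4), history[0], history[-1])
```

The per-epoch loss stored in `history` is exactly the kind of signal you would watch to confirm that training is making progress.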

Batch Size and Learning Rate

Choosing the right batch size and learning rate is crucial. A smaller batch size often provides a regularizing effect and lower generalization error. A larger batch size, on the other hand, gives more stable gradient estimates and better hardware utilization, but it requires more memory and may generalize less well. The learning rate controls how much the model parameters are updated at each step. A high learning rate might make the model converge faster but can overshoot the optimal solution, while a low learning rate could make the training process extremely slow.
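
The effect of the learning rate is easy to see on a toy problem. The sketch below minimizes f(w) = w² (whose minimum is at w = 0) with plain gradient descent; the three learning rates are chosen purely for illustration.

```python
def gradient_descent(learning_rate, steps=20, start=1.0):
    w = start
    for _ in range(steps):
        gradient = 2 * w  # derivative of w**2
        w -= learning_rate * gradient
    return w


too_high = gradient_descent(1.1)     # every step overshoots and the error grows
too_low = gradient_descent(0.001)    # barely moves away from the starting point
reasonable = gradient_descent(0.1)   # steadily approaches the minimum at 0
print(too_high, too_low, reasonable)
```

With the highest rate the iterate jumps past the minimum by more than it started with on every step, so it diverges instead of converging.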

Use of Optimizers

Optimizers like Adam or RMSprop adapt the effective step size for each parameter during training, which can lead to faster convergence and lower training costs.
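
To show what this looks like in practice, here is a minimal sketch of the Adam update rule for a single parameter. The hyperparameter defaults follow commonly used values, except for the learning rate, which is raised for this toy problem; the objective (w - 3)² is an assumption made for the example.

```python
import math


def adam_minimize(grad_fn, w=0.0, steps=500, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    m = 0.0  # running average of gradients (first moment)
    v = 0.0  # running average of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad_fn(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Bias correction compensates for m and v starting at zero.
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        # The step is scaled by the gradient's recent magnitude.
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


# Minimize (w - 3)**2, whose gradient is 2 * (w - 3).
w_opt = adam_minimize(lambda w: 2 * (w - 3.0))
print(w_opt)
```

Because each update is divided by the running gradient magnitude, parameters with consistently large gradients take relatively smaller steps, and vice versa.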

Monitoring and Managing the Training Phase

Effective management of the training phase can make or break your model’s performance. This involves not just monitoring key metrics like loss, accuracy, and validation scores, but also making real-time adjustments to your training strategy based on these metrics. Tools like TensorBoard can be invaluable for this, offering real-time visualizations that can help you make data-driven decisions.

Early Stopping and Checkpointing

If you notice that your model’s validation loss has stopped improving (or is worsening), it might be time to employ techniques like early stopping. This involves halting the training process if the model’s performance ceases to improve on a held-out validation set. Checkpointing, or saving the model’s parameters at regular intervals, can also be beneficial. This allows you to revert to a ‘good’ state if the model starts to overfit.
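
Here is a minimal sketch of how early stopping and checkpointing fit together. The validation losses are made-up numbers, and the dictionary stored as the checkpoint is a stand-in for actually saving the model’s parameters to disk.

```python
def train_with_early_stopping(val_losses, patience=2):
    """Return the best checkpoint and the epoch at which training stopped."""
    best_loss = float("inf")
    checkpoint = None
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            # Checkpoint: remember the best state seen so far.
            checkpoint = {"epoch": epoch, "val_loss": loss}
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return checkpoint, epoch  # stop early
    return checkpoint, len(val_losses) - 1


val_losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.70]
checkpoint, stopped_at = train_with_early_stopping(val_losses)
print(checkpoint, stopped_at)  # best state is from epoch 2; training stops at epoch 4
```

The `patience` setting controls how many non-improving epochs you tolerate, which guards against stopping on a single noisy validation result.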

Hyperparameter Tuning

Once you have a baseline model, you can perform hyperparameter tuning to optimize its performance. Techniques like grid search or random search can be used to find the optimal set of hyperparameters for your specific problem.
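
Both search strategies can be sketched with the standard library alone. In the example below, `validation_loss` is a hypothetical stand-in for "train a model with these settings and return its validation loss", and the grid values are illustrative.

```python
import itertools
import random


def validation_loss(learning_rate, batch_size):
    # Hypothetical stand-in for a full training-and-evaluation run.
    return (learning_rate - 0.01) ** 2 * 1e4 + abs(batch_size - 32) / 32


grid = {"learning_rate": [0.001, 0.01, 0.1], "batch_size": [16, 32, 64]}


def grid_search(grid):
    """Try every combination of values."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        loss = validation_loss(**params)
        if best is None or loss < best[0]:
            best = (loss, params)
    return best


def random_search(grid, trials, seed=0):
    """Try a fixed number of randomly sampled combinations."""
    rng = random.Random(seed)
    best = None
    for _ in range(trials):
        params = {name: rng.choice(options) for name, options in grid.items()}
        loss = validation_loss(**params)
        if best is None or loss < best[0]:
            best = (loss, params)
    return best


grid_best = grid_search(grid)
random_best = random_search(grid, trials=4)
print(grid_best, random_best)
```

Grid search is exhaustive but its cost grows multiplicatively with each new hyperparameter, while random search lets you fix the budget up front at the risk of missing the best combination.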

By carefully monitoring and managing each aspect of the training process, you can ensure that your GPT model is as accurate and efficient as possible, making it a valuable asset for whatever application you have in mind.

Tips for Generating and Optimizing GPT Models

Enhancing the Efficiency and Performance

The journey doesn’t end with your GPT model’s training. There are several avenues to explore for optimizing its performance further. One such method is model pruning, which involves removing insignificant parameters to make the model more efficient without a significant loss in performance. Another technique is quantization, which involves converting the model’s parameters to a lower bit-width. This not only reduces the model’s size but can also improve its speed, making it more deployable in real-world applications.
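
Both ideas can be illustrated on a short list of weights. This is a simplified sketch under stated assumptions: pruning here removes the smallest-magnitude weights (one common criterion), and quantization uses a single symmetric 8-bit scale for the whole list. Real toolchains work per layer or per channel and handle many more details.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest absolute value."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    prune_idx = set(order[:n_prune])
    return [0.0 if i in prune_idx else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric 8-bit quantization: integers in [-127, 127] plus one float scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    return [q * scale for q in q_weights]


weights = [0.02, -0.75, 0.40, -0.01, 0.90, 0.05]
pruned = magnitude_prune(weights, sparsity=0.5)
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)
print(pruned)
print(q_weights)
```

The restored weights differ from the originals by at most about half a quantization step, which is the precision you trade for the smaller storage format.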

Model Compression and Pruning Techniques

Model compression techniques like knowledge distillation can also be employed. In this method, a smaller model, often referred to as the “student,” is trained to replicate the behavior of the larger, trained “teacher” model. This student model is then more computationally efficient and easier to deploy, making it ideal for resource-constrained environments.
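
At the core of this approach is a loss that pulls the student’s output distribution toward the teacher’s. The sketch below computes that term as a KL divergence between temperature-softened distributions. The logits are made-up numbers, and the sketch leaves out details of a full training objective, such as mixing in the ordinary loss on the true labels.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence from the softened teacher distribution to the student's."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student))


teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]
far_student = [0.5, 1.0, 4.0]

loss_close = distillation_loss(close_student, teacher)
loss_far = distillation_loss(far_student, teacher)
print(loss_close, loss_far)
```

A higher temperature flattens both distributions, so the student also learns from the relative probabilities the teacher gives to the less likely options.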

Dynamic Inline Values and Directives

Dynamic inline values and directives can be used to give GPT inline instructions. For example, you can use custom markup such as brackets to introduce some variety to the output. This is particularly useful when you are using GPT in an exploratory way, similar to how you would use a Jupyter Notebook.

Optimizing for Specific Tasks

GPT can be carefully adjusted for specific purposes such as text generation, code completion, text summarization, and text transformation. For instance, if you’re using GPT for code generation, you can use the directive “Optimize for space/time complexity” to get more efficient code.

Shortening Prompts to Stay Under Length Limits

When using platforms with token limits, like OpenAI’s playground, you can write your prompts using shorthand to save space. This allows for more room for GPT’s response, enabling more detailed and informative outputs.

Exploring Topics with GPT

Directives can be used to instruct GPT to perform specific tasks. These directives are in a specific format and include action verbs such as “write,” “do,” or “type.” They may also include additional conditions that must be followed, indicated by a colon (:). This makes GPT extremely versatile for a variety of tasks, from simple text generation to complex data analysis.

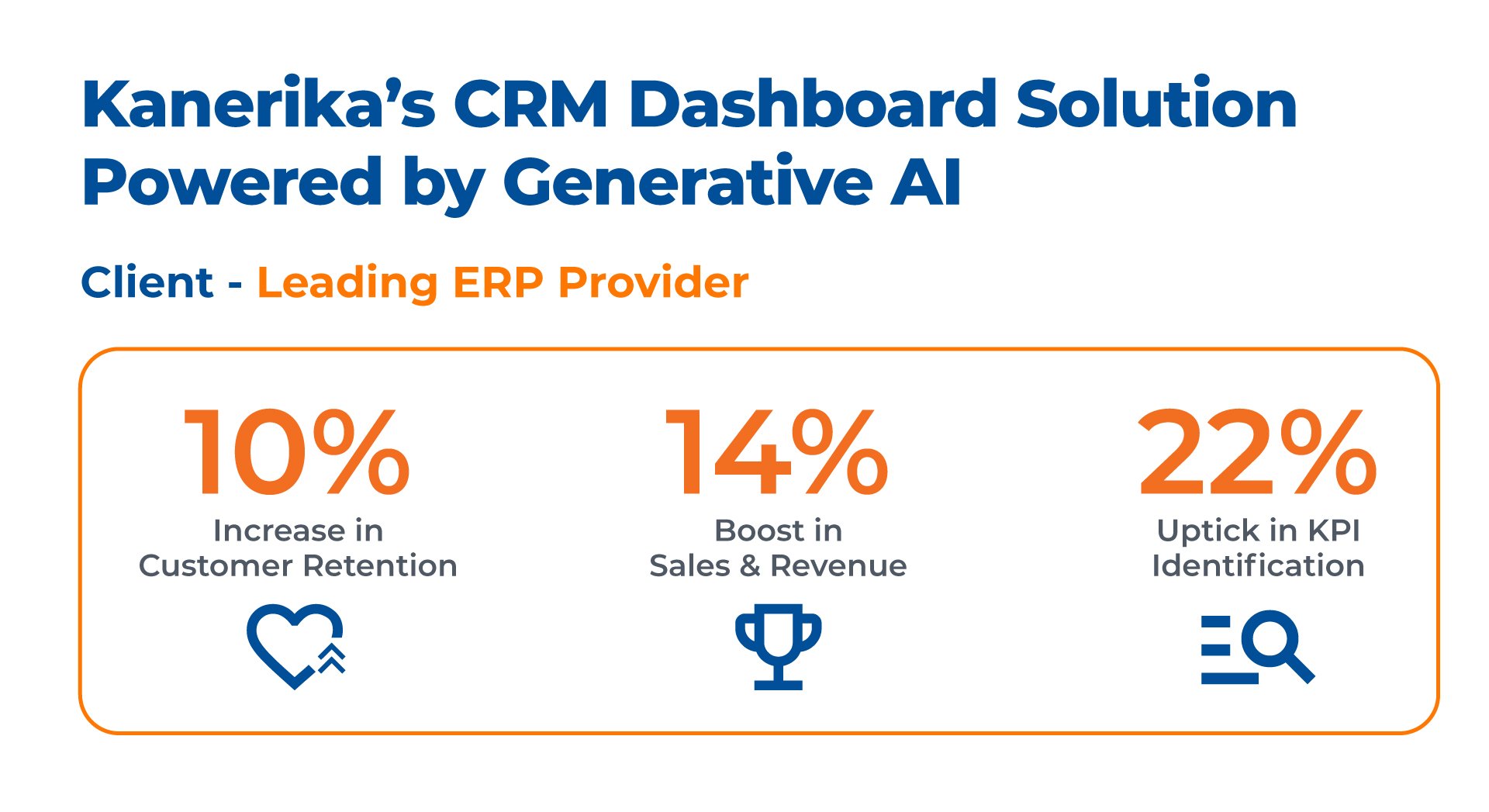

Fine-Tuning GPT Models

Customizing Pre-Trained Models for Specific Tasks

After the initial phase of pre-training, a GPT model can be fine-tuned to specialize in a particular task or domain. This involves training the model on a smaller, task-specific dataset. The fine-tuning process allows the model to apply the general language understanding it gained during pre-training to the specialized task, thereby improving its performance. This is particularly useful in scenarios like customer service chatbots, medical diagnosis, and legal document summarization where domain-specific knowledge is crucial.

Strategies for Successful Fine-Tuning

Successful fine-tuning involves more than just running the model on a new dataset. It’s crucial to keep the learning rate low enough that the model does not unlearn the valuable information it gained during pre-training. Techniques like gradual unfreezing, where only the last layers are trained at first and earlier layers are unfrozen step by step, can also be beneficial. Another strategy is to use a smaller learning rate for the earlier layers and a larger one for the later layers, as the earlier layers capture more general features that are usually transferable to other tasks.
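
The two scheduling ideas can be sketched as plain functions that decide, per layer, how fast to learn and whether to train at all. The function names, the decay factor, and the one-layer-per-epoch schedule are illustrative assumptions; in a real framework you would feed these values into optimizer parameter groups.

```python
def layerwise_learning_rates(n_layers, top_lr=1e-4, decay=0.8):
    """The last layer gets top_lr; each earlier layer gets `decay` times the next one."""
    return [top_lr * decay ** (n_layers - 1 - i) for i in range(n_layers)]


def trainable_layers(n_layers, epoch, unfreeze_per_epoch=1):
    """Gradual unfreezing: start with only the last layer and add earlier ones over time."""
    n_unfrozen = min(n_layers, 1 + epoch * unfreeze_per_epoch)
    return list(range(n_layers - n_unfrozen, n_layers))


rates = layerwise_learning_rates(4)
schedule = [trainable_layers(4, epoch) for epoch in range(5)]
print(rates)
print(schedule)  # [[3], [2, 3], [1, 2, 3], [0, 1, 2, 3], [0, 1, 2, 3]]
```

Layer 0 is the earliest layer in this sketch, so it is both the last to be unfrozen and the one that changes most slowly.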

Hyperparameter Tuning for Fine-Tuning

Hyperparameter tuning is another essential aspect of fine-tuning. Parameters like batch size, learning rate, and the number of epochs can significantly impact the model’s performance. Automated hyperparameter tuning tools like Optuna or Hyperopt can be used to find the optimal set of hyperparameters for your specific task.

Monitoring and Evaluation During Fine-Tuning

It’s essential to continuously monitor the model’s performance during the fine-tuning process. Tools like TensorBoard can be used for real-time monitoring of various metrics like loss and accuracy. This helps in making informed decisions on when to stop the fine-tuning process to prevent overfitting.

Deploying GPT Models

Integration into Applications

Once your GPT model is trained and fine-tuned, the next step is deployment. This involves integrating the model into your application or system. Depending on your needs, this could be a web service, a mobile app, or even an embedded system. Tools like TensorFlow Serving or PyTorch’s TorchServe can simplify this process, allowing for easy deployment and scaling.

Scalability and Latency Considerations

When deploying a GPT model, it’s essential to consider factors like scalability and latency. The model should be able to handle multiple requests simultaneously without a significant drop in performance. Caching strategies and load balancing can help manage high demand, ensuring that the model remains responsive even under heavy load.
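
As a small illustration of caching, the sketch below wraps a model call in Python’s built-in `functools.lru_cache`. The `run_model` function is a hypothetical stand-in for an expensive inference call, and this kind of exact-match caching only makes sense when the same prompt should always produce the same answer.

```python
from functools import lru_cache

CALLS = {"count": 0}


def run_model(prompt):
    # Hypothetical stand-in for an expensive model inference call.
    CALLS["count"] += 1
    return prompt.upper()


@lru_cache(maxsize=1024)
def cached_generate(prompt):
    return run_model(prompt)


first = cached_generate("what are your opening hours?")
second = cached_generate("what are your opening hours?")  # served from the cache
print(CALLS["count"], cached_generate.cache_info())
```

The `maxsize` argument bounds memory use by evicting the least recently used prompts once the cache is full.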

Security and Compliance

Security is another critical consideration when deploying GPT models, especially in regulated industries like healthcare and finance. Ensure that your deployment strategy complies with relevant laws and regulations, such as GDPR for data protection or HIPAA for healthcare information.

Best Practices for Maintaining GPT Model Performance

Continuous Monitoring and Updating

After deploying your GPT model, it’s crucial to keep an eye on its performance metrics. Tools like Prometheus or Grafana can be invaluable for this, allowing you to monitor aspects like request latency, error rates, and throughput in real-time. But monitoring is just the first step. You should update your model based on these metrics and any new data that comes in. OpenAI suggests that the quality of a GPT model’s output largely depends on the clarity of the instructions it receives. Therefore, you may need to refine your prompts or instructions to the model based on the performance metrics you observe.

Advanced Monitoring Techniques

In addition to basic metrics, consider setting up custom alerts for specific events, such as a sudden spike in error rates or a drop in throughput. These can serve as early warning signs that something is amiss, allowing you to take corrective action before it becomes a significant issue.

Handling Drift in Data and Model Performance

Data drift is an often overlooked aspect of machine learning model maintenance. It refers to the phenomenon where the distribution of the incoming data changes over time, which can adversely affect your model’s performance. OpenAI recommends breaking down complex tasks into simpler subtasks to lower error rates. This can be particularly useful in combating data drift. By isolating specific subtasks, you can more easily identify where the drift is occurring and take targeted action.
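
One simple way to put a number on drift is to compare the word distribution of incoming requests with that of the training data. The sketch below uses total variation distance for this; the sample texts are made up, and the alert threshold of 0.3 is an arbitrary illustrative value that you would need to calibrate for your own data.

```python
from collections import Counter


def token_distribution(texts):
    counts = Counter(tok for text in texts for tok in text.lower().split())
    total = sum(counts.values())
    return {tok: c / total for tok, c in counts.items()}


def total_variation_distance(p, q):
    """0 means identical distributions, 1 means no overlap at all."""
    vocab = set(p) | set(q)
    return 0.5 * sum(abs(p.get(t, 0.0) - q.get(t, 0.0)) for t in vocab)


training = token_distribution(["reset my password", "update my billing address"])
similar = token_distribution(["reset my billing password", "update my address"])
shifted = token_distribution(["cancel subscription now", "refund the annual plan"])

DRIFT_THRESHOLD = 0.3  # illustrative value, not a recommendation
drift_similar = total_variation_distance(training, similar)
drift_shifted = total_variation_distance(training, shifted)
print(drift_similar, drift_shifted, drift_shifted > DRIFT_THRESHOLD)
```

Running a check like this per subtask, rather than on all traffic at once, makes it easier to see where the incoming data has moved away from what the model was trained on.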

Strategies for Managing Data Drift

- Re-sampling the Training Data: Periodically update your training data to better reflect the current data distribution.

- Parameter Tuning: Adjust the model’s parameters based on the new data distribution.

- Model Re-training: In extreme cases, you may need to re-train your model entirely.

- Systematic Testing: Any changes you make should be tested systematically to ensure they result in a net improvement. OpenAI emphasizes the importance of this, suggesting the development of a comprehensive test suite to measure the impact of modifications made to prompts or other aspects of the model.

By implementing these best practices, you can significantly enhance the effectiveness of your interactions with GPT models, ensuring that they remain a valuable asset for your team or project.

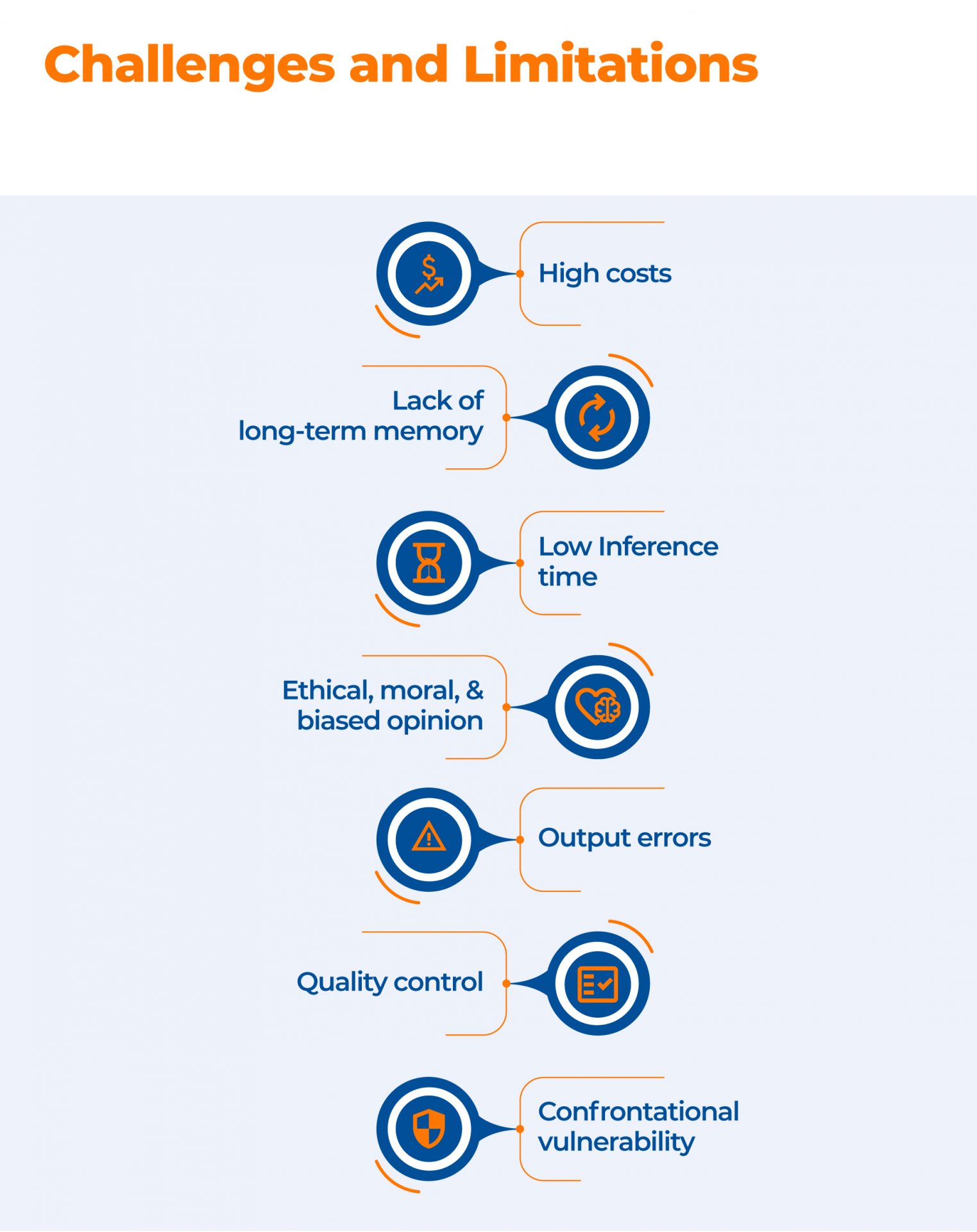

Challenges and Limitations of GPT Models

Computational Costs

While GPT models offer unparalleled performance, they come with high computational costs, especially the larger versions like GPT-3 and GPT-4. This can be a limiting factor for small organizations or individual developers. However, techniques like model pruning and quantization can help mitigate this issue to some extent.

Ethical and Societal Implications

The ethical considerations of GPT models are critical, especially as they become more integrated into daily life. These concerns include the risk of perpetuating biases, spreading misinformation, and generating misleading or harmful content. To address these issues, transparency in training data and methods is essential, as is the development of guidelines and safeguards for responsible use.

Future Directions in Building GPT Models for Natural Language Processing

The field of NLP is continuously evolving, and GPT models are at the forefront of this revolution. With advancements in model architectures and training techniques, we can expect even more powerful and efficient models in the future.

Multi-Modal Capabilities

One of the emerging trends in the field of NLP and machine learning is the development of multi-modal models. These are models that can process more than one type of input, such as text and images, to make more informed decisions. GPT models can be adapted for multi-modal tasks, offering a more comprehensive understanding of complex data.

Federated Learning and GPT Models

Federated learning is a machine learning approach where a model is trained across multiple decentralized devices, each holding its own local data samples. The raw data never leaves the device; only model updates are shared and combined. This approach can be particularly useful for GPT models in scenarios where data privacy is a concern, as it allows for model training without the need to centralize the data.
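The combining step can be sketched with federated averaging, one common aggregation rule in which each client's weights count in proportion to how many local samples it trained on. This is a simplified illustration: the function name and the tiny weight vectors are assumptions, and the local training that would produce those weights on each device is omitted.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Average client weight vectors, weighted by local sample counts."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Two hypothetical clients: the second holds three times as much data.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
print(global_weights)  # [2.5, 3.5]
```

Only the weight vectors and sample counts reach the server in this scheme, which is why the local text itself never has to be collected in one place.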

Building GPT Models for Your Business with a Trusted Partner

When it comes to implementing GPT models to address specific business use cases, having a trusted partner can make all the difference. Building, training, and deploying a GPT model is a complex process that requires specialized skills and resources. A trusted partner can provide the expertise and infrastructure needed to navigate this complexity successfully.

Why Choose Kanerika as Your Trusted Partner?

Kanerika has a proven track record as a solution provider in the field of ML and NLP. With years of experience and a team of experts, we offer end-to-end solutions for building and deploying GPT models.

Expertise in Custom Solutions

Kanerika specializes in developing custom solutions that align with your specific business objectives. Whether you’re looking to automate customer service, enhance data analytics, or any other application, Kanerika has the expertise to deliver a GPT model that meets your needs.

Cutting-Edge Technology

Kanerika stays ahead of the curve by continuously updating its technology stack and methodologies. This ensures that you benefit from the latest advancements in machine learning and NLP, resulting in a more effective and efficient GPT model.

Scalable and Robust Solutions

Kanerika understands the importance of scalability and robustness in business applications. The solutions we provide are built to scale with your business, ensuring that the GPT model can handle increased loads and complexities as your business grows.

Ethical and Responsible AI

Kanerika is committed to the responsible and ethical use of AI. All GPT models are designed with fairness, accountability, and transparency in mind, ensuring that they meet the highest ethical standards.

Comprehensive Support and Maintenance

Kanerika offers comprehensive support and maintenance services, ensuring that your GPT model continues to perform optimally even after deployment. From monitoring to regular updates and fine-tuning, Kanerika takes care of all aspects of post-deployment maintenance.

Choosing Kanerika as your trusted partner ensures that you get a GPT model that is not just state-of-the-art but also tailored to meet your specific business objectives. With Kanerika, you can be confident that you’re making a wise investment that will deliver significant returns.

FAQs

How much does it cost to train a GPT model?

Can I train GPT with my data?

How is the GPT model trained?

Can you build your GPT model?

Is the GPT model open source?

How to train your GPT model?

- Collect and preprocess data: Assemble a large dataset, and prepare it for training.

- Set up the model: Choose your model size and architecture.

- Pre-train on your data: Teach the model to understand language patterns.

- Fine-tune for specific tasks: Tailor the model to perform the tasks you need.

- Evaluate and adjust: Test the model's performance and fine-tune it as needed.

- Deploy: Integrate the model into your application.

- Monitor and update: Keep an eye on performance and retrain with new data when necessary.

How much does it cost to build GPT-4?

Can I use GPT commercially?