When exploring the future of data management, the Data Lake vs. Data Lakehouse debate is inevitable. Let’s break down the differences.

Imagine a retail giant that collects vast amounts of data: customer purchase history (structured), social media posts (unstructured), and even sensor readings from in-store beacons (semi-structured). Traditionally, it might struggle to store and analyze all of this data effectively.

This is where data lakes come in. Think of one as a vast digital reservoir where you can store anything, regardless of format. Our retailer could load all of its customer data into the lake, where it would stay in its raw form until it is needed for analysis.

However, data lakes have limitations. They are great for raw storage, but analyzing that data can be challenging. This is where data lakehouses enter the picture. Think of a data lakehouse as the same lake with an organizing layer on top: it keeps the raw storage but adds the sorting, cataloging, and rules that make the data easy to find and query.

It combines the flexibility of a data lake with the organization of a traditional data warehouse. Our retailer can now store all of its data and analyze it efficiently, unlocking insights that help optimize marketing campaigns or improve store layouts.

Fundamentals of Data Lakes

Definition of Data Lake

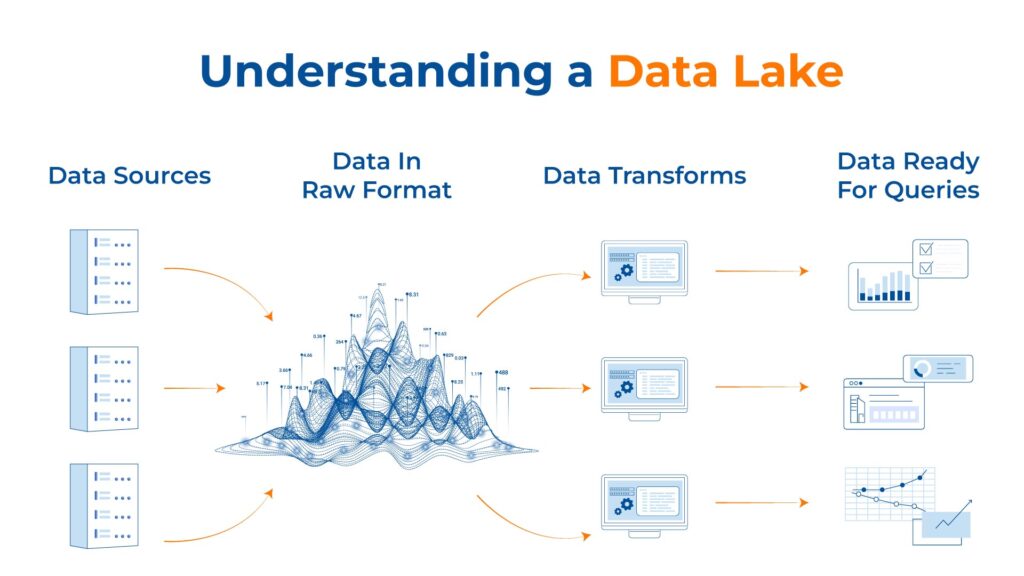

A data lake is a centralized storage repository that holds vast amounts of raw data in its native format until it is needed. Unlike a traditional database, a data lake can store structured, semi-structured, and unstructured data, such as text, images, and social media posts.

Key Characteristics of Data Lakes

- Scalability: Data lakes are highly scalable, accommodating the ever-growing volumes of data produced by modern systems

- Flexibility: Due to their schema-on-read architecture, data lakes allow for the storage of data in diverse formats, offering flexibility in data usage and analysis

- Cost-effectiveness: They typically employ low-cost storage solutions, making it economical to store large volumes of data

- Accessibility: Data lakes enable access to raw data for diverse analytical methods, including machine learning and real-time analytics
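
To make schema-on-read concrete, here is a minimal Python sketch. It assumes raw records were stored as JSON lines with no validation at write time, and that each consumer brings its own schema when reading. The records, field names, and the `read_with_schema` helper are hypothetical illustrations, not part of any specific data lake product.

```python
import json

# Hypothetical raw events, stored exactly as they arrived; nothing was validated on write.
RAW_EVENTS = [
    '{"customer_id": "c1", "amount": "19.99", "channel": "web"}',
    '{"customer_id": "c2", "amount": 5}',
    '{"beacon_id": "b7", "rssi": -61}',
]

# The schema belongs to the reader, not to the stored data.
PURCHASE_SCHEMA = {"customer_id": str, "amount": float, "channel": str}


def read_with_schema(lines, schema):
    """Apply `schema` at read time: cast known fields, fill missing ones with None,
    and skip records that share no fields with the schema."""
    rows = []
    for line in lines:
        record = json.loads(line)
        if not schema.keys() & record.keys():
            continue  # irrelevant to this reader's view of the data
        row = {}
        for field, cast in schema.items():
            value = record.get(field)
            row[field] = cast(value) if value is not None else None
        rows.append(row)
    return rows
```

With these inputs, the beacon record is skipped and the two purchase records come back with `amount` cast to a float, while another team could read the same raw lines with a different schema. The flexibility has a cost: type problems only surface when someone reads the data.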

Common Use Cases for Data Lakes

- Machine Learning and Advanced Analytics: Data lakes provide a rich source of raw data that can be used to train machine learning models

- Real-time Analytics: They are often used for streaming data and real-time analytics, offering insights as data is ingested

- Data Discovery and Exploration: Businesses use data lakes for data discovery, where analysts can explore vast sets of unstructured data to uncover insights

Fundamentals of Data Lakehouses

Definition of Data Lakehouse

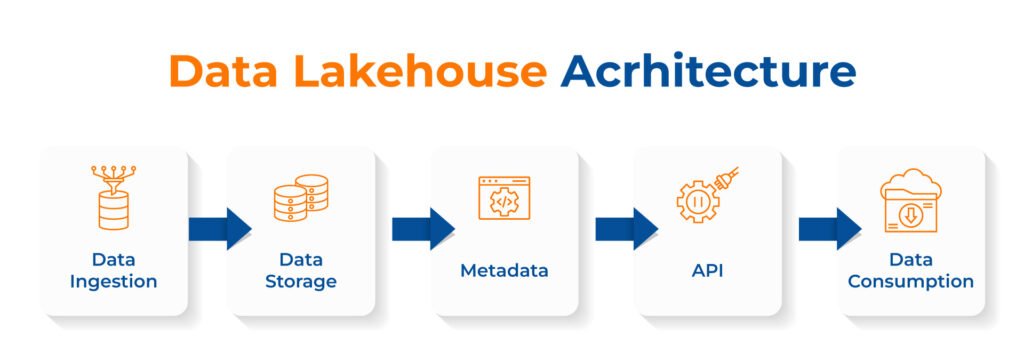

A data lakehouse is a data management architecture that combines the flexible, low-cost storage of a data lake with the schema management, transactional guarantees, and data management features typically associated with a data warehouse. It is designed to store a wide variety of data formats while maintaining data integrity and supporting warehouse-style analytics.

Key Characteristics of Data Lakehouses

Data lakehouses share several defining characteristics:

- Unified Platform: They provide a centralized platform for both structured and unstructured data

- Support for Multiple Data Types: Capable of storing JSON, CSV, Parquet files, and more

- Cost-Effectiveness: By leveraging inexpensive object storage, data lakehouses reduce storage costs

- Scalability: They can easily scale to accommodate growing data volumes, thanks to the inherent scalability of cloud storage solutions

- Schema Management: A data lakehouse maintains a catalog to store schema information, allowing for schema enforcement and evolution without sacrificing the flexibility of a data lake
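
The schema-management idea can be sketched as a tiny in-memory catalog. This is a simplified model under stated assumptions, not how any particular lakehouse catalog is implemented: a schema is a mapping of column names to Python types, writes that do not match are rejected (enforcement), and new columns are accepted only when the caller opts in with the hypothetical `evolve=True` flag (additive evolution).

```python
class SchemaError(ValueError):
    """Raised when a write does not match the table's registered schema."""


class Catalog:
    """Toy catalog: table name -> schema (column -> Python type) plus stored rows."""

    def __init__(self):
        self.schemas = {}
        self.rows = {}

    def create_table(self, name, schema):
        self.schemas[name] = dict(schema)
        self.rows[name] = []

    def append(self, name, records, evolve=False):
        """Validate the whole batch against the schema, then commit it all at once."""
        schema = dict(self.schemas[name])  # work on a copy until the batch passes
        for rec in records:
            new_cols = rec.keys() - schema.keys()
            if new_cols and not evolve:
                raise SchemaError(f"unexpected columns: {sorted(new_cols)}")
            for col in sorted(new_cols):
                # Additive evolution: infer the new column's type from the first value
                # (a None value would need an explicitly declared type; not handled here).
                schema[col] = type(rec[col])
            for col, expected in schema.items():
                value = rec.get(col)
                if value is not None and not isinstance(value, expected):
                    raise SchemaError(f"{col}: expected {expected.__name__}")
        self.schemas[name] = schema
        self.rows[name].extend(records)
```

Validating the whole batch before updating the stored schema keeps a rejected write from leaving the table half-changed.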

Common Use Cases for Data Lakehouses

Organizations utilize data lakehouses for various applications, some of which include:

- Data Integration: Combining data from different sources into a cohesive, analyzable format

- Advanced Analytics: Enabling complex analytical workloads, including machine learning and predictive modeling

- Real-time Analysis: Facilitating analytics on streaming data, as well as batch-processing workflows

Architecture Comparison for Data Lake vs. Lakehouse

When debating data lakes vs. data lakehouses, one must consider their storage architecture, data processing layers, and the governance mechanisms that organize and secure data.

Storage Architecture

Data Lake: A data lake is built on a flat architecture that stores data in its raw format, including structured, semi-structured, and unstructured data. The data is often kept in object stores such as Amazon S3 or Azure Blob Storage.

Data Lakehouse: A data lakehouse keeps the large-scale storage of a data lake but adds a layer of organization on top of it. This layer indexes data and enforces a schema when data is written, which makes queries and data management more efficient.

Data Processing Layers

Data Lake: Data lakes typically decouple compute from storage. They process data with a schema-on-read approach, in which a schema is applied to the data as it is read from storage rather than when it is written. This allows for flexibility in data analytics and machine learning processes.

Data Lakehouse: Data lakehouses build on this foundation by adding ACID transaction support and combining schema-on-read with schema-on-write, so tables have an enforced schema while raw data remains available. A data lakehouse supports both batch and stream processing on the same tables and handles concurrent readers and writers more reliably.
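
Transaction support in lakehouse table formats is often built on a commit log. Delta Lake, for example, records each commit as a numbered JSON file in a transaction log, and a writer succeeds only if no one else has already claimed that number. The Python sketch below imitates only that basic optimistic-concurrency idea on a local POSIX file system. The file naming, action format, and helper names are assumptions for illustration; production formats also handle checkpoints, object-store semantics, and conflict resolution.

```python
import json
import os
import tempfile


class CommitConflict(Exception):
    """Raised when another writer has already committed the version we tried to claim."""


def latest_version(log_dir):
    """Return the highest committed version number, or -1 for an empty log."""
    versions = [int(name.split(".")[0]) for name in os.listdir(log_dir) if name.endswith(".json")]
    return max(versions, default=-1)


def commit(log_dir, actions, read_version):
    """Publish `actions` as version read_version + 1, or raise CommitConflict."""
    version = read_version + 1
    path = os.path.join(log_dir, f"{version:020d}.json")
    # Write to a temporary file first so readers never see a partially written entry.
    fd, tmp_path = tempfile.mkstemp(dir=log_dir, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(actions, f)
        # os.link raises FileExistsError if `path` exists, so only one writer
        # can publish a given version (put-if-absent on a local file system).
        os.link(tmp_path, path)
    except FileExistsError:
        raise CommitConflict(f"version {version} already exists; re-read and retry") from None
    finally:
        os.remove(tmp_path)
    return version
```

A writer that receives `CommitConflict` would re-read the latest version, check whether the other commit touched the same data, and retry. Because each entry appears in the log only once it is complete, readers see either the old version or the new one, never a mix.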

Data Governance and Cataloging

Data Lake: Governance in a data lake relies on metadata tagging and access controls to ensure proper data usage and compliance. However, cataloging in a pure data lake may be less structured, which can make it harder to search for and manage data assets.

Data Lakehouse: Data lakehouses strengthen governance through a consolidated metadata and cataloging strategy, often paired with data management tools that provide fine-grained access control and auditing. They bridge the gap between data lakes and warehouses by offering structured cataloging similar to that of a traditional data warehouse, which improves data discoverability and compliance.

Performance and Scalability

When comparing data lakes and data lakehouses, performance and scalability are critical factors that determine an architecture’s efficiency and future readiness. Performance refers to the speed of data processing and retrieval, while scalability describes the system’s ability to grow and handle increased demand.

Query Performance

Data Lakes traditionally hold vast quantities of raw data, and the lack of structure can hurt query performance. Users often experience high latency when querying large tables or running complex analytics directly on a Data Lake.

Data Lakehouses aim to mitigate these issues by combining the vast storage capacity of Data Lakes with the structured environment of a Data Warehouse. By organizing data under a managed schema and maintaining metadata about the stored files, they can retrieve data more efficiently and execute queries faster.
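
One common technique behind faster lakehouse queries is data skipping. Table formats such as Delta Lake and Apache Iceberg record column statistics, including minimum and maximum values, for each data file, so a query engine can rule out files before opening them. The sketch below shows only the pruning logic; the `FileStats` structure, file names, and YYYYMMDD integer dates are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    """Min/max of one column (here an order date stored as YYYYMMDD) for one data file."""
    path: str
    min_value: int
    max_value: int


def files_to_scan(stats, low, high):
    """Keep only files whose [min_value, max_value] range can overlap low <= x <= high.

    A file whose range lies entirely outside the predicate cannot contain matching
    rows, so it is skipped without being opened.
    """
    return [s.path for s in stats if s.max_value >= low and s.min_value <= high]


# Hypothetical statistics for three monthly files.
STATS = [
    FileStats("part-0.parquet", 20240101, 20240131),
    FileStats("part-1.parquet", 20240201, 20240229),
    FileStats("part-2.parquet", 20240301, 20240331),
]

print(files_to_scan(STATS, 20240210, 20240215))  # ['part-1.parquet']
```

A query for orders between 10 and 15 February needs to open only one of the three files; the other two are ruled out from metadata alone. A raw data lake without such statistics would have to read every file.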

Scaling Capabilities

Data Lakes excel at storing enormous volumes of structured, semi-structured, or unstructured data. They are inherently designed to scale out with data volume, but they can be limited by their cataloging systems, such as the Hive Metastore or AWS Glue Data Catalog, when handling metadata for the stored content.

By contrast, Data Lakehouses are architected to scale effectively not only in data size but also in performance. Borrowing metadata and schema management features from data warehouses helps them scale to more complex analytical workloads.

Data Management and Quality

Effective data management and quality are crucial in the data lake vs. data lakehouse debate. These structures handle the organization, cleansing, and enrichment of data differently, directly impacting analytics and business intelligence outcomes.

Metadata Handling

In a data lake, metadata is often handled separately and may require additional tools to manage efficiently. It serves as a catalog for the raw data, but users must typically access and manage it through manual processes or separate systems.

- Pros: Provides a flexible approach to metadata management

- Cons: Can become unwieldy as the data lake grows

Conversely, a data lakehouse incorporates metadata handling into its architecture, enabling automatic metadata logging and tighter data governance practices.

- Pros: Streamlines metadata management, allowing for better data discoverability and governance

- Cons: May require rigorous design and setup to ensure metadata is captured and utilized effectively

Data Cleansing and Enrichment

Data lakes store vast amounts of raw data, which might include duplicate, incomplete, or inaccurate records. Cleansing and enriching that data is left to the users.

- Cleansing: Users apply their own tools and processes to remove errors and inconsistencies

- Enrichment: Users must manually link and augment data with additional sources for analysis

Data lakehouses, however, are designed with data quality in mind and typically provide mechanisms for cleansing and enriching data as part of the data pipeline.

- Cleansing: Automated checks detect data quality issues so that bad records can be corrected or quarantined, ensuring higher integrity

- Enrichment: Integrated tools allow for seamless data augmentation, improving the richness of datasets for analytics
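
As an illustration of quality checks running inside a pipeline, the sketch below splits an incoming batch into clean and quarantined rows and then enriches the clean rows from a lookup table. The rules (drop duplicate order IDs, quarantine rows with a missing ID or a missing or negative amount) and all field names are hypothetical; lakehouse platforms express similar checks through features such as table constraints or pipeline-level expectations, with syntax that varies by product.

```python
def cleanse(records, key="order_id"):
    """Split a batch into (clean, quarantined) rows using simple, hypothetical rules."""
    seen = set()
    clean, quarantined = [], []
    for rec in records:
        k = rec.get(key)
        amount = rec.get("amount")
        if k is None or amount is None or amount < 0:
            quarantined.append(rec)  # keep bad rows for inspection instead of dropping them
        elif k in seen:
            continue  # duplicate key: keep only the first occurrence
        else:
            seen.add(k)
            clean.append(rec)
    return clean, quarantined


def enrich(rows, store_regions):
    """Add a 'region' column from a lookup table; unknown stores get None."""
    return [{**row, "region": store_regions.get(row.get("store_id"))} for row in rows]
```

Quarantining rather than silently discarding bad rows keeps an audit trail of what failed and why, which matters when the rules themselves turn out to be wrong.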

Cost and Complexity

Choosing between a data lake and a data lakehouse involves weighing the cost and complexity associated with each, with a clear understanding of your organization’s data strategy and operational capabilities.

Infrastructure Costs

Data Lake: Typically, data lakes offer a cost-effective solution for storing massive volumes of raw data across various formats. Organizations can benefit from the pay-as-you-go pricing model that cloud storage offers. This aligns well with the scalable nature of data lakes. The separation of storage and compute resources allows for granular cost management.

Data Lakehouse: A data lakehouse inherits the cost benefits of a data lake’s storage and adds a layer designed to improve data governance and reliability, which may increase initial infrastructure costs. However, by integrating the qualities of a data warehouse, a data lakehouse often streamlines analytics, potentially offering long-term cost savings in operational efficiency and resource utilization.

Maintenance and Management Complexity

Data Lake: Maintenance entails ensuring the security and accessibility of a wide variety of data formats. A data lake requires substantial metadata management to prevent it from becoming a ‘data swamp’. The complexity arises in cataloging and indexing the data efficiently for future access and use.

Data Lakehouse: Data lakehouses introduce more sophisticated data management tools and processes to enforce governance and consistency, which can increase complexity. They require the implementation of data quality measures and a metadata layer that simplifies data discovery and querying, usually necessitating a skilled team to maintain these systems effectively.

Security and Compliance

Security and compliance are critical factors in the data lake vs. data lakehouse debate. The two architectures differ in their access control mechanisms and in how they support the compliance standards that protect data integrity and privacy.

Access Control and Security Features

Data Lakes typically provide access control mechanisms that manage who can access and manipulate data. Administrators can set permissions at the file and folder level, which gives granular control over storage objects. However, enforcing more complex policies, such as row- or column-level restrictions, may require additional tools.

In contrast, Data Lakehouses build security features into their architecture. They typically include Access Control Lists (ACLs) and role-based access control, ensuring that only authorized users can perform actions on datasets. They also often include features like schema enforcement and data masking, which help maintain data security.
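
A role-based policy with column masking can be sketched in a few lines of Python. The roles, columns, and masking rule below are hypothetical; in a real lakehouse such policies are usually declared in the catalog or governance layer rather than in application code, and where they are enforced differs by product.

```python
def mask_email(value):
    """Hide the local part of an address: 'ana@example.com' -> '***@example.com'."""
    _, _, domain = value.partition("@")
    return f"***@{domain}" if domain else "***"


# Hypothetical policy: columns each role may read, plus per-role masking functions.
ROLE_COLUMNS = {
    "analyst": {"customer_id", "email", "amount"},
    "finance": {"customer_id", "amount"},
}
MASKING_RULES = {
    "analyst": {"email": mask_email},
}


def read_as(role, rows):
    """Project rows to the role's allowed columns and apply the role's masking rules."""
    if role not in ROLE_COLUMNS:
        raise PermissionError(f"role {role!r} has no access to this table")
    allowed = ROLE_COLUMNS[role]
    masks = MASKING_RULES.get(role, {})
    return [
        {col: (masks[col](val) if col in masks else val)
         for col, val in row.items() if col in allowed}
        for row in rows
    ]
```

Here an analyst sees every column with email addresses masked, the finance role never sees the email column at all, and any unknown role is refused outright.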

Compliance Standards and Audits

Data Lakes must conform to various compliance standards such as HIPAA, GDPR, and CCPA, depending on the industry and data type. They often rely on third-party tools and manual interventions to ensure compliance and facilitate audits.

On the other hand, Data Lakehouses are designed to simplify compliance. They support ACID transactions, which ensure transactional integrity and provide consistent data snapshots for auditing. A Data Lakehouse’s architecture also makes it easier to adapt to changing compliance standards and to manage data lineage and metadata for audits.
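
Because every change is recorded as a new, immutable table version, an auditor can reconstruct exactly which data files made up a table at a past point in time. Formats such as Delta Lake and Apache Iceberg expose this as "time travel"; the sketch below replays a deliberately simplified, hypothetical log of file additions and removals to show the idea.

```python
# Hypothetical commit log: each version lists data files added to or removed from the table.
LOG = [
    {"add": ["part-0.parquet", "part-1.parquet"], "remove": []},  # version 0
    {"add": ["part-2.parquet"], "remove": []},                    # version 1
    {"add": ["part-3.parquet"], "remove": ["part-1.parquet"]},    # version 2, e.g. a rewrite
]


def snapshot(log, version):
    """Replay commits 0..version and return the set of files that formed the table then."""
    if not 0 <= version < len(log):
        raise ValueError(f"unknown version {version}")
    files = set()
    for entry in log[: version + 1]:
        files -= set(entry["remove"])
        files |= set(entry["add"])
    return files
```

Reading version 1 still returns `part-1.parquet` even though version 2 replaced it, which is what lets an audit reproduce the data exactly as it was at the time.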

Integration and Ecosystem

Data lakes and data lakehouses differ in how they integrate diverse data types and in how strongly communities and tooling ecosystems support them.

Support for Data Integration Tools

Data lakes are designed to store vast amounts of raw data in their native formats. They provide extensive support for data integration tools that can ingest both structured and unstructured data. These data integration tools enable organizations to pull data from multiple sources into the data lake, accommodating a wide range of data types and formats without the need for initial cleansing or structuring.

In contrast, data lakehouses not only inherit data lakes’ ability to handle massive, diverse datasets but also streamline data management tasks. They extend data lakes’ integration tools with features such as schema enforcement and metadata management, resulting in more organized and discoverable datasets.

Ecosystem and Community Support

The ecosystem surrounding data lakes typically consists of a combination of open-source and proprietary solutions that aid in data ingestion, processing, and analysis. Tools like Apache Hadoop and Apache Spark have grown alongside data lakes, creating a robust suite of tools for various data operations.

Data lakehouses tend to inherit this established ecosystem while building their own communities focused on improving performance, governance, and analytical capabilities. The lakehouse architecture favors an open environment in which tools for real-time analytics, machine learning, and data governance can be integrated, strengthening community support for a comprehensive, unified data platform.

Data Lake vs. Data Lakehouse: The Optimal Data Management Strategy

Choosing between a data lake and a data lakehouse is pivotal to realizing your organization’s data potential. Each architecture offers unique benefits and trade-offs suited to different data management needs.

The data lake architecture centralizes vast amounts of raw structured and unstructured data, providing a scalable and flexible environment for big data storage and analytics. It is ideal for organizations that need to store massive volumes of data before they have an immediate use for it.

Conversely, data lakehouses merge a data lake’s expansive storage capability with the structured processing power of a data warehouse. This architecture supports big data storage as well as efficient querying and data management, enabling more sophisticated and real-time analytics.

The decision hinges on your organization’s specific data requirements, processing needs, and analytical sophistication. A data lake offers raw data with untapped potential, while a data lakehouse adds the structure that makes that data ready for analysis.

Kanerika: Your Ideal Data Strategy Partner

Kanerika is a leader in data management, offering advanced solutions in the Data Lake and Data Lakehouse domain. Our strategies are tailored to the dynamic needs of modern enterprises. Our expertise in integrating and optimizing large-scale data environments enables businesses to

- streamline their data processing

- enhance analytical capabilities

- unlock actionable insights

With a deep understanding of cutting-edge data architectures, Kanerika crafts strategies that align with your operational and analytical requirements.

Our solutions in Data Lake and Data Lakehouse technologies stand out for their robustness, scalability, and efficiency, and they facilitate seamless data integration, management, and analysis. Furthermore, our commitment to innovation and client success makes us a preferred partner for organizations, helping them leverage their data for strategic advantage and drive growth and transformation in today’s competitive business landscape.

FAQs