Have you ever wondered why even well-planned migrations go off track? According to Gartner, 83% of data migration projects either fail, exceed budgets, or miss timelines, often because of mismatches and data quality issues rather than the mechanics of moving data from one point to another. This is where data reconciliation tools become indispensable. Migration is not just a matter of transferring data; it also means ensuring that all records, metrics, and business values match across source and target systems. Without that assurance, organizations risk reporting mistakes, operational failures, and a loss of trust in analytics. Reconciliation tools automatically compare data before, during, and after migration to confirm that it is accurate and complete.

These tools also enable teams to detect mismatches early, correct transformation errors, and generate reconciliation reports that support audit and governance requirements. As a result, businesses can migrate with confidence and get the most out of modern platforms. In this blog, we will explore what data reconciliation tools are, how they work within the migration lifecycle, common challenges they address, and best practices for choosing the right solution in enterprise environments.

Key Learnings

- Data migration without reconciliation leads to failure – Simply moving data does not guarantee accuracy. Without reconciliation, mismatches and missing records often go unnoticed, causing reporting errors and business disruption.

- Data reconciliation tools build trust in migrated data – Reconciliation tools verify that source and target data match at every stage of migration. This ensures stakeholders can rely on reports and dashboards after go-live.

- Early and continuous reconciliation reduces migration risk – Validating data before, during, and after migration helps detect issues early. As a result, teams avoid costly rework and last-minute delays.

- Automation is essential for enterprise-scale migrations – Manual reconciliation does not scale to large data volumes. Automated reconciliation improves speed, accuracy, and consistency in complex migrations.

- Reconciliation supports compliance and audit readiness – Proper reconciliation reports provide traceability and evidence of data integrity. This is essential in regulated industries and for enterprise governance.


What Are Data Reconciliation Tools?

Data reconciliation tools are software solutions that automatically compare data across different systems to find and fix discrepancies. Put simply, these tools ensure that data moving between databases, applications, and platforms arrives complete and unchanged.

Automated vs Manual Reconciliation

Manual reconciliation means people verify data by hand using spreadsheets and reports. This is time-consuming and prone to costly errors. Automated reconciliation tools, on the other hand, run these checks in minutes and with much greater accuracy. They can handle millions of records where people can realistically check only thousands.

Modern businesses depend on information flowing between many systems. When customer information does not match between your billing system and CRM, the results are lost revenue and angry customers. Likewise, erroneous financial information leads to compliance issues and poor business decisions.

Finance teams use reconciliation tools to align accounting systems with bank statements. Operations teams reconcile inventory data between warehouses and sales platforms. Analytics teams use them to confirm that data combined from various sources is accurate before it goes into reports.

Data reconciliation software has become crucial for any organization that transfers data across systems. It helps eliminate expensive errors, maintain compliance, and give teams confidence in their information. Without adequate reconciliation, even minor data errors can cause major business problems.

Why Data Migration Projects Fail Without Data Reconciliation Tools

Data migration projects fail at a rate of 60 percent when organizations do not carry out proper reconciliation and validation. Enterprises that go without these essential tools face expensive losses that disrupt business processes.

Critical Failure Points

- Data Loss and Row Count Mismatches – Without reconciliation tools, data transfers have blind spots. As a result, organizations discover major data gaps only after migrating, which disrupts operations.

- Transformation Logic Errors – Complex data mappings need constant validation. Without reconciliation checks, transformation errors compound across datasets and corrupt business-critical information.

- Inconsistent Business Reports – Post-migration reporting tends to expose differences between the source system and the target system. These discrepancies weaken decision-making and undermine stakeholder confidence.

- Audit and Compliance Risks – Regulatory requirements call for complete data lineage documentation. Without proper reconciliation tools, organizations cannot verify data integrity, which exposes them to non-compliance and even penalties.

The Solution

Automated validation checks run in real time, so migration accuracy is verified throughout the process. These solutions identify gaps as they occur, so teams can address them before they affect business functions.

Smart reconciliation helps prevent project failure and keeps data intact throughout the migration process.

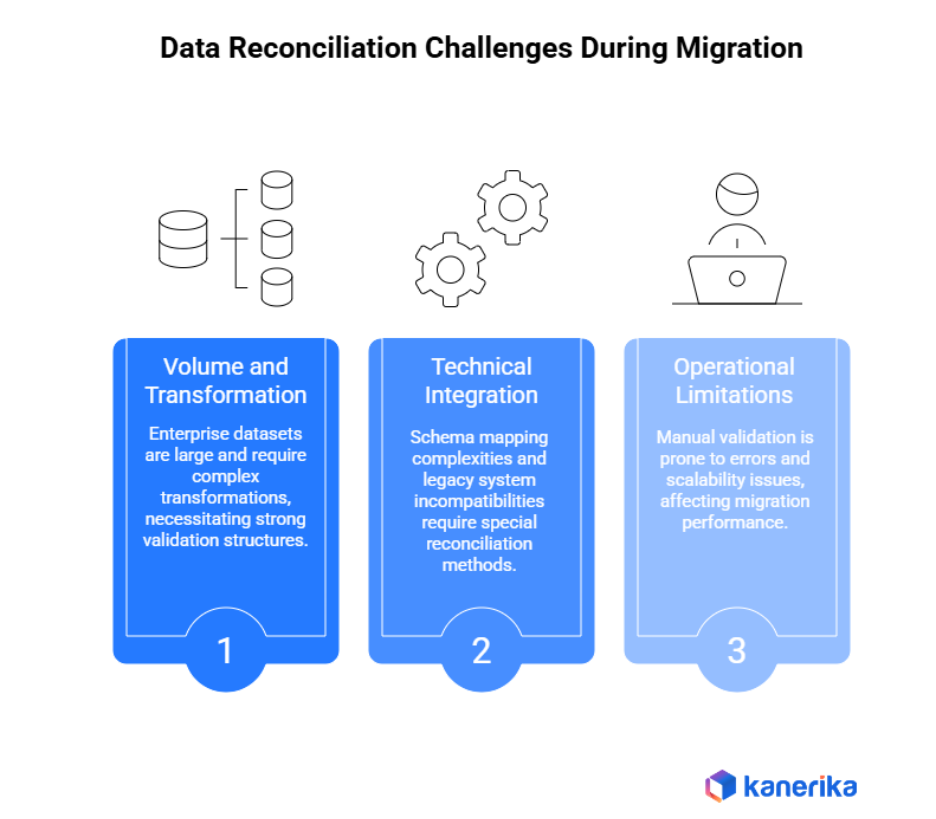

Key Data Reconciliation Challenges During Data Migration

Data reconciliation is one of the most challenging and most important parts of a successful data migration project. Organizations can run into several validation challenges that significantly affect migration success rates and data integrity.

1. Volume and Transformation Complexity

To start with, enterprise datasets usually contain millions of records that require complex transformation logic. Reconciling these volumes requires robust validation frameworks that can process the data efficiently without losing accuracy.

Furthermore, complex business rules and data mapping specifications add extra validation layers. Organizations therefore need automated reconciliation tools that can handle complex transformation scenarios.

2. Technical Integration Challenges

Migrating across database systems also introduces schema mapping complexities. Reconciliation tools must account for differing data types, field lengths, and structural differences between source and target environments.

Moreover, legacy system formats may be incompatible with current database schemas, so specialized reconciliation methods are needed to ensure proper validation.
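To make the schema point concrete, here is a minimal Python sketch of a schema comparison. It assumes each schema has already been read into a plain dictionary of column name to type and length; the `Column` type and `compare_schemas` function are hypothetical names used only for illustration, not part of any particular tool.

```python
from typing import NamedTuple, Optional


class Column(NamedTuple):
    dtype: str
    length: Optional[int]  # None when the type has no length (assumption)


def compare_schemas(source, target):
    """Return human-readable differences between two schema dictionaries."""
    issues = []
    for name, src in source.items():
        tgt = target.get(name)
        if tgt is None:
            issues.append(f"{name}: missing in target")
            continue
        if src.dtype != tgt.dtype:
            issues.append(f"{name}: type {src.dtype} -> {tgt.dtype}")
        # A shorter target field can silently truncate migrated values.
        if src.length is not None and tgt.length is not None and tgt.length < src.length:
            issues.append(f"{name}: length {src.length} -> {tgt.length} may truncate")
    for name in sorted(target.keys() - source.keys()):
        issues.append(f"{name}: only in target")
    return issues
```

Running a check like this before any data moves surfaces truncation and type risks while they are still cheap to fix.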

Likewise, the choice between batch and real-time reconciliation influences validation timing and resource usage. Batch processing performs full validation, whereas real-time reconciliation provides immediate error detection.

3. Operational Limitations

Manual validation introduces the risk of human error and does not scale. Organizations that rely on spreadsheet-based reconciliation face significant accuracy problems and processing delays.

Lastly, reconciliation processes can affect migration performance and system resources. Organizations therefore have to balance the depth of validation against an acceptable migration speed.

Strategic Solutions

Effective data migration reconciliation needs automated tools that can cope with high volumes, infrastructure that can handle schema differences, and flexible validation timing. Together, these protect data integrity and keep migration performance acceptable throughout the process.

How Data Reconciliation Tools Work in Data Migration

Data reconciliation tools play a key role in the success of a data migration project by providing systematic validation across the whole migration lifecycle. These automated solutions maintain data integrity and reduce both migration risk and operational downtime.

1. Pre-Migration Data Profiling and Baselining

The first step in reconciliation is analyzing the source data to create baseline metrics and quality measurements. This profiling identifies data patterns, anomalies, and potential migration challenges before the actual transfer.

In addition, these baselines serve as benchmarks for post-migration validation, so source and target can be compared like for like throughout the process.
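As a rough illustration of baselining, the sketch below profiles a set of source rows into three simple metrics: a row count, per-column null counts, and a control total for one numeric field. The `profile` function and the choice of metrics are assumptions made for this example; real tools typically capture many more.

```python
from decimal import Decimal


def profile(rows, numeric_field):
    """Build a small baseline: row count, null counts per column, control total."""
    null_counts = {}
    total = Decimal("0")
    count = 0
    for row in rows:
        count += 1
        for col, value in row.items():
            if value is None:
                null_counts[col] = null_counts.get(col, 0) + 1
        if row.get(numeric_field) is not None:
            # Decimal avoids float rounding drift in the control total.
            total += Decimal(str(row[numeric_field]))
    return {"row_count": count, "null_counts": null_counts, "control_total": total}
```

Running the same function against the target after migration and comparing the two dictionaries gives a quick like-for-like check.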

2. Real-Time Migration Tracking

Next, sophisticated reconciliation systems validate data in real time while it is being transferred. These ongoing checks monitor record counts, data transformations, and transfer completeness, and they flag anomalies immediately so they can be resolved.

Moreover, parallel processing allows migration and validation to run side by side without major performance degradation.
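One simple form of in-flight tracking is to compare, batch by batch, how many records were read from the source with how many were written to the target. The sketch below assumes the migration job can report these counts as `(batch_id, rows_read, rows_written)` tuples; that shape and the `check_batches` name are hypothetical.

```python
def check_batches(batches):
    """Return per-batch alerts and the overall shortfall in written rows."""
    alerts = []
    read_total = 0
    written_total = 0
    for batch_id, rows_read, rows_written in batches:
        read_total += rows_read
        written_total += rows_written
        if rows_read != rows_written:
            # Flag the batch right away instead of waiting for the final check.
            alerts.append(f"batch {batch_id}: read {rows_read}, wrote {rows_written}")
    return alerts, read_total - written_total
```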

3. Complete Post-Migration Check

After the migration completes, reconciliation tools perform comprehensive source-to-target comparisons of all migrated data. These validations confirm data accuracy, completeness, and the correct application of transformation logic.

Automated reporting then produces detailed validation summaries that show successful transfers and point out any remaining discrepancies.
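A source-to-target comparison can be sketched as a keyed diff: fingerprint each row, then compare fingerprints by primary key. This is one common technique and not necessarily how any specific product works; `row_fingerprint` and `reconcile` are hypothetical names, and the sketch assumes both datasets fit in memory and share a key column.

```python
import hashlib


def row_fingerprint(row, columns):
    """Hash the chosen columns in a fixed order (None and '' hash the same here)."""
    joined = "\x1f".join("" if row[c] is None else str(row[c]) for c in columns)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key, columns):
    """Summarize missing, unexpected, and mismatched rows by key."""
    src = {r[key]: row_fingerprint(r, columns) for r in source_rows}
    tgt = {r[key]: row_fingerprint(r, columns) for r in target_rows}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }
```

The resulting dictionary is essentially a minimal reconciliation report: three lists of keys that a team can investigate or attach to a sign-off document.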

4. Smart Error Management

Advanced exception management systems classify and rank validation failures according to business impact. Data teams can thus resolve urgent problems first while less urgent discrepancies are handled systematically.
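The classify-and-rank idea can be sketched in a few lines of Python. The severity labels, the 100-row threshold, and the notion of a "critical table" list are all assumptions chosen for illustration; a real project would derive them from its own business rules.

```python
from dataclasses import dataclass

SEVERITY_ORDER = {"critical": 0, "high": 1, "low": 2}


@dataclass
class ValidationFailure:
    table: str
    check: str
    affected_rows: int


def classify(failure, critical_tables):
    """Assign a severity label using simple, illustrative rules."""
    if failure.table in critical_tables:
        return "critical"
    if failure.affected_rows >= 100:  # hypothetical threshold
        return "high"
    return "low"


def triage(failures, critical_tables):
    """Order failures by severity first, then by number of affected rows."""
    return sorted(
        failures,
        key=lambda f: (SEVERITY_ORDER[classify(f, critical_tables)], -f.affected_rows),
    )
```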

5. Automation Benefits

Lastly, automated reconciliation removes manual validation errors and significantly shortens migration time. Such tools apply consistent validation criteria, keep audit trails, and scale processing as needed.

Modern data reconciliation solutions integrate with existing data migration frameworks and provide reliable validation of migration outcomes while maintaining enterprise data quality standards throughout the process.


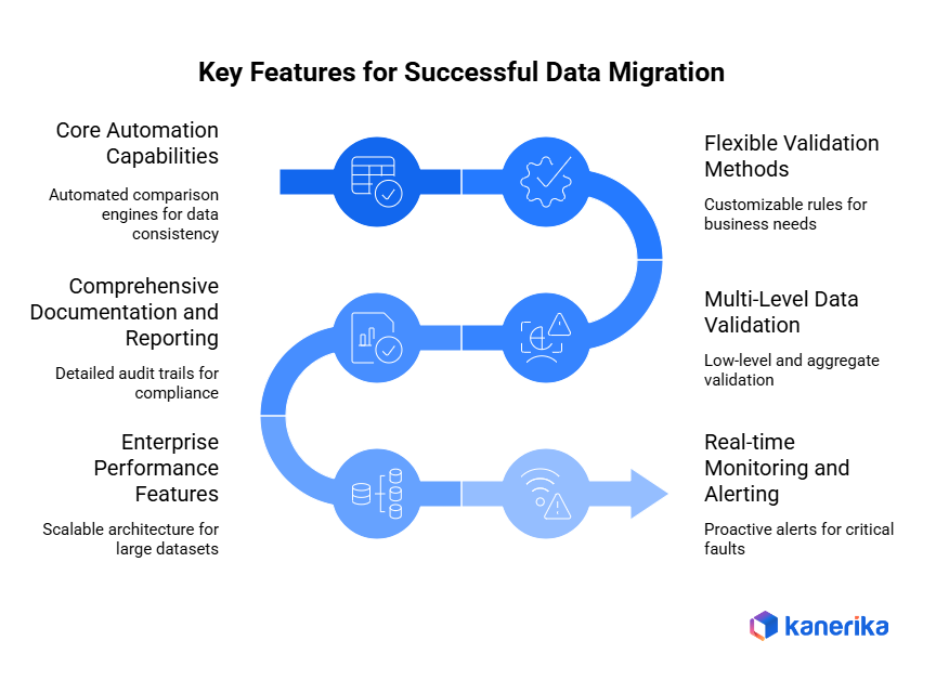

Key Features to Look for in Data Reconciliation Tools for Migration

Choosing the right data reconciliation tool strongly influences migration success rates and data quality. Enterprises need end-to-end validation that ensures data is transferred correctly in complex migration scenarios.

1. Core Automation Capabilities

First, capable reconciliation software offers automated comparison engines that check data consistency between source and target systems. These automated operations remove manual validation errors and considerably speed up migration schedules.

Intelligent comparison algorithms also support a wide range of data formats and structures, giving broad validation coverage across different database environments.

2. Flexible Validation Methods

Advanced reconciliation software also provides customizable validation rules that can be tailored to business needs. Tolerance-based checking permits an acceptable range of variance, which is especially useful when migrating between systems with different levels of numeric precision.

Moreover, custom rule configuration allows organizations to define validation criteria in line with industry standards and compliance requirements.
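Tolerance-based checking is easy to picture with a small example. The sketch below compares two numeric values and accepts them when the absolute difference is within a configurable tolerance; the `within_tolerance` name and the default of 0.01 are assumptions for illustration.

```python
from decimal import Decimal


def within_tolerance(source_value, target_value, abs_tol=Decimal("0.01")):
    """True when two values differ by no more than abs_tol.

    Values are passed as strings so Decimal keeps their exact precision.
    """
    return abs(Decimal(source_value) - Decimal(target_value)) <= abs_tol
```

For instance, a source value of 19.995 and a target value of 20.00 pass at the default tolerance, which is the kind of rounding difference that appears when the target stores fewer decimal places, while 19.90 against 20.00 does not.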

3. Multi-Level Data Validation

Comprehensive reconciliation systems perform both row-level validation and aggregate validation. Organizations can thus detect discrepancies in individual records while also tracking the accuracy of overall data volumes.

Aggregate validation provides a rapid health check on large datasets, so major migration problems can be discovered quickly.
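The two levels work well together: a cheap aggregate check first, then a row-level drill-down only when the aggregates disagree. The sketch below shows this with Python's built-in `sqlite3` module and an in-memory database; the table names, columns, and sample values are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE src_orders (id INTEGER PRIMARY KEY, amount_cents INTEGER);
CREATE TABLE tgt_orders (id INTEGER PRIMARY KEY, amount_cents INTEGER);
INSERT INTO src_orders VALUES (1, 1000), (2, 2500), (3, 400);
INSERT INTO tgt_orders VALUES (1, 1000), (2, 2050), (3, 400);
""")


def aggregates(table):
    # Table names come from a fixed list here; never interpolate untrusted input.
    return conn.execute(f"SELECT COUNT(*), SUM(amount_cents) FROM {table}").fetchone()


src_agg = aggregates("src_orders")
tgt_agg = aggregates("tgt_orders")

mismatched_ids = []
if src_agg != tgt_agg:
    # Row-level drill-down: source rows that are missing or changed in the target.
    mismatched_ids = [row[0] for row in conn.execute("""
        SELECT s.id FROM src_orders s
        LEFT JOIN tgt_orders t ON t.id = s.id
        WHERE t.id IS NULL OR t.amount_cents <> s.amount_cents
        ORDER BY s.id
    """)]
```

Here the row counts match but the totals do not, so the drill-down runs and isolates order 2. Note that this particular query does not catch extra rows that exist only in the target; a full check would run the join in both directions.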

4. Comprehensive Documentation and Reporting

Detailed audit trail features ensure that the migration is fully documented for compliance and troubleshooting. These reports give stakeholders clear validation summaries and exception details.

5. Enterprise Performance Features

Lastly, enterprise-level reconciliation solutions should handle huge amounts of data without slowing down. A scalable architecture makes it possible to run concurrent validation processes across multiple migration streams.

6. Real-Time Monitoring and Alerting

In addition, proactive monitoring features send urgent alerts when validation thresholds are breached or critical faults are detected. Migration teams can then react immediately, so minor discrepancies do not turn into significant project delays.

Together, these features form the validation framework that modern data reconciliation tools provide to support successful migration outcomes without compromising data integrity across complex enterprise data transfer projects.

Top Data Reconciliation Tools Used in Data Migration Projects

Data migration projects require robust reconciliation tools to ensure accuracy and prevent costly data discrepancies. Organizations increasingly rely on specialized validation software to maintain data integrity throughout complex migration processes. These tools significantly reduce migration risks while accelerating project timelines.

1. Enterprise Commercial Solutions

Informatica Data Validation

Informatica Data Validation is an established option for enterprise data reconciliation needs. The platform provides comprehensive source-to-target validation with advanced transformation verification capabilities.

Informatica also handles complex schema mappings and supports multiple database environments simultaneously, so organizations benefit from automated reconciliation workflows that scale across massive datasets.

QuerySurge

QuerySurge specializes in automated data testing and validation for migration projects. Its particular strength lies in SQL-based validation approaches that integrate with existing database infrastructures.

QuerySurge also offers real-time reconciliation monitoring and detailed exception reporting, enabling migration teams to identify and resolve discrepancies quickly.

Talend Data Quality

Talend Data Quality combines data profiling with comprehensive reconciliation capabilities. Its visual interface simplifies validation rule configuration while maintaining enterprise-grade performance standards.

IBM InfoSphere Information Analyzer

IBM InfoSphere Information Analyzer provides deep data profiling and reconciliation features designed for large-scale enterprise migrations. These capabilities support thorough validation across diverse data sources and complex transformation scenarios.

2. Cloud-Native Reconciliation Platforms

AWS Deequ / Glue Data Quality

Deequ is an open-source library from AWS, built on Apache Spark, for defining data quality checks on large datasets. Organizations migrating to AWS benefit from its fit with existing cloud infrastructure.

Additionally, AWS Glue Data Quality, which is built on Deequ, provides automated data validation within Glue pipelines and scales with migration volume.

Microsoft Purview + Fabric Data Quality

Microsoft’s reconciliation ecosystem integrates Purview’s governance capabilities with Fabric’s data quality features. Organizations using Microsoft technologies can therefore achieve broad validation coverage throughout migration lifecycles.

3. Open Source and Flexible Solutions

Great Expectations

Great Expectations offers Python-based data validation that appeals to organizations seeking customizable reconciliation frameworks. However, its flexibility requires technical expertise to implement effectively.

Great Expectations also provides extensive documentation and community support, making it accessible for teams with development capabilities.

Apache Griffin

Apache Griffin delivers open-source data quality measurement designed for big data environments. Organizations handling massive datasets benefit from its distributed processing capabilities.

dbt Tests

dbt tests provide SQL-based validation that integrates with modern data transformation workflows. Because dbt projects live in version control, validation standards stay consistent across migration projects.

4. Advanced AI-Powered Solutions

Custom AI-Based Reconciliation Frameworks

Finally, some organizations develop custom artificial intelligence frameworks for intelligent data reconciliation. These solutions use machine learning algorithms to identify complex data patterns and anomalies that traditional tools might miss.

Furthermore, AI-powered reconciliation can adapt to data characteristics over time, improving validation accuracy while reducing the need for manual intervention.

5. Selection Criteria for Migration Success

When choosing data reconciliation tools, organizations should evaluate several critical factors. First, scalability requirements must align with migration volume expectations. Additionally, integration capabilities with existing infrastructure significantly impact implementation success.

Moreover, budget considerations often determine whether commercial or open-source solutions provide better value. The technical expertise available within migration teams also influences tool selection significantly.

6. Implementation Best Practices

Successful tool implementation requires comprehensive planning and stakeholder alignment. Therefore, organizations should establish clear validation requirements before selecting specific reconciliation platforms.

Pilot testing with representative data samples then helps identify potential configuration issues before full-scale migration begins.

Modern data reconciliation tools continue evolving to address increasingly complex migration scenarios while maintaining user-friendly interfaces that accelerate adoption across diverse organizational environments.


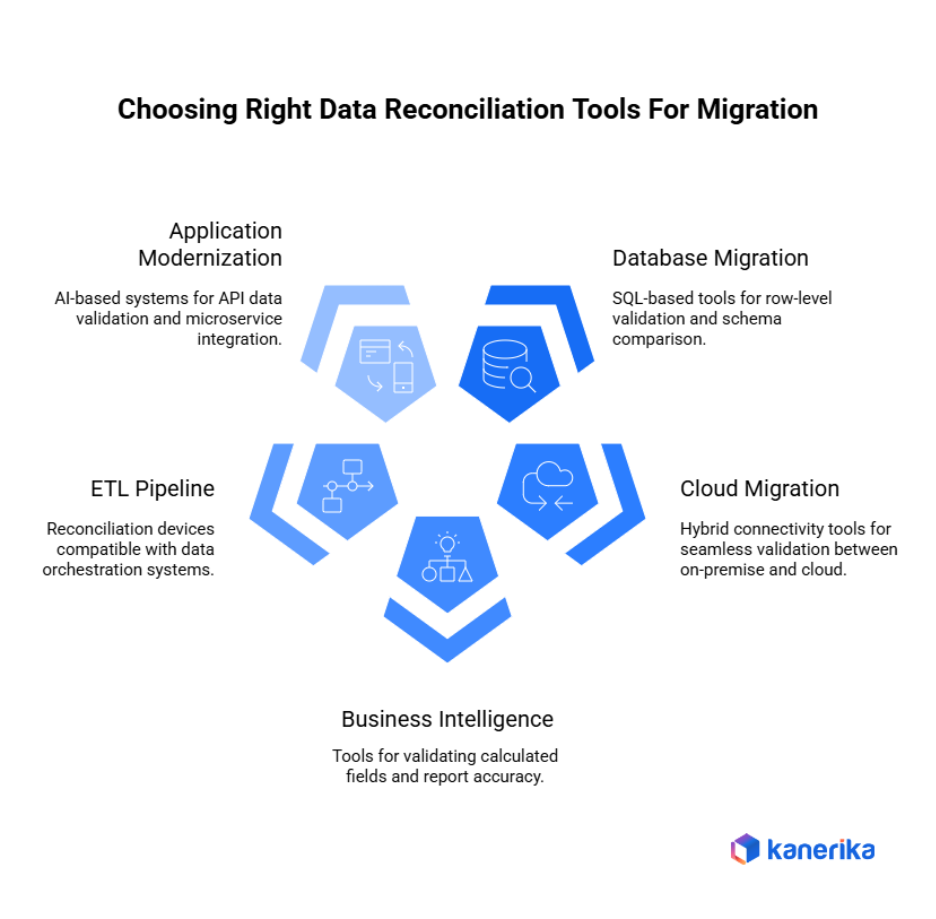

Choosing the Right Data Reconciliation Tools for Different Migration Types

Different migration scenarios need dedicated data reconciliation methods and toolsets. To achieve the best accuracy and performance, organizations should choose validation tools that match their specific migration needs.

1. Database-to-Database Migration

First of all, SQL-based reconciliation tools such as QuerySurge and IBM InfoSphere are useful when migrating between databases. These platforms provide full row-level validation and schema comparison.

Additionally, tools such as Informatica Data Validation handle complex transformation logic across different database systems efficiently and without data degradation.

2. Cloud Migration Scenarios

Cloud migrations need reconciliation tools with hybrid connectivity. AWS Deequ and Microsoft Purview, for example, support validation between on-premises sources and cloud destinations.

Moreover, these cloud-native tools scale automatically to support different migration volumes without degrading performance.

3. Business Intelligence Transformations

Likewise, business intelligence migrations require dedicated validation of calculated fields and aggregated data. Tools such as Talend Data Quality cover report validation and dashboard reconciliation.

These solutions, in turn, help ensure reporting accuracy in migrated analytics environments.

4. ETL / Pipeline Migration

Additionally, ETL pipeline migrations benefit from reconciliation tools that are compatible with existing data orchestration systems. dbt Tests and Great Expectations, for example, offer ongoing validation within automated pipeline processes.

5. Application Modernization

Lastly, application modernization projects need reconciliation tools that handle API data validation and microservice integration. Custom AI-based frameworks can be flexible enough for demanding application migration cases.

Using migration-specific reconciliation tools provides broad validation coverage and efficient use of resources across data transformation initiatives.

Success Stories: Kanerika’s Proven Approach to Enterprise Data Migration

Proven Results vs. Negative Review Patterns

While negative reviews dominate many vendor pages for enterprise data migration services, clients working with experienced migration specialists tell different stories. These outcomes demonstrate the difference between generic vendors and the best data migration companies.

Case Study 1: UiPath to Power Automate Migration for Trax

Challenge

Trax depended on 16 UiPath automations that handled high‑volume invoice and supply chain tasks. Licensing costs were rising fast. They also had a strict 120‑day deadline. Their automations had custom integrations across web systems, APIs, databases, Excel, Word, and Microsoft 365, which increased risk during migration.

Solution

Kanerika performed a fast audit of all workflows. They used the FLIP Migration Workbench to automate the bulk of the conversion. The rollout started with a pilot phase and moved to full deployment after testing.

Results

- Migration finished in 90 days

- 50% reduction in manual effort due to automated conversion

- 75% savings in annual licensing costs after moving to Power Automate

- Zero workflow failures during transition

Case Study 2: Tableau to Power BI Migration using FLIP Accelerators

Challenge

The client relied heavily on Tableau for enterprise reporting but faced rising licensing fees. Their teams used Microsoft 365 daily, yet Tableau lacked native integration. They needed to move to Power BI without losing dashboard logic or performance.

Solution

Kanerika used the FLIP Data Migration Accelerator to convert dashboards. The accelerator automatically mapped visuals, calculations, and data relationships. Complex dashboards were manually reviewed to ensure accuracy.

Results

- 80% of the migration effort was automated using FLIP

- 100% of dashboard logic preserved

- 40 to 60% reduction in BI licensing and maintenance costs after switching to Power BI

- Analytics delivery time improved by up to 30% due to Microsoft ecosystem integration

What Makes These Outcomes Different

These positive results reflect Kanerika’s execution-first approach that directly addresses the failure patterns highlighted in customer reviews:

Engineering-Led Discovery Implementation

- Eliminates “didn’t understand our environment” complaints through comprehensive technical assessments

- Prevents timeline surprises by identifying complexity factors upfront

- Reduces post-implementation issues through thorough dependency analysis

Platform-Specific Accelerator Usage

- Prevents manual rework issues through automated conversion tools

- Eliminates learning-curve problems with dedicated platform expertise

- Reduces project risk through proven, tested migration frameworks

Integrated Governance Strategy

- Addresses compliance gaps that derail projects through built-in tools (KANGovern, KANGuard, KANComply)

- Prevents security issues through early governance implementation

- Ensures regulatory compliance from project start to completion

Microsoft Specializations and Certifications

- Data & AI Azure specialization provides technical authority

- Proven track record across 100+ successful migrations

- Industry recognition for excellence in enterprise data migration services


How Kanerika Helps Companies Move To Modern Data Platforms

Kanerika supports companies that want to move from older data systems to modern platforms without slowing down their business. Our FLIP migration accelerators help teams shift from tools such as Informatica, SSIS, Tableau, and SSRS to Talend, Microsoft Fabric, and Power BI in a smooth, predictable way. We handle the full process from the first review of your setup to the final cutover so your data stays accurate, protected, and ready for reporting or analysis. This gives your team confidence that the move will not disrupt daily work.

We also strengthen how systems connect across your organization so information flows without breaks. This includes real-time data sync, API-based automation, and cloud-ready designs that support both on-premises systems and cloud platforms. When data travels cleanly across all tools, your teams can work with a single version of truth and avoid the usual delays caused by manual fixes or duplicate inputs. The outcome is steady reporting, better visibility, and smoother operations across the board.

Kanerika works closely with your teams to understand how your business runs, what you want to achieve, and where your data challenges actually come from. This helps us design migration plans that match your real needs instead of forcing you into a fixed template. Our work in banking, retail, logistics, healthcare, and manufacturing gives us the experience to cut costs, reduce risk, and strengthen security. With Kanerika, you gain a long-term partner for data migration and a clear path to modern analytics and AI readiness that supports your plans.

Frequently Asked Questions

1. What are data reconciliation tools?

Data reconciliation tools compare data between source and target systems to ensure accuracy and consistency. They verify record counts, field values, and business totals. These tools are commonly used during data migration, reporting, and system integration projects. Their main goal is to ensure migrated data can be trusted by both technical and business teams.

2. Why are data reconciliation tools important in data migration?

During data migration, mismatches can occur due to transformations, schema changes, or data quality issues. Reconciliation tools detect these issues early and prevent incorrect data from moving into production. This reduces migration risk and avoids rework after go-live. They help organizations achieve confident migration sign-off.

3. What types of checks do data reconciliation tools perform?

These tools perform row-level comparisons, aggregate checks, and rule-based validations. They also validate business KPIs and tolerance thresholds. Some tools support real-time and batch reconciliation. This ensures both technical correctness and business accuracy.

4. Can data reconciliation tools handle large data volumes?

Yes, modern automated data reconciliation tools are designed to scale. They can process millions or billions of records efficiently. This makes them suitable for cloud, data lake, and enterprise data warehouse migrations. They eliminate the need for slow and error-prone manual checks.

5. How do data reconciliation tools support compliance and audits?

Reconciliation tools generate audit-ready reports that show data accuracy and completeness. They provide traceability, logs, and validation results. This is essential for regulations like SOX, GDPR, HIPAA, and PCI-DSS. They help enterprises demonstrate data integrity to auditors and regulators.

6. Are data reconciliation tools used only after migration?

No, reconciliation tools are used before, during, and after migration. Pre-migration profiling sets baselines, during-migration checks catch issues early, and post-migration validation confirms success. This continuous approach reduces overall migration risk. It also prevents surprises after go-live.

7. How should enterprises choose the right data reconciliation tool?

Enterprises should consider data volume, migration complexity, automation needs, and integration with existing tools. Support for source-to-target validation and audit reporting is critical. The right tool should align with both technical and business requirements. Ease of use and scalability should also be key decision factors.