Data integration is changing fast. Companies need to connect more systems, handle more data, and do it all in real time. Global data volumes are expected to reach 200 zettabytes in 2025, which means businesses face serious pressure to keep their data organized and accessible while staying fast and accurate.

Old batch processing can’t keep up anymore. Most companies are moving to newer systems that handle data instantly, work across multiple clouds, and automate most of the heavy lifting with cloud data integration.

The numbers show this shift. The data integration market is projected to grow from $17.58 billion in 2025 to $33.24 billion by 2030, a 13.6% compound annual growth rate. Banks, hospitals, retailers, and manufacturers are all moving from older ETL systems to platforms that handle real-time analytics and work across different cloud providers. This guide covers what’s actually happening in data integration right now and how to pick the right tools.

Key Takeaways

- Real-time data integration through change data capture is now essential for companies that need instant analytics and want to make faster decisions

- Zero-ETL removes transformation layers but needs careful planning for security and compliance

- Data observability prevents pipeline failures and helps companies meet regulatory requirements

- Consumption pricing gives you flexibility but you need to watch costs closely to avoid surprises

- Modern platforms combine quality checks, tracking, and automatic schema updates in one place

Real-Time Data Integration and Event-Driven Pipelines

Batch processing is too slow for most businesses now. If you need to catch fraud, manage inventory, or personalize customer experiences, you can’t wait hours for data to update. Real-time integration moves data between systems in seconds, so you can act on information as it happens.

How Change Data Capture Works

Change data capture (CDC) makes this work. CDC tracks changes in databases and sends them to other systems right away. Unlike old methods that copy entire tables over and over, log-based CDC reads the database’s transaction log. It catches every insert, update, and delete while adding only about 1-3% extra load to your database. According to Gartner’s research on data management, CDC has become a critical capability for enterprises requiring real-time data synchronization.
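To make the log-based approach concrete, here is a minimal Python sketch of the consuming side. It assumes events shaped like Debezium's change-event envelope (an `op` code of `c` for create, `u` for update, `d` for delete, or `r` for a snapshot read, plus `before` and `after` row images) and applies them to an in-memory dict standing in for a target table. The `apply_change` helper and the `id` key column are illustrative assumptions, not part of any tool's API.

```python
from typing import Any, Dict, List, Optional

Row = Dict[str, Any]


def apply_change(target: Dict[Any, Row], event: Optional[Dict[str, Any]], key: str = "id") -> None:
    """Apply one Debezium-style change event to an in-memory target table.

    `target` maps primary-key values to row dicts (a stand-in for a warehouse table).
    """
    if event is None:
        # Kafka tombstones (null values) that follow deletes carry no row data.
        return
    op = event["op"]
    if op in ("c", "r", "u"):
        # Creates, snapshot reads, and updates carry the new row image in `after`;
        # writing it as an upsert keeps the target in step with the source.
        after = event["after"]
        target[after[key]] = dict(after)
    elif op == "d":
        # Deletes carry only the old row image in `before`.
        target.pop(event["before"][key], None)
    else:
        raise ValueError(f"unknown op code: {op!r}")


# A short change stream in log order: insert, update, insert, delete, tombstone.
events: List[Optional[Dict[str, Any]]] = [
    {"op": "c", "before": None, "after": {"id": 1, "status": "new"}},
    {"op": "u", "before": {"id": 1, "status": "new"}, "after": {"id": 1, "status": "paid"}},
    {"op": "c", "before": None, "after": {"id": 2, "status": "new"}},
    {"op": "d", "before": {"id": 2, "status": "new"}, "after": None},
    None,
]

table: Dict[Any, Row] = {}
for event in events:
    apply_change(table, event)

print(table)  # {1: {'id': 1, 'status': 'paid'}}
```

In a real deployment the events would arrive from a Kafka topic and the target would be a warehouse table, but the upsert-or-delete logic is the same idea.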

Common CDC Implementation Patterns

You can use CDC in different ways:

- Stream changes straight to data warehouses for real-time analytics

- Replicate data across cloud platforms for disaster recovery

- Send events to message queues where multiple apps can use them

- Publish to streaming platforms like Apache Kafka for event-driven architectures

Tools like Debezium, Striim, Oracle GoldenGate, AWS Database Migration Service, and Azure Data Factory handle most of the work automatically. They detect schema changes, transform data, and make sure everything stays in sync.

Evaluating Real-Time Integration Tools

When you’re looking at real-time tools, check a few things:

- Speed Requirements: How fast does it need to be? Fraud detection needs millisecond speed. Dashboards can usually wait a few seconds.

- Throughput Capacity: Can it handle your peak loads without falling behind?

- Schema Management: Does it keep working when your database schema changes?

- Data Type Support: Make sure it handles complex data types, deletes records properly, and can replay events if something goes wrong.
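Replay is only safe if applying the same event twice cannot corrupt the target. One common approach, sketched below under simplifying assumptions, is to store the last applied log offset alongside the data and skip anything at or below it. The `OffsetTrackingSink` class is hypothetical; real tools implement this with their own checkpointing.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


@dataclass
class OffsetTrackingSink:
    """Hypothetical target that records the last log offset it applied."""

    rows: Dict[Any, Dict[str, Any]] = field(default_factory=dict)
    last_offset: int = -1

    def apply(self, offset: int, op: str, key: Any, row: Optional[Dict[str, Any]]) -> bool:
        """Apply one change; return False if it was already applied earlier."""
        if offset <= self.last_offset:
            # Already applied before a restart: skipping makes replay idempotent.
            return False
        if op == "d":
            self.rows.pop(key, None)
        else:
            self.rows[key] = row
        self.last_offset = offset
        return True


log = [
    (0, "c", 1, {"id": 1, "qty": 5}),
    (1, "u", 1, {"id": 1, "qty": 7}),
    (2, "c", 2, {"id": 2, "qty": 1}),
]

sink = OffsetTrackingSink()
for entry in log:
    sink.apply(*entry)

# Simulate a restart that rewinds the consumer to offset 1 and replays from there.
replayed = [sink.apply(*entry) for entry in log[1:]]
print(replayed, sink.rows)
```

For this to hold across crashes, the rows and the offset must be committed in the same transaction; otherwise a failure between the two writes can still cause gaps or duplicates.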

Business Impact and ROI

Companies using real-time CDC see big returns. According to recent research, organizations get 171-295% ROI within three years, mostly from:

- Faster decisions based on current data

- Lower operational costs through automation

- Better customer experiences from real-time personalization
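Assuming the research uses the standard definition of ROI (net benefit divided by cost), the quoted range translates into benefit-to-cost multiples as follows, where $B$ is total benefit and $C$ is total cost over the three years:

$$
\mathrm{ROI} = \frac{B - C}{C}
\qquad\Longrightarrow\qquad
B = (1 + \mathrm{ROI})\,C
$$

$$
\mathrm{ROI} = 1.71 \;\Rightarrow\; B = 2.71\,C,
\qquad
\mathrm{ROI} = 2.95 \;\Rightarrow\; B = 3.95\,C
$$

In other words, each dollar spent would return roughly 2.71 to 3.95 dollars in total benefit, or 1.71 to 2.95 dollars after subtracting the original cost.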

Learn more about implementing automated data integration in your organization.

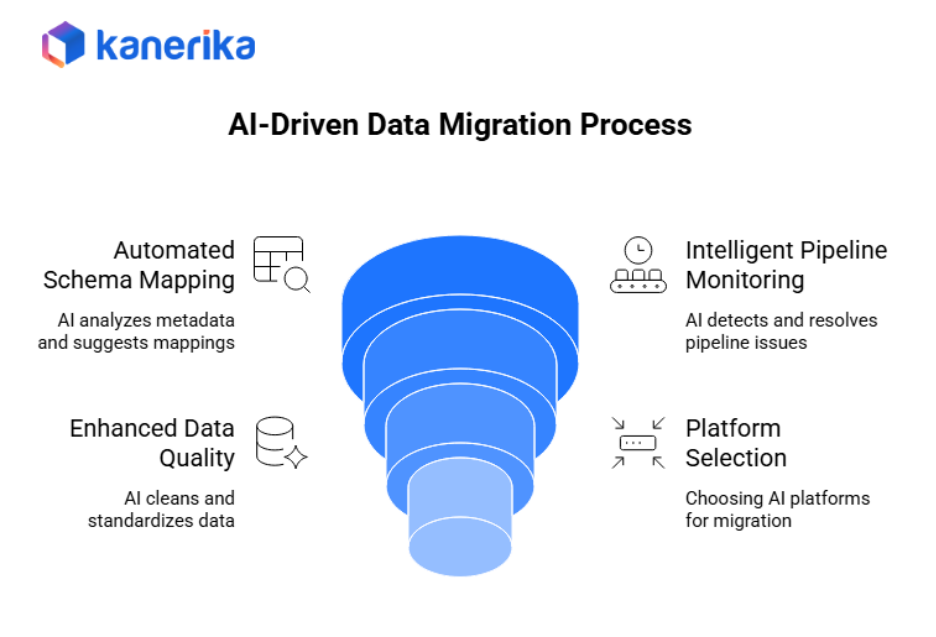

AI-Driven Automation Across Pipelines

AI is making data integration less manual. Instead of spending hours mapping fields and writing rules, you can let AI handle most of it. Automation cuts manual work by 40-60% while making pipelines more reliable. McKinsey research shows that AI-powered automation can reduce data pipeline development time by up to 50% while improving data quality.

1. Automated Schema Mapping

Schema mapping used to take forever. Engineers would analyze source and target systems, then create transformation rules for every field that has a different name or format. AI changes this:

- Platforms like Informatica’s CLAIRE engine analyze your metadata and sample data

- They suggest how fields should connect automatically

- The system learns from your corrections and gets better over time

- Pattern recognition handles similar mappings across multiple systems
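Engines like CLAIRE combine metadata, sample data, and feedback from your corrections. The sketch below shows only the simplest ingredient, field-name similarity, using Python's standard `difflib`; it is an illustrative stand-in, not how any vendor's engine works. `suggest_mappings` and the 0.6 threshold are assumptions.

```python
import difflib
import re
from typing import Dict, List, Optional


def normalize(name: str) -> str:
    """Lowercase a field name and turn camelCase and snake_case into spaced words."""
    name = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", " ", name)  # "FirstName" -> "First Name"
    return re.sub(r"[_\-\s]+", " ", name).strip().lower()


def suggest_mappings(
    source: List[str], target: List[str], threshold: float = 0.6
) -> Dict[str, Optional[str]]:
    """Suggest the most similar target field for each source field, or None if nothing is close."""
    suggestions: Dict[str, Optional[str]] = {}
    for src in source:
        best, best_score = None, 0.0
        for tgt in target:
            score = difflib.SequenceMatcher(None, normalize(src), normalize(tgt)).ratio()
            if score > best_score:
                best, best_score = tgt, score
        suggestions[src] = best if best_score >= threshold else None
    return suggestions


source_fields = ["cust_id", "FirstName", "email_addr", "zzz"]
target_fields = ["customer_id", "first_name", "email_address", "created_at"]
print(suggest_mappings(source_fields, target_fields))
# {'cust_id': 'customer_id', 'FirstName': 'first_name', 'email_addr': 'email_address', 'zzz': None}
```

A production matcher would also compare data types and sample values, and would stop two source fields from claiming the same target field.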

2. Intelligent Pipeline Monitoring

AI also watches your pipelines. It spots weird patterns in data volumes, processing times, or error rates before they cause problems:

- Proactive Alerts: You get warnings early instead of waiting for things to break

- Root Cause Analysis: When errors happen, AI examines logs and recent changes to find the cause fast

- Auto-Remediation: Some platforms even fix common issues automatically, like missing files or temporary network problems
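Auto-remediation for transient failures usually comes down to retrying with backoff. Here is a minimal Python sketch of exponential backoff with jitter; `retry_with_backoff` and its defaults are assumptions for illustration, and which exceptions count as retryable depends on your connectors.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    task: Callable[[], T],
    retryable: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `task`, retrying transient errors with exponentially growing, jittered waits."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except retryable:
            if attempt == max_attempts:
                raise  # Out of attempts: surface the error so monitoring can alert on it.
            # The cap grows 0.5s, 1s, 2s, ... up to max_delay; "full jitter" picks a random
            # wait below the cap so many failed jobs do not retry in lockstep.
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")


# Demo: a fetch that fails twice with a transient network error, then succeeds.
calls = {"count": 0}


def flaky_fetch() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary network problem")
    return "file contents"


result = retry_with_backoff(flaky_fetch, sleep=lambda seconds: None)  # skip real waits in the demo
print(result, calls["count"])  # file contents 3
```

Errors outside the `retryable` tuple (a `ValueError` from bad data, for example) propagate immediately, since retrying them would not help.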

3. Enhanced Data Quality

Data quality gets better too:

- Machine learning learns what normal data looks like for each field

- It flags anything unusual that simple rules miss

- Natural language processing cleans up text and standardizes addresses

- AI pulls structured data from messy sources
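Vendors use various ML models for this. As a simple, explainable stand-in, the sketch below learns what is normal for each numeric field from historical values using the median and the median absolute deviation (MAD), then flags values whose modified z-score exceeds 3.5, a commonly used cutoff. The function names and sample data are hypothetical.

```python
import statistics
from typing import Dict, List, Tuple

Profile = Dict[str, Tuple[float, float]]


def fit_profile(history: Dict[str, List[float]]) -> Profile:
    """Learn a robust center (median) and spread (MAD) for each numeric field."""
    profile: Profile = {}
    for field_name, values in history.items():
        median = statistics.median(values)
        mad = statistics.median(abs(v - median) for v in values)
        profile[field_name] = (median, mad)
    return profile


def flag_outliers(record: Dict[str, float], profile: Profile, threshold: float = 3.5) -> List[str]:
    """Return the fields whose value looks unusual compared with the learned profile."""
    flagged = []
    for field_name, (median, mad) in profile.items():
        value = record.get(field_name)
        if value is None:
            continue
        if mad == 0:
            # No historical spread: any deviation from the usual value is unusual.
            if value != median:
                flagged.append(field_name)
            continue
        modified_z = 0.6745 * (value - median) / mad
        if abs(modified_z) > threshold:
            flagged.append(field_name)
    return flagged


history = {
    "order_amount": [20, 22, 19, 25, 21, 23, 20, 24],
    "items": [1, 2, 1, 3, 2, 1, 2, 2],
}
profile = fit_profile(history)
print(flag_outliers({"order_amount": 950, "items": 2}, profile))  # ['order_amount']
print(flag_outliers({"order_amount": 23, "items": 2}, profile))   # []
```

Because the profile is learned from data, a fixed rule such as "amount must be under 10,000" is not needed; the threshold adapts to each field's history.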

4. Choosing AI-Powered Platforms

When you’re checking out AI platforms, see if they:

- Handle changing data patterns over time

- Let you train custom models with your data

- Explain why they made specific decisions

- Provide transparency in automated recommendations

You want to understand what the AI is doing, not just trust it blindly.

Cloud-Native iPaaS, MultiCloud, and Integration Fabric

Integration platform as a service has gotten a lot better. Over 70% of growth now comes from cloud services as companies ditch on-premise tools. Cloud platforms handle the infrastructure, scale automatically, and charge based on usage instead of forcing you to buy fixed capacity. Forrester reports that cloud-based integration platforms reduce operational overhead by up to 60% compared to traditional on-premise solutions.

1. Modern iPaaS Capabilities

Modern iPaaS handles different integration types in one place:

- API Development: Build REST and GraphQL APIs for application consumption

- Event-Driven Workflows: Set up workflows that respond to business triggers in real time

- Data Warehouse Pipelines: Create continuous replication to cloud data warehouses

- Pre-Built Connectors: Access hundreds of connectors for common enterprise apps

This cuts down on tool sprawl and keeps governance consistent. Big vendors like MuleSoft, Boomi, SnapLogic, and Workato offer hundreds of pre-built connectors for apps like Salesforce, SAP, and Microsoft Dynamics, plus databases and cloud services.

2. Multicloud Integration

Multicloud matters more now because companies spread workloads across AWS, Azure, and Google Cloud while keeping some systems on-premise. Data needs to move between all these places:

- Cloud-agnostic tools prevent vendor lock-in

- You can put workloads wherever makes sense for cost or compliance

- Private connections through VPC peering or AWS PrivateLink improve security

- Avoid sending everything over the public internet

Check that platforms support private connections instead of routing all traffic over the public internet. Explore our cloud data integration solutions for more details.

3. Data Fabric Architecture

Data fabric goes further than point-to-point connections:

- Intelligent Discovery: Uses metadata and AI to understand how data relates across systems

- Automatic Relationships: Finds relevant data automatically for specific business questions

- Unified Views: Presents unified views without building custom integrations for every question

- Query Federation: Queries multiple sources without always moving data around

Making this work requires strong metadata management across every connected source.

Data Integration Services in the California

Explore Kanerika’s data integration services in California, designed to seamlessly connect diverse data sources, streamline workflows, and enhance data accessibility.

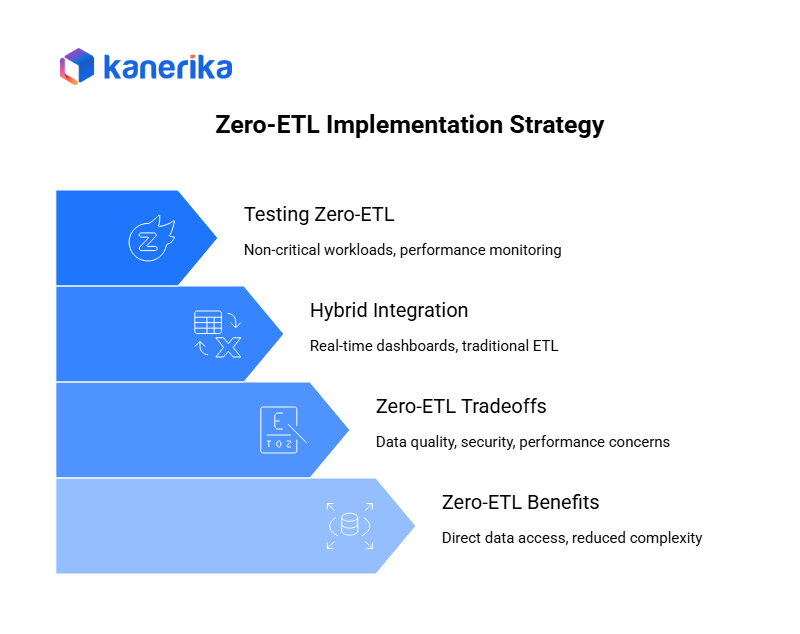

Zero-ETL and Hybrid Approaches

When Zero-ETL Works Best

Zero-ETL works best when your target system can handle transformation during queries. Modern cloud data warehouses like Snowflake, BigQuery, Redshift, and Azure Synapse do this well:

- You can connect operational databases straight to analytics platforms

- Analysts can query live data without building pipelines

- Big vendors are adding native integrations to make this easier

- Reduces infrastructure complexity and maintenance overhead

1. Zero-ETL Tradeoffs

But there are tradeoffs:

- Data Quality: Problems are harder to catch when there’s no transformation stage to validate data

- Security Concerns: Source system permissions might not match what analysts should see

- Performance Issues: Query performance can be unpredictable when you’re hitting operational systems in real time

- Compliance Challenges: If you operate in countries with strict data laws, zero-ETL might not work because you can’t always control where data gets processed

2. Hybrid Integration Strategy

Most companies end up using both approaches:

- Real-time dashboards might query sources directly for maximum freshness

- Complex analytics with lots of business rules still use traditional ETL

- Different workloads require different patterns

You need platforms that support both without locking you into one approach.

3. Testing Zero-ETL

Test zero-ETL with less critical workloads first:

- Pick non-critical dashboards or reports

- Monitor performance under realistic load

- Check actual costs versus projections

- Verify governance requirements are met

- Document lessons learned before expanding

See how performance holds up, what it costs, and whether governance is manageable before you move important business intelligence systems over.

Low-Code and No-Code Adoption for Citizen Integrators

More people can build integrations now thanks to low-code tools. Gartner says 70% of new apps will use low-code by 2025, with 80% of users coming from outside IT by 2026. This helps when you don’t have enough data engineers and need to get things done faster.

1. How Low-Code Platforms Work

Low-code platforms use visual interfaces:

- Drag and drop components for data sources, transformations, and destinations

- Fill out forms instead of writing code

- Platform generates code behind the scenes automatically

- Handles connection pooling, error handling, and retries

Business analysts and data analysts can build working integrations without knowing programming languages or database internals.

2. Governance Requirements

But you need some guardrails:

- Approval Workflows: Technical people review things before they go to production

- Testing Environments: Proper staging to validate without impacting live systems

- Clear Ownership: Define who owns each integration and handles issues

- Access Controls: Use role-based access to control who can see sensitive data

- Audit Trails: Track who created what and when for troubleshooting

- Template Libraries: Provide approved patterns to help people avoid mistakes

3. Choosing the Right Tools

Different tools work for different needs:

Simple Tools:

- Easy for business users

- Fast to learn and deploy

- Can’t handle complex transformations

- Limited performance optimization

Sophisticated Platforms:

- Let you drop into code when needed

- Handle complex business logic

- Support performance tuning

- Require more technical knowledge

Most companies need both types. Use simple tools for connecting SaaS apps. Use full-featured platforms for complex scenarios with legacy systems or tricky business logic. Just make sure everything feeds into your central monitoring system.

Data Ingestion vs Data Integration: How Are They Different?

Uncover the key differences between data ingestion and data integration, and learn how each plays a vital role in managing your organization’s data pipeline.

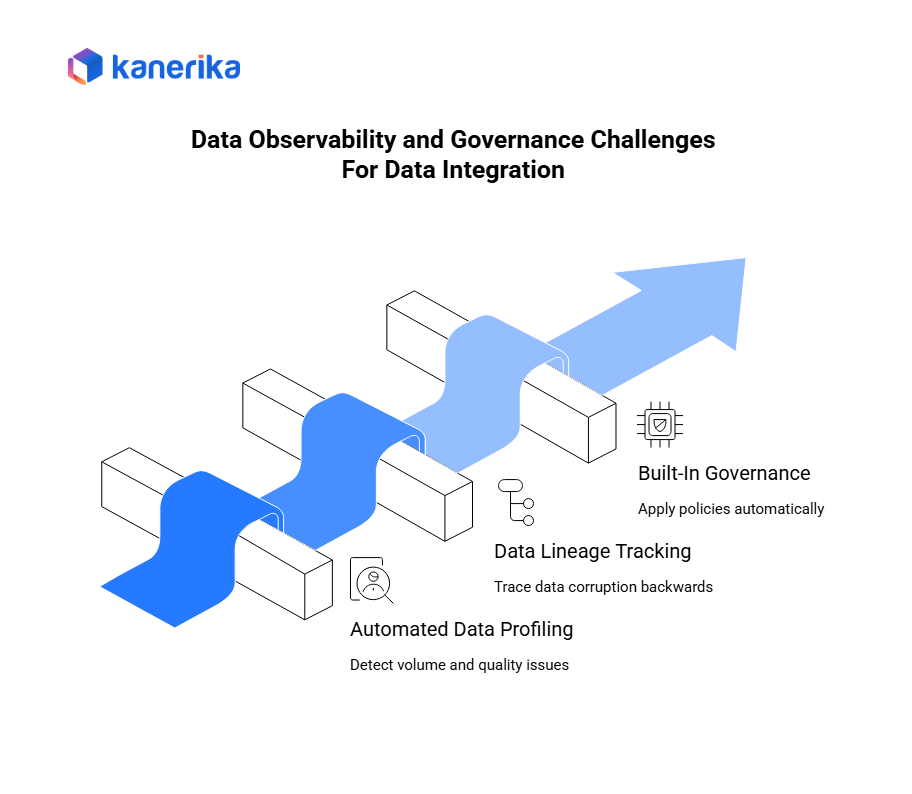

Data Observability, Metadata, and Governance Baked into Pipelines

Data observability is critical now. Old monitoring just checked if pipelines ran. Observability goes deeper to track data quality, completeness, and freshness through the whole process. Research shows governance failures affect 80% of data projects, and poor data quality costs millions in bad decisions and wasted time. According to Gartner, the average cost of poor data quality is $12.9 million annually for organizations.

1. Automated Data Profiling

Observability platforms profile your data automatically:

Tracking Metrics:

- Record counts to detect volume anomalies

- Null percentages showing completeness issues

- Value distributions revealing quality problems

- Schema changes that might break systems

Machine Learning Alerts:

- Establishes what’s normal for your data

- Alerts when something looks unusual

- Catches problems before they cause failures

- Reduces false positives over time
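The profiling loop can be sketched in a few lines of Python. This is an illustration under stated assumptions, not any observability product's API: `profile_batch` and `check_batch` are hypothetical, and a fixed z-score against recent row counts plus a fixed null-percentage ceiling stand in for the learned baselines real platforms build.

```python
import statistics
from typing import Any, Dict, List

Profile = Dict[str, Any]


def profile_batch(rows: List[Dict[str, Any]]) -> Profile:
    """Compute record count, column set, and null percentage per column for one batch."""
    columns = sorted({col for row in rows for col in row})
    n = len(rows)
    null_pct = {
        col: (100.0 * sum(1 for row in rows if row.get(col) is None) / n) if n else 0.0
        for col in columns
    }
    return {"row_count": n, "columns": columns, "null_pct": null_pct}


def check_batch(
    current: Profile, history: List[Profile], z_threshold: float = 3.0, max_null_pct: float = 5.0
) -> List[str]:
    """Compare a batch profile with recent history and return human-readable alerts."""
    alerts = []
    counts = [h["row_count"] for h in history]
    mean, stdev = statistics.mean(counts), statistics.pstdev(counts)
    deviation = abs(current["row_count"] - mean)
    if (stdev == 0 and deviation > 0) or (stdev > 0 and deviation / stdev > z_threshold):
        alerts.append(f"volume anomaly: {current['row_count']} rows vs. mean {mean:.0f}")
    expected_columns = history[-1]["columns"]
    if current["columns"] != expected_columns:
        alerts.append(f"schema change: {expected_columns} -> {current['columns']}")
    for col, pct in current["null_pct"].items():
        if pct > max_null_pct:
            alerts.append(f"completeness: {col} is {pct:.1f}% null")
    return alerts


# Hypothetical history: five normal daily loads of roughly 1,000 rows with two columns.
history = [
    {"row_count": c, "columns": ["email", "id"], "null_pct": {}}
    for c in (1000, 1020, 990, 1010, 980)
]
# Today's load is small, has a new column, and a quarter of the emails are missing.
todays_rows = [
    {"id": i, "email": None if i % 4 == 0 else f"user{i}@example.com", "phone": "555-0100"}
    for i in range(120)
]
alerts = check_batch(profile_batch(todays_rows), history)
for alert in alerts:
    print(alert)
```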

2. Data Lineage Tracking

Data lineage tracking shows how data flows from sources through transformations to final destinations:

- Impact Analysis: See what breaks when sources change

- Quality Debugging: Trace data backwards to find where corruption occurred

- Compliance Proof: Show regulators where personal data lives and how it’s used

- Automated Building: Modern platforms analyze transformation code to build lineage graphs automatically

Lineage tools also attach metadata about who owns the data, what the quality rules are, and what fields mean.
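At its core, lineage is a directed graph, and both impact analysis and backward debugging are graph traversals. A minimal sketch with a hypothetical hand-written edge list (real platforms build these edges by parsing transformation code and query logs):

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical lineage: each dataset maps to the datasets built directly from it.
LINEAGE: Dict[str, List[str]] = {
    "crm.customers": ["staging.customers"],
    "erp.orders": ["staging.orders"],
    "staging.customers": ["warehouse.dim_customer"],
    "staging.orders": ["warehouse.fact_sales"],
    "warehouse.dim_customer": ["warehouse.fact_sales", "bi.churn_dashboard"],
    "warehouse.fact_sales": ["bi.revenue_dashboard"],
}


def downstream(graph: Dict[str, List[str]], start: str) -> Set[str]:
    """Impact analysis: everything that could break if `start` changes (breadth-first search)."""
    seen: Set[str] = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


def upstream(graph: Dict[str, List[str]], target: str) -> Set[str]:
    """Quality debugging: every source that feeds `target`, found by walking edges backwards."""
    reverse: Dict[str, List[str]] = {}
    for parent, children in graph.items():
        for child in children:
            reverse.setdefault(child, []).append(parent)
    return downstream(reverse, target)


print(sorted(downstream(LINEAGE, "crm.customers")))
print(sorted(upstream(LINEAGE, "bi.revenue_dashboard")))
```

Changing `crm.customers` touches both dashboards, while a bad number on the revenue dashboard could originate in either the CRM or the ERP feed.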

3. Built-In Governance

Governance needs to be built in, not added later:

Automated Classification:

- Finds sensitive information like personal data or payment details

- Applies the right policies automatically

- Encrypts data in transit and at rest

- Updates as data sensitivity changes

Access Controls:

- Masking and tokenization hide sensitive values in test environments

- Role-based access ensures only authorized people can read or write data

- Audit trails track every access and change

If you’re in healthcare, finance, or government, check that platforms support compliance with regulations and standards like GDPR, HIPAA, SOC 2, ISO 27001, and PCI-DSS.
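Masking and tokenization can be sketched with Python's standard library. This assumes deterministic tokenization via a keyed hash (HMAC-SHA256), which keeps joins working because the same input always yields the same token but cannot be reversed; vault-based tokenization that supports detokenization works differently. The hard-coded demo key is for illustration only; in practice it would come from a key management service.

```python
import hashlib
import hmac


def tokenize(value: str, key: bytes) -> str:
    """Replace a sensitive value with a deterministic, non-reversible token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]  # 64 bits of the digest; keep more if collisions matter


def mask_card(card_number: str) -> str:
    """Show only the last four digits of a payment card number."""
    digits = [ch for ch in card_number if ch.isdigit()]
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


DEMO_KEY = b"demo-only-key"  # Illustration only: load the real key from your KMS or vault.

test_row = {"email": "jane@example.com", "card": "4111 1111 1111 1234"}
masked_row = {
    "email": tokenize(test_row["email"], DEMO_KEY),
    "card": mask_card(test_row["card"]),
}
print(masked_row["card"])  # ************1234
```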

Cost Control Models and Consumption Pricing

You need to understand how integration platforms charge. Most have moved from fixed licenses to consumption-based pricing, which aligns costs with usage but can surprise you if you’re not careful. Vendors meter usage in different ways. According to Flexera’s State of the Cloud Report, 82% of enterprises report challenges managing cloud costs, with unexpected consumption charges being a primary concern.

1. Per-Row Pricing

Per-row pricing charges for each record processed. This works well if your data volumes are stable, but spikes can get expensive. Make sure you understand how vendors count rows: some charge for updates and deletes separately, others count failed records, and a few charge for both extracting and loading data, which means you pay twice for the same movement.

2. Per-Connector Pricing

Per-connector pricing gives you unlimited data movement for each source-target pair at a fixed monthly fee. This makes budgeting easier but adds up fast when you’re connecting lots of systems. Calculate total costs for all your current and future connectors before committing, and watch out for premium pricing on enterprise apps like SAP or Oracle.

3. Compute-Based Pricing

Compute-based pricing charges for processing time or resources used. You control costs by tuning performance, but inefficient transformations can burn money without delivering value.
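Because each model meters something different, it helps to price the same workload under all three. The sketch below does that with made-up rates ($10 per million rows, $400 per connector, $2.50 per compute hour) and a hypothetical workload; substitute the figures from your own vendor quotes.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    rows_per_month: int
    connectors: int
    compute_hours: float


def per_row_cost(w: Workload, price_per_million_rows: float, bills_extract_and_load: bool = False) -> float:
    """Per-row model; some vendors count each row on extract and again on load."""
    billable_rows = w.rows_per_month * (2 if bills_extract_and_load else 1)
    return billable_rows / 1_000_000 * price_per_million_rows


def per_connector_cost(w: Workload, price_per_connector: float) -> float:
    """Per-connector model: a flat monthly fee per source-target pair, regardless of volume."""
    return w.connectors * price_per_connector


def compute_cost(w: Workload, price_per_hour: float) -> float:
    """Compute-based model: pay for processing time, so inefficient jobs cost more."""
    return w.compute_hours * price_per_hour


# Hypothetical monthly workloads; assume compute time scales with volume during the spike.
normal = Workload(rows_per_month=50_000_000, connectors=12, compute_hours=300)
spike = Workload(rows_per_month=200_000_000, connectors=12, compute_hours=1_200)

for label, w in [("normal month", normal), ("4x volume spike", spike)]:
    print(
        f"{label}: per-row ${per_row_cost(w, 10.0):,.0f} "
        f"(double-counted ${per_row_cost(w, 10.0, True):,.0f}), "
        f"per-connector ${per_connector_cost(w, 400.0):,.0f}, "
        f"compute ${compute_cost(w, 2.50):,.0f}"
    )
```

With these invented numbers, per-row pricing is cheapest in a normal month but quadruples during the spike, while per-connector pricing stays flat; the crossover point is what matters when comparing quotes.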

4. Cost Optimization Strategies

Here’s how to keep costs down:

Data Management:

- Set retention policies to avoid processing old data repeatedly

- Archive or delete data that’s no longer needed

- Use incremental processing with CDC or timestamps

- Avoid full refreshes when partial updates work

Scheduling:

- Run non-urgent jobs during off-peak times

- Take advantage of lower rates when available

- Batch similar operations together

- Schedule large loads for nights or weekends

Monitoring:

- Watch usage dashboards weekly to catch spikes early

- Set up cost alerts at specific thresholds

- Review bills monthly for unexpected charges

- Track cost per integration to find inefficiencies

Capacity Planning:

- Consider reserved capacity for steady workloads

- Use on-demand pricing for variable needs

- Right-size resources based on actual usage

- Scale down during low-activity periods

Don’t forget hidden costs like data egress fees when you move data between cloud regions or out to external systems.
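The incremental-processing strategy listed under Data Management can be sketched with a high-watermark query: each run pulls only rows whose `updated_at` is newer than the last value it saw. The example uses an in-memory SQLite table with a hypothetical `orders` schema. Timestamp-based extraction misses hard deletes, which is one reason log-based CDC is preferred for transactional systems.

```python
import sqlite3
from typing import List, Tuple

Row = Tuple[int, float, str]


def extract_incremental(conn: sqlite3.Connection, last_watermark: str) -> Tuple[List[Row], str]:
    """Fetch rows changed since `last_watermark` and return them with the new watermark."""
    # ISO-8601 strings in one consistent format compare correctly as text.
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # The newest timestamp seen becomes the starting point for the next run.
    # (Rows committed later with an equal or earlier timestamp would be missed.)
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 10.0, "2025-01-01T09:00:00"), (2, 25.5, "2025-01-01T10:30:00")],
)

# First run: everything since the epoch.
batch1, watermark = extract_incremental(conn, "1970-01-01T00:00:00")

# The source changes: one update and one new row.
conn.execute("UPDATE orders SET amount = 30.0, updated_at = '2025-01-02T08:00:00' WHERE id = 2")
conn.execute("INSERT INTO orders VALUES (3, 5.0, '2025-01-02T08:15:00')")

# Second run: only the two changed rows come back, not the whole table.
batch2, watermark = extract_incremental(conn, watermark)
print(batch2)
print(watermark)
```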

Security, Privacy, and Compliance

Security and privacy shape how you build integrations, especially in healthcare, finance, and government. Integration platforms handle sensitive data moving between systems, which creates risks. Rules like GDPR, CCPA, HIPAA, PCI-DSS, and SOC 2 set specific requirements for how you handle, store, and track data. IBM’s Cost of a Data Breach Report found that the average cost of a data breach reached $4.45 million in 2023, with breaches in data pipelines being particularly costly.

1. Encryption Standards

Encryption is the foundation. All good platforms encrypt data in transit using TLS 1.2 or higher. They also encrypt data at rest when it’s stored temporarily during processing. You should control the encryption keys through services like AWS KMS, Azure Key Vault, or HashiCorp Vault.

End-to-end encryption keeps data protected from source to destination without exposing it in the middle. The National Institute of Standards and Technology (NIST) provides comprehensive guidelines for data security in transit and at rest.
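Managed platforms handle this internally, but custom connectors need the same floor. A minimal sketch using Python's standard `ssl` module; `make_client_context` is a hypothetical helper name.

```python
import ssl
from typing import Optional


def make_client_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a client-side TLS context that verifies certificates and refuses TLS below 1.2."""
    # create_default_context() enables certificate verification and hostname checking.
    context = ssl.create_default_context(cafile=ca_file)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_client_context()
print(context.minimum_version, context.verify_mode, context.check_hostname)
```

The context can then be passed to standard clients, for example `urllib.request.urlopen(url, context=context)` or `http.client.HTTPSConnection(host, context=context)`, so any endpoint offering only older protocol versions fails the handshake.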

2. Data Residency Requirements

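Data residency matters because laws say certain data has to stay in specific countries or regions. Healthcare organizations often keep patient data in the US, although HIPAA itself does not strictly require it. GDPR gives people in the EU rights over their personal data and restricts transfers outside the European Economic Area unless the destination has an adequacy decision or appropriate safeguards are in place.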

Your integration platform needs to let you process and store data within required boundaries. You need to see exactly where your data is at each stage, including temporary storage, processing systems, backups, and logs.
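One practical way to get that visibility is to inventory every stage a pipeline touches and check it against the regions you are allowed to use. A minimal sketch, assuming a hypothetical hand-maintained stage inventory and AWS-style region names; it shows why logs and backups belong on the list too.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Stage:
    """One place the pipeline reads, writes, or keeps data (hypothetical inventory entry)."""

    name: str
    region: str


def residency_violations(stages: List[Stage], allowed_regions: Set[str]) -> List[str]:
    """List every stage that stores or processes data outside the allowed regions."""
    return [f"{s.name} runs in {s.region}" for s in stages if s.region not in allowed_regions]


# Hypothetical EU-only pipeline: temporary storage, logs, and backups are inventoried too.
pipeline = [
    Stage("source database", "eu-central-1"),
    Stage("staging bucket", "eu-west-1"),
    Stage("transform workers", "eu-west-1"),
    Stage("error logs", "us-east-1"),
    Stage("backups", "eu-central-1"),
]
violations = residency_violations(pipeline, {"eu-central-1", "eu-west-1"})
print(violations)  # ['error logs runs in us-east-1']
```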

3. Access Management

Access management controls who can view, change, or run integrations. Role-based access maps job roles to specific permissions. Single sign-on with Active Directory, Okta, or Azure AD makes user management easier and keeps security policies consistent. Use multi-factor authentication for privileged accounts.

Service accounts for automated processes should rotate credentials regularly and use secret management instead of hardcoded passwords. Audit logs need to capture everything: who accessed what data, who changed configurations, who deployed what, and all admin actions.
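Role-based checks, audit records, and runtime secrets can be sketched together in Python. The role names, permission strings, and helper functions are hypothetical; in production, an identity provider would supply roles, a secrets manager would populate the environment, and audit records would go to an append-only store.

```python
import json
import os
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"pipeline:view"},
    "data_engineer": {"pipeline:view", "pipeline:edit", "pipeline:run"},
    "platform_admin": {"pipeline:view", "pipeline:edit", "pipeline:run", "pipeline:deploy"},
}

AUDIT_LOG: List[str] = []  # Stand-in for an append-only audit store.


def authorize(user: str, role: str, action: str, resource: str) -> bool:
    """Check a role's permission and record the decision, whether allowed or denied."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "resource": resource,
        "allowed": allowed,
    }))
    return allowed


def get_secret(name: str) -> str:
    """Read a credential injected at runtime instead of hardcoding it in pipeline code."""
    value = os.environ.get(name)
    if value is None:
        raise KeyError(f"secret {name!r} is not set; inject it from your secrets manager")
    return value


print(authorize("maria", "analyst", "pipeline:run", "orders_cdc"))     # False
print(authorize("li", "data_engineer", "pipeline:run", "orders_cdc"))  # True
print(len(AUDIT_LOG))  # 2
```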

How Organizations Implement Data Integration Successfully

Good integration projects start small and focused. Don’t try to transform everything at once. Start with a pilot that shows real value while your team learns the tools. This cuts risk, gets you quick wins to build support, and establishes patterns you can reuse. Learn more about our proven data integration implementation approach.

Phase 1: Assessment and Planning (Weeks 1-2)

Spend the first week or two on inventory and assessment:

Data Inventory:

- Identify all source systems and data types

- Document data volumes and growth rates

- Track how often data updates

- Assess current quality levels

Stakeholder Engagement:

- Talk to business users about pain points

- Understand which integrations deliver most value

- Document success criteria and metrics

- Get buy-in from executive sponsors

Requirements Documentation:

- Security and compliance requirements upfront

- Data sensitivity classifications

- Regulatory constraints (GDPR, HIPAA, etc.)

- Performance and latency requirements

This phase produces a roadmap that sequences work based on business impact and technical dependencies.

Phase 2: Pilot Implementation (Weeks 3-6)

Weeks 3-6 are for building 3-5 priority integrations in a pilot environment:

Integration Development:

- Pick 3-5 critical data flows

- Set up CDC for core transactional systems

- Configure transformations and mappings

- Implement error handling and retries

Testing:

- Check data accuracy against source systems

- Make sure no records are lost

- Validate transformation logic

- Test performance under realistic loads

Baseline Metrics:

- Capture processing times before optimization

- Track resource usage (CPU, memory, network)

- Measure data freshness and latency

- Document costs for comparison
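For the accuracy and no-lost-records checks in the Testing step, a common technique is to fingerprint each row on both sides and compare by primary key. A minimal sketch with hypothetical in-memory rows; in practice you would pull both sides with queries and normalize types (decimals versus floats, for example) before hashing.

```python
import hashlib
from typing import Any, Dict, Iterable, List


def row_fingerprints(rows: Iterable[Dict[str, Any]], key: str) -> Dict[Any, str]:
    """Map each primary key to a SHA-256 hash of the row's column values."""
    fingerprints = {}
    for row in rows:
        # Sort columns so the hash does not depend on column order.
        canonical = "|".join(f"{col}={row[col]!r}" for col in sorted(row))
        fingerprints[row[key]] = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return fingerprints


def reconcile(
    source: Iterable[Dict[str, Any]], target: Iterable[Dict[str, Any]], key: str = "id"
) -> Dict[str, List[Any]]:
    """Report lost records, unexpected records, and records whose values differ."""
    src = row_fingerprints(source, key)
    tgt = row_fingerprints(target, key)
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


source_rows = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 20.0}, {"id": 3, "amount": 30.0}]
target_rows = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 21.0}, {"id": 4, "amount": 5.0}]
report = reconcile(source_rows, target_rows)
print(report)
# {'missing_in_target': [3], 'unexpected_in_target': [4], 'mismatched': [2]}
```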

Phase 3: Production Deployment (Weeks 7-12)

Weeks 7-12 focus on production deployment and enabling your team:

Go-Live Activities:

- Move pipelines to production with proper monitoring

- Set up alerting for failures and degradation

- Implement data quality rules and checks

- Configure backup and recovery procedures

Optimization:

- Tune performance based on real usage

- Optimize costs through resource right-sizing

- Refine transformation logic

- Address any bottlenecks found

Enablement:

- Write documentation and runbooks

- Train operations teams on monitoring

- Conduct demos for stakeholders

- Gather feedback for improvements

Comparison of Leading Integration Approaches

| Approach | Best For | Key Strengths | Common Challenges | Typical Cost Range |
| --- | --- | --- | --- | --- |
| Traditional ETL | Complex transformations, data warehousing | Proven reliability, comprehensive tooling | Batch latency, infrastructure overhead | $50K-500K+ annually |
| Real-time CDC | Operational analytics, instant sync | Sub-second latency, minimal source load | Complexity, schema management | $30K-300K+ annually |
| iPaaS Cloud | Multi-cloud, hybrid environments | Managed service, rapid deployment | Consumption costs at scale | $20K-250K+ annually |
| Zero-ETL | Cloud data warehouse analytics | Simplified architecture, reduced maintenance | Limited transformation, governance gaps | $10K-100K+ annually |
| Low-code Platforms | Citizen integrator enablement | Business user friendly, fast development | Limited complex logic handling | $15K-150K+ annually |

Case Studies: Kanerika’s Successful Data Integration Projects

1. Unlocking Operational Efficiency with Real-Time Data Integration

The client is a prominent media production company operating in the global film, television, and streaming industry. While upgrading its CRM to the new MS Dynamics CRM, the company had to work across multiple systems, which slowed down response times and raised security and efficiency concerns.

Kanerika resolved the problem by leveraging tools like Informatica and Dynamics 365. Here’s how our real-time data integration solution streamlined and expedited operations and reduced operating costs while maintaining data security:

- Implemented iPaaS integration with the Dynamics 365 connector, ensuring future-ready app integration and reducing pension processing time

- Enhanced Dynamics 365 with real-time integration of paginated data, ensuring compliance with PHI and PCI requirements

- Streamlined exception management, enabled proactive monitoring, and automated third-party integration, driving efficiency

2. Enhancing Business Performance through Data Integration

The client is a prominent edible oil manufacturer and distributor, with a nationwide reach. The usage of both SAP and non-SAP systems led to inconsistent and delayed data insights, affecting precise decision-making. Furthermore, the manual synchronization of financial and HR data introduced both inefficiencies and inaccuracies.

Kanerika addressed the client’s challenges by delivering the following data integration solutions:

- Consolidated and centralized SAP and non-SAP data sources, providing insights for accurate decision-making

- Streamlined integration of financial and HR data, ensuring synchronization and enhancing overall business performance

- Automated integration processes to eliminate manual efforts and minimize error risks, saving cost and improving efficiency

Transform Your Data Integration Strategy with Kanerika

Data integration in 2025 needs real-time capabilities, intelligence, and cloud infrastructure. You can’t stick with old batch processing. Pick platforms that fit your current needs but give you room to grow. Start with a focused pilot that shows real value through faster decisions, lower costs, or better customer experience. Then expand based on what works.

At Kanerika, we help companies modernize their data integration with our FLIP platform. It automates ETL migration and cloud data integration. Additionally, we combine automation with expertise from hundreds of projects, delivering complete solutions in weeks instead of months. Companies in healthcare, finance, and retail use us to hit 70-90% automation rates while keeping data quality and governance intact.

We handle everything from assessment and strategy through implementation, testing, and ongoing support. We build solutions for specific industries and regulated environments, and we have pre-built tools for SAP, Informatica, and DataStage that cut implementation time. We also offer fixed-price projects with guaranteed ROI, so our success depends on yours. Explore our comprehensive data analytics services that complement our integration solutions.

Frequently Asked Questions

What is the best data integration platform for enterprises in 2025?

It depends on what you need. If you’re all-in on one cloud provider’s stack, such as Redshift on AWS or Synapse on Azure, that provider’s native tools (AWS Glue or Azure Data Factory) often work best.

How does zero-ETL work and when should organizations use it?

Zero-ETL lets you query source data directly or replicates data with automatic transformation in the target system. No separate transformation stage. It works when your target system can transform during queries, your transformation logic is simple, and you want less infrastructure to manage.

What is change data capture and why does it matter?

CDC tracks changes in databases (inserts, updates, deletes) and sends them to other systems immediately. It matters because it keeps everything in sync without hammering your databases.

How much does enterprise data integration cost?

Costs vary a lot. Cloud tools with usage-based pricing might start at $5,000-20,000 per year for small setups but can hit $100,000-500,000 for big deployments processing terabytes across many systems.

How can organizations ensure data quality in real-time pipelines?

Build quality checks into pipelines, don’t wait until after loading. Validate schemas before accepting data. Use anomaly detection to catch weird patterns. Create data contracts between teams that spell out quality expectations and what happens when data doesn’t meet them.
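A data contract can start as a shared, machine-checkable schema that the pipeline enforces before loading. The sketch below is a plain-Python illustration with a hypothetical `ORDER_CONTRACT`; it quarantines records that break the contract instead of loading them. Dedicated tools, such as JSON Schema validators, offer richer versions of the same idea.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical contract agreed between the producing and consuming teams.
ORDER_CONTRACT: Dict[str, Dict[str, Any]] = {
    "order_id": {"type": int, "required": True},
    "amount": {"type": float, "required": True, "min": 0.0},
    "currency": {"type": str, "required": True, "allowed": {"USD", "EUR", "GBP"}},
    "coupon": {"type": str, "required": False},
}


def validate(record: Dict[str, Any], contract: Dict[str, Dict[str, Any]]) -> List[str]:
    """Return a list of contract violations; an empty list means the record is valid."""
    errors = []
    for name, rule in contract.items():
        value = record.get(name)
        if value is None:
            if rule["required"]:
                errors.append(f"{name}: missing")
            continue
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, rule["type"]):
            errors.append(f"{name}: expected {rule['type'].__name__}")
            continue
        if "min" in rule and value < rule["min"]:
            errors.append(f"{name}: below {rule['min']}")
        if "allowed" in rule and value not in rule["allowed"]:
            errors.append(f"{name}: {value!r} not allowed")
    for name in sorted(record.keys() - contract.keys()):
        errors.append(f"{name}: not in contract")
    return errors


def route(records: List[Dict[str, Any]], contract: Dict[str, Dict[str, Any]]) -> Tuple[list, list]:
    """Split records into those safe to load and those to quarantine with reasons."""
    accepted, quarantined = [], []
    for record in records:
        errors = validate(record, contract)
        if errors:
            quarantined.append({"record": record, "errors": errors})
        else:
            accepted.append(record)
    return accepted, quarantined


incoming = [
    {"order_id": 1, "amount": 19.99, "currency": "USD"},
    {"order_id": "2", "amount": -5.0, "currency": "JPY", "extra": 1},
    {"amount": 5.0, "currency": "EUR"},
]
accepted, quarantined = route(incoming, ORDER_CONTRACT)
print(len(accepted), [q["errors"] for q in quarantined])
```

Quarantining rather than dropping keeps the bad records available for the producing team to fix, which is the "what happens when data doesn't meet them" part of the contract.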

Can non-technical teams build integrations with low-code tools?

Yes, business analysts and data analysts can build working integrations with modern low-code tools. Visual interfaces and templates make common scenarios accessible. But you need governance: approval workflows, testing environments, clear ownership, and access controls.

What integration capabilities support AI and machine learning initiatives?

AI projects need high-quality data in the right formats. Look for automated data prep, support for structured and unstructured data, real-time integration so models work with current information, vector database support for embeddings and semantic search, and metadata management for reproducibility.

How do organizations balance security with integration accessibility?

Security and accessibility work together with the right controls. Encryption protects data without blocking authorized access. Role-based access gives people exactly what they need.