Rapid data growth is reshaping how enterprises manage and use information. With the global data volume expected to reach 180 zettabytes by 2025 (IDC), organizations are under pressure to modernize their data infrastructure to keep up with analytics, AI, and real-time decision-making demands. Amid this shift, one question keeps surfacing: data fabric vs data lake — which is the right approach for your enterprise?

While both play critical roles in modern data architecture, they serve different purposes. A data lake provides a cost-effective way to store raw, structured, and unstructured data for advanced analytics and AI experiments. In contrast, a data fabric is an intelligent architecture layer that connects, governs, and delivers data seamlessly across hybrid and multi-cloud environments.

This blog explores what data fabrics and data lakes are, their core differences, benefits, and future trends — helping you decide which approach (or combination) aligns best with your enterprise’s data strategy.

Key Learnings

- A data lake is a cost-effective repository for storing raw, structured, and unstructured data, while a data fabric acts as an intelligent layer that unifies, governs, and integrates data across hybrid and multi-cloud environments.

- Data lakes require additional tools to ensure data quality and avoid “data swamp” issues, whereas data fabrics offer built-in metadata-driven governance, security, and compliance.

- Data lakes are ideal for experimentation, big data analytics, and AI model training, while data fabrics enable enterprise-ready AI, self-service analytics, and production-grade insights.

- Data lakes are cheaper upfront but can become costly to manage at scale; data fabrics reduce long-term operational complexity and improve data trust and reusability.

- Data lakes focus on storing and ingesting data, while data fabrics connect, virtualize, and integrate existing sources for real-time, unified access.

- The future of enterprise data architecture lies in combining both—using data lakes for raw storage and data fabrics as the intelligent, governed layer for analytics and AI.

What Is a Data Fabric?

A data fabric is an advanced architecture layer that connects and manages distributed data across hybrid and multi-cloud environments. Instead of physically moving or duplicating data into one central repository, a data fabric creates a virtualized, intelligent data network that integrates multiple sources while maintaining governance and security. Its core goal is to deliver seamless, trusted, and real-time data access to business users, analysts, and AI systems—without the complexity of manually managing siloed systems.

Key Capabilities of a Data Fabric

- Metadata-Driven Integration: Uses rich metadata to automatically identify, map, and connect data assets across on-prem databases, cloud storage, data warehouses, and lakes.

- Automated Data Discovery & Cataloging: Continuously scans and classifies data, making it easy for teams to search and access trusted datasets.

- Active Data Governance & Security: Enforces policies like access control, lineage tracking, and compliance with GDPR, HIPAA, or SOX.

- AI/ML for Data Quality & Self-Service Analytics: Uses AI to detect anomalies, improve data accuracy, and enable non-technical users to explore curated insights.
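
As a rough illustration of the automated discovery and classification capability listed above, the sketch below tags columns by matching sample values against patterns. The pattern set, the `classify_columns` helper, the 80% match threshold, and the sample rows are all assumptions made for this example. Real data fabric products use far richer classifiers.

```python
import re

# Hypothetical, deliberately simple patterns; real classifiers are much richer.
PATTERNS = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "phone": re.compile(r"^\+?\d[\d\s-]{6,}\d$"),
}


def classify_columns(rows, min_match=0.8):
    """Tag a column when at least `min_match` of its values fit a pattern."""
    tags = {}
    columns = list(rows[0].keys()) if rows else []
    for col in columns:
        values = [str(r[col]) for r in rows if r.get(col) is not None]
        if not values:
            continue
        for tag, pattern in PATTERNS.items():
            hits = sum(bool(pattern.match(v)) for v in values)
            if hits / len(values) >= min_match:
                tags[col] = tag
                break
    return tags


# Made-up sample rows standing in for a scanned table.
sample_rows = [
    {"contact": "a@example.com", "mobile": "+1 555-010-1234", "city": "Lyon"},
    {"contact": "b@example.org", "mobile": "555 010 9999", "city": "Pune"},
]

column_tags = classify_columns(sample_rows)
```

Tags like these could then feed a catalog so that sensitive columns are searchable and covered by policy. Here `city` stays untagged because it matches neither pattern.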

Real-World Example: Citi’s Data Fabric Journey

Global banking leader Citi implemented a data fabric architecture to unify fragmented data across its global operations and meet strict regulatory requirements. With data residing in multiple legacy systems and cloud environments, risk and compliance teams often struggled to access accurate, real-time information. Citi used a data fabric approach (leveraging metadata-driven integration and AI-powered governance) to create a single, trusted view of financial data.

This transformation enabled faster compliance reporting, improved fraud detection with real-time analytics, and enhanced decision-making for risk managers. By connecting data without extensive migrations, Citi improved agility, cut reporting delays, and strengthened compliance with evolving financial regulations.

A data fabric like Citi’s shows how enterprises can simplify complex data ecosystems and deliver governed, analytics-ready data for both regulatory needs and innovation.

What Is a Data Lake?

A data lake is a centralized repository that stores an organization’s data in its raw, native formats—structured, semi-structured, and unstructured—without forcing a schema during ingestion. Unlike traditional relational systems that require rigid formats from the start, data lakes allow you to define schema on read—when data is queried or processed later.
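
To make schema-on-read concrete, here is a minimal sketch in plain Python. The raw events, the field names, and the `read_with_schema` helper are invented for illustration. In practice this role is usually played by a query engine reading files from object storage.

```python
import json

# Raw events are stored exactly as they arrived (hypothetical sample records).
RAW_EVENTS = [
    '{"user": "u1", "action": "play", "ts": "2024-01-01T10:00:00", "device": "tv"}',
    '{"user": "u2", "action": "pause", "ts": "2024-01-01T10:05:00"}',
    '{"user": "u1", "action": "play", "ts": "2024-01-01T11:00:00", "extra": {"bitrate": 4200}}',
]


def read_with_schema(raw_lines, schema):
    """Apply a schema at read time: select fields, cast types, fill defaults."""
    for line in raw_lines:
        record = json.loads(line)
        yield {
            field: cast(record[field]) if field in record else default
            for field, (cast, default) in schema.items()
        }


# Two consumers project the same raw data differently, with no re-ingestion.
PLAYBACK_SCHEMA = {"user": (str, None), "action": (str, None)}
DEVICE_SCHEMA = {"user": (str, None), "device": (str, "unknown")}

playback_rows = list(read_with_schema(RAW_EVENTS, PLAYBACK_SCHEMA))
device_rows = list(read_with_schema(RAW_EVENTS, DEVICE_SCHEMA))
```

The stored records never change. Each consumer decides which fields matter and how to handle missing ones, which is why new questions can be asked of old data.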

Key Characteristics of a Data Lake

- Schema-on-Read

In a data lake, data is ingested as-is. You don’t need to transform or normalize it upfront. Instead, when users or applications request the data, they apply schema, filters, or transformations as needed. This flexibility supports evolving analytics needs.

- Cost-Effective Big Data Storage

Data lakes are built on scalable, low-cost storage (especially in the cloud), making it feasible to store massive volumes of raw data (logs, images, sensor data, clickstreams) without prohibitive costs.

- Flexibility for AI & ML Workloads

Because they preserve raw data, data lakes are ideal for advanced analytics, machine learning, and exploratory data science. Models can ingest raw features directly, experiment with new combinations, and evolve over time.

- Typical Architecture: Cloud Object Storage

In modern deployments, data lakes are often built on cloud object storage platforms—such as AWS S3, Azure Data Lake Storage (ADLS), or Google Cloud Storage. These provide durability, scalability, and often integrate with compute and analytics services in the cloud.

Real-World Example: Netflix’s Data Lake & Personalization

Netflix uses a cloud-based data lake architecture (primarily on AWS) to power its personalization engine and analytics. (Source: Medium)

Netflix ingests vast amounts of raw data: user interactions (plays, pauses, skips), metadata about content, device logs, streaming behavior, and more. This raw data lives in S3 and is cataloged (e.g., via AWS Glue) so that downstream processes such as analytics jobs, ML model training, and real-time inference systems can access it when needed. (Source: Medium)

Netflix engineers have also modernized parts of their lake using Apache Iceberg to manage large-scale data more reliably and support ACID operations and efficient querying. (Source: Amazon Web Services)

By combining a data lake architecture with metadata, catalogs, and query/compute layers, Netflix delivers recommendations and insights at scale—without needing to keep everything pre-transformed in advance.

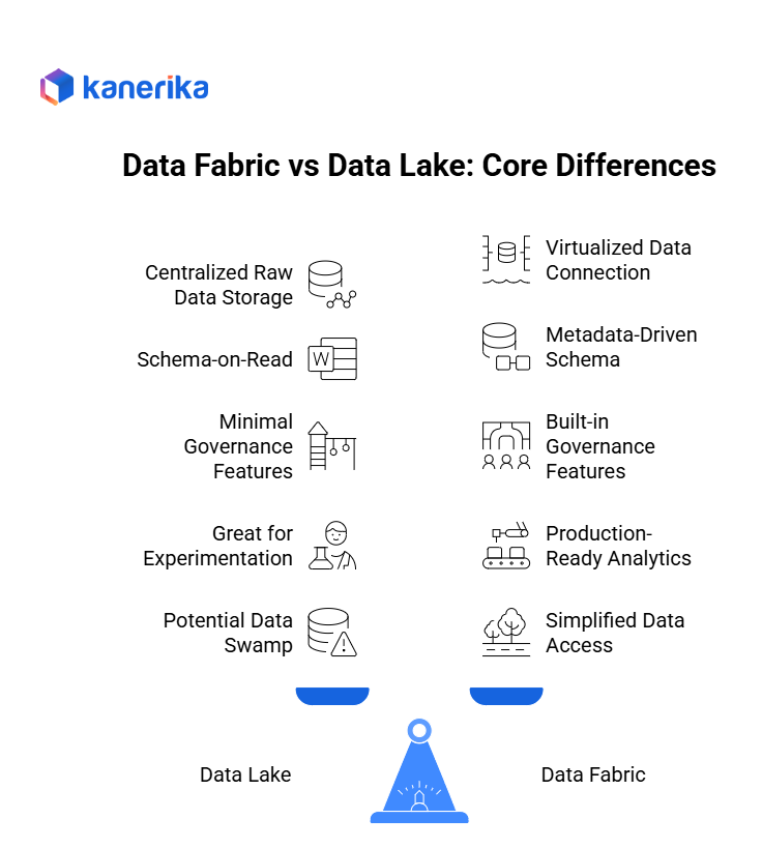

Data Fabric vs Data Lake: Core Differences

When organizations modernize their data architecture, data lakes and data fabrics often appear in the same conversation. While both aim to break data silos and support analytics, they approach the problem from very different angles. Below is a clear comparison:

| Aspect | Data Lake | Data Fabric |
| --- | --- | --- |
| Definition | A centralized repository that stores raw, structured, semi-structured, and unstructured data at scale. | An architecture layer that connects, virtualizes, and manages distributed data across hybrid and multi-cloud environments. |
| Data Storage | Physically stores raw data in a single location (often cloud object storage like AWS S3 or Azure Data Lake Storage). | Connects and virtualizes existing data across systems without moving it, creating a unified access layer. |
| Schema | Schema-on-read: apply structure only when consuming data. | Metadata- and schema-driven: standardizes data definitions and relationships upfront for consistency. |
| Governance | Minimal built-in governance; requires additional tools to secure and manage. | Built-in data governance, compliance, and lineage features baked into the architecture. |
| AI/ML Support | Great for exploration, experimentation, and feature engineering. | Great for production-ready, governed analytics and AI at scale. |
| Complexity | Easy to set up initially but can turn into a “data swamp” without management. | More complex to implement but simplifies long-term data access and control. |
| Integration Approach | Primarily ingests and stores data from multiple sources. | Connects, integrates, and orchestrates data from different locations without mass replication. |
| Cost | Lower upfront storage cost but requires more tools later for curation and governance. | Higher initial investment but saves cost long-term by reducing duplication and improving reuse. |
| Use Cases | Data science, machine learning, exploratory analytics, log data storage. | Enterprise analytics, compliance-driven insights, self-service BI, and regulated industries. |

Data Lake

A data lake acts as a massive storage pool for raw data of any format. It’s ideal when you need a flexible, cost-effective repository to store logs, images, transactional data, and other big data types. However, it’s not inherently governed. Without proper cataloging and metadata management, data lakes can become “data swamps”: huge, unorganized repositories that are hard to search and trust. Organizations must add tools for cataloging (e.g., AWS Glue or Microsoft Purview, formerly Azure Purview), data quality, and access control to keep lakes usable.
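
One simple guard against the swamp problem is to check regularly that everything in the lake has usable catalog metadata. The sketch below assumes a hypothetical `CatalogEntry` record and made-up object paths. It does not use the API of any specific catalog product.

```python
from dataclasses import dataclass


@dataclass
class CatalogEntry:
    """Hypothetical minimal catalog record for one lake object."""
    path: str
    owner: str
    description: str


def find_uncataloged(lake_paths, catalog):
    """Return (path, reason) pairs for objects with missing or incomplete metadata."""
    by_path = {entry.path: entry for entry in catalog}
    problems = []
    for path in lake_paths:
        entry = by_path.get(path)
        if entry is None:
            problems.append((path, "not cataloged"))
        elif not entry.owner or not entry.description:
            problems.append((path, "incomplete metadata"))
    return problems


# Made-up lake contents and catalog entries.
lake_paths = [
    "raw/clicks/2024-01.json",
    "raw/pos/2024-01.csv",
    "raw/sensors/2024-01.parquet",
]
catalog = [
    CatalogEntry("raw/clicks/2024-01.json", "web-team", "Clickstream events"),
    CatalogEntry("raw/pos/2024-01.csv", "", "Point-of-sale export"),
]

problems = find_uncataloged(lake_paths, catalog)
```

A report like `problems` can be run on a schedule, so gaps in ownership or documentation surface before users lose trust in the lake.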

Data Fabric

A data fabric is a higher-level architecture that sits above your existing data systems — including lakes, warehouses, databases, and SaaS apps. Instead of replicating everything, it creates a virtualized, metadata-driven layer to unify access, enforce governance, and deliver real-time data integration. This is especially powerful in complex enterprises where data lives in multiple clouds and on-prem systems. A data fabric helps provide trusted, governed, and real-time analytics across distributed sources.

Integration Approach: Store vs Connect

The fundamental difference lies in their integration philosophy. Data lakes follow an “ingest and store” model that requires physically copying data from source systems into the lake before analysis can begin. This approach provides complete control over the data but creates data freshness challenges and storage costs.

Data fabric follows a “connect and integrate” model that virtualizes access to data across existing systems without requiring physical movement. This approach provides access to real-time data while reducing storage requirements and maintaining data sovereignty within source systems.
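
The difference between the two models can be sketched in a few lines. Both classes below are toy stand-ins written for this article, not real products: one copies data at ingestion time, the other only records how to reach the source and reads it live.

```python
from typing import Callable, Dict, Iterable, List

Row = Dict[str, object]


class LakeStyleStore:
    """'Ingest and store': copies rows at ingestion time and serves the copy."""

    def __init__(self) -> None:
        self._copies: Dict[str, List[Row]] = {}

    def ingest(self, name: str, fetch: Callable[[], Iterable[Row]]) -> None:
        self._copies[name] = [dict(row) for row in fetch()]

    def read(self, name: str) -> List[Row]:
        return self._copies[name]


class FabricStyleCatalog:
    """'Connect and integrate': registers a connector and queries the source on read."""

    def __init__(self) -> None:
        self._sources: Dict[str, Callable[[], Iterable[Row]]] = {}

    def register(self, name: str, fetch: Callable[[], Iterable[Row]]) -> None:
        self._sources[name] = fetch

    def read(self, name: str) -> List[Row]:
        return list(self._sources[name]())


# Hypothetical source system holding order records.
orders: List[Row] = [{"id": 1, "status": "new"}]


def fetch_orders() -> Iterable[Row]:
    return orders


lake = LakeStyleStore()
lake.ingest("orders", fetch_orders)

fabric = FabricStyleCatalog()
fabric.register("orders", fetch_orders)

# The source changes after ingestion.
orders.append({"id": 2, "status": "new"})

lake_rows = lake.read("orders")      # still the copy taken at ingestion
fabric_rows = fabric.read("orders")  # reflects the current source
```

The copy gives the lake full control over its data but goes stale until the next ingestion. The virtualized read is always current but depends on the source being available and fast enough at query time.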

Cost Considerations: Initial vs Long-term Economics

- Data lakes offer lower initial investment costs since they primarily require storage infrastructure and basic ingestion tools. However, long-term costs escalate as organizations add governance tools, data quality solutions, and specialized analytics platforms to make the data lake truly enterprise-ready.

- Data fabric requires higher upfront investment in integration platforms and metadata management capabilities. However, long-term costs often prove lower due to reduced data duplication, improved governance efficiency, and enhanced data reuse across the organization. The virtual integration approach eliminates ongoing storage costs for duplicated data while providing better data freshness and compliance capabilities.
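
Whether and when the higher upfront investment pays off depends entirely on your own figures. The sketch below shows one way to check with a deliberately simple linear cost model. The numbers are placeholders in arbitrary cost units, chosen only to illustrate the arithmetic, and are not benchmarks for either architecture.

```python
def cumulative_cost(upfront, annual, years):
    """Total spend after `years`, assuming a flat annual running cost."""
    return upfront + annual * years


def break_even_year(lake, fabric, horizon=10):
    """First whole year in which the fabric's cumulative cost is no higher than the lake's."""
    for year in range(1, horizon + 1):
        if cumulative_cost(*fabric, year) <= cumulative_cost(*lake, year):
            return year
    return None  # no break-even within the horizon


# Placeholder inputs as (upfront, annual) pairs in arbitrary units.
lake_costs = (100, 80)    # cheap start, governance and quality tooling added yearly
fabric_costs = (250, 40)  # larger start, lower running cost

year = break_even_year(lake_costs, fabric_costs)
```

With these placeholder inputs the cumulative totals cross in year 4 (410 versus 420 units). If your annual costs are closer together, the function may return `None`, meaning the fabric never catches up within the chosen horizon.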

Both approaches serve important roles in modern data architectures, with data lakes excelling in experimental and data science use cases, while data fabric enables enterprise-grade analytics and compliance-driven environments.

When to Use Data Lake vs Data Fabric

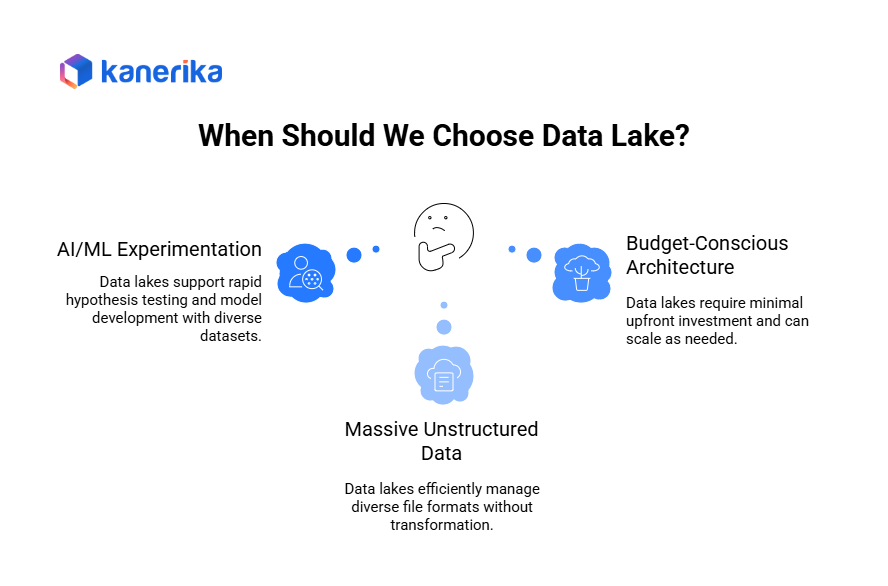

When to Choose a Data Lake

1. Heavy AI/ML Experimentation Requirements

Data lakes excel when organizations need to conduct extensive machine learning experiments with diverse, unprocessed datasets. The schema-on-read approach allows data scientists to explore relationships and patterns without predefined structures, enabling rapid hypothesis testing and model development.

Research teams can store training datasets, feature engineering outputs, and model artifacts in their raw formats, providing flexibility for iterative experimentation. This approach supports the exploratory nature of AI/ML development where requirements evolve as insights emerge.

2. Massive Unstructured Data Storage Needs

Organizations dealing with high volumes of unstructured data—including images, videos, IoT sensor logs, clickstream data, and social media content—benefit from data lake architectures that handle diverse file formats without transformation requirements.

Media companies analyzing user-generated content, IoT manufacturers processing sensor telemetry, and e-commerce platforms tracking customer behavior patterns all generate data types that data lakes manage efficiently at scale.

3. Budget-Conscious Flexible Architecture

Startups and growing companies with limited initial budgets find data lakes attractive because they require minimal upfront investment in governance tools and metadata management. Organizations can start simple and add sophistication as they mature and generate value from their data.

Example: A streaming media startup storing user interaction logs, content metadata, and viewing patterns in a data lake for recommendation algorithm development. They can experiment with different analytics approaches without significant infrastructure investment while building their data science capabilities.

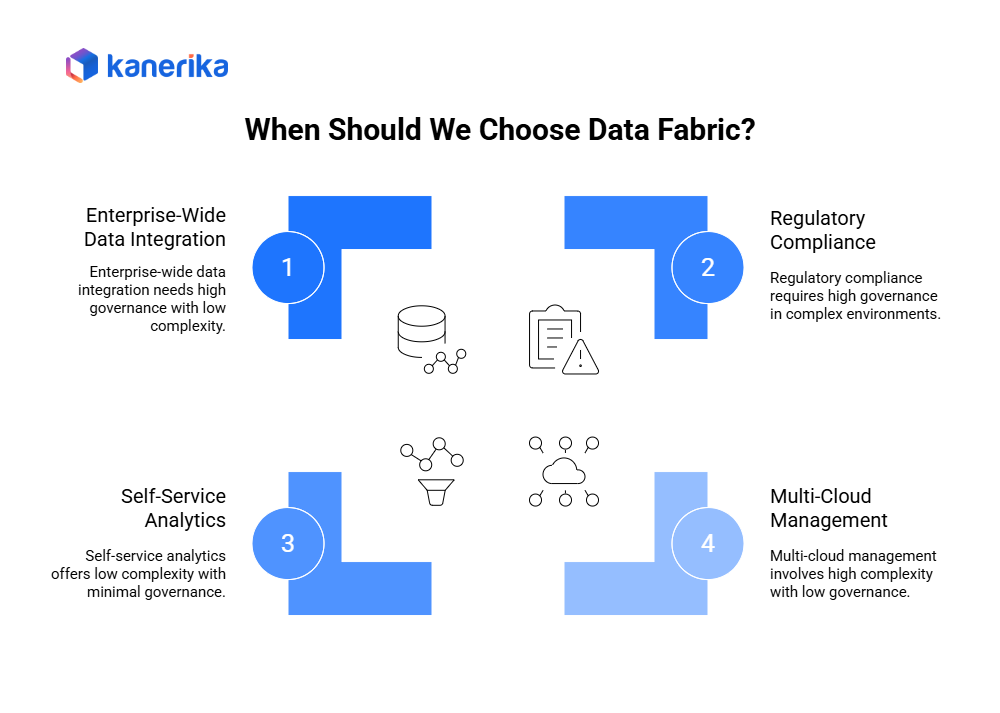

When to Choose a Data Fabric

1. Enterprise-Wide Data Integration & Governance

Large organizations with data scattered across multiple departments, systems, and geographic locations need data fabric’s integration capabilities to create unified views without massive data movement projects. The semantic layer ensures consistent definitions and business context across all data assets.

Data fabric enables organizations to break down data silos while maintaining data sovereignty within business units, addressing both technical integration needs and organizational governance requirements.

2. Regulatory Compliance Requirements

Heavily regulated industries including finance, healthcare, and pharmaceuticals require robust governance, lineage tracking, and compliance reporting that data fabric provides natively. Built-in policy enforcement ensures sensitive data handling meets regulatory standards across all access points.

The metadata-driven approach automatically maintains audit trails and access logs necessary for regulatory reporting while enabling real-time compliance monitoring across distributed data environments.
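
A minimal sketch of policy enforcement with an audit trail might look like the following. The roles, the policy table, and the `governed_read` function are hypothetical. A real data fabric would manage policies centrally and persist audit records durably.

```python
from datetime import datetime, timezone

# Hypothetical policy table: which columns each role may read.
POLICIES = {
    "risk_analyst": {"account_id", "balance", "country"},
    "marketing": {"country"},
}

AUDIT_LOG = []  # every access attempt is recorded, granted or not


def governed_read(rows, user, role, columns):
    """Return the requested columns only if the role's policy allows all of them."""
    allowed = POLICIES.get(role, set())
    denied = [c for c in columns if c not in allowed]
    AUDIT_LOG.append({
        "user": user,
        "role": role,
        "columns": list(columns),
        "granted": not denied,
        "at": datetime.now(timezone.utc).isoformat(),
    })
    if denied:
        raise PermissionError(f"role {role!r} may not read {denied}")
    return [{c: row[c] for c in columns} for row in rows]


accounts = [{"account_id": "A1", "balance": 1200.0, "country": "CH"}]

# Allowed request: marketing may read the country column.
ok_rows = governed_read(accounts, "dana", "marketing", ["country"])

# Denied request: the attempt is still logged before the error is raised.
try:
    governed_read(accounts, "dana", "marketing", ["balance"])
    blocked = False
except PermissionError:
    blocked = True
```

Because the log entry is written before the permission check raises, denied attempts appear in the audit trail alongside granted ones, which is what regulatory reporting typically requires.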

3. Self-Service Analytics for Business Users

Organizations wanting to democratize data access for non-technical business users benefit from data fabric’s semantic layer that presents data in business terms rather than technical schemas. This approach reduces dependency on IT teams while maintaining data governance and security.

Business analysts can discover and access relevant data without understanding underlying technical complexities, enabling faster insights and reducing bottlenecks in data-driven decision making.

4. Multi-Cloud and Hybrid Architecture Management

Companies operating across multiple cloud providers or maintaining hybrid on-premises and cloud environments need data fabric’s virtualization capabilities to provide consistent data access regardless of physical location.

Example: A global investment bank unifying transactional data from core banking systems, customer relationship management platforms, and IoT sensors from branch locations. Data fabric enables real-time fraud detection and regulatory reporting while keeping sensitive financial data within appropriate jurisdictional boundaries.

Hybrid Approach: Best of Both Worlds

Many mature enterprises implement hybrid architectures that combine data lake storage capabilities with data fabric governance and access management. This approach leverages data lakes for raw data storage, experimentation, and cost-effective archiving while using data fabric to provide governed, business-friendly access to curated datasets.

The hybrid model allows organizations to support both data science experimentation and enterprise analytics use cases within a single architecture. Data lakes handle the “store everything” requirement while data fabric ensures the “find and trust anything” capability that business users demand.

Organizations typically start with one approach and evolve toward hybrid architectures as their data maturity and requirements grow. This evolution path provides flexibility while maximizing the value of existing investments in either data lake or data fabric technologies.

Benefits of Data Lakes and Data Fabrics

Both data lakes and data fabrics help enterprises manage and use their data more effectively — but they serve different priorities. Here’s how each delivers unique business value.

1. Benefits of Data Lakes

- Cost-effective storage for large data sets enables organizations to store massive amounts of raw data at a fraction of traditional database costs. Cloud-native data lakes leverage object storage pricing models, making petabyte-scale storage economically viable for businesses of all sizes.

- Flexibility for data science and AI innovation allows teams to experiment with diverse analytical approaches without predefined schemas. Data scientists can explore raw datasets, develop machine learning models, and iterate quickly without the constraints of structured database requirements.

- Scalability across cloud environments provides seamless expansion capabilities as data volumes grow. Organizations can automatically scale storage and compute resources up or down based on demand, ensuring performance remains consistent regardless of data volume fluctuations.

- Support for diverse formats accommodates the full spectrum of modern data types including structured CSV files, semi-structured JSON documents, optimized Parquet files, multimedia content like videos and images, and real-time IoT sensor data streams.

2. Benefits of Data Fabric

- Unified access to distributed data breaks down organizational silos by creating a single logical layer that spans multiple systems, databases, and cloud environments. Users can access all relevant data through a consistent interface regardless of where it physically resides.

- AI-driven metadata and automation for faster insights automatically catalog, classify, and suggest relevant datasets for analytical projects. Intelligent automation handles routine data preparation tasks, reducing time-to-insight for business analysts and data scientists.

- Strong compliance and governance provides built-in data lineage tracking, access controls, and regulatory compliance features. Organizations can maintain audit trails, enforce data privacy policies, and ensure compliance with regulations like GDPR and CCPA across their entire data ecosystem.

- Self-service analytics for business users democratizes data access by providing intuitive interfaces that enable non-technical users to find, understand, and analyze data independently. Business teams can generate reports and insights without relying heavily on IT or data engineering resources.

- Real-time data integration across hybrid and multi-cloud environments enables seamless data flow between on-premises systems, multiple cloud providers, and edge computing environments. This ensures decision-makers have access to the most current information regardless of where it originates.

Comparison: Data Lakes vs Data Fabrics

| Feature | Data Lake Benefit | Data Fabric Benefit |
| --- | --- | --- |
| Storage | Cheap, scalable raw data storage | Virtualized access to all data |
| Governance | Minimal; requires add-ons | Built-in, metadata-driven governance |
| AI Readiness | Experimental data science | Production-ready AI & analytics |
| Business Enablement | Data scientists & engineers | Business users & decision makers |

Both architectures serve complementary roles in modern data strategies. Data lakes excel as cost-effective repositories for experimental analytics, while data fabrics provide enterprise-grade governance and accessibility for production systems.

Real-World Case Studies: Data Lakes and Data Fabrics

The following examples show how organizations in retail, banking, and healthcare have implemented data lakes and data fabrics, and what outcomes they reported.

Case Study 1 – Retail: Tealium & AWS Customer Data Platform

Challenge: A large multi-channel retailer with both ecommerce and brick-and-mortar operations wanted to personalize customer experiences across website, app, messaging, and advertising touchpoints. The business was manually pulling customer segments and uploading them to individual vendors—a process that was neither efficient nor scalable for the dynamic, multi-segment targeting they envisioned.

Solution: The retailer implemented Tealium’s Customer Data Platform integrated with an AWS data lake built on Amazon S3. This solution created a real-time, 360-degree view of customers by combining clickstream data from websites and mobile apps, point-of-sale transaction data from physical stores, and IoT sensor data from in-store devices. The platform automated the process of creating customer segments and distributing them across marketing vendors.

Outcomes: By creating new customer segments and testing various marketing activations, the retailer was able to improve the customer experience, increase sales, and drive marketing ROI. The bi-directional data flow between Tealium and AWS enabled enhanced product recommendations through Amazon Personalize and triggered personalized messaging via Amazon Pinpoint, resulting in significantly improved cross-channel campaign performance.

Source: AWS Partner Network Blog – Customer Data Platform with Data Lakes

Case Study 2 – Banking: Swiss Bank’s Mainframe Data Fabric Integration

Challenge: A global Swiss financial services company and investment bank operating in over 50 countries needed to quickly and securely connect their legacy mainframe applications to third-party fraud detection services with very low latency for real-time Know Your Customer (KYC) compliance. Their legacy infrastructure required uniform SOAP and REST APIs to be rapidly created with minimal coding time.

Solution: The bank deployed Adaptigent’s data fabric solution that unified data access across their mainframe systems, CRM databases, and external APIs. The fabric created Smart APIs that seamlessly connected core PL/I z/OS business logic applications to modern fraud detection services, bridging the gap between legacy systems and cloud-based security applications.

Outcomes: The implementation achieved a 60% reduction in manual data preparation time through automated data integration workflows. The solution improved regulatory reporting accuracy by providing real-time access to unified customer data across all systems. The Smart APIs ensured the bank’s legacy systems remained securely connected to fraud detection services regardless of future infrastructure changes.

Source: Adaptigent Case Study – Swiss Bank Mainframe Integration

Case Study 3 – Healthcare: AWS HealthLake Implementation

Challenge: Healthcare organizations face the complex challenge that approximately 80% of medical data is unstructured, coming from clinical notes, medical images, EHR free-form text fields, medications, transfer summaries, and various legacy systems. This unstructured data contains valuable insights but requires significant processing before it can be used for analytics while maintaining strict HIPAA compliance.

Solution: Healthcare networks implemented Amazon HealthLake combining data lake capabilities for raw EMR/EHR storage with data fabric features for real-time analytics. HealthLake uses specialized machine learning models to structure, tag, and index the data chronologically to provide a complete patient history, converting unstructured healthcare data into FHIR R4 format for standardized analysis.

Outcomes: The implementation enabled faster care decisions through real-time patient analytics while maintaining robust HIPAA compliance. Built-in data security, HIPAA and GDPR compliance, and stringent identity and access management ensure that protected health information (PHI) and personally identifiable information (PII) remain secure. Healthcare providers can now extract meaningful insights, such as identifying trends and making predictions for individuals or entire patient populations, improving the speed and accuracy of clinical decision-making.

Source: SourceFuse – Amazon HealthLake Case Study

Challenges & Considerations: Data Lakes and Data Fabrics

While data lakes and data fabrics offer transformational benefits, organizations must navigate significant challenges to achieve successful implementations. Understanding these obstacles and their mitigation strategies is crucial for project success.

Data Lake Challenges

- Risk of becoming a data swamp represents the most critical threat to data lake initiatives. Without proper governance, cataloging, and metadata management, data lakes quickly deteriorate into unusable repositories where data cannot be found, understood, or trusted. Organizations often underestimate the discipline required to maintain data quality standards across diverse, high-volume data ingestion processes.

- High complexity to ensure quality and security emerges as data volumes and variety increase exponentially. Traditional data validation approaches fail at scale, requiring new frameworks for automated quality monitoring, lineage tracking, and access control management. Security becomes particularly challenging when managing petabytes of sensitive data across multiple cloud environments with varying access patterns.

- Requires skilled data engineering teams with specialized expertise in distributed systems, cloud technologies, and data processing frameworks like Apache Spark and Hadoop. The shortage of qualified professionals drives up implementation costs and timelines, while ongoing maintenance demands continuous investment in training and talent retention.

Data Fabric Challenges

- Higher upfront cost and metadata dependency create significant barriers to entry. Data fabric implementations require substantial initial investments in infrastructure, software licensing, and professional services. Success depends heavily on comprehensive metadata management, which demands extensive data discovery, cataloging, and ongoing maintenance efforts.

- Requires strong data governance strategy before technical implementation begins. Organizations must establish clear policies for data ownership, access control, quality standards, and lifecycle management. Without robust governance frameworks, data fabrics become expensive technical solutions without business value.

- Integration complexity across legacy systems presents the greatest technical challenge. Connecting decades-old mainframe systems, proprietary databases, and modern cloud applications requires extensive custom development, API creation, and data transformation processes that can take months or years to complete.

Shared Challenges

- Data privacy and regulatory compliance affect both architectures equally. GDPR, HIPAA, CCPA, and other regulations require comprehensive data protection measures including encryption, access auditing, data minimization, and right-to-erasure capabilities. Compliance failures result in significant financial penalties and reputational damage.

- Change management and user adoption determine ultimate project success regardless of technical implementation quality. Business users resist new systems that disrupt established workflows, while IT teams struggle with unfamiliar technologies and processes.

| Challenge Category | Key Challenge | Mitigation Strategy |
| --- | --- | --- |
| Data Lake Quality | Data swamp risk | Implement automated data cataloging, establish data governance policies, enforce metadata standards |
| Data Lake Technical | Security complexity | Deploy automated security scanning, implement zero-trust architecture, use cloud-native security services |
| Data Lake Resources | Skills shortage | Partner with experienced vendors, invest in training programs, adopt managed cloud services |
| Data Fabric Cost | High upfront investment | Start with pilot projects, implement phased approach, demonstrate ROI early |
| Data Fabric Governance | Governance dependency | Establish governance frameworks first, assign clear data ownership, create policy enforcement mechanisms |
| Data Fabric Integration | Legacy system complexity | Use API-first architecture, implement gradual modernization, leverage integration platforms |
| Shared Compliance | Regulatory requirements | Build privacy-by-design, implement comprehensive audit trails, engage legal experts early |
| Shared Adoption | Change resistance | Invest in user training, demonstrate business value, provide ongoing support |

Success requires treating these challenges as organizational transformation initiatives rather than purely technical projects, with equal emphasis on people, processes, and technology.

Future of Enterprise Data Architecture: Fabric + Lake

The future of enterprise data management is not about choosing between a data lake or a data fabric — it’s about combining their strengths into a unified, intelligent architecture.

One of the most significant trends is the rise of the data lakehouse, which merges the cost-effective raw storage of data lakes with the performance and governance capabilities needed for analytics. When combined with a data fabric, this architecture gains a powerful intelligence layer that connects, catalogs, and governs data across hybrid and multi-cloud environments.

A key driver of this evolution is AI-driven metadata management. Modern data fabrics use machine learning to automate data discovery, lineage tracking, and quality monitoring, making it easier for enterprises to trust and use their data at scale. This reduces manual curation while enabling faster, self-service analytics.
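
As a simplified stand-in for the machine-learning quality monitoring described above, the sketch below flags outliers in a dataset's daily row counts using a leave-one-out z-score. This is a basic statistical check, not an ML model. The `flag_anomalies` helper, the threshold of 3, and the sample counts are assumptions for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(values, threshold=3.0):
    """Return indices whose value lies more than `threshold` standard
    deviations from the mean of all the *other* values (leave-one-out)."""
    flagged = []
    for i, value in enumerate(values):
        others = values[:i] + values[i + 1:]
        if len(others) < 2:
            continue  # not enough data to estimate spread
        spread = stdev(others)
        if spread == 0:
            continue  # all other values identical; skipped in this sketch
        if abs(value - mean(others)) / spread > threshold:
            flagged.append(i)
    return flagged


# Made-up daily row counts for one ingested table; day 6 looks like a partial load.
daily_row_counts = [1000, 1020, 980, 1010, 990, 1005, 120, 1015]

suspect_days = flag_anomalies(daily_row_counts)
```

Comparing each value against the others, rather than against the whole series, keeps a single extreme value from inflating the spread and hiding itself, which matters with short histories like this one.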

Cloud providers are also integrating data fabric capabilities directly into their ecosystems. For example:

- Microsoft Fabric offers an end-to-end, unified analytics platform combining lakehouse storage with intelligent data governance and real-time collaboration.

- Databricks Unity Catalog enables centralized governance and fine-grained access controls across multi-cloud data lakes.

- Snowflake Horizon adds discovery, lineage, and security to cloud-native data sharing.

According to Gartner, “By 2026, 50% of organizations will adopt data fabric architecture to unify data across platforms and accelerate digital transformation”.

The long-term vision for enterprises is clear:

- Data lakes will remain the foundation for scalable, cost-effective raw data storage.

- Data fabrics will serve as the intelligent access and governance layer, enabling real-time analytics, AI/ML readiness, and secure self-service for both technical and business users.

This convergence promises a future-proof, AI-ready data architecture — empowering enterprises to move from raw data collection to trusted, actionable insights faster than ever before.

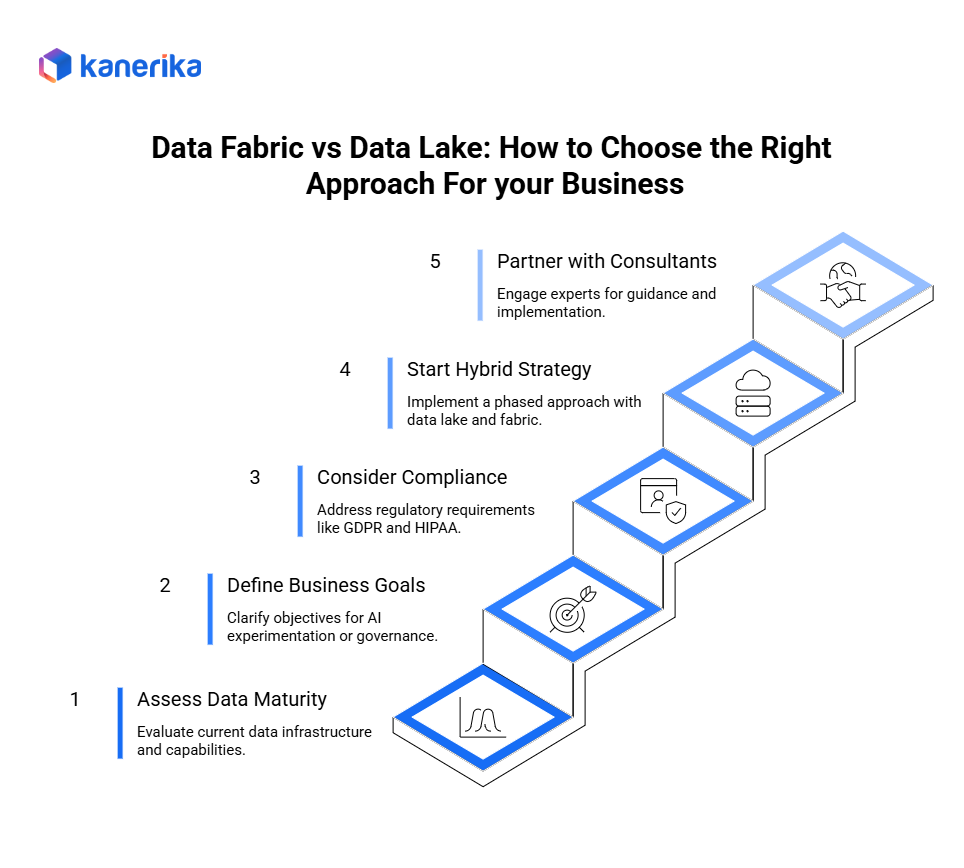

How to Choose the Right Approach for Your Business

Selecting the optimal data architecture requires a strategic evaluation of your organization’s current capabilities, business objectives, and long-term vision.

1. Assess Data Maturity and Current Architecture

Begin by conducting a comprehensive audit of your existing data infrastructure and organizational capabilities. Organizations with basic analytics needs and limited data governance may benefit from starting with a data lake approach.

Conversely, enterprises with complex, distributed systems and established governance requirements should consider data fabric solutions. Evaluate your team’s technical expertise, existing data volumes, and current integration challenges to understand your foundation.

2. Define Business Goals

Clarify whether your primary objective is AI experimentation or enterprise governance. Data lakes excel for exploratory data science, machine learning model development, and cost-effective storage of diverse data types.

Organizations focused on real-time decision-making, regulatory compliance, and unified data access across business units should prioritize data fabric implementations. Companies pursuing both objectives need integrated solutions that support experimental and production workloads simultaneously.

3. Consider Compliance Requirements

Regulatory frameworks like GDPR, HIPAA, SOX, and industry-specific mandates significantly influence architectural decisions. Data fabrics typically provide superior built-in governance capabilities, automated compliance reporting, and granular access controls.

Data lakes require additional governance layers to meet stringent regulatory requirements, though they offer more flexibility for evolving compliance needs.
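As a rough illustration of what such an additional governance layer involves, the sketch below masks sensitive columns based on metadata tags and the reader's clearances. The tag names (`pii`, `phi`), the `mask_row` function, and the sample record are assumptions made for this example; a real compliance implementation for GDPR or HIPAA involves far more than column masking.

```python
SENSITIVE_TAGS = {"pii", "phi"}  # illustrative tag vocabulary, not a standard


def mask_row(row, column_tags, role_clearances):
    """Return a copy of `row` with sensitive columns masked.

    A column stays readable only if the role holds a clearance for every
    sensitive tag attached to that column.
    """
    masked = {}
    for column, value in row.items():
        restricted = column_tags.get(column, set()) & SENSITIVE_TAGS
        if restricted and not restricted <= role_clearances:
            masked[column] = "***"
        else:
            masked[column] = value
    return masked


# Column tags would normally come from a metadata catalog (assumed here).
column_tags = {"email": {"pii"}, "diagnosis": {"phi"}, "visit_count": set()}
record = {"email": "ana@example.com", "diagnosis": "J45", "visit_count": 4}

marketing_view = mask_row(record, column_tags, {"pii"})  # cleared for PII only
public_view = mask_row(record, column_tags, set())       # no clearances

print(marketing_view)
print(public_view)
```

On a plain data lake, logic like this has to be built or bought separately and applied at every access path. A fabric's metadata-driven governance aims to apply it once, centrally.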

4. Start with a Hybrid Strategy

The most pragmatic approach involves implementing a phased hybrid strategy. Begin by building a cloud data lake for cost-effective storage and basic analytics capabilities.

Once established, gradually add data fabric components to provide governance, metadata management, and real-time access capabilities. This evolutionary approach minimizes risk while building organizational capabilities over time.

5. Partner with Experienced Data Platform Consultants

Engage specialized consultants with proven track records in your industry and technology stack. Experienced partners can accelerate implementation timelines, avoid common pitfalls, and provide objective guidance on technology selection.

Look for consultants who understand both technical architecture and business transformation, as successful data initiatives require expertise in both domains.

Why Choose Kanerika for Your Data Engineering Journey?

When it comes to data engineering solutions, experience matters. That’s why leading organizations trust Kanerika to transform their data chaos into a competitive advantage.

Proven Track Record: HR Analytics Transformation

Take our recent work on a major client’s HR data modernization. We implemented a common, integrated data warehouse on Azure SQL and built Power BI dashboards on top of it, consolidating HR data and giving the client a comprehensive view of their human resources. The results included better efficiency and decision-making, improved talent pool engagement by decoding recruitment, tenure, and attrition trends, more effective employee policy management, and significant time savings across HR operations.

Enterprise-Scale Platform Modernization

In another project, we helped a major enterprise overhaul its entire data analytics platform. The solution improved decision-making by grounding strategic choices in real-time insights, and it increased operational efficiency by speeding up data retrieval, reducing manual data handling, and raising productivity across departments. We also strengthened the client’s reporting and analytics capabilities to identify trends and extract insights, while ensuring scalability and future-proofing through agile data architecture frameworks.

What Sets Kanerika Apart:

- Deep Technical Expertise: Our team combines years of data engineering experience with cutting-edge technology knowledge

- Business-First Approach: We don’t just implement technology – we solve business problems

- Proven Methodologies: Our structured implementation approach minimizes risk while maximizing value

- End-to-End Support: From strategy through execution to ongoing optimization, we’re your partner every step of the way

FAQs

1. What is the main difference between a data fabric and a data lake?

A data lake is a centralized repository for storing raw, structured, and unstructured data, while a data fabric is an architecture layer that connects, governs, and provides unified access to distributed data across hybrid and multi-cloud environments.

2. Can a data fabric replace a data lake?

No. A data fabric does not store data; it virtualizes and connects existing sources, including data lakes, warehouses, and cloud platforms. Many enterprises use both together for raw storage and governed access.

3. Which is better for AI and machine learning workloads?

Data lakes are great for experimentation and training models on raw data. Data fabrics are better for production-ready AI, offering governed, high-quality data with metadata-driven insights.

4. Is governance easier with data fabric than with data lakes?

Yes. Data fabrics include built-in governance, metadata management, and compliance features, while data lakes often require extra tools to avoid becoming “data swamps.”

5. Are data fabrics more expensive than data lakes?

Data lakes are cheaper to set up initially since they focus on storage. Data fabrics may have higher upfront costs but can reduce costs over the long term through better data reuse, simpler compliance, and faster insights.

6. Can small and mid-sized businesses use data fabric or is it only for large enterprises?

While originally used by large enterprises, modern cloud platforms like Microsoft Fabric and Databricks Unity Catalog make data fabric accessible to SMBs seeking simplified, governed data access.

7. Should I choose a data lake or a data fabric for my organization?

If your focus is storing and experimenting with raw big data, start with a data lake. If you need unified, governed, AI-ready analytics across multiple systems, a data fabric is the better choice—and many companies use both together.