Data mesh is an architectural pattern that addresses the nuances of data management at scale, particularly in larger companies with varied data assets. Traditional centralized data management systems typically become bottlenecks as companies grow and data volumes explode, leading to inefficiencies and less useful information. Data mesh principles stress that data should not be treated like any other resource but as a product, with an emphasis on how usable, accessible, and high in quality it is.

Data mesh suggests that instead of monolithic systems, there should be a shift towards a more decentralized approach to managing data that aligns with the principles of domain-driven design.

Top companies across the globe, such as JP Morgan, Intuit, and VistaPrint, are now leveraging data mesh to solve their data challenges and enhance their business operations. According to Markets and Research, the global data mesh market was valued at $1.2 billion in 2023 and is expected to reach $2.5 billion by 2028, growing at a CAGR of 16.4%. This points to increasing demand for and adoption of data mesh worldwide.

The foundational principles of data mesh revolve around domain-oriented decentralized ownership of data, treating data as a product, self-serve data infrastructure, and federated computational governance. These pillars are aimed at empowering domain-specific teams to take charge of their data, ensuring that it receives the same care and strategic importance as other products offered by the company. By doing so, data mesh aims to enhance the discoverability, accuracy, and trustworthiness of information resources across the enterprise.

What is Data Mesh?

In the era of data-centric organizations, data mesh has emerged as a strategic answer to the difficulties of managing data at large scale. Data mesh is an architectural paradigm that advocates a decentralized socio-technical system for managing analytical data across diverse, large-scale environments. This approach addresses the tendency of traditional data architectures to produce data silos and governance bottlenecks. It shifts towards a more collaborative and flexible infrastructure in which domain-specific teams own their data and provide it as a decentralized suite of products.

6 Core Data Mesh Principles

Data mesh relies on several key principles in its design and functioning:

1. Domain-Oriented Data Ownership

In traditional data management approaches, data ownership often rests with centralized teams, leading to bottlenecks and inefficiencies. On the other hand, Data Mesh advocates for distributing ownership to domain teams, aligning with the organization’s structure and business domains. This improves the quality of data as well as its relevance and alignment with the organizational objectives.

2. Self-Serve Data Platform

Giving domain teams self-service solutions that let them access and manage their own data independently reduces their dependence on central data departments. It can also speed up decision-making within each domain. Organizations can improve their agility, innovation, and time-to-insight by enabling teams to be self-sufficient in dealing with their own information.

3. Data as a Product

Treating data as a product involves curating high-quality datasets tailored to specific business needs, emphasizing clear data specifications, documentation, and service-level agreements. This approach ensures that data consumers understand data capabilities, limitations, and usage guidelines.
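To make the idea of specifications, documentation, and service-level agreements more concrete, a data product can be described by a small, machine-readable descriptor. The following is only a minimal sketch: the class names, fields, and the `orders_daily` example are hypothetical and do not follow any particular standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceLevelAgreement:
    """Hypothetical SLA terms a domain team publishes for a data product."""
    freshness_hours: int      # maximum age of the newest record
    availability_pct: float   # promised availability, e.g. 99.5


@dataclass(frozen=True)
class DataProduct:
    """Illustrative descriptor; field names are assumptions, not a standard."""
    name: str
    owner_domain: str
    description: str
    schema: dict[str, str]    # column name -> type name
    sla: ServiceLevelAgreement
    known_limitations: tuple[str, ...] = ()

    def missing_documentation(self) -> list[str]:
        """Return the names of descriptive fields that were left empty."""
        gaps = []
        if not self.description.strip():
            gaps.append("description")
        if not self.schema:
            gaps.append("schema")
        return gaps


# Example descriptor for an invented sales-domain product.
orders = DataProduct(
    name="orders_daily",
    owner_domain="sales",
    description="One row per confirmed order, refreshed nightly.",
    schema={"order_id": "string", "amount": "decimal", "ordered_at": "timestamp"},
    sla=ServiceLevelAgreement(freshness_hours=24, availability_pct=99.5),
    known_limitations=("Excludes cancelled orders",),
)
```

A publishing step could refuse any product whose `missing_documentation()` result is not empty, so consumers always see capabilities and limitations up front.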

4. Decentralized Data Governance

Decentralized data governance allows domain teams to take charge of governance processes within their domains, defining and enforcing data quality standards, privacy policies, security measures, and regulatory compliance. This decentralization aligns data practices with business goals, ensuring accountability and transparency.

5. Federated Computational Governance

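Federated computational governance pairs governance decisions made jointly by representatives of the domains with automated enforcement built into the platform. Global rules, such as interoperability standards and privacy and security policies, are expressed in a form the platform can check against every data product, while domains keep autonomy over local decisions. This approach supports data sovereignty, privacy, and collaborative analysis across domains when needed.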

6. API-First Architecture

Adopting an API-first architecture in data platforms ensures seamless integration and interoperability across systems and teams. APIs serve as the primary interface for data access and interaction, promoting scalability, flexibility, and reusability in data management and application development efforts.
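One way to picture an API-first data product is as a small interface that consumers code against, regardless of where the data is stored. The sketch below uses Python's `typing.Protocol`; the interface `DataProductAPI`, its two methods, and the in-memory implementation are all illustrative assumptions rather than part of any data mesh specification.

```python
from typing import Protocol


class DataProductAPI(Protocol):
    """Hypothetical read interface every data product would expose."""

    def describe(self) -> dict[str, str]: ...

    def read(self, limit: int) -> list[dict]: ...


class InMemoryOrders:
    """Toy implementation backed by a list; a real one might wrap a warehouse."""

    def __init__(self, rows: list[dict]) -> None:
        self._rows = rows

    def describe(self) -> dict[str, str]:
        return {"name": "orders", "owner": "sales", "version": "1.0.0"}

    def read(self, limit: int) -> list[dict]:
        return self._rows[:limit]


def preview(product: DataProductAPI, limit: int = 2) -> list[dict]:
    """Consumers depend only on the interface, not on the storage behind it."""
    return product.read(limit)


orders_api = InMemoryOrders(
    [{"order_id": "a1"}, {"order_id": "a2"}, {"order_id": "a3"}]
)
first_rows = preview(orders_api)
```

Because `preview` only relies on the interface, the sales team could later move the data to different storage without breaking consumers.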

Technical Aspects Of Data Mesh

Data Infrastructure and Technologies

Data Mesh relies on a decentralized infrastructure framework that supports a variety of technologies. The main elements here include self-service data infrastructures and the interoperability between systems. An example setup entails:

- Data Lakes and Data Warehouses: Storage solutions are organized as domains with domain-specific schemas.

- Cloud Providers: They provide scalable resources and services for hosting and managing data products.

- Microservices Architecture: Each data product may be supported by microservices, offering agility and scalability.

- Machine Learning Platforms: Designed to support advanced analytics within the data ecosystem.

Organizations should invest in a robust architecture that supports versioning of data products while keeping them analytics-ready.

Security and Compliance

A data mesh security approach typically includes:

- Encryption of data at rest and in transit.

- Strong access controls, making sure only authorized people have access to data products.

Compliance is maintained through:

- Following governance best practices for information privacy and data protection.

- Running policy checks continuously rather than as one-off audits.
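The access-control and policy-check ideas above can be sketched as a per-product policy object that is evaluated automatically. This is an illustrative sketch only: `AccessPolicy`, its fields, and the `payments` example are hypothetical, and a real deployment would delegate both checks to the platform's identity and encryption services.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessPolicy:
    """Hypothetical per-product policy: who may read it and how it is protected."""
    product: str
    allowed_roles: frozenset[str]
    encrypted_at_rest: bool
    encrypted_in_transit: bool


def can_read(policy: AccessPolicy, user_roles: set[str]) -> bool:
    """Grant access only if the user holds at least one allowed role."""
    return bool(policy.allowed_roles & user_roles)


def compliance_gaps(policy: AccessPolicy) -> list[str]:
    """List the controls that this policy does not satisfy."""
    gaps = []
    if not policy.encrypted_at_rest:
        gaps.append("encryption at rest")
    if not policy.encrypted_in_transit:
        gaps.append("encryption in transit")
    if not policy.allowed_roles:
        gaps.append("no roles defined")
    return gaps


# Invented example: transit encryption has not been switched on yet.
payments_policy = AccessPolicy(
    product="payments",
    allowed_roles=frozenset({"finance_analyst"}),
    encrypted_at_rest=True,
    encrypted_in_transit=False,
)
```

Running `compliance_gaps` on a schedule for every registered product is one simple way to turn a periodic audit into a continuous check.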

Data Product Design & Lifecycle

A data product’s lifecycle encompasses its creation, use, evolution, and eventual retirement. Key design aspects include:

- Data Product Schema: The schema should be carefully designed to reflect the product’s domain and intended use.

- Self-Service Infrastructure: Simplifies deployment, modification, and scaling of data products, which supports a generalist pod model where small teams own the end-to-end lifecycle of their own domain’s data.
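The lifecycle of creation, use, evolution, and retirement can be modelled as a small state machine so that tooling rejects invalid moves, such as reviving a retired product. The stage names and allowed transitions below are assumptions chosen for illustration; an organization would define its own.

```python
from enum import Enum


class Stage(Enum):
    DRAFT = "draft"            # being created
    PUBLISHED = "published"    # in use by consumers
    DEPRECATED = "deprecated"  # still readable, scheduled for removal
    RETIRED = "retired"        # no longer served


# Allowed moves between stages; evolution is modelled as re-publishing.
TRANSITIONS = {
    Stage.DRAFT: {Stage.PUBLISHED},
    Stage.PUBLISHED: {Stage.PUBLISHED, Stage.DEPRECATED},
    Stage.DEPRECATED: {Stage.RETIRED},
    Stage.RETIRED: set(),
}


def advance(current: Stage, target: Stage) -> Stage:
    """Return the target stage, or raise if the move is not allowed."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target


stage = advance(Stage.DRAFT, Stage.PUBLISHED)
stage = advance(stage, Stage.DEPRECATED)
```

Keeping a deprecation stage between publication and retirement gives consumers a window to migrate before the product disappears.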

The technical foundation of a Data Mesh architecture enables diverse use cases, giving domain-led teams the insights they need to drive innovation across the organization.

The Significance of Data as a Product

Viewing data as a product is an important change in perspective within Data Mesh frameworks. Data products are designed with their target users in mind, so that they are understandable, reliable, and consumable. This model brings about a cultural shift within companies: data is not only valued for its insights but also crafted with the same care and attention as any customer-facing product. The benefits include:

- Improved Quality and Usability: Data products are curated for quality and designed to be immediately useful for consumers.

- Accountability: Ownership is assigned to teams, who can be held accountable for their data products, which improves stewardship and governance.

- Collaborative Environment: A data-as-a-product culture fosters collaboration between domains because stakeholders work together to create and maintain valuable assets.

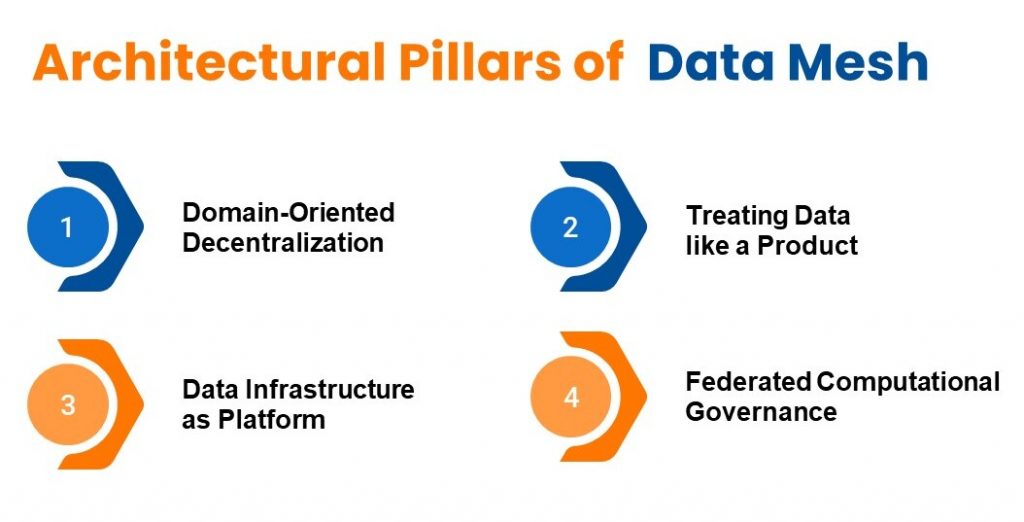

Architectural Pillars of Data Mesh

The Data Mesh paradigm redefines data architecture with four foundational pillars designed to cater to the growing need for scalability, agility, and reliability in managing enterprise data.

1. Domain-Oriented Decentralization

Data Mesh embraces domain-oriented decentralization, in which control over data follows the organization’s domain structure. Domain teams manage their own data much as teams manage services in a microservices architecture. This promotes autonomy and a deeper understanding of the data.

2. Data Infrastructure as a Platform

The data infrastructure is offered as a self-service platform, so domains can access and manage their own information easily and without bottlenecks. To enable teams to build and support their own data products more effectively, this platform should provide robust data pipelines, technologies, and tools that abstract away the complexities of working with massive amounts of data.

3. Treating Data Like a Product

In the Data Mesh concept, each dataset is judged by how well it works as a product. Every data product is tailored to its end users so that they can understand it and use it when needed. Quality, discoverability, and reliability are therefore the main focus areas, which in turn call for well-defined metadata and documentation for each dataset.

4. Federated Computational Governance

The last pillar, federated computational governance, uses a shared governance model in which decisions are made collectively. Policies and rules on the use of data are formulated in a federated manner, encouraging alignment without compromising the autonomy of individual domains.
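As a rough illustration of the "computational" part of this pillar, shared policies can be written as small functions that run against every data product's metadata, with each domain free to add its own. The policy names, metadata keys, and the `evaluate` helper below are hypothetical and meant only as a sketch of the idea.

```python
from typing import Callable, Optional, Sequence

# A policy inspects a product's metadata and returns an error message or None.
Policy = Callable[[dict], Optional[str]]


def requires_owner(meta: dict) -> Optional[str]:
    return None if meta.get("owner") else "missing owner"


def pii_must_be_classified(meta: dict) -> Optional[str]:
    if meta.get("contains_pii") and not meta.get("classification"):
        return "PII present but no classification"
    return None


# Global rules agreed on by the federated governance group (hypothetical).
GLOBAL_POLICIES: list[Policy] = [requires_owner, pii_must_be_classified]


def evaluate(meta: dict, local_policies: Sequence[Policy] = ()) -> list[str]:
    """Run the global rules first, then any rules the owning domain adds."""
    results = (policy(meta) for policy in [*GLOBAL_POLICIES, *local_policies])
    return [message for message in results if message is not None]


# Invented example: the product holds PII but has no classification label.
violations = evaluate({"owner": "sales", "contains_pii": True})
```

The global list captures what all domains agreed on, while the `local_policies` argument is where a domain keeps its autonomy to be stricter.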

Steps to Implement Data Mesh

Operationalizing a data mesh framework means bringing several elements together so that data can be managed effectively in a decentralized way within an organization. It draws on the principles of domain-driven design (DDD), product thinking, and self-serve data infrastructure to scale agile, data-driven practices.

1. Deployment Considerations for Data Mesh

Implementing Data Mesh calls for careful planning as well as sustained effort. First, deployment is organized by domain: cloud-based or hybrid environments let the owners of data products administer their respective domain spaces. A mesh often relies on DataOps methodologies that automate workflows, promoting agility and efficiency while ensuring quality at scale.

- Automation and DataOps: Crucial for minimizing manual bottlenecks and accelerating time-to-insight.

- Cloud Infrastructure: Enables scalability and supports real-time processing for workloads with different performance requirements.

- Product Thinking in Data: Treats data as products aimed at internal users, with end-to-end ownership throughout their lifecycle.

2. Roles and Responsibilities

In a Data Mesh, roles are defined clearly to match its distributed nature. Data Product Owners are responsible for meeting internal customers’ needs for their specific datasets, including protecting them from unauthorized access. Technical roles such as data engineers and data scientists differ slightly from one another, although both work on refining data infrastructure and analytical models.

3. Data Governance & Quality

A successful data mesh requires effective data governance. Such governance ensures that all stakeholders follow the rules and regulations on the use of data. Quality rests on the reliability and trustworthiness of every data product.

- Data Governance Framework: Provides guidelines on how information may be accessed, secured, and used ethically.

- Quality Assurance: Maintains a consistently high standard across datasets that serve different purposes.

- Security Practices: Include procedures that prevent unauthorized access, guarding against breaches and preserving the integrity of the available information.
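Quality assurance of this kind is often automated as checks that run whenever a data product is refreshed. The sketch below shows two simple checks, completeness and type conformance, over rows represented as dictionaries; the function names, the sample rows, and the choice of checks are assumptions for illustration.

```python
def completeness(rows: list[dict], column: str) -> float:
    """Share of rows where the column is present and not None."""
    if not rows:
        return 0.0
    filled = sum(1 for row in rows if row.get(column) is not None)
    return filled / len(rows)


def type_violations(
    rows: list[dict], expected: dict[str, type]
) -> list[tuple[int, str]]:
    """Return (row index, column) pairs whose non-null value has the wrong type."""
    problems = []
    for index, row in enumerate(rows):
        for column, expected_type in expected.items():
            value = row.get(column)
            if value is not None and not isinstance(value, expected_type):
                problems.append((index, column))
    return problems


# Invented sample: one missing amount and one amount stored as text.
sample = [
    {"order_id": "a1", "amount": 10.0},
    {"order_id": "a2", "amount": None},
    {"order_id": "a3", "amount": "12"},
    {"order_id": "a4", "amount": 7.5},
]

amount_completeness = completeness(sample, "amount")
bad_cells = type_violations(sample, {"order_id": str, "amount": float})
```

A domain team could publish thresholds for such metrics alongside the product and block a release when a check falls below them.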

Scaling Data Mesh in Organizations

Data Mesh architecture represents a substantial departure from centralized systems, making an organization more responsive in how it manages vast quantities of diverse datasets.

From Monolithic to Distributed

In the transition from monolithic to distributed architectures, centralized data systems are broken up into domain data products. These products belong to the business domains that understand the meaning and relevance of the data. This increases the value of data assets and shortens ingestion lead times. A distributed data mesh makes this possible by allowing management and reporting to happen closer to the owners of the data sources.

Cost and Complexity Management

Initially, deploying a data mesh may increase the complexity and cost of managing distributed data. Organizations can address this with a self-serve infrastructure platform for accessing data. Such a platform simplifies ETL processes, applies global standards with monitoring, and lets analytical data grow efficiently.

Cultural Shift

Successfully implementing a data mesh within an organization requires a cultural shift. Moving from a centralized approach to decentralized ownership of the company’s data requires a paradigm shift in how the organization thinks about it. Establishing centralized governance over self-service datasets helps ensure access to current information and keeps users satisfied.

Advanced Concepts in Data Mesh

Exploring advanced concepts in Data Mesh uncovers the layers of complexity and sophistication that cater to modern organizations’ need for decentralized data management. These topics are crucial for a well-rounded understanding of a mature data mesh implementation.

Interoperability/Integration

Interoperability is indispensable for bringing different data stores, such as data lakes and data warehouses, under one roof. Whatever integration strategy is chosen, a Data Mesh should ensure that data moves smoothly between these varied systems even though they remain separate entities.

Efficient integration strategies include:

- Standardized protocols and formats for sharing and manipulating datasets.

- A fabric-based architecture that links different types of information sources across analytics platforms.

Metadata and Discovery

Metadata management forms the backbone of discoverable information within a Data Mesh, since it tells users where the data came from, what it is about, and how good its quality is. Key features:

- A self-service data platform that lets developers and data scientists of all kinds find and understand individual datasets without hindrance.

- A way of recording metadata that protects privacy and meets the legal requirements for handling sensitive information.
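A minimal sketch of such a catalog is shown below: each entry records where the data came from, what it describes, and a quality indicator, and searches hide sensitive entries unless the caller explicitly asks for them. The `Catalog` class, its fields, and the sample entries are hypothetical; production catalogs add lineage graphs, access checks, and much richer search.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEntry:
    name: str
    domain: str
    description: str
    source: str              # where the data was obtained
    quality_score: float     # 0.0 to 1.0, however the organization defines it
    sensitive: bool = False  # flag used to hide entries from default searches


class Catalog:
    def __init__(self) -> None:
        self._entries: dict[str, CatalogEntry] = {}

    def register(self, entry: CatalogEntry) -> None:
        self._entries[entry.name] = entry

    def search(self, keyword: str, include_sensitive: bool = False) -> list[str]:
        """Case-insensitive match on name or description, sorted by name."""
        needle = keyword.lower()
        return sorted(
            entry.name
            for entry in self._entries.values()
            if (needle in entry.name.lower() or needle in entry.description.lower())
            and (include_sensitive or not entry.sensitive)
        )


# Invented entries from two domains.
catalog = Catalog()
catalog.register(
    CatalogEntry("orders_daily", "sales", "Confirmed orders", "order service", 0.97)
)
catalog.register(
    CatalogEntry(
        "customer_pii", "crm", "Customer contact details for orders",
        "crm export", 0.92, sensitive=True,
    )
)

public_hits = catalog.search("orders")
```

Hiding sensitive entries by default is one simple way to combine discoverability with the privacy requirement in the second bullet.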

Federated Governance Model

This model supports a decentralized data mesh by sharing decision-making power while keeping standards uniform across the whole organization. Main characteristics include:

- Well-defined roles for product design and implementation responsibilities to promote cross-domain collaboration.

- A common logical structure that ties the different data platforms together while still encouraging innovation and independence.

Real-world Use Cases of Data Mesh

1. Understanding Customer Lifecycle

Data Mesh supports customer care by reducing handling time, enhancing satisfaction, and enabling predictive churn analysis.

2. Utility in the Internet of Things (IoT)

It aids in monitoring IoT devices, providing insights into usage patterns without centralizing all data.

3. Loss Prevention in Financial Services

Implementing Data Mesh in the financial sector enables quicker insights with lower operational costs, aiding in fraud detection and compliance with data regulations.

4. Marketing Campaign Optimization

Data Mesh accelerates marketing insights, boosts agility, and empowers data-driven decisions. It enhances competitiveness, trend awareness, and personalized strategies for effective sales team support and tailored customer interactions.

5. Supply Chain Optimization

Data Mesh decentralizes data ownership, enhancing quality and domain-specific handling. It optimizes supply chain efficiency, scalability, and autonomy in data management, leading to streamlined processes and data-driven decisions for improved performance.

Key Considerations for Implementing Data Mesh

1. Data Quality and Consistency

Quality and consistency across domain-specific datasets can be difficult to achieve, because they call for standardized data governance frameworks, quality controls, and data validation processes.

2. Integration Complexity

Integrating different information sources, technologies, and analytical tools within a Data Mesh architecture is complicated. This is due to the need for strong application programming interfaces (APIs), data transfer pipelines, and interoperability standards.

3. Scalability and Performance

Scaling Data Mesh to handle large volumes of data, diverse use cases, and complex analytics workloads while maintaining performance, reliability, and cost-effectiveness requires careful architectural design and optimization.

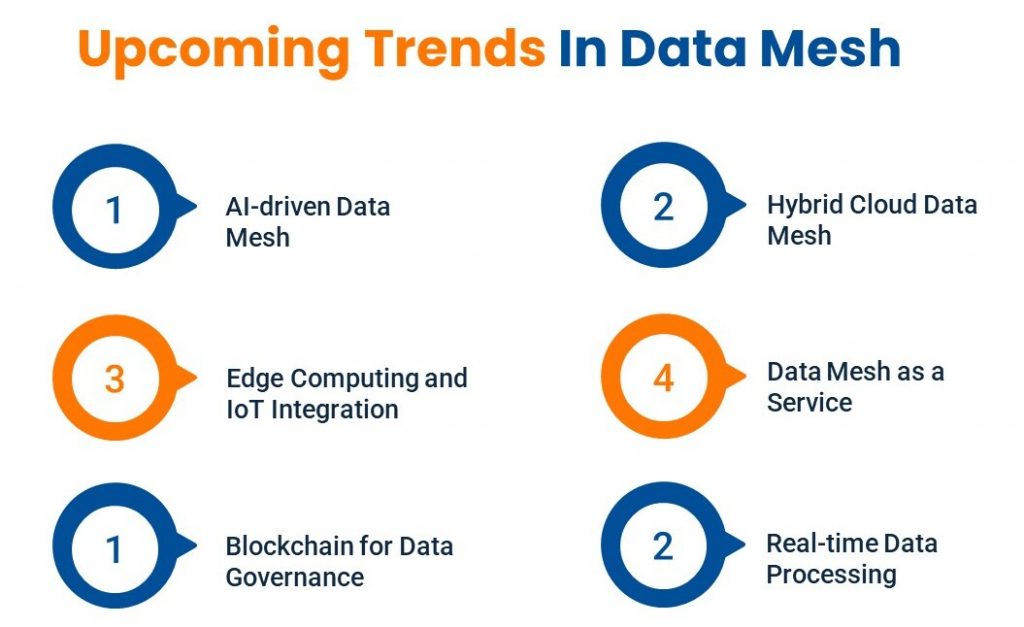

Upcoming Trends for Data Mesh

1. AI-Driven Data Mesh

Integrating artificial intelligence (AI) and machine learning (ML) technologies to automate data management tasks, enhance data quality, and boost predictive analytics capabilities within Data Mesh architectures.

2. Edge Computing and IoT Integration

Utilizing edge computing and Internet of Things (IoT) technologies to process and analyze data nearer the source, which enables instant insights, reduces latency, and improves scalability in Data Mesh environments.

3. Blockchain for Data Governance

Examining blockchain technology for decentralized data governance, auditability, and data provenance within Data Mesh frameworks to ensure security, fairness, and compliance.

4. Hybrid Cloud Data Mesh

Incorporating hybrid cloud designs into Data Mesh deployments by combining on-premise resources with cloud resources to achieve scalable, flexible, and cost-effective solutions for managing analytics.

5. Data Mesh-as-a-Service (DMaaS)

Emergence of Data Mesh-as-a-Service offerings, providing organizations with managed Data Mesh solutions, tools, and expertise to accelerate adoption, reduce implementation complexity, and enhance operational efficiency.

6. Real-time Data Processing

Focus on real-time data processing capabilities within Data Mesh architectures, leveraging stream processing frameworks, event-driven architectures, and real-time analytics tools to enable instant insights and actions on streaming data.

Turn to Kanerika for Successful Implementation of Data Mesh Architecture

Kanerika excels in data analytics and management, offering expertise in implementing data mesh architecture to transform business operations. With a focus on transforming data handling, Kanerika ensures seamless integration of domain-driven design principles, self-service architecture, data products, and federated governance. By leveraging its capabilities, businesses can enhance data quality, foster innovation, and empower teams to make data-driven decisions effectively. Kanerika enables organizations to structure their data estate efficiently, ensuring robust governance, optimized consumption, and improved efficiency through the implementation of data mesh.

Frequently Asked Questions

What are the 4 principles of data mesh?

Data mesh isn’t about a single database; it’s about a decentralized data management approach. Its four core principles are: domain ownership (data teams own their data products), data as a product (treating data like any other product with clear value and quality), self-serve data infrastructure (providing easy access to tools and platforms), and federated computational governance (collaborative, decentralized control, not centralized dictating). This empowers domain experts and improves data quality and accessibility.

What are the concepts of data mesh?

Data mesh flips the traditional data warehouse model. Instead of a centralized team managing all data, it distributes ownership to domain-specific teams. This fosters agility, better data quality, and increased business relevance because those closest to the data manage it. Essentially, it’s about democratizing data while maintaining governance.

What is the data mesh strategy?

Data mesh flips the traditional data management model. Instead of a centralized team controlling all data, it empowers individual domains to own and manage their data products. This fosters agility, better data quality, and aligns data with specific business needs. It’s essentially a decentralized, domain-driven approach to data.

What are the prerequisites for data mesh?

Data mesh isn’t just a technology, it’s a radical shift in how organizations manage data. Prerequisites include a strong data literacy culture, empowered domain teams capable of owning their data products, and robust tooling for data discovery, governance, and observability. Finally, a committed leadership team willing to decentralize data ownership is absolutely crucial for success.

What are the 4 principles of data quality?

Data quality hinges on four key pillars: Accuracy (is it correct?), Completeness (is everything there?), Consistency (does it match across sources?), and Timeliness (is it current enough for its purpose?). These ensure data is reliable and usable for decision-making. Ignoring any weakens the entire dataset.

What are the 4 pillars of data analysis?

Data analysis rests on four key pillars: Data acquisition (getting the right data), data cleaning (handling messy realities), data exploration (discovering patterns), and data interpretation (drawing meaningful conclusions and actionable insights). These stages are interconnected and iterative, meaning you often revisit earlier steps. Ultimately, they form a robust workflow for extracting value from data.

What are the three principles of data models?

Data models organize information, essentially acting as blueprints for databases. The three core principles are: representation (how data is structured and defined), integrity (ensuring data accuracy and consistency), and accessibility (allowing efficient retrieval and use of the data). These principles work together to ensure a robust and reliable data system.

What are the four main aspects of data handling?

Data handling boils down to four key areas: getting the data (collection & acquisition), cleaning it up (processing & validation), organizing it for use (storage & management), and finally, extracting meaningful information (analysis & interpretation). Think of it as a pipeline, each stage crucial for the next. Without careful attention to each, your insights will be flawed.

What are the six data processing principles?

The six data processing principles ensure fair and lawful data handling. They guide how we collect, use, and protect personal information, focusing on legality, purpose limitation, data minimization, accuracy, storage limitation, and individual rights. Essentially, they’re about responsible data stewardship, prioritizing transparency and user control. Violation of these principles can lead to legal and ethical problems.

What are the benefits of data mesh?

Data mesh empowers individual domain teams to own their data, fostering agility and faster insights. This decentralized approach improves data quality through localized expertise and reduces bottlenecks common in centralized data architectures. Ultimately, it allows for quicker innovation and better alignment with business needs. The result is a more responsive and efficient data organization.