Databricks CEO Ali Ghodsi has called semantics “existential” to enterprise AI, framing it as the foundation for intelligent agents that can reason, predict, and act on business data. In line with that vision, Databricks recently launched Agent Bricks, which helps companies build, optimize, and deploy AI agents on their own data, and Lakeflow Designer, a no-code tool for building production data pipelines.

It also launched AI Gateway, a control layer for governing access to models such as GPT-5 and Claude, and invested $100 million to bring OpenAI models into its platform. Taken together, these moves show that Databricks is serious about making enterprise AI safer, faster, and easier to scale.

The generative AI market is booming. It is expected to reach $71.36 billion by the end of 2025 and is projected to exceed $1 trillion by 2030. About 78% of companies already use generative AI in some form, and each $1 invested can yield up to a 7x return. Databricks is tapping into this growth with tools that help businesses train, fine-tune, and deploy custom AI agents on their own data.

Continue reading to discover how Databricks’ generative AI capabilities are helping businesses unlock innovation, scale intelligent automation, and make a real-world impact.

Key Takeaways

- Databricks is advancing enterprise AI with tools like Agent Bricks, Lakeflow Designer, and AI Gateway.

- Databricks integrates data, analytics, and AI on a single platform, simplifying workflows and enhancing collaboration.

- Vector Search and RAG improve response accuracy and relevance, while Unity Catalog strengthens governance and security.

- The platform supports the full AI lifecycle, from data ingestion to deployment and monitoring, within a unified environment.

- Enterprises gain faster time to value, cost efficiency, and compliance through Databricks’ scalable infrastructure.

- Use cases span text generation, synthetic data creation, code automation, and industry-specific applications.

- Kanerika partners with Databricks to deliver tailored Generative AI solutions that boost innovation and operational efficiency.

What is Databricks Generative AI

Generative AI refers to AI systems that create new content, such as text, images, code, music, or synthetic data, based on patterns learned from large datasets. Common model architectures include transformers, generative adversarial networks (GANs), variational autoencoders (VAEs), and diffusion models. These models power chatbots, image creation, drug discovery, fraud detection, and more.

Databricks Generative AI is built on the Lakehouse Platform, which combines data, analytics, and AI in one environment. It supports the full lifecycle: data ingestion, model training, fine-tuning, deployment, and governance. Databricks helps enterprises build generative AI applications using curated models, their own data, and connected tools such as MLflow, Unity Catalog, and Mosaic AI.

The platform supports use cases like retrieval-augmented generation (RAG), AI agents, and custom LLMs. It also includes vector search, model serving, and monitoring tools that help teams move from proof of concept to production.

Why Choose Databricks for Generative AI

Databricks offers a lakehouse architecture that brings together data, analytics, and AI. This removes the complexity of stitching together multiple systems and helps organizations streamline their end-to-end workflows. Because data scientists and engineers work in a single environment, they can collaborate easily and manage every stage of the AI lifecycle efficiently.

Some of the key advantages include:

- Unified data and AI environment: Manage, train, and deploy models all within one platform.

- Advanced AI capabilities: Features such as Vector Search, Retrieval-Augmented Generation (RAG), and fine-tuning services let teams tailor large language models to business-specific data.

- Built-in governance and security: With Unity Catalog, Databricks ensures proper access control, data lineage, and compliance for all AI assets.

- Optimized performance and cost-efficiency: Scalable infrastructure supports faster processing while maintaining cost control and reliability.

Databricks also addresses major business challenges, including data fragmentation, poor governance, and high operational costs. By providing transparency and control across the data-to-AI pipeline, it helps organizations build trustworthy and efficient generative AI systems.

Core Components and Capabilities

Databricks Generative AI is built with enterprise-ready components that simplify and speed up AI workflows. Each component plays a key role in keeping operations smooth, scalable, and well governed.

1. Data Ingestion and Preparation:

Using Delta Lake and Unity Catalog, Databricks supports reliable ingestion and transformation of both structured and unstructured data. Delta Lake features such as ACID transactions and schema enforcement, combined with Unity Catalog’s governance, help maintain data quality and consistency before model training begins.
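
Data quality checks of this kind usually run on Spark DataFrames before results are written to Delta tables. The sketch below shows the same idea in plain Python so it runs anywhere; it is not a Databricks API, and the `doc_id`/`text` schema and the `clean_records` helper are hypothetical.

```python
# Hypothetical schema: every record needs a non-empty doc_id and text.
REQUIRED_FIELDS = ("doc_id", "text")


def clean_records(records):
    """Validate, normalize, and deduplicate raw records.

    Returns (clean_rows, rejected_count). Plain Python, not a Databricks API.
    """
    seen_texts = set()
    clean_rows, rejected = [], 0
    for row in records:
        # Reject rows with a missing (None) or empty required field.
        if any(row.get(field) in (None, "") for field in REQUIRED_FIELDS):
            rejected += 1
            continue
        # Collapse runs of whitespace so near-identical texts compare equal.
        text = " ".join(str(row["text"]).split())
        # Reject whitespace-only text and exact duplicates after normalization.
        if not text or text in seen_texts:
            rejected += 1
            continue
        seen_texts.add(text)
        clean_rows.append({**row, "text": text})
    return clean_rows, rejected


raw = [
    {"doc_id": 1, "text": "Refund policy:  30 days."},
    {"doc_id": 2, "text": "Refund policy: 30 days."},  # duplicate once normalized
    {"doc_id": 3, "text": None},                       # missing text
    {"doc_id": 4, "text": "Shipping takes 5 days."},
]
rows, rejected = clean_records(raw)
```

In a real pipeline the same rules would more likely be expressed as DataFrame filters; plain Python is used here only to keep the example self-contained.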

2. Model Fine-Tuning and Deployment:

Organizations can fine-tune large language models directly on Databricks using their proprietary data. Once trained, models can be deployed securely with Model Serving, which provides reliable performance for real-time or batch inference.
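
Fine-tuning jobs generally consume training examples as JSON Lines. The sketch below converts question-and-answer pairs into a chat-style `messages` format, one conversation per line; treat the exact schema as an assumption to verify against the Databricks fine-tuning documentation. The `to_chat_jsonl` helper and the sample data are hypothetical.

```python
import json


def to_chat_jsonl(pairs, system_prompt):
    """Convert (question, answer) pairs into chat-style JSON Lines text.

    The {"messages": [...]} layout is an assumed format; check the
    fine-tuning documentation for the exact schema it expects.
    """
    lines = []
    for question, answer in pairs:
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        # One JSON object per line; ensure_ascii=False keeps non-ASCII text readable.
        lines.append(json.dumps(example, ensure_ascii=False) + "\n")
    return "".join(lines)


# Hypothetical support data for illustration.
pairs = [
    ("What is the refund window?", "Refunds are accepted within 30 days of delivery."),
    ("Do you ship to Europe?", "Yes, delivery to Europe takes 5 to 7 business days."),
]
jsonl = to_chat_jsonl(pairs, "You are a concise customer-support assistant.")
```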

3. Vector Search and RAG (Retrieval-Augmented Generation):

The platform’s Vector Search indexes embeddings of enterprise data so that relevant context can be retrieved efficiently at query time. In a RAG workflow, that retrieved context is added to the model’s prompt, so the model can produce more relevant, context-aware responses grounded in internal knowledge.
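
Databricks Vector Search manages embedding storage and similarity search for you; the sketch below only illustrates the underlying retrieval idea. It uses a toy bag-of-words "embedding" and cosine similarity from the standard library, does not call the Vector Search API, and all function names and documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents whose vectors are most similar to the query's."""
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:k]


docs = [
    "Refunds are issued within 30 days of delivery.",
    "Our office is closed on public holidays.",
    "Shipping to Europe takes 5 to 7 business days.",
]
top = retrieve("When are refunds issued?", docs, k=1)
```

A production system would replace `embed` with a learned embedding model and the linear scan with an approximate nearest-neighbor index, which is the part a managed vector search service handles.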

4. Model Serving and Inference Optimization:

Databricks provides optimized serving for low-latency, large-scale inference. Built-in monitoring helps teams confirm that models perform accurately and consistently in production.
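
Once a model sits behind a serving endpoint, applications typically call it over REST. The sketch below builds, without sending, a request following the `/serving-endpoints/<name>/invocations` path pattern, assuming the endpoint accepts a chat-style `messages` payload. The workspace URL, endpoint name, and token are placeholders, and the path and payload format should be confirmed in the Model Serving documentation before relying on them.

```python
import json
import urllib.request


def build_invocation_request(workspace_url, endpoint_name, token, user_message, max_tokens=256):
    """Build (but do not send) a POST request to a model serving endpoint.

    Assumes a chat-compatible endpoint that accepts {"messages": [...]}.
    """
    url = f"{workspace_url.rstrip('/')}/serving-endpoints/{endpoint_name}/invocations"
    payload = {
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_invocation_request(
    "https://example.cloud.databricks.com",  # hypothetical workspace URL
    "support-assistant",                      # hypothetical endpoint name
    "DATABRICKS_TOKEN_PLACEHOLDER",           # never hard-code a real token
    "Summarize our refund policy.",
)
# With a real workspace and token, urllib.request.urlopen(req) would send it.
```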

5. Governance, Monitoring, and Security:

Through Unity Catalog, Databricks provides end-to-end visibility across data and AI pipelines. It supports secure access management, version control, and audit trails, which makes it suitable for industries with strict compliance requirements.
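
In Unity Catalog itself, privileges are granted with SQL `GRANT` statements on objects addressed by a three-level `catalog.schema.object` name. The Python sketch below is only a toy model of the two ideas the paragraph mentions, access checks and an audit trail; the grants, principal names, and `check_access` function are hypothetical and do not call any Databricks API.

```python
from datetime import datetime, timezone

# Hypothetical grants as (principal, privilege, securable) triples.
GRANTS = {
    ("analysts", "SELECT", "main.sales.orders"),
    ("ml_engineers", "EXECUTE", "main.models.support_assistant"),
}

# Every access decision is appended here, whether allowed or denied.
AUDIT_LOG = []


def check_access(principal, privilege, securable):
    """Return True if the grant exists, recording the decision in AUDIT_LOG."""
    allowed = (principal, privilege, securable) in GRANTS
    AUDIT_LOG.append(
        {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "principal": principal,
            "privilege": privilege,
            "securable": securable,
            "allowed": allowed,
        }
    )
    return allowed


ok = check_access("analysts", "SELECT", "main.sales.orders")
denied = check_access("analysts", "EXECUTE", "main.models.support_assistant")
```

Logging denied requests as well as allowed ones is what makes the trail useful for compliance reviews.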

Together, these capabilities make Databricks Generative AI a powerful solution for enterprises seeking to integrate generative intelligence into their data systems while maintaining performance, security, and governance.

How Databricks Generative AI Works – Workflow Example

Databricks simplifies the end-to-end workflow for building and deploying generative AI applications. Its unified platform combines data engineering, machine learning, and model governance, helping enterprises move from idea to production faster and with greater control.

Step 1: Define the Use Case and Collect Enterprise Data

The process begins by identifying the business use case—such as automating content creation, building chatbots, or improving customer insights—and collecting relevant enterprise data from multiple sources.

Step 2: Prepare Data and Create Embeddings

Once collected, the data is cleaned, transformed, and structured using Delta Lake. Teams can then create vector embeddings to power retrieval-based workflows, or fine-tune existing large language models (LLMs) on enterprise-specific datasets.
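
Before embedding, long documents are usually split into overlapping chunks so each piece fits the embedding model’s input limit and retrieval can return focused passages. This minimal sketch splits by characters; the `chunk_text` helper and its default sizes are illustrative assumptions, and production pipelines often split on tokens, sentences, or document structure instead.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into character windows of chunk_size that overlap by `overlap`."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window reaches the end, so no chunk is pure overlap.
        if start + chunk_size >= len(text):
            break
    return chunks


chunks = chunk_text("abcdefghij", chunk_size=4, overlap=1)
```

The overlap repeats a little text at each boundary so that a sentence split across two chunks still appears intact in at least one of them; each chunk would then be passed to an embedding model.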

Step 3: Build the Generative AI Application

Using the Databricks Workspace and AI development tools, teams can design and test generative applications such as intelligent assistants, summarization tools, or code generators. Integration with notebooks and APIs keeps the workflow smooth for both developers and data scientists.

Step 4: Deploy and Monitor the Model

After development, models are deployed through Databricks Model Serving, which provides secure and scalable access. Lakehouse Monitoring then tracks performance metrics, latency, and accuracy so teams can confirm the model behaves as expected in production.
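
As a rough illustration of one metric such monitoring tracks, the sketch below computes p50 and p95 latency with the nearest-rank method and compares p95 against a budget. The function names, sample latencies, and 250 ms budget are hypothetical, and this is not how Lakehouse Monitoring is implemented.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty list."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def latency_report(latencies_ms, p95_budget_ms):
    """Summarize request latencies and flag whether p95 stays within budget."""
    p50 = percentile(latencies_ms, 50)
    p95 = percentile(latencies_ms, 95)
    return {"p50_ms": p50, "p95_ms": p95, "within_budget": p95 <= p95_budget_ms}


# Hypothetical latencies (milliseconds) from ten recent requests.
report = latency_report([120, 80, 95, 300, 110, 90, 100, 105, 85, 98], p95_budget_ms=250)
```

Tail percentiles such as p95 are watched instead of the average because a few slow requests (the 300 ms outlier here) can breach a service-level target while the median still looks healthy.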

Step 5: Iterate and Improve

Feedback loops, performance tracking, and drift detection enable continuous improvement. Teams can retrain or fine-tune models periodically to improve accuracy and keep them relevant as data evolves.
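
Drift detection compares the distribution of recent inputs or predictions against a baseline. One common statistic is the Population Stability Index (PSI), sketched below with fixed bin edges. The bin edges, the `1e-6` floor for empty bins, and the helper names are assumptions, and Databricks monitoring may use different statistics.

```python
import bisect
import math


def bin_proportions(sample, edges):
    """Share of in-range values falling into each bin defined by sorted edges.

    Bins are [edges[i], edges[i+1]), except the last bin, which also includes
    edges[-1]. Values outside [edges[0], edges[-1]] are ignored.
    """
    counts = [0] * (len(edges) - 1)
    for x in sample:
        if edges[0] <= x <= edges[-1]:
            i = min(bisect.bisect_right(edges, x) - 1, len(counts) - 1)
            counts[i] += 1
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the bin edges")
    # Floor empty bins at a tiny proportion so the log term stays defined.
    return [max(c / total, 1e-6) for c in counts]


def psi(baseline, current, edges):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    e = bin_proportions(baseline, edges)
    a = bin_proportions(current, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


edges = [0, 10, 20, 30]
baseline = [5] * 50 + [15] * 50   # e.g. last month's feature values
current = [15] * 50 + [25] * 50   # this week's values, shifted upward
```

A commonly used rule of thumb treats a PSI above roughly 0.2 as a meaningful shift worth investigating, though thresholds should be tuned per use case before they trigger retraining.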

This workflow ensures that AI systems are built efficiently, monitored effectively, and continuously optimized for enterprise-grade performance.

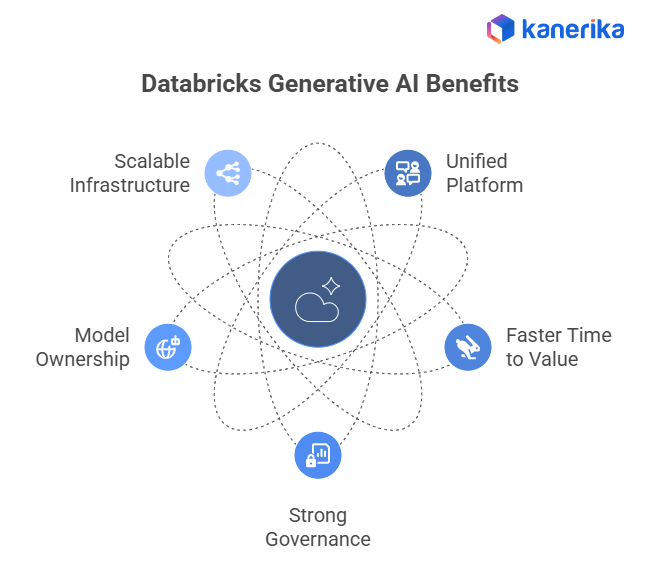

Benefits of Databricks Generative AI for Enterprises

1. Unified Platform for Data and AI

Databricks removes silos by integrating data, analytics, and AI into a single system. This lets data engineers, analysts, and AI developers collaborate seamlessly, improving overall efficiency and productivity.

2. Faster Time to Value

With pre-built capabilities like Vector Search and Retrieval-Augmented Generation (RAG), as well as access to open foundation models, Databricks accelerates development cycles. Organizations can prototype and deploy applications quickly without building every component from scratch.

3. Strong Governance and Compliance

Enterprises benefit from built-in governance, audit trails, and access management through Unity Catalog. These support data privacy, model traceability, and regulatory compliance, all of which are essential in industries such as finance, healthcare, and government.

4. Flexibility in Model Ownership

Databricks gives businesses full control over their models. Teams can fine-tune open-source or proprietary LLMs within their own environment, retain ownership of their intellectual property, and customize models to meet unique business requirements.

5. Scalable and Reliable Infrastructure

The Databricks Lakehouse Platform supports large-scale workloads with optimized compute and storage, making it suitable for enterprise-level AI deployments. It delivers scalability and performance without compromising governance or cost control.

Overall, Databricks Generative AI helps enterprises put AI into action faster, maintain strong governance, and get measurable business value from their data and models.

Use Cases and Industry Applications of Databricks Generative AI

Databricks Generative AI enables organizations to unlock new possibilities across industries by turning raw data into intelligent, useful outputs. Because data, analytics, and AI share one connected environment, enterprises can develop scalable, production-ready applications tailored to their specific domains.

1. Text Generation and Language Applications

Databricks supports a wide range of language-based generative AI use cases, including chatbots, document summarization, and language translation. By using large language models (LLMs) fine-tuned on enterprise data, businesses can automate communication, boost customer engagement, and streamline information access.

2. Synthetic Data Generation

Organizations often face challenges with limited or sensitive data. Databricks Generative AI can help by creating synthetic datasets for model training, testing, and validation. These datasets are designed to mimic real-world scenarios without exposing confidential information, making them especially useful for regulated sectors such as finance and healthcare.
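
To make the idea concrete, the sketch below is the simplest possible synthetic-data approach: it samples each column independently from the values observed in a real table. It is a hypothetical illustration, not a Databricks feature, and it has two important limits: it discards relationships between columns, and because it reuses real values it can still reveal rare ones, so it offers no privacy guarantee on its own. Production approaches typically use trained generative models plus a privacy evaluation.

```python
import random


def synthesize(rows, n, seed=0):
    """Sample n synthetic rows by drawing each column independently
    from the values observed in that column of the real rows."""
    if not rows:
        raise ValueError("need at least one real row to sample from")
    rng = random.Random(seed)  # seeded so results are reproducible
    columns = list(rows[0].keys())
    pools = {col: [row[col] for row in rows] for col in columns}
    return [{col: rng.choice(pools[col]) for col in columns} for _ in range(n)]


# Hypothetical customer table for illustration.
real = [
    {"age": 34, "plan": "basic"},
    {"age": 51, "plan": "premium"},
    {"age": 29, "plan": "basic"},
]
synth = synthesize(real, 5, seed=42)
```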

3. Retrieval-Augmented Generation (RAG)

One of Databricks’ standout features is its RAG framework, which enriches model responses with enterprise-specific data. By combining Vector Search with embeddings of enterprise knowledge, organizations can make their AI assistants and applications deliver more relevant, accurate, and context-aware answers.
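
The final step of a RAG flow is assembling retrieved passages into the prompt. This sketch numbers each passage with its source so the model can cite it, and instructs the model to answer only from that context. The prompt wording, the `source`/`text` fields, and `build_grounded_prompt` are illustrative assumptions, not a Databricks template.

```python
def build_grounded_prompt(question, passages):
    """Assemble a RAG prompt that numbers each retrieved passage with its
    source and instructs the model to answer only from that context."""
    context = "\n".join(
        f"[{i}] ({p['source']}) {p['text']}" for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the numbered context below. "
        "Cite passage numbers, and say you don't know if the answer "
        "is not in the context.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical passages, as a retriever might return them.
passages = [
    {"source": "refund_policy.pdf", "text": "Refunds are issued within 30 days of delivery."},
    {"source": "shipping_faq.md", "text": "Shipping to Europe takes 5 to 7 business days."},
]
prompt = build_grounded_prompt("When are refunds issued?", passages)
```

Keeping source labels in the prompt lets the application show users where an answer came from, which supports the accuracy goals described above.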

4. Code Generation and Automation

Databricks helps developers use generative AI for code creation, data pipeline automation, and query optimization. This lets teams speed up data engineering tasks, improve efficiency, and reduce manual errors, especially in large-scale projects involving data transformation and analytics.

5. Industry-Specific Applications

- Finance: Create automated investment reports, analyze market trends, and power personalized financial advisory tools.

- Healthcare: Summarize patient records, assist in drug discovery, and automate diagnostic report creation while maintaining data security.

- Retail: Personalize product recommendations, automate marketing content, and analyze consumer sentiment for better decision-making.

Across these use cases, Databricks Generative AI helps enterprises achieve greater productivity, innovation, and operational efficiency.

Kanerika and Databricks: Powering Enterprise Innovation with Generative AI

At Kanerika, we combine deep expertise in Generative AI and data engineering with the advanced capabilities of the Databricks Data Intelligence Platform to help enterprises accelerate AI adoption. As a trusted Databricks partner, we guide organizations through every stage, from strategy and implementation to optimization, ensuring measurable impact and long-term scalability.

Our team develops custom AI applications powered by Databricks’ unified environment, including LLM integration, Retrieval-Augmented Generation (RAG), and Vector Search. These solutions enable enterprises to deploy intelligent chatbots, automate workflows, create high-quality content, and deliver richer data-driven insights.

Kanerika’s proven track record spans industries including finance, healthcare, manufacturing, and retail. Our Databricks-based Generative AI solutions have helped clients reduce costs, improve efficiency, and unlock new opportunities for innovation while maintaining security, transparency, and control over their data.

FAQs

1. What is Databricks Generative AI?

Databricks Generative AI is the set of capabilities within the Databricks Lakehouse Platform that lets users build and deploy applications powered by large language models (LLMs). It helps organizations use their own data securely to create AI-driven tools such as chatbots, document assistants, and code generators.

2. What are the main use cases?

Businesses use Databricks Generative AI for automating workflows, enhancing analytics, building conversational AI, generating summaries, and improving decision-making. It is commonly applied in customer support, data analysis, and internal knowledge management systems.

3. How does Databricks support Generative AI development?

Databricks offers tools for data preparation, vector storage, model fine-tuning, and deployment within a single environment. It includes Mosaic AI, Databricks’ suite of generative AI tooling, and supports integration with external APIs and model providers such as OpenAI and Anthropic.

4. What challenges should teams be aware of?

Common challenges include ensuring data quality, maintaining compliance, managing costs, and securing sensitive information. Teams also need to monitor model outputs to prevent inaccuracies or bias in generated results.

5. Do users need AI expertise to get started?

Not necessarily. Databricks provides guided tools, templates, and documentation to help even non-experts build generative AI solutions. However, having basic knowledge of data engineering, machine learning, or prompt design can make implementation smoother.