Enterprise data teams are under pressure to support AI, real‑time analytics, and ever‑growing data volumes while keeping costs under control.

Moving from a traditional data warehouse to a data lake or lakehouse is now a core modernization pattern for organizations that want flexibility, scalability, and advanced analytics without losing governance.

This guide offers a practical, step‑by‑step data warehouse to data lake migration playbook based on real implementation patterns, designed to help you modernize with low risk and measurable business value.

Who This Guide Is For

This article is written for decision‑makers responsible for data platforms and analytics outcomes: CIOs, CDOs, heads of data engineering, and analytics leaders.

If you currently run an on‑prem or cloud data warehouse and want to move towards a data lake, data lakehouse, or Microsoft Fabric without disrupting business operations, this guide is for you.

Rather than trying to cover all forms of data migration, this playbook focuses specifically on the warehouse to lake path, complementing separate content on generic data migration, data lake implementation, and data lake vs data warehouse comparisons.

Key Takeaways

- Businesses are shifting from traditional data warehouses to flexible, scalable data lakes for real-time analytics and AI integration.

- Data lakes offer cost efficiency, scalability, and support for structured and unstructured data, unlike rigid warehouse systems.

- Migration enables faster insights, machine learning adoption, and better handling of large, diverse datasets.

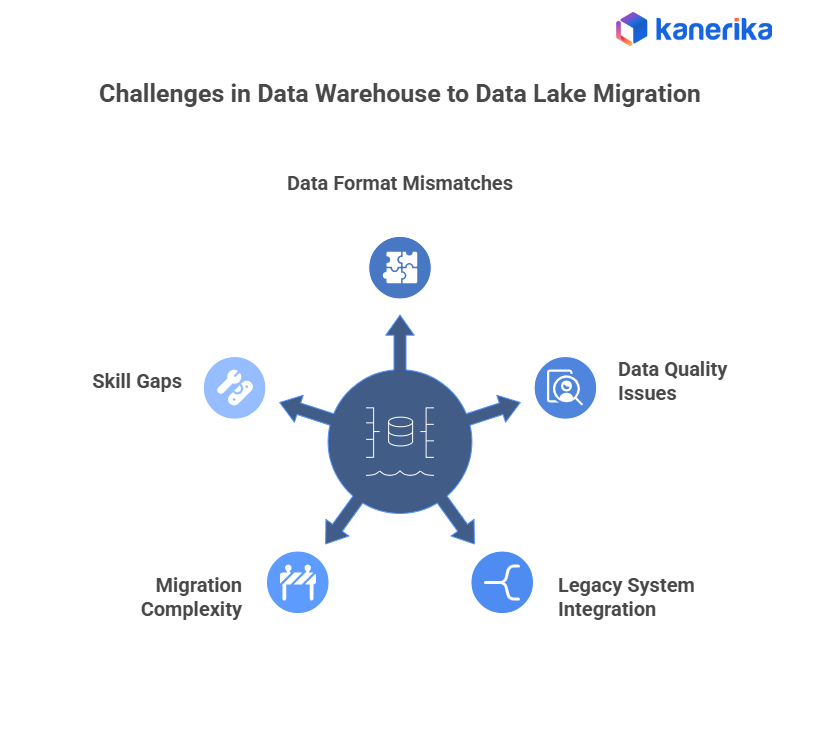

- Challenges include data format mismatches, governance issues, legacy integration, and skill gaps.

- A well-planned migration involves assessing architecture, defining goals, selecting platforms, and enforcing governance.

- Tools like Databricks, AWS Glue, Azure Synapse, and Google Dataflow simplify data movement and management.

- Kanerika helps organizations modernize data systems with secure, compliant, and AI-ready data lake and fabric solutions.

When Does Data Warehouse to Data Lake Migration Make Sense?

Not every organization needs to fully replace its data warehouse, and a hybrid approach often delivers the best risk–reward balance.

However, shifting at least part of the analytical workload from warehouse to data lake becomes compelling when one or more of these signals appear.

- Storage costs keep climbing as you retain years of historical data in a warehouse optimized for compute rather than cheap storage.

- New data types such as IoT, logs, clickstream, sensor feeds, and documents do not fit cleanly into rigid warehouse schemas.

- The organization wants to enable advanced analytics, AI/ML, and real‑time use cases that benefit from flexible lakehouse architectures.

- There is a push to decouple storage and compute and adopt open formats, such as Parquet files or Delta Lake tables, to reduce lock‑in.

In these situations, a data lake migration strategy helps you lower cost, expand use cases, and prepare for AI while still honoring existing BI and reporting requirements.

Key Differences Between Data Warehouse and Data Lake

| Feature | Data Warehouse | Data Lake |
| --- | --- | --- |
| Data Type | Stores structured data only | Handles structured, semi-structured, and unstructured data |
| Storage Cost | High due to schema enforcement and computation | Low, as it uses object storage systems |
| Schema Approach | Schema-on-write (defined before data loading) | Schema-on-read (defined during data access) |
| Scalability | Limited scalability, costly to expand | Highly scalable, ideal for large datasets |
| Processing Framework | Optimized for SQL queries and BI reporting | Supports big data processing, ML, and AI frameworks |
| Performance | High performance for structured analytical queries | Variable performance depending on data structure and processing |
| Data Freshness | Usually batch-processed and updated periodically | Enables near real-time data ingestion and processing |
| Use Cases | Business reporting, dashboards, compliance analytics | Data science, predictive analytics, IoT, and AI-driven insights |
| Cost Model | Expensive for large data volumes | More cost-effective for massive and diverse datasets |
| Integration | Works best with BI tools | Integrates easily with analytics, ML, and data visualization tools |
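
The schema-on-write versus schema-on-read distinction is easier to see in a small example. The following is a minimal sketch using only the Python standard library: an in-memory SQLite table stands in for the warehouse and a list of JSON lines stands in for raw files in a lake. The `orders` table, its columns, and the `read_orders` helper are illustrative assumptions, not part of any specific platform.

```python
import json
import sqlite3

# Schema-on-write: the table definition exists before any data is loaded,
# and rows that violate it are rejected at load time.
warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE orders (order_id INTEGER NOT NULL, amount REAL NOT NULL)"
)
warehouse.execute("INSERT INTO orders VALUES (1, 19.99)")


def try_insert_incomplete_row() -> bool:
    """Return True if the warehouse accepts a row with a missing amount."""
    try:
        warehouse.execute("INSERT INTO orders (order_id) VALUES (2)")
        return True
    except sqlite3.IntegrityError:
        return False


# Schema-on-read: raw records land as-is (here, JSON lines); structure is
# applied only when a consumer reads them.
raw_lake_lines = [
    '{"order_id": 1, "amount": 19.99}',
    '{"order_id": 2, "channel": "mobile"}',  # new field, missing amount
]


def read_orders(lines):
    """Project raw records onto the columns this particular reader needs."""
    for line in lines:
        record = json.loads(line)
        yield {
            "order_id": record.get("order_id"),
            "amount": record.get("amount"),  # None when the field is absent
        }
```

The warehouse rejects the incomplete row up front, while the lake accepts it and leaves each reader to decide how to handle the missing `amount`. That flexibility is why governance and quality checks matter more on the lake side.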

Warehouse to Data Lake Migration Lifecycle

The following lifecycle is tailored to data warehouse to data lake migration rather than generic “lift‑and‑shift” projects.

Phase 1: Assessment and Workload Discovery

The goal of this phase is to understand what exists today and which parts of your warehouse are actually good candidates for the lake.

Key actions:

- Inventory schemas, tables, materialized views, ETL jobs, SSIS/other pipelines, and downstream reports or dashboards.

- Categorize workloads into:

  - Stay in warehouse (highly regulated, extremely latency‑sensitive, or not cost‑effective to move).

  - Move to data lake or lakehouse (historical, semi‑structured, or AI‑oriented workloads).

  - Run in parallel during transition (critical reporting and executive dashboards).

- Profile data: volumes, update frequency, dependencies, and quality issues that could be magnified by migration.

This assessment becomes the backbone of your warehouse to lake modernization roadmap, making migration predictable instead of ad‑hoc.
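
The three-way categorization above can be captured as a simple rule set so that it is applied consistently across hundreds of tables and reports. This is a minimal sketch: the `Workload` fields, the rule order, and the `review_manually` fallback are assumptions made for illustration, and a real assessment would weigh cost and dependency data as well.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    regulated: bool = False          # subject to strict compliance controls
    latency_sensitive: bool = False  # needs consistently fast interactive response
    business_critical: bool = False  # core reporting, executive dashboards
    historical: bool = False         # rarely updated archive data
    semi_structured: bool = False    # logs, JSON, clickstream, documents
    ai_oriented: bool = False        # feeds ML or data science work


def categorize(w: Workload) -> str:
    """Assign a workload to one of the assessment buckets (illustrative rules)."""
    if w.regulated or w.latency_sensitive:
        return "stay_in_warehouse"
    if w.business_critical:
        return "run_in_parallel"
    if w.historical or w.semi_structured or w.ai_oriented:
        return "move_to_lake"
    return "review_manually"  # no rule matched; needs a human decision


inventory = [
    Workload("regulatory_reporting", regulated=True),
    Workload("executive_dashboard", business_critical=True),
    Workload("clickstream_archive", historical=True, semi_structured=True),
]
plan = {w.name: categorize(w) for w in inventory}
```

Keeping the rules in code makes the roadmap reproducible: when the criteria change, the whole inventory can be re-categorized in one pass.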

Phase 2: Target Architecture Design (Lake / Lakehouse / Fabric)

Rather than copying table structures into a lake, design a coherent target data architecture that aligns with business goals.

Design tasks:

- Choose the primary platform strategy:

  - Azure Data Lake + existing warehouse.

  - Databricks Lakehouse with Delta Lake and Unity Catalog.

  - Microsoft Fabric OneLake with warehouse and lakehouse experiences on unified storage.

- Map warehouse star/snowflake schemas into bronze–silver–gold layers:

  - Bronze: raw landing from source systems or warehouse exports.

  - Silver: cleansed, conformed datasets.

  - Gold: curated, performance‑optimized semantic models aligned with business domains.

- Establish governance and catalog:

  - Decide how data will be cataloged, who owns which domain, and how lineage will be tracked.

A thoughtful architecture phase prevents technical debt and ensures your new data lake migration strategy supports future growth rather than replicating old constraints in a new environment.
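
To make the bronze–silver–gold layering concrete, here is a minimal sketch in plain Python. In practice these steps would typically run on an engine such as Spark over Parquet or Delta tables; the sample rows, column names, and the `to_silver` and `to_gold` helpers are assumptions for illustration only.

```python
from collections import defaultdict

# Bronze: raw landing data, kept exactly as exported (strings, duplicates, bad values).
bronze = [
    {"order_id": "1", "region": " emea ", "amount": "100.50"},
    {"order_id": "2", "region": "EMEA", "amount": "49.50"},
    {"order_id": "2", "region": "EMEA", "amount": "49.50"},  # duplicate export row
    {"order_id": "3", "region": "apac", "amount": "not-a-number"},
]


def to_silver(rows):
    """Cleanse and conform: cast types, normalize values, drop duplicates."""
    seen, silver = set(), []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # a real pipeline would quarantine the row, not discard it
        order_id = int(row["order_id"])
        if order_id in seen:
            continue
        seen.add(order_id)
        silver.append(
            {
                "order_id": order_id,
                "region": row["region"].strip().upper(),
                "amount": amount,
            }
        )
    return silver


def to_gold(silver_rows):
    """Curate a business-facing aggregate: revenue per region."""
    totals = defaultdict(float)
    for row in silver_rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
```

Because bronze is never modified, the silver and gold layers can be rebuilt from it whenever cleansing rules or business definitions change.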

Phase 3: Migration Execution Patterns

Warehouse‑to‑lake migrations tend to succeed through incremental, validated movement rather than one‑shot big‑bang cutovers.

Execution patterns to consider:

- Historical bulk load

  - Export historical warehouse tables and load them into the data lake as Parquet, Delta, or equivalent formats, organizing them by domain and time.

- Direct ingestion from sources using CDC or streaming

  - Instead of always feeding the lake from the warehouse, connect directly to source systems using CDC or streaming to build a future‑proof pipeline.

- Domain‑based migration waves

  - Migrate finance, sales, marketing, risk, operations, etc., in clearly defined waves, each with its own success metrics and sign‑offs.

- ETL/ELT refactoring

  - Re‑implement legacy ETL logic as modular ELT in the lake, using orchestrators and modern transformation engines rather than replicating legacy workflows line by line.

This approach keeps business teams engaged and reduces the risk of breaking mission‑critical analytics while the new platform matures.
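
The CDC pattern mentioned above comes down to replaying an ordered stream of change events against a keyed table. The sketch below shows that core logic in plain Python. The event shape (`seq`, `op`, `key`, `row`) is a hypothetical format chosen for illustration; real CDC tools emit their own formats, and lake table formats usually apply such changes with a merge operation.

```python
def apply_changes(table: dict, events: list) -> dict:
    """Apply CDC events to a table keyed by primary key, in sequence order."""
    result = dict(table)  # leave the caller's snapshot untouched
    for event in sorted(events, key=lambda e: e["seq"]):
        key = event["key"]
        if event["op"] in ("insert", "update"):
            result[key] = event["row"]  # upsert: the latest version wins
        elif event["op"] == "delete":
            result.pop(key, None)       # tolerate deletes for unknown keys
        else:
            raise ValueError(f"unknown op: {event['op']}")
    return result


# Snapshot produced by the historical bulk load.
customers = {1: {"name": "Acme", "tier": "silver"}}

# Changes captured from the source system afterwards (may arrive out of order).
events = [
    {"seq": 3, "op": "delete", "key": 2, "row": None},
    {"seq": 1, "op": "update", "key": 1, "row": {"name": "Acme", "tier": "gold"}},
    {"seq": 2, "op": "insert", "key": 2, "row": {"name": "Globex", "tier": "bronze"}},
]

current = apply_changes(customers, events)
```

Sorting by sequence number before applying is what keeps the result correct when events arrive out of order, which is common with streaming transports.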

Phase 4: Testing, Validation, and Parallel Run

Validation is where a data lake migration either builds trust or fails to gain adoption.

A structured parallel run helps prove that the new lake or lakehouse is ready for production workloads.

Typical activities:

- Define a set of golden KPIs, aggregates, and sample queries to compare between the warehouse and the lake.

- Run both platforms in parallel for a defined period, logging discrepancies and systematically resolving them.

- Implement automated data quality checks, validation reports, and issue dashboards for ongoing monitoring.

By the end of this phase, key stakeholders should be confident that the new platform is at least as reliable as the old one—and more capable.
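
A parallel run can be automated by executing the same golden queries against both platforms and reporting any differences. The sketch below uses two in-memory SQLite databases as stand-ins for the warehouse and the lake query engine. The `sales` table, the query set, and the tolerance value are assumptions for illustration.

```python
import sqlite3


def make_db(rows):
    """Build a stand-in database containing a single sales table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    return conn


# Golden KPIs: each query must return a single numeric value.
GOLDEN_QUERIES = {
    "row_count": "SELECT COUNT(*) FROM sales",
    "total_amount": "SELECT COALESCE(SUM(amount), 0) FROM sales",
    "distinct_regions": "SELECT COUNT(DISTINCT region) FROM sales",
}


def reconcile(warehouse, lake, tolerance=0.01):
    """Return {kpi: (warehouse_value, lake_value)} for every KPI that differs."""
    mismatches = {}
    for name, sql in GOLDEN_QUERIES.items():
        warehouse_value = warehouse.execute(sql).fetchone()[0]
        lake_value = lake.execute(sql).fetchone()[0]
        if abs(warehouse_value - lake_value) > tolerance:
            mismatches[name] = (warehouse_value, lake_value)
    return mismatches


warehouse = make_db([("EMEA", 100.0), ("APAC", 50.0)])
lake_complete = make_db([("EMEA", 100.0), ("APAC", 50.0)])
lake_missing_row = make_db([("EMEA", 100.0)])
```

An empty result from `reconcile` means the platforms agree on every golden KPI. Any non-empty result gives the team a concrete discrepancy to log and resolve before cut-over.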

Phase 5: Cut‑Over, Optimization, and Decommissioning

After validation, workloads can be gradually cut over from warehouse to lake or lakehouse.

- Decommission or right‑size warehouse resources for workloads that fully move to the lake, starting with non‑critical or cost‑heavy analytics.

- Optimize lake performance using partitioning, clustering, caching, and suitable indexing, depending on your chosen platform.

- Continue refining governance, access models, and monitoring as new data sources and use cases are added.

This final phase turns the migration into a long‑term modernization journey rather than a one‑time infrastructure swap.
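
Partitioning is usually the first optimization lever in a lake. One common convention is a Hive-style directory layout organized by domain and date, which lets query engines skip folders that cannot match a filter. The sketch below illustrates the idea with plain strings; the path scheme and the `partition_path` and `prune` helpers are assumptions, and real engines perform this pruning internally.

```python
from datetime import date


def partition_path(domain: str, table: str, day: date) -> str:
    """Build a Hive-style partition path organized by domain and date."""
    return (
        f"{domain}/{table}/"
        f"year={day.year}/month={day.month:02d}/day={day.day:02d}"
    )


def prune(paths, year: int, month: int):
    """Keep only the partitions a query filtered on year and month must read."""
    wanted = f"/year={year}/month={month:02d}/"
    return [p for p in paths if wanted in p]


all_partitions = [
    partition_path("sales", "orders", date(2024, 2, 28)),
    partition_path("sales", "orders", date(2024, 3, 5)),
    partition_path("sales", "orders", date(2024, 3, 6)),
]
march_partitions = prune(all_partitions, 2024, 3)
```

A query for March 2024 reads two of the three partitions here. The partition columns should match the filters your most frequent queries actually use.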

Challenges in Data Warehouse to Data Lake Migration

Migrating from a data warehouse to a data lake can unlock flexibility and scalability, but it also brings several technical and operational challenges. The transition involves managing diverse data formats, standing up new infrastructure, and upskilling the team.

1. Data Format and Schema Mismatches

Data warehouses enforce a rigid schema-on-write model, whereas data lakes apply schema-on-read. Converting structured tables into columnar file formats such as Parquet or ORC can therefore surface compatibility issues, for example data types that do not map one-to-one, and these multiply when streaming or semi-structured data is added.
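
One way to catch such mismatches early is to compare incoming records against the schema that downstream consumers expect. The following is a minimal sketch in plain Python; the `EXPECTED_SCHEMA` columns and the issue messages are illustrative assumptions, not a standard format.

```python
# Columns and Python types that downstream consumers rely on (illustrative).
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "created_at": str}


def schema_issues(record: dict, expected: dict = EXPECTED_SCHEMA) -> list:
    """Describe every way a record deviates from the expected schema."""
    issues = []
    for column, expected_type in expected.items():
        if column not in record:
            issues.append(f"missing column: {column}")
        elif not isinstance(record[column], expected_type):
            issues.append(
                f"type mismatch: {column} expected {expected_type.__name__}, "
                f"got {type(record[column]).__name__}"
            )
    # Columns the source has started sending that nobody has modeled yet.
    for column in sorted(record.keys() - expected.keys()):
        issues.append(f"unexpected column: {column}")
    return issues


clean = {"order_id": 1, "amount": 9.5, "created_at": "2024-01-01"}
drifted = {"order_id": "1", "amount": 9.5, "channel": "web"}
```

Running a check like this at ingestion time turns silent schema drift into an explicit signal that can be logged or routed to a quarantine area.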

2. Data Quality and Governance

Without proper validation and lineage tracking, the ingestion of raw data can lead to inconsistencies. Therefore, establishing governance, metadata catalogs, and quality rules is crucial to maintain trust in migrated datasets.
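
Quality rules are most useful when they are written down as executable checks. The sketch below shows three common rule types (not-null, uniqueness, and value range) in plain Python. The column names, thresholds, and rule labels are assumptions for illustration; dedicated data quality tools offer much richer rule sets.

```python
def null_violations(rows, column):
    """Indexes of rows where the column is missing or None."""
    return [i for i, row in enumerate(rows) if row.get(column) is None]


def duplicate_violations(rows, column):
    """Indexes of rows that repeat a value already seen in the column."""
    seen, duplicates = set(), []
    for i, row in enumerate(rows):
        value = row.get(column)
        if value in seen:
            duplicates.append(i)
        seen.add(value)
    return duplicates


def range_violations(rows, column, low, high):
    """Indexes of rows whose non-null value falls outside [low, high]."""
    return [
        i
        for i, row in enumerate(rows)
        if row.get(column) is not None and not (low <= row[column] <= high)
    ]


def run_quality_rules(rows):
    """Run all rules and return only those with at least one violation."""
    report = {
        "customer_id is not null": null_violations(rows, "customer_id"),
        "customer_id is unique": duplicate_violations(rows, "customer_id"),
        "age between 0 and 120": range_violations(rows, "age", 0, 120),
    }
    return {rule: indexes for rule, indexes in report.items() if indexes}


sample = [
    {"customer_id": 1, "age": 34},
    {"customer_id": 1, "age": 200},
    {"customer_id": None, "age": 28},
]
quality_report = run_quality_rules(sample)
```

Reporting row indexes rather than a pass/fail flag makes it easier to trace each violation back to its source record.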

3. Integration With Legacy Systems

Legacy pipelines and BI tools built for relational databases may not work smoothly with data lakes. As a result, redesigning ETL workflows and updating dependent systems can add significant complexity.

4. Migration Complexity and Downtime

Large-scale migrations can cause delays or downtime. Consequently, maintaining dual systems temporarily and planning rollback options are key to minimizing disruption.

5. Skill and Tool Gaps

Data lake environments demand skills in Spark, distributed computing, and cloud storage. Moreover, teams may need training and new tools for monitoring, cataloging, and security.

Popular Tools and Technologies for Migration

Choosing the right tools is crucial for a smooth and efficient migration from a data warehouse to a data lake. The best choice depends on your existing cloud system, data volume, and integration needs. Here’s an overview of the widely used platforms and technologies that simplify and accelerate migration.

1. AWS Ecosystem

AWS Glue and AWS Lake Formation are core tools for building and managing data lakes on AWS.

- AWS Glue automates ETL processes, handles schema discovery, and supports serverless data movement.

- Lake Formation simplifies setup, access control, and cataloging, ensuring secure and governed data storage.

2. Azure Ecosystem

For Microsoft users, Azure Data Factory and Azure Synapse Analytics provide end-to-end data integration and analytics.

- Data Factory enables scalable ETL/ELT workflows with drag-and-drop pipeline design.

- Synapse brings together big data and data warehouse capabilities, making it easier to query both historical and real-time data.

3. Google Cloud Ecosystem

Google Cloud Dataflow and Dataproc are built for large-scale batch and stream processing.

- Dataflow runs batch and streaming data pipelines written with Apache Beam.

- Dataproc provides a managed Spark and Hadoop environment for flexible analytics.

4. Unified Analytics Platforms

Databricks is a leading choice for enterprises moving to a lakehouse setup. It combines scalable storage, Spark-based data processing, and built-in ML tools, providing a single platform for ETL, analytics, and AI. Snowflake can also complement a data lake setup by providing a high-performance SQL-based engine for querying curated datasets.

FLIP by Kanerika further enhances this ecosystem by simplifying complex data migration and modernization projects. It offers automated data mapping, quality checks, and schema transformation to accelerate warehouse-to-lake migrations. Designed for flexibility, FLIP integrates seamlessly with cloud ecosystems like AWS, Azure, and GCP, enabling faster, more reliable data movement with minimal manual intervention.

5. ETL and Integration Tools

Solutions like Informatica, Talend, and Matillion simplify complex data transformations and ensure consistency during migration. Additionally, they support automation, data quality checks, and integration across multiple systems.

6. Open Source and Storage Technologies

Open table formats such as Apache Hudi, Delta Lake, and Apache Iceberg bring ACID transactions, schema evolution, and time travel to data lakes, making them more reliable and production-ready. For workflow orchestration, Apache Airflow and Prefect are popular choices for scheduling, monitoring, and managing pipelines.
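
At their core, orchestrators resolve task dependencies into a valid execution order. The sketch below illustrates that idea with the standard library's `graphlib` module (Python 3.9+). It is not Airflow or Prefect code, and the task names are hypothetical migration steps chosen for illustration.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it can start.
PIPELINE = {
    "export_warehouse_tables": set(),
    "load_bronze": {"export_warehouse_tables"},
    "refresh_catalog": {"load_bronze"},
    "build_silver": {"load_bronze"},
    "build_gold": {"build_silver"},
    "validate_against_warehouse": {"build_gold"},
}


def execution_order(pipeline: dict) -> list:
    """Return one valid order in which every task runs after its dependencies."""
    return list(TopologicalSorter(pipeline).static_order())


order = execution_order(PIPELINE)
```

Tasks with no dependency between them, such as `refresh_catalog` and `build_silver` here, may appear in either order. A real orchestrator would run them in parallel and add scheduling, retries, and monitoring on top.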

Kanerika: Empowering Seamless Data Warehouse to Data Lake Migration

At Kanerika, we help enterprises modernize their data landscape by choosing the architecture that fits their operational needs, data complexity, and long-term analytics goals. Traditional data warehouses are effective for managing structured, historical data used in reporting and business intelligence, but they often fall short in today’s dynamic, real-time environments. This is where data lakes and data fabric setups come into play, offering the flexibility to handle diverse, unstructured, and streaming data sources efficiently.

As a Microsoft Solutions Partner for Data & AI and an early user of Microsoft Fabric, Kanerika delivers unified, future-ready data platforms. We focus on designing intelligent setups that combine the strengths of data warehouses and data lakes. For clients focused on structured analytics and reporting, we establish robust warehouse models. For those managing distributed, real-time, or unstructured data, we create scalable data lake and fabric layers that ensure easy access, automated governance, and AI readiness.

All our implementations comply with global standards, including ISO 27001, ISO 27701, SOC 2, and GDPR, ensuring security and compliance throughout the migration process. With deep expertise in both traditional and modern systems, Kanerika helps organizations transition from fragmented data silos to unified, intelligent platforms, unlocking real-time insights and accelerating digital transformation without compromise.

FAQs

What is the difference between a data warehouse and a data lake?

A data warehouse stores structured, processed data for reporting and analytics, while a data lake can store raw, semi-structured, and unstructured data, enabling advanced analytics, AI, and real-time insights.

Why should organizations migrate from a data warehouse to a data lake?

Migration helps reduce storage costs, handle diverse data types, improve scalability, and support advanced analytics and machine learning workloads that traditional warehouses cannot efficiently manage.

What are the key challenges in data warehouse to data lake migration?

Common challenges include data quality issues, schema mismatches, security and governance setup, integration with existing tools, and ensuring minimal downtime during migration.

Which tools and platforms are best for data warehouse to data lake migration?

Popular choices include AWS Glue, Azure Data Factory, Google Cloud Dataflow, Databricks, Snowflake, and migration accelerators like FLIP by Kanerika, which automate data mapping, validation, and transformation.

How long does a typical data warehouse to data lake migration take?

The timeline depends on data volume, complexity, and automation tools used. For most enterprises, it can range from a few weeks (with automation tools) to several months for large-scale migrations.