Leading enterprises are rapidly modernizing their data infrastructure, and Databricks has become a preferred platform for migrating legacy systems. In 2025, Databricks announced enhanced migration tools that simplify moving large-scale, on-premises databases and data warehouses to its Lakehouse platform. Companies like Shell, T-Mobile, and Trek Bicycle are leveraging these capabilities to consolidate fragmented data systems, improve analytics performance, and enable AI-driven insights across their operations.

Industry reports show that more than 65% of large enterprises plan to migrate at least part of their legacy data infrastructure to cloud-native platforms by 2026. Moreover, early adopters of Databricks report up to 40% faster query performance, a 30% reduction in data integration costs, and improved governance through unified data catalogs. These improvements demonstrate the growing importance of modernizing legacy systems for business agility and competitive advantage.

This guide explains how migrating legacy systems to Databricks works, what tools and strategies make the process smoother, and how organizations are achieving faster, smarter analytics with measurable business outcomes.

Key Takeaways

- Databricks has emerged as a leading choice for modernizing legacy data systems, driven by new migration tools and proven improvements in speed, cost efficiency, and analytics performance.

- Enterprises are moving away from siloed, outdated infrastructure because it limits scalability, slows reporting, and blocks advanced analytics and AI initiatives that modern platforms support.

- Successful migration requires a clear assessment of current systems, strong governance, phased execution, and continuous validation to ensure accuracy, performance, and compliance.

- Cloud platforms, ETL and orchestration tools, data governance solutions, and modern BI tools collectively form the technical foundation that makes large-scale migrations efficient and reliable.

- Organizations often struggle with legacy complexity, data quality issues, skills gaps, and user adoption challenges, making experienced migration partners crucial for minimizing disruption.

- Accelerators like Kanerika’s FLIP significantly reduce migration timelines by automating workflow conversion, enforcing data quality, and enabling smooth movement to Databricks and other modern lakehouse architectures.

What Does Migrating Legacy Systems to Databricks Mean for Your Business?

Migrating legacy systems to Databricks involves moving your organization’s data and analytics from outdated, often siloed infrastructure to a modern, cloud-based lakehouse platform. This transformation changes how data is stored, processed, and accessed, providing a centralized environment for enterprise-wide analytics. Databricks supports handling both structured and unstructured data through its Delta Lake architecture and allows organizations to run complex analytics workflows efficiently.

The Databricks Lakehouse architecture combines the best features of data warehouses and data lakes. It provides ACID transactions and schema enforcement like traditional warehouses, while also supporting unstructured data and machine learning workloads like data lakes. This unified approach eliminates the need to maintain separate systems for different data types and use cases.

Key aspects of this migration include:

- Centralized Data Management: Consolidates multiple data sources into a single lakehouse platform, reducing duplication and inconsistencies across systems while providing a unified view of enterprise data.

- Real-Time Processing: Supports processing and analysis of large datasets in real time through Apache Spark and structured streaming, enabling timely insights that drive business decisions.

- Scalability: Separates elastic compute from storage, allowing businesses to manage growing data volumes without investing in additional hardware or overprovisioning capacity.

- Collaboration: Enables cross-functional teams to work on the same datasets through shared notebooks and workspaces, fostering better collaboration between analytics, engineering, and business teams.

- Unified Analytics Environment: Integrates batch and streaming data processing, SQL analytics, and machine learning initiatives on a single platform, reducing complexity and improving productivity.

- Delta Lake Foundation: Uses Delta Lake as the storage layer, providing reliability features such as ACID transactions, time travel, and schema evolution that legacy systems often lack.

How Do You Know It Is Time to Move from Legacy Systems to Databricks?

Recognizing when to migrate to Databricks requires evaluating system limitations, data complexity, and business needs. Organizations often encounter bottlenecks in reporting, slow query performance, and difficulty in consolidating multiple data sources when relying on legacy platforms.

The decision to migrate typically stems from specific pain points that impact business operations:

- Performance Bottlenecks: Legacy systems struggle with large datasets, causing delays in reporting and analytics.

- Data Fragmentation: Multiple silos across ERP, CRM, and operational systems make it difficult to achieve a unified view.

- Rapid Growth in Data Volumes: Increasing volumes of structured and unstructured data exceed the capabilities of legacy systems.

- Advanced Analytics Requirements: Need for predictive analytics, machine learning, or AI-driven insights that legacy platforms cannot support.

- Collaboration Challenges: Difficulty enabling teams from different departments to access and analyze data consistently.

- Strategic Alignment: Business goals demand faster insights, agility, and a modern platform that supports innovation and future growth.

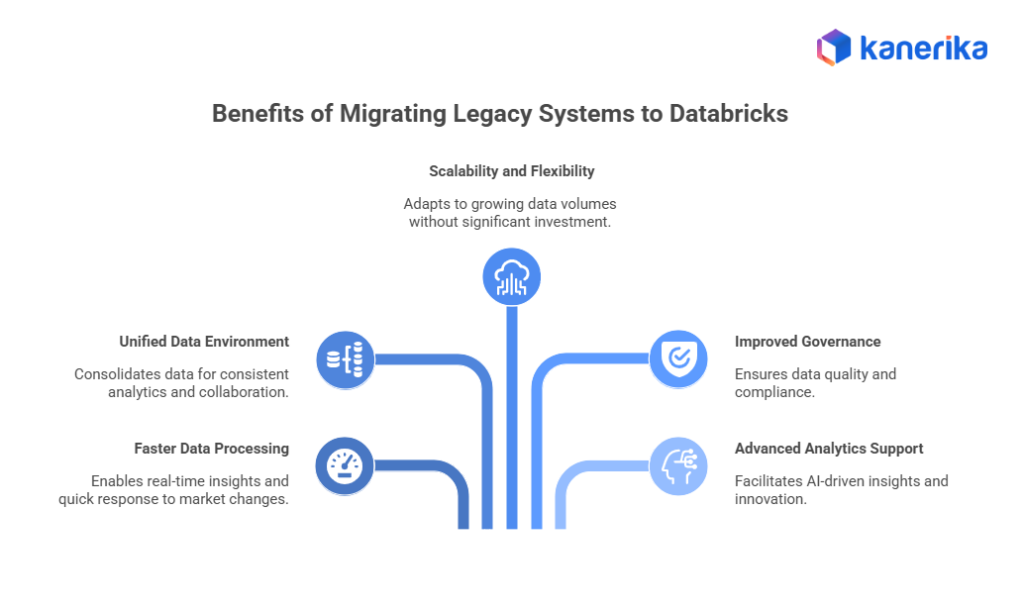

Benefits of Migrating Legacy Systems to Databricks

Migrating from legacy systems to Databricks allows organizations to modernize their analytics environment and unlock the potential of their data. Enterprises gain faster access to information, improved team collaboration, and the ability to efficiently analyze large volumes of structured and unstructured data. Moreover, the lakehouse architecture supports advanced analytics workflows, enabling businesses to make strategic decisions based on reliable and integrated data sources.

1. Faster Data Processing and Real-Time Insights

Databricks accelerates the processing of large datasets, enabling organizations to generate insights in real time. This allows businesses to respond quickly to operational issues, monitor trends, and adapt to changing market conditions. By reducing the time between data capture and actionable insights, enterprises can make more timely and informed decisions.

2. Unified Data and Analytics Environment

Legacy systems often store information across multiple silos, making it difficult to get a comprehensive view of the business. Migrating to Databricks consolidates data into a single platform, enabling analytics teams to work from a consistent source of truth. This integration improves collaboration between departments and supports enterprise-wide reporting and analysis.

3. Scalability and Flexibility

Databricks provides elastic compute and storage capabilities, which allow organizations to scale resources dynamically as data volumes grow. This flexibility ensures analytics workloads are handled efficiently without requiring significant upfront infrastructure investments. The platform can adapt to evolving business requirements and new analytics initiatives.

4. Improved Governance and Compliance

A modern analytics platform enables better data governance and compliance. Centralized management ensures that data quality, accuracy, and security standards are maintained, reducing the risk of errors or breaches. Enterprises can meet regulatory requirements more effectively and establish a trusted foundation for analytics.

5. Support for Advanced Analytics and Innovation

Databricks provides the infrastructure for predictive modeling, machine learning, and advanced analytics. By modernizing their systems, organizations can leverage AI-driven insights, optimize operations, and innovate products or services.

The platform supports the entire machine learning lifecycle, from data preparation through model deployment and monitoring, without requiring data movement to separate ML platforms. This positions businesses to gain a competitive edge in increasingly data-driven markets where the speed of innovation matters.

How to Plan a Successful Legacy Systems to Databricks Migration

Migrating from legacy systems to Databricks is a major organizational change that requires methodical planning. A well-planned migration allows businesses to modernize analytics, unify data, and prepare for advanced data-driven initiatives. Poor planning can lead to data loss, downtime, or wasted resources, undermining confidence in the platform.

1. Assess Current Systems and Data Landscape

Start by comprehensively evaluating existing infrastructure, including data warehouses, ETL pipelines, reporting systems, and databases. Document data dependencies, workflows, and quality issues that will need to be addressed during migration. Identify critical datasets and categorize them by type (structured, semi-structured, or unstructured).

Databricks provides automated discovery tools that can profile your existing workloads and estimate migration complexity. Use these tools to understand the scope of your migration and identify potential challenges early. This assessment lays the foundation for a smooth migration by providing visibility into what needs to be moved and how it’s currently being used.

2. Define Objectives and Success Metrics

Clearly define the goals of migration beyond just “moving to the cloud.” Objectives might include improving data accessibility by enabling self-service analytics, supporting advanced analytics and ML initiatives, or enabling real-time reporting for operational decisions. Establish measurable KPIs, such as time to refresh data, query latency, error rates, or cost reduction, to demonstrate progress.

These metrics help track the success of migration and measure return on investment throughout the process. They also provide objective criteria for evaluating whether the migration is meeting business needs. Share these metrics with stakeholders regularly to maintain visibility and support for the initiative.
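As a rough illustration of tracking such KPIs, the sketch below compares a legacy baseline with post-migration measurements. The `Kpi` class, the metric names, and every number are hypothetical placeholders, not figures from any real migration.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    name: str
    baseline: float      # value measured on the legacy system
    current: float       # value measured on the migrated workload
    target: float        # value agreed with stakeholders
    lower_is_better: bool = True

    def improvement_pct(self) -> float:
        """Percentage improvement relative to the legacy baseline."""
        change = self.baseline - self.current
        if not self.lower_is_better:
            change = -change
        return round(100.0 * change / self.baseline, 1)

    def target_met(self) -> bool:
        if self.lower_is_better:
            return self.current <= self.target
        return self.current >= self.target


# Illustrative numbers only; replace with measurements from your own systems.
kpis = [
    Kpi("data_refresh_minutes", baseline=120, current=45, target=60),
    Kpi("p95_query_latency_seconds", baseline=30, current=20, target=15),
    Kpi("pipeline_success_rate_pct", baseline=92, current=99, target=98,
        lower_is_better=False),
]

for kpi in kpis:
    status = "met" if kpi.target_met() else "not met"
    print(f"{kpi.name}: {kpi.improvement_pct()}% better than baseline, target {status}")
```

Recording the baseline before any workload moves is the important part: without it, later improvements cannot be quantified.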

3. Plan a Phased Migration Strategy

Avoid migrating all workloads at once, which creates unnecessary risk and complexity. Begin with low-risk or non-critical datasets such as historical or archive data that are less time-sensitive. Use early migration phases as proof-of-concept to validate data integrity, test pipelines, and optimize performance before moving critical workloads.

Databricks supports two primary migration approaches: ETL-first (back-to-front), which builds a solid data foundation before migrating dashboards, and BI-first (front-to-back), which replicates dashboards first to showcase immediate business value. Choose the approach that aligns with your organizational priorities and stakeholder needs.

Gradual migration minimizes disruption and builds confidence in the new platform through demonstrated success. Each phase should deliver tangible value and incorporate lessons learned from previous phases.
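One simple way to turn the phased approach into a concrete plan is to score each workload for risk and group the lowest-risk ones into the earliest waves. The scoring rule, workload names, and wave size below are assumptions made for illustration; a real plan would weigh factors specific to your environment.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    business_critical: bool
    downstream_consumers: int   # reports, apps, or jobs that read this data
    time_sensitive: bool


def risk_score(w: Workload) -> int:
    """Hypothetical scoring rule: a higher score means migrate later."""
    return (
        (3 if w.business_critical else 0)
        + (2 if w.time_sensitive else 0)
        + min(w.downstream_consumers, 5)
    )


def plan_waves(workloads: list[Workload], wave_size: int) -> list[list[str]]:
    """Sort by ascending risk and cut the ordered list into fixed-size waves."""
    ordered = sorted(workloads, key=lambda w: (risk_score(w), w.name))
    return [
        [w.name for w in ordered[i:i + wave_size]]
        for i in range(0, len(ordered), wave_size)
    ]


inventory = [
    Workload("finance_close_reporting", True, 8, True),
    Workload("archived_orders_2015_2019", False, 1, False),
    Workload("marketing_campaign_history", False, 2, False),
    Workload("daily_sales_dashboard", True, 4, True),
]

waves = plan_waves(inventory, wave_size=2)
for number, wave in enumerate(waves, start=1):
    print(f"Wave {number}: {wave}")
```

With this sample inventory, the archive and history datasets land in the first wave and the business-critical reporting workloads in the second.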

4. Ensure Data Quality and Governance

Legacy systems often contain inconsistent or incomplete data accumulated over years of operation. Standardize formats, clean duplicates, and validate datasets before migration to avoid carrying forward quality issues. Establish governance policies using Unity Catalog to maintain data integrity and compliance in the new environment.

Implement data quality checks in your migration pipelines rather than assuming data is clean. Delta Lake’s schema enforcement helps prevent data that does not match the table schema from entering your lakehouse, but source data quality still matters. A clean, well-governed data environment ensures reliable analytics after migration and builds trust in the new platform.
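The standardize, de-duplicate, and validate steps can be sketched in plain Python before they are ported to a pipeline. The field names, accepted date formats, and sample rows here are hypothetical; in practice these checks would usually run inside the ingestion pipeline itself.

```python
from datetime import datetime

REQUIRED_FIELDS = ("customer_id", "email", "signup_date")   # hypothetical schema
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")                      # formats assumed in the legacy source


def standardize(row: dict) -> dict:
    """Trim whitespace, lowercase emails, and normalize dates to ISO 8601."""
    clean = {k: v.strip() if isinstance(v, str) else v for k, v in row.items()}
    if clean.get("email"):
        clean["email"] = clean["email"].lower()
    raw_date = clean.get("signup_date")
    if raw_date:
        for fmt in DATE_FORMATS:
            try:
                clean["signup_date"] = datetime.strptime(raw_date, fmt).date().isoformat()
                break
            except ValueError:
                continue
        else:
            clean["signup_date"] = None   # an unparseable date is treated as missing
    return clean


def check_and_dedupe(rows):
    """Split rows into accepted rows and (row, reason) rejections."""
    seen = set()
    accepted, rejected = [], []
    for row in map(standardize, rows):
        missing = [f for f in REQUIRED_FIELDS if not row.get(f)]
        if missing:
            rejected.append((row, f"missing: {', '.join(missing)}"))
        elif row["customer_id"] in seen:
            rejected.append((row, "duplicate customer_id"))
        else:
            seen.add(row["customer_id"])
            accepted.append(row)
    return accepted, rejected


legacy_rows = [
    {"customer_id": "C001", "email": " Ana@Example.com ", "signup_date": "2021-03-04"},
    {"customer_id": "C001", "email": "ana@example.com", "signup_date": "04/03/2021"},
    {"customer_id": "C002", "email": "", "signup_date": "2020-11-30"},
    {"customer_id": "C003", "email": "li@example.com", "signup_date": "not a date"},
]

accepted, rejected = check_and_dedupe(legacy_rows)
print(f"accepted={len(accepted)}, rejected={len(rejected)}")
for row, reason in rejected:
    print(row["customer_id"], "->", reason)
```

Keeping rejected rows together with a reason, rather than silently dropping them, gives data owners something concrete to fix at the source.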

5. Address Dependencies and Workflow Refactoring

Legacy systems may support multiple downstream applications and reporting tools that depend on specific data formats, schemas, or availability schedules. Map all dependencies thoroughly, refactor workflows as needed, and verify integrations before migrating production workloads. Consider using Databricks Lakehouse Federation to query legacy systems during the transition period.

Running old and new systems in parallel for a period can help ensure a smooth transition and validate that migrated data produces the same results. This parallel operation provides a safety net and builds confidence before fully decommissioning legacy systems.
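Dependency mapping lends itself to a small graph exercise. The sketch below uses Python's standard `graphlib` module to derive a safe migration order and to list everything downstream of a given asset. The asset names and the dependency map are invented for illustration.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical dependency map: each key depends on the assets in its set.
dependencies = {
    "raw_orders": set(),
    "raw_customers": set(),
    "orders_cleaned": {"raw_orders"},
    "customer_360": {"raw_customers", "orders_cleaned"},
    "sales_dashboard": {"orders_cleaned"},
    "churn_model_features": {"customer_360"},
}


def migration_order(deps):
    """Return assets in an order where every upstream asset comes first."""
    try:
        return list(TopologicalSorter(deps).static_order())
    except CycleError as exc:
        raise ValueError(f"Circular dependency found: {exc.args[1]}") from exc


def impacted_by(asset, deps):
    """Everything downstream of `asset`, directly or transitively."""
    impacted, frontier = set(), {asset}
    while frontier:
        frontier = {name for name, ups in deps.items() if ups & frontier} - impacted
        impacted |= frontier
    return impacted


order = migration_order(dependencies)
print("Migration order:", order)
print("Downstream of raw_orders:", sorted(impacted_by("raw_orders", dependencies)))
```

A circular dependency raises an error instead of producing an order, which is usually a sign that a workflow needs refactoring before it can be moved.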

6. Prepare Change Management and Training

Migration affects people and processes as much as technology. Provide training and documentation for data teams and business users that address their specific roles and needs. Communicate the benefits of the new platform clearly and assign roles for data ownership and governance that establish accountability.

Effective change management ensures user adoption and maximizes the value of Databricks. Create internal champions who understand the platform well and can help their colleagues. Provide hands-on training rather than just presentations. Show users how the new platform solves their specific problems better than legacy systems.

7. Validate and Monitor Post-Migration

After migration, run extensive validation to confirm data completeness and accuracy, comparing outputs against the legacy systems. Implement monitoring and observability for pipelines, jobs, and data quality to maintain stable operations and prevent issues from impacting business users.

Databricks provides built-in monitoring capabilities and integrates with tools like Monte Carlo and Bigeye for data observability. Set up alerts for pipeline failures, data quality issues, and performance degradation to quickly identify and resolve problems.
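A common validation pattern is to compare row counts and an order-independent checksum between the legacy table and its migrated copy. In the sketch below, two in-memory SQLite databases stand in for the legacy and migrated systems; the table name, columns, and rows are made up, and a real check would connect to the actual source and to Databricks instead.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key_column):
    """Return (row_count, checksum) for a table, reading rows in key order."""
    # Table and column names come from trusted configuration here, not user input.
    cursor = conn.execute(f"SELECT * FROM {table} ORDER BY {key_column}")
    digest = hashlib.sha256()
    count = 0
    for row in cursor:
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def reconcile(legacy_conn, target_conn, table, key_column):
    """Compare one table across two connections."""
    legacy_count, legacy_hash = table_fingerprint(legacy_conn, table, key_column)
    target_count, target_hash = table_fingerprint(target_conn, table, key_column)
    return {
        "table": table,
        "row_counts_match": legacy_count == target_count,
        "checksums_match": legacy_hash == target_hash,
    }


def make_db(rows):
    """Stand-in database with a single hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    return conn


legacy = make_db([(1, 19.99), (2, 5.00), (3, 42.50)])
migrated_ok = make_db([(3, 42.50), (1, 19.99), (2, 5.00)])    # same rows, different load order
migrated_bad = make_db([(1, 19.99), (2, 5.00), (3, 42.05)])   # one amount altered

print(reconcile(legacy, migrated_ok, "orders", "order_id"))
print(reconcile(legacy, migrated_bad, "orders", "order_id"))
```

Row counts alone would pass the altered copy, which is why the checksum is included. For very large tables, the same idea is typically applied per partition or on aggregates rather than row by row.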

8. Decommission Legacy Systems

Once validation is complete and teams have successfully adopted Databricks, systematically phase out legacy infrastructure. Decommissioning reduces maintenance costs, eliminates redundancies, and ensures resources are fully focused on the modern platform. Keep legacy systems in read-only mode initially in case reference access is needed, then fully decommission once confidence is established.

Document what was retired, when, and why to maintain institutional knowledge. Celebrate the decommissioning milestone with stakeholders to reinforce the success of the migration.

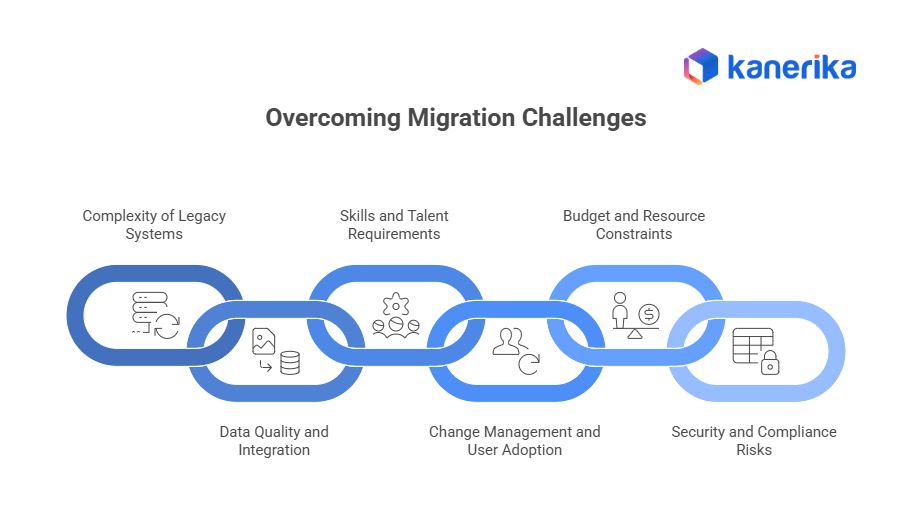

What Challenges Can You Expect During Migration and How to Overcome Them?

Migrating legacy systems to Databricks is a complex process that requires careful planning, resources, and expertise. Organizations may face technical, operational, and organizational challenges that need to be addressed to ensure a smooth transition and successful adoption of the new platform.

1. Complexity of Legacy Systems

Legacy systems often involve outdated databases, tightly coupled applications, and undocumented workflows, which makes migration challenging. A detailed assessment of existing infrastructure and a phased migration strategy can help minimize operational disruptions and ensure continuity of business processes.

2. Data Quality and Integration Issues

Data inconsistencies, missing records, or duplication are common challenges when migrating from legacy systems. Organizations must invest time in validating, cleaning, and standardizing data to ensure the new platform delivers accurate and reliable analytics. Effective integration strategies are critical to consolidating multiple sources and maintaining consistency across datasets.

3. Skills and Talent Requirements

Modern analytics platforms require expertise in cloud architecture, data engineering, and analytics workflows. Many organizations face a shortage of skilled personnel, which can slow migration projects. Training internal teams or engaging external experts can help bridge the skills gap and support a successful implementation.

4. Change Management and User Adoption

Employees accustomed to legacy systems may resist adopting new platforms and workflows. Structured training programs, clear communication of benefits, and collaborative onboarding initiatives can encourage adoption and ensure the platform is used effectively across departments.

5. Budget and Resource Constraints

Migration projects require investment in technology, infrastructure, and personnel. Careful planning, phased implementation, and clear prioritization of high-impact workloads are essential to manage costs and achieve measurable returns on investment.

6. Security and Compliance Risks

Transferring data to a new platform introduces potential security and compliance challenges. Enterprises must ensure access controls, encryption, and auditing measures are in place, and that all processes comply with relevant regulatory standards to mitigate risk.

Which Tools and Technologies Are Essential for Migration?

A successful migration relies on a combination of cloud platforms, integration tools, data management solutions, and analytics software. These tools streamline the transition, maintain data integrity, and enable the full potential of Databricks once the migration is complete.

1. Cloud Platforms

Databricks operates across major cloud providers, including AWS, Azure, and Google Cloud, providing scalable compute and storage tailored to each environment. These platforms enable elastic resource allocation, support large-scale data processing, and integrate with existing cloud ecosystems for seamless analytics workflows.

Organizations typically choose their cloud provider based on existing relationships, geographic requirements, or specific service needs. Databricks provides a consistent experience across clouds, allowing organizations to avoid vendor lock-in while leveraging cloud-native capabilities.

2. Data Integration and ETL/ELT Tools

Efficient extraction, transformation, and loading of data from legacy systems are critical for successful migration. Integration tools help consolidate multiple data sources, automate workflows, and maintain data consistency throughout the process. These tools reduce manual effort and minimize errors during migration.

Examples include Fivetran for automated data replication, Talend for complex transformations, Informatica for enterprise data integration, and Azure Data Factory for cloud-native orchestration. Databricks also provides native tools like Lakeflow Connect for CDC-based replication and Auto Loader for continuous file ingestion that support smooth data movement and pipeline orchestration.

3. Data Governance and Observability Tools

To maintain data quality and compliance during and after migration, organizations rely on governance and observability solutions. Unity Catalog provides centralized governance natively within Databricks, managing permissions, tracking lineage, and ensuring compliance. These tools monitor data pipelines, detect anomalies, enforce access controls, and provide audit trails.

Additional platforms like Collibra and Alation offer data catalogs and metadata management, while Monte Carlo and Bigeye provide data observability and quality monitoring. Together, these tools ensure that the migrated data is reliable, secure, and compliant with regulatory requirements.

4. Analytics and Visualization Tools

Once data is migrated to Databricks, analytics and visualization tools enable teams to generate insights and share them across the organization. Databricks SQL provides a serverless data warehouse for SQL analytics, while tools such as Power BI, Tableau, Looker, and Qlik help build interactive dashboards, monitor KPIs, and support self-service analytics for business users.

These tools connect directly to Databricks, eliminating the need for data exports or intermediate layers. Users can query the lakehouse directly while Databricks handles query optimization and performance.

5. Workflow Orchestration Tools

Complex migrations often require automated scheduling and orchestration of data workflows across multiple systems and dependencies. Platforms like Apache Airflow and Prefect help manage dependencies, monitor pipeline performance, and ensure that data moves smoothly from legacy systems to Databricks without disruption.

Databricks Workflows provides native orchestration capabilities that integrate tightly with the platform, supporting complex job dependencies, error handling, and monitoring. In many cases, this eliminates the need for external orchestration tools.
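To make job dependencies concrete, here is a minimal sketch that assembles a multi-task job definition in the shape used by the Databricks Jobs API (`tasks`, `task_key`, `depends_on`, `notebook_task`, `max_retries`). The job name, task keys, and notebook paths are hypothetical, compute settings are deliberately left out, and the helper `build_job_payload` is an illustration rather than part of any Databricks SDK.

```python
import json


def build_job_payload(job_name, steps):
    """Build a job definition from (task_key, notebook_path, upstream_keys) tuples."""
    known = {key for key, _, _ in steps}
    tasks = []
    for task_key, notebook_path, upstream in steps:
        unknown = set(upstream) - known
        if unknown:
            raise ValueError(f"{task_key} depends on undefined tasks: {sorted(unknown)}")
        task = {
            "task_key": task_key,
            "notebook_task": {"notebook_path": notebook_path},
            "max_retries": 2,   # retry transient failures before marking the task failed
        }
        if upstream:
            task["depends_on"] = [{"task_key": key} for key in upstream]
        tasks.append(task)
    return {"name": job_name, "tasks": tasks}


# Hypothetical pipeline: ingest, then validate, then publish.
payload = build_job_payload(
    "legacy_orders_migration",
    [
        ("ingest_orders", "/Migration/ingest_orders", []),
        ("validate_orders", "/Migration/validate_orders", ["ingest_orders"]),
        ("publish_orders", "/Migration/publish_orders", ["validate_orders"]),
    ],
)

print(json.dumps(payload, indent=2))
```

Checking that every `depends_on` entry refers to a defined task before submitting the job catches typos locally instead of at deployment time.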

How to Choose the Right Partner for Your Migration

Choosing the right partner for your legacy-to-Databricks migration can mean the difference between a smooth transition and costly delays. The ideal partner offers strong technical expertise, industry experience, and a structured approach that minimizes risks and disruptions throughout the migration.

Key factors to consider when selecting a migration partner include:

- Proven Databricks Expertise: Experience specifically with Databricks migrations, not just general cloud or data warehouse projects. Look for partners with Databricks certifications and documented customer success stories.

- Industry Knowledge: Familiarity with your industry’s specific data requirements, compliance standards, and common use cases. Healthcare, financial services, and retail each have unique challenges that industry experience helps address.

- End-to-End Services: Ability to handle assessment, planning, migration execution, testing, and post-migration support rather than just one phase. Comprehensive service coverage ensures continuity and accountability.

- Automation Capabilities: Proprietary accelerators or tools that automate migration tasks, reducing manual effort and minimizing errors while accelerating timelines.

- Change Management Support: Provides training, documentation, and guidance to ensure adoption across teams rather than just delivering technical implementation.

- Transparency and Communication: Clear updates on progress, risks, and outcomes throughout the migration. Regular check-ins and honest assessments build trust and enable course correction.

By evaluating partners on these criteria, organizations can ensure a successful migration that delivers reliable, scalable, and modern analytics capabilities that meet business needs.

Case Study 1: Transforming Sales Intelligence with Databricks-Powered Workflows

Client Challenge

A global sales intelligence platform faced inefficiencies in document processing and data workflows. Disconnected systems and manual processes slowed down operations, making it hard to deliver timely insights to customers.

Kanerika’s Solution

Kanerika redesigned the entire workflow using Databricks. We automated PDF processing, metadata extraction, and integrated multiple data sources into a unified pipeline. Legacy JavaScript workflows were refactored into Python for better scalability. The solution enabled real-time data processing and improved overall system performance.

Impact Delivered

- 80% faster document processing

- 95% improvement in metadata accuracy

- 45% quicker time-to-insight for end users

Case Study 2: Optimizing Data-Focused App Migration Across Cloud Providers

Client Challenge

A leading enterprise needed to migrate its data-intensive application across cloud providers without disrupting operations. The existing setup had performance bottlenecks, high infrastructure costs, and frequent data-processing errors.

Kanerika’s Solution

Kanerika executed a seamless migration strategy using automation accelerators. We optimized the application architecture for the new cloud environment, improved data pipelines, and implemented robust monitoring to ensure stability. The migration was completed with zero downtime and full compliance with security standards.

Impact Delivered

- 46% improvement in application performance

- 32% reduction in infrastructure costs

- 60% faster error resolution and improved reliability

FLIP for Informatica to Databricks Migration: Accelerating Lakehouse Transformation

Migrating from legacy ETL tools like Informatica PowerCenter to modern platforms such as Databricks is critical for enterprises that need scalability, cost efficiency, and advanced analytics capabilities. Traditional migration methods are slow, manual, and prone to errors that can delay modernization and increase risk. Kanerika addresses these challenges with FLIP, a proprietary accelerator that significantly simplifies and speeds up the migration process.

How FLIP Helps Enterprises Modernize:

- Automated Workflow Conversion: FLIP automatically converts Informatica PowerCenter workflows into Databricks-native pipelines, reducing manual effort and ensuring accurate translation of transformation logic. This automation eliminates transcription errors that plague manual migration approaches.

- Schema Evolution Support: Handles changes in data structures seamlessly during migration, adapting to schema modifications without breaking pipelines or requiring extensive rework.

- Data Quality and Validation: Built-in checks maintain integrity and consistency across migrated datasets, validating that data arrives correctly and that transformations produce expected results.

- Governance and Compliance: Integrates security and compliance controls aligned with global standards, including ISO 27001, ISO 27701, SOC 2, and GDPR throughout the migration process.

- Accelerated Delivery: Cuts migration timelines by up to 70% compared to manual approaches, enabling faster adoption of Databricks Lakehouse architecture and quicker realization of benefits.

By leveraging FLIP, businesses move to a unified platform that supports data engineering, machine learning, and real-time analytics. This migration eliminates months of manual conversion work while modernizing infrastructure. It also unlocks capabilities like predictive modeling and AI-driven automation, helping enterprises stay competitive in a data-first world.

Kanerika: Accelerating Data Migration for Modern Enterprises

Kanerika helps organizations modernize their data and analytics infrastructure through fast, secure, and smart migration strategies to Databricks and other modern platforms. Legacy systems often struggle to manage growing data volumes, meet real-time reporting demands, and support AI-driven business needs. Our approach ensures a smooth transition to modern platforms without disrupting ongoing operations.

We provide end-to-end migration services across multiple areas:

- Application and Platform Migration: Move from outdated systems to modern, cloud-native platforms for better scalability and performance.

- Data Warehouse to Data Lake Migration: Shift from rigid warehouse setups to flexible data lakes or lakehouse platforms that handle structured, semi-structured, and unstructured data.

- Cloud Migration: Transition workloads to secure, scalable environments like Azure or AWS for improved efficiency and cost optimization.

- ETL and Pipeline Migration: Modernize data pipelines for faster ingestion, transformation, and orchestration.

- BI and Reporting Migration: Upgrade from legacy tools such as Tableau, Cognos, SSRS, and Crystal Reports to advanced platforms like Power BI for interactive dashboards and real-time insights.

- RPA Platform Migration: Move automation infrastructure from UiPath to Microsoft Power Automate for streamlined workflows.

Our proprietary FLIP platform accelerates migrations with Smart Migration Accelerators, automating up to 80% of the process to reduce risk and preserve business logic. FLIP enables rapid adoption of cloud-native, AI-ready architectures. It supports complex transitions such as SSIS to Microsoft Fabric, Informatica to Databricks, and Talend to Power BI, all with zero data loss and full operational continuity.

Additionally, Kanerika ensures end-to-end compliance with ISO 27001, ISO 27701, SOC 2, and GDPR while leveraging strong automation, AI, and cloud engineering expertise. We focus on practical, high-value use cases that deliver quick ROI, then scale capabilities through a phased approach that minimizes risk, maintains business continuity, and ensures measurable value at every stage.

FAQs

1. Why should companies move from legacy systems to Databricks?

Companies migrate to Databricks to improve data processing speed, reduce maintenance costs, and support modern analytics. Databricks provides scalable compute, easy collaboration, and support for advanced AI and machine learning. Legacy systems often slow down innovation and cannot handle large or real-time data.

2. How long does a legacy systems to Databricks migration typically take?

Timelines vary based on data size, complexity, and integrations. Small migrations can take a few weeks, while enterprise systems may take several months. Proper planning, data assessment, and pilot testing reduce delays and ensure a smooth transition.

3. What challenges do companies face during this migration?

Common challenges include dealing with outdated data formats, unclear data ownership, missing documentation, and compatibility issues. Teams also face skill gaps because Databricks uses modern cloud technologies. A structured migration plan and team training help overcome these issues.

4. How secure is data when moving from legacy systems to Databricks?

Migrating to Databricks is secure because it supports encryption at rest and in transit, role-based access, and strong compliance controls. Security also depends on following best practices like setting access policies, auditing activities, and cleaning sensitive data before migration.

5. Do teams need to learn new tools or languages after moving to Databricks?

Yes, teams may need to learn Databricks notebooks, Spark, and cloud data engineering workflows. However, Databricks supports Python, SQL, and R, which makes adoption easier. Most teams can get productive quickly with basic training and guided learning paths.