In the autumn of last year, Rachel, the Chief Data Officer at a national insurance provider, confronted an uncomfortable reality during her quarterly business review. Her data science team had built 47 machine learning models over eighteen months. Only three had made it to production. The rest sat in Jupyter notebooks, technically sound, mathematically elegant, operationally useless.

What made Rachel’s predicament worth examining was that she wasn’t questioning whether machine learning worked. Insurance companies across North America have successfully deployed predictive models for risk assessment, achieving 20–30% accuracy gains over traditional actuarial methods. The algorithms perform as advertised. What kept Rachel awake at night was a more fundamental challenge facing enterprise data leaders. According to Gartner research, only 53% of machine learning projects make it from prototype to production. Not because the models fail mathematically, but because organizations lack the operational infrastructure to deploy, monitor, and maintain models at scale.

Here’s the catch: building a model is straightforward. Rachel’s team had PhDs from Stanford and MIT. They understood random forests and gradient boosting. They could tune hyperparameters in their sleep. What they couldn’t do, what no amount of advanced mathematics prepared them for, was answer basic operational questions. How do you version a model? What happens when the distribution of production data shifts? Who gets paged at 2 AM when predictions start degrading?

MLOps represents the discipline of operationalizing machine learning workflows. It bridges the gap between data science experimentation and production deployment through standardized processes for model versioning, automated testing, continuous monitoring, and performance optimization. Rachel understood something that many executives overlook: you’re not really testing whether the model works. You’re testing whether your organization has the operational maturity to sustain that model.

TL;DR: MLOps in Microsoft Fabric unifies model development, deployment, and monitoring within a single platform, reducing the fragmented tool sprawl that keeps nearly half of machine learning projects from reaching production. Organizations implementing structured MLOps frameworks see 3–5x faster model deployment cycles and a 40–60% reduction in model maintenance overhead.

Key Takeaways

- MLOps frameworks reduce deployment cycles from months to weeks by standardizing model promotion workflows and automating deployment pipelines

- Microsoft Fabric integrates MLOps capabilities across data engineering, model training, deployment, and monitoring within a unified analytics platform

- Production monitoring prevents model decay through automated performance tracking that detects drift before business impact occurs

- Version control for ML assets maintains reproducibility across experiments, enabling teams to roll back deployments and audit model lineage

- Automated retraining pipelines keep models current as data distributions shift, maintaining prediction accuracy over time

Partner with Kanerika to Modernize Your Enterprise Operations with High-Impact Data & AI Solutions

What Makes ML Different from Traditional Software

Traditional software deployment follows predictable patterns. You change code, run tests, and push to production. Machine learning breaks this model in a fundamental way: models degrade over time even when the code stays identical.

A fraud detection algorithm trained on 2023 transaction patterns loses accuracy by 2024 because fraudsters adapt. Customer segmentation models drift as purchasing behaviors shift with economic conditions. Inventory forecasting algorithms lose accuracy when supply chain dynamics change. The statistical properties of your data move constantly, which means predictions become increasingly unreliable even though nothing in the codebase has changed. This is the core challenge MLOps is designed to solve.

MLOps brings software engineering discipline to the inherently unstable world of statistical models. It does this through four interconnected capabilities:

- Model development infrastructure gives data scientists reproducible experimentation environments with consistent access to training data, scalable compute, and tracking systems that log every experiment parameter and performance metric. Without this, debugging a production issue means reconstructing which model version was deployed last month from half-remembered notebook runs.

- Deployment automation standardizes how validated models move from experimentation to production endpoints. Manual deployment wastes weeks packaging models, configuring infrastructure, and coordinating across teams. Automated pipelines collapse this to hours and remove the human error that creeps in when someone forgets to update preprocessing logic between training and inference environments.

- Continuous monitoring tracks model performance against business metrics and statistical distributions simultaneously. This matters because models fail gradually, not catastrophically. Accuracy drops from 92% to 89% to 84% over months unless someone is actively watching. Systems that detect concept drift before it becomes a business problem are the difference between proactive operations and crisis management.

- Governance and compliance maintain audit trails for regulated industries. Financial services, healthcare, and insurance face regulatory requirements for model documentation, bias testing, and explainability that go beyond standard software compliance. MLOps platforms capture this metadata systematically rather than through the manual documentation processes that everyone intends to maintain, but nobody actually does.

Rachel’s insurance company had none of these capabilities when she arrived. Seven different Python versions existed across a team of twelve data scientists. Model deployment required manual coordination across four separate teams. Production monitoring consisted of quarterly accuracy reviews that routinely discovered problems three to six months after business impact. The organizational structure practically guaranteed failure.

| Capability | Traditional ML | MLOps | Business Impact |
|---|---|---|---|
| Model Deployment | Manual packaging, weeks of coordination | Automated pipelines, hours to production | 10–15x faster deployment |
| Version Control | Ad-hoc notebooks, manual tracking | Centralized registry, automated lineage | 100% reproducibility |
| Monitoring | Quarterly reviews, reactive discovery | Real-time alerts, proactive detection | 85% faster issue resolution |
| Retraining | Manual processes, irregular schedules | Automated triggers, performance-based | 60% less model drift |
| Governance | Manual documentation, audit gaps | Automated metadata, complete audit trails | Full regulatory compliance |

Microsoft Fabric’s MLOps Capabilities

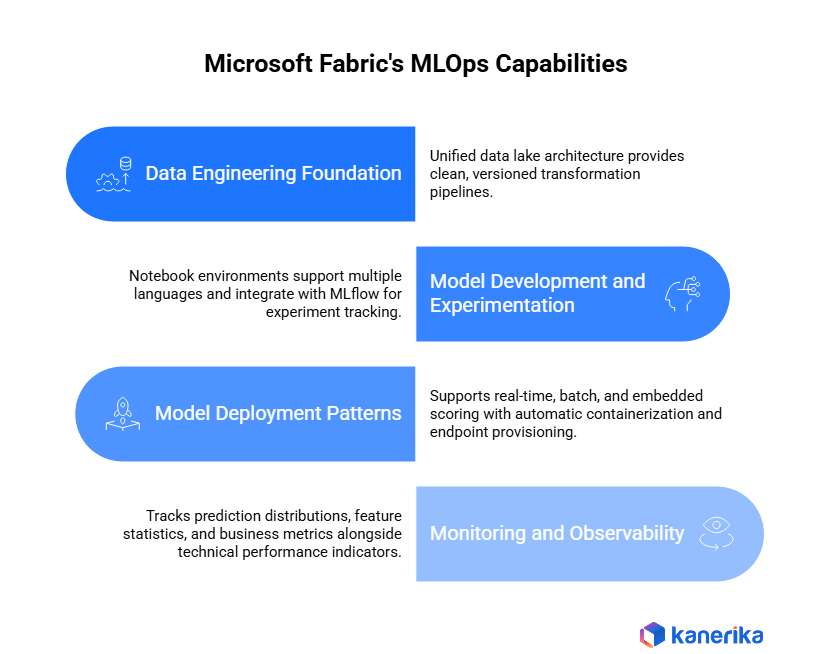

Microsoft Fabric is an end-to-end analytics platform that integrates data engineering, data science, and business intelligence within a single environment. What makes it practically useful for MLOps is that these integrations were built into the architecture from the start, not assembled through acquisitions and stitched together afterward.

1. Data Engineering Foundation

MLOps begins with data accessibility, which sounds obvious until you spend a week debugging why your production model performs differently from your training environment. Models require clean, versioned transformation pipelines that maintain consistency between training and inference. Fabric’s lakehouse architecture provides this through OneLake, a unified data lake accessible across all workload types without data movement or replication.

Data engineers build pipelines using familiar tools. Dataflows transform raw data into analytics-ready formats. Notebooks handle complex preprocessing logic. Pipelines orchestrate multi-step workflows on scheduled intervals. The platform maintains lineage automatically, which matters when something breaks upstream and you need to trace which models depend on which data sources.

The deeper value here is consistency. When training data and production data diverge in subtle ways, such as missing values handled differently, feature encoding inconsistencies, or timestamp timezone mismatches that nobody noticed during development, models fail in ways that are genuinely difficult to debug. Unified data engineering infrastructure reduces these failure modes by ensuring training and inference use identical processing logic from the same source.

2. Model Development and Experimentation

Fabric provides notebook environments supporting Python, R, and Scala with access to the full ML library ecosystem, including scikit-learn, TensorFlow, PyTorch, XGBoost, and LightGBM, without infrastructure configuration overhead. The platform integrates with MLflow for experiment tracking, which changes how teams work in practice.

Instead of comparing model versions through scattered spreadsheets or tribal knowledge about “the good run from Tuesday,” teams log training runs with associated parameters, metrics, and artifacts. When a model underperforms in production six months after deployment, you can trace back through experiment history to understand exactly what changed, including the preprocessing steps someone modified at 11 PM before a demo. That kind of traceability is what separates teams that can debug production issues from teams that just redeploy and hope.

Compute resources scale elastically during hyperparameter tuning. Training jobs run on distributed clusters. Tuning sweeps run across dozens of configurations in parallel rather than sequentially. The practical effect is that more model architectures get evaluated within the same timeline, which consistently produces better-performing models in production.

3. Model Deployment Patterns

Different use cases require different deployment architectures, and Fabric supports all three major patterns:

- Real-time inference delivers low-latency endpoints serving individual predictions. This is the right pattern for credit card fraud detection, loan decisioning, or any scenario where milliseconds matter and decisions happen one at a time

- Batch scoring processes large datasets on scheduled intervals. Monthly customer churn predictions, quarterly risk assessments, weekly demand forecasts — anything where you’re scoring an entire population rather than responding to individual events

- Embedded scoring integrates predictions directly into data pipelines, which is appropriate when you’re enriching data streams rather than serving external API requests

The platform handles containerization and endpoint provisioning automatically, which removes the infrastructure expertise barrier that often delays production deployment. Authentication integrates with Microsoft Entra ID (formerly Azure Active Directory), so predictions automatically respect organizational data access constraints without building custom authorization logic.

4. Monitoring and Observability

Production monitoring in a mature MLOps environment extends well beyond checking whether the service is responding to requests. Fabric tracks prediction distributions, feature statistics, and business metrics alongside technical performance indicators.

Data drift detection continuously compares production input distributions against training data baselines. When feature distributions shift beyond defined thresholds, say average transaction amounts increasing by 15% or customer age demographics changing unexpectedly, alerts notify teams before accuracy has a chance to degrade noticeably. This is the exact capability Rachel’s organization lacked. She was discovering problems three months after the business impact occurred, trying to reverse-engineer what went wrong from incomplete logs.
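To make the idea of comparing production inputs against a training baseline concrete, here is a minimal sketch using the Population Stability Index (PSI). PSI is one common drift statistic; it is swapped in here for illustration and is not a description of how Fabric computes drift internally. The function name, the bin count, and the 0.25 alert threshold (a widely used rule of thumb) are all assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float],
    production: Sequence[float],
    bins: int = 10,
) -> float:
    """PSI between two samples, using equal-width bins over the baseline range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range production values into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps log() defined when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bin_shares(baseline)
    actual = bin_shares(production)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Hypothetical example: transaction amounts rise by 15% in production.
training_amounts = [100.0 + i for i in range(100)]
production_amounts = [amount * 1.15 for amount in training_amounts]

DRIFT_THRESHOLD = 0.25  # assumed rule-of-thumb cutoff, tune per feature
drift_detected = (
    population_stability_index(training_amounts, production_amounts) > DRIFT_THRESHOLD
)
```

In practice a check like this would run per feature on a schedule, with the alert firing when any monitored feature crosses its threshold.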

Performance dashboards centralize visibility across all deployed models. Operations teams can monitor request volumes, latency percentiles, error rates, prediction distributions, and resource utilization without switching between systems. When production load increases during peak periods, scaling decisions get informed by observable reality rather than capacity guesses made during initial provisioning.

Implementing MLOps Workflows

1. Version Control for ML Assets

Every component in the ML pipeline needs versioning: training data, preprocessing code, model architectures, hyperparameters, deployment configurations, and monitoring definitions. Fabric integrates with Git for code while providing built-in versioning for datasets and models. Teams establish naming conventions that capture version and context — a fraud detection model might follow a pattern like fraud_detection_v2.3_2024_02_15, making it immediately clear which model is in production and when it was trained.
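A naming convention only helps if it is enforced by code rather than by memory. The sketch below builds and parses names in the fraud_detection_v2.3_2024_02_15 style from the example above. The `ModelVersion` class and `parse_model_name` helper are hypothetical names introduced for illustration, and the exact pattern (lowercase name, major.minor version, training date) is an assumption inferred from that single example.

```python
import re
from dataclasses import dataclass
from datetime import date

# Assumed pattern: <model>_v<major>.<minor>_<YYYY>_<MM>_<DD>
_NAME_PATTERN = re.compile(
    r"^(?P<model>[a-z0-9_]+?)_v(?P<major>\d+)\.(?P<minor>\d+)"
    r"_(?P<y>\d{4})_(?P<m>\d{2})_(?P<d>\d{2})$"
)


@dataclass(frozen=True)
class ModelVersion:
    model: str
    major: int
    minor: int
    trained_on: date

    def name(self) -> str:
        """Render the registry name for this version."""
        return f"{self.model}_v{self.major}.{self.minor}_{self.trained_on:%Y_%m_%d}"


def parse_model_name(name: str) -> ModelVersion:
    """Parse a registry name, rejecting anything that breaks the convention."""
    match = _NAME_PATTERN.match(name)
    if match is None:
        raise ValueError(f"not a valid model name: {name!r}")
    return ModelVersion(
        model=match["model"],
        major=int(match["major"]),
        minor=int(match["minor"]),
        trained_on=date(int(match["y"]), int(match["m"]), int(match["d"])),
    )


current = parse_model_name("fraud_detection_v2.3_2024_02_15")
```

Running the parser in CI on every registered model name is a cheap way to catch names that drift from the convention.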

Feature stores play a critical role here. When multiple models share similar inputs, such as customer demographics, transaction history, and behavior patterns, the feature store maintains a single source of truth. This prevents logic drift, where the same feature gets calculated slightly differently across models, causing prediction inconsistencies that take weeks to track down.

2. Automated Training Pipelines

Manual model retraining doesn’t scale past a handful of models. Automated pipelines trigger retraining based on defined conditions rather than someone remembering to kick off a job:

- Time-based triggers retrain on fixed schedules. A sales forecasting model might retrain weekly as new transaction data arrives, while a demand prediction model might retrain monthly to incorporate seasonal patterns

- Performance-based triggers initiate retraining when accuracy degrades below thresholds. If a recommendation model’s click-through rate drops 10% below baseline, the pipeline automatically starts retraining on recent interaction data

- Data volume triggers activate when training datasets grow beyond defined sizes, encoding domain-specific knowledge about when additional data actually improves the model versus just increasing compute cost

The pipeline workflow follows a consistent sequence: data validation, preprocessing, hyperparameter tuning, model training, holdout validation, and automated deployment if performance meets acceptance criteria. Standardizing this sequence across all models is what makes the difference between an MLOps practice and a collection of ad-hoc scripts.
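The three trigger types described above can be expressed as a single decision function that an orchestrator calls on a schedule. This is a sketch under stated assumptions: `RetrainPolicy`, `retrain_reason`, and every default threshold are hypothetical, and a real pipeline would read the inputs from its monitoring and data catalog systems.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class RetrainPolicy:
    max_age: timedelta = timedelta(days=7)  # time-based: retrain weekly
    max_relative_drop: float = 0.10         # performance-based: 10% below baseline
    min_new_rows: int = 50_000              # data volume: assumed threshold


def retrain_reason(
    policy: RetrainPolicy,
    last_trained: datetime,
    now: datetime,
    baseline_metric: float,
    current_metric: float,
    new_rows: int,
) -> Optional[str]:
    """Return which trigger fired, or None if the model can stay as-is."""
    if now - last_trained >= policy.max_age:
        return "time"
    if baseline_metric > 0:
        relative_drop = (baseline_metric - current_metric) / baseline_metric
        if relative_drop >= policy.max_relative_drop:
            return "performance"
    if new_rows >= policy.min_new_rows:
        return "data_volume"
    return None
```

Returning the reason rather than a bare boolean keeps the audit trail useful: the pipeline can log why each retraining run started.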

3. CI/CD for ML Models

Continuous integration and continuous deployment principles apply to machine learning, with modifications for the statistical nature of model quality. Pull requests trigger automated testing across multiple layers:

- Unit tests verify that preprocessing logic handles edge cases correctly

- Integration tests confirm model interfaces match expected input/output schemas

- Performance tests benchmark inference latency and memory consumption

- Statistical tests compare new model versions against previous versions on holdout datasets to ensure improvements rather than regressions

- Bias tests check prediction fairness across demographic segments

Models passing all automated checks enter a manual review queue where domain experts assess whether improvements justify deployment risk. Approved models deploy automatically to staging environments for final validation before production. Rollback capabilities provide a safety net throughout: if production monitoring detects issues after deployment, teams revert to previous versions without manual intervention, which requires maintaining multiple model versions simultaneously and routing traffic based on validation results.
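As an illustration of how several of these automated checks can feed one promotion decision, the sketch below gates a candidate model on holdout accuracy, latency, and a simple fairness proxy. The `EvalReport` structure, the thresholds, and the segment names are hypothetical. Using the accuracy gap between segments as the bias check is a deliberate simplification; real bias testing typically uses dedicated fairness metrics.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class EvalReport:
    holdout_accuracy: float
    p95_latency_ms: float
    accuracy_by_segment: Dict[str, float]


def gate_failures(
    candidate: EvalReport,
    production: EvalReport,
    min_gain: float = 0.0,
    latency_budget_ms: float = 100.0,
    max_segment_gap: float = 0.05,
) -> List[str]:
    """Return the list of failed checks; an empty list means the gate passes."""
    failures = []
    # Statistical check: candidate must not regress on the shared holdout set.
    if candidate.holdout_accuracy - production.holdout_accuracy < min_gain:
        failures.append("statistical: no improvement over production on holdout")
    # Performance check: tail latency must fit the serving budget.
    if candidate.p95_latency_ms > latency_budget_ms:
        failures.append("performance: p95 latency over budget")
    # Bias check (simplified proxy): accuracy spread across segments.
    segment_scores = list(candidate.accuracy_by_segment.values())
    if segment_scores and max(segment_scores) - min(segment_scores) > max_segment_gap:
        failures.append("bias: accuracy gap across segments too large")
    return failures
```

A CI job could fail the pull request whenever this list is non-empty and attach the messages to the manual review queue.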

Common Challenges and How to Address Them

1. Data Quality and Consistency

Machine learning models amplify data quality issues in ways traditional software never experiences. The symptoms are recognizable: a model performs at 94% accuracy in offline evaluation but struggles at 78% in production. Prediction distributions shift dramatically within weeks of deployment. Certain customer segments receive systematically worse predictions while others perform normally.

The root causes are almost always the same. Training data undergoes different preprocessing than production data because different teams wrote the code at different times. Feature calculations use subtly different logic in training pipelines versus inference endpoints. Data types or null handling differ between environments because development and production databases have quietly diverged over months.

The architectural solution is to centralize feature engineering logic in reusable libraries that both training and inference code call identically, implement continuous data validation that compares production input distributions against training baselines, and test inference pipelines using production-representative data rather than the sanitized development data that masks real-world messiness.
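A minimal sketch of what centralized feature logic looks like in practice: one function that both the training job and the inference endpoint import, so null handling and timezone handling cannot diverge. The function name, the feature names, and the choice to treat naive timestamps as UTC are assumptions made for illustration.

```python
import math
from datetime import datetime, timedelta, timezone
from typing import Dict, Optional


def build_features(amount: Optional[float], timestamp: datetime) -> Dict[str, float]:
    """Single feature definition shared by training and inference code."""
    # Assumption: naive timestamps are already in UTC.
    if timestamp.tzinfo is None:
        timestamp = timestamp.replace(tzinfo=timezone.utc)
    hour_utc = timestamp.astimezone(timezone.utc).hour

    return {
        # Missing amounts become 0.0 plus an explicit flag, in both environments.
        "amount_log": math.log1p(amount) if amount is not None else 0.0,
        "amount_missing": 1.0 if amount is None else 0.0,
        "hour_utc": float(hour_utc),
    }


# Usage: 23:30 at UTC-5 lands in hour 4 UTC on the following day.
late_evening = datetime(2024, 2, 15, 23, 30, tzinfo=timezone(timedelta(hours=-5)))
features = build_features(None, late_evening)
```

Because both environments call the same function, a change to missing-value handling is made once and versioned once.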

2. Model Drift

Model performance degrades gradually as real-world patterns shift. The insidious part is how it happens: accuracy declines from 91% to 88% to 84% over eight weeks. Business metrics show unexpected degradation that leadership attributes to market conditions rather than model failure. By the time the problem is visible in business outcomes, the model may have been underperforming for months.

The cause is fundamental. Data distributions shift as customer behaviors evolve, market dynamics change seasonally, or external factors transform the operating environment. The code hasn’t changed. The world the model is predicting has. Addressing this requires monitoring that looks at what the model is seeing, not just what it’s producing:

- Statistical tests comparing production input distributions against training data continuously, not just periodically

- Prediction confidence score monitoring, which often degrades before accuracy does

- Automated retraining triggers based on detected drift severity rather than fixed schedules that might miss critical deterioration between intervals
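Confidence monitoring from the list above can be sketched as a rolling average compared against a baseline recorded at validation time. The `ConfidenceMonitor` class, the window size, and the allowed drop are hypothetical values chosen for illustration, not recommended defaults.

```python
from collections import deque
from statistics import fmean


class ConfidenceMonitor:
    """Flags when the rolling mean prediction confidence falls below baseline."""

    def __init__(self, baseline_mean: float, window: int = 500, max_drop: float = 0.05):
        self.baseline_mean = baseline_mean
        self.max_drop = max_drop
        self._scores = deque(maxlen=window)  # oldest scores fall out automatically

    def observe(self, confidence: float) -> bool:
        """Record one score; return True when the rolling mean has dropped too far."""
        self._scores.append(confidence)
        if len(self._scores) < self._scores.maxlen:
            return False  # not enough data for a stable average yet
        return self.baseline_mean - fmean(self._scores) > self.max_drop
```

Each prediction's confidence score would be passed to `observe`, and a `True` result could feed the same alerting or retraining trigger path used for input drift.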

3. Deployment Complexity and Scale

Moving models from development to production creates coordination bottlenecks at every team boundary. Standardized deployment pipelines using infrastructure-as-code approaches reduce this friction. Containerizing models ensures environment consistency between development and production. Blue-green deployment patterns enable safe rollbacks when something does go wrong.

At scale, models that perform well on development datasets often struggle against production data volumes. Inference endpoints experience high latency under load. Model serving costs exceed budgets due to inefficient resource utilization. The practical toolkit here includes quantization and pruning to reduce serving costs, autoscaling policies based on actual traffic patterns rather than peak estimates, parallelized batch processing for large data volumes, and prediction caching for frequently requested outputs.
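Of the techniques in that toolkit, prediction caching is the simplest to show. The sketch below uses Python's standard `functools.lru_cache`; `expensive_model_call` is a hypothetical stand-in for a real inference call, and in-process caching like this only suits deterministic models with hashable inputs that repeat often.

```python
from functools import lru_cache
from typing import Tuple


def expensive_model_call(features: Tuple[float, ...]) -> float:
    """Hypothetical stand-in for a real model's inference call."""
    return sum(features) / len(features)


@lru_cache(maxsize=10_000)
def cached_predict(features: Tuple[float, ...]) -> float:
    # Features are passed as a tuple because cache keys must be hashable.
    return expensive_model_call(features)
```

`cached_predict.cache_info()` reports hits and misses, which gives a quick read on whether the cache is earning its memory. The cache should be cleared with `cached_predict.cache_clear()` whenever a new model version is deployed.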

How to Measure Whether MLOps Is Working

Metrics matter here because MLOps investment can feel abstract until you connect it to observable outcomes. The right metrics cluster into three categories.

1. Deployment velocity

Deployment velocity is the most immediate indicator. Time-to-production measures the duration from development completion to deployment; mature organizations achieve days rather than months. Deployment frequency reveals operational maturity: teams that deploy frequently have confidence in their testing and automation. Deployment success rate is the percentage of deployments that succeed without rollback, and a declining rate is an early warning signal of process degradation.

2. Operational efficiency

Operational efficiency shows up in mean time to detection (MTTD) and mean time to resolution (MTTR). MTTD measures how quickly teams identify model performance issues. Automated monitoring should surface problems within minutes or hours rather than weeks. MTTR measures how quickly teams fix identified issues, which depends on automated rollback capabilities and clear incident response procedures. Model maintenance overhead, the total engineering effort required to keep models running, should decline over time as automation matures.
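Both metrics reduce to simple arithmetic over incident timestamps. The sketch below assumes a hypothetical `Incident` record with three timestamps and measures resolution time from detection, which is one common definition; some teams measure it from the start of the incident instead.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Incident:
    started: datetime   # when the model actually began degrading
    detected: datetime  # when monitoring or a person noticed
    resolved: datetime  # when the fix or rollback was live


def _mean(deltas: List[timedelta]) -> timedelta:
    return sum(deltas, timedelta()) / len(deltas)


def mttd(incidents: List[Incident]) -> timedelta:
    """Mean time to detection: start of degradation to detection."""
    return _mean([i.detected - i.started for i in incidents])


def mttr(incidents: List[Incident]) -> timedelta:
    """Mean time to resolution: detection to resolution (assumed definition)."""
    return _mean([i.resolved - i.detected for i in incidents])
```

Tracking these per quarter shows whether automated monitoring and rollback are actually shortening the feedback loop.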

3. Business impact

Business impact is the ultimate measure. Model coverage, or what percentage of business decisions use ML predictions, shows whether adoption is growing. Trends in prediction accuracy indicate whether monitoring and retraining are effective. Revenue growth, cost reductions, fraud prevention, and operational efficiency improvements are what justify the MLOps investment to leadership.

Rachel’s insurance company tracked these metrics rigorously after implementing Fabric-based MLOps. Within six months, deployment time dropped from 12 weeks to 8 days. Production models grew from 3 to 27. Automated fraud detection reduced investigation costs by $2.4 million annually while improving claim processing speed by 40%. The investment paid for itself within the first year.


Getting Started: A Phased Approach

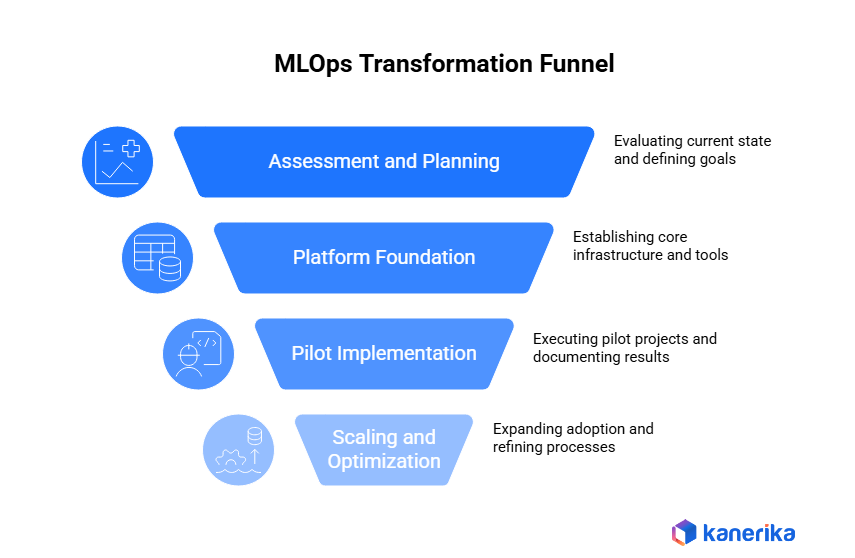

Attempting a comprehensive MLOps transformation all at once rarely works. A staged approach lets organizations build capability incrementally and validate it before scaling.

Phase 1 (Weeks 1–4): Assessment and Planning

Start by inventorying existing ML initiatives and documenting models in development, production, or maintenance. Evaluate current deployment processes, identify where time gets lost, and define what success looks like in measurable terms. Select 2–3 pilot projects that are representative of broader organizational needs but small enough to move quickly. Good pilots have business sponsors, clear success metrics, and manageable technical complexity.

Phase 2 (Weeks 5–12): Platform Foundation

Establish the Fabric environment with appropriate capacity and security configurations. Implement the core infrastructure that everything else depends on: version control repositories for ML code, experiment tracking systems, model registries, and monitoring dashboards. Build reference architectures for common ML patterns so future projects have a documented starting point. Train teams on new tools before they need them in production.

Phase 3 (Weeks 13–20): Pilot Implementation

Execute pilot projects using the established infrastructure and processes. Build models following reference architectures, deploy through standardized pipelines, and implement monitoring from day one rather than adding it later. Document what worked, what didn’t, and where standard processes need adjustment. Measure against the success criteria defined in Phase 1.

Phase 4 (Weeks 21+): Scaling and Optimization

Expand adoption to additional projects based on pilot learning. Refine processes based on team feedback. Invest in automating the steps teams find themselves repeating manually. Build organizational capability through internal communities of practice, documentation, and training that outlast individual projects. Track and communicate business impact to maintain leadership support for continued investment.

Conclusion

Rachel’s insurance company transformed ML operations within eighteen months. Production models grew from 3 to 63. Deployment cycles shortened from months to days. ML-driven improvements delivered $8.7 million in measurable business value through fraud reduction, risk assessment accuracy, and claims processing efficiency.

The transformation didn’t come from revolutionary technology. It came from systematic application of MLOps principles: version control for ML assets, automated deployment pipelines, continuous monitoring, and standardized processes that teams follow consistently. Microsoft Fabric provided the platform to support these practices without the tool sprawl and integration complexity that typically derail enterprise ML programs.

That said, tools alone don’t guarantee success. Organizations must invest equally in process development, team training, and change management. MLOps is as much a cultural shift as a technical implementation, moving from artisanal model development to something that operates more like an industrial system.

The business case becomes more compelling as ML adoption scales. The first few models may be deployed successfully through manual processes and individual effort. With dozens or hundreds of models, that approach collapses under its own weight. Machine learning delivers business value only when models reach production and stay reliable over time. MLOps is the operational foundation that makes that possible consistently, not just once.

Kanerika’s Approach to Selecting and Modernizing Analytics Platforms

Kanerika, a certified Microsoft Data & AI Solutions Partner, enables enterprises to modernize their analytics environments with Microsoft Fabric. Our team of certified experts and Microsoft MVPs builds scalable, secure, and business-driven data ecosystems that simplify complex architectures, support real-time analytics, and strengthen governance through Fabric’s unified platform.

We also specialize in transforming legacy data systems using structured, automation-led migration strategies. Recognizing that manual migrations are time-consuming and prone to errors, Kanerika utilizes automation tools such as FLIP to ensure seamless transitions from SSRS to Power BI, SSIS and SSAS to Microsoft Fabric, and Tableau to Power BI. This streamlined approach enhances data accessibility, improves reporting accuracy, and lowers long-term maintenance costs.

As an early global adopter of Microsoft Fabric, Kanerika applies a proven implementation framework that includes architecture planning, semantic model development, governance configuration, and user enablement. Powered by FLIP’s automated DataOps capabilities, our methodology accelerates Fabric adoption, reinforces data security, and drives measurable business value with efficiency and precision.


FAQs

What is the difference between MLOps and traditional DevOps?

MLOps extends DevOps principles to machine learning by adding capabilities specific to ML workflows: experiment tracking, model versioning, data drift detection, and continuous retraining. Traditional DevOps focuses on code deployment; MLOps addresses both code and data dependencies, statistical validation alongside functional testing, and model performance monitoring beyond system uptime.

How does Microsoft Fabric support the complete MLOps lifecycle?

Microsoft Fabric provides integrated capabilities across data engineering, model development, deployment, and monitoring within a single platform. Teams build data pipelines, train models, deploy endpoints, and monitor production performance without integrating multiple disparate tools. This integration reduces complexity and accelerates implementation timelines.

What are the key metrics for measuring MLOps success?

Critical metrics include deployment velocity (time from development to production), deployment frequency, model performance trends, mean time to detection for issues, mean time to resolution for incidents, and business outcomes driven by ML predictions. These metrics should show improving trends as MLOps maturity increases.

How does MLOps ensure model compliance and governance?

MLOps platforms maintain model registries documenting training data, performance metrics, approval workflows, and deployment history. Automated testing includes bias evaluation and explainability generation. Audit trails capture all model changes and predictions for regulatory review. These capabilities provide the documentation and controls required in regulated industries.

What skills do teams need for successful MLOps implementation?

Effective MLOps requires collaboration among data scientists (algorithm development and model evaluation), ML engineers (deployment infrastructure and optimization), data engineers (pipeline construction and data quality), and DevOps teams (platform management and security). Organizations should invest in cross-training to build shared understanding across these roles.

How does MLOps improve model deployment speed?

MLOps reduces deployment time through automated testing pipelines that validate model quality, containerization that ensures environment consistency, standardized deployment processes eliminating manual coordination, and infrastructure-as-code enabling rapid environment provisioning. Organizations typically see 5-10x deployment speed improvements with mature MLOps practices.