Every day, millions of data points are published across the web, from pricing updates on e-commerce sites to customer reviews, market trend analyses, and competitor intelligence. Yet most companies collect only a fraction of that data. Imagine automatically pulling pricing information from thousands of competing stores or monitoring market trends at the click of a button. AI-powered web scraping makes this possible.

The global AI-driven web scraping market is expected to grow from USD 7.48 billion in 2025 to USD 38.44 billion by 2034. Leading companies are already using this technology today to gain an edge, automating the manual work of data collection, and obtaining high-level insights that form the basis for making critical business decisions.

Web scraping transforms unstructured data from disparate, complex sources into structured, actionable intelligence. To see how it can help grow your business, let us dive into the best tools, advantages, and applications of web scraping.

Key Takeaways

- The AI-powered web scraping market is projected to grow from $7.48 billion in 2025 to $38.44 billion by 2034, transforming how businesses collect and process web data.

- Automation and AI enhance web scraping through faster data collection, better handling of complex websites, improved accuracy, and scaling that requires little technical expertise.

- A tool comparison covers no-code solutions like Gumloop, visual AI tools like Landing.ai, and advanced models like Google Gemini and OpenAI GPT for different business needs.

- Web scraping best practices include respecting robots.txt files, implementing rate limiting, using rotating IPs, and ensuring data accuracy for sustainable operations.

- Real-world applications span e-commerce, real estate, finance, and market research, with Kanerika reporting that up to 88% of the data extraction process can be automated.

What is Web Scraping?

Web scraping is the process of extracting data from websites automatically. It’s like using a robot to browse the web, collect information, and save it in a structured format, such as a spreadsheet.

Instead of manually copying and pasting data, web scraping lets you gather large amounts of information quickly, such as prices, reviews, or news articles, from multiple sites.

This technique is widely used by businesses to track competitors, monitor trends, or collect research data, making it an essential tool in the digital age where data is key to decision-making and staying ahead in the market.
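
To make the idea concrete, here is a minimal sketch of turning page markup into spreadsheet-style rows using only Python's standard library. The HTML fragment, the class names (`product`, `name`, `price`), and the `ProductParser` helper are assumptions made up for illustration; a real scraper would download the page first and match that site's own markup.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page fragment; a real scraper would download this HTML instead.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Desk Lamp</span><span class="price">$24.99</span></li>
  <li class="product"><span class="name">Office Chair</span><span class="price">$149.00</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text of <span class="name"> and <span class="price"> elements."""

    def __init__(self):
        super().__init__()
        self.rows = []       # finished records, one dict per product
        self._field = None   # field currently being read, if any
        self._current = {}   # record being assembled

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            self.rows.append(self._current)
            self._current = {}


def html_to_csv(html):
    """Return the extracted rows and the same data rendered as CSV text."""
    parser = ProductParser()
    parser.feed(html)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(parser.rows)
    return parser.rows, buffer.getvalue()


rows, csv_text = html_to_csv(SAMPLE_HTML)
print(csv_text)
```

The CSV text can be written to a file and opened in any spreadsheet application.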

Why is Web Scraping Essential for Businesses?

Web scraping is essential for businesses because it allows them to collect valuable data from various online sources, such as e-commerce sites, social media, news outlets, and more.

With this data, businesses can track competitor prices, monitor customer sentiment, analyze market trends, and gather insights for decision-making.

Scraping large amounts of data manually would be time-consuming and error-prone, but automation through web scraping makes the process faster, more accurate, and scalable.

By gathering up-to-date, structured data, businesses can stay ahead of the competition, optimize their strategies, and respond quickly to changes in the market.

How Automation and AI Tools Enhance Web Scraping

1. Speeds Up Data Collection

Instead of manually sifting through websites for hours, automation tools let you gather vast amounts of data in a fraction of the time. AI-powered tools can extract everything you need from multiple websites simultaneously, so you get fresh data quickly and efficiently.

2. Handles Complex Websites with Ease

Some websites are tricky, with dynamic content or complex layouts. AI tools can analyze and navigate these websites, pulling the data even when things change or load dynamically, something regular scrapers can’t handle as well.

3. Accuracy and Precision

With AI tools, data extraction is much more accurate. The technology is smart enough to filter out irrelevant info and focus on what matters, reducing errors and saving you time cleaning up data afterward.

4. Scales Effortlessly

The real power of automation is scalability. Whether you need to scrape 10 pages or 10,000, AI tools handle large volumes of data without slowing down. As your data needs grow, the tools grow with you.

5. No Need for Technical Expertise

Many AI-powered web scraping tools are now user-friendly, with no-code solutions that allow even non-technical users to set up and run scraping tasks. This opens up opportunities for businesses without a dedicated technical team to still harness the power of web scraping.

6. Smart Data Interpretation

AI doesn’t just pull data; it understands it. Whether it’s extracting key-value pairs, interpreting sentiment from social media posts, or identifying trends, AI can process and structure raw data in ways that make it actionable for your business.

7. Reduces Costs in the Long Run

While setting up AI tools may require some initial investment, the long-term savings are undeniable. The automation reduces the need for manual work and the associated labor costs. Plus, the efficiency of AI tools ensures you can get more done with fewer resources.

8. Adapts to Website Changes

Websites are constantly evolving, and scraping scripts can often break when a site changes. AI tools are designed to adapt to changes, ensuring that your data collection remains uninterrupted even as websites update their layouts or content structures.

9. Customizable and Flexible

With AI-powered tools, you can customize the scraping process to suit your specific needs. Whether you’re interested in collecting data from social media platforms, e-commerce sites, or news articles, AI lets you tailor the scraping to match your business goals.

10. Improved Decision-Making

The ability to collect real-time, relevant data from multiple sources gives businesses the insights they need to make smarter decisions faster. Whether it’s adjusting pricing strategies, responding to customer feedback, or analyzing competitors, AI-powered web scraping ensures you’re always in the know.

Top Web Scraping Tools: Key Features, Benefits and Limitations

1. Gumloop: Simple, No-Code Automation

Gumloop is a no-code platform that allows users to automate basic web scraping tasks easily. It’s designed for those who want a straightforward solution for simple data extraction.

Key Features:

- Drag-and-drop interface for ease of use.

- No coding required to set up workflows.

Best Use Cases:

- Ideal for small-scale scraping tasks, especially for non-technical users who need to pull data without coding knowledge.

- Suitable for simple data extraction like news articles, social media posts, or product listings.

2. AgentQL: Natural Language Queries for Data Extraction

AgentQL uses natural language to query websites, making it easy for users to ask questions and get structured data. It’s perfect for those looking for an intuitive way to interact with web data.

Key Features:

- Allows users to interact with websites using plain English.

- Focuses on querying websites with simple, conversational language.

Best Use Cases:

- Best suited for those who need quick, simple data extraction without technical jargon.

- Great for smaller projects where complex data extraction (like PDFs) isn’t needed.

3. ScrapeGraphAI: AI-Powered Web Scraping

ScrapeGraphAI uses artificial intelligence to scrape web data intelligently, adapting as it collects more information. It’s designed for businesses looking to add some level of AI into their data extraction process.

Key Features:

- AI-driven scraping that learns and adapts to the data it encounters.

- Designed to handle dynamic websites with ever-changing content.

Best Use Cases:

- Ideal for businesses that want to scrape data from websites that change often, like news outlets or e-commerce sites.

- Great for smaller-scale projects with less structured data.

4. Landing.ai: Visual AI for Document Processing

Landing.ai focuses on interpreting and processing complex visual elements within documents, like tables and charts, especially in PDFs. It’s perfect for businesses that need to extract data from visual-heavy content.

Key Features:

- Specializes in parsing visual content such as charts, tables, and images in PDFs.

- Outputs structured data in Markdown or JSON formats.

Best Use Cases:

- Perfect for businesses that need to extract data from architectural plans, reports, or documents rich in visuals.

- Ideal for industries like real estate, finance, and engineering where documents are more than just text.

5. LlamaIndex: Modular Document Parsing

LlamaIndex offers flexible document parsing, extraction, and indexing features, providing businesses with a robust tool for handling diverse data sources.

Key Features:

- Modular setup for easy customization of scraping workflows.

- Allows indexing of data for easier retrieval and processing.

Best Use Cases:

- Ideal for businesses that need flexibility in managing and extracting data from large document collections.

- Works well for internal or research-focused tasks where customization is key.

6. PyMuPDF: Fast, Efficient PDF Text Extraction

PyMuPDF is a Python-based library known for its speed and efficiency in extracting text and images from PDFs. It’s a go-to tool for anyone needing quick data extraction from PDFs.

Key Features:

- High-speed extraction of text, images, and structured data from PDFs.

- Supports large volumes of documents.

Best Use Cases:

- Perfect for businesses needing to extract raw content from large volumes of PDF documents quickly.

- Best used in data-heavy industries, like legal, finance, or research, where PDFs are frequently used.

7. OpenAI (GPT Models): Advanced Data Interpretation

OpenAI’s GPT models provide powerful data interpretation and extraction capabilities, making them an excellent choice for businesses needing deep semantic understanding of web content.

Key Features:

- Uses advanced natural language models to extract meaningful insights from unstructured text.

- Excellent at semantic filtering and identifying key points within large volumes of data.

Best Use Cases:

- Ideal for businesses dealing with complex, unstructured text that requires understanding and extraction of key information.

- Works best in industries like marketing, finance, and research, where precise data interpretation is crucial.

8. Google Gemini 1.5 (Pro/Flash): The All-Rounder

Google Gemini 1.5 is a cutting-edge AI tool that combines powerful reasoning abilities with a large context window, making it highly efficient for large-scale web scraping tasks.

Key Features:

- Large context window capable of processing vast amounts of data in fewer calls.

- Advanced reasoning capabilities for improved data extraction accuracy.

Best Use Cases:

- Best for large-scale data extraction projects that require both speed and accuracy.

- Ideal for businesses in need of a reliable all-rounder for diverse data sources.

Choosing the Best Tool for Your Web Scraping Needs

1. For Basic Data Extraction: Gumloop

If you’re just starting with web scraping or need to automate basic data extraction without diving into complex coding, Gumloop is a fantastic option. This no-code platform allows you to quickly set up web scraping workflows using a drag-and-drop interface.

It’s ideal for non-technical users who need a simple solution to gather data without the hassle of learning programming languages or setting up intricate scripts.

- No coding required: The drag-and-drop interface makes it accessible to everyone, even those with no technical background.

- Quick setup: You can create and run scraping tasks in a matter of minutes.

- User-friendly: Designed with beginners in mind, it makes web scraping easy for non-technical users.

- Cost-effective: Ideal for businesses on a budget, especially for small-scale scraping needs.

- Perfect for simple data collection: Great for extracting text-based information like news, product listings, or social media content.

2. For Complex Data and Visual Documents: Landing.ai and PyMuPDF

If your needs go beyond simple text scraping and involve complex documents, Landing.ai and PyMuPDF are great choices. These tools excel at handling intricate data extraction tasks, especially when your data is spread across PDFs, charts, or documents with heavy visual content.

Landing.ai

Landing.ai is designed to process documents that are visually complex, such as PDFs containing tables, charts, or images. It excels at understanding and structuring these elements, making it ideal for industries that rely on visual data.

- Visual AI: Capable of interpreting complex documents with charts, tables, and images.

- Structured Data Outputs: Converts visual content into structured formats like Markdown or JSON.

- Ideal for Visual Industries: Perfect for real estate, architecture, and finance where documents are often rich in non-text content.

- Advanced Layout Parsing: Handles multi-page documents and complex layouts with ease.

PyMuPDF

When dealing with large numbers of PDFs and needing fast, efficient text extraction, PyMuPDF is your go-to tool. It works well for businesses that need to extract large volumes of text quickly from structured documents like research papers, contracts, or legal documents.

- Fast and Efficient: Extracts large volumes of text quickly from PDFs.

- Handles High-Volume PDFs: Great for businesses with large collections of PDF documents that need quick processing.

- Free and Open-Source: Ideal for businesses on a budget, as it’s a powerful and cost-effective solution for text extraction.

- Python Integration: Easy to integrate with existing workflows for more complex applications.

3. For Advanced, AI-Driven Extraction: Google Gemini and OpenAI GPT Models

When you need advanced AI-powered extraction for complex, unstructured data, Google Gemini and OpenAI’s GPT models offer exceptional capabilities. These tools provide semantic understanding and deep insights, making them perfect for large-scale, complex web scraping projects that require more than just raw data collection.

Google Gemini

Google’s Gemini 1.5 (Pro/Flash) offers the best combination of speed, accuracy, and reasoning capabilities. It’s highly efficient at processing large datasets with complex structures, handling not just raw scraping but also deep content interpretation.

- Large Context Window: Efficiently processes larger chunks of data in fewer requests.

- Highly Accurate: Known for excellent performance in extracting structured data from unstructured text.

- Cost-effective: Better suited for large datasets with its scalable processing and reduced costs.

- Strong Reasoning: Can understand and extract context, making it ideal for businesses needing in-depth insights.

OpenAI GPT Models

OpenAI’s GPT models provide unmatched natural language processing (NLP) power, making them perfect for businesses needing to extract meaningful data from complex and unstructured content. These models excel in interpreting large amounts of text, making them highly effective for semantic filtering and key-value pair extraction.

- Deep Semantic Understanding: Perfect for businesses that require nuanced understanding of data.

- Flexibility: Can be tailored for a variety of industries, from finance to marketing, for extracting valuable insights.

- Accurate Key-Value Extraction: Excels at identifying and extracting key-value pairs from unstructured documents.

- Customizable: Easily adaptable to different data extraction needs through prompt tuning.

What Are the Best Practices for Web Scraping?

1. Respect Robots.txt and Terms of Service

Before you start scraping a website, always check its robots.txt file and its terms of service. Together they set out what you may scrape and what is off-limits, so you don’t accidentally breach any rules. Ignoring them can lead to legal trouble or to your IP being blocked.

- Robots.txt: Indicates which parts of the website automated clients may access and which they may not.

- Terms of Service: Outlines the legal boundaries for data extraction from the website.

- Compliance: Helps you avoid any legal issues or potential penalties.
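
A robots.txt check can be automated with Python's standard `urllib.robotparser` module. The sketch below parses a hypothetical robots.txt body inline so that it runs without network access; the rules, the URLs, and the `example-scraper` user agent are assumptions for illustration only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real check would load the site's own file,
# for example with RobotFileParser.set_url(...) followed by read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /checkout/
"""


def build_parser(robots_txt):
    """Create a parser from the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser, url, user_agent="example-scraper"):
    """Return True if the rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


robots = build_parser(ROBOTS_TXT)
for url in ("https://example.com/products/lamp", "https://example.com/private/report"):
    print(url, "allowed" if is_allowed(robots, url) else "disallowed")
```

Passing this check does not replace reading the terms of service, which robots.txt does not encode.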

2. Implement Rate Limiting

Web scraping can overwhelm a website’s server if too many requests are made in a short time. To avoid this, implement rate limiting, which means adding delays between your requests. This way, you reduce the chances of getting your IP blocked and ensure you’re not disrupting the website’s performance.

- Request Delays: Set a time delay between requests to prevent overloading servers.

- Lower Block Rates: Pacing requests like a real user reduces the chance of being flagged as a bot.

- Sustainability: Long-term scraping efforts will remain stable and reliable.
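
One simple way to add delays between requests is sketched below. The `fetch_politely` helper and the `fake_fetch` stand-in are hypothetical names introduced for this example; in practice `fetch` would be a real HTTP call, and a suitable delay depends on the target site.

```python
import time


def fetch_politely(urls, fetch, delay_seconds=1.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing delay_seconds between requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # wait before every request except the first
        results.append(fetch(url))
    return results


# Stand-in for a real HTTP call so the sketch runs without network access.
def fake_fetch(url):
    return f"<html>content of {url}</html>"


pages = fetch_politely(
    ["https://example.com/page/1", "https://example.com/page/2"],
    fake_fetch,
    delay_seconds=0.1,
)
print(len(pages), "pages fetched")
```

The `sleep` parameter is injectable only so the pacing logic can be exercised in tests without actually waiting.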

3. Use Rotating IPs

If you’re scraping a large volume of data from the same website, your IP might get flagged or blocked. To avoid this, use rotating IPs. This allows your scraper to switch between different IP addresses, making it harder for websites to detect and block your scraping activities.

- IP Rotation: Distribute your requests across multiple IPs to reduce detection.

- Avoid Detection: Prevent your IP from being flagged or restricted by the website.

- Scalability: Helps in handling large-scale scraping tasks without interruption.
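
The rotation itself can be as simple as cycling through a pool, as in the sketch below. The proxy addresses are placeholders from a documentation-only IP range and `assign_proxies` is a hypothetical helper. How a proxy is then passed to the HTTP client depends on the library you use.

```python
from itertools import cycle

# Hypothetical proxy addresses taken from a documentation-only IP range.
PROXY_POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def assign_proxies(urls, proxy_pool):
    """Pair each URL with the next proxy in the pool, wrapping around at the end."""
    if not proxy_pool:
        raise ValueError("proxy_pool must contain at least one proxy")
    rotation = cycle(proxy_pool)
    return [(url, next(rotation)) for url in urls]


urls = [f"https://example.com/page/{n}" for n in range(1, 5)]
for url, proxy in assign_proxies(urls, PROXY_POOL):
    print(url, "via", proxy)
```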

4. Ensure Data Accuracy

When scraping data, especially from unstructured content like blogs or social media, it’s important to validate and clean your data. Automated tools that rely on AI or machine learning can still introduce errors or extract incorrect data. You should always double-check and clean your scraped data to ensure it’s accurate and useful for your business.

- Data Validation: Cross-check extracted data against known sources to ensure it’s correct.

- Error Handling: Use built-in functions to catch and fix data anomalies.

- Data Cleaning: Remove duplicates, fix formatting, and ensure data is structured properly for analysis.

Use Cases for Web Scraping in Different Industries

1. E-commerce and Retail

In the e-commerce and retail industry, web scraping plays a key role in staying competitive. By scraping competitor prices, product listings, and customer reviews, businesses can adjust their strategies in real time. It’s a great way to track market trends, monitor customer sentiment, and ensure products are priced correctly.

- Price Monitoring: Keep track of competitor prices to remain competitive.

- Product Listings: Extract details about product availability, specifications, and descriptions.

- Competitor Analysis: Track competitors’ offerings and identify gaps in your product range.

- Customer Sentiment: Scrape reviews to understand what customers like or dislike about products.

2. Real Estate

Real estate companies use web scraping to collect property listings, monitor market trends, and stay updated on local zoning laws. By scraping data on property prices, square footage, and neighborhood trends, they can gain valuable insights into the market, helping them make informed decisions.

- Property Listings: Collect data on available properties, prices, and features.

- Local Zoning Regulations: Stay updated on local laws, zoning changes, and land use restrictions.

- Demographic Data: Understand the demographic trends of specific areas to target potential buyers.

- Market Trends: Track property price trends and rental yields for better investment decisions.

3. Finance and Investment

In the finance and investment sectors, web scraping is used to monitor market trends, extract financial reports, and analyze news articles for stock market insights. By gathering real-time financial data and news, investors can make better-informed decisions and stay ahead of market movements.

- Market Trends: Track stock prices, bonds, commodities, and forex rates.

- News Analysis: Scrape financial news sites to stay updated on economic events.

- Financial Reports: Extract key financial data like earnings reports, balance sheets, and income statements.

- Investment Opportunities: Identify trends and investment opportunities by scraping data from multiple financial sources.

4. Market Research

Web scraping is a powerful tool for market researchers who need to gather large volumes of data from various online sources. By scraping customer reviews and competitor data and running sentiment analysis on the results, businesses can gain insights into customer behavior and market demand.

- Customer Reviews: Collect and analyze feedback from customers across different platforms.

- Sentiment Analysis: Scrape social media and review sites to understand customer feelings about brands or products.

- Competitor Data: Gather competitor pricing, marketing strategies, and product offerings.

- Market Trends: Monitor changes in customer preferences, product demand, and industry shifts.
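
Reviews gathered from several platforms usually need deduplication and basic validation before any analysis. The sketch below shows one possible cleaning pass. The record layout (`text` and `rating` keys) and the 1-to-5 rating scale are assumptions for illustration, not a fixed format.

```python
def clean_reviews(raw_reviews):
    """Normalize whitespace, drop invalid ratings, and remove duplicate reviews."""
    seen = set()
    cleaned = []
    for review in raw_reviews:
        text = " ".join(str(review.get("text", "")).split())  # collapse whitespace
        try:
            rating = int(review.get("rating"))
        except (TypeError, ValueError):
            continue  # rating missing or not a number
        if not text or not 1 <= rating <= 5:
            continue  # empty review or rating outside the assumed 1-5 scale
        key = text.lower()
        if key in seen:
            continue  # same review already collected from another source
        seen.add(key)
        cleaned.append({"text": text, "rating": rating})
    return cleaned


raw = [
    {"text": "Great  lamp,\n very bright", "rating": "5"},
    {"text": "great lamp, very bright", "rating": 5},   # duplicate of the first
    {"text": "Stopped working", "rating": "11"},        # rating out of range
    {"text": "", "rating": 3},                          # empty text
    {"text": "Decent value", "rating": None},           # missing rating
]
print(clean_reviews(raw))
```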

Kanerika’s Intelligent Web Scraping Services for Faster Data Insights

Kanerika has evaluated several advanced tools and services for automating data extraction and has identified the most effective solutions for optimizing web scraping processes. By leveraging powerful APIs and AI-driven LLMs like Gemini 2.5, we can significantly enhance the efficiency and scalability of data extraction tasks.

With our capabilities, we can automate up to 88% of the data extraction process from the web, drastically reducing manual effort, time, and costs. We ensure a streamlined process that enables businesses to gather data faster, more accurately, and at a lower operational cost, while maintaining high scalability for future growth.

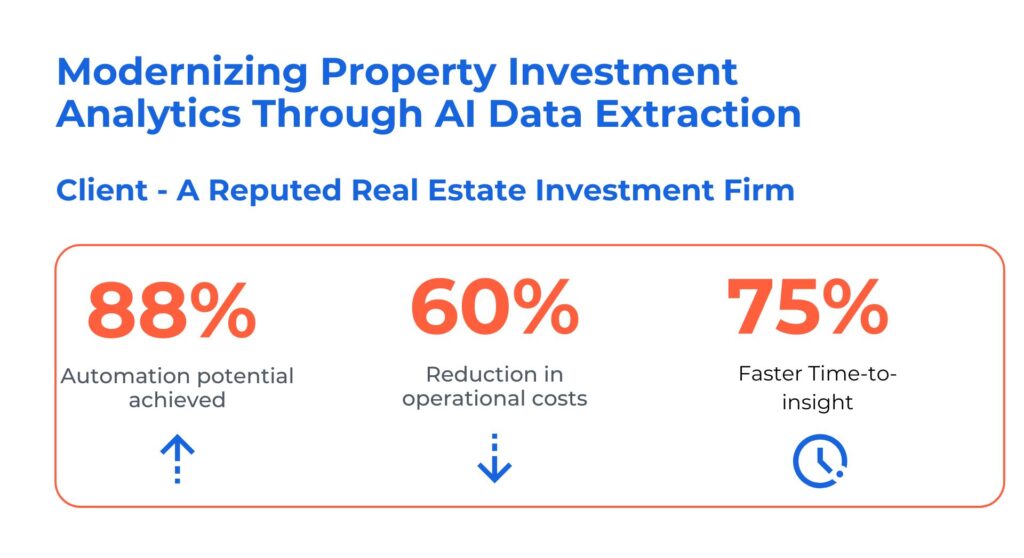

Case Study: Modernizing Property Investment Analytics Through AI-Powered Data Extraction

A reputed real estate investment firm wanted to overcome inefficient data collection and gain faster, more reliable insights to stay competitive in fast-moving markets. Kanerika helped achieve these goals with the following solutions:

- Leveraged APIs and scalable web scraping to unify raw data collection and ensure consistent access across all websites.

- Deployed PDF extraction tools to process both text-heavy and visually complex documents, improving structured output accuracy.

- Implemented Google Gemini LLMs for semantic filtering and precise key-value pair extraction, drastically reducing manual effort.

Results Achieved

Frequently Asked Questions

What is web scraping?

Web scraping is the automated process of extracting data from websites. It involves using a tool or script to access and retrieve information from webpages, which is then structured for analysis or storage. This process is widely used for data collection, market research, and monitoring.

Is scraping the web illegal?

Web scraping is not inherently illegal, but it can violate a website’s terms of service or intellectual property rights. It’s important to check a site’s robots.txt file and review legal agreements to ensure compliance. Some sites explicitly prohibit scraping, which can lead to legal consequences.

What is an example of web scraping?

An example of web scraping is extracting product prices from various e-commerce websites to compare prices across competitors. The data is automatically retrieved and structured into a format, like a spreadsheet, for easy analysis, saving time compared to manual data collection.
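
As a rough sketch of the comparison step, the code below parses scraped price strings and picks the lowest one. The store names and price strings are made up, and the parser assumes a single currency with a dot as the decimal separator.

```python
import re
from decimal import Decimal, InvalidOperation


def parse_price(price_text):
    """Convert a scraped price such as '$1,299.00' to a Decimal, or None if unparseable."""
    digits = re.sub(r"[^\d.]", "", price_text)  # drop currency symbols, commas, spaces
    try:
        return Decimal(digits)
    except InvalidOperation:
        return None


def cheapest_offer(offers):
    """Return (store, price) for the lowest parseable price, or None if there is none."""
    priced = [(store, parse_price(text)) for store, text in offers.items()]
    priced = [(store, price) for store, price in priced if price is not None]
    return min(priced, key=lambda item: item[1]) if priced else None


# Hypothetical scraped values keyed by store name.
scraped = {"store-a": "$1,299.00", "store-b": "$1,249.50", "store-c": "Out of stock"}
print(cheapest_offer(scraped))
```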

Is Google a web scraper?

Google is not a traditional web scraper but uses a process known as crawling. It scans websites using bots (Googlebot) to index content for its search engine. While both web scraping and crawling retrieve data, crawling is focused on indexing, not extracting it for external use.

Can ChatGPT scrape websites?

Not on its own. ChatGPT is a language model that processes and generates text based on inputs, while web scraping requires tools or scripts designed to access and extract data from websites. It can, however, help write scraping scripts or structure text that a scraper has already collected.

Does YouTube allow web scraping?

YouTube’s terms of service prohibit scraping its website, as it violates their rules on data access and usage. Instead, YouTube provides an official API for developers to access data in a controlled, legal, and structured way, ensuring compliance with their policies.

Is Python a web scraping tool?

Python itself is not a web scraping tool, but it is a popular programming language used for building web scraping scripts. Libraries like BeautifulSoup, Scrapy, and Selenium are used in Python to scrape and process data from websites efficiently.

Is web scraping an API?

No, web scraping is not an API. Web scraping refers to the technique of extracting data from websites, while an API (Application Programming Interface) provides a structured method for accessing data from services, often with built-in permissions and rules, unlike scraping which can bypass these restrictions.

Can we use AI to scrape websites?

Yes, AI can enhance web scraping by intelligently identifying, extracting, and structuring data. AI-powered tools use machine learning models to process unstructured data from websites and adapt to dynamic content, improving accuracy and efficiency compared to traditional scraping methods.

Will AI replace web scrapers?

AI is unlikely to fully replace web scrapers but will enhance them. AI can optimize data extraction by improving accuracy, handling unstructured content, and automating complex tasks. However, AI still requires web scraping frameworks to collect the data, making them complementary rather than interchangeable.