Despite enterprises pouring $30-40 billion into artificial intelligence initiatives, 95% of AI pilots fail to deliver measurable return on investment, with 42% of companies abandoning most AI projects in 2025, up from 17% in 2024. The solution isn’t better machine learning models—it’s a comprehensive enterprise AI adoption strategy combining verticalized AI solutions, business process redesign, data governance frameworks, and organizational change management.

This blog outlines a nine-phase AI implementation roadmap (Phase 0 through Phase 8) that transforms AI from experimental pilots into core business capabilities delivering sustainable competitive advantage and measurable business outcomes.

Key Takeaways

- Process integration over technology adoption: Successful AI requires redesigning workflows, not just adding tools to existing processes

- Verticalized solutions deliver higher ROI: Industry-specific AI models outperform generic implementations by addressing domain-specific challenges

- Governance frameworks prevent costly failures: Establishing ethical guidelines, compliance controls, and risk management from day one reduces project abandonment rates

- Data readiness is non-negotiable: Unified data architecture and quality standards are prerequisites for AI success, not afterthoughts

- Bottom-up adoption scales faster: Organizations leveraging power users who experimented with tools like ChatGPT achieve faster scaling than top-down approaches

The AI Implementation Crisis: Why Pilots Rarely Become Production

The artificial intelligence revolution promised to transform enterprise operations, yet a stark reality has emerged from corporate boardrooms across America. MIT’s comprehensive study of over 300 public AI deployments found that 95% of enterprise generative AI pilots deliver no measurable profit and loss impact. This represents a staggering waste of the $30-40 billion invested in enterprise AI initiatives during 2024-2025.

The problem extends beyond individual project failures. Recent IDC research in partnership with Lenovo found that 88% of AI proof-of-concepts never reach production deployment, while the share of companies abandoning most artificial intelligence projects jumped from 17% in 2024 to 42% in 2025. This represents a fundamental disconnect between AI’s theoretical potential and its practical business application in enterprise environments.

Research from leading analyst firms including Gartner and Forrester confirms these trends, with Gartner predicting that over 40% of agentic AI projects will be canceled by the end of 2027 due to escalating costs, unclear business value propositions, or inadequate risk controls. More than half of IT leaders admit their AI projects are not yet profitable, meaning for every AI success story, there is at least one initiative that has yet to deliver return on investment.

What separates the 5% of successful AI implementations from the overwhelming majority of failures? The answer lies not in superior algorithms or larger training datasets, but in how organizations approach AI adoption: holistically, treating it as an operating system for business transformation rather than a bolt-on technology solution.

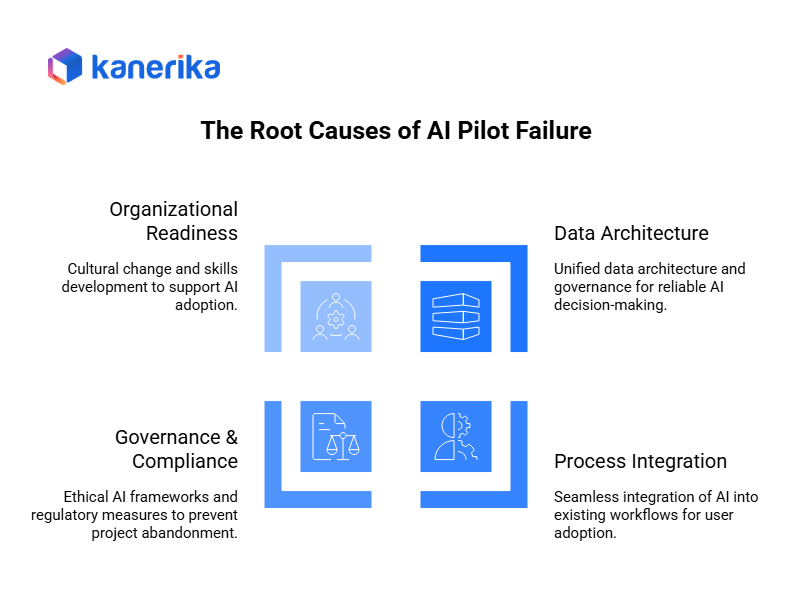

The Root Causes of AI Pilot Failures

What are the main reasons AI pilots fail in enterprises?

AI pilot failures stem from four fundamental issues that organizations consistently underestimate during their digital transformation journey:

1. Data Architecture Deficiencies

Most enterprises attempt AI implementation with fragmented, poor-quality data scattered across legacy systems. Without unified data architecture and comprehensive data governance frameworks, machine learning models cannot access the clean, integrated datasets required for reliable decision-making. Organizations frequently underestimate the data preparation work required, treating it as a technical task rather than a strategic foundation for AI success.

According to MIT research, data scientists spend 80% of their time on data preparation and cleaning, indicating that data readiness is the primary bottleneck for successful AI deployment in enterprise environments. Kanerika’s data governance services address this challenge by establishing unified data foundations before AI development begins.

2. Process Disconnection and Integration Gaps

The fundamental issue is that generic artificial intelligence tools like ChatGPT don’t adapt to enterprise workflows unless given the right context, governance, and systems integration. Companies bolt AI onto existing legacy business processes without reimagining how work actually gets done. This results in AI recommendations that don’t align with operational realities, leading to user resistance and project abandonment.

Process integration challenges include workflow redesign, change management, user training, and ensuring AI outputs align with existing decision-making frameworks and compliance requirements.

3. Governance and Compliance Blind Spots

Top reasons for AI project abandonment include escalating costs, data privacy concerns, and missing operational controls. Organizations rush into AI implementation without establishing ethical AI frameworks, regulatory compliance measures, or comprehensive risk management protocols. This creates vulnerability to bias, privacy violations, and regulatory penalties that can halt projects entirely.

Gartner research indicates that at least 30% of generative AI projects will be abandoned after proof of concept by the end of 2025, due to poor data quality, inadequate risk controls, escalating costs or unclear business value. AI governance frameworks must address model explainability, bias detection, regulatory compliance, data privacy, and ongoing monitoring to ensure responsible AI deployment at enterprise scale. Kanerika’s AI governance consulting helps organizations establish these critical frameworks from day one.

4. Organizational Readiness Misalignment

According to MIT CISR research, the high number of AI POCs but low conversion to production indicates the low level of organizational readiness in terms of data maturity, business processes and IT infrastructure capability. MIT’s study of 771 companies found that companies in the first two stages of AI maturity had financial performance below their industry’s average, while those in later stages performed above average.

Success requires cultural change, skills development, upskilling programs, and cross-functional collaboration that most organizations haven’t prioritized alongside technology deployment. Organizational change management for AI adoption includes executive sponsorship, user training, change communication, and building AI literacy across business functions to ensure sustainable adoption. Kanerika’s comprehensive AI transformation services address these organizational readiness challenges systematically.

| Common AI Pilot Failure Patterns | Impact on Business Operations |
| --- | --- |
| Fragmented data sources and poor data quality | Inconsistent AI model outputs, reduced prediction accuracy |
| Process disconnection and workflow misalignment | User resistance, operational disruption, low adoption rates |
| Missing AI governance frameworks and compliance controls | Regulatory risks, ethical concerns, audit failures |
| Inadequate change management and organizational readiness | Low user adoption, cultural resistance, project abandonment |
| Unrealistic ROI expectations and unclear success metrics | Budget cuts, executive skepticism, project cancellation |

Why Enterprise-Grade AI Roadmaps Create Sustainable Value

What is an enterprise AI implementation roadmap?

An enterprise AI implementation roadmap is a comprehensive strategic plan that transforms scattered AI experiments into coordinated business capabilities. Unlike one-off pilots, strategic AI roadmaps align artificial intelligence initiatives with business objectives, establish data governance standards, and create scalable frameworks for long-term digital transformation success.

How does an AI roadmap differ from individual AI pilots?

AI roadmaps address four critical dimensions simultaneously: technical infrastructure development, organizational readiness assessment, governance frameworks implementation, and business process optimization. This holistic approach ensures AI becomes embedded in daily business operations rather than remaining an experimental add-on technology.

The roadmap methodology also enables better resource allocation and enterprise risk management. Instead of pursuing multiple disconnected AI pilots, organizations can prioritize high-impact use cases, establish shared data infrastructure, and build institutional AI capabilities that compound over time, creating sustainable competitive advantages.

Successful AI transformation requires coordinated efforts across data engineering, machine learning operations, change management, and business process reengineering to achieve measurable return on investment and scalable business value.

Kanerika’s Process-First, Verticalized AI Philosophy

Kanerika’s enterprise AI consulting approach emphasizes that AI success requires more than sophisticated algorithms—it demands deep understanding of industry-specific challenges, business process optimization, and organizational transformation dynamics. As a Microsoft Data & AI Solutions Partner and Databricks certified partner, Kanerika brings proven expertise in enterprise AI implementation across manufacturing, retail, healthcare, finance, and logistics industries.

According to Gartner predictions, by 2027, more than 50% of the GenAI models that enterprises use will be specific to either an industry or business function — up from approximately 1% in 2023. Additionally, MIT research shows that enterprises in stages 3 and 4 of AI maturity had financial performance well above the industry average. This philosophy manifests in several key principles backed by years of implementation experience:

1. Verticalized AI Solutions

Rather than deploying generic AI tools, Kanerika develops industry-specific AI solutions tailored to domain expertise and regulatory requirements. Manufacturing clients receive predictive maintenance models trained on industrial sensor data and equipment specifications. Retail organizations get demand forecasting algorithms optimized for seasonal patterns, promotional impacts, and supply chain variables.

Healthcare organizations benefit from clinical decision support systems that comply with HIPAA requirements. Financial services clients receive risk assessment models that meet regulatory compliance standards, including SOX, Basel III, and other financial regulations. Kanerika’s industry expertise spans multiple verticals with deep domain knowledge.

2. Process Redesign Integration

Every AI implementation includes comprehensive workflow analysis and business process optimization. Teams don’t simply add AI tools to existing procedures; they reimagine how work gets done when intelligent automation becomes available. This approach ensures AI outputs integrate seamlessly with human decision-making processes and existing enterprise software systems.

Process integration includes workflow mapping, stakeholder analysis, change impact assessment, and user experience design to maximize adoption rates and business value realization.

3. Data-First Architecture

Kanerika’s data integration services establish unified data foundations before AI development begins. This includes data governance frameworks, data quality standards, master data management, and access controls that ensure machine learning models operate on reliable, comprehensive datasets from day one.

Data architecture services include data lake design, data warehouse modernization, real-time data pipelines, and cloud data platform implementation using Microsoft Azure, AWS, and Google Cloud technologies. Kanerika’s Microsoft partnership enables access to cutting-edge tools like Microsoft Fabric, Power BI, and Azure AI services for comprehensive data solutions.

4. Governance and Compliance Framework

AI governance isn’t an afterthought; it’s built into every solution from conception through deployment and ongoing operations. This includes ethical AI guidelines, regulatory compliance measures, audit trails, bias detection systems, and ongoing monitoring frameworks that maintain trust and reduce risk throughout the AI lifecycle.

Governance frameworks address model explainability, fairness testing, privacy protection, security controls, and regulatory compliance specific to each industry vertical and geographic region.

| Governance Component | Implementation Level | Regulatory Focus | Monitoring Frequency |
| --- | --- | --- | --- |
| Ethical AI Guidelines | Organization-wide policies | GDPR, CCPA, industry standards | Quarterly review |
| Bias Detection & Mitigation | Model-level testing | Equal opportunity, fairness laws | Continuous monitoring |
| Model Explainability | Use case specific | Financial services, healthcare | Per model deployment |
| Data Privacy Controls | Data pipeline level | HIPAA, SOX, regional privacy laws | Real-time monitoring |
| Audit Trail Management | System-wide logging | SOX, Basel III, industry audits | Continuous logging |
| Risk Assessment Framework | Enterprise governance | Industry-specific regulations | Monthly assessment |
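
To make the "Bias Detection & Mitigation" row more concrete, the sketch below compares favorable-outcome rates across groups. It computes a demographic parity difference, which is only one of several possible fairness metrics. The function name, the sample data, and the 0.1 alert threshold are illustrative assumptions, not a regulatory standard or a description of Kanerika's tooling.

```python
from collections import defaultdict


def demographic_parity_difference(predictions, groups):
    """Return (gap, rates): the spread in positive-prediction rate across groups.

    predictions: iterable of 0/1 model decisions (1 = favorable outcome).
    groups: iterable of group labels aligned with predictions.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical example: approval decisions for two applicant groups.
preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
gap, rates = demographic_parity_difference(preds, groups)

ALERT_THRESHOLD = 0.1  # assumed policy value chosen by the governance team
if gap > ALERT_THRESHOLD:
    print(f"Selection-rate gap {gap:.2f} exceeds threshold; flag model for review")
```

A check like this could run on every scoring batch to support the continuous monitoring cadence listed in the table, with flagged results feeding the audit trail.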

Phase-by-Phase AI Implementation Roadmap

This enterprise AI adoption framework gives organizations a structured approach for moving from experimental pilots to production-ready AI capabilities:

Phase 0: Strategy Alignment and Vision Setting

Duration: 2-4 weeks

Key Activities: Define AI vision, secure leadership sponsorship, establish success metrics

Deliverables: AI strategy document, stakeholder alignment, budget allocation

Begin with clear articulation of how artificial intelligence will support broader business objectives and digital transformation goals. This isn’t about technology capabilities—it’s about identifying specific business problems AI can solve profitably. Executive sponsorship during this phase determines whether subsequent phases receive adequate resources and organizational support for successful AI transformation.

Phase 1: AI Maturity Assessment and Infrastructure Readiness

Duration: 4-6 weeks

Key Activities: Data audit, infrastructure evaluation, skills assessment

Deliverables: Maturity assessment report, readiness gap analysis, remediation plan

Kanerika’s AI readiness assessment methodology evaluates five critical dimensions: data quality and accessibility, technical infrastructure capacity, organizational AI literacy, governance frameworks maturity, and regulatory compliance readiness. This comprehensive evaluation identifies prerequisite investments before AI development begins.

According to MIT research, enterprises today are making significant progress in their AI maturity, with the greatest financial impact seen in the progression from stage 2 (building pilots and capabilities) to stage 3 (developing scaled AI ways of working). The assessment reveals whether organizations need data consolidation, cloud infrastructure upgrades, skills development programs, or governance establishment before proceeding with AI pilots. Addressing these gaps early prevents costly rework and project delays later in the implementation process.
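
A readiness gap analysis of this kind can be sketched in a few lines. The example below scores the five dimensions named above against a target level and lists the shortfalls, largest first. The 1-5 scale, the scores, and the target level are hypothetical values for illustration, not part of any published assessment methodology.

```python
# Dimension names follow the five listed above; scores are made up.
TARGET_LEVEL = 3  # assumed minimum maturity before starting pilots

current_scores = {
    "data quality and accessibility": 2,
    "technical infrastructure capacity": 4,
    "organizational AI literacy": 2,
    "governance framework maturity": 1,
    "regulatory compliance readiness": 3,
}


def readiness_gaps(scores, target=TARGET_LEVEL):
    """Return (dimension, gap) pairs for dimensions below target, largest gap first."""
    gaps = {name: target - score for name, score in scores.items() if score < target}
    return sorted(gaps.items(), key=lambda item: item[1], reverse=True)


for dimension, gap in readiness_gaps(current_scores):
    print(f"{dimension}: {gap} level(s) below target")
```

The ordered list gives a rough starting point for the remediation plan: the dimensions with the largest gaps are the likeliest candidates for prerequisite investment.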

Phase 2: Data Foundation and Architecture Development

Duration: 6-12 weeks

Key Activities: Data consolidation, governance implementation, quality frameworks

Deliverables: Unified data platform, governance policies, quality standards

Establish the data foundation required for AI success. This includes consolidating data sources, implementing governance frameworks, and ensuring data quality standards. Without this foundation, AI models cannot deliver consistent, reliable results.

Data architecture work includes establishing data lakes or warehouses, implementing integration pipelines, and creating access controls that balance accessibility with security requirements.

| Data Foundation Components | Implementation Timeline | Key Technologies | Success Metrics |
| --- | --- | --- | --- |
| Data Lake/Warehouse Setup | 2-4 weeks | Azure Data Lake, Snowflake, Databricks | Data ingestion volume, query performance |
| Data Quality Framework | 3-6 weeks | Data quality tools, validation rules | Data accuracy %, completeness scores |
| Governance Policies | 4-8 weeks | Data catalogs, lineage tools | Policy compliance rate, audit readiness |
| Integration Pipelines | 2-6 weeks | ETL/ELT tools, APIs | Data freshness, processing speed |
| Access Controls | 1-3 weeks | IAM, RBAC systems | Security compliance, user access audit |
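
As a rough illustration of the "completeness scores" metric in the table, the sketch below measures how often each required field is populated and flags fields that fall under a quality standard. The field names, sample records, and the 0.9 threshold are assumptions made for the example; a production framework would typically rely on a dedicated data quality tool.

```python
def completeness_scores(records, required_fields):
    """Return the share of records with a non-empty value, per field."""
    if not records:
        return {field: 0.0 for field in required_fields}
    scores = {}
    for field in required_fields:
        filled = sum(1 for row in records if row.get(field) not in (None, ""))
        scores[field] = filled / len(records)
    return scores


# Hypothetical customer records consolidated from several source systems.
records = [
    {"customer_id": "C1", "email": "a@example.com", "region": "EMEA"},
    {"customer_id": "C2", "email": "", "region": "APAC"},
    {"customer_id": "C3", "email": None, "region": None},
    {"customer_id": "C4", "email": "d@example.com", "region": "AMER"},
]

scores = completeness_scores(records, ["customer_id", "email", "region"])
MIN_COMPLETENESS = 0.9  # assumed quality standard
failing = [field for field, score in scores.items() if score < MIN_COMPLETENESS]
print(scores)
print("Fields below standard:", failing)
```

Tracking these scores per source system over time shows whether consolidation work is actually improving the data that models will consume.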

Phase 3: Use Case Prioritization and Pilot Development

Duration: 8-12 weeks

Key Activities: Use case evaluation, pilot design, team formation

Deliverables: Prioritized use case list, pilot specifications, success metrics

Select 2-3 high-impact use cases with clear business value and technical feasibility. Successful organizations allow budget holders and domain managers to surface problems, vet tools, and lead rollouts rather than relying on centralized AI functions.

Prioritization considers business impact potential, technical complexity, data readiness, and organizational change requirements. Starting with achievable wins builds momentum and organizational confidence in AI capabilities.

| Use Case Evaluation Matrix (Complexity) | High Impact | Medium Impact | Low Impact |
| --- | --- | --- | --- |
| Low Complexity | Immediate pilot | Quick win candidate | Deprioritize |
| Medium Complexity | Priority pilot | Future phase | Evaluate alternatives |
| High Complexity | Strategic initiative | Future development | Avoid initially |
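
The evaluation matrix can be encoded as a simple lookup so that candidate use cases are triaged consistently. The recommendations below are copied from the matrix; the candidate use cases and their ratings are hypothetical.

```python
# Recommendations from the evaluation matrix, keyed by (complexity, impact).
MATRIX = {
    ("low", "high"): "Immediate pilot",
    ("low", "medium"): "Quick win candidate",
    ("low", "low"): "Deprioritize",
    ("medium", "high"): "Priority pilot",
    ("medium", "medium"): "Future phase",
    ("medium", "low"): "Evaluate alternatives",
    ("high", "high"): "Strategic initiative",
    ("high", "medium"): "Future development",
    ("high", "low"): "Avoid initially",
}


def recommend(complexity, impact):
    """Look up the matrix recommendation for a complexity/impact rating."""
    return MATRIX[(complexity.lower(), impact.lower())]


# Hypothetical candidates rated by domain managers: (name, complexity, impact).
candidates = [
    ("Invoice data extraction", "low", "high"),
    ("Demand forecasting", "medium", "high"),
    ("Autonomous pricing agent", "high", "low"),
]

for name, complexity, impact in candidates:
    print(f"{name}: {recommend(complexity, impact)}")
```

The ratings themselves remain a judgment call. The lookup only ensures that the same rating always leads to the same recommendation.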

Phase 4: Verticalized AI Development and Process Integration

Duration: 12-16 weeks

Key Activities: Model development, workflow redesign, integration testing

Deliverables: Custom AI solutions, redesigned processes, integration protocols

Develop industry-specific AI models that address domain expertise and regulatory requirements. This includes natural language processing for document processing, predictive analytics for operational optimization, and decision support systems for complex business scenarios.

Process integration ensures AI outputs align with existing workflows while enabling new capabilities. Teams learn how to incorporate AI recommendations into daily decision-making without disrupting proven operational practices. Kanerika’s business process automation services complement AI development by optimizing workflows for intelligent automation integration.

Phase 5: Deployment, Monitoring, and Feedback Integration

Duration: 4-8 weeks

Key Activities: Production deployment, user training, performance monitoring

Deliverables: Live AI systems, trained users, monitoring dashboards

Deploy AI solutions with comprehensive monitoring and feedback systems. Initial deployment includes extensive user training, change management support, and performance tracking to identify optimization opportunities quickly.

Feedback loops capture both technical performance metrics and user experience data, enabling rapid refinements that improve adoption rates and business impact.

Phase 6: ModelOps and Long-term Governance

Duration: Ongoing

Key Activities: Model monitoring, retraining, performance optimization

Deliverables: MLOps framework, governance policies, audit systems

Implement comprehensive model lifecycle management to ensure AI systems remain accurate, compliant, and valuable over time. This includes automated monitoring for model drift, scheduled retraining protocols, and governance reviews.

ModelOps capabilities prevent the degradation that causes many AI systems to lose effectiveness months after deployment. Regular auditing ensures models continue meeting ethical standards and regulatory requirements as business conditions evolve.
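
One common way to automate drift monitoring is the Population Stability Index (PSI), which compares the distribution of a feature or score at training time with what the model sees in production. The source does not name a specific drift metric, so PSI is an assumption here, as are the sample data and the 0.25 retraining threshold, which is a frequently cited rule of thumb rather than a standard.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two numeric samples; a higher PSI means a larger distribution shift."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical model scores: training baseline vs. shifted production data.
training_scores = [i / 100 for i in range(100)]
production_scores = [0.3 + i / 100 for i in range(100)]

RETRAIN_THRESHOLD = 0.25  # assumed value; tune per model and risk appetite
drift_score = population_stability_index(training_scores, production_scores)
if drift_score > RETRAIN_THRESHOLD:
    print(f"PSI {drift_score:.2f} exceeds threshold; schedule retraining review")
```

Running a check like this on a schedule turns "automated monitoring for model drift" into a concrete trigger for the retraining protocol and the governance review.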

Phase 7: Organizational Transformation and Culture Development

Duration: 6-12 months

Key Activities: Skills development, change management, governance maturation

Deliverables: AI-ready workforce, governance frameworks, cultural adoption

Transform organizational culture to embrace AI as a core business capability rather than experimental technology. This includes comprehensive training programs, updated job descriptions, and governance structures that support responsible AI adoption at scale.

Cultural transformation ensures AI capabilities are sustained and expanded over time rather than abandoned when initial champions leave the organization.

Phase 8: Scaling and Continuous Innovation

Duration: 12-24 months and ongoing

Key Activities: Cross-functional expansion, capability enhancement, strategic alignment

Deliverables: Enterprise AI platform, expanded use cases, competitive advantages

Scale successful pilots across departments, functions, and geographies while maintaining governance standards and quality controls. This phase transforms AI from isolated solutions into integrated business capabilities that create sustainable competitive advantages.

Continuous innovation includes staying current with technological advances, expanding use cases based on lessons learned, and maintaining alignment with evolving business strategies.

Real-World Success Patterns: Industry-Specific Applications

Case Study 1: Global Pharmaceutical Company – Unified Data Architecture for Business Intelligence

Context: A multinational pharmaceutical company employing nearly 25,000 people worldwide needed to integrate complex data sources across sales, finance, MIS, budgeting, and business operations to enable faster decision-making and innovation.

Problem: The pharmaceutical giant faced significant challenges with data fragmentation across applications and departments, causing:

- Slow response times for critical business decisions

- Inaccurate information due to siloed data systems

- Inability to leverage machine learning for business improvement

- Lack of self-service analytics capabilities for business users

- Delayed time-to-market for new medical solutions

Solution: Kanerika partnered with Microsoft to build an Enterprise Business Intelligence platform powered by Microsoft Fabric, implementing:

- Unified data architecture with lake-centric data mesh design

- Comprehensive Hadoop stack with data pipelines using Hortonworks, Hive, Sqoop, and Apache

- Self-service dashboards and reports using Power BI

- Machine learning-ready data foundation for advanced analytics

- Performance-tuned system to support growing data analytics needs

Similar enterprise transformations have been documented in Harvard Business School case studies, demonstrating the business value of unified data architectures in pharmaceutical companies.

Impact:

- 20x reduction in time to get answers – from hours/days to minutes

- Real-time informed decision-making enabled across all business functions

- Deployment of machine learning algorithms to accelerate innovation and growth

- Cohesive business view enabling faster identification of improvement opportunities

- Enhanced time-to-market for new pharmaceutical products and cost reduction initiatives

Case Study 2: Leading Israeli Skincare Company – AI-Powered Value Chain Transformation

Context: A prominent Israeli skincare company sought to implement artificial intelligence across its entire value chain to enhance operational efficiency, improve customer experiences, and build an AI-empowered workforce.

Problem: The skincare manufacturer encountered several operational challenges:

- Manual processes limiting efficiency across production and supply chain

- Inconsistent customer experience delivery across multiple channels

- Lack of data-driven insights for product development and marketing

- Limited workforce capabilities for AI adoption and utilization

- Fragmented systems preventing unified view of business operations

Solution: Kanerika implemented a comprehensive AI transformation strategy including:

- AI-powered production optimization for manufacturing efficiency

- Customer analytics and personalization engines for enhanced experience

- Supply chain intelligence for demand forecasting and inventory optimization

- Workforce training and AI literacy programs for sustainable adoption

- Integrated data platforms connecting production, sales, and customer data

Impact:

- Enhanced operational efficiency across the entire value chain

- Improved customer experience delivery through personalized interactions

- AI-empowered workforce capable of leveraging intelligent automation

- Data-driven decision making enabling faster response to market changes

- Competitive advantage through advanced analytics and process optimization

Case Study 3: Logistics Company – Invoice Processing Automation

Context: A logistics company needed to streamline the processing of diverse logistics invoices while maintaining secure communication with multiple trading partners across different file formats.

Problem:

- Manual processing of invoices from multiple trading partners

- Inconsistent file formats creating processing bottlenecks

- Security concerns with trading partner communications

- Time-consuming validation and approval workflows

- Lack of real-time visibility into invoice processing status

Solution:

- Automated invoice processing system handling multiple file formats

- Secure communication protocols with trading partners

- AI-powered document extraction and validation capabilities

- Workflow automation for approvals and exception handling

- Real-time dashboards for process monitoring and analytics

Impact:

- Streamlined processing of diverse invoice formats

- Efficient handling of multiple trading partner requirements

- Secure communication channels ensuring data protection

- Reduced processing time and improved accuracy

- Enhanced visibility into logistics operations and financial workflows

| Industry Application | Primary AI Technologies | Typical ROI Timeline | Key Success Factors |
| --- | --- | --- | --- |
| Pharmaceutical | Unified data architecture, machine learning, BI platforms | 6-18 months | Data integration, real-time analytics, regulatory compliance |
| Skincare/Manufacturing | AI-powered production optimization, customer analytics | 6-12 months | Value chain integration, workforce training, process automation |
| Logistics & Supply Chain | Invoice processing automation, document AI, workflow optimization | 3-9 months | Multi-format handling, secure communications, real-time visibility |
| Financial Services | Risk assessment, fraud detection, algorithmic trading | 6-18 months | Regulatory compliance, real-time processing, model explainability |
| Healthcare | Clinical decision support, operational efficiency, patient analytics | 12-24 months | Data privacy, regulatory approval, clinical workflow integration |

The Business Impact of Strategic AI Implementation

Organizations following comprehensive roadmaps achieve fundamentally different outcomes than those pursuing isolated pilots. Strategic implementation creates compound benefits that extend far beyond individual use cases.

1. Operational Efficiency and Cost Optimization

Well-implemented AI systems eliminate repetitive manual tasks, optimize resource allocation, and enhance decision-making speed and accuracy. According to McKinsey research, AI’s progress shows up not just across jobs but within them, at the task level, with demand falling steeply for some occupations like office support and customer service by 2030.

These improvements compound over time as teams develop expertise in leveraging AI capabilities effectively. Cost optimization occurs through reduced external services dependency, improved process efficiency, and better resource utilization. Additionally, organizations often eliminate expensive business process outsourcing contracts while achieving superior service levels internally through intelligent automation solutions.

2. Enhanced Decision-Making Velocity

AI-powered analytics provide real-time insights that enable faster, more informed strategic decisions. Teams can respond to market changes, operational issues, and customer needs with greater speed and precision.

Decision-making improvements cascade through organizations, enabling more agile responses to competitive pressures and market opportunities.

| Business Impact Area | Typical Improvements | Timeline to Results | Measurement Methods |
| --- | --- | --- | --- |
| Operational Efficiency | 20-40% cost reduction | 3-9 months | Process automation metrics, labor cost savings |
| Decision Speed | 50-70% faster decisions | 1-6 months | Time-to-decision tracking, response times |
| Customer Experience | 15-30% satisfaction increase | 6-12 months | NPS scores, customer retention rates |
| Revenue Growth | 10-25% revenue lift | 6-18 months | Sales attribution, conversion rates |
| Risk Reduction | 30-60% risk mitigation | 3-12 months | Compliance scores, incident reduction |
| Market Responsiveness | 40-80% faster adaptation | 3-9 months | Time-to-market, competitive positioning |

3. Competitive Advantage and Market Positioning

Organizations with mature AI capabilities can offer superior customer experiences, operational efficiency, and innovation velocity compared to competitors still struggling with pilot programs.

These advantages become self-reinforcing as AI-enabled organizations can invest efficiency gains into further innovation and market expansion.

4. Risk Reduction and Compliance Enhancement

Comprehensive governance frameworks and monitoring systems reduce regulatory risks while ensuring ethical AI deployment. As a result, organizations avoid the costly compliance failures and reputational damage that can follow from poorly managed AI initiatives.

Risk management capabilities enable organizations to pursue more ambitious AI applications with confidence in their ability to maintain control and accountability.

Getting Started: Your Path from Pilot Purgatory to Production Success

Enterprise AI transformation begins with an honest assessment of current organizational readiness across data infrastructure, business processes, governance frameworks, and company culture. Most organizations overestimate their AI readiness while underestimating the coordination required for sustainable artificial intelligence adoption and digital transformation.

1. Initial Assessment and Strategy Development

Begin with a comprehensive evaluation of data quality and accessibility, infrastructure capacity and scalability, organizational AI literacy levels, and governance framework maturity. This AI readiness assessment reveals prerequisite investments and helps prioritize early wins that build momentum and executive confidence.

AI strategy development includes identifying high-impact use cases with clear business value, establishing success metrics and KPIs, creating realistic implementation timelines, and securing adequate budget allocation that accounts for organizational change requirements alongside technical development costs.

| Readiness Assessment Criteria | Maturity Levels | Evaluation Methods | Recommended Actions |
| --- | --- | --- | --- |
| Data Quality & Accessibility | Basic/Intermediate/Advanced | Data profiling, quality audits | Data cleansing, integration planning |
| Infrastructure Capacity | On-premise/Hybrid/Cloud-native | Performance testing, scalability assessment | Cloud migration, capacity planning |
| AI Literacy & Skills | Beginner/Developing/Proficient | Skills assessment, training needs analysis | Training programs, hiring strategy |
| Governance Maturity | Ad-hoc/Defined/Managed/Optimized | Policy review, compliance audit | Framework development, policy creation |
| Change Readiness | Resistant/Neutral/Supportive | Culture survey, stakeholder analysis | Change management, communication planning |
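One way to make the readiness table actionable is to turn each maturity level into a number and flag the weakest areas first. The sketch below is only an illustration: the maturity ladders come from the table, but the 0-to-1 scoring, the `0.5` threshold, and the function names are assumptions, not part of any standard assessment method.

```python
# Maturity ladders copied from the readiness table; the numeric scoring is an assumption.
MATURITY_LEVELS = {
    "Data Quality & Accessibility": ["Basic", "Intermediate", "Advanced"],
    "Infrastructure Capacity": ["On-premise", "Hybrid", "Cloud-native"],
    "AI Literacy & Skills": ["Beginner", "Developing", "Proficient"],
    "Governance Maturity": ["Ad-hoc", "Defined", "Managed", "Optimized"],
    "Change Readiness": ["Resistant", "Neutral", "Supportive"],
}


def readiness_scores(assessment: dict[str, str]) -> dict[str, float]:
    """Map each criterion's level to a 0.0-1.0 score (lowest level = 0.0, highest = 1.0)."""
    scores = {}
    for criterion, level in assessment.items():
        ladder = MATURITY_LEVELS[criterion]
        scores[criterion] = ladder.index(level) / (len(ladder) - 1)
    return scores


def weakest_criteria(scores: dict[str, float], threshold: float = 0.5) -> list[str]:
    """Return criteria scoring below the (hypothetical) threshold, weakest first."""
    return sorted((c for c, s in scores.items() if s < threshold), key=scores.get)


# Hypothetical self-assessment for illustration only.
example_assessment = {
    "Data Quality & Accessibility": "Intermediate",
    "Infrastructure Capacity": "Hybrid",
    "AI Literacy & Skills": "Beginner",
    "Governance Maturity": "Defined",
    "Change Readiness": "Supportive",
}
example_scores = readiness_scores(example_assessment)
priorities = weakest_criteria(example_scores)
```

In this made-up example, AI literacy and governance maturity would surface as the first areas needing investment.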

2. Building Your AI-Ready Foundation

Invest in data consolidation and comprehensive data governance frameworks before pursuing AI development and machine learning model training. According to MIT research, organizations with strong data foundations reach AI success faster and with lower risk than those attempting AI implementation on fragmented data environments and legacy systems.

Infrastructure preparation includes establishing cloud platforms, developing integration capabilities, and implementing security frameworks that support AI workloads while maintaining regulatory compliance requirements and enterprise security standards. Kanerika’s cloud migration services help organizations build the foundational infrastructure necessary for enterprise AI deployment.

3. Selecting the Right Partnership Strategy

According to MIT research, external AI consulting partnerships double the likelihood of successful deployment compared to purely internal development efforts. Partner selection should prioritize domain expertise, proven implementation methodologies, industry certifications, and comprehensive service capabilities over the lowest-cost proposal.

Effective AI partnerships combine external artificial intelligence expertise with internal business knowledge to create solutions that are both technically sophisticated and operationally practical for long-term sustainability and business value creation. Kanerika’s proven track record with Fortune 500 companies and industry certifications make it an ideal partner for AI transformation initiatives.

Measuring Success: KPIs and ROI Tracking

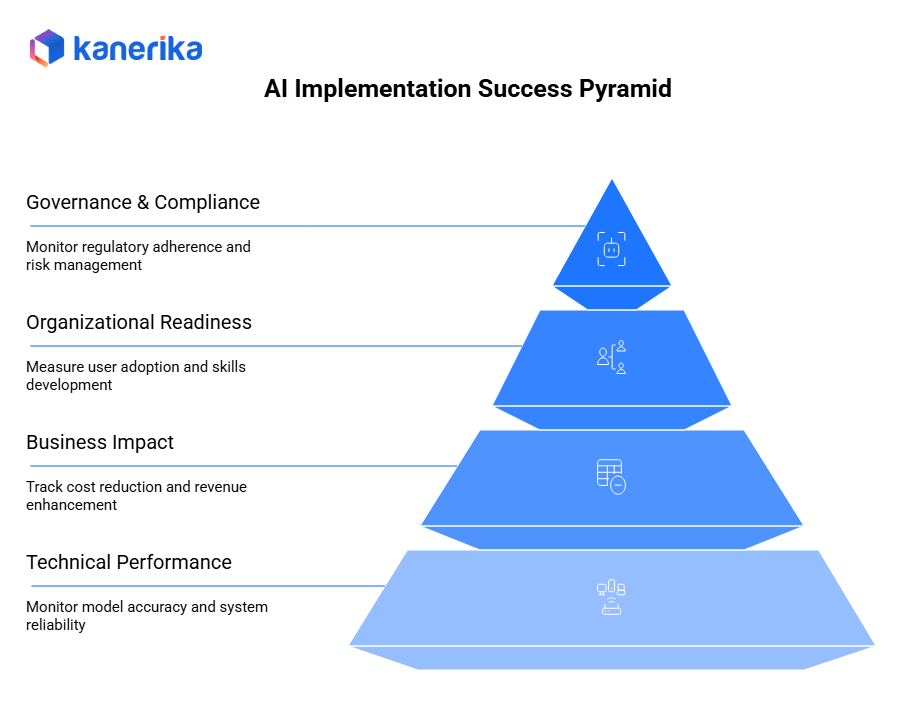

Successful enterprise AI implementations require comprehensive measurement frameworks that capture both immediate operational improvements and longer-term strategic benefits. Traditional ROI calculations often miss significant value creation in efficiency gains, risk reduction, customer experience enhancement, and competitive positioning advantages.

1. Technical Performance Metrics

Monitor machine learning model accuracy, processing speed, system reliability, and scalability to ensure AI solutions maintain performance standards over time. Include drift detection, retraining frequency, error-rate analysis, and system availability metrics in ongoing evaluation frameworks.

Key technical KPIs include prediction accuracy rates, model inference latency, system uptime, data processing throughput, and model performance degradation alerts.
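Drift detection can be approximated with a simple statistic before investing in a full monitoring platform. The sketch below uses the Population Stability Index (PSI), one common choice among several; the bin count, the `1e-6` floor, and the `0.25` alert level are assumptions based on a widely used rule of thumb, not values taken from this article.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """Compare two samples of one numeric feature; larger values suggest more drift."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    base_shares = bucket_shares(baseline)
    curr_shares = bucket_shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base_shares, curr_shares))


PSI_ALERT_LEVEL = 0.25  # assumption: a common rule-of-thumb threshold for significant drift

# Hypothetical feature values: training-time baseline versus shifted production data.
training_values = [i / 100 for i in range(100)]
production_values = [v + 0.3 for v in training_values]

psi_same = population_stability_index(training_values, training_values)
psi_shifted = population_stability_index(training_values, production_values)
drift_alert = psi_shifted > PSI_ALERT_LEVEL
```

A check like this would typically run per feature on a schedule, with an alert feeding the retraining decision.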

| Metric Category | Key Performance Indicators | Target Ranges | Monitoring Tools |
| --- | --- | --- | --- |
| Model Performance | Accuracy, Precision, Recall, F1-Score | >85% accuracy, <5% drift | MLflow, Weights & Biases |
| System Performance | Latency, Throughput, Uptime | <200ms response, 99.9% uptime | DataDog, New Relic |
| Data Quality | Completeness, Accuracy, Consistency | >95% data quality score | Great Expectations, Deequ |
| User Adoption | Active users, Feature usage, Satisfaction | >70% adoption, 4+ out of 5 satisfaction score | Analytics platforms, Surveys |
| Business Impact | ROI, Cost savings, Efficiency gains | Positive ROI within 12 months | Business intelligence tools |
| Governance | Compliance rate, Audit results | 100% regulatory compliance | Audit management systems |
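To show how the model performance row might be checked in practice, here is a minimal sketch that computes accuracy, precision, recall, and F1 for a binary classifier and compares accuracy with the 85% target from the table. The function names and the sample labels are hypothetical; a production setup would more likely pull these numbers from an experiment tracker.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


ACCURACY_TARGET = 0.85  # the ">85% accuracy" target range from the table


def meets_accuracy_target(metrics, target=ACCURACY_TARGET):
    """Return True only when accuracy is strictly above the target."""
    return metrics["accuracy"] > target


# Hypothetical labels and predictions for illustration only.
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predictions = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]

metrics = classification_metrics(labels, predictions)
on_target = meets_accuracy_target(metrics)
```

With these sample values the model sits at 80% accuracy, so it would miss the target and warrant a closer look.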

2. Business Impact Indicators

Track specific business outcomes such as cost reduction percentages, revenue enhancement metrics, process efficiency improvements, customer satisfaction scores, and employee productivity gains. Connect these directly to AI system performance to demonstrate clear value attribution and return on investment.

Business KPIs should include operational efficiency gains, cost savings calculations, revenue attribution, customer experience metrics, and competitive advantage indicators.
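The cost and revenue indicators above ultimately roll up into an ROI figure and a break-even point. The following sketch shows the basic arithmetic under simplifying assumptions: benefits and running costs are constant each month, and every figure is invented for illustration.

```python
def roi(total_benefit, total_cost):
    """Return on investment as a fraction: (benefit - cost) / cost."""
    return (total_benefit - total_cost) / total_cost


def break_even_month(upfront_cost, monthly_benefit, monthly_run_cost, horizon_months=12):
    """Return the first month in which cumulative net value reaches zero, or None."""
    cumulative = -upfront_cost
    for month in range(1, horizon_months + 1):
        cumulative += monthly_benefit - monthly_run_cost
        if cumulative >= 0:
            return month
    return None  # no break-even within the horizon


# Hypothetical project figures (not from any real deployment).
UPFRONT_COST = 120_000
MONTHLY_BENEFIT = 25_000   # assumed cost savings plus attributed revenue
MONTHLY_RUN_COST = 5_000   # assumed hosting, licences, and support

month = break_even_month(UPFRONT_COST, MONTHLY_BENEFIT, MONTHLY_RUN_COST)
first_year_roi = roi(
    total_benefit=12 * MONTHLY_BENEFIT,
    total_cost=UPFRONT_COST + 12 * MONTHLY_RUN_COST,
)
```

In this example the project breaks even in month 6 and returns roughly 67% over the first year. The harder part in practice is attributing the monthly benefit to the AI system rather than to other changes.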

3. Organizational Readiness Progress

Measure user adoption rates, employee satisfaction scores, skills development progress, and cultural acceptance indicators to ensure AI capabilities are sustainable and scalable across the organization. Change management success metrics are critical for long-term AI program viability.

4. Governance and Compliance Tracking

Monitor regulatory compliance adherence, audit results, ethical guideline implementation, bias detection alerts, and risk management effectiveness to maintain trustworthy AI operations and avoid regulatory penalties.
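Bias detection alerts can start from a very simple fairness check. The sketch below computes the gap in positive-prediction rates between groups (often called the demographic parity difference), which is only one of many fairness metrics. The group labels, the sample predictions, and the `0.1` alert threshold are assumptions for illustration, not regulatory guidance.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Share of positive predictions (1) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(groups, predictions):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


BIAS_ALERT_THRESHOLD = 0.1  # assumption: tolerance chosen by the governance team

# Hypothetical approval decisions for two groups.
sample_groups = ["A"] * 4 + ["B"] * 4
sample_predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(sample_groups, sample_predictions)
gap = demographic_parity_gap(sample_groups, sample_predictions)
bias_alert = gap > BIAS_ALERT_THRESHOLD
```

Here group A is approved 75% of the time and group B 25%, so the check would raise an alert for human review.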

Frequently Asked Questions

How long does it typically take to see ROI from AI implementations?

Organizations using proven external AI platforms report return on investment within four to six weeks, significantly faster than the six to twelve months required for custom-built artificial intelligence solutions.

What's the difference between AI pilots and production implementations?

AI pilots focus on proving technical feasibility in controlled environments, while production implementations require business process integration, data governance frameworks, user training programs, and organizational change management.

How do you prevent AI model degradation over time?

Use MLOps frameworks that automatically monitor for data drift, model performance degradation, and declining prediction accuracy, and retrain models when those signals appear.

What role does data quality play in AI success?

Data quality is foundational to artificial intelligence success. Poor data quality causes unreliable AI outputs, user distrust, and project abandonment.

How do you handle employee resistance to AI adoption?

Change management strategies for AI adoption include transparent communication about AI’s role in augmenting rather than replacing human capabilities.

What's the most critical factor for AI implementation success?

Organizational readiness across data infrastructure, business processes, governance frameworks, and company culture is more critical than AI technology itself.

How do you ensure AI governance and ethical compliance?

Establish comprehensive AI governance frameworks before development begins, including ethical AI guidelines, regulatory compliance measures, audit trails, bias detection systems, and ongoing monitoring protocols.