Modern data pipelines are more complex than ever, driven by the rise of AI, real-time analytics, and massive data volumes. For organizations using the Databricks Lakehouse architecture, the challenge is no longer just moving data; it is orchestrating that data seamlessly across batch, streaming, and operational systems. That’s where Databricks Lakeflow comes in.

Announced at the Databricks Data + AI Summit 2024 and gaining rapid adoption in 2025, Lakeflow is designed to simplify how enterprises build and manage data pipelines. It unifies ETL, streaming, and workflow orchestration in one visual, low-code interface, reducing the need for a patchwork of tools such as Airflow, Kafka, and custom scripts.

Take JetBlue, for example, which is modernizing its analytics stack to support real-time customer experience enhancements. By adopting Lakeflow, they’ve streamlined complex ingestion workflows and reduced latency across operations.

Say goodbye to fragmented tools as Lakeflow brings your batch, streaming, and orchestration under one roof.

Key Learnings

- Lakeflow unifies batch, streaming, and orchestration in one platform

Databricks Lakeflow removes the need for multiple tools by combining ingestion, transformation, and orchestration into a single, integrated experience.

- Operational complexity is significantly reduced with automation

Built-in scheduling, retries, and monitoring minimize manual intervention and simplify pipeline management at scale.

- Data reliability and quality are built into the pipeline design

Lakeflow leverages Delta Lake features such as ACID transactions, schema enforcement, and incremental processing to ensure trusted data.

- Lakeflow is designed for enterprise-scale workloads

The platform supports high data volumes, complex dependencies, and distributed processing, making it suitable for mission-critical pipelines.

- Governance and security are natively supported

Integration with Unity Catalog ensures centralized access control, lineage, and compliance across data pipelines.

Partner with Kanerika to Modernize Your Enterprise Operations with High-Impact Data & AI Solutions

What is Databricks Lakeflow?

Databricks Lakeflow is a unified solution that simplifies all aspects of data engineering, from data ingestion to transformation and orchestration. Built natively on top of the Databricks Data Intelligence Platform, Lakeflow provides serverless compute and unified governance with Unity Catalog.

Lakeflow combines batch and streaming data pipelines through its three key components: Lakeflow Connect, Lakeflow Pipelines, and Lakeflow Jobs.

- Lakeflow Connect provides point-and-click data ingestion from databases like SQL Server and enterprise applications such as Salesforce, Workday, Google Analytics, and ServiceNow. This visual interface eliminates the need for custom coding when connecting to common data sources.

- Lakeflow Pipelines lower the complexity of building efficient batch and streaming data pipelines. Built on the declarative Delta Live Tables framework, this component allows teams to define data transformations using simple SQL or Python rather than managing complex orchestration logic.

- Lakeflow Jobs reliably orchestrates and monitors production workloads across the entire platform. This native orchestration engine schedules, triggers, and tracks all data workflows in one centralized location.

Understanding Databricks Lakeflow

The platform is designed for real-time data ingestion, transformation, and orchestration, with advanced capabilities including Real Time Mode for Apache Spark, which processes streams at latencies orders of magnitude lower than micro-batch processing. Users can transform data in batch and streaming using standard SQL and Python, orchestrate and monitor workflows, and deploy to production using CI/CD.

A key principle of Lakeflow is unifying data engineering and AI workflows on a single platform. The solution addresses the complexity of stitching together multiple tools by providing native, highly scalable connectors and automated data orchestration, incremental processing, and compute infrastructure autoscaling. This unified approach enables data teams to deliver fresher, more complete, and higher-quality data to support AI and analytics initiatives across the organization.
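Incremental processing means each run handles only the data that arrived since the previous run instead of reprocessing everything. The sketch below models the idea in plain Python with a checkpoint that records which input files have already been processed. The `IncrementalLoader` class and the file names are hypothetical illustrations, not a Lakeflow API; on Databricks this bookkeeping is handled for you, for example by Auto Loader’s checkpoints.

```python
from dataclasses import dataclass, field


@dataclass
class IncrementalLoader:
    """Hypothetical loader that remembers which input files it has processed."""
    processed: set = field(default_factory=set)  # plays the role of the checkpoint

    def run(self, available_files):
        # Only files not yet recorded in the checkpoint are new work for this run.
        new_files = sorted(f for f in available_files if f not in self.processed)
        for name in new_files:
            # A real pipeline would read and transform the file here.
            self.processed.add(name)
        return new_files


loader = IncrementalLoader()
first = loader.run(["day1.json", "day2.json"])
second = loader.run(["day1.json", "day2.json", "day3.json"])
print(first)   # ['day1.json', 'day2.json']
print(second)  # ['day3.json']
```

The second run touches only `day3.json`, which is why incremental pipelines keep compute cost proportional to new data rather than to total data.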

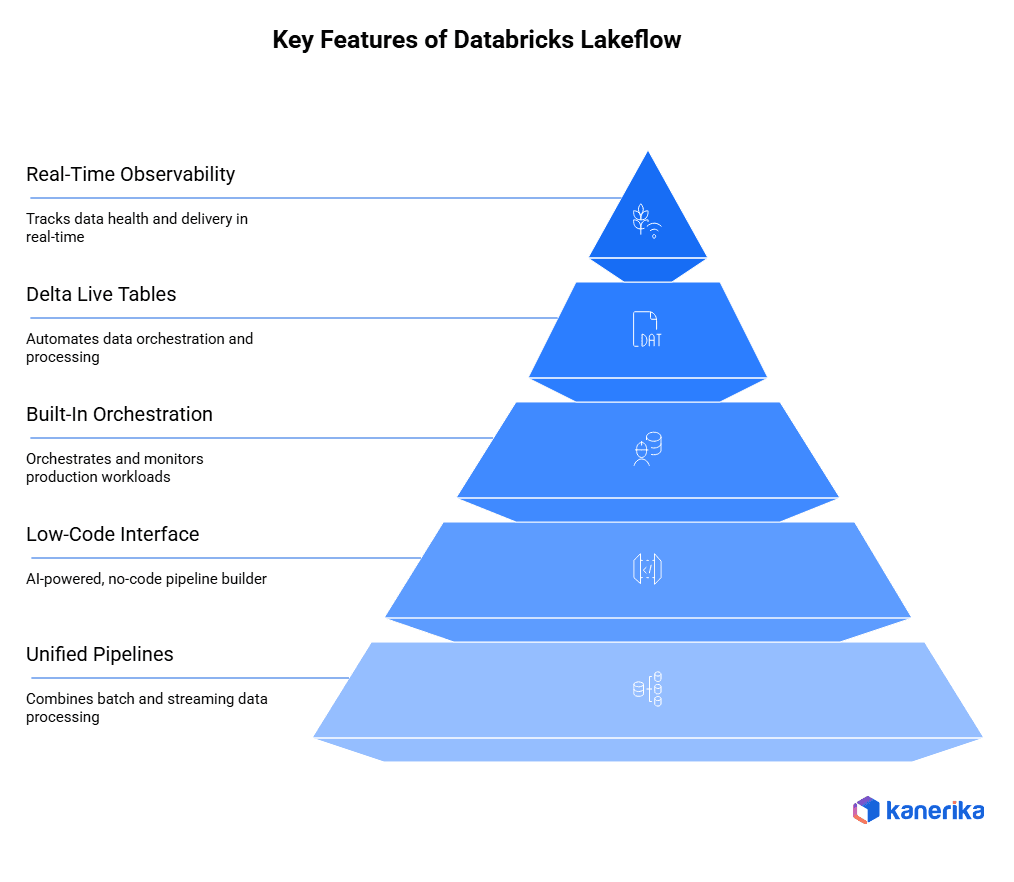

Key Features of Databricks Lakeflow

1. Unified ETL + Streaming Pipelines

Lakeflow enables data teams to implement data transformation and ETL in SQL or Python, and Real Time Mode can be switched on for low-latency streaming without code changes. Instead of stitching together Spark, Kafka, and Airflow separately, organizations can handle both real-time and batch processing through a single pipeline infrastructure.
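The core idea is that one piece of transformation logic serves both modes. The plain-Python sketch below applies the same `clean_orders` function to a bounded batch and to a sequence of micro-batches. The function names and sample records are hypothetical, and micro-batches stand in for a stream only to illustrate the point; in Lakeflow the engine handles delivery, so the transformation does not need separate batch and streaming versions.

```python
from typing import Iterable, Iterator, List


def clean_orders(rows: Iterable[dict]) -> List[dict]:
    """One transformation shared by the batch and streaming paths."""
    return [
        {"order_id": r["order_id"], "amount": round(r["amount"], 2)}
        for r in rows
        if r.get("amount", 0) > 0  # drop refunds and zero-value rows
    ]


def run_batch(table: List[dict]) -> List[dict]:
    # Batch: the whole bounded dataset is processed at once.
    return clean_orders(table)


def run_stream(micro_batches: Iterable[List[dict]]) -> Iterator[List[dict]]:
    # Streaming: the same logic is applied to each micro-batch as it arrives.
    for batch in micro_batches:
        yield clean_orders(batch)


history = [{"order_id": 1, "amount": 19.999}, {"order_id": 2, "amount": -5.0}]
live = [[{"order_id": 3, "amount": 7.5}], [{"order_id": 4, "amount": 0}]]

print(run_batch(history))      # [{'order_id': 1, 'amount': 20.0}]
print(list(run_stream(live)))  # [[{'order_id': 3, 'amount': 7.5}], []]
```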

2. Low-Code Interface

Lakeflow Designer is an AI-powered, no-code pipeline builder with a visual drag-and-drop canvas and a built-in natural language (GenAI) assistant. It lets business analysts and other non-technical users build scalable production pipelines without writing a single line of code.

3. Built-In Orchestration

Lakeflow Jobs, built on Databricks Workflows, reliably orchestrates and monitors production workloads of any kind, including ingestion, pipelines, notebooks, SQL queries, machine learning training, model deployment, and inference. Because this orchestration engine is native to the platform, teams can schedule, trigger, and monitor jobs in one centralized location without an external tool such as Airflow.
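At its core, an orchestrator runs tasks in dependency order and retries transient failures. The sketch below models just that behavior in plain Python using the standard library’s `graphlib.TopologicalSorter`. The task names, the `run_job` helper, and the retry count are hypothetical; Lakeflow Jobs exposes its own task dependency and retry settings, which this sketch does not reproduce.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_job(tasks: Dict[str, Callable[[], None]],
            depends_on: Dict[str, Set[str]],
            max_retries: int = 2) -> List[str]:
    """Run tasks in dependency order, retrying each failed task up to max_retries times."""
    completed = []
    for name in TopologicalSorter(depends_on).static_order():
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                completed.append(name)
                break
            except Exception:
                if attempt == max_retries:
                    raise  # retries exhausted: fail the whole job
    return completed


calls = {"transform": 0}


def flaky_transform():
    # Fails on the first call to simulate a transient error.
    calls["transform"] += 1
    if calls["transform"] == 1:
        raise RuntimeError("transient cluster error")


tasks = {"ingest": lambda: None, "transform": flaky_transform, "publish": lambda: None}
depends_on = {"ingest": set(), "transform": {"ingest"}, "publish": {"transform"}}

print(run_job(tasks, depends_on))  # ['ingest', 'transform', 'publish']
print(calls["transform"])          # 2 (one failure, one successful retry)
```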

4. Delta Live Tables Integration

Lakeflow Pipelines are built on the declarative Delta Live Tables framework, freeing teams to write business logic while Databricks automates data orchestration, incremental processing, and compute infrastructure autoscaling. This integration enables versioned data pipelines with built-in quality checks, significantly simplifying maintenance and debugging processes.
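Delta Live Tables expresses quality checks as expectations; in Python these are the `@dlt.expect`, `@dlt.expect_or_drop`, and `@dlt.expect_or_fail` decorators, under which violating rows are kept and counted, dropped, or cause the update to fail. The sketch below is a plain-Python model of those three policies so the behavior can be run anywhere. The `apply_expectation` helper and its return shape are our own illustration, not the DLT API.

```python
from typing import Callable, List, Tuple

POLICIES = {"warn", "drop", "fail"}


class ExpectationFailed(Exception):
    pass


def apply_expectation(rows: List[dict], name: str,
                      check: Callable[[dict], bool],
                      on_violation: str = "warn") -> Tuple[List[dict], int]:
    """Return (rows to keep, number of violations) under a warn/drop/fail policy."""
    if on_violation not in POLICIES:
        raise ValueError(f"unknown policy: {on_violation}")
    bad = [r for r in rows if not check(r)]
    if on_violation == "fail" and bad:
        raise ExpectationFailed(f"{name}: {len(bad)} violating row(s)")
    if on_violation == "drop":
        return [r for r in rows if check(r)], len(bad)
    return rows, len(bad)  # "warn": keep every row but report the count


rows = [{"id": 1, "email": "a@x.com"}, {"id": 2, "email": None}]
valid_email = lambda r: r["email"] is not None

kept, violations = apply_expectation(rows, "valid_email", valid_email, "drop")
print(len(kept), violations)  # 1 1
```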

5. Real-Time Observability

Lakeflow Jobs automates the tracking of data health and delivery, providing full lineage across ingestion, transformations, tables, and dashboards while tracking data freshness and quality. Built-in logs, metrics, and lineage views let teams track job progress, monitor latency, and troubleshoot errors efficiently within the unified platform.
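End-to-end lineage is essentially a graph whose edges record which tables, pipelines, and dashboards read from which. The sketch below shows how such a graph answers the troubleshooting question "what does this dashboard depend on?". The table names are hypothetical and the graph is written by hand, whereas Unity Catalog captures lineage automatically.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical lineage graph: each node maps to the nodes it reads from.
UPSTREAM: Dict[str, List[str]] = {
    "sales_dashboard": ["gold.daily_revenue"],
    "gold.daily_revenue": ["silver.orders"],
    "silver.orders": ["bronze.orders_raw"],
    "bronze.orders_raw": ["salesforce_connector"],
}


def all_upstream(node: str) -> Set[str]:
    """Breadth-first walk over lineage edges to find every upstream dependency."""
    seen: Set[str] = set()
    queue = deque(UPSTREAM.get(node, []))
    while queue:
        current = queue.popleft()
        if current not in seen:
            seen.add(current)
            queue.extend(UPSTREAM.get(current, []))
    return seen


print(sorted(all_upstream("sales_dashboard")))
# ['bronze.orders_raw', 'gold.daily_revenue', 'salesforce_connector', 'silver.orders']
```

Walking the same edges in the opposite direction answers the complementary question: which downstream tables and dashboards are affected when an ingestion source fails.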

Benefits of Using Databricks Lakeflow

1. Reduced Tooling Complexity

Conventional data stacks force organizations to juggle separate, isolated tools for ingesting, transforming, and delivering data. This fragmentation adds needless complexity and cost. Lakeflow removes this burden by combining all three functions in one platform, so teams no longer spend time building elaborate integrations between systems or licensing multiple tools.

As a result, data engineers can spend less time maintaining infrastructure and more time creating business value. Consolidation simplifies day-to-day work and considerably reduces technical debt and operational overhead.

2. Faster Development Cycles

Lakeflow introduces a new IDE for data engineering that makes building pipelines much faster. The integrated environment simplifies handoffs between team members and avoids the costly rework that usually consumes engineering cycles. Teams can also build pipelines that run in any environment without modification.

The platform is also fully backward compatible, so existing pipelines do not need to be rewritten to move to Lakeflow. As a result, organizations can deliver data products faster while keeping existing workloads stable, with less development friction across the data lifecycle.

3. Lower Total Cost of Ownership

Organizations typically spend a large share of their budget on separate ingestion, transformation, and orchestration tools. Lakeflow brings all of these capabilities into a single platform, which immediately reduces licensing costs. A single platform also avoids the integration overhead that would otherwise consume dedicated engineering effort.

The serverless compute model uses resources efficiently because it scales automatically with actual demand instead of requiring over-provisioned infrastructure. A single governance solution also simplifies data management and quality control. Together, these factors substantially lower total cost of ownership, improve operational efficiency, and reduce administrative overhead.

4. Better Collaboration

Lakeflow Designer democratizes data engineering by allowing business analysts to create production pipelines without code. This no-code capability breaks down the traditional divide between technical and business teams. Data scientists and engineers can also work together in the same platform environment.

Every pipeline is versioned and governed by Unity Catalog, which provides full observability and control. This shared environment makes it easier for teams to exchange knowledge, review each other’s work, and apply consistent standards. The result is faster innovation without compromising governance or data quality across data initiatives.

5. Real-Time Analytics and Insights

Speed matters in today’s business environment, and Lakeflow’s Real Time Mode shortens the time to insight. After adoption, customers have reported 3-5x improvements in latency: processing that used to take 10 minutes now takes 2-3 minutes.
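The reported figures are internally consistent: going from 10 minutes to 2-3 minutes is a speedup of roughly 3.3x to 5x, which is where the "3-5x" range comes from. A quick check of the arithmetic:

```python
def speedup(before_minutes: float, after_minutes: float) -> float:
    """How many times faster the new run is than the old one."""
    return before_minutes / after_minutes


for after in (3, 2):
    print(f"10 min -> {after} min: {speedup(10, after):.1f}x")
# 10 min -> 3 min: 3.3x
# 10 min -> 2 min: 5.0x
```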

This acceleration creates much faster feedback loops for critical business decisions. Real-time processing also supports applications that need prompt responses, such as fraud detection, personalized recommendations, and operational monitoring. Organizations gain a competitive advantage by acting on fresh data rather than hours later, once batch processing finishes.

6. Enhanced Data Reliability and Governance

Data governance has traditionally been engineering-intensive and a source of compliance risk. Lakeflow addresses this through deep integration with Unity Catalog, which gives visibility into and control over every data pipeline. The platform enforces consistent data quality across workflows while maintaining complete lineage from source to destination.

Standardized access controls protect sensitive data assets without complex manual configuration, and automated quality checks catch problems early, before they propagate downstream. This governance foundation reduces risk, helps meet regulatory requirements, and builds trust in data across the organization.

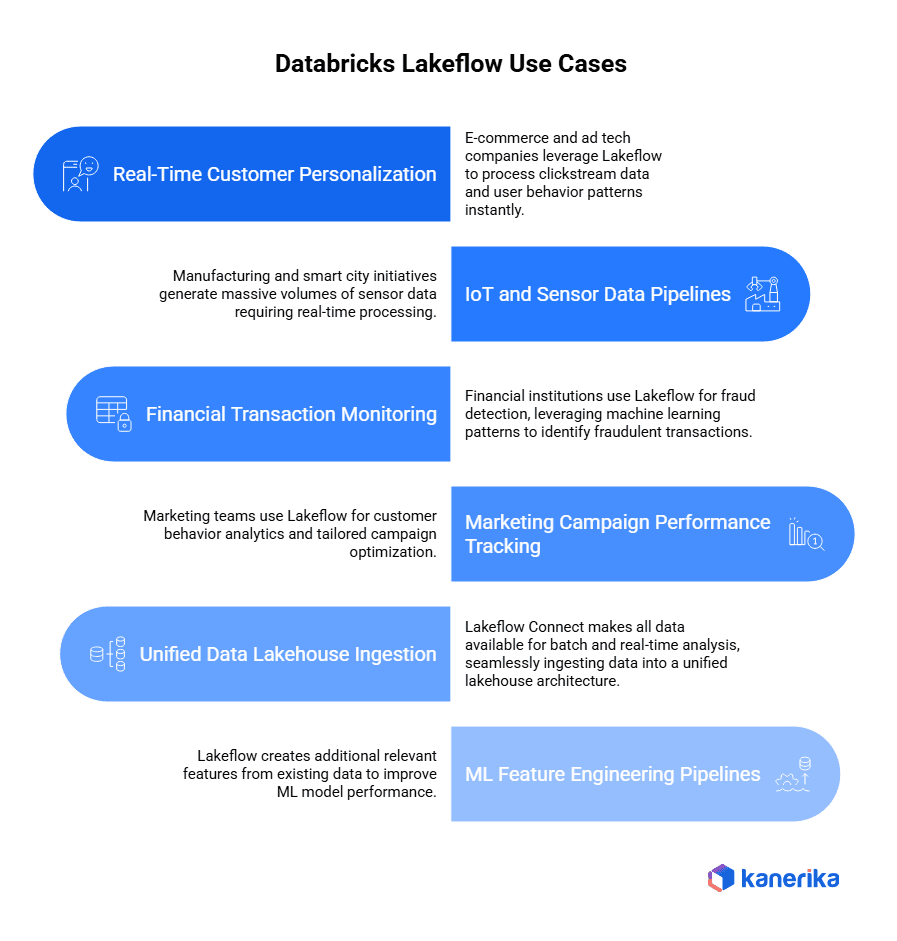

Common Use Cases of Databricks Lakeflow

1. Real-Time Customer Personalization

E-commerce and ad tech companies use Lakeflow to process clickstream data and user behavior patterns as they happen. By identifying key trends in customer buying patterns, businesses can send personalized offers in real time, tailored to each customer’s needs. This enables dynamic product recommendations, personalized pricing, and targeted marketing campaigns that respond to customer actions within milliseconds.

2. IoT and Sensor Data Pipelines

Manufacturing and smart city initiatives generate massive volumes of sensor data requiring real-time processing. Lakeflow processes data arriving in real-time from sensors, clickstreams and IoT devices to feed real-time applications. This supports predictive maintenance in factories, traffic optimization in smart cities, and environmental monitoring systems that need immediate responses to changing conditions.

3. Financial Transaction Monitoring

Financial institutions use Lakeflow for fraud detection: machine learning models learn the patterns of fraudulent transactions during training, and real-time pipelines apply those models to incoming transactions. Lakeflow’s Real Time Mode delivers time-sensitive datasets with consistently low latency and without code changes, which is crucial for stopping fraudulent transactions and meeting regulatory requirements.
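A common building block in real-time fraud pipelines is a velocity rule: flag a card that makes too many transactions within a short window. The sketch below is a plain-Python, per-event version of such a rule. The 60-second window, the threshold of three transactions, and the `VelocityRule` class are illustrative assumptions; in practice a rule like this would typically run alongside an ML model score rather than replace it.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class VelocityRule:
    """Flag a card when it exceeds max_txns transactions within window_s seconds."""

    def __init__(self, window_s: int = 60, max_txns: int = 3):
        self.window_s = window_s
        self.max_txns = max_txns
        self.recent: Dict[str, Deque[float]] = defaultdict(deque)

    def observe(self, card_id: str, ts: float) -> bool:
        times = self.recent[card_id]
        times.append(ts)
        # Evict timestamps that have fallen out of the sliding window.
        while times and times[0] <= ts - self.window_s:
            times.popleft()
        return len(times) > self.max_txns


rule = VelocityRule()
events = [("card-1", 0), ("card-1", 10), ("card-1", 20), ("card-1", 30), ("card-1", 95)]
flags = [rule.observe(card, ts) for card, ts in events]
print(flags)  # [False, False, False, True, False]
```

The fourth transaction within 60 seconds trips the rule; by the fifth event the earlier timestamps have aged out of the window, so the card is no longer flagged.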

4. Marketing Campaign Performance Tracking

Marketing teams use Lakeflow for customer behavior analytics and tailored campaign optimization, processing multi-channel campaign data to measure attribution, ROI, and customer engagement across email, social media, and paid advertising platforms in real-time.

5. Unified Data Lakehouse Ingestion

Lakeflow Connect makes all data, regardless of size, format, or location, available for batch and real-time analysis, seamlessly ingesting data from legacy systems, modern APIs, and streaming sources into a unified lakehouse architecture.

6. ML Feature Engineering Pipelines

Teams use Lakeflow pipelines to derive additional features from existing data to improve ML model performance. Designing these features requires domain knowledge to capture underlying patterns, and the same pipelines can serve both real-time inference and offline model training workflows.
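As a concrete example of the kind of feature such a pipeline derives, the sketch below computes simple per-customer features (order count, total spend, and average order value) from raw orders. The field names and data are hypothetical; in a Lakeflow pipeline the same logic would typically be written as a SQL or PySpark aggregation over a Delta table.

```python
from collections import defaultdict
from typing import Dict, List


def customer_features(orders: List[dict]) -> Dict[str, dict]:
    """Aggregate raw orders into simple per-customer model features."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for o in orders:
        totals[o["customer_id"]] += o["amount"]
        counts[o["customer_id"]] += 1
    return {
        cid: {
            "order_count": counts[cid],
            "total_spend": round(totals[cid], 2),
            "avg_order_value": round(totals[cid] / counts[cid], 2),
        }
        for cid in totals
    }


orders = [
    {"customer_id": "c1", "amount": 40.0},
    {"customer_id": "c1", "amount": 60.0},
    {"customer_id": "c2", "amount": 15.5},
]
print(customer_features(orders)["c1"])
# {'order_count': 2, 'total_spend': 100.0, 'avg_order_value': 50.0}
```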

Lakeflow vs Traditional Data Pipelines

Modern data teams are shifting away from fragmented architectures that require multiple tools for data movement, orchestration, and streaming. Databricks Lakeflow offers an all-in-one solution that simplifies pipeline development and operations across batch, streaming, and AI workloads. Here’s how it compares to traditional data pipeline architectures:

| Feature | Traditional Pipelines | Databricks Lakeflow |
| --- | --- | --- |
| Batch & Streaming Support | Requires separate tools (e.g., Spark for batch, Kafka for streaming) | Unified pipeline handles both batch and real-time data seamlessly |
| Orchestration | External tools like Apache Airflow, Oozie, or custom schedulers | Built-in orchestration engine with visual scheduling |
| UI/UX | Code-heavy setup, often managed via CLI or text-based scripts | Low-code, visual pipeline builder in Databricks UI |
| Real-Time Readiness | Complex to set up and scale, often requires managing Kafka infrastructure | Native streaming support with minimal configuration |
| Data Lineage & Observability | Basic or requires third-party integration (e.g., OpenLineage) | End-to-end lineage, built-in monitoring, and error tracing |
| Data Quality Management | Manual or via separate data quality tools | Integrated with Delta Live Tables for built-in data validation |
| Scalability | Scaling requires tuning individual components (compute clusters, queues, etc.) | Auto-scaling and elastic resource management within Databricks |
| Governance & Security | Often ad hoc; RBAC and metadata management vary across tools | Centralized via Unity Catalog for unified access control and audit logs |
| Cost Efficiency | Multiple platforms and cloud resources can increase operational cost | Lower TCO by consolidating tools and reducing infrastructure complexity |
| AI/ML Integration | Requires custom pipelines to feed ML models | Seamlessly integrates with MLflow and Databricks notebooks |

The Data Pipeline Revolution: How Databricks Lakeflow Transforms Modern Data Engineering

The Data Challenge

- Organizations manage 400+ different data sources on average

- 80% of data teams spend more time fixing broken connections than creating useful reports.

- Companies like Netflix handle 2+ trillion daily events while JPMorgan Chase processes massive transaction volumes in real-time.

- Traditional data tools break down under the pressure of handling both scheduled batch jobs and live streaming data

Why Current Solutions Fall Short

- Teams waste time connecting dozens of separate tools for data collection, processing, and delivery

- Organizations struggle with fragmented systems that don’t work well together

- Data engineers spend their days maintaining infrastructure instead of solving business problems

- Companies need both scheduled data processing and instant real-time analysis

Enter Databricks Lakeflow: Lakeflow solves these problems by putting all your data processing needs into one platform. Instead of juggling multiple tools, you get batch processing, real-time streaming, and workflow management in a single system.

Say goodbye to fragmented tools—Lakeflow brings your batch, streaming, and orchestration under one roof, enabling teams to focus on business value rather than infrastructure complexity.

Getting Started with Lakeflow

Before diving into Lakeflow, ensure you have an active Databricks workspace with Unity Catalog enabled. Databricks began automatically enabling new workspaces for Unity Catalog on November 9, 2023, but existing workspaces may require manual upgrade. Unity Catalog provides unified access, classification and compliance policies across every business unit, platform and data type.

Building Your First Pipeline

- Connect Data Sources: Ingest data from databases, enterprise apps and cloud sources using Lakeflow’s native connectors for MySQL, PostgreSQL, SQL Server, and Oracle.

- Define Transformations: Transform data in batch and near real-time using SQL and Python through intuitive notebook interfaces or declarative pipeline definitions.

- Schedule and Monitor: Confidently deploy and operate in production with built-in scheduling, monitoring, and alerting capabilities.

Key Success Tips: Start small with simple transformations, leverage Delta Live Tables (DLT) for version control and data quality, and monitor pipeline performance through comprehensive logging. Learn how to create and deploy an ETL pipeline using change data capture (CDC) with Lakeflow Declarative Pipelines in the official documentation.
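Change data capture delivers a stream of insert, update, and delete events keyed by a primary key, and the pipeline must apply them in order to keep a target table current. In Lakeflow Declarative Pipelines this is handled declaratively; the Python API has exposed it as `dlt.apply_changes`, which takes `keys` and `sequence_by` arguments. The plain-Python sketch below only models the SCD Type 1 semantics: for each key, keep the change with the highest sequence number, and drop keys whose latest change is a delete. The event field names (`id`, `seq`, `op`, `data`) are our own assumptions.

```python
from typing import Dict, List


def apply_cdc(events: List[dict]) -> Dict[int, dict]:
    """Apply CDC events as SCD Type 1: latest change per key wins; deletes remove the row."""
    latest: Dict[int, dict] = {}
    for e in events:
        key = e["id"]
        # Events may arrive out of order, so compare sequence numbers explicitly.
        if key not in latest or e["seq"] > latest[key]["seq"]:
            latest[key] = e
    return {key: e["data"] for key, e in latest.items() if e["op"] != "DELETE"}


events = [
    {"id": 1, "seq": 1, "op": "INSERT", "data": {"name": "Ana", "tier": "silver"}},
    {"id": 2, "seq": 2, "op": "INSERT", "data": {"name": "Raj", "tier": "gold"}},
    {"id": 1, "seq": 4, "op": "UPDATE", "data": {"name": "Ana", "tier": "gold"}},
    {"id": 1, "seq": 3, "op": "UPDATE", "data": {"name": "Ana", "tier": "bronze"}},  # late arrival
    {"id": 2, "seq": 5, "op": "DELETE", "data": None},
]
print(apply_cdc(events))  # {1: {'name': 'Ana', 'tier': 'gold'}}
```

Ordering by an explicit sequence column rather than arrival order is what makes the late `bronze` update harmless.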

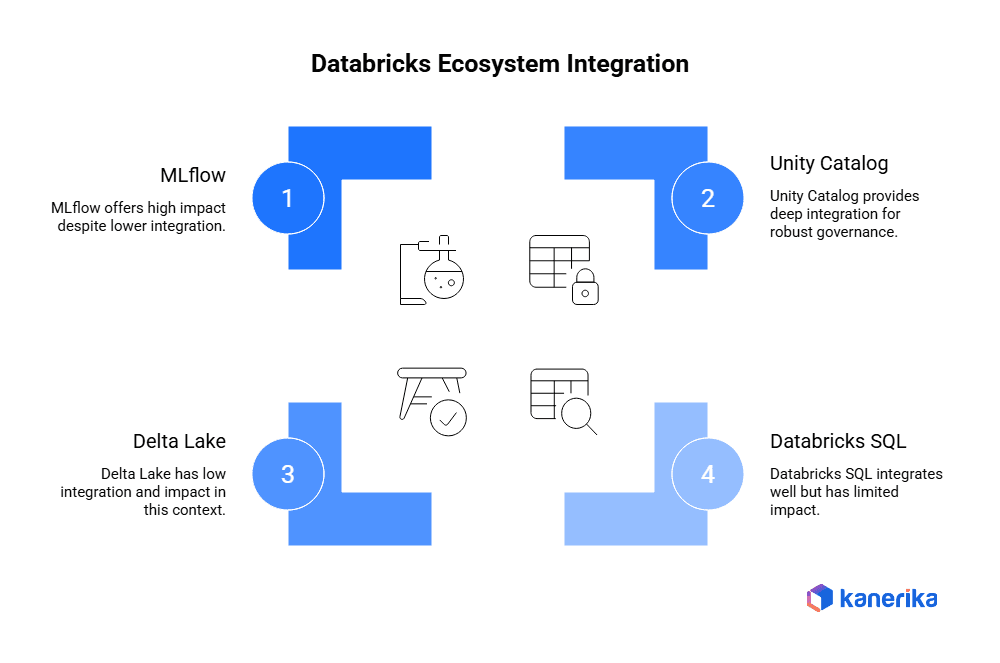

How Lakeflow Fits into the Databricks Ecosystem

Lakeflow isn’t a standalone tool; it is designed to work seamlessly with the entire Databricks platform. This integration creates a complete data solution in which each component enhances the others.

1. Unity Catalog for Governance

Lakeflow is deeply integrated with Unity Catalog, which powers lineage and data quality. Ingestion pipelines built with Lakeflow are governed by Unity Catalog and run on serverless compute, so every data pipeline you build automatically inherits security policies, access controls, and compliance features. Unity Catalog enforces access control lists (ACLs) when data is queried and provides full governance and auditing of operations.
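Unity Catalog’s access checks follow a hierarchy: to read a table, a principal needs `USE CATALOG` on its catalog, `USE SCHEMA` on its schema, and `SELECT` either on the table itself or inherited from the schema or catalog. The sketch below models only that rule in plain Python. It ignores ownership, group membership, and row or column filters, and the grants and names shown are hypothetical; in a workspace the equivalent is expressed with SQL `GRANT` statements.

```python
from typing import Dict, Set, Tuple

# Hypothetical grants: (principal, securable) -> privileges held on it.
Grants = Dict[Tuple[str, str], Set[str]]


def can_select(grants: Grants, principal: str, table: str) -> bool:
    """Model of the read check for a three-level name catalog.schema.table."""
    catalog, schema, _ = table.split(".")
    schema_full = f"{catalog}.{schema}"

    def has(securable: str, privilege: str) -> bool:
        return privilege in grants.get((principal, securable), set())

    # USE CATALOG and USE SCHEMA are required to reach the table at all.
    if not (has(catalog, "USE CATALOG") and has(schema_full, "USE SCHEMA")):
        return False
    # SELECT may be granted on the table itself or inherited from a parent.
    return any(has(s, "SELECT") for s in (table, schema_full, catalog))


grants: Grants = {
    ("analysts", "main"): {"USE CATALOG"},
    ("analysts", "main.sales"): {"USE SCHEMA", "SELECT"},
}
print(can_select(grants, "analysts", "main.sales.orders"))  # True
print(can_select(grants, "analysts", "main.hr.salaries"))   # False
```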

2. Delta Lake for Transactional Storage

Delta Lake provides ACID transactions and schema enforcement, and combined with Unity Catalog it enables efficient data sharing across teams. Your Lakeflow pipelines write directly to Delta Lake tables, providing reliable, versioned storage that supports both batch and streaming workloads. Transactional writes protect tables from corruption caused by failed or concurrent writes, and versioning enables time travel over your processed data.
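The sketch below is a toy, in-memory model (not the Delta Lake API) of the three properties described above: each write either commits fully or not at all, rows that do not match the table schema are rejected, and every committed version stays readable. The `VersionedTable` class is hypothetical. In Delta Lake itself, time travel is exposed through the `versionAsOf` read option or `VERSION AS OF` in SQL.

```python
from typing import Dict, List


class VersionedTable:
    """Toy model of a Delta-style table: schema-checked appends and time travel."""

    def __init__(self, schema: Dict[str, type]):
        self.schema = schema
        self.versions: List[List[dict]] = [[]]  # version 0 is the empty table

    def append(self, rows: List[dict]) -> int:
        # Schema enforcement: validate every row before committing anything,
        # so a bad batch leaves the table unchanged (all-or-nothing).
        for r in rows:
            if set(r) != set(self.schema) or any(
                not isinstance(r[c], t) for c, t in self.schema.items()
            ):
                raise ValueError(f"row does not match schema: {r}")
        self.versions.append(self.versions[-1] + rows)
        return len(self.versions) - 1  # number of the new version

    def read(self, version: int = -1) -> List[dict]:
        # Time travel: any committed version can still be read; -1 means latest.
        return self.versions[version]


t = VersionedTable({"id": int, "city": str})
v1 = t.append([{"id": 1, "city": "Austin"}])
v2 = t.append([{"id": 2, "city": "Boston"}])
try:
    t.append([{"id": "3", "city": "Chicago"}])  # id has the wrong type
    rejected = False
except ValueError:
    rejected = True
print(v2, len(t.read()), len(t.read(v1)), rejected)  # 2 2 1 True
```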

3. MLflow for Model Lifecycle Integration

Once Delta Lake tables are registered in Unity Catalog, they unlock powerful downstream capabilities, such as using Mosaic AI to build and deploy machine learning models. Delta Lake, MLflow, and Unity Catalog work together to provide an end-to-end solution for data engineering and data science.

4. Databricks SQL for Analytics

Once tables are registered in Unity Catalog, you can query them directly from Databricks using familiar tools like Databricks SQL. This creates a seamless flow from data ingestion through Lakeflow to business intelligence and reporting, all within the same platform ecosystem.

Case Study: Accelerating Sales Intelligence with Databricks for Faster Decision-Making

The client is a rapidly growing AI-powered sales intelligence platform that delivers real-time company and industry insights to go-to-market teams. The platform processes large volumes of unstructured data sourced from the web and enterprise documents to support sales and marketing decisions.

Client Challenges

As data volumes increased, the client’s existing architecture struggled to scale. Legacy document processing built in JavaScript was difficult to maintain and slow to update. Data spread across MongoDB, Postgres, and standalone processing pipelines made it challenging to deliver consistent and timely insights. Additionally, handling unstructured PDFs and metadata required significant manual effort, slowing overall data processing.

Kanerika’s Solution

Kanerika re-engineered the document processing workflows using Python on Databricks, significantly improving scalability and maintainability. All data sources were integrated into Databricks, providing a unified and reliable data foundation. The team optimized PDF processing, metadata extraction, and classification workflows to streamline operations and accelerate insight delivery.

Key Outcomes

- 80% faster document processing

- 95% improvement in metadata accuracy

- 45% reduction in time to deliver insights to users

The Kanerika-Databricks Partnership

Strategic Alliance Formation

Kanerika’s collaboration with Databricks brings together two complementary strengths in the data ecosystem:

- Kanerika specializes in helping organizations implement data solutions and AI capabilities

- Databricks has developed the innovative Data Intelligence Platform

- Together, they provide end-to-end solutions from technology to implementation

Complementary Expertise

The partnership combines Databricks’ cutting-edge technology with Kanerika’s implementation expertise to deliver comprehensive solutions to clients. This synergy creates value through:

- Databricks’ technological foundation through their lakehouse architecture

- Kanerika’s practical experience in tailoring solutions to specific business needs

- Joint capability to address complex data challenges at scale

Addressing Common Data Challenges

- Fragmented data sources across multiple systems

- Governance concerns and security requirements

- Difficulties scaling AI projects beyond initial pilots

Shared Vision for Data Transformation

The shared vision focuses on transforming data challenges into competitive advantages. Rather than viewing data fragmentation or governance as obstacles, the partnership helps organizations:

- Turn data management issues into opportunities for better decision-making

- Convert fragmented data into unified business intelligence

- Transform governance requirements into strategic assets

- Scale AI capabilities from isolated projects to enterprise-wide implementation

By working together, we aim to help businesses move beyond collecting data to actually use it effectively across their organizations. The partnership represents a practical approach to making data intelligence accessible and valuable to all enterprises dealing with complex data management problems.

FAQs

1. What is Databricks Lakeflow?

Databricks Lakeflow is a unified pipeline orchestration tool that enables users to build, manage, and monitor both batch and streaming data pipelines within the Databricks Lakehouse Platform. It simplifies ETL, streaming ingestion, and data transformation in one low-code interface.

2. How is Lakeflow different from traditional tools like Apache Airflow or Kafka?

Unlike traditional tools that require separate systems for orchestration (Airflow), streaming (Kafka), and ETL (Spark), Lakeflow combines all of these into one native environment with built-in governance, observability, and a low-code UI.

3. How does Lakeflow support modern data engineering?

Lakeflow supports both real-time and batch data processing in one framework. It enables engineers to build reliable pipelines using declarative configurations. Built-in orchestration reduces dependency on external workflow tools. This allows teams to focus more on data logic than infrastructure management.

4. Do I need to know how to code to use Lakeflow?

Not necessarily. Lakeflow offers a visual, low-code interface, allowing analysts and non-engineers to build and manage pipelines. However, advanced users can extend functionality with Python and SQL.

5. What components make up Databricks Lakeflow?

Lakeflow includes ingestion, transformation, orchestration, and monitoring capabilities. It integrates tightly with Delta Lake for reliability and consistency. The platform supports schema enforcement, incremental processing, and error handling. Together, these components simplify end-to-end pipeline management.

6. How does Lakeflow reduce operational overhead?

Lakeflow automates pipeline scheduling, retries, and dependency handling. It eliminates the need for multiple third-party orchestration tools. Centralized monitoring provides visibility into pipeline health. As a result, teams spend less time on maintenance and troubleshooting.

7. How does Databricks Lakeflow fit into the Databricks Lakehouse platform?

Lakeflow is a core part of the Databricks Lakehouse architecture. It works seamlessly with Delta Lake, Unity Catalog, and Databricks SQL. This integration ensures governance, security, and lineage across pipelines. Lakeflow helps unify data engineering, analytics, and AI workflows.