In April 2025, Meta Platforms unveiled its most advanced AI models to date, Llama 4 Scout and Llama 4 Maverick. These multimodal systems can process and convert between a wide range of data formats, including text, video, images, and audio, marking a significant leap in AI’s ability to understand and interact with the world. This development underscores the growing trend of integrating multiple data types to enhance AI capabilities.

The global multimodal AI market is experiencing rapid growth. According to Grand View Research, the market was valued at $1.73 billion in 2024 and is projected to reach $10.89 billion by 2030, growing at a CAGR of 36.8%. This surge is driven by advancements in AI technologies and the increasing demand for systems that can process diverse data inputs.

In this blog, we’ll explore the capabilities of multimodal AI, its real-world applications, and how it is transforming industries by enabling more nuanced and accurate insights. Continue reading to discover how combining text, image, and audio processing is reshaping the future of AI.

Key Takeaways

- Multimodal AI combines text, images, audio, video, and sensor data for richer, more accurate analysis than single-modality AI.

- Key technologies include Machine Learning, Deep Learning, NLP, Computer Vision, Speech Recognition, and Sensor Fusion.

- Leading models in 2025: GPT-5, Claude Sonnet 4.5, Gemini 2.5 Pro, LLaMA 4, DeepSeek-OCR, Phi‑4‑multimodal.

- Applications span healthcare, autonomous vehicles, human-computer interaction, robotics, education, and security.

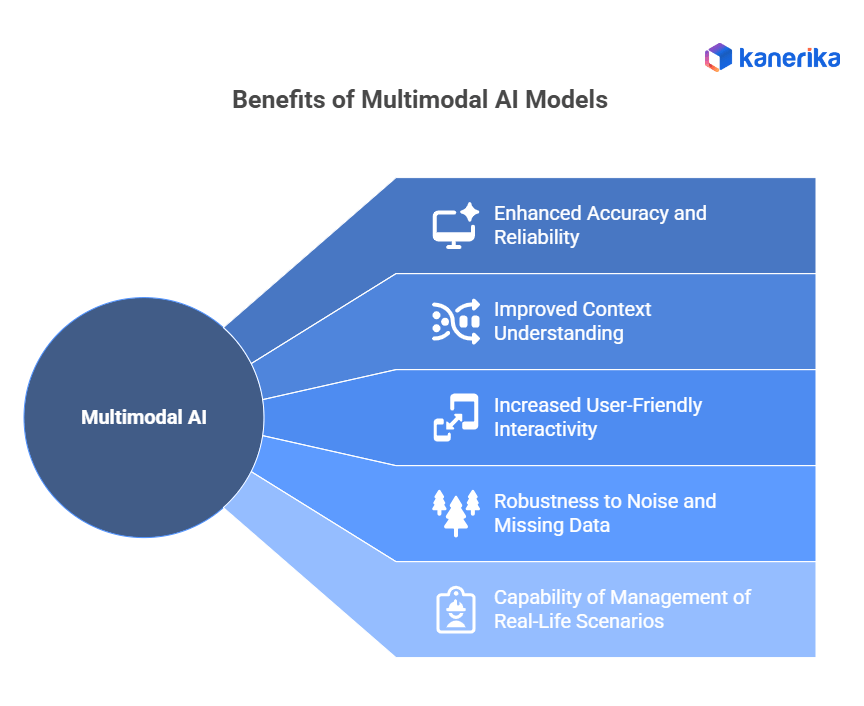

- Benefits: improved accuracy, better understanding of context, interactivity, robustness, and the ability to handle complex real-life scenarios.

- Advances include multimodal LLMs, cross-modal learning, transformers, and few- and zero-shot learning.

- Challenges: data integration, scalability, missing/noisy data, interpretability, privacy, and security.

- Ethical considerations: privacy, bias reduction, transparency, informed consent, and employment impact.

- Kanerika’s AI agents automate real workflows, integrate multiple data types, ensure compliance, and improve efficiency and decision-making.

Revolutionize Your Decision-Making with Multimodal AI Insights

Partner with Kanerika for Expert AI implementation Services

What is Multimodal AI?

Multimodal AI is a branch of artificial intelligence that combines data from multiple sources, such as text, images, audio, and video, to gain a deeper understanding of information. Unlike traditional AI models, which typically process a single type of data, multimodal AI integrates diverse inputs to improve context, interpretation, and overall performance. The main idea of multimodal AI is to leverage multiple data types for richer, more accurate analysis.

A real-life example is Zoom, which uses AI to enhance virtual meetings by analyzing both audio and video. If a participant shows signs of confusion or frustration while speaking, the system can detect these emotions from speech tone and facial expressions, enabling features such as sentiment analysis and automatic meeting highlights.

Another example is Kustomer, a customer service platform that combines chat text with images or videos shared by customers. If a customer types “I’m having trouble with my order” and shares a video of a damaged product, the AI can detect frustration and context from both inputs, enabling faster, more empathetic responses.

Multimodal AI is transforming industries by helping systems understand human communication and context more accurately than single-modality AI, which makes smarter, more human-like interactions possible.

Types of Modalities

1. Visual (Images, Video)

Visual data is captured by cameras and other imaging sensors and covers still photographs and video recordings. Typical tasks include image recognition, object detection, and video analysis.

Examples: Face recognition that uses a person’s face as a password, and scene understanding from still photographs and video.

2. Auditory (Speech, Sound)

This modality comprises audio data such as speech, sounds from people or environments, and music. Working with it involves interpreting sound waves to complete tasks such as recognizing spoken words and identifying sound sources.

Examples: Voice assistants that let users control mobile devices by speaking, dictation software that transcribes spoken messages into text, and software that detects the emotional tone of music.

3. Textual (Natural Language)

Textual data is anything that can be read or written, such as documents, chats, or social media posts. It is analyzed using Natural Language Processing (NLP).

Examples: Chatbots, sentiment analysis, and automated text generation.

4. Tactile/Haptic

This modality covers touch and its physical effects, such as vibration, pressure, and texture. It is used in applications where tactile information augments or helps explain other data.

Examples: Haptic feedback in VR, touchscreens, and robotic arms.

5. Other Sensor Data

This category includes various types of sensor data not covered by the other modalities, such as temperature, humidity, motion, etc. It provides additional contextual information.

Examples: Environmental monitoring, wearable health devices, and smart home sensors.

Core Technologies Enabling Multimodal AI

1. Machine Learning and Deep Learning

Machine Learning (ML) and Deep Learning (DL) are core AI techniques in which models learn patterns from data instead of following hand-written rules. They make predictions or decisions based on the data they were trained on and the inputs they receive.

Role in Multimodal AI: ML and DL methods fuse data from multiple sources to support a specific task, which improves the system’s ability to understand and respond to complex inputs.

Key Techniques: Multimodal AI employs a range of neural network architectures, including convolutional networks (commonly used for images) and recurrent networks (commonly used for sequences such as text and audio).

2. Natural Language Processing (NLP)

NLP is the branch of AI designed to help computers understand, interpret, and generate human language.

Role in Multimodal AI: NLP interprets written and transcribed spoken language; these textual representations can then be combined with audio or images to improve responses and actions.

Key Techniques: Tokenization, named entity recognition (NER), sentiment analysis, and generative language models, including GPT-4.

3. Computer Vision

Computer vision enables machines to perceive and interpret visual information such as photographs and video.

Role in Multimodal AI: Computer vision analyzes visual data, and when combined with audio or text, the system is better equipped to handle difficult conditions in which the visual signal alone is unreliable.

Key Techniques: Image classification, object segmentation, image annotation, and face detection.

4. Speech Recognition

In its simplest form, speech recognition means listening to someone and converting what they say into a written form.

Role in Multimodal AI: Speech recognition enables interaction in which audio is an input, which can be used alongside visuals or text for richer interaction.

Key Techniques: Acoustic modeling, language modeling, and automatic speech recognition (ASR) systems.

5. Sensor Fusion Techniques

Sensor fusion integrates data from numerous and possibly disparate sensors into a unified understanding of the environment or system.

Role in Multimodal AI: Sensor fusion brings in additional types of sensor data, such as temperature, motion, and touch, which deepens context and helps the AI make more nuanced decisions.

Key Techniques: Kalman filtering, Bayesian fusion, and multi-sensor data integration methods.
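
As a minimal sketch of Bayesian fusion, assume two sensors measure the same quantity and each has independent Gaussian noise. Under that assumption the fused estimate is an inverse-variance weighted average, so the more precise sensor counts for more. The function name `fuse_gaussian` and the readings below are hypothetical.

```python
def fuse_gaussian(mean_a, var_a, mean_b, var_b):
    """Fuse two independent Gaussian estimates of the same quantity.

    Returns the posterior mean and variance (inverse-variance weighting).
    """
    if var_a <= 0 or var_b <= 0:
        raise ValueError("variances must be positive")
    precision = 1.0 / var_a + 1.0 / var_b
    fused_var = 1.0 / precision
    fused_mean = fused_var * (mean_a / var_a + mean_b / var_b)
    return fused_mean, fused_var


# Hypothetical readings: a precise thermometer and a noisier infrared sensor.
mean, var = fuse_gaussian(mean_a=21.0, var_a=0.25, mean_b=23.0, var_b=1.0)
print(f"fused temperature: {mean:.2f} (variance {var:.2f})")
```

The fused variance is smaller than either input variance, which is the formal version of the idea that combining sensors gives a more reliable picture than any single one.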

Key Components of Multimodal AI

1. Data Integration

Developing these systems involves merging and harmonizing data from distinct sources or modalities. This means combining text, images, audio, and video into one representation.

Good data integration enables the AI to understand a given context by focusing on all the available information.

2. Feature Extraction

This component entails deriving meaningful features from the respective modalities. For instance, in images, feature extraction involves recognizing different objects or patterns, whereas in textual data, it involves parsing context, sentiment, and key phrases.

Feature extraction is critical because it turns each raw data type into a representation the AI can work with.
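
To make this concrete, here is a toy sketch of per-modality feature extraction using only the Python standard library. The feature choices (keyword counts for text, mean brightness and a crude edge count for a grayscale image) and all function names are illustrative assumptions; real systems typically rely on learned neural encoders instead.

```python
import re
from collections import Counter


def text_features(text, vocabulary):
    """Count how often each vocabulary word appears in the text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]


def image_features(pixels, edge_threshold=50):
    """Return mean brightness and a count of strong horizontal intensity jumps.

    `pixels` is a list of rows of grayscale values in the range 0-255.
    """
    flat = [value for row in pixels for value in row]
    mean_brightness = sum(flat) / len(flat)
    edges = sum(
        1
        for row in pixels
        for left, right in zip(row, row[1:])
        if abs(left - right) > edge_threshold
    )
    return [mean_brightness, edges]


# Hypothetical inputs: a customer message and a tiny 2x4 grayscale image.
vocab = ["damaged", "order", "refund"]
message = "My order arrived damaged. I want a refund for this order."
tiny_image = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]

print(text_features(message, vocab))   # word counts in vocabulary order
print(image_features(tiny_image))      # [mean brightness, edge count]
```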

3. Cross-Modal Representation Learning

In this step, the model learns shared representations across modalities by mapping features from different data types into a common space based on how they relate to one another.

Cross-modal representations help the AI relate different types of data to each other, which improves its overall understanding of the task and its decision-making.
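
One way to picture a shared representation: once an image encoder and a text encoder map into the same vector space, matching an image to a caption becomes a nearest-neighbor search by cosine similarity. The sketch below assumes the embeddings already exist; the three-dimensional vectors and captions are made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_caption(image_embedding, caption_embeddings):
    """Return the caption whose embedding is closest to the image embedding."""
    return max(
        caption_embeddings,
        key=lambda caption: cosine_similarity(
            image_embedding, caption_embeddings[caption]
        ),
    )


# Hypothetical embeddings that a trained pair of encoders might produce.
captions = {
    "a dog playing in a park": [0.9, 0.1, 0.0],
    "a bowl of fruit": [0.0, 0.8, 0.6],
    "a city skyline at night": [0.1, 0.0, 0.9],
}
photo_of_dog = [0.8, 0.2, 0.1]

print(best_caption(photo_of_dog, captions))
```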

4. Fusion Techniques

Fusion techniques combine data from multiple modalities into an integrated output. They can take various forms, such as concatenation, attention mechanisms, or fusion in the higher layers of a neural network.

Effective fusion draws on information from different sources to form a single coherent output or prediction.
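
Two common fusion styles can be sketched in a few lines. Early fusion concatenates per-modality feature vectors before a single model sees them; late fusion lets each modality produce its own score and then combines the scores. The feature values, scores, and weights below are hypothetical.

```python
def early_fusion(*feature_vectors):
    """Concatenate per-modality feature vectors into one joint vector."""
    fused = []
    for vector in feature_vectors:
        fused.extend(vector)
    return fused


def late_fusion(scores, weights):
    """Combine per-modality scores with a weighted average."""
    total_weight = sum(weights[name] for name in scores)
    return sum(scores[name] * weights[name] for name in scores) / total_weight


# Early fusion: one joint vector that a downstream model would consume.
text_vec = [0.2, 0.7]
image_vec = [0.9, 0.1, 0.4]
print(early_fusion(text_vec, image_vec))

# Late fusion: each modality has already produced a "customer is frustrated" score.
modality_scores = {"text": 0.6, "audio": 0.9}
modality_weights = {"text": 1.0, "audio": 3.0}
print(round(late_fusion(modality_scores, modality_weights), 3))
```

Early fusion lets a model learn interactions between modalities, while late fusion keeps each modality's model independent and is easier to extend when a new input type is added.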

5. Multi-Task Learning

Multimodal AI often uses multi-task learning, in which a single model is trained on several tasks using data from different modalities.

Because related tasks share what the model has learned, multi-task learning can improve both efficiency and accuracy on complex tasks.

Top 6 Multimodal AI Models Leading Innovation in 2025

1. GPT-5 (OpenAI)

GPT-5 is OpenAI’s most advanced multimodal model, capable of understanding and generating text, images, and code simultaneously. Its unified architecture enables it to reason across multiple modalities in real time, making conversations, content creation, and problem-solving more natural and context-aware. GPT-5 also incorporates improved safety features, reduced hallucinations, and enhanced reasoning capabilities, making it highly reliable for enterprise and creative applications.

2. Claude Sonnet 4.5 (Anthropic)

Claude Sonnet 4.5 is Anthropic’s most intelligent and stable multimodal model to date. It supports text, images, and document interpretation, with extended reasoning for long-context and coding tasks. The model includes an “extended thinking” mode and improved tool-use capabilities, making it ideal for enterprise agents, data analysis, and creative work requiring accuracy and consistency.

3. Gemini 2.5 Pro (Google DeepMind)

Replacing Gemini 2.0, Gemini 2.5 Pro supports 1M+ token context windows and handles text, image, audio, and video inputs. It’s tightly integrated with Google’s ecosystem (Docs, Sheets, YouTube, and Cloud AI). With deeper cross-modal reasoning and faster inference, it’s built for large-scale enterprise workflows and creative collaboration.

4. LLaMA 4 (Meta)

Meta’s LLaMA 4 succeeds LLaMA 3.2 and introduces three variants: Scout, Maverick, and Behemoth. It supports multimodal input and excels at long-context reasoning. Designed for both research and commercial use, LLaMA 4 offers flexibility across deployment scales, from lightweight mobile inference to enterprise-grade multimodal AI systems.

5. DeepSeek-OCR (DeepSeek AI)

DeepSeek-OCR is a specialized multimodal model optimized for document understanding and structured visual content. It compresses long text using visual encoding to extract meaning efficiently from images, PDFs, and scanned files. Ideal for automation in data-heavy industries, DeepSeek-OCR bridges text and visual data for intelligent document analysis.

6. Phi‑4‑multimodal (Microsoft)

Phi‑4‑multimodal is a Microsoft AI model that processes text, images, and speech/audio simultaneously. With 5.6 billion parameters, it delivers efficient on-device performance and real-time multimodal reasoning. Part of the “Phi” family, it supports applications like voice assistants with visual context, mobile apps analyzing images with audio commands, and integrated text-image-audio workflows, making it ideal for enterprise and edge-device use.

What Are the Applications of Multimodal AI?

1. Healthcare and Medical Diagnosis

- Multimodal Disease Detection: Combines data from medical imaging, genetics, and patient history to enhance diagnostic accuracy and support early intervention.

- Patient Monitoring Systems: Integrates data from wearable devices and medical instruments to continuously track health and provide timely alerts for proactive care.

2. Autonomous Vehicles

- Sensor Fusion for Environment Perception: Merges inputs from cameras, LiDAR, radar, and GPS to improve navigation, obstacle detection, and overall vehicle safety.

- Human-Vehicle Interaction: Uses voice, gesture, and facial recognition to enable intuitive interaction between humans and autonomous systems.

3. Human-Computer Interaction

- Virtual Assistants: Processes audio, text, and gesture inputs to create more efficient, contextual, and seamless user interactions.

- Emotion Recognition: Analyzes visual and auditory cues to understand user emotions, enabling AI systems to respond appropriately and improve engagement.

4. Robotics

- Enhanced Environmental Understanding: Combines vision, touch, and sensor data for precise perception and effective task execution in complex environments.

- Improved Human-Robot Interaction: Uses speech, gesture, and vision inputs to interpret human cues, enabling natural, collaborative interactions.

5. Education and E-Learning

- Personalized Learning Experiences: Leverages interaction data, performance records, and learning styles to adapt educational content to individual learners.

- Intelligent Tutoring Systems: Provides guidance and feedback through multiple input modalities, enabling interactive and adaptive learning experiences.

6. Security and Surveillance

- Multimodal Biometrics: Integrates face, voice, and fingerprint recognition to strengthen authentication and access control systems.

- Anomaly Detection: Uses video, sensor, and other data sources to efficiently detect abnormal behaviors or potential security threats.

What Makes Multimodal AI Valuable for Businesses?

1. Enhanced Accuracy and Reliability

Multimodal AI is more accurate and dependable than single-modality systems because it integrates inputs from multiple sources. This combination reduces errors and allows information from one source to be validated against another.

2. Improved Context Understanding

Multimodal AI can grasp the context and subtleties of data by synthesizing and processing heterogeneous data, leading to better actions or responses.

3. Increased User-Friendly Interactivity

With multimodal AI, users get an interface that is not limited to typing, speaking, or gesturing alone; these modes blend together to make interaction more natural and user-friendly.

4. Robustness to Noise and Missing Data

Because multimodal AI can draw on multiple sources, it keeps performing reliably when one input is noisy or missing. This redundancy improves overall system reliability.

5. Ability to Handle Complex Real-Life Scenarios

Multimodal AI is effective in complex situations that involve many kinds of information at once, which makes it better suited to practical applications where problems are multidimensional and interlinked.

What Are the Recent Advances in Multimodal AI?

A. Large Language Models with Multimodal Capabilities

Modern large language models (LLMs), such as GPT-5, are now designed to process multiple types of data—text, images, audio, and even code—simultaneously. This allows models to generate more contextual, accurate, and creative outputs by understanding the relationships across data types.

Example: OpenAI’s GPT-5 can analyze text and images together, enabling natural, context-aware conversations and generating content from both visual and textual inputs.

B. Cross-Modal Learning and Transfer

Cross-modal learning enables models to apply insights from one modality (e.g., text) to another (e.g., images or audio). This improves understanding and allows better integration of diverse information sources.

Example: A model trained to describe images can use its learned textual understanding to improve performance on unseen visual tasks, or vice versa, enhancing adaptability to new datasets.

C. Multimodal Transformers

Transformer-based models designed for multimodal tasks can efficiently process diverse types of data together. Attention mechanisms allow the model to align and integrate information across modalities, improving performance on complex tasks.

Example: CLIP and Meta’s LLaMA 3.2 integrate text and image data for tasks like image classification, caption generation, and multimodal reasoning, supporting applications from high-performance GPUs to mobile devices.
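
The alignment step can be illustrated with a single-head cross-attention computation, in which a text token's query vector attends over image patch vectors. This is a minimal pure-Python sketch of scaled dot-product attention; the vectors are invented, and real models add learned projections, multiple heads, and many layers.

```python
import math


def softmax(values):
    """Numerically stable softmax."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(query, keys, values):
    """Scaled dot-product attention of one query over a set of key/value pairs."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [
        sum(weight * value[i] for weight, value in zip(weights, values))
        for i in range(dim)
    ]
    return weights, output


# Hypothetical query for a text token and keys/values for three image patches.
text_query = [1.0, 0.0]
patch_keys = [[4.0, 0.0], [0.0, 4.0], [0.0, 0.0]]
patch_values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

weights, attended = cross_attention(text_query, patch_keys, patch_values)
print([round(w, 3) for w in weights])   # the first patch gets most of the attention
print([round(x, 3) for x in attended])
```

The attention weights sum to one, so the output is a blend of patch values dominated by whichever patch best matches the text query.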

D. Few-Shot and Zero-Shot Learning in Multimodal Contexts

Few-shot and zero-shot learning enable models to perform tasks with very few or no task-specific training examples. In multimodal AI, this allows the model to generalize to new domains using prior knowledge and only limited examples.

Example: A model can classify new types of images or generate text in unfamiliar contexts using prior multimodal training, without needing extensive additional training.

E. Vision-Language and Advanced Algorithms

Recent multimodal models, such as Google’s Gemma 3, support high-resolution and non-standard images, combining visual and textual data with dynamic algorithms to improve performance across diverse tasks.

Example: Gemma 3 can process complex vision-language inputs to generate accurate descriptions, answer questions about images, or assist in creative content generation.

Challenges in Multimodal AI

1. Data Integration and Alignment

Combining data from various modalities means bringing different types of information into a single, coherent representation that the model can reason over. This includes reconciling differences in data formats, scales, and contexts.
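
A small part of the alignment problem is temporal: pairing each item in one stream with the closest item in another. The sketch below matches transcript segments to the nearest video frame by timestamp using `bisect` from the standard library. The timestamps, transcript text, and the `nearest_frame` helper are hypothetical.

```python
from bisect import bisect_left


def nearest_frame(frame_times, t):
    """Return the index of the frame whose timestamp is closest to t.

    `frame_times` must be sorted in ascending order.
    """
    pos = bisect_left(frame_times, t)
    if pos == 0:
        return 0
    if pos == len(frame_times):
        return len(frame_times) - 1
    before, after = frame_times[pos - 1], frame_times[pos]
    return pos if (after - t) < (t - before) else pos - 1


# Video frames sampled once per second; transcript segments start at arbitrary times.
frame_times = [0.0, 1.0, 2.0, 3.0, 4.0]
transcript = [(0.4, "Hi, I need help"), (2.7, "the box arrived crushed")]

aligned = [(text, nearest_frame(frame_times, start)) for start, text in transcript]
print(aligned)
```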

2. Scalability and Computational Requirements

Developing multimodal AI systems is resource-intensive because they must process and analyze large amounts of data in different formats. The high processing and memory requirements can become a problem when scaling these systems for real-world applications.

3. Handling Missing or Noisy Modalities

Some modalities may be absent, or the data they provide may be noisy. A critical concern is building efficient mechanisms that can process incomplete or dirty data and still perform well.
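
One simple mitigation is to combine only the modalities that are actually present and renormalize their weights, rather than failing or substituting a placeholder value. A minimal sketch, assuming each modality yields a score between 0 and 1, or `None` when it is unavailable; the modality names and weights are illustrative.

```python
def fuse_available(scores, weights):
    """Weighted average over the modalities that produced a score.

    Modalities whose score is None are skipped and the remaining
    weights are renormalized. Returns None if nothing is available.
    """
    present = {name: s for name, s in scores.items() if s is not None}
    if not present:
        return None
    total_weight = sum(weights[name] for name in present)
    return sum(s * weights[name] for name, s in present.items()) / total_weight


weights = {"video": 0.5, "audio": 0.3, "text": 0.2}

# The audio stream dropped out, so only video and text contribute.
result = fuse_available({"video": 0.8, "audio": None, "text": 0.1}, weights)
print(result)
```

This handles missing inputs but not noisy ones; dealing with noise usually also requires some per-modality confidence estimate, which is outside the scope of this sketch.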

4. Interpretability and Explainability

Multimodal models are highly complex, so it is difficult to see how a conclusion was reached from the fused data. Understanding these models and ensuring their decisions can be justified remains a major challenge.

5. Privacy and Security Issues

Multimodal systems often process sensitive and private personal data. Unifying and processing data from multiple modalities makes protecting the privacy and security of that information harder.

Ethical Considerations in Multimodal AI

1. Data Privacy and Security

Handling data securely while protecting users’ privacy is essential. Multimodal AI systems often need access to sensitive information such as text, images, or audio. Organizations should develop robust data protection strategies and comply with applicable data privacy laws.

2. Bias and Fairness

Like most AI models, multimodal systems can reinforce or amplify the biases in the data on which they are trained. The same risk applies to models trained for multiple tasks or targets. It is critical to audit for and reduce such biases to promote equity and fairness in all AI-related decisions and outputs.

3. Transparency and Accountability

Accountability requires clarity about how multimodal AI systems process data and make decisions. Transparent communication about how the algorithms work and the rationale behind their decisions builds confidence and encourages review of these systems.

4. Informed Consent

Informed consent means people know what data is collected, what will happen after collection, and how the data will be used. Making consent explicit is especially important in multimodal systems, given the variety of data types and uses involved.

5. Impact on Employment

Multimodal technologies raise job-loss concerns, as automation may change or eliminate some of the roles people currently hold in organizations.

Case Studies: Kanerika Transforms Business Efficiency Through AI

1. Centralized Data Analytics Platform Modernization

Overview: Kanerika’s expertise in data and AI played a crucial role in modernizing a client’s data analytics platform. This modernization aimed to consolidate disparate data sources into a single, efficient system.

Challenges:

- Fragmented Data Sources: The client faced inefficiencies and delayed decision-making due to data spread across multiple systems.

- Outdated Technology: The existing analytics platform was outdated, limiting the ability to harness advanced AI capabilities for data analysis.

Solution: Kanerika implemented a centralized data analytics platform that integrated data from various sources into a unified system. This solution utilized advanced AI algorithms to provide real-time insights and enhance data-driven decision-making.

Impact: The modernization of the centralized analytics platform significantly enhanced the client’s ability to leverage AI for better insights and operational efficiency, supporting strategic decision-making and growth.

2. Enhancing Data Integration Capabilities with Generative AI

Overview: Kanerika’s implementation of generative AI technology transformed the client’s data integration capabilities, allowing them to streamline data processes and improve overall efficiency.

Challenges:

- Complex Data Integration: The client struggled with integrating various data sources and formats, leading to inefficiencies and errors.

- Manual Data Processing: Data integration was largely manual, resulting in slower processes and a higher chance of inaccuracies.

Solution: Kanerika deployed generative AI techniques to automate and enhance data integration processes. This included using AI models to generate synthetic data for testing and improving integration workflows.

Impact: By leveraging generative AI, Kanerika enhanced the client’s data integration capabilities, resulting in more efficient processes, greater data accuracy, and faster insights, ultimately improving the client’s operational efficiency and decision-making.

How Kanerika’s AI Agents Address Everyday Enterprise Challenges

Kanerika develops AI agents that work with real business data, including documents, images, voice, and structured inputs, rather than just text. These agents integrate smoothly into existing workflows across industries such as manufacturing, retail, finance, and healthcare. Their purpose is to solve real business problems, whether automating inventory tracking, validating invoices, or analyzing video streams, rather than offering generic tools.

As a Microsoft Solutions Partner for Data and AI, Kanerika leverages platforms such as Azure, Power BI, and Microsoft Fabric to build secure, scalable systems. These agents combine predictive analytics, natural language processing, and automation to reduce manual work, accelerate decision-making, provide real-time insights, improve forecasting, and streamline operations across departments.

Kanerika’s Specialized AI Agents:

- DokGPT – Retrieves information from scanned documents and PDFs to answer natural language queries

- Jennifer – Manages phone calls, scheduling, and routine voice interactions

- Karl – Analyzes structured data and generates charts or trend summaries

- Alan – Condenses lengthy legal contracts into short, actionable insights

- Susan – Automatically redacts sensitive information to comply with GDPR and HIPAA

- Mike – Detects errors in documents, including math mistakes and formatting issues

Privacy is a top priority. Kanerika holds ISO 27701 and ISO 27001 certifications, ensuring compliance with strict data-handling standards. Their end-to-end services, from data engineering to AI deployment, provide enterprises with a clear and secure pathway to adopting agent-based AI solutions.

FAQs

What is multimodal AI?

Multimodal AI goes beyond just text or images; it’s about systems that understand and process information from multiple sources like text, images, audio, and even video *simultaneously*. This allows for a richer, more nuanced understanding of context, leading to more accurate and human-like interactions. Think of it as giving AI a “full sensory experience” to improve its capabilities. It’s the next leap in AI sophistication.

What is the difference between generative AI and multimodal AI?

Generative AI creates new content (text, images, audio) from learned patterns, while Multimodal AI processes and understands multiple types of input (text, image, audio) together for richer comprehension and interaction.

What is an example of a multimodal model?

A multimodal model isn’t just about words; it uses different kinds of information together. Think of Google Lens: it combines image analysis (seeing a picture) with text processing (understanding what’s in the image and giving you information about it). This fusion of data types allows for a richer and more nuanced understanding than any single modality could achieve alone. Essentially, it’s about leveraging the strengths of various input types.

What are the challenges of multimodal AI?

Multimodal AI faces hurdles in effectively integrating different data types (text, images, audio, etc.), as each modality carries unique biases and noise. Harmonizing these disparate sources requires sophisticated fusion techniques and substantial computational resources. Finally, evaluating performance across multiple modalities remains a significant challenge because standardized benchmarks and metrics are lacking.

What is a multimodal chatbot?

A multimodal chatbot isn’t just text-based; it understands and responds using multiple communication modes. Think of it as having a conversation that includes text, images, voice, and even video. This richer interaction makes the bot more engaging and capable of handling complex tasks, going beyond simple text exchanges. Essentially, it’s a more natural and intuitive way to interact with AI.

What are multimodal devices?

Multimodal devices are gadgets that let you interact using multiple senses and methods, not just typing or clicking. Think of it as engaging with technology through a richer, more natural experience – voice, touch, gestures, even eye movements. This allows for more intuitive and accessible ways to use technology. They offer a more human-centered approach to interacting with computing.