Are your data pipelines slowing down as workloads grow larger and more complex? With organizations processing more data than ever before, Databricks Performance Optimization has become a critical priority. Enterprises now expect faster insights, real-time analytics, and lower cloud costs, yet performance bottlenecks continue to waste time, compute, and money. According to Databricks, poorly tuned pipelines and inefficient cluster usage are among the most common reasons for rising cloud spend and missed SLAs.

Databricks delivers a powerful Lakehouse platform that combines the strengths of Apache Spark and Delta Lake, but even with this robust foundation, performance tuning still plays a crucial role. Without optimized storage, compute, and query design, workloads can slow down significantly as data scales.

In this blog, we will break down everything you need to know about optimizing Databricks workloads. We will explore the underlying architecture, storage and data layout best practices, compute and cluster tuning, job and query optimization techniques, monitoring strategies, cost controls, and real-world success stories. By the end, you’ll have a complete roadmap to improve speed, reliability, and efficiency across your Databricks environment.

Key Takeaways

- Storage layout is critical: file sizes, Delta design, and partitioning affect performance as much as compute power does.

- Use the latest Databricks Runtime and optimize cluster settings to improve speed, stability, and cost efficiency.

- Join strategy, caching, and partitioning deliver some of the biggest performance gains across large workloads.

- Observability and KPI tracking, such as monitoring shuffle volume, GC time, and job duration, enable continuous optimization.

- Performance tuning is ongoing, not a one-time activity; workloads must be reviewed and refined as data volume and complexity evolve.

Why Databricks Performance Optimization Matters

Optimizing Databricks performance directly affects both your operations and your budget. When your data processing runs efficiently, you save resources and help your organization make timely decisions based on fresh data.

1. Cost Control and Budget Management

Inefficient jobs consume excessive compute, which leads to surprisingly large cloud bills that can break budgets. Fine-tuning your Databricks workloads reduces waste and creates predictable cost structures that finance teams can plan around.

2. Faster Time-to-Insight

Slow queries and delayed pipelines create bottlenecks that frustrate stakeholders and hold up important business decisions. Performance optimization lets your analytics team handle urgent requests with confidence and deliver reliable results.

3. Better Resource Utilization

Well-tuned clusters avoid common problems such as memory pressure and wasted, idle resources. As a result, job completion times become predictable, and fewer unexpected failures disrupt your pipeline schedules.

4. Scalability for Growing Data Volumes

As data volumes grow, unoptimized systems become bottlenecks that limit how much analytical work you can run. Optimizing proactively means your infrastructure can absorb that growth without emergency fixes or costly upgrades.

Understanding the Databricks Architecture

To optimize any Databricks workload, you must first understand how the platform is built.

1. High-Level Architecture

The Databricks platform is built on top of the following layers:

- Apache Spark Engine: Distributed processing engine that handles large-scale transformations and computations.

- Databricks Runtime (DBR): A tuned, optimized version of Spark with enhancements for speed and stability.

- Delta Lake Storage: Provides ACID transactions, schema enforcement, and file-level optimizations.

- Cluster Management Layer: Automates provisioning, scaling, and terminating compute clusters.

2. Key Components

Databricks includes several important elements that influence performance:

- Compute Clusters: Worker and driver nodes running jobs. The driver node coordinates job execution while worker nodes handle the actual data processing tasks.

- Autoscaling: Automatically adjusts cluster size based on workload, adding or removing worker nodes within the configured minimum and maximum as demand changes.

- Photon Engine: Vectorized query engine for high-performance SQL workloads. Photon processes data in batches rather than row-by-row, dramatically speeding up SQL queries and data transformations.

- Delta Transaction Log: Tracks changes in Delta tables for reliability and optimization.

3. Storage and Compute Interplay

Performance depends heavily on how storage and compute work together:

- Parquet/Delta Files: Efficient columnar formats that support compression. Columnar storage allows Spark to read only the specific columns needed for queries, dramatically reducing I/O overhead compared to row-based formats.

- Shuffle Operations: Occur when data is redistributed across nodes. Shuffles happen during operations like joins, aggregations, and window functions, requiring data movement across the network between worker nodes.

- Caching: Speeds up repeated reads of frequently accessed data. This is particularly effective for iterative machine learning workloads and interactive analytics.

Every optimization technique acts on one or more of these layers. Understanding how the platform processes data therefore makes it easier to tune Databricks workloads for speed, cost efficiency, and reliability.

Storage & Data Layout Optimization

1. Use Delta Lake as the Default Storage Format

To begin with, Databricks strongly recommends using Delta Lake as the default table format. Delta provides:

- ACID transactions for reliable pipelines. These prevent the data corruption issues that can occur with concurrent reads and writes.

- Schema enforcement and evolution for cleaner data. This helps catch data quality issues early, preventing downstream processing errors.

- Time travel for versioning and troubleshooting. Beyond debugging, time travel also supports efficient incremental processing.

- Unified batch + streaming with a single storage layer. This eliminates the need for separate systems and data duplication, reducing storage costs and complexity.

This foundation improves performance by enabling efficient reads, writes, and incremental updates.

2. File Size Tuning: Avoid Tiny Files

Next, file size plays a major role in query performance. Too many small files cause excessive metadata overhead and slow down Spark jobs. Databricks recommends targeting 100–500 MB per file, depending on workload.

- Use OPTIMIZE to compact small files. Schedule OPTIMIZE operations as part of your regular maintenance workflow, especially after heavy streaming ingestion or frequent updates.

- Enable optimized writes and auto compaction on tables fed by Auto Loader or other frequent writers, so that files are sized well as they are written. Also, set trigger intervals that balance data freshness requirements with file size targets.

- Implement automated file size monitoring and alerting. Create monitoring dashboards that track file size distributions and alert when tables accumulate too many small files.
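
The idea behind compaction can be sketched in plain Python. The snippet below is a simplified, hypothetical model, not how Delta Lake implements OPTIMIZE internally. It packs small files into bins that stay under an assumed 256 MB target, so each bin could be rewritten as one larger file. It also builds the corresponding `OPTIMIZE ... ZORDER BY` statement as a string. The table name `sales.orders` and the column names are placeholders.

```python
from typing import List, Sequence

MB = 1024 * 1024


def plan_compaction(file_sizes: Sequence[int], target_bytes: int) -> List[List[int]]:
    """Group small files into bins whose total size stays at or under
    target_bytes (greedy first-fit decreasing). Illustrative only."""
    bins: List[List[int]] = []
    totals: List[int] = []
    for size in sorted(file_sizes, reverse=True):
        for i, total in enumerate(totals):
            if total + size <= target_bytes:
                bins[i].append(size)
                totals[i] += size
                break
        else:
            # No existing bin has room, so start a new output file.
            bins.append([size])
            totals.append(size)
    return bins


def optimize_sql(table: str, zorder_cols: Sequence[str] = ()) -> str:
    """Build a Delta Lake OPTIMIZE statement, optionally with ZORDER BY."""
    sql = f"OPTIMIZE {table}"
    if zorder_cols:
        sql += f" ZORDER BY ({', '.join(zorder_cols)})"
    return sql


if __name__ == "__main__":
    small_files = [8 * MB] * 100  # 100 tiny 8 MB files, 800 MB in total
    bins = plan_compaction(small_files, target_bytes=256 * MB)
    print(len(bins))  # 4 bins: 32 + 32 + 32 + 4 files
    print(optimize_sql("sales.orders", ["customer_id", "order_date"]))
```

With 100 files of 8 MB each, the planner produces 4 output files instead of 100. That is the kind of reduction that cuts per-file metadata and task overhead. In practice you would run the generated statement (for example with `spark.sql(...)` in a notebook) and let Delta Lake choose the bins.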

3. Data Skipping, Z-Ordering & Caching

Query performance greatly improves when Databricks can skip unnecessary files.

- Data skipping uses statistics to avoid scanning irrelevant files. This works most effectively on columns with natural ordering like timestamps, sequential IDs, or sorted categorical values.

- Z-Ordering clusters data based on frequently filtered columns (e.g., customer_id, date).

- Caching accelerates repeated reads for interactive analytics. Delta caching (the disk cache) keeps copies of remote data files on the workers' local storage, so repeated scans avoid round trips to cloud storage. This makes it ideal for dashboards and iterative data science workflows.

These techniques reduce I/O and improve latency significantly.
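
To see why data skipping and Z-ordering work together, consider this minimal sketch. It assumes each file carries min/max statistics for a date column, a simplified stand-in for the per-file statistics Delta Lake stores in its transaction log. A file is read only if its value range overlaps the query's filter range. The `FileStats` class and the file names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class FileStats:
    """Simplified per-file statistics for one column (hypothetical)."""
    path: str
    min_date: date
    max_date: date


def files_to_scan(files: List[FileStats], start: date, end: date) -> List[str]:
    """Keep only files whose [min_date, max_date] range overlaps [start, end].
    Every other file can be skipped without being opened."""
    return [f.path for f in files if f.max_date >= start and f.min_date <= end]


if __name__ == "__main__":
    # Well-clustered files: each covers a narrow, non-overlapping date range.
    files = [
        FileStats("part-000.parquet", date(2024, 1, 1), date(2024, 1, 31)),
        FileStats("part-001.parquet", date(2024, 2, 1), date(2024, 2, 29)),
        FileStats("part-002.parquet", date(2024, 3, 1), date(2024, 3, 31)),
    ]
    # Equivalent filter: WHERE order_date BETWEEN '2024-02-10' AND '2024-02-20'
    print(files_to_scan(files, date(2024, 2, 10), date(2024, 2, 20)))
    # -> ['part-001.parquet']
```

Suppose the same rows were instead spread randomly across files. Every file's range would then cover January to March, and none could be skipped. Clustering data on the filtered columns, which is what Z-ordering does, keeps each file's range narrow, so more files drop out of the scan.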

4. Partitioning Strategy

Partitioning is useful but must be applied carefully.

- Partition large datasets by low-cardinality columns such as date, region, or category. Choose partition columns that align with your query patterns and create roughly equal-sized partitions.

- Avoid over-partitioning, which leads to tiny files and slow queries. Maintain partition sizes in the multi-gigabyte range to ensure efficient processing.

- Use multilevel partitions only when absolutely necessary. Nested partitioning such as year/month/day can be effective for very large datasets.

A balanced partitioning strategy improves pruning and speeds up scans.
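
A quick sanity check on a candidate partition column can be sketched as follows. It is a hypothetical helper. Both thresholds are illustrative assumptions in line with the multi-gigabyte guidance above, not limits enforced by Databricks: roughly 1 GB as the smallest healthy average partition, and 10× the average as a skew warning.

```python
from typing import Dict

GB = 1024 ** 3


def review_partition_column(bytes_per_value: Dict[str, int],
                            min_avg_bytes: int = GB,
                            skew_factor: float = 10.0) -> str:
    """Rough health check for a candidate partition column.

    bytes_per_value maps each distinct column value to the data volume its
    partition would hold. Thresholds are assumptions for illustration.
    """
    sizes = list(bytes_per_value.values())
    avg = sum(sizes) / len(sizes)
    if avg < min_avg_bytes:
        # Too many tiny partitions: the over-partitioning case.
        return f"over-partitioned: {len(sizes)} partitions averaging {avg / GB:.2f} GB"
    if max(sizes) > skew_factor * avg:
        # One value dominates, so one partition does most of the work.
        return f"skewed: largest partition is {max(sizes) / avg:.1f}x the average"
    return f"ok: {len(sizes)} partitions averaging {avg / GB:.2f} GB"


if __name__ == "__main__":
    by_customer = {f"cust-{i}": 2 * 1024 ** 2 for i in range(1000)}  # 2 MB each
    by_region = {r: 500 * GB for r in ("us", "eu", "apac", "latam")}
    print(review_partition_column(by_customer))
    print(review_partition_column(by_region))
```

A high-cardinality column like customer_id typically fails the first check, because each value holds only a sliver of the data. A low-cardinality column like region usually passes.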

5. Predictive Optimization for Unity Catalog Tables

Unity Catalog-managed tables support Predictive Optimization, which automates file management tasks such as:

- Compaction

- Statistics collection

- Sorting

- Vacuum scheduling

This reduces the need for manual maintenance and ensures that data remains query-ready.

6. Transition to Compute and Cluster Tuning

With storage and layout optimized, the next step is improving compute and cluster performance so that Spark jobs execute efficiently and cost-effectively.

- Properly optimized storage reduces the I/O burden on your compute clusters, making CPU and memory the limiting factors rather than data access speed.

- The storage optimizations covered earlier directly improve compute efficiency: fewer small files mean less file-listing and metadata overhead, so jobs start faster and scans finish sooner.

Job & Query Performance Techniques for Databricks Performance Optimization

1. Adaptive Query Execution (AQE)

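To begin with, Spark 3.x introduced Adaptive Query Execution (AQE), which re-optimizes query plans at runtime using statistics collected as query stages complete. AQE can coalesce shuffle partitions, switch join strategies (for example, converting a sort-merge join to a broadcast join), and split skewed join partitions automatically, improving performance with little manual tuning. It is enabled by default from Spark 3.2 onward.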
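
AQE's partition coalescing can be illustrated with a simplified model. The sketch assumes a job with 200 shuffle partitions (the default for `spark.sql.shuffle.partitions`) of 2 MB each. It merges adjacent partitions toward a 64 MB target, mirroring the role of `spark.sql.adaptive.advisoryPartitionSizeInBytes`. Spark's real algorithm applies further rules, so treat this as a conceptual sketch only.

```python
from typing import List

MB = 1024 * 1024


def coalesce_partitions(sizes: List[int], advisory_bytes: int = 64 * MB) -> List[List[int]]:
    """Merge adjacent shuffle partitions into groups of roughly advisory_bytes.

    Simplified model of AQE coalescing; Spark also considers minimum
    partition sizes and parallelism, which are ignored here.
    """
    groups: List[List[int]] = []
    current: List[int] = []
    current_bytes = 0
    for size in sizes:
        # Close the current group if adding this partition would exceed the target.
        if current and current_bytes + size > advisory_bytes:
            groups.append(current)
            current, current_bytes = [], 0
        current.append(size)
        current_bytes += size
    if current:
        groups.append(current)
    return groups


if __name__ == "__main__":
    groups = coalesce_partitions([2 * MB] * 200)
    print(len(groups))                             # 7
    print([sum(g) // MB for g in groups])          # [64, 64, 64, 64, 64, 64, 16]
```

Instead of launching 200 tiny tasks, the coalesced plan runs 7 tasks: six of 64 MB and one of 16 MB. AQE itself is controlled by `spark.sql.adaptive.enabled`, and coalescing by `spark.sql.adaptive.coalescePartitions.enabled`.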

2. Join Optimization

Joins are often the most expensive operations in Spark. To optimize them:

- Use the correct join order so the smallest tables are processed first.

- Avoid cross joins, which create massive shuffle operations.

- Broadcast small tables to all workers to eliminate shuffle for dimension lookups.

A good join strategy greatly reduces computation costs and speeds up queries.
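
The broadcast bullet can be illustrated with a small, self-contained model of a broadcast hash join. The small dimension table is hashed once, and each partition of the large table probes that hash map locally, so the large side never has to move across the network. This is a plain-Python illustration, not Spark code, and the `facts` and `dims` data are hypothetical. In PySpark, the equivalent hint is `broadcast()` from `pyspark.sql.functions`. Spark also broadcasts automatically when a table is smaller than `spark.sql.autoBroadcastJoinThreshold`, which defaults to 10 MB.

```python
from typing import Dict, List

Row = Dict[str, object]


def broadcast_hash_join(large_partitions: List[List[Row]],
                        small_table: List[Row],
                        key: str) -> List[Row]:
    """Inner-join every partition of a large table against a small table.

    Assumes the join key is unique in small_table, as in a typical
    dimension table.
    """
    # Build phase: hash the small side once (this is what gets broadcast).
    lookup: Dict[object, Row] = {row[key]: row for row in small_table}
    joined: List[Row] = []
    # Probe phase: each partition is handled independently, as on a worker.
    for partition in large_partitions:
        for row in partition:
            match = lookup.get(row[key])
            if match is not None:
                joined.append({**row, **match})
    return joined


if __name__ == "__main__":
    facts = [
        [{"sku": 1, "qty": 3}, {"sku": 2, "qty": 1}],  # partition 0
        [{"sku": 1, "qty": 5}, {"sku": 9, "qty": 2}],  # partition 1
    ]
    dims = [{"sku": 1, "name": "pen"}, {"sku": 2, "name": "ink"}]
    for row in broadcast_hash_join(facts, dims, "sku"):
        print(row)  # sku 9 has no match, so it is dropped (inner join)
```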

3. Caching for Repeated Access

Next, caching can improve performance for repeated reads.

- Use Delta Cache for faster I/O on frequently scanned files.

- Use in-memory tables (cache table) when the same dataset is used across multiple steps.

However, only cache when needed and uncache when done to avoid memory pressure.

4. Reducing Shuffles and Spills

Shuffle operations cause major slowdowns. You can reduce them by:

- Using proper partitioning to minimize data movement.

- Avoiding wide transformations when possible.

- Persisting intermediate results with persist() to prevent recomputation.

- Ensuring that skewed data does not create imbalanced tasks.
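
For the last point, Spark's AQE can split skewed join partitions when `spark.sql.adaptive.skewJoin.enabled` is on. When that is not enough, a common manual technique is key salting, sketched below in plain Python as one way to act on that bullet. Rows with a hot key get a random suffix so they spread over several shuffle partitions. The matching rows on the other join side are replicated once per suffix, so every salted key still finds its match. The helper names and the choice of 8 salts are assumptions.

```python
import random
from collections import Counter
from typing import List, Set


def salt_keys(keys: List[str], hot_keys: Set[str], num_salts: int, seed: int = 0) -> List[str]:
    """Append a random salt (0..num_salts-1) to each hot key so its rows
    hash to several partitions instead of one. Seeded for reproducibility."""
    rng = random.Random(seed)
    return [f"{k}#{rng.randrange(num_salts)}" if k in hot_keys else k for k in keys]


def explode_hot_keys(dim_keys: List[str], hot_keys: Set[str], num_salts: int) -> List[str]:
    """Replicate each hot key on the small join side once per salt value."""
    out: List[str] = []
    for k in dim_keys:
        if k in hot_keys:
            out.extend(f"{k}#{i}" for i in range(num_salts))
        else:
            out.append(k)
    return out


if __name__ == "__main__":
    fact_keys = ["US"] * 1000 + ["FR"] * 10  # "US" is a hot, skewed key
    salted = salt_keys(fact_keys, {"US"}, num_salts=8)
    # The 1000 "US" rows are now split across 8 distinct join keys.
    print(Counter(salted).most_common(3))
    print(explode_hot_keys(["US", "FR"], {"US"}, num_salts=8))
```

The trade-off is that the small side grows by a factor of `num_salts` for each hot key. Salting is therefore usually applied only to the few keys that actually cause stragglers.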

5. Code Review & Best Practices

Regular code reviews help eliminate inefficiencies:

- Remove outdated Spark configs that may slow down workloads.

- Avoid UDFs when native Spark functions exist; they are faster and more optimized.

- Use built-in SQL functions and window functions for performance and readability.

Monitoring, Metrics & Cost Controls

Strong monitoring is essential for sustaining high-performance Databricks workloads. Even after optimizing storage, compute, and queries, performance can decline over time due to data growth, schema changes, or new processing patterns. Therefore, continuous visibility into system behavior helps identify issues early and maintain efficiency.

1. Use Databricks Built-In Monitoring

To begin with, Databricks provides powerful built-in tools that help engineers understand how jobs execute internally:

- Query Profile shows detailed operator-level information such as scan time, shuffle cost, and join performance.

- Spark UI provides a visual breakdown of tasks, stages, shuffle operations, skewed tasks, and execution timelines.

- Ganglia Metrics display low-level system stats like CPU load, memory usage, disk I/O, and network throughput.

These tools make it easier to troubleshoot bottlenecks such as slow stages, imbalanced tasks, or memory pressure.

2. Track KPIs

Next, it is important to track key performance indicators that capture overall workload health:

- Job duration to identify slow-running or regressing jobs.

- Resource usage to detect underutilized or overloaded clusters.

- Shuffle volume to understand data movement costs.

- I/O wait time to find storage bottlenecks.

- Cache hit rate for evaluating caching effectiveness.

Monitoring these KPIs over time helps detect patterns and take corrective actions before performance degrades.
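
Tracking these KPIs can start as simply as summarizing per-run metrics. The sketch assumes you have already exported run records, for example from the Spark UI or your own logging. The `JobRun` fields, the sample numbers, and the 1.5× regression threshold are all hypothetical.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass
class JobRun:
    """One run of a job; field names are illustrative, not a Databricks schema."""
    duration_s: float
    shuffle_gb: float
    input_gb: float
    cache_hits: int
    cache_reads: int


def kpi_summary(runs: List[JobRun]) -> Dict[str, float]:
    """Aggregate a few of the KPIs listed above across runs."""
    reads = sum(r.cache_reads for r in runs)
    return {
        "median_duration_s": median(r.duration_s for r in runs),
        # Shuffled data relative to input: higher means more data movement.
        "shuffle_to_input": sum(r.shuffle_gb for r in runs) / sum(r.input_gb for r in runs),
        "cache_hit_rate": sum(r.cache_hits for r in runs) / reads if reads else 0.0,
    }


def is_regression(history_s: List[float], latest_s: float, factor: float = 1.5) -> bool:
    """Flag a run that is `factor` times slower than the historical median."""
    return latest_s > factor * median(history_s)


if __name__ == "__main__":
    runs = [
        JobRun(600, 50, 100, 80, 100),
        JobRun(620, 60, 100, 90, 100),
        JobRun(1000, 150, 100, 30, 100),  # slow run with a poor cache hit rate
    ]
    print(kpi_summary(runs))
    print(is_regression([r.duration_s for r in runs[:-1]], runs[-1].duration_s))  # True
```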

3. Cost Controls

Since Databricks runs on cloud compute, cost optimization is equally important. Useful strategies include:

- Cluster tagging for cost allocation and visibility.

- Quota limits to prevent excessive resource usage.

- Auto termination to stop idle clusters from consuming compute.

- Resource clean-up to remove unused jobs, tables, or clusters.

These practices avoid waste and support predictable budgeting.
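
Several of these cost controls can be captured in a cluster definition. The sketch below builds a payload using field names from the Databricks Clusters API (`autoscale`, `autotermination_minutes`, `custom_tags`). The runtime version string, node type, tag keys, and default limits are placeholder assumptions to adapt to your workspace and cloud. Quota limits are typically enforced separately, for example through cluster policies.

```python
import json
from typing import Dict


def cluster_spec(name: str, team: str, cost_center: str,
                 min_workers: int = 1, max_workers: int = 4,
                 idle_minutes: int = 30) -> Dict:
    """Build a cluster definition with cost controls baked in (sketch)."""
    # This sketch's own guardrails, not Databricks-enforced rules.
    if min_workers < 1 or max_workers < min_workers:
        raise ValueError("this sketch requires 1 <= min_workers <= max_workers")
    if idle_minutes <= 0:
        # A value of 0 would disable auto termination, so reject it here.
        raise ValueError("auto termination must be enabled (idle_minutes > 0)")
    return {
        "cluster_name": name,
        "spark_version": "15.4.x-scala2.12",  # placeholder runtime version
        "node_type_id": "i3.xlarge",          # placeholder node type (AWS)
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        "autotermination_minutes": idle_minutes,  # stop idle clusters
        "custom_tags": {"team": team, "cost_center": cost_center},  # cost allocation
    }


if __name__ == "__main__":
    print(json.dumps(cluster_spec("nightly-etl", "data-eng", "cc-1234"), indent=2))
```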

4. Observability

Beyond standard metrics, advanced observability tools help detect deeper issues such as:

- Data skew, where a few tasks process most of the data.

- Memory bottlenecks, often caused by large joins or caching.

- Long GC cycles, slowing down execution.

- Driver bottlenecks, where the driver becomes overloaded.

These insights enable proactive tuning and reduce downtime.
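
Data skew, the first issue in the list, often shows up as one task running far longer than its peers. The following minimal detection sketch assumes you have collected per-task durations for each stage, for example from the Spark UI, and compares the slowest task against the stage median. The input shape and the 5× threshold are assumptions, not Spark defaults.

```python
from statistics import median
from typing import Dict, List


def find_skewed_stages(task_durations_s: Dict[int, List[float]],
                       ratio: float = 5.0) -> List[int]:
    """Return stage IDs whose slowest task takes at least `ratio` times the
    median task duration, a common signature of data skew."""
    skewed: List[int] = []
    for stage_id, durations in task_durations_s.items():
        med = median(durations)
        if med > 0 and max(durations) >= ratio * med:
            skewed.append(stage_id)
    return skewed


if __name__ == "__main__":
    stages = {
        3: [10, 11, 9, 12, 10, 240],  # one straggler task
        4: [20, 22, 21, 19],          # evenly balanced
    }
    print(find_skewed_stages(stages))  # [3]
```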

Real-World Use Cases & Success Stories

Real-world success stories show how performance optimization on the Databricks platform can unlock value, speed, and reliability at scale.

1. Block (Financial Services)

Block, the parent company of Cash App and Square, migrated to the Databricks Lakehouse platform and adopted its unified governance architecture (Unity Catalog). As a result, it achieved a 12× reduction in compute cost and a 20% reduction in data egress cost, and it now manages 12 PB of data on the platform.

2. Aditya Birla Fashion & Retail Ltd. (Retail)

ABFRL implemented Databricks’ Data Intelligence Platform, which enabled 20× faster machine learning (including serving markdown marketing models) and faster BI reporting. The company also reported achieving “more value on less infrastructure spend.”

Key Takeaways

- Migrating to modern platforms like Databricks can lead to massive compute cost savings and performance improvements.

- Unified governance and catalog solutions (e.g., Unity Catalog) help large organizations scale analytics while maintaining security and compliance.

- Retail and financial services organizations both benefit from architecture that enables faster ML, BI, and decision-making at scale.

Kanerika + Databricks: Building Intelligent Data Ecosystems for Enterprises

Kanerika helps businesses transform their data architecture with AI-powered analytics and automation. We provide end-to-end AI and cloud transformation solutions for healthcare, fintech, manufacturing, retail, education, and government services. Our expertise spans data migration, engineering, business intelligence, and automation, delivering measurable results for organizations.

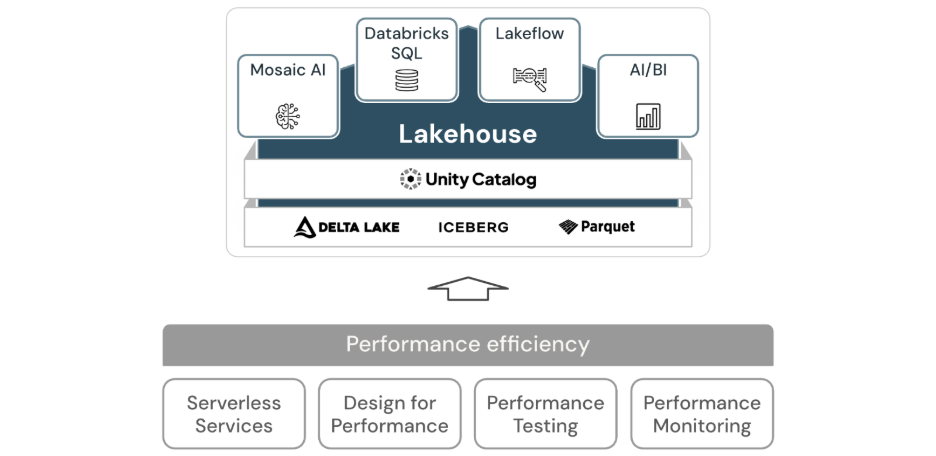

As a Databricks Partner, we use the Lakehouse Platform to bring together data management and analytics. We rely on Delta Lake for reliable storage, Unity Catalog for governance, and Mosaic AI for building and managing models. This helps businesses move beyond fragmented big data systems to a unified, cost-effective platform that supports ingestion, processing, machine learning, and real-time analytics.

Kanerika ensures security and compliance with global standards such as ISO 27001, ISO 27701, SOC 2, and GDPR. With solid experience in Databricks migration, optimization, and AI integration, we help enterprises turn messy data into valuable insights and accelerate innovation.

FAQs

1. What is Databricks Performance Optimization?

It refers to a set of best practices, tools, and techniques that improve the speed, efficiency, and cost-effectiveness of Databricks workloads, including Spark jobs, Delta Lake tables, and SQL queries.

2. Why is performance optimization important in Databricks?

Without proper tuning, pipelines run slower, clusters consume more compute, and cloud costs increase. Optimization ensures faster insights, stable jobs, and efficient resource usage.

3. What are the most common performance bottlenecks in Databricks?

Typical issues include small files, data skew, inefficient joins, large shuffles, under-sized clusters, old runtime versions, and poor partitioning strategies.

4. How can I optimize Delta Lake tables?

Use OPTIMIZE for compaction, apply Z-ordering on frequently filtered columns, avoid tiny partitions, enable data skipping, and leverage Predictive Optimization for Unity Catalog tables.

5. Does cluster size affect performance?

Yes. More worker nodes often improve job throughput. Use autoscaling, instance pools, and the latest Databricks Runtime or Photon for optimal performance.

6. What tools help monitor performance issues?

Databricks provides the Spark UI, Query Profile, and Ganglia. For deeper observability, tools like Unravel detect skew, GC issues, driver bottlenecks, and memory pressure.

7. How can I reduce Databricks costs while improving performance?

Optimize storage, tune queries, right-size clusters, use spot instances, enable auto-termination, and monitor KPIs such as shuffle volume, job duration, and cache hit rate.