Did you know that humans create approximately 2.5 quintillion bytes of data every day? Data is the new oil, but it’s useless without refinement. ETL and ELT are the refineries of the information age. Now, the big question is which of these two data processing techniques is suitable for your business. This sparks the ETL vs ELT debate.

ELT (Extract, Load, and Transform) and ETL (Extract, Transform, and Load) are two different ways of data integration. Each has advantages and considerations that organizations may have to take into account when looking for means of improving their data handling processes. The choice between ETL and ELT is more significant now than ever because it determines how quickly, flexibly, and effectively raw data can be turned into actionable insights.

Data landscapes are dynamic and constantly expanding. The ETL vs ELT debate in data management stimulates innovation and pushes businesses to adapt to the ever-evolving demands of modern data environments.

What is ETL?

ETL stands for Extract, Transform, Load. It is a fundamental process in data warehousing and integration. It functions as a pipeline, receiving raw data from multiple sources, transforming and cleaning it, and then delivering it to a predetermined destination for analysis. ETL involves three primary stages: extraction, transformation, and loading. Let's look at each stage in turn:

Extraction

Extraction is the initial step where data is gathered from various sources, such as databases, spreadsheets, or flat files, and transferred to a staging area. This is similar to a library with books scattered across different rooms. Extraction involves retrieving this data from its original locations. ETL tools can connect to various sources, schedule automated extractions, and handle different data formats.

Data is read from source systems and stored in a staging area for further processing. This phase ensures that data is collected accurately and completely from different sources before any modifications are made. The retrieved data might be inconsistent, incomplete, or incompatible.

Transformation

Transformation involves converting and organizing the extracted data into a format suitable for loading into the data warehouse. Transformation is where the magic happens. Think of it as organizing the library books. Data is cleaned by removing duplicates, correcting errors, and filling in missing values. It can also be standardized to a consistent format and structure.

Additionally, transformations like filtering, aggregation, and calculations can be applied to meet specific analytical needs. Transformation ensures that the data is structured, formatted, and cleansed for accurate analysis and reporting.
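To make the transformation stage concrete, here is a minimal Python sketch of the cleaning steps described above: deduplication, standardization, filling in missing values, and a simple aggregation. The sample rows, the field names (`order_id`, `country`, `amount`), and the choice to fill missing amounts with `0.0` are all assumptions made for illustration, not part of any particular ETL tool.

```python
from collections import defaultdict

# Hypothetical extracted rows: a duplicate, inconsistent casing, a missing value.
raw_rows = [
    {"order_id": 1, "country": "us", "amount": "10.50"},
    {"order_id": 1, "country": "us", "amount": "10.50"},   # exact duplicate
    {"order_id": 2, "country": " US ", "amount": None},    # missing amount
    {"order_id": 3, "country": "de", "amount": "7"},
]

def clean(rows, default_amount=0.0):
    """Deduplicate on order_id, standardize country codes, fill missing amounts."""
    seen = set()
    cleaned = []
    for row in rows:
        if row["order_id"] in seen:
            continue  # drop duplicates, keeping the first occurrence
        seen.add(row["order_id"])
        amount = row["amount"]
        cleaned.append({
            "order_id": row["order_id"],
            "country": row["country"].strip().upper(),
            "amount": float(amount) if amount is not None else default_amount,
        })
    return cleaned

def total_by_country(rows):
    """Aggregate cleaned rows into a total amount per country."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["country"]] += row["amount"]
    return dict(totals)

cleaned_rows = clean(raw_rows)
totals = total_by_country(cleaned_rows)
```

In a real pipeline the fill-in rule for missing values would depend on the business meaning of the field; a default of zero is only one possible choice.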

Loading

Loading is the final step, where the transformed data is loaded into the target data warehouse, which is similar to carefully placing the organized books on shelves in the library. The loading process ensures the data arrives at the target system efficiently and without errors.

In this step, data is physically structured and loaded into the warehouse, making it available for analysis and reporting. The ETL process is iterative, repeating as new data is added to the warehouse to maintain accuracy and completeness.
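Because the process repeats as new data arrives, the loading step is often written so that re-running it does not create duplicate rows. The sketch below shows one way to do that, using Python's built-in `sqlite3` module as a stand-in for the warehouse. The `orders` table and its columns are hypothetical, and `INSERT OR REPLACE` is SQLite-specific syntax; other warehouses typically offer their own upsert or merge statement.

```python
import sqlite3

def load(conn, rows):
    """Upsert transformed rows so that repeated runs do not create duplicates."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, country TEXT, amount REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO orders (order_id, country, amount) "
        "VALUES (:order_id, :country, :amount)",
        rows,
    )
    conn.commit()

# An in-memory database stands in for the target data warehouse.
conn = sqlite3.connect(":memory:")

# First run loads one order; the second run corrects it and adds a new one.
load(conn, [{"order_id": 1, "country": "US", "amount": 10.5}])
load(conn, [{"order_id": 1, "country": "US", "amount": 12.0},
            {"order_id": 2, "country": "DE", "amount": 7.0}])

row_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
latest_amount = conn.execute(
    "SELECT amount FROM orders WHERE order_id = 1"
).fetchone()[0]
```

After both runs the table holds two rows, and order 1 carries the most recent amount rather than appearing twice.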

A Glimpse of Kanerika’s Data Integration Project

Business Context

The client is a leading technology company offering spend management solutions. They struggled to manage end-to-end invoice processing, as handling multiple file formats proved cumbersome and time-consuming.

We standardized the invoice data exchange to reduce errors and enhance business transaction efficiency. With automated validation and data transformation, we achieved streamlined communication, accelerated data exchange, and improved accuracy.

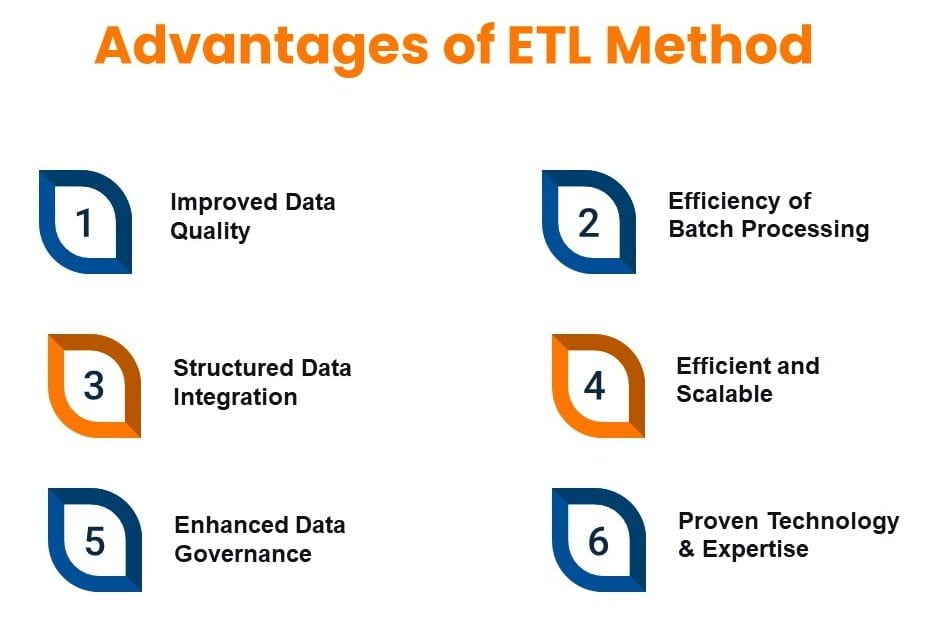

Advantages and Limitations of ETL

Advantages

1. Improved Data Quality

ETL enhances data quality by removing inconsistencies and anomalies. It allows for thorough data cleansing and transformation before the data is loaded into the data warehouse. This ensures the data is accurate, complete, and consistent, leading to reliable insights. Techniques like deduplication, error correction, and standardization happen during the transformation stage.

2. Structured Data Integration

ETL simplifies the integration of data from multiple sources, making it more accessible and usable for analysis. This method excels at handling well-defined, structured data from traditional sources like relational databases. The transformation step allows for conversion into a format optimized for data warehouse queries and analysis.

3. Efficiency of Batch Processing

ETL works effectively for processing massive volumes of data in batches. To minimize interference with operational systems, scheduled ETL jobs can be run during off-peak hours or overnight.

4. Improved Data Governance

Since ETL procedures are usually clearly defined and documented, it is simpler to keep track of data lineage (where each data point originated and how it was transformed along the way). Data security and regulatory compliance rely on this transparency.

5. Proven Technology and Expertise

ETL has been around for many years, and there are several trustworthy and well-developed ETL technologies out there. Building and managing ETL pipelines is a skill that many data professionals have.

6. Efficient and Scalable

Automation in ETL processes reduces manual effort, leading to quicker and error-free workflows. Moreover, modern ETL tools, especially cloud-based solutions, offer scalability to handle large volumes of data effectively.

Limitations

1. Latency in Insights

Since ETL processes data in batches, it may cause delays in data updates. This might not be the best option for situations requiring real-time or almost real-time insights.

2. Complexity and Development Time

Creating and managing intricate ETL pipelines can take a lot of effort and specific expertise. Costs for development and continuous maintenance may increase as a result.

3. Limited Flexibility for Unstructured Data

Unstructured data, such as direct sensor data or social media feeds, is difficult for ETL to handle directly. Additional pre-processing or conversion operations may be necessary.

4. Resource Intensive for Large Data Volumes

Huge datasets may require a lot of processing and storage power to transform before loading, which becomes a major concern in the big data era.
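One common way to keep batch transformation from exhausting memory on large datasets is to process records in fixed-size chunks instead of all at once. The following Python sketch illustrates the idea under simple assumptions: `transform` is a placeholder rule, the batch size of 4 is arbitrary, and `load` is any callable that accepts a list of records.

```python
from itertools import islice

def batches(records, size):
    """Yield lists of at most `size` records from any iterable."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch

def transform(record):
    """Placeholder transformation: normalize a name field."""
    return {"id": record["id"], "name": record["name"].strip().title()}

def run_batch_job(records, size, load):
    """Transform and load one batch at a time; return the number of batches."""
    count = 0
    for batch in batches(records, size):
        load([transform(r) for r in batch])
        count += 1
    return count

# Hypothetical source: a generator, so the full dataset is never materialized.
source = ({"id": i, "name": f"  customer {i} "} for i in range(10))
loaded = []
batch_count = run_batch_job(source, size=4, load=loaded.extend)
```

Only one batch is held in memory at a time, which trades some throughput for a predictable memory footprint.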

What is ELT?

ELT stands for Extract, Load, Transform. It’s a data processing approach where data is first extracted from various sources, then loaded into a data warehouse or data lake in its raw format, and finally transformed as needed for analysis. This is in contrast to ETL (Extract, Transform, Load) where transformation happens before loading. Let’s discuss the three main stages: extraction, loading, and transformation.

Extraction

During the extraction phase, data is collected from multiple sources and loaded directly into the target system. Similar to ETL, ELT starts by retrieving data from various sources, including databases, customer relationship management (CRM) systems, social media platforms, and more. This phase focuses on efficiently moving data from source to target without any intermediate processing.

Loading

This is the stage where the extracted data is loaded into the target system, typically a data warehouse or data lake, a vast storage repository for all your data, structured or unstructured. Think of dumping all the library books in a central location. Loading ensures that data is efficiently stored in the target system for subsequent analysis and reporting.

Transformation

Transformation occurs after the data has been loaded into the target system, where it is then processed and transformed as needed. Data manipulation happens within the data lake. Cleaning, standardization, filtering, aggregation, and calculations can all be performed on-demand for specific queries. Imagine having librarians sort and organize the books only when a specific topic is requested. Transformation post-loading allows for flexibility in data processing, enabling organizations to adapt data for various analytical needs.
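The load-first, transform-later flow can be sketched in a few lines of Python. Here an in-memory SQLite database stands in for the data warehouse or data lake, raw records are loaded untouched, and the transformation is expressed as a SQL view that runs inside the target only when it is queried. The table, view, and column names are hypothetical.

```python
import sqlite3

# An in-memory SQLite database stands in for the warehouse or data lake.
conn = sqlite3.connect(":memory:")

# Load: store the extracted records as-is, with everything kept as text.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, country TEXT, amount TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [("1", "us", "10.50"), ("1", "us", "10.50"), ("2", " US ", None), ("3", "de", "7")],
)

# Transform: defined inside the target system and evaluated when queried.
conn.execute("""
    CREATE VIEW orders_by_country AS
    SELECT country, SUM(amount) AS total
    FROM (
        SELECT DISTINCT
            CAST(order_id AS INTEGER) AS order_id,
            UPPER(TRIM(country)) AS country,
            COALESCE(CAST(amount AS REAL), 0.0) AS amount
        FROM raw_orders
    )
    GROUP BY country
    ORDER BY country
""")

totals = dict(conn.execute("SELECT country, total FROM orders_by_country").fetchall())
raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
```

The raw table still contains every original row, including the duplicate, so a different transformation can be defined later without re-extracting anything from the source.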

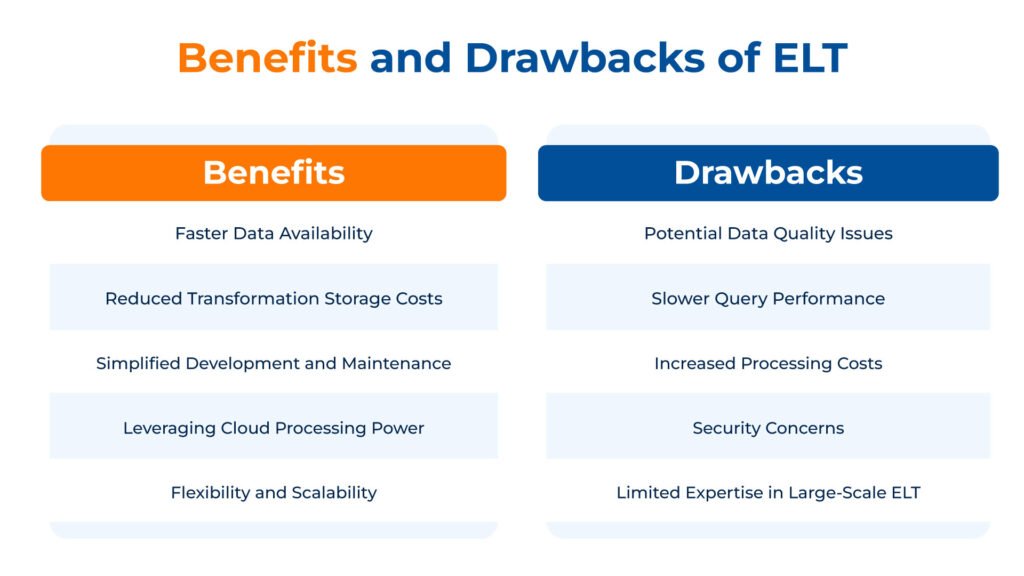

Benefits and Drawbacks of ELT

Benefits

1. Faster Data Availability

By loading data first, ELT provides quicker access to raw information for exploration and analysis. This is crucial for real-time or near-real-time insights.

2. Flexibility and Scalability

ELT excels at handling large and diverse datasets, including unstructured data like social media feeds or sensor readings. The raw data in the data lake can be transformed for various purposes later, providing greater flexibility for evolving analytical needs.

3. Reduced Storage Costs for Transformation

ELT avoids pre-transformation, potentially reducing storage requirements for intermediate processed data. This can be a significant cost-saving for massive datasets.

4. Simplified Development and Maintenance

ELT pipelines can be simpler to set up initially compared to complex ETL transformations. This reduces development time and ongoing maintenance overhead.

5. Leveraging Cloud Processing Power

Cloud data warehouses and data lakes often provide on-demand processing power for data transformation. This allows scaling processing resources to handle large data volumes efficiently.

Drawbacks

1. Potential Data Quality Issues

Since data is loaded raw, data quality checks and transformations happen later. This can lead to issues with data consistency and accuracy if not addressed properly within the data lake.

2. Slower Query Performance

Raw data in the data lake may require additional processing before analysis, potentially impacting query performance compared to pre-transformed data in ETL.

3. Increased Processing Costs

While storage costs may be lower, complex transformations within the data lake can incur processing costs depending on the cloud platform used.

4. Security Concerns

Loading raw data can raise security concerns if sensitive information isn’t anonymized or filtered before entering the data lake. Proper data governance practices are crucial.

5. Limited Expertise in Large-Scale ELT

While ELT tools are emerging, expertise in managing and optimizing large-scale data lakes for transformation is still developing.
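The data quality drawback is usually handled by running checks against the raw tables after loading. Below is a minimal sketch of that idea in Python, again with SQLite standing in for the target system. The `raw_customers` table, the three rules, and the very loose email pattern are illustrative assumptions rather than a recommended rule set.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_customers (customer_id TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO raw_customers VALUES (?, ?)",
    [("1", "a@example.com"), ("2", None), ("2", "b@example.com"), ("3", "not-an-email")],
)

# Each hypothetical check counts rows that violate a rule; 0 means the check passes.
CHECKS = {
    "missing_email": "SELECT COUNT(*) FROM raw_customers WHERE email IS NULL",
    "duplicate_ids": """
        SELECT COUNT(*) FROM (
            SELECT customer_id FROM raw_customers
            GROUP BY customer_id HAVING COUNT(*) > 1
        )
    """,
    "malformed_email": (
        "SELECT COUNT(*) FROM raw_customers "
        "WHERE email IS NOT NULL AND email NOT LIKE '%_@_%'"
    ),
}

def run_checks(connection):
    """Run every check and return {check_name: violation_count}."""
    return {name: connection.execute(sql).fetchone()[0] for name, sql in CHECKS.items()}

report = run_checks(conn)
failed = [name for name, violations in report.items() if violations > 0]
```

A report like this can gate downstream transformations, so that analysts are not served results built on rows that failed a check.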

ETL vs ELT: Key Differences

| Aspect | ETL | ELT |
| --- | --- | --- |
| Order of Operations | Extract -> Transform -> Load | Extract -> Load -> Transform |
| Data Staging | Requires a separate staging area for data transformation | Data is loaded directly into the target system |
| Data Transformation | Transformations occur before data is loaded, ensuring data quality upfront | Transformations happen on-demand within the target system |
| Data Latency | Can have higher latency due to upfront transformations | Offers lower latency as data is readily available for analysis |
| Data Quality | Generally higher data quality due to pre-processing | Requires additional data governance measures within the data lake |
| Scalability | Can struggle with very large datasets | Scales well for big data volumes |
| Flexibility | Less flexible as transformations are pre-defined | More flexible as transformations can be adapted for specific needs |
| Cost | Can be expensive for complex ETL processes and large datasets | Potentially more cost-effective, especially for big data |
| Suitability | Ideal for situations requiring high data quality and pre-defined analysis needs | Well-suited for big data environments, real-time analytics, and agile data exploration |

ETL vs ELT: Integration with Modern Technologies

ETL and ELT strategies align with emerging technologies like AI, machine learning, and IoT in different ways. ETL is better suited to structured data and can help with data privacy and compliance by cleaning sensitive data before it is loaded into the data warehouse. ELT is more flexible in handling unstructured data and can be more cost-effective, especially with cloud-based data warehouse solutions.

AI & Machine Learning

ETL:

- ETL can be used to prepare high-quality training data for machine learning models.

- By transforming data upfront in the ETL process, you can ensure data consistency and remove anomalies that might impact model training.

- However, ETL might introduce latency, delaying the availability of data for training models requiring real-time updates.

ELT:

- ELT allows for storing all data (including potentially useful data for future models) in the data lake.

- Machine learning pipelines can then access and transform this data on-demand for training various models.

- This flexibility is beneficial for exploring new machine learning use cases without pre-defining transformations in ETL.

IoT (Internet of Things)

ETL:

- ETL can be used to extract data from diverse IoT sensors and devices, potentially performing initial transformations to ensure compatibility with the data warehouse schema.

- This structured data can then be readily used for traditional data warehousing and business intelligence tasks.

ELT:

- ELT is well-suited for handling the high volume and velocity of data generated by IoT devices.

- The raw data can be loaded into the data lake, and transformations can be applied later based on specific analytics needs.

- This is useful for exploratory analysis of sensor data or identifying patterns that might not be initially apparent.
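The ELT approach to sensor data described above amounts to storing payloads untouched and deciding how to interpret them only at analysis time. Here is a small Python sketch of that pattern. The device names, the `temp_c` and `temp_f` fields, and the in-memory list used as a stand-in for the data lake are all hypothetical.

```python
import json
from statistics import mean

# Load: raw sensor payloads are stored untouched, one JSON string per reading.
raw_store = []

def ingest(payload: str) -> None:
    """Append a raw payload to the store without parsing or validating it."""
    raw_store.append(payload)

for payload in (
    '{"device": "pump-1", "temp_c": 41.5}',
    '{"device": "pump-1", "temp_c": 43.5, "vibration": 0.2}',
    '{"device": "pump-2", "temp_f": 104.0}',   # a different unit and field name
):
    ingest(payload)

def average_temp_c(store):
    """Transform on read: parse, unify units, and average per device."""
    readings = {}
    for payload in store:
        record = json.loads(payload)
        if "temp_c" in record:
            temp = record["temp_c"]
        elif "temp_f" in record:
            temp = (record["temp_f"] - 32) * 5 / 9
        else:
            continue  # no temperature in this reading
        readings.setdefault(record["device"], []).append(temp)
    return {device: mean(values) for device, values in readings.items()}

averages = average_temp_c(raw_store)
```

Because ingestion never rejects or reshapes a payload, fields such as `vibration` stay available for analyses that were not anticipated when the data was collected.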

Cloud Platforms & Data Lakes

ETL:

- Modern ETL tools are increasingly cloud-based, offering scalability and easier integration with cloud data warehouses like Snowflake or Google BigQuery.

- ETL pipelines can extract data from various cloud sources and transform it before loading it into the target data warehouse.

ELT:

- ELT leverages cloud data lakes like Amazon S3 or Azure Data Lake Storage.

- These platforms offer flexible storage for the raw, semi-structured, and unstructured data that ELT excels at handling.

- Cloud-based data lake management tools can then be used to catalog, secure, and transform data within the lake for further analysis.

ETL vs ELT: Powering Data Integration Across Industries

ETL and ELT play crucial roles in transforming raw data into valuable insights across various industries. Here’s a glimpse into how these approaches are utilized in different sectors:

1. Finance

Financial institutions heavily rely on ETL for regulatory compliance. Consolidating data from trading platforms, customer accounts, and risk management systems requires data cleansing, standardization, and transformation before loading into data warehouses for reporting and audit purposes.

Fraud detection and real-time market analysis benefit from ELT. Raw transaction data can be quickly loaded into a data lake, allowing for near real-time analysis to identify fraudulent activities and capitalize on market fluctuations.

2. Healthcare

ETL ensures patient data privacy and adherence to HIPAA regulations. Extracting data from Electronic Health Records (EHRs), lab results, and billing systems requires transformation and cleaning before loading into data warehouses for research, quality improvement initiatives, and billing analysis.

Pharmaceutical companies leverage ELT for clinical trial data analysis. Large datasets from wearables, patient sensors, and medical imaging can be readily available in a data lake for faster analysis and drug development processes.

3. Retail

ETL streamlines customer data management for targeted marketing campaigns. Extracting data from CRM systems, loyalty programs, and point-of-sale systems allows for data enrichment and segmentation before loading into data warehouses for customer behavior analysis and targeted promotions.

Real-time customer behavior analysis thrives with ELT. Website clickstream data, social media interactions, and in-store sensor data can be loaded into a data lake, enabling near real-time personalization of product recommendations and in-store promotions.

4. Manufacturing

ETL ensures quality control and production efficiency. Extracting data from machine sensors, production lines, and quality control checks requires transformation and validation before loading into data warehouses for identifying production bottlenecks and optimizing manufacturing processes.

Predictive maintenance benefits from ELT. Sensor data from equipment can be readily available in a data lake for real-time monitoring and analysis, allowing for preventive maintenance and avoiding costly downtime.

5. Media & Entertainment

ETL streamlines content and audience analysis for marketers. Extracting data from streaming platforms, social media, and content distribution networks requires transformation and filtering before loading into data warehouses for optimizing content delivery, personalization, and audience segmentation for advertising campaigns.

Real-time social media sentiment analysis utilizes ELT. Social media data can be quickly loaded into a data lake, allowing for near real-time analysis of audience reactions to content and identifying emerging trends.

Factors to Consider While Choosing Between ETL and ELT

1. Data Size and Complexity

ETL: Well-suited for smaller, well-structured datasets where complex transformations are required to ensure data quality before loading into the warehouse.

ELT: Ideal for massive and diverse datasets (structured, semi-structured, unstructured) where initial transformations might not be necessary.

2. Data Quality Requirements

ETL: Offers more control over data quality upfront during the transformation stage, filtering anomalies and ensuring consistency before loading.

ELT: Requires additional attention to data quality within the data lake, potentially involving separate processes after loading.

3. Speed of Insights

ELT: Provides faster access to raw data since transformation happens later, enabling near-real-time analytics for time-sensitive scenarios.

ETL: Can introduce latency due to the upfront transformation step, potentially delaying the availability of insights.

4. Technical Expertise

ETL: Requires expertise in designing and maintaining complex ETL pipelines, especially for intricate transformations.

ELT: ELT pipelines might be simpler to set up initially, but managing large-scale data lakes for efficient transformation demands expertise in data lake management tools and big data processing engines.

5. Security Considerations

ETL: Allows for data filtering and anonymization during transformation, potentially enhancing data security before loading into the warehouse.

ELT: Requires robust security measures for the data lake, as sensitive information might be present in the raw data before any transformations occur.
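The filtering step mentioned under security considerations can be as simple as replacing sensitive fields with keyed hashes before a record leaves the transformation stage. The Python sketch below uses the standard `hmac` and `hashlib` modules. The field list, the sample record, and the hard-coded key are assumptions for illustration; a keyed hash is a form of pseudonymization, which may or may not satisfy a given regulation's definition of anonymization.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would come from a secrets manager.
SECRET_KEY = b"replace-with-a-real-secret"
SENSITIVE_FIELDS = {"email", "ssn"}

def pseudonymize(value: str) -> str:
    """Return a keyed SHA-256 digest so the raw value is not stored."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_record(record: dict) -> dict:
    """Replace sensitive fields with digests and leave other fields unchanged."""
    masked = {}
    for key, value in record.items():
        if key in SENSITIVE_FIELDS and value is not None:
            masked[key] = pseudonymize(value)
        else:
            masked[key] = value
    return masked

record = {"customer_id": 7, "email": "jane@example.com", "plan": "pro"}
masked = mask_record(record)
```

Since the same input always yields the same digest under a given key, masked records can still be joined on the hashed field without exposing the original value.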

Our Case Study Video

Business Context

The client is a multi-site global IT enterprise operating in various geographies. They needed help with fragmented HR systems and data islands, primarily resulting from varying local regulations and M&As.

We implemented a common and integrated Data Warehouse on Azure SQL and enabled a Power BI dashboard, consolidating HR data and providing the client with a comprehensive view of their human resources.

Kanerika: Empowering Businesses with Expert Data Processing Services

Kanerika, one of the globally recognized technology consulting firms, offers exceptional data processing, analysis, and integration services that help businesses address their data challenges and utilize the full potential of data. Our team of skilled data professionals is equipped with the latest tools and technologies, ensuring top-quality data that’s both accessible and actionable.

Our flagship product, FLIP, an AI-powered data operations platform, revolutionizes data transformation with its flexible deployment options, pay-as-you-go pricing, and intuitive interface. With FLIP, businesses can streamline their data processes effortlessly, making data management a breeze.

Kanerika also offers exceptional AI/ML and RPA services, empowering businesses to outsmart competitors and propel towards success. Experience the difference with Kanerika and unleash the true potential of your data. Let us be your partner in innovation and transformation, guiding you towards a future where data is not just information but a strategic asset driving your success.

Frequently Asked Questions

What is the difference between ETL and ELT?

ETL (Extract, Transform, Load) cleans and shapes data *before* loading it into the target database, like pre-cooking a meal. ELT (Extract, Load, Transform) loads raw data first, then transforms it in the data warehouse – think of it as cooking the meal after it’s already on the plate. ELT leverages the target system’s processing power, while ETL relies more on dedicated transformation tools. The choice depends on data volume and target system capabilities.

What is the difference between ETL and ELT in BigQuery?

ETL (Extract, Transform, Load) cleans and prepares data *before* it enters BigQuery, demanding more upfront processing power. ELT (Extract, Load, Transform) moves raw data into BigQuery first, then transforms it within the cloud, leveraging BigQuery’s processing capabilities for efficiency and scalability. Essentially, ELT shifts the heavy lifting to the powerful data warehouse itself. This often results in faster initial ingestion and more flexibility.

Is Snowflake an ETL tool or ELT tool?

Snowflake isn’t strictly an ETL *tool*, but rather a data warehouse that excels at the *’L’ (Load)* phase of both ETL and ELT processes. It’s designed to efficiently ingest and store vast amounts of data, making it a preferred destination for both approaches. Whether you use ETL or ELT depends on your data preparation and transformation preferences, not Snowflake’s capabilities.

Is Databricks ETL or ELT?

Databricks isn’t strictly ETL *or* ELT; it’s a platform enabling both. You can build either type of pipeline using its tools. The choice depends on your data volume, transformation complexity, and preferred data warehousing strategy. Essentially, Databricks provides the flexibility to choose the best approach for your specific needs.

What are ELT tools?

ELT tools are software that extracts data from source systems, loads it in raw form into a data warehouse or data lake, and supports transforming it there. They range from managed ingestion services such as Fivetran and Airbyte to transformation frameworks such as dbt, which run on top of platforms like Snowflake or Google BigQuery. Essentially, they are designed to move data quickly and push the heavy transformation work onto the target system.

What is the difference between Delta Lake and Data Lake?

A data lake is a raw, unstructured repository of all your data; think of it as a giant data dump. Delta Lake, in contrast, is a *structured* layer *on top* of a data lake, providing ACID transactions and schema enforcement. It essentially brings order and reliability to the chaos of a raw data lake, making the data much more usable for analytics. Think of it as organizing the data lake for easier access and analysis.

What is an example of ELT?

ELT (Extract, Load, Transform) covers any pipeline that loads raw data first and transforms it afterwards. A simple example is loading raw website clickstream data into a data lake or cloud data warehouse as it arrives, then using SQL inside that platform to clean and aggregate it when an analyst needs a report. The raw data stays available, so new transformations can be added later without extracting it again.

What is the best ETL tool?

There’s no single “best” ETL tool; the ideal choice depends entirely on your specific needs and context. Factors like data volume, complexity, budget, and existing infrastructure heavily influence the decision. Consider your team’s expertise and the tool’s ease of integration with your systems. Ultimately, the best tool is the one that efficiently and reliably handles *your* data.

What is an example of an ETL?

ETL stands for Extract, Transform, Load – a process that cleans and prepares data for analysis. Imagine sifting through a messy attic (raw data), organizing items (transforming), and neatly placing them in storage (loading into a database). A simple example: pulling sales data from multiple spreadsheets, standardizing formats and units, then loading it into a central database for reporting.

What is the difference between ETL and API?

ETL (Extract, Transform, Load) is about moving and reshaping data *between* systems, like consolidating sales figures from various branches into a central database. APIs (Application Programming Interfaces) are about exchanging data *between* applications in real-time, like fetching product information from an online store into your shopping cart. ETL focuses on batch processing; APIs on immediate interactions. They serve different purposes, one for data warehousing, the other for application integration.

What are the types of data warehouses?

Data warehouses aren’t one-size-fits-all; they come in various forms depending on needs. You’ll find enterprise data warehouses (EDW) for large-scale, centralized data, and data marts, smaller focused subsets of an EDW. Operational data stores (ODS) bridge the gap between transactional systems and data warehouses, providing near real-time data for operational decisions. Cloud-based data warehouses offer scalability and cost efficiency.

Is Snowflake ETL or ELT?

Snowflake is a cloud data warehouse rather than an ETL or ELT tool, but it is most often used in ELT pipelines. Raw data is extracted and loaded first, then transformed within the data warehouse using its SQL capabilities. This avoids some of the performance bottlenecks and data movement associated with traditional ETL processes. For that reason, it is commonly described as a cloud-native platform for ELT.

Is a data lake ETL or ELT?

A Data Lake is primarily associated with ELT (Extract, Load, Transform). This means you load raw data first, and then transform it later only when it’s needed for analysis. However, a Data Lake can also serve as the initial source for traditional ETL (Extract, Transform, Load) processes that feed structured data warehouses.

What is the difference between EDI and ETL?

EDI is about exchanging standardized business documents (like invoices or purchase orders) electronically between different companies. ETL, on the other hand, is about taking data from various internal sources, cleaning and transforming it, then loading it into a central system for internal analysis and reporting. Essentially, EDI facilitates external business communication, while ETL prepares data for internal use.

Why is ETL not ELT?

ETL (Extract, Transform, Load) processes and transforms data before loading it into a data warehouse. ELT (Extract, Load, Transform) loads raw data directly into a powerful data system first. All transformations then happen within that system, leveraging its processing power. The key difference is the order and location of data transformation.

Is SQL an ETL tool?

No, SQL isn’t an ETL tool itself. SQL is a programming language used to manage and query data in databases. While you can use SQL commands for the Extract, Transform, and Load steps of an ETL process, dedicated ETL tools provide a complete environment to build, manage, and automate these complex data flows.

Is ELT better than ETL?

Neither ELT nor ETL is universally better; the best choice depends on your specific needs, data volume, and the tools you use. ELT (Extract, Load, Transform) is often favored with modern cloud data warehouses for its flexibility and ability to store raw data. ETL (Extract, Transform, Load) is a traditional approach, well-suited for structured data and on-premise systems. Consider your existing setup and future goals to decide which fits best.

What are the 5 steps of ETL?

The 5 steps of ETL are: 1. Extract: Gathering raw data from various source systems. 2. Clean: Fixing errors, removing duplicates, and standardizing data. 3. Transform: Reshaping and combining data to fit new requirements. 4. Validate: Checking the data’s quality and accuracy after transformation. 5. Load: Moving the final, processed data into its target destination, like a data warehouse.

Is Informatica ETL or ELT?

Informatica is primarily an ETL (Extract, Transform, Load) tool. It typically performs data transformations using its own processing engine before loading it into a target system. However, modern Informatica platforms are flexible and can also facilitate ELT (Extract, Load, Transform) processes. This is common when leveraging powerful cloud data warehouses to transform data within the target. So, while traditionally known for ETL, Informatica solutions support both approaches depending on your architectural needs.

Is Excel an ETL tool?

No, Excel is not a dedicated ETL (Extract, Transform, Load) tool. While you can import data (Extract) and use its features like formulas or pivot tables to clean and reshape it (Transform), it’s not designed for automatically loading data into other systems or databases. Excel is primarily for personal data analysis and reporting, not for robust, automated data integration.

Is dbt for ETL or ELT?

dbt is primarily used for ELT (Extract, Load, Transform). It focuses entirely on the Transform step, allowing you to clean, organize, and prepare your data after it has been loaded into a data warehouse. This means dbt handles the T in ELT, not the extraction or initial loading parts.

Is Informatica a data lake?

No, Informatica is not a data lake. Informatica is a leading platform for data integration, management, and governance. A data lake is a large storage system that holds all your raw, unprocessed data. Informatica provides the tools to move, process, clean, and analyze data within your data lake and other systems.

Which tool is used for ETL?

Many tools are used for ETL (Extract, Transform, Load). Popular options include Informatica PowerCenter, Talend, IBM DataStage, and Microsoft SQL Server Integration Services (SSIS). These tools extract data from different sources, transform it into a usable format, and load it into a destination, often for analysis.

What will replace ETL?

ETL isn't disappearing, but in many modern data stacks it is giving way to newer data integration methods. The main one is ELT (Extract, Load, Transform), where data is loaded directly into powerful cloud data warehouses first, then transformed. This leverages cloud computing for faster, more flexible analysis. Real-time data streaming is also replacing some batch processes where immediate insights are needed.

Is ETL the same as API?

No, they are not the same. ETL (Extract, Transform, Load) is a process for moving and preparing data from one system to another. An API (Application Programming Interface) is a way for different software systems to communicate and share information. While an API can be used to extract data in an ETL process, it is a communication method, not the entire data movement and transformation process itself.

Is Azure Data Factory an ETL tool?

Yes, Azure Data Factory (ADF) is primarily a cloud-based service used for data integration. It allows you to create pipelines to Extract, Transform, and Load (ETL) data from various sources into a desired destination. ADF is also very effective for ELT (Extract, Load, Transform) patterns, making it a flexible tool for your data movement needs.