When Zuellig Pharma migrated its complex SAP landscape and operational data across 40 integrated systems into Microsoft Azure, the process went far beyond simply “copying data.” It required staged extraction, quality checks, transformation rules, and governance controls to ensure continuity across 13 Asian markets. This example shows that successful enterprise migrations follow a lifecycle of planning, profiling, execution, validation, and monitoring, not a single technical step.

The numbers reflect just how important this lifecycle approach has become. Studies show that nearly 80% of data migration projects exceed their original timelines or budgets because organizations overlook essential stages such as data profiling, cleansing, and post-migration testing. Following a structured lifecycle helps teams catch quality issues early, reduce rework, and improve trust in migrated data, especially in hybrid and multi-cloud environments where complexity is high.

Continue reading this blog to understand the data migration lifecycle, dive into each phase from discovery to validation, and learn practical strategies to ensure your next migration is predictable, secure, and effective.

Key Takeaways

- Enterprise data migration is a structured lifecycle involving assessment, planning, execution, validation, and optimization, not a one-time technical task.

- Skipping early activities like data discovery, profiling, and cleansing is a key reason many migration projects run over budget or miss deadlines.

- Breaking the migration into clear phases reduces risk, improves data quality, and builds confidence in the target system.

- Ongoing testing, reconciliation, and business user involvement ensure the migrated data supports real-world workflows.

- Governance, automation, and security must be part of the process throughout the lifecycle for compliance and scalability.

- Kanerika’s FLIP platform helps automate the migration lifecycle, enabling faster and lower-risk enterprise migrations.

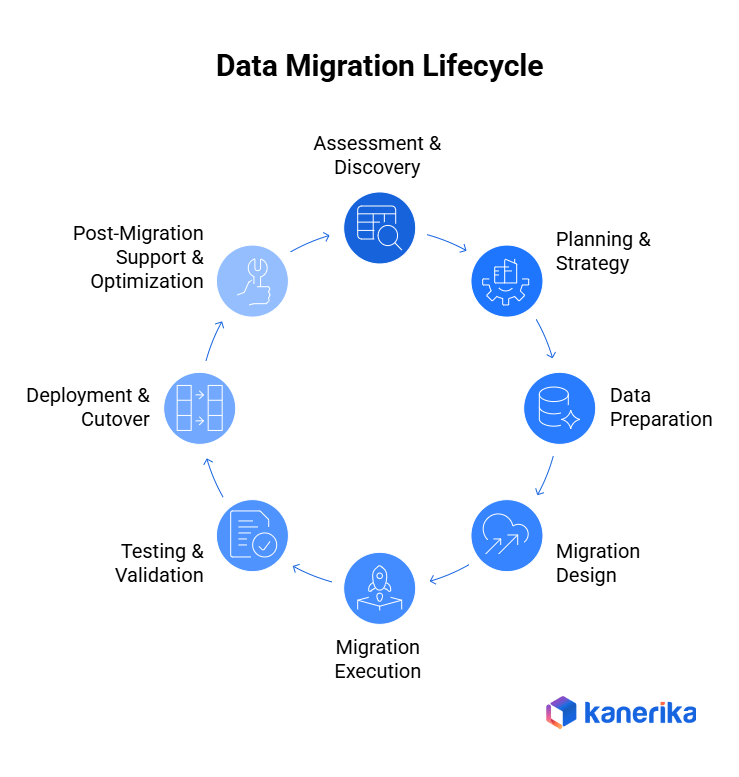

What Is the Data Migration Lifecycle?

The data migration lifecycle is an end-to-end process for assessing, preparing, moving, validating, and stabilizing data as it is transferred between systems. Rather than treating migration as a one-time technical activity, the lifecycle approach makes data transfer planned, controlled, and business-aligned. Breaking the migration into distinct stages minimizes risks such as data loss, quality issues, downtime, and expensive rework.

Moreover, every phase includes validation checkpoints, so problems are detected early rather than after the system goes live. The data migration lifecycle is a key factor in digital transformation initiatives such as cloud adoption, ERP modernization, and analytics enablement. Reliable data helps new platforms deliver value from the start and scale to meet future business requirements. In short, the lifecycle approach turns data migration from a risky one-off event into a predictable, repeatable process.

Phase 1: Assessment and Discovery

The assessment phase establishes the foundation for your migration project by thoroughly understanding your current data landscape before making strategic decisions. Organizations that invest in comprehensive discovery are 50% more likely to stay on budget and schedule.

1. Inventory Source Systems and Data Assets

- Catalog all data repositories including databases, ERP systems, CRM platforms, file shares, cloud applications, and third-party integrations

- Document structured, semi-structured, and unstructured data types

- Map information flow between systems to identify dependencies that could disrupt operations

- Create a comprehensive asset inventory highlighting datasets, schemas, application interfaces, and dependent processes

2. Evaluate Data Quality and Technical Constraints

- Assess data for completeness, accuracy, consistency, and duplication using automated profiling tools

- Poor data quality costs organizations an average of $12.9 million annually

- Identify legacy system limitations including restricted extraction capabilities or embedded business logic

- Document technical debt and identify ROT (Redundant, Obsolete, Trivial) data, averaging 30% of enterprise datasets
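
To make the profiling step concrete, here is a minimal sketch of a completeness and duplication check for a single column. It uses only the Python standard library; the function name `profile_column`, the sample `customers` records, and the rule that blank strings count as missing are illustrative assumptions, not part of any specific profiling tool.

```python
from collections import Counter

def profile_column(rows, column):
    """Return completeness and duplication statistics for one column."""
    values = [row.get(column) for row in rows]
    # Assumption: None and blank strings both count as missing.
    present = [v for v in values if v is not None and str(v).strip() != ""]
    counts = Counter(present)
    # Number of rows that repeat a value already seen elsewhere.
    duplicated = sum(c - 1 for c in counts.values() if c > 1)
    total = len(values)
    return {
        "total": total,
        "missing": total - len(present),
        "completeness": len(present) / total if total else 0.0,
        "distinct": len(counts),
        "duplicate_values": duplicated,
    }

# Hypothetical sample records
customers = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 3, "email": "a@example.com"},
    {"id": 4, "email": None},
]
email_profile = profile_column(customers, "email")
print(email_profile)
```

Running a check like this across every candidate column gives a quick, comparable view of where cleansing effort is likely to be needed before any migration design begins.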

3. Classify Sensitive Data and Define Scope

- Identify regulated and sensitive information to establish appropriate security controls

- Align with stakeholders to clarify business objectives and define migration scope

- Determine which data should be migrated, archived, or retired

- Establish compliance requirements related to privacy regulations and data governance

Phase 2: Planning and Strategy

Strategic planning translates discovery insights into an executable roadmap, defining how migration will proceed while reducing business disruption and managing risk.

1. Choose the Right Migration Approach

- Select between big bang, phased, or hybrid strategies based on risk tolerance and business requirements

- Big bang migrations execute in single cutover windows, offering speed but higher risk

- Phased migrations move data incrementally, allowing validation between stages

- Organizations like Amazon and Airbnb use phased approaches to maintain stability during complex transitions

2. Establish Timelines, Budgets, and Governance

- Develop realistic schedules aligned with business calendars to avoid peak periods

- Account for buffer time between phases to address unexpected issues

- Define clear ownership across IT, business, and compliance teams

- Document risk mitigation strategies for downtime, data loss, or performance issues

3. Define Success Metrics and Validation Criteria

- Establish KPIs including data accuracy thresholds, acceptable downtime, and performance benchmarks

- Set quantifiable targets for migration speed, error rates, and system performance

- Define reconciliation requirements and completeness criteria

- Document acceptance criteria that guide testing and final sign-off

Phase 3: Data Preparation

Data preparation determines migration quality by addressing issues before they reach the target system, focusing on cleansing and standardizing data for the new environment.

1. Execute Data Cleansing and Standardization

- Address inaccurate values, missing fields, inconsistent formats, and obsolete records

- Approximately 30% of enterprise data is redundant, obsolete, or trivial and should be eliminated

- Remove duplicates using business rules and standardize reference values and formats

- Correct invalid entries and flag missing values for resolution
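
The cleansing steps above can be sketched in a few lines. This example assumes that email address identifies a customer (the deduplication rule) and that dates arrive in one of three formats. Both are hypothetical choices that a real project would replace with its own business rules, and ambiguous date formats would need source-specific handling.

```python
from datetime import datetime

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y")  # assumed source formats

def standardize_date(raw):
    """Return an ISO 8601 date string, or None if the value cannot be parsed."""
    if raw is None:
        return None
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None

def cleanse(records):
    """Standardize fields, drop duplicates by email, and collect records needing review."""
    seen, clean, flagged = set(), [], []
    for rec in records:
        email = (rec.get("email") or "").strip().lower()
        signup = standardize_date(rec.get("signup_date"))
        if not email or signup is None:
            flagged.append(rec)  # missing or invalid: route to a person for resolution
            continue
        if email in seen:  # assumed business rule: email identifies a customer
            continue
        seen.add(email)
        clean.append({**rec, "email": email, "signup_date": signup})
    return clean, flagged

# Hypothetical input
raw = [
    {"email": "Ana@Example.com ", "signup_date": "2023-01-15"},
    {"email": "ana@example.com", "signup_date": "15/01/2023"},
    {"email": "bo@example.com", "signup_date": "not a date"},
    {"email": "", "signup_date": "2023-02-01"},
]
clean, flagged = cleanse(raw)
```

Note that invalid records are flagged rather than silently dropped, which keeps an audit trail of what was excluded and why.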

2. Validate Data Readiness

- Test prepared data against quality thresholds before initiating migration workflows

- Early validation catches issues when they are easier and less costly to resolve

- Implement automated profiling tools to detect anomalies and inconsistencies

- Pre-migration validation reduces iteration cycles during execution

3. Define Transformation Logic and Metadata

- Document transformation rules converting source formats to target requirements

- Include field mappings, calculated values, data type conversions, and business logic

- Standardize metadata, schemas, and master data elements for consistency

- Apply security controls including data masking, encryption, and access restrictions
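
One way to keep transformation rules documented and executable at the same time is to express them as data. The sketch below is illustrative only: the source field names (`CUST_NO`, `BAL_AMT`, and so on), the target names, and the `mask_email` helper are all hypothetical. The unsalted hash used for masking is for demonstration; a production setup would more likely use a keyed hash or a tokenization service.

```python
import hashlib
from decimal import Decimal

def mask_email(value):
    """Replace the local part with a short SHA-256 digest, keeping the domain."""
    local, _, domain = value.partition("@")
    digest = hashlib.sha256(local.encode("utf-8")).hexdigest()[:10]
    return f"{digest}@{domain}"

# source field -> (target field, conversion). All names are hypothetical.
FIELD_MAP = {
    "CUST_NO": ("customer_id", int),
    "CUST_NM": ("full_name", lambda s: s.strip().title()),
    "EMAIL": ("email_masked", mask_email),
    "BAL_AMT": ("balance", Decimal),
}

def transform(source_row):
    """Apply FIELD_MAP to one source row and derive a calculated field."""
    target = {}
    for src_field, (tgt_field, convert) in FIELD_MAP.items():
        target[tgt_field] = convert(source_row[src_field])
    target["is_overdrawn"] = target["balance"] < 0  # calculated value
    return target

row = transform({
    "CUST_NO": "0042",
    "CUST_NM": "  jane DOE ",
    "EMAIL": "jane@example.com",
    "BAL_AMT": "-12.50",
})
```

Because the mapping lives in one structure, it can double as the documented transformation rule set that reviewers and auditors read.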

Phase 4: Migration Design

Migration design creates the technical blueprint for moving data in a controlled, repeatable manner, directly impacting performance, reliability, and automation potential.

1. Design ETL or ELT Workflows

- Choose between Extract-Transform-Load (ETL) or Extract-Load-Transform (ELT) based on platform capabilities

- Modern cloud environments favor ELT, using platforms like Snowflake, BigQuery, or Azure Synapse

- ETL remains relevant for complex cleansing before loading or with legacy systems

- Define extraction, transformation, and loading processes, including error handling and retry logic

- Implement checkpoint management for recovery without restarting entire processes
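
Retry logic and checkpointing are easier to reason about with a small example. The following is a simplified sketch, not a production loader: `load_with_retry`, `run_batches`, the JSON checkpoint file, and the deliberately flaky loader are all assumptions made for illustration.

```python
import json
import tempfile
import time
from pathlib import Path

def load_with_retry(load_fn, batch, attempts=3, base_delay=0.5):
    """Call load_fn(batch), retrying with exponential backoff on failure."""
    for attempt in range(1, attempts + 1):
        try:
            return load_fn(batch)
        except Exception:
            if attempt == attempts:
                raise  # out of retries: surface the error
            time.sleep(base_delay * 2 ** (attempt - 1))

def run_batches(batches, load_fn, checkpoint_path, **retry_kwargs):
    """Load batches in order, persisting the index of the last completed batch."""
    path = Path(checkpoint_path)
    done = json.loads(path.read_text())["last_done"] if path.exists() else -1
    for index, batch in enumerate(batches):
        if index <= done:
            continue  # already loaded in an earlier run
        load_with_retry(load_fn, batch, **retry_kwargs)
        path.write_text(json.dumps({"last_done": index}))

# Demonstration with a hypothetical loader that fails once.
loaded, failures = [], {"remaining": 1}

def flaky_load(batch):
    if batch == ["c"] and failures["remaining"]:
        failures["remaining"] -= 1
        raise ConnectionError("transient failure")
    loaded.extend(batch)

with tempfile.TemporaryDirectory() as tmp:
    checkpoint = Path(tmp) / "checkpoint.json"
    run_batches([["a", "b"], ["c"]], flaky_load, checkpoint, base_delay=0.01)
    last_done = json.loads(checkpoint.read_text())["last_done"]
    # A second run skips everything already recorded in the checkpoint.
    run_batches([["a", "b"], ["c"]], flaky_load, checkpoint, base_delay=0.01)
```

One design caveat: if a run crashes after a batch loads but before the checkpoint is written, that batch will be loaded again on restart, so the load step itself should be idempotent (for example, an upsert keyed on a primary key).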

2. Create Detailed Source-to-Target Mappings

- Document field-level mappings specifying how source elements translate to target schema

- Plan load sequencing to preserve referential integrity between datasets

- Ensure parent records load before child records to maintain relationships

- Design validation checkpoints throughout workflows to catch errors early
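
Load sequencing that respects parent-child relationships is a topological ordering problem. As a minimal sketch, Python's standard-library `graphlib` (3.9+) can derive a safe order from a dependency map. The table names and relationships below are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# table -> set of parent tables it references (hypothetical schema)
DEPENDENCIES = {
    "customers": set(),
    "products": set(),
    "orders": {"customers"},
    "order_items": {"orders", "products"},
}

def load_order(dependencies):
    """Return a table order in which every parent precedes its children."""
    return list(TopologicalSorter(dependencies).static_order())

order = load_order(DEPENDENCIES)
print(order)
```

If the dependency map contains a cycle, `static_order()` raises `graphlib.CycleError`, which is a useful early signal that some foreign keys will need to be loaded in a second pass.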

3. Implement Automation and Orchestration

- Deploy orchestration tools to manage scheduling, dependencies, monitoring, and retries

- Build automated logging, alerts, and performance tracking for real-time visibility

- Configure dependency management so that tasks execute in the correct order

- Implement automated error handling and notification systems for rapid detection

Phase 5: Migration Execution

Execution transforms planning into action through carefully managed data movement, requiring precision, monitoring, and rapid issue resolution.

1. Conduct Test Migrations in Controlled Environments

- Execute test runs to validate mappings, transformation logic, and workflow design

- Uncover format mismatches, performance bottlenecks, and unexpected dependencies

- Verify error handling, logging mechanisms, and rollback procedures function correctly

- Document test results and refine workflows based on findings

2. Execute Pilot Migrations with Real Data

- Move data subsets under near-production conditions to expose real-world issues

- Validate the approach with actual scenarios before full-scale execution

- Use feedback to refine workflows, adjust sequences, and optimize performance

- Involve business users to validate migrated data meets operational requirements

3. Perform Full-Scale Data Loads

- Execute production migrations according to the approved strategy, whether bulk or incremental

- Monitor system performance, error logs, and data throughput continuously

- Coordinate downtime windows with stakeholders and maintain clear communication

- Implement escalation procedures for rapid issue resolution

Phase 6: Testing and Validation

Validation ensures migrated data is accurate, complete, and functional. Research shows 48% of migration failures stem from inadequate validation.

1. Verify Data Integrity and Completeness

- Compare record counts, validate key fields, and confirm no data loss or duplication

- Use automated reconciliation tools to systematically compare source and target records

- Implement hash totals, checksums, and statistical sampling for efficient verification

- Validate that referential integrity and relationships between datasets are preserved
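
The count and checksum comparisons described above can be sketched as follows. The function names, the `id` key, and the sample rows are illustrative assumptions. The sketch also assumes source and target values have already been normalized to the same types, since `10` and `10.0` would otherwise hash differently.

```python
import hashlib

def row_fingerprint(row, columns):
    """Hash the chosen columns of one row in a fixed order (None becomes an empty string)."""
    payload = "\x1f".join("" if row[c] is None else str(row[c]) for c in columns)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def reconcile(source_rows, target_rows, key, columns):
    """Compare source and target by key; report missing, unexpected, and changed rows."""
    src = {r[key]: row_fingerprint(r, columns) for r in source_rows}
    tgt = {r[key]: row_fingerprint(r, columns) for r in target_rows}
    return {
        "source_count": len(source_rows),
        "target_count": len(target_rows),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }

# Hypothetical source and target extracts
source = [
    {"id": 1, "name": "Ana", "total": 10},
    {"id": 2, "name": "Bo", "total": 20},
    {"id": 3, "name": "Cy", "total": 30},
]
target = [
    {"id": 1, "name": "Ana", "total": 10},
    {"id": 2, "name": "Bo", "total": 25},
    {"id": 4, "name": "Di", "total": 40},
]
report = reconcile(source, target, key="id", columns=["name", "total"])
```

In this example the record counts match (three rows each), yet the report still surfaces one missing row, one unexpected row, and one changed value. That is why count checks alone are not enough.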

2. Execute Functional and Application Testing

- Test applications to confirm workflows, reports, calculations, and integrations function correctly

- Involve business users to validate real-world scenarios and use cases

- Conduct parallel-run testing, operating the old and new systems side by side and comparing their outputs

- Verify business logic produces expected outcomes across end-to-end processes

3. Conduct Performance Testing

- Validate that the target system handles expected workloads under realistic conditions

- Measure query response times, transaction speed, report generation, and concurrent loads

- Compare performance against benchmarks established during assessment

- Test system scalability under peak load conditions for future growth

4. Perform Reconciliation and Compliance Validation

- Execute final reconciliation comparing source and target systems for alignment

- Generate audit trails, validation reports, and compliance documentation

- Create comprehensive records providing traceability and governance support

- Validate security controls and data protection measures function correctly

Phase 7: Deployment and Cutover

Cutover marks the transition from legacy systems to the new environment, demanding precision, coordination, and clear communication.

1. Execute Final Verification and Go/No-Go Decision

- Conduct final validation checks against predetermined success criteria

- Stakeholders review validation results, performance metrics, and risk assessments

- Ensure all acceptance criteria are met before production cutover

- Document outstanding issues and assess their impact on readiness
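
A go/no-go review is simpler when the acceptance criteria are written down as explicit thresholds. The sketch below shows one possible shape. The metric names and limits are illustrative values, not recommendations, and a real decision would also weigh the documented outstanding issues.

```python
# Acceptance criteria agreed during planning (values are illustrative).
CRITERIA = {
    "row_count_match_pct": ("min", 100.0),
    "field_accuracy_pct": ("min", 99.5),
    "error_rate_pct": ("max", 0.1),
    "p95_query_seconds": ("max", 2.0),
}

def evaluate(measured, criteria=CRITERIA):
    """Return (go, failures), where failures lists each unmet or unmeasured criterion."""
    failures = []
    for name, (kind, limit) in criteria.items():
        value = measured.get(name)
        if value is None:
            failures.append(f"{name}: not measured")
        elif kind == "min" and value < limit:
            failures.append(f"{name}: {value} is below {limit}")
        elif kind == "max" and value > limit:
            failures.append(f"{name}: {value} is above {limit}")
    return (not failures, failures)

# Hypothetical measurements from the final validation run
go, failures = evaluate({
    "row_count_match_pct": 100.0,
    "field_accuracy_pct": 99.2,
    "error_rate_pct": 0.05,
    "p95_query_seconds": 1.4,
})
```

Treating an unmeasured metric as a failure is a deliberate choice here: a criterion nobody checked should block cutover just as a failed one does.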

2. Transition Users and Business Processes

- Migrate users according to defined communication and training plans

- Ensure support teams are prepared to address issues rapidly during transition

- Provide clear guidance on new workflows, interface changes, and support channels

- Monitor user adoption and provide additional support where needed

3. Prepare and Test Rollback Procedures

- Maintain tested rollback plans as critical safeguards for serious issues

- Test rollback processes in advance to ensure reliability under pressure

- Define clear triggers and decision authority for rollback activation

- Establish specific rollback procedures for each phase enabling targeted reversals

4. Communicate Effectively with Stakeholders

- Keep affected parties informed about timelines, changes, impacts, and support availability

- Provide regular status updates and maintain transparent channels for questions

- Effective communication reduces anxiety and builds user confidence during transition

- Document lessons learned and share with stakeholders for future improvements

Phase 8: Post-Migration Support and Optimization

Migration success extends beyond go-live, ensuring long-term stability, addressing emerging issues, and optimizing performance based on usage patterns.

1. Monitor System Health and Data Quality

- Implement continuous monitoring to detect quality degradation or performance issues early

- Establish proactive alerting for anomalies before they impact users

- Track key performance indicators to ensure the system meets established benchmarks

- Monitor data quality metrics to catch issues before they affect operations
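
As one simple illustration of proactive alerting, the sketch below flags a daily metric (for example, rows loaded) that deviates sharply from its recent history. The three-standard-deviation threshold and the sample numbers are assumptions. Real monitoring would typically account for seasonality and use a longer history.

```python
from statistics import mean, stdev

def is_anomalous(history, latest, threshold=3.0):
    """Flag latest if it lies more than `threshold` standard deviations from the mean of history."""
    if len(history) < 2:
        return False  # not enough data to judge
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold

# Hypothetical daily row counts for a migrated table
daily_rows = [1000, 1020, 990, 1010, 1005]
sudden_drop = is_anomalous(daily_rows, 400)
normal_day = is_anomalous(daily_rows, 1012)
```

A check like this can run after each scheduled load and feed whatever alerting channel the support team already uses.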

2. Resolve Post-Migration Issues Systematically

- Prioritize and address defects identified after deployment methodically

- Track issues through resolution ensuring nothing falls through the cracks

- Categorize issues by severity and impact to focus resources effectively

- Maintain transparent communication with stakeholders about issue status

3. Conduct User Training and Knowledge Transfer

- Ensure users understand new capabilities and workflows through comprehensive training

- Transfer operational ownership to support teams with detailed documentation and runbooks

- Provide escalation procedures and contact information for ongoing support

- Conduct hands-on training sessions and create reference materials for future use

4. Optimize and Document for Future Success

- Fine-tune system performance based on real usage patterns and feedback

- Update documentation to reflect the final environment state accurately

- Capture lessons learned to inform future migrations and continuous improvement

- Prepare migration framework and tools for reuse in future projects

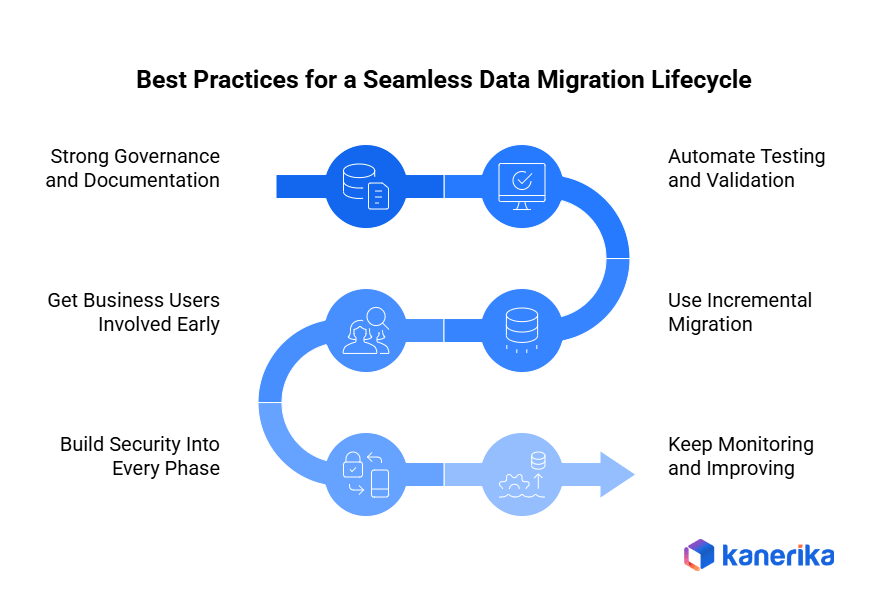

Best Practices for a Seamless Data Migration Lifecycle

Even carefully designed migrations can fall apart without proper execution. Following proven practices helps you reduce risk, maintain quality, and keep business operations running.

1. Strong Governance and Documentation

You need good governance for clarity and accountability. When data ownership is clear, decisions about scope, quality, and approvals happen fast. Getting IT teams and business stakeholders aligned cuts down on miscommunication and delays.

Documentation matters just as much. Capture the critical stuff like data mappings, transformation rules, validation criteria, and operational procedures. When docs are well-maintained, future audits and troubleshooting get much easier. Plus, you can repeat the process for other migrations without starting from scratch.

2. Automate Testing and Validation

Manual testing becomes a problem as your data grows. It’s unreliable and takes forever. Automated testing keeps things consistent across multiple runs. Human error goes down, too.

With automation, teams can run the same integrity checks every time. Source and target reconciliation happens faster. Performance validation becomes routine. The big win? You catch issues early, when they’re easier to fix, not during cutover, when everything’s on the line.

3. Use Incremental Migration

Moving everything at once is risky. A phased approach is usually smarter. You migrate data in stages, which lets teams validate each chunk, get feedback, and fix problems before moving forward. The whole system doesn’t blow up if something goes wrong.

Downtime gets minimized. Business disruption decreases. You maintain better control when things get complex. For enterprise systems that need to stay up and running, this approach makes way more sense.

4. Get Business Users Involved Early

Business users are the ones who know if migrated data actually works. Get them involved during discovery, not after everything’s done. Keep them engaged through validation and post-migration support.

When they join early, you can confirm business rules are correct. They spot data problems that technical teams miss. They help define what success looks like in practical terms. Their ongoing involvement builds confidence in the new system. Adoption rates after launch improve significantly.

5. Build Security Into Every Phase

Don’t bolt security on at the end. Build it into each phase from the start. Find sensitive data early. Protect it with encryption, masking, and proper access controls.

This keeps you compliant with whatever regulations apply to your business. It also lowers the risk of data exposure during migration, so customers, partners, and regulators can keep trusting how you handle information.

6. Keep Monitoring and Improving

Deployment isn’t the finish line. Keep monitoring after launch. Data quality issues pop up. Performance gaps appear. Unexpected behavior happens. Catch these things quickly.

Document what you learn. Refine your processes based on real experience. Future migrations get smoother. What starts as a risky event becomes a standard capability your organization can handle confidently.

Why Data Migration with Kanerika Is Faster and More Accurate

Kanerika offers end-to-end data migration services that focus on speed, accuracy, and low disruption. We use automated migration connectors built for complex shifts such as Tableau to Power BI, SSIS to Microsoft Fabric, Informatica to Talend, and UiPath to Power Automate. These tools cut migration time, reduce costs, and keep data accurate throughout the transition. Our platform, FLIP, supports this work as an AI-powered low-code system that automates up to 80% of the migration effort. It maps, converts, and validates data assets while preserving logic and structure so teams can shift to cloud-first, AI-ready platforms without downtime.

We design our migration accelerators to support real enterprise needs like BI modernization, ETL upgrades, and cloud adoption. These accelerators keep dashboards, visuals, transformation logic, and metadata intact so teams do not need to rebuild assets from scratch. FLIP supports migrations across Microsoft Fabric, Power BI, Talend, Alteryx, Databricks, and other platforms, which helps companies unify their data landscape with minimal disruption. We also keep migrations secure: our security model carries forward existing access controls and permissions so data stays protected during transitions and when connected to AI agents. The result is fast migration with minimal risk and full operational continuity.

FAQs

1. What is the data migration lifecycle?

The data migration lifecycle is a structured, end-to-end process used to move data from one system to another safely and accurately. It includes stages such as discovery, planning, data preparation, migration execution, validation, and post-migration optimization. Following a lifecycle helps organizations reduce risk, maintain data quality, and ensure the new system works as expected before and after go-live.

2. Why is following a data migration lifecycle important?

Following a defined data migration lifecycle helps prevent common issues like data loss, mismatched records, downtime, and reporting errors. It ensures that data is assessed, cleaned, and validated before being used in the target system. Organizations that follow a lifecycle approach typically experience fewer failures, better data reliability, and smoother adoption by business users.

3. What are the key stages in a data migration lifecycle?

The main stages include data discovery and assessment, migration planning, data cleansing and transformation, migration execution, testing and validation, and post-migration monitoring. Each stage plays a critical role in ensuring data accuracy and system stability. Skipping or rushing any phase increases the chances of errors and rework later.

4. How long does a typical data migration lifecycle take?

The duration of a data migration lifecycle depends on data volume, system complexity, data quality, and business requirements. Small migrations may take a few weeks, while enterprise-scale migrations can take several months. Proper planning, automation, and early testing can significantly reduce timelines without compromising data quality.

5. What are the most common challenges in the data migration lifecycle?

Common challenges include poor data quality, incomplete source system knowledge, weak data mapping, and limited testing. Lack of stakeholder involvement and unrealistic timelines also create issues. These challenges can be addressed by conducting early assessments, documenting data rules clearly, testing continuously, and involving business users throughout the lifecycle.