What happens when your company’s data is growing faster than you can manage it, but your reports are still slow, inconsistent, or unreliable? This is a challenge many businesses face today. Netflix, for example, manages data at petabyte scale and relies on efficient data architecture to analyze customer preferences, optimize recommendations, and improve content decisions. The choice between a Data Lake and a Data Lakehouse can mean the difference between insightful decisions and wasted opportunities.

A Data Lake offers flexibility for storing raw data, but without structure, it can become a mess. On the other hand, a Data Lakehouse blends the best of data lakes and warehouses, ensuring better query performance, governance, and usability for business intelligence. But which one truly delivers the best results for decision-making? Let’s break it down and see which architecture is the smarter choice for your business needs.


What is a Data Lake?

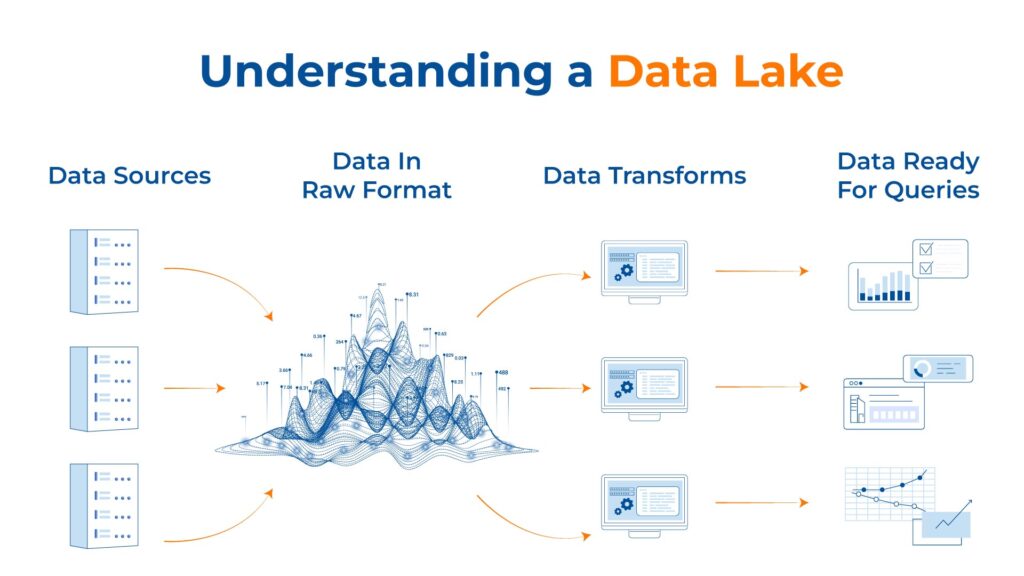

A data lake is a centralized storage repository that holds a vast amount of raw data in its native format until it is needed. Unlike traditional databases, a data lake can store structured, semi-structured, and unstructured data, such as text, images, and social media posts.

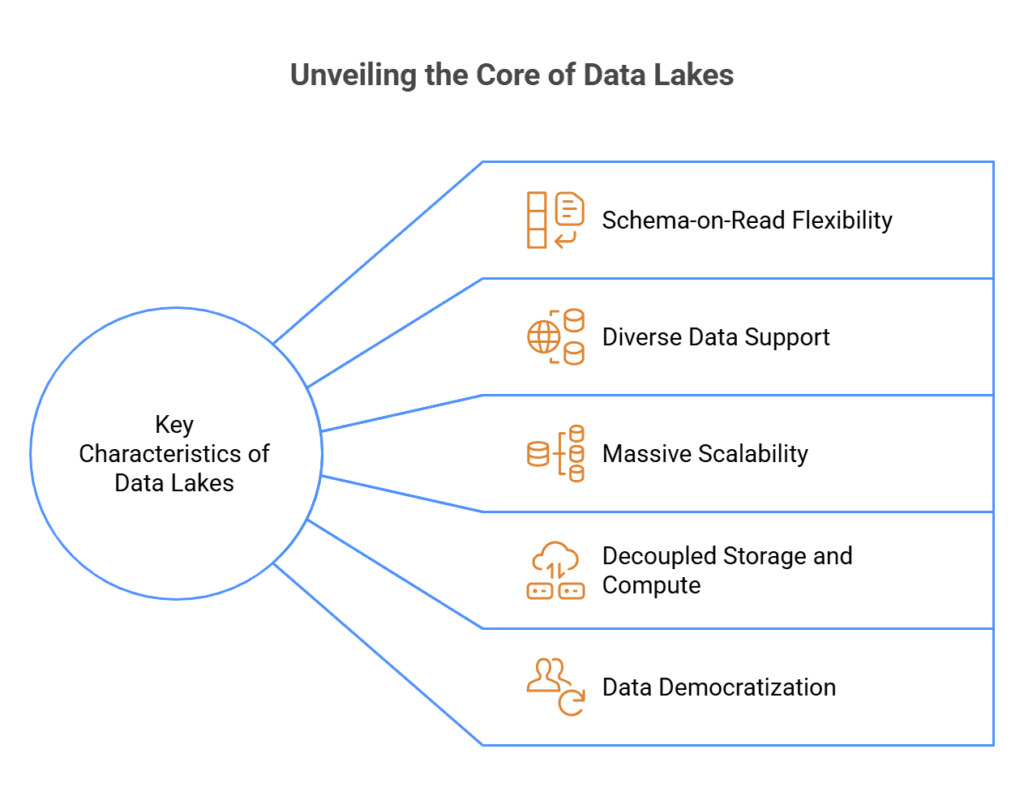

Key Characteristics of Data Lakes

1. Schema-on-Read Flexibility

Data lakes store raw data in its native format without requiring predefined schemas. This “load first, structure later” approach allows organizations to ingest data quickly without upfront modeling, giving analysts the freedom to interpret data according to their specific analytical needs.
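To make “load first, structure later” concrete, here is a minimal Python sketch. It assumes raw events are stored as JSON lines; the sample events, the `SALES_SCHEMA` mapping, and the `read_with_schema` helper are hypothetical names invented for this illustration, not part of any particular data lake product.

```python
import json

# Raw events land in the lake exactly as produced; nothing is validated on write.
RAW_EVENTS = [
    '{"user": "a1", "amount": "19.99", "ts": "2024-01-05"}',
    '{"user": "b2", "amount": 5, "channel": "mobile"}',
    '{"user": "c3"}',
]

# Hypothetical schema chosen by one analyst at read time: field -> (type, default).
SALES_SCHEMA = {"user": (str, ""), "amount": (float, 0.0)}


def read_with_schema(lines, schema):
    """Parse raw JSON lines and project them onto the schema at read time."""
    rows = []
    for line in lines:
        record = json.loads(line)
        row = {}
        for field, (cast, default) in schema.items():
            # Missing fields fall back to the default; extra fields are ignored.
            row[field] = cast(record.get(field, default))
        rows.append(row)
    return rows


rows = read_with_schema(RAW_EVENTS, SALES_SCHEMA)
total = sum(row["amount"] for row in rows)
```

A different analyst could read the same raw lines with a different schema, which is both the appeal and the risk: nothing stops two teams from interpreting the same field in incompatible ways.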

2. Diverse Data Support

Data lakes can store structured data (like databases), semi-structured data (JSON, XML), and unstructured data (text documents, images, videos) in a single repository. This unified storage eliminates silos and enables comprehensive analytics across all organizational data assets.

3. Massive Scalability

Data lakes are built on distributed storage systems that can scale horizontally by adding more nodes. This architecture enables organizations to store petabytes of data cost-effectively without performance degradation, supporting the exponential growth of business data.

4. Decoupled Storage and Compute

Data lakes separate storage from processing resources, allowing each to scale independently. This architecture enables organizations to allocate computational power based on specific workload requirements rather than storage capacity, optimizing performance and cost.

5. Data Democratization

Data lakes provide a central repository accessible to various stakeholders across an organization. This democratized access enables data scientists, analysts, and business users to explore and extract insights from the same data source using their preferred tools.

Core Components of a Data Lake Architecture

1. Ingestion Layer

The data ingestion layer handles the collection and import of raw data from various sources into the data lake. It includes batch processing for historical data and stream processing for real-time data, ensuring all organizational data flows into the central repository.

2. Storage Layer

The foundation of a data lake, the storage layer holds raw data in its native format across distributed file systems. Typically object-based storage, it manages the physical storage of data files, organizing them in ways that balance accessibility, performance, and cost.

3. Processing Layer

This component transforms raw data into formats suitable for analysis. It includes various computational frameworks for batch processing, stream processing, and interactive queries that enable data preparation, cleansing, and transformation to support downstream analytics.

4. Metadata Management

The metadata layer maintains information about the data stored in the lake, including source, format, creation date, and access permissions. This “data about data” enables effective cataloging, search, and governance, preventing the data lake from becoming an unmanageable swamp.

5. Security and Governance

This component manages access controls, encryption, and compliance with data regulations. It ensures that sensitive data is protected while appropriate users can access the information they need, maintaining both security and usability across the data environment.

Popular Data Lake Technologies

1. Amazon S3 (Simple Storage Service)

Amazon S3 provides scalable object storage optimized for data lakes. It offers virtually unlimited capacity, 99.999999999% durability, integrated security features, and native integration with AWS analytics services like Athena and EMR, making it the foundation for many enterprise data lakes.

2. Azure Data Lake Storage (ADLS)

Microsoft’s hierarchical storage solution combines the scalability of blob storage with HDFS-compatible access. ADLS features enterprise-grade security with Azure Active Directory (now Microsoft Entra ID) integration, transaction support, and optimized performance for both big data and AI workloads.

3. Google Cloud Storage

Google’s object storage service supports data lakes with multi-regional availability, automatic encryption, and seamless integration with BigQuery and Dataproc. Its global edge network delivers low-latency access while its lifecycle management policies optimize storage costs across data tiers.

4. Apache Hadoop HDFS

The original data lake technology, HDFS provides distributed storage across commodity hardware. Its data replication ensures fault tolerance, while data locality principles minimize network traffic by processing data where it resides, optimizing performance for large-scale analytics.

5. MinIO

This high-performance, Kubernetes-native object storage system enables on-premises data lakes with S3-compatible APIs. MinIO’s lightweight architecture delivers cloud-like scalability in private environments, with features like erasure coding, encryption, and identity management for enterprise deployments.

Advantages of Data Lake Implementation

1. Cost-Effective Storage

Data lakes leverage commodity hardware and cheap storage tiers for rarely accessed data. This approach reduces storage costs by up to 70% compared to traditional data warehouses, especially for organizations managing petabytes of information.

2. Future-Proofed Data Collection

By storing raw data without predetermined schemas, data lakes preserve information that might seem irrelevant today but could become valuable tomorrow. This approach prevents data loss and enables retroactive analysis as business questions evolve.

3. Analytical Versatility

Data lakes support diverse analytical workloads from descriptive statistics to complex machine learning. This versatility enables both traditional business intelligence and advanced AI applications from a single data repository, maximizing the value of collected information.


Limitations and Challenges of Data Lakes

1. Data Quality Issues

Without enforced schemas or validation at ingestion, data lakes often accumulate inconsistent, duplicate, or erroneous data. This “garbage in, garbage out” problem can undermine analytics efforts and erode trust in derived insights.

2. Performance Limitations

Querying raw, unoptimized data typically results in slower performance than structured data warehouses. Complex transformations during query time create latency issues that can frustrate business users expecting quick responses to analytical questions.

3. Governance Complexities

The same flexibility that makes data lakes powerful also creates governance challenges. Without robust metadata management and access controls, organizations struggle with data lineage, regulatory compliance, and preventing unauthorized access to sensitive information.

What is a Data Lakehouse?

A data lakehouse is an innovative data management solution that brings together the flexible storage capabilities of data lakes with the schema management and data management features typically associated with data warehouses. It is designed to store a wide variety of data formats while maintaining data integrity and offering capabilities similar to traditional data warehouses for analytics purposes.

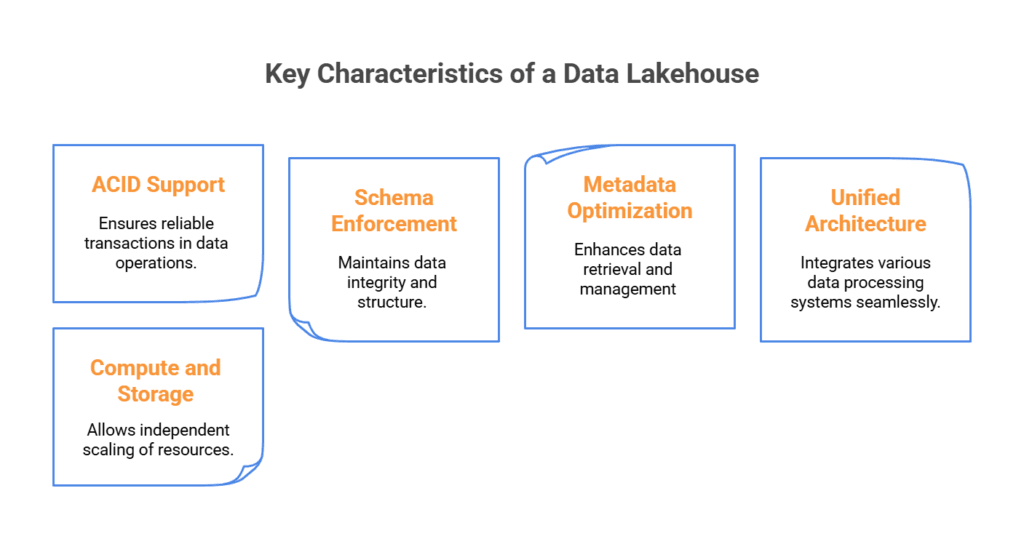

Key Characteristics of a Data Lakehouse

1. ACID Transaction Support

Data lakehouses implement ACID (Atomicity, Consistency, Isolation, Durability) properties traditionally found in databases. This ensures data reliability and consistency even with concurrent operations, enabling multiple users to read and write without data corruption or conflicts.
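One common way to get this behavior on plain file storage is an append-only commit log in which each version number can be claimed by only one writer. The Python sketch below illustrates only that mutual-exclusion idea using the local filesystem; the `commit` and `latest_version` helpers and the file naming are assumptions made for this example, and real table formats depend on stronger atomicity guarantees from the storage system than this toy provides.

```python
import json
import os
import tempfile


def commit(log_dir, version, actions):
    """Try to publish `actions` as commit number `version`.

    Opening with mode "x" fails if the file already exists, so only one
    writer can claim a given version number.
    """
    path = os.path.join(log_dir, f"{version:08d}.json")
    try:
        with open(path, "x") as handle:
            json.dump(actions, handle)
        return True
    except FileExistsError:
        return False


def latest_version(log_dir):
    """Return the highest committed version, or -1 for an empty table."""
    versions = [int(name.split(".")[0]) for name in os.listdir(log_dir)]
    return max(versions, default=-1)


log_dir = tempfile.mkdtemp()

# Two writers both saw an empty table and both try to write version 0.
writer_a_ok = commit(log_dir, 0, [{"add": "part-a.parquet"}])
writer_b_ok = commit(log_dir, 0, [{"add": "part-b.parquet"}])

# The losing writer re-reads the log and retries on the next version.
if not writer_b_ok:
    writer_b_retry_ok = commit(
        log_dir, latest_version(log_dir) + 1, [{"add": "part-b.parquet"}]
    )
```

Readers that only trust files listed in committed log entries never see a half-finished write, which is the practical meaning of atomicity here.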

2. Schema Enforcement and Evolution

Lakehouses implement schema validation at write time while allowing schema evolution. This hybrid approach ensures data consistency without the rigidity of traditional warehouses, enabling both data quality control and the flexibility to adapt to changing business requirements.
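The following Python sketch shows the two halves of that idea side by side: records are validated when they are written, and the schema can still grow by adding columns. The `Table` class and `SchemaError` exception are hypothetical and exist only for this illustration; real lakehouse formats support richer evolution rules than additive columns.

```python
class SchemaError(ValueError):
    """Raised when a record does not match the table schema."""


class Table:
    def __init__(self, schema):
        self.schema = dict(schema)  # column name -> Python type
        self.rows = []

    def append(self, record):
        """Validate at write time; reject unknown columns and wrong types."""
        unknown = set(record) - set(self.schema)
        if unknown:
            raise SchemaError(f"unknown columns: {sorted(unknown)}")
        for column, expected in self.schema.items():
            value = record.get(column)
            if value is not None and not isinstance(value, expected):
                raise SchemaError(f"{column} expects {expected.__name__}")
        self.rows.append({column: record.get(column) for column in self.schema})

    def add_column(self, name, column_type):
        """Additive evolution: existing rows read the new column as None."""
        self.schema[name] = column_type
        for row in self.rows:
            row[name] = None


orders = Table({"order_id": int, "amount": float})
orders.append({"order_id": 1, "amount": 9.5})

try:
    orders.append({"order_id": "two", "amount": 3.0})  # wrong type for order_id
    rejected = False
except SchemaError:
    rejected = True

orders.add_column("currency", str)
orders.append({"order_id": 2, "amount": 3.0, "currency": "EUR"})
```

The bad record never reaches storage, while the later `currency` column is added without rewriting or invalidating the rows that came before it.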

3. Metadata Layer Optimization

Lakehouses feature enhanced metadata management that indexes and organizes data for efficient querying. This intelligent layer tracks statistics about data distribution, enabling query optimizers to generate efficient execution plans that significantly accelerate analytical workloads.
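A simple form of this optimization is data skipping: if the metadata layer records the minimum and maximum value of a column for each file, a query can ignore files whose range cannot match its filter. The Python sketch below assumes per-file date statistics; the `FileStats` record, the file paths, and the row counts are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class FileStats:
    path: str
    min_date: str  # ISO dates compare correctly as plain strings
    max_date: str
    row_count: int


# Hypothetical per-file statistics that a metadata layer might keep.
CATALOG = [
    FileStats("sales/part-000.parquet", "2024-01-01", "2024-01-31", 1000),
    FileStats("sales/part-001.parquet", "2024-02-01", "2024-02-29", 1200),
    FileStats("sales/part-002.parquet", "2024-03-01", "2024-03-31", 900),
]


def files_to_scan(catalog, start, end):
    """Keep only files whose [min, max] range overlaps the query range."""
    return [f.path for f in catalog if f.max_date >= start and f.min_date <= end]


selected = files_to_scan(CATALOG, "2024-02-10", "2024-02-20")
skipped = len(CATALOG) - len(selected)
```

Here a query for mid-February touches one file out of three. The decision is made from metadata alone, before any data file is opened.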

4. Unified Architecture

Lakehouses combine data lake storage with data warehouse functionality in a single platform. This unified approach eliminates the need to move data between separate systems for different workloads, supporting everything from BI reporting to machine learning on the same data assets.

5. Separation of Compute and Storage

Like data lakes, lakehouses maintain independent scaling for storage and computation resources. This architecture allows organizations to optimize costs by scaling each component according to workload demands, rather than overprovisioning both to handle peak usage.

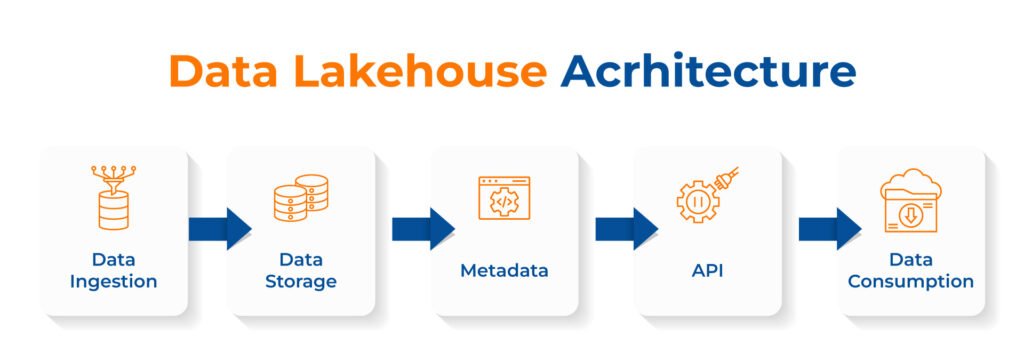

Essential Components of a Data Lakehouse Architecture

1. Storage Layer

The foundation of a lakehouse, this component stores data in open file formats like Parquet or ORC on low-cost object storage. Unlike traditional data lakes, this layer implements file organization techniques like partitioning and compaction to optimize read performance for analytical queries.
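As a rough illustration of partitioning and compaction, the Python sketch below builds Hive-style partition paths (a common `key=value` directory convention) and plans which small files in each partition could be merged. The function names, the file sizes, and the target size of 100 are assumptions chosen to keep the example small; real systems work with much larger targets and rewrite the files through their transaction log.

```python
from collections import defaultdict


def partition_path(table, record):
    """Build a Hive-style partition directory from a record's date column."""
    year, month, _ = record["event_date"].split("-")
    return f"{table}/year={year}/month={month}"


def plan_compaction(files, target_bytes):
    """Group small files per partition into batches of at most target size.

    `files` is a list of (partition, file_name, size_bytes) tuples.
    """
    by_partition = defaultdict(list)
    for partition, name, size in files:
        if size < target_bytes:  # files already at target size are left alone
            by_partition[partition].append((name, size))

    plan = []
    for partition, small_files in by_partition.items():
        batch, batch_size = [], 0
        for name, size in small_files:
            if batch and batch_size + size > target_bytes:
                if len(batch) > 1:  # rewriting a single file gains nothing
                    plan.append((partition, batch))
                batch, batch_size = [], 0
            batch.append(name)
            batch_size += size
        if len(batch) > 1:
            plan.append((partition, batch))
    return plan


january = partition_path("events", {"event_date": "2024-01-15"})
files = [
    (january, "a.parquet", 40),
    (january, "b.parquet", 50),
    (january, "c.parquet", 30),
    (january, "big.parquet", 200),
    ("events/year=2024/month=02", "d.parquet", 10),
]
plan = plan_compaction(files, target_bytes=100)
```

Fewer, larger files mean fewer object-store requests per query, which is why compaction tends to matter most for tables fed by frequent small writes.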

2. Metadata and Catalog Layer

This component maintains detailed information about data structure, statistics, and lineage. It powers schema enforcement, efficient query planning, and data discovery capabilities, functioning like a comprehensive “map” of the lakehouse that enables both governance and performance optimization.

3. Transaction Management Layer

This layer implements ACID guarantees, enabling reliable concurrent operations. It handles table versioning, rollbacks, and conflict resolution when multiple users or processes modify data simultaneously, ensuring consistency without sacrificing the multi-user access that analytics platforms require.
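Table versioning and rollback can be pictured as replaying an append-only log of “add file” and “remove file” actions. The Python sketch below is a toy model under that assumption; `VersionedTable` and its methods are hypothetical names, and production table formats store far more in each log entry (schemas, statistics, checkpoints).

```python
class VersionedTable:
    """Toy table whose state is derived by replaying an append-only log."""

    def __init__(self):
        self.log = []  # each entry: {"add": [...], "remove": [...]}

    def commit(self, add=(), remove=()):
        self.log.append({"add": list(add), "remove": list(remove)})
        return len(self.log) - 1  # version number of this commit

    def files_at(self, version=None):
        """Replay commits 0..version to get the live data files."""
        if version is None:
            version = len(self.log) - 1
        live = set()
        for entry in self.log[: version + 1]:
            live.update(entry["add"])
            live.difference_update(entry["remove"])
        return sorted(live)

    def rollback_to(self, version):
        """Restore an old snapshot by committing the difference as a new version."""
        current = set(self.files_at())
        target = set(self.files_at(version))
        return self.commit(add=target - current, remove=current - target)


table = VersionedTable()
v0 = table.commit(add=["f1", "f2"])
v1 = table.commit(add=["f3"], remove=["f1"])
snapshot_v1 = table.files_at(v1)
v2 = table.rollback_to(v0)
```

Because a rollback is itself a new commit, history is never rewritten: version 1 stays readable for audits even after the table has been restored to the state of version 0.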

4. Query Engine

Specialized for data lakehouse patterns, these engines efficiently process SQL queries across distributed storage. They leverage metadata for optimization, employ columnar data access patterns, and implement data skipping techniques to deliver performance comparable to traditional data warehouses.

5. Data Quality and Governance Framework

This component enforces data quality rules, access controls, and compliance policies. It includes tools for data lineage tracking, sensitive data discovery, and audit logging that ensure the lakehouse remains both accessible to legitimate users and protected from unauthorized access.

Popular Data Lakehouse Technologies

1. Delta Lake

Originally developed by Databricks, Delta Lake brings ACID transactions to data lakes with time travel capabilities and schema enforcement. Its merge/upsert operations, compaction optimization, and caching mechanisms have made it one of the most widely adopted open-source lakehouse formats, with broad ecosystem support.

2. Apache Iceberg

Originally developed by Netflix, Iceberg is an open table format for massive analytic datasets that supports schema evolution and partition evolution. Its hidden partitioning, time travel, and incremental planning capabilities enable high-performance analytics while maintaining compatibility with multiple processing engines.

3. Apache Hudi

Hudi enables stream processing on data lakes with upsert support and incremental data pipelines. Developed at Uber, it combines near real-time ingestion with efficient queries through specialized file layouts that balance write and read optimization for operational analytics use cases.

4. Databricks Lakehouse Platform

This commercial platform integrates Delta Lake with performance-optimized Photon engine and Unity Catalog for governance. It provides enterprise features including advanced security, optimized query performance, and integrated machine learning capabilities for end-to-end data management workflows.

5. AWS Lake Formation

Amazon’s managed service simplifies lakehouse creation with centralized permissions, automated data discovery, and governance tools. It integrates tightly with AWS analytics services while providing a security-focused control plane that simplifies compliance with regulatory requirements for enterprise data.


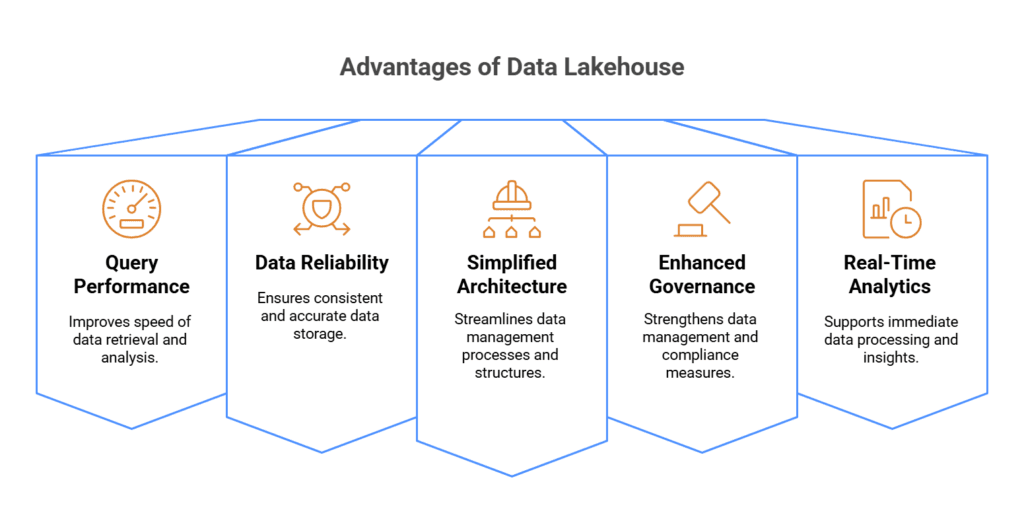

Advantages of Data Lakehouse Over Data Lake

1. Query Performance Acceleration

Lakehouses implement data skipping, indexing, and caching mechanisms that can deliver 10-100x faster query performance than traditional data lakes. This speed enables interactive analytics and BI dashboards that previously required separate data warehouse implementations.

2. Data Reliability Guarantees

ACID transaction support prevents partial file writes, race conditions, and conflicting changes that plague traditional data lakes. These guarantees keep data consistent for critical business operations, reducing the need for manual integrity checks before important decisions are made.

3. Simplified Data Architecture

Lakehouses eliminate the need for separate data lakes and warehouses, reducing architectural complexity. This unified approach cuts infrastructure costs by 40-60% while streamlining data engineering workflows and minimizing the technical debt associated with maintaining multiple specialized systems.

4. Enhanced Data Governance

Centralized schema enforcement, access controls, and lineage tracking enable stronger governance than traditional lakes. These capabilities help organizations meet regulatory requirements like GDPR and CCPA while providing the auditability needed for sensitive applications in finance and healthcare.

5. Real-Time Analytics Support

Lakehouses enable continuous data ingestion and immediate query access without ETL delays. This capability delivers insights on fresh data—minutes or seconds old rather than hours or days—enabling time-sensitive use cases like fraud detection and operational monitoring.

Data Lake vs Data Lakehouse: Key Differences

| Feature | Data Lake | Data Lakehouse |
|---|---|---|
| Data Storage | Stores raw structured, semi-structured, and unstructured data | Stores both raw and structured data efficiently |
| Schema Approach | Schema-on-read (applied when querying) | Schema enforced on write, with support for schema evolution |
| Data Governance | Limited governance and control | Stronger governance with built-in data quality measures |
| Performance | Slower queries due to unoptimized raw storage | Faster queries with optimized indexing and caching |
| Support for BI & Analytics | Requires additional processing for structured analysis | Natively supports BI, AI, and ML workflows |
| ACID Compliance | Lacks ACID transaction support | Supports ACID transactions for data reliability |
| Cost Efficiency | Lower storage costs but higher processing costs | Balanced cost with optimized performance |
| Security & Compliance | Basic security features | Advanced security, auditing, and compliance options |
| Use Cases | Best for raw data storage, data science, and ML | Ideal for business intelligence, reporting, and analytics |

Data Lake vs Lakehouse: Architecture Comparison

When debating data lakes vs. data lakehouses, one must consider their storage architecture, data processing layers, and the governance mechanisms that organize and secure data.

Storage Architecture

Data Lake: The data lake is built on a flat architecture that stores data in its raw format, including structured, semi-structured, and unstructured data. The data is often stored in object stores like Amazon S3 or Azure Blob Storage.

Data Lakehouse: In contrast, a data lakehouse maintains the vast storage capabilities of a data lake but introduces an additional layer of organization. This layer indexes data and enforces schema upon ingestion, facilitating efficient data queries and management.


Data Processing Layers

Data Lake: Data lakes typically have a decoupled compute and storage environment. They process data with the schema-on-read approach, where a schema is applied to the data as it is read from storage. This allows for flexibility in data analytics and machine learning processes.

Data Lakehouse: Data lakehouses build upon this by offering transaction support and incorporating features of both schema-on-read and schema-on-write methodologies. A data lakehouse provides an environment that streamlines both batch and stream processing with improved concurrency.

Data Governance and Cataloging

Data Lake: Governance in a data lake relies on metadata tagging and access controls to ensure proper data usage and compliance. However, cataloging in a pure data lake may be less structured, leading to potential challenges in searching and managing data assets.

Data Lakehouse: Data lakehouses enhance governance through a consolidated metadata and cataloging strategy, often coupling with data management tools to provide fine-grained access control and auditing. They bridge the gap between data lakes and warehouses by offering structured cataloging akin to that of a traditional data warehouse, improving data discoverability and compliance.

Data Lake vs Data Lakehouse: Performance and Scalability Comparison

Within the realm of data lake vs. data lakehouse, performance and scalability are critical factors that determine an architecture’s efficiency and future readiness. Performance relates to the speed of data processing and retrieval, while scalability denotes the system’s ability to grow and manage increased demand.

Query Performance

Data Lakes traditionally manage vast quantities of raw data, which can impact query performance due to the lack of structure. Users typically experience high latency when querying large tables or processing complex analytics directly on a Data Lake.

Data Lakehouses aim to mitigate these issues by blending the vast storage capability of Data Lakes with the structured environment of a Data Warehouse. By organizing data into a manageable schema and maintaining metadata, they can provide more efficient data retrieval and faster query execution.

Scaling Capabilities

Data Lakes excel at storing enormous volumes of structured, semi-structured, or unstructured data. They are inherently designed to scale out with respect to data volume, but they can be limited by cataloging systems, such as Hive or Glue, when handling metadata for the stored content.

In contrast, Data Lakehouses are architected to scale effectively not only in terms of data size but also in performance. The incorporation of features from data warehouses enables better management of metadata and schema, which can improve scalability when responding to more complex analytical workloads.

Data Lake vs Data Lakehouse: Data Management and Quality

Effective data management and quality are crucial in the data lake vs. data lakehouse debate. These structures handle the organization, cleansing, and enrichment of data differently, directly impacting analytics and business intelligence outcomes.

Metadata Handling

In a data lake, metadata is often handled separately and may require additional tools to manage efficiently. It serves as a catalog for the raw data, but users must typically access and manage it through manual processes or separate systems.

- Pros: Provides a flexible approach to metadata management

- Cons: Can become unwieldy as the data lake grows

Conversely, a data lakehouse incorporates metadata handling into its architecture, enabling automatic metadata logging and tighter data governance practices.

- Pros: Streamlines metadata management, allowing for better data discoverability and governance

- Cons: May require rigorous design and setup to ensure metadata is captured and utilized effectively

Data Cleansing and Enrichment

Data lakes store vast amounts of raw data, which might include duplicate, incomplete, or inaccurate records. Cleansing and enriching that data is left to the users.

- Cleansing: Users apply their own tools and processes to remove errors and inconsistencies

- Enrichment: Users must manually link and augment data with additional sources for analysis
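The two user-side steps above can be sketched in a few lines of Python. This is a minimal illustration, assuming records are plain dictionaries; the `cleanse` and `enrich` helpers, the customer records, and the `regions` lookup are all invented for the example.

```python
def cleanse(records, key, required):
    """Drop records missing the key or required fields, then keep the first record per key."""
    seen = set()
    clean = []
    for record in records:
        if any(record.get(field) in (None, "") for field in (key, *required)):
            continue  # incomplete record
        if record[key] in seen:
            continue  # duplicate of a record we already kept
        seen.add(record[key])
        clean.append(record)
    return clean


def enrich(records, lookup, key, new_field):
    """Attach a value from a second source; unmatched records get None."""
    return [{**record, new_field: lookup.get(record[key])} for record in records]


raw = [
    {"customer_id": "c1", "email": "a@example.com"},
    {"customer_id": "c1", "email": "a@example.com"},  # duplicate
    {"customer_id": "c2", "email": ""},               # incomplete
    {"customer_id": "c3", "email": "c@example.com"},
]
regions = {"c1": "EMEA"}  # hypothetical second data source

clean = cleanse(raw, key="customer_id", required=["email"])
enriched = enrich(clean, regions, key="customer_id", new_field="region")
```

In a pure data lake, logic like this lives in each team’s own scripts, so two teams can easily end up with different definitions of a “clean” customer record.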

Data lakehouses, however, are designed with data quality in mind, providing built-in mechanisms for cleansing and enriching data as part of the data pipeline.

- Cleansing: Automated processes detect and correct data quality issues, ensuring higher integrity

- Enrichment: Integrated tools allow for seamless data augmentation, improving the richness of datasets for analytics


Data Lake vs Data Lakehouse: Cost and Complexity

Choosing between a data lake and a data lakehouse involves weighing the cost and complexity associated with each, with a clear understanding of your organization’s data strategy and operational capabilities.

Infrastructure Costs

Data Lake: Typically, data lakes offer a cost-effective solution for storing massive volumes of raw data across various formats. Organizations can benefit from the pay-as-you-go pricing model that cloud storage offers. This aligns well with the scalable nature of data lakes. The separation of storage and compute resources allows for granular cost management.

Data Lakehouse: A data lakehouse inherits the cost benefits of a data lake’s storage and adds a layer designed to improve data governance and reliability, which may increase initial infrastructure costs. However, by integrating the qualities of a data warehouse, a data lakehouse often streamlines analytics, potentially offering long-term cost savings in operational efficiency and resource utilization.

Maintenance and Management Complexity

Data Lake: Maintenance entails ensuring the security and accessibility of a wide variety of data formats. A data lake requires substantial metadata management to prevent it from becoming a ‘data swamp’. The complexity arises in cataloging and indexing the data efficiently for future access and use.

Data Lakehouse: Data lakehouses introduce more sophisticated data management tools and processes to enforce governance and consistency, which can increase complexity. They require the implementation of data quality measures and a metadata layer that simplifies data discovery and querying, usually necessitating a skilled team to maintain these systems effectively.


Data Lake vs Data Lakehouse: Security and Compliance

Security and compliance are critical factors in the data lake vs. data lakehouse debate. The two architectures use distinct access control mechanisms and take different routes to meeting the compliance standards that protect data integrity and privacy.

Access Control and Security Features

Data Lakes typically rely on the access control mechanisms of the underlying storage to manage who can access and manipulate data. Administrators can set permissions at the file and folder level, which is coarser than the table-, row-, or column-level control that analytics teams often need. Enforcing more complex data security policies usually requires additional tools.

In contrast, Data Lakehouses integrate advanced security features inherent in their architecture. They incorporate built-in Access Control Lists (ACLs) and role-based access control, ensuring only authorized users can perform actions on the data sets. Moreover, they often include features like schema enforcement and data masking, which are pivotal in maintaining data security.
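To show how role-based access control and data masking fit together, here is a small Python sketch. The `POLICY` mapping, the role names, and the masking rule are assumptions made up for this example; actual lakehouse platforms express such policies through their own catalog and governance tooling.

```python
# Hypothetical policy: role -> columns that role may see in clear text.
POLICY = {
    "analyst": {"order_id", "amount"},
    "support": {"order_id", "email"},
}


def mask(value):
    """Hide all but the last two characters of a value."""
    text = str(value)
    return "*" * max(len(text) - 2, 0) + text[-2:]


def read_as(role, rows):
    """Return rows with every column outside the role's policy masked."""
    if role not in POLICY:
        raise PermissionError(f"role {role!r} has no access")
    allowed = POLICY[role]
    return [
        {col: (val if col in allowed else mask(val)) for col, val in row.items()}
        for row in rows
    ]


rows = [{"order_id": 7, "amount": 120.5, "email": "ana@example.com"}]
analyst_view = read_as("analyst", rows)
support_view = read_as("support", rows)
```

Both roles query the same table, yet each sees only what its policy allows, and the stored data is never modified.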

Compliance Standards and Audits

Data Lakes must conform to various compliance standards such as HIPAA, GDPR, and CCPA, depending on the industry and data type. They often rely on third-party tools and manual interventions to ensure compliance and facilitate audits.

On the other hand, Data Lakehouses are designed to simplify compliance adherence. They offer ACID compliance, ensuring transactional integrity and supporting consistent data snapshots for auditing purposes. A Data Lakehouse’s architecture promotes an environment that can more easily adapt to changing compliance standards and manage data lineage and metadata for audits.


Data Lake vs Data Lakehouse: Integration and Ecosystem

Data lakes and lakehouses differ in their approaches to integrating diverse data types and in the degree to which they are supported by communities and tooling ecosystems.

Support for Data Integration Tools

Data lakes are designed to store vast amounts of raw data in their native formats. They provide extensive support for data integration tools that can ingest both structured and unstructured data. These data integration tools enable organizations to pull data from multiple sources into the data lake, accommodating a wide range of data types and formats without the need for initial cleansing or structuring.

In contrast, data lakehouses not only absorb the capabilities of data lakes in handling massive, diverse datasets but also streamline data management tasks. They enhance data lakes’ integration tools with additional features such as schema enforcement and metadata management, facilitating a more ordered and discoverable dataset.

Ecosystem and Community Support

The ecosystem surrounding data lakes typically consists of a combination of open-source and proprietary solutions that aid in data ingestion, processing, and analysis. Tools like Apache Hadoop and Apache Spark have grown alongside data lakes, creating a robust suite of tools for various data operations.

Data lakehouses tend to inherit this established ecosystem while cultivating their own communities focused on improving performance, governance, and analytical capabilities. The lakehouse architecture advocates for an open environment where tools for real-time analytics, machine learning, and data governance can be seamlessly integrated, bolstering community support for a comprehensive and unified data platform.


Data Lake vs. Data Lakehouse: Ideal Use Cases

When to Choose a Data Lake

Organizations primarily storing raw data for future analysis

Data lakes excel when your strategy involves collecting vast amounts of raw data now while deferring analysis decisions. This approach preserves all potential insights without committing to specific analytical frameworks prematurely.

Budget-constrained environments requiring economical storage

Data lakes leverage low-cost object storage and open-source processing tools, reducing infrastructure expenses by 60-80% compared to traditional warehouses. This makes them ideal for organizations with limited budgets but extensive data collection needs.

Simple analytics workflows with minimal transformation needs

When your analytics primarily involve basic reporting and exploratory data science without complex joins or aggregations, data lakes provide sufficient performance without the overhead of more sophisticated architectures.

When to Choose a Data Lakehouse

Organizations requiring both BI and ML workloads on the same data

Lakehouses support SQL analytics for business intelligence alongside machine learning workflows using the same data assets. This eliminates data silos and synchronization challenges when different teams need different views of the same information.

Environments needing real-time analytics capabilities

Lakehouses enable near-instantaneous data availability through streaming ingestion and optimized query engines. This architecture supports operational dashboards and time-sensitive analytics that traditional data lakes struggle to deliver consistently.

Use cases requiring ACID compliance and stronger data quality guarantees

When data integrity is non-negotiable—such as in financial services, healthcare, or regulatory environments—lakehouses provide the transaction support and schema enforcement necessary to ensure reliable, consistent, and auditable data operations.

Data Complexity Simplified: Kanerika’s Advanced Management Services

Managing data shouldn’t be a headache, yet many businesses struggle with fragmented workflows, inconsistent insights, and inefficient processes. Kanerika, a trusted name in data and AI services, has been transforming how companies across industries handle their data.

From data consolidation and modeling to transformation and analysis, we build custom solutions that address critical bottlenecks and drive predictable ROI. Whether it’s optimizing large-scale data pipelines, ensuring seamless integrations, or enabling AI-powered analytics, our expertise helps businesses turn data into a strategic advantage.

By leveraging the latest tools and technologies, we design solutions that enhance efficiency, accuracy, and decision-making, keeping you ahead of the competition. Kanerika’s tailored approach ensures that your data ecosystem works for you—not against you.

Partner with us and see how smart data management can unlock new opportunities, improve operational efficiency, and fuel business growth. Let’s simplify your data, together.

FAQs

What is the difference between a data lake and a data swamp?

A data lake is a centralized repository for storing raw data in its native format, aiming for future analysis. A data swamp, however, is a poorly managed data lake; it’s disorganized, lacks metadata, and is essentially unusable for meaningful insights, becoming a costly burden rather than an asset. The key difference lies in governance and structure: a data lake *aspires* to be organized; a data swamp is inherently chaotic.

Is Databricks a data lakehouse?

Databricks isn’t itself a *data lakehouse*; it’s a *platform* that *enables* the creation and management of data lakehouses. It provides the tools and infrastructure (including storage, processing, and governance) needed to build one. Think of it as the architect and construction crew, rather than the house itself. Therefore, the answer is nuanced: Databricks helps *you* build a data lakehouse.

What is the difference between data hub and data lake?

A data hub is like a curated library: it organizes and cleans data for immediate use, focusing on specific business needs. A data lake, conversely, is a raw data repository; it stores everything, regardless of structure or quality, for later exploration and potential future use. Think of it this way: the hub serves up ready-to-eat meals, while the lake offers raw ingredients for you to cook with. Essentially, the hub prioritizes usability, while the lake prioritizes comprehensiveness.

What is the difference between data lake and data stream?

A data lake is a vast repository that stores all types of data at rest, like a large reservoir. A data stream, conversely, is a continuous, rapidly flowing current of data points, more like a river constantly supplying fresh information. The key difference is how the data is stored and accessed: on-demand versus real-time. Think of a lake for historical analysis and a stream for immediate insights.

What is the difference between data lake and data factory?

A data lake is raw storage for all your data, whatever its structure. A data factory is the processing engine; it’s the machinery that cleans, transforms, and prepares that raw data into usable information for analysis. Think of the lake as the *source* and the factory as the *refinery*. They work together, but serve very different purposes.

What is an example of a data lakehouse?

A data lakehouse blends the best of data lakes (schema-on-read flexibility) and data warehouses (schema-on-write structure and ACID transactions). Think of it as a highly organized data lake, offering both raw data storage *and* structured query capabilities for efficient analysis. A practical example is a system using technologies like Delta Lake on top of cloud storage, enabling both raw data ingestion and efficient, reliable querying. This allows for faster insights without sacrificing the flexibility of a data lake.