As organizations modernize legacy systems and move to cloud or hybrid environments, data migration is no longer a one-time technical task. Failed or delayed migrations have made it clear that success depends on structure, not just tools. This is where a data migration framework becomes essential: it provides a repeatable, governed approach to planning, executing, and validating data movement across systems while minimizing risk and disruption.

The need for structure is backed by numbers. Industry research shows that nearly 80% of data migration projects run over budget or miss deadlines, often due to poor planning, unclear ownership, and a lack of validation. Organizations that follow a formal migration framework report faster execution, fewer data quality issues, and up to a 40% reduction in migration errors, especially in large-scale cloud and multi-platform migrations.

In this blog, we explain what a data migration framework is, its key phases and components, and how it helps organizations deliver predictable, secure, and scalable migration outcomes.

Key Takeaways

- Data migration succeeds when it is supported by a structured framework that treats migration as a managed business process, not a one-off technical exercise.

- Most migration failures stem from poor planning, weak data quality management, unclear ownership, and overdependence on manual processes.

- A strong migration framework covers assessment, preparation, execution, validation, and post-migration optimization to minimize risk and rework.

- The migration strategy should support business objectives, and the choice between phased, incremental, or big bang migration should depend on risk, downtime tolerance, and system sensitivity.

- Kanerika’s FLIP platform and migration accelerators enable faster, repeatable, and lower-risk migrations by automating conversion while preserving business logic.

What Is a Data Migration Framework?

A data migration framework is a structured approach that defines how data gets assessed, prepared, moved, validated, and stabilized when you’re transitioning from one system to another. Essentially, it treats migration as a controlled business process instead of a one-time technical task.

As organizations move toward cloud platforms, modern ERPs, CRMs, and analytics systems, data migration has become more complex. A framework brings consistency, accountability, and predictability to the process.

A solid framework typically includes:

- Clear migration objectives aligned with business goals

- Defined scope and data ownership

- Standard processes for data profiling and cleansing

- Documented migration and validation rules

- Security, compliance, and access controls

Industry studies show that organizations using structured frameworks experience fewer delays, lower rework costs, and faster system adoption. In addition, they build trust in the migrated data from day one. Users need confidence that the information in new systems actually reflects business reality.


Why Most Data Migrations Fail Without a Framework

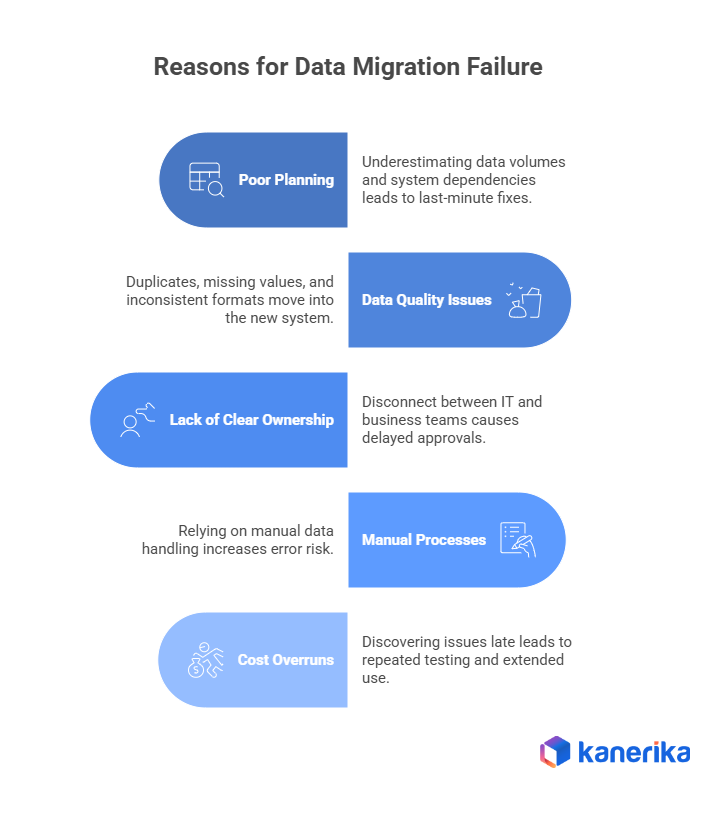

1. Poor Planning and Incomplete Assessment

Teams often underestimate data volumes, system dependencies, and the complexity of transformations. Legacy systems might contain years of undocumented changes, hidden logic, and inconsistent structures. Without proper assessment, these issues surface during execution, leading to last-minute fixes and missed deadlines. Research shows that nearly 40% of migration delays stem from insufficient upfront analysis.

2. Data Quality Issues

Enterprise data is rarely clean. Duplicates, missing values, outdated information, and inconsistent formats are everywhere. When you don’t catch quality issues early, they just move into the new system. In fact, studies estimate that data quality problems cost organizations between 15% and 25% of annual revenue.

3. Lack of Clear Ownership

In migrations without a framework, nobody really owns the data. IT teams focus on technical execution. Meanwhile, business teams assume somebody else is handling accuracy. Unfortunately, this disconnect causes delayed approvals and unresolved validation issues.

4. Manual Processes and Human Error

Many failed migrations rely too much on manual data handling. Manual extraction, transformation, and validation increase error risk at scale. A structured framework counters this by promoting automation and repeatability: less manual work means fewer mistakes.

5. Cost Overruns and Timeline Slippages

When migrations lack structure, issues are discovered late. This means repeated testing cycles and extended use of parallel systems. Industry data shows projects without a framework can exceed planned budgets by 30% to 50%.

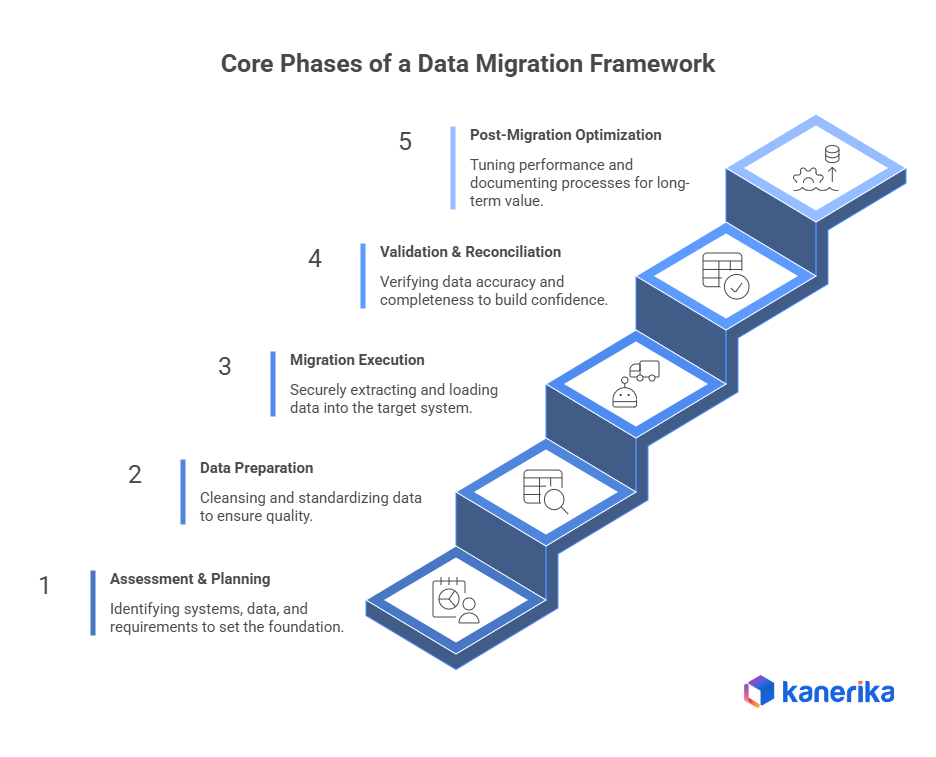

Core Phases of a Data Migration Framework

1. Assessment and Planning

This phase sets the foundation. Specifically, teams focus on:

- Identifying source and target systems

- Understanding data volumes, formats, and dependencies

- Determining business-critical datasets

- Evaluating regulatory and compliance requirements

- Defining success metrics and migration timelines

A detailed assessment helps you avoid surprises during execution. Projects with thorough planning are far more likely to stay within budget and on schedule.

2. Data Preparation and Cleansing

Most enterprise data has quality issues that pile up over time. Migrating without cleansing just moves existing problems into the new system.

Key activities include:

- Data profiling to identify duplicates, gaps, and inconsistencies

- Removing obsolete or redundant records

- Standardizing values, formats, and reference data

- Applying business rules and transformation logic

Organizations that invest in cleansing before migration report fewer post-go-live issues and higher user trust. Furthermore, studies suggest proper data preparation can cut migration-related defects by over 30%.

3. Migration Execution

This phase is where data actually moves from source to target based on predefined rules. Execution should follow a controlled and monitored process.

Execution typically involves:

- Secure data extraction from source systems

- Transformation aligned with target schemas

- Incremental or batch loading strategies

- Continuous monitoring and error tracking

A structured framework makes execution repeatable and auditable, which supports pilot migrations, testing cycles, and recovery from failures.
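To make the execution steps above more concrete, here is a minimal Python sketch of batch loading with error tracking. It is an illustration under stated assumptions, not a prescribed implementation: the `batches`, `migrate`, and `to_target` names, the sample rows, and the in-memory `target` list standing in for a real target system are all hypothetical.

```python
from typing import Callable, Iterable, Iterator


def batches(rows: Iterable[dict], size: int) -> Iterator[list]:
    """Yield successive lists of at most `size` rows."""
    batch = []
    for row in rows:
        batch.append(row)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def migrate(rows: Iterable[dict], transform: Callable, load: Callable,
            batch_size: int = 2) -> dict:
    """Transform and load rows batch by batch, logging bad rows instead of stopping."""
    loaded, errors = 0, []
    for number, batch in enumerate(batches(rows, batch_size), start=1):
        ready = []
        for row in batch:
            try:
                ready.append(transform(row))
            except (KeyError, ValueError) as exc:
                # Error tracking: keep enough context to fix and replay the row.
                errors.append({"batch": number, "row": row, "error": repr(exc)})
        load(ready)
        loaded += len(ready)
    return {"loaded": loaded, "errors": errors}


# Hypothetical source rows; the second one has an unparseable amount.
source = [
    {"id": "1", "amount": "10.50"},
    {"id": "2", "amount": "n/a"},
    {"id": "3", "amount": "7"},
]
target = []  # stand-in for the target system


def to_target(row: dict) -> dict:
    """Hypothetical transformation aligned with an assumed target schema."""
    return {"id": int(row["id"]), "amount_cents": round(float(row["amount"]) * 100)}


report = migrate(source, to_target, target.extend)
print(report["loaded"], "rows loaded,", len(report["errors"]), "rows rejected")
```

Because bad rows are recorded rather than raised, a single malformed record does not abort the run, and the error list doubles as an audit trail for the next testing cycle.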

4. Validation and Reconciliation

Validation makes sure the migrated data is accurate, complete, and usable for business operations, which makes this phase critical for building stakeholder confidence.

Validation activities include:

- Record count and completeness checks

- Field-level accuracy validation

- Business rule verification

- User acceptance testing with key stakeholders

Organizations that validate throughout the migration instead of only at the end catch issues earlier and reduce go-live risks.

5. Post-Migration Optimization

Migration doesn’t end when the system goes live. Instead, this phase focuses on stabilization, performance tuning, and long-term optimization.

This phase typically includes:

- Performance and query optimization

- Fine-tuning access controls and security

- Documentation and knowledge transfer

- Decommissioning or archiving legacy systems

Post-migration optimization makes sure the new system delivers sustained business value and supports future scalability. Lessons learned feed back into the framework and its processes, so subsequent migrations get progressively smoother and more predictable.

Designing the Right Migration Strategy for Your Business

A data migration strategy translates the framework into execution. The framework defines what needs to be done. In contrast, the strategy defines how and in what sequence it happens. Choosing the wrong strategy can increase downtime, inflate costs, and disrupt core business operations. An effective migration strategy balances technical feasibility with business priorities.

1. Aligning the Migration Strategy With Business Objectives

A migration should never start with tools or timelines. Instead, it should start with business intent. Different objectives require different strategies.

For example, a cost-optimization-driven migration may prioritize moving cold or archival data first, a customer experience initiative may require real-time or near-zero-downtime migration, and a regulatory or compliance-driven migration may require additional validation and audit controls.

By aligning the strategy with business goals, teams can decide which datasets are critical, which can be deprioritized, and which shouldn’t be migrated at all. This prevents unnecessary data movement and reduces complexity.

2. Choosing the Appropriate Migration Approach

The migration approach determines how data moves over time and how risk gets managed.

Common approaches include:

- Phased migration, where data is migrated in logical groups such as modules, regions, or business units. This approach reduces risk by allowing teams to validate results incrementally and apply lessons learned to later phases.

- Incremental migration, where data changes are continuously synchronized between source and target systems until final cutover. This works well when downtime must be minimized.

- Big bang migration, where all data is migrated in a single cutover window. While faster in theory, this approach carries higher risk and requires extensive testing and rollback planning.

For large enterprises, phased or incremental approaches are generally more resilient and easier to control.
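As a rough illustration of the incremental approach, the sketch below uses a "high-water mark" timestamp to pick up only the rows that changed since the last sync. Everything here is an assumption made for the example: the `sync_changes` function, the `updated_at` column, and the dictionary standing in for the target system are hypothetical, and real change capture usually relies on database logs or vendor tooling.

```python
from datetime import datetime


def sync_changes(source_rows, target, watermark):
    """Copy rows changed after `watermark` into `target`.

    Returns (rows_synced, new_watermark). Deletes are not handled in this sketch.
    """
    synced = 0
    new_watermark = watermark
    for row in source_rows:
        if row["updated_at"] > watermark:
            target[row["id"]] = dict(row)  # upsert keyed by primary key
            new_watermark = max(new_watermark, row["updated_at"])
            synced += 1
    return synced, new_watermark


# Hypothetical source table with a last-modified timestamp per row.
source = [
    {"id": 1, "name": "Acme", "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "name": "Globex", "updated_at": datetime(2024, 1, 5)},
]
target = {}

# Initial load: everything is newer than the minimum timestamp.
count, mark = sync_changes(source, target, datetime.min)

# A source row changes while both systems are still live.
source[0].update(name="Acme Corp", updated_at=datetime(2024, 1, 9))

# The next sync moves only the changed row.
count, mark = sync_changes(source, target, mark)
print(count, "row synced; watermark is now", mark.isoformat())
```

Repeating the sync until the change volume is small is what keeps the final cutover window short. Note that the strict `>` comparison would miss a row written with exactly the watermark timestamp after a sync, so a production design needs a more careful boundary rule.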

3. Deciding Between Manual and Automated Migration

Manual migration methods may seem manageable for small datasets, but they become risky and inefficient as data volumes grow. Furthermore, manual handling increases dependency on individuals and introduces inconsistencies across migration runs.

Automated migration approaches help teams:

- Ensure consistency across repeated migration cycles

- Reduce human error during extraction, transformation, and loading

- Provide built-in logging, monitoring, and validation

- Support scalability when future migrations are required

For organizations planning multiple migrations or long-term modernization, automation is usually a strategic investment rather than a cost.

4. Planning for Downtime, Risk, and Rollback

Even the most carefully designed migration strategy must assume something can go wrong. Therefore, downtime planning and rollback mechanisms are critical safeguards.

A mature strategy includes:

- Clearly defined downtime windows approved by business stakeholders

- Pre-tested rollback procedures to restore systems if validation fails

- Clear go/no-go decision checkpoints during cutover

- Communication plans for business users and leadership

Rollback planning isn’t a sign of weak execution. Rather, it’s a sign of responsible risk management.
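A go/no-go checkpoint can be as simple as a checklist evaluated the same way every time. The following Python sketch shows one possible shape; the `Check` class, the `go_no_go` function, and the example check names are hypothetical, and real criteria should come from your own stakeholders.

```python
from dataclasses import dataclass


@dataclass
class Check:
    name: str
    passed: bool
    blocking: bool = True  # non-blocking checks only raise a warning


def go_no_go(checks):
    """Return a GO decision only when every blocking check has passed."""
    blockers = [c.name for c in checks if c.blocking and not c.passed]
    warnings = [c.name for c in checks if not c.blocking and not c.passed]
    return {
        "decision": "GO" if not blockers else "NO-GO",
        "blockers": blockers,
        "warnings": warnings,
    }


# Hypothetical cutover checklist.
cutover = [
    Check("Pre-cutover backup verified", passed=True),
    Check("Rollback dry run completed", passed=True),
    Check("Record counts reconciled", passed=False),
    Check("Report performance baseline met", passed=False, blocking=False),
]

result = go_no_go(cutover)
print(result["decision"], "| blockers:", result["blockers"])
```

Writing the criteria down before cutover means the decision to roll back is pre-approved rather than argued in the middle of a downtime window.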

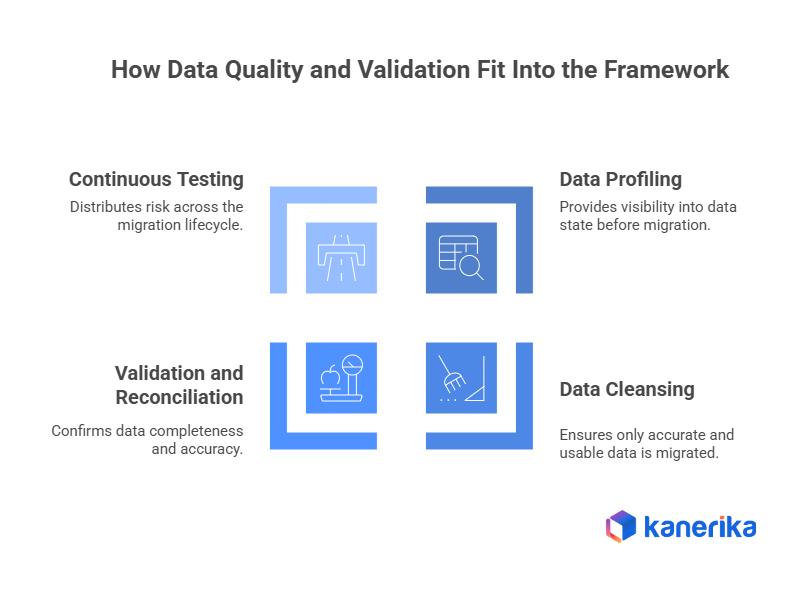

How Data Quality and Validation Fit Into the Framework

Data quality and validation aren’t supporting activities. Instead, they’re central to the success of the entire migration framework. Without them, even a technically successful migration can fail from a business perspective. Poor data quality leads to incorrect reports, broken workflows, and loss of confidence in the new system.

1. Data Profiling as the Foundation of Quality Control

Data profiling provides visibility into the actual state of the data before migration. Essentially, it replaces assumptions with evidence.

Through profiling, teams can:

- Identify duplicate records and conflicting values

- Detect missing or incomplete fields

- Understand data distribution and anomalies

- Reveal hidden dependencies between datasets

This insight is essential for creating accurate mappings and transformation rules. Skipping profiling often results in incorrect assumptions that surface late in the migration.
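The profiling checks listed above can be sketched in a few lines of Python. This is a simplified illustration using only the standard library: the `profile` and `distribution` helpers, the field names, and the sample records are hypothetical, and dedicated profiling tools go much further.

```python
from collections import Counter


def profile(rows, key, required):
    """Summarize row count, duplicate keys, and missing required values."""
    key_counts = Counter(row.get(key) for row in rows)
    duplicates = [k for k, n in key_counts.items() if n > 1]
    missing = {
        field: sum(1 for row in rows if row.get(field) in (None, ""))
        for field in required
    }
    return {"rows": len(rows), "duplicate_keys": duplicates, "missing": missing}


def distribution(rows, field):
    """Count how often each value of `field` occurs, most common first."""
    return Counter(row.get(field) for row in rows).most_common()


# Hypothetical customer extract from a legacy system.
customers = [
    {"customer_id": "C1", "email": "a@example.com", "country": "US"},
    {"customer_id": "C2", "email": "", "country": "usa"},
    {"customer_id": "C1", "email": "a@example.com", "country": "US"},
    {"customer_id": "C3", "email": None, "country": "DE"},
]

summary = profile(customers, key="customer_id", required=["email", "country"])
print(summary)
print(distribution(customers, "country"))
```

Even this small sample surfaces the kinds of findings that shape mapping rules: a repeated key, two records without an email, and the same country written two different ways.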

2. Data Cleansing to Reduce Downstream Risk

Cleansing ensures that only relevant, accurate, and usable data gets migrated. Migrating poor-quality data simply transfers existing problems into the new environment.

Effective cleansing involves:

- Removing outdated or unused records that no longer serve business needs

- Standardizing formats, codes, and reference values

- Correcting invalid or inconsistent entries based on business rules

- Resolving duplicates using defined matching logic

Organizations that prioritize cleansing before migration consistently report fewer post-go-live issues and faster system adoption.
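To show how the cleansing activities above fit together, here is a small Python sketch that trims values, standardizes a reference field, rejects entries it cannot resolve, and removes duplicates by exact key match. The `cleanse` function, the `COUNTRY_CODES` lookup, and the sample rows are hypothetical; real matching logic is usually fuzzier and driven by business rules.

```python
# Hypothetical reference data: maps free-text country values to standard codes.
COUNTRY_CODES = {
    "us": "US", "usa": "US", "united states": "US",
    "de": "DE", "germany": "DE",
}


def cleanse(rows, key):
    """Return (clean_rows, rejected_rows) after trimming, standardizing, de-duplicating."""
    seen, clean, rejected = set(), [], []
    for row in rows:
        # Trim stray whitespace from every string value.
        record = {k: v.strip() if isinstance(v, str) else v for k, v in row.items()}
        code = COUNTRY_CODES.get(str(record.get("country", "")).lower())
        if code is None:
            rejected.append(row)  # unknown value: route to manual review
            continue
        record["country"] = code
        if record.get(key) in seen:
            continue  # duplicate on exact key match: keep the first occurrence
        seen.add(record.get(key))
        clean.append(record)
    return clean, rejected


raw = [
    {"customer_id": "C1", "country": "usa "},
    {"customer_id": "C1", "country": "US"},
    {"customer_id": "C2", "country": "Germany"},
    {"customer_id": "C3", "country": "Atlantis"},
]

clean, rejected = cleanse(raw, key="customer_id")
print(len(clean), "clean rows,", len(rejected), "rejected for review")
```

Rejected rows are returned rather than silently dropped, so data owners can decide whether to correct or retire them before the load.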

3. Validation and Reconciliation to Build Trust

Validation confirms that the migrated data is complete and accurate, while reconciliation confirms that source and target systems agree.

A comprehensive validation process includes:

- Comparing record counts across systems

- Verifying field-level accuracy for critical attributes

- Ensuring business rules and calculations behave as expected

- Involving business users in acceptance testing

Trust in migrated data is built through systematic validation, not informal checks or assumptions.
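The first two checks in the list above, record counts and field-level accuracy, lend themselves to automation. The Python sketch below compares counts and per-row hashes between a source and a target extract. The `row_hash` and `reconcile` functions and the sample data are hypothetical, and joining values with `|` is a simplification that assumes the values themselves never contain that character.

```python
import hashlib


def row_hash(row, fields):
    """Hash the selected fields of a row so values can be compared cheaply."""
    payload = "|".join(str(row.get(f)) for f in fields)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def reconcile(source, target, key, fields):
    """Compare record counts and field-level hashes between two datasets."""
    src = {r[key]: row_hash(r, fields) for r in source}
    tgt = {r[key]: row_hash(r, fields) for r in target}
    return {
        "source_count": len(source),
        "target_count": len(target),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


# Hypothetical extracts: counts match, but the contents do not.
source_rows = [
    {"id": 1, "amount": 10},
    {"id": 2, "amount": 20},
    {"id": 3, "amount": 30},
]
target_rows = [
    {"id": 1, "amount": 10},
    {"id": 2, "amount": 25},
    {"id": 4, "amount": 40},
]

result = reconcile(source_rows, target_rows, key="id", fields=["amount"])
print(result)
```

The sample is chosen to show why a count check alone is not enough: both sides hold three records, yet one is missing, one is unexpected, and one has a changed value.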

4. Continuous Testing Instead of End-Only Testing

Testing only at the end of migration concentrates risk at the most critical moment. In contrast, continuous testing distributes risk across the lifecycle.

Benefits of continuous testing include:

- Early detection of mapping or transformation issues

- Reduced rework and remediation effort

- More predictable cutover timelines

- Lower stress during final migration phases

Embedding validation into each phase strengthens the overall framework and improves outcomes.

Best Practices to Build a Scalable and Repeatable Migration Framework

A strong migration framework should be reusable. Organizations that treat migration as a one-time exercise often struggle when future migrations arise. Scalability and repeatability should be designed into the framework from the beginning.

1. Governance and Clear Accountability

Governance provides structure, oversight, and consistency. Without governance, migration decisions become fragmented and reactive.

Effective governance includes:

- Clearly defined data owners responsible for accuracy and approvals

- Standardized policies for data handling and validation

- Defined escalation paths for issue resolution

- Regular checkpoints to review progress and risks

Governance aligns IT and business teams and ensures accountability throughout the migration.

2. Automation to Support Scale and Consistency

As data volumes and system complexity increase, manual processes become unsustainable.

Automation enables:

- Consistent execution across multiple migration cycles

- Reliable validation and audit trails

- Faster identification and resolution of issues

- Reduced dependency on individual resources

Automation transforms migration from a fragile process into a scalable capability.

3. Documentation as an Operational Asset

Documentation is essential for repeatability and long-term efficiency.

Comprehensive documentation should cover:

- Data mappings and transformation logic

- Validation rules and acceptance criteria

- Migration runbooks and operational procedures

- Lessons learned and improvement areas

Well-maintained documentation reduces onboarding time and improves future migration outcomes.
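One way to keep mapping documentation from drifting away from what actually runs is to store the mappings as data that the migration code executes. The Python sketch below illustrates the idea; the `MAPPING` table, the legacy field names such as `CUST_NM`, and the `apply_mapping` and `describe` helpers are all hypothetical.

```python
from datetime import datetime

# Each entry: (source field, target field, transformation).
# Field names and rules are illustrative assumptions only.
MAPPING = [
    ("CUST_NM", "customer_name", str.strip),
    ("CUST_SINCE", "customer_since",
     lambda v: datetime.strptime(v, "%d/%m/%Y").date().isoformat()),
    ("ACTIVE_FLG", "is_active", lambda v: v == "Y"),
]


def apply_mapping(row, mapping=MAPPING):
    """Build a target record by applying each documented rule to the source row."""
    return {target: fn(row[source]) for source, target, fn in mapping}


def describe(mapping=MAPPING):
    """Render the mapping as readable lines for a runbook or review."""
    return [f"{source} -> {target}" for source, target, _ in mapping]


legacy_row = {"CUST_NM": "  Acme Ltd ", "CUST_SINCE": "05/03/2019", "ACTIVE_FLG": "Y"}

migrated = apply_mapping(legacy_row)
print(migrated)
print("\n".join(describe()))
```

With this arrangement, the mapping table serves as both the specification reviewers sign off on and the logic the migration runs, which reduces the chance of the two diverging.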

4. Rollback and Contingency Planning

A scalable framework must include tested contingency plans. Rollback procedures protect the business from prolonged disruption.

Best practices include:

- Backups taken immediately before cutover

- Clearly defined recovery timelines

- Pre-approved rollback decision criteria

- Dry runs of rollback scenarios

Rollback planning ensures resilience during high-risk transitions.

5. Future-Proofing the Migration Framework

Future-proofing ensures the framework remains relevant as systems evolve.

Key considerations include:

- Modular design that supports new platforms and integrations

- Compatibility with cloud, hybrid, and multi-system environments

- Ability to handle both structured and unstructured data

- Continuous improvement based on feedback and outcomes

A future-ready framework enables organizations to adapt quickly without rebuilding migration processes from scratch.


Case Study 1: SQL Server Services to Microsoft Fabric

Challenge

The client relied on SSIS, SSAS, and SSRS. Each system worked in isolation, which created delays and required ongoing maintenance. The setup slowed reporting and made scaling difficult. A manual move to Fabric risked long timelines and broken logic.

Solution

Kanerika used its migration accelerator to convert SSIS packages, SSAS models, and SSRS reports into Microsoft Fabric, preserving logic, security, and structure during the conversion. The client received ready-to-use pipelines, models, and reports inside a single Fabric workspace.

Results

- One unified analytics environment replaced multiple legacy tools.

- Migration completed in weeks through automated conversion.

- Maintenance effort dropped because Fabric runs on a scalable cloud model.

- Teams delivered analytics faster due to unified workflows.

Case Study 2: Informatica to Talend Migration

Challenge

A global manufacturer depended on complex Informatica workflows. Costs were rising, updates were slow, and scaling was difficult. Manual reconstruction of mappings carried high risk and long delivery cycles.

Solution

Kanerika automated the conversion of Informatica mappings and workflows into Talend, with all transformation rules recreated accurately. Teams could test and deploy Talend jobs without rewriting logic by hand.

Results

- Complete preservation of workflow logic during transition.

- 70% reduction in manual effort.

- 60% faster overall delivery.

- 45% lower migration cost.

Kanerika: Your Trusted Partner for Seamless Data Migrations

Kanerika is a trusted partner for organizations looking to modernize their data platforms efficiently and securely. Modernizing legacy systems unlocks enhanced data accessibility, real-time analytics, scalable cloud solutions, and AI-driven decision-making. Traditional migration approaches can be complex, resource-intensive, and prone to errors, but Kanerika addresses these challenges through purpose-built migration accelerators and our FLIP platform, ensuring smooth, accurate, and reliable transitions.

Our accelerators support a wide range of migrations, including Tableau to Power BI, Crystal Reports to Power BI, SSRS to Power BI, SSIS to Fabric, SSAS to Fabric, Cognos to Power BI, Informatica to Talend, and Azure to Fabric. By leveraging automation, standardized templates, and deep domain expertise, Kanerika helps organizations reduce downtime, maintain data integrity, and accelerate adoption of modern analytics platforms. With Kanerika, businesses can confidently future-proof their data infrastructure and maximize the value of every migration project.


FAQs

What is a data migration framework?

A data migration framework is a structured approach for moving data from source systems to target platforms. It defines the processes, tools, and controls needed to transfer data securely, accurately, and efficiently. The framework ensures consistency across planning, execution, and validation, reducing risks during migration.

Why is a data migration framework important?

A data migration framework helps minimize errors, reduce downtime, and maintain data quality during migration. It provides a clear structure that prevents ad hoc execution and unexpected failures. This is critical when modernizing analytics or moving to cloud platforms where data accuracy directly impacts business decisions.

What are the key components of a data migration framework?

Key components include data assessment and mapping, transformation rules, data cleansing, extraction and loading processes, and validation checks. Monitoring, logging, and error handling ensure visibility and control throughout the migration. Together, these components help deliver reliable and repeatable migrations.

What are the common types of data migration frameworks?

Common migration approaches used within a framework include Big Bang, Trickle, and Zero Downtime migrations. Big Bang moves all data in a single cutover window, Trickle migrates data in phases, and Zero Downtime keeps systems continuously available during the move. The choice depends on system complexity and business continuity requirements.

How does AI help in a data migration framework?

AI automates tasks like data mapping, cleansing, and validation, reducing manual effort and human error. It can detect anomalies early and optimize performance during migration. This results in faster, more accurate, and scalable data migrations.

How can Kanerika help with a data migration framework?

Kanerika supports data migration through accelerators and the FLIP platform. These tools simplify transitions from legacy systems to modern analytics and cloud environments. Kanerika helps reduce migration risk while improving speed and data reliability.

What are best practices for implementing a data migration framework?

Best practices include clear requirement definition, phased execution, and continuous testing during migration. Post-migration validation ensures data accuracy and trust. Using automation and proven accelerators helps deliver faster and more reliable outcomes.