Two key processes come into play when managing data: data ingestion and integration. While both processes are essential for effective data management, their approach and objectives differ. Understanding data ingestion vs. data integration is crucial for making informed decisions about your data management strategy.

Data ingestion involves collecting raw data from various sources and loading it into target storage, such as a data warehouse or data lake. This process aims to bring all your data from different sources into a single location, eliminating data silos and providing a holistic view of your data. Data integration, on the other hand, focuses on combining data from multiple sources to create a unified view, which typically involves extracting, transforming, and loading the data (the ETL process).

What is Data Ingestion?

Data ingestion is a fundamental process in data management that involves extracting raw data from various sources and loading it into a database, data warehouse, or data lake. This raw data, also known as the source data, can come from databases, APIs, log files, sensors, social media, or IoT devices. The primary objective of data ingestion is to bring all this diverse and scattered data into a single location, eliminating data silos and enabling organizations to have a holistic view of their data.

Data ingestion can be done manually, with data extracted and loaded by hand, or it can be automated using data ingestion tools. Automated ingestion collects the data and applies any light transformation without human intervention, making the process more efficient and reliable. The automated flow is often referred to as a data pipeline: data moves continuously from the source to the target storage.
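To make the idea of an automated pipeline concrete, here is a minimal sketch in Python. It assumes two hypothetical sources (a CSV export and a JSON API response) and uses an in-memory SQLite database as a stand-in for a data lake or staging area. The table name `raw_events` and the function `ingest_raw` are illustrative, not part of any particular tool.

```python
import csv
import io
import json
import sqlite3


def ingest_raw(conn, source_name, records):
    """Load records unchanged into a raw landing table, tagged with their source."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_events (source TEXT, payload TEXT)"
    )
    rows = [(source_name, json.dumps(record)) for record in records]
    conn.executemany("INSERT INTO raw_events VALUES (?, ?)", rows)
    conn.commit()
    return len(rows)


# Two hypothetical sources with different shapes (assumed for illustration).
csv_export = io.StringIO("id,amount\n1,9.99\n2,24.50\n")
api_response = '[{"order_id": 3, "total": "12.00"}]'

conn = sqlite3.connect(":memory:")  # stand-in for a data lake or staging area
ingest_raw(conn, "csv_export", csv.DictReader(csv_export))
ingest_raw(conn, "orders_api", json.loads(api_response))

row_count = conn.execute("SELECT COUNT(*) FROM raw_events").fetchone()[0]
print(row_count)
```

Note that the payloads are stored as-is: the two sources keep their own field names, and nothing is reconciled at this stage.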

Benefits of Data Ingestion

1. Centralized Data Access:

It brings data from multiple sources into one place, enabling easier access and analysis.

2. Real-Time Insights:

Streaming ingestion allows organizations to act on fresh data instantly, supporting faster decision-making.

3. Improved Data Quality:

Ingestion processes often include basic validation and cleansing, helping maintain cleaner, more reliable data.

4. Enhanced Efficiency:

Automated ingestion reduces manual work, accelerates data availability, and improves operational workflows.

5. Supports AI & Analytics:

Ingested data fuels machine learning models and analytics tools, driving innovation and business intelligence.

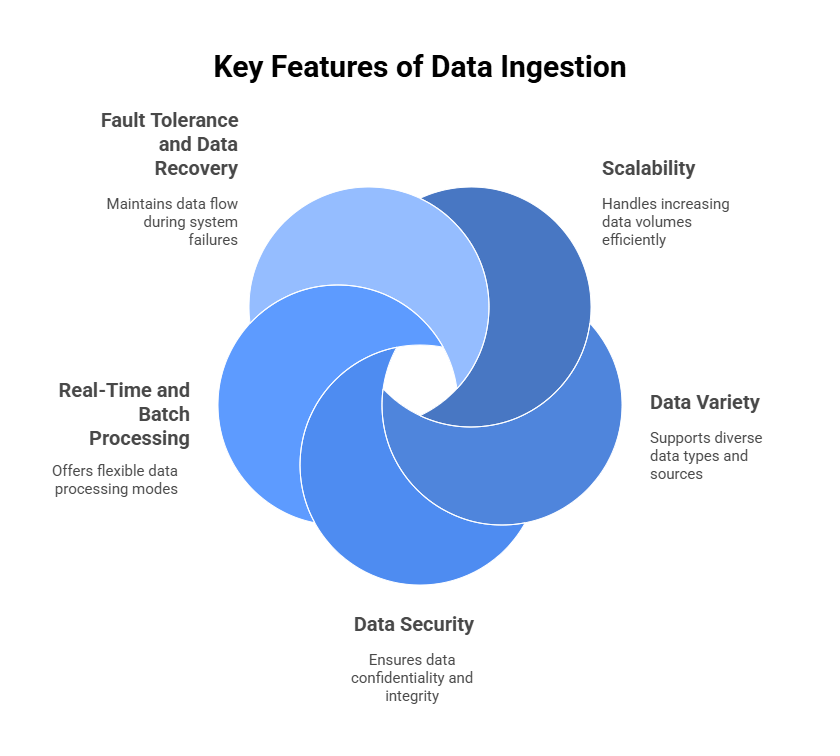

Key Features of Data Ingestion

Data ingestion offers several key features that make it an essential component of data management strategies. These features enable organizations to handle large volumes of data effectively, support different data types, and ensure data security and privacy.

1. Scalability

One of the key features of data ingestion is its scalability. As organizations accumulate more data, it is crucial to have a data ingestion process that can handle increasing volumes without sacrificing performance. Scalability allows organizations to expand their data storage and processing capabilities as their needs grow, ensuring a smooth and efficient data ingestion workflow.

2. Data Variety

Data ingestion supports various types of data, including structured, semi-structured, and unstructured data. This feature enables organizations to collect data from diverse sources, such as databases, APIs, logs, and social media platforms. By ingesting data of different formats and structures, organizations can gain insights from a wide range of sources and have a comprehensive view of their data.

3. Data Security

Data security and privacy are essential aspects of data management. Ingestion incorporates security measures, such as encryption and protocols like Transport Layer Security (TLS, the successor to the now-deprecated Secure Sockets Layer, SSL) and HTTPS, to ensure the confidentiality and integrity of the ingested data. These measures protect sensitive information from unauthorized access and help maintain data privacy compliance.
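As a rough illustration of these measures, the sketch below uses Python's standard `ssl` and `hashlib` modules. It builds a client-side TLS context that verifies certificates (TLS is the successor to SSL) and computes a SHA-256 checksum that a pipeline could compare before and after transfer to detect corruption. The helper names are assumptions made for this example.

```python
import hashlib
import ssl


def make_tls_context():
    """Build a client TLS context that verifies server certificates and hostnames."""
    context = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def sha256_checksum(payload: bytes) -> str:
    """Return a hex digest used to check that a payload arrived unmodified."""
    return hashlib.sha256(payload).hexdigest()


context = make_tls_context()
sent = b'{"sensor": "t-17", "reading": 21.4}'
received = sent  # in a real pipeline this would come off the network
integrity_ok = sha256_checksum(sent) == sha256_checksum(received)
```

The context would then be passed to whatever HTTP or socket client performs the transfer.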

4. Real-Time and Batch Processing Support

Data ingestion systems can operate in both real-time and batch modes. Real-time ingestion allows immediate data processing for use cases like fraud detection or live analytics, while batch processing is ideal for large datasets that don’t require instant updates. This flexibility enables organizations to choose the best processing mode based on their business requirements and use cases.
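The difference between the two modes can be sketched in a few lines of Python. This is a simplified illustration, not how any specific platform implements it: `batch_ingest` groups records into fixed-size chunks to be loaded together, while `stream_ingest` hands each record to a handler as soon as it arrives. Both function names are hypothetical.

```python
from itertools import islice


def batch_ingest(source, batch_size):
    """Yield fixed-size lists of records; each list is loaded in one go."""
    iterator = iter(source)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def stream_ingest(source, handle):
    """Pass each record to the handler as soon as it arrives."""
    count = 0
    for record in source:
        handle(record)
        count += 1
    return count


events = [{"id": i} for i in range(5)]

batches = list(batch_ingest(events, batch_size=2))  # three loads: 2 + 2 + 1 records

seen = []
stream_ingest(events, seen.append)  # five handler calls, one per record
```

Batching trades latency for fewer, larger writes; streaming does the opposite.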

5. Fault Tolerance and Data Recovery

A robust data ingestion framework includes mechanisms for fault tolerance and recovery. In the event of system failures, network issues, or data corruption, the ingestion process can resume without data loss. This ensures continuous data flow, minimizes downtime, and maintains the reliability and accuracy of your data pipelines.
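One common way to achieve this is to combine retries with a checkpoint that records how far the load has progressed. The following Python sketch assumes an in-memory dictionary as the checkpoint store and a hypothetical `flaky_load` target that fails once. A production pipeline would persist the checkpoint durably.

```python
def ingest_with_checkpoint(records, load, checkpoint, max_retries=3):
    """Load records in order, resuming after the last committed offset."""
    start = checkpoint.get("offset", 0)
    for offset in range(start, len(records)):
        for attempt in range(1, max_retries + 1):
            try:
                load(records[offset])
                break
            except ConnectionError:
                if attempt == max_retries:
                    raise  # give up; the checkpoint still marks our progress
        checkpoint["offset"] = offset + 1  # commit only after a successful load
    return checkpoint["offset"]


loaded = []
failures = {"remaining": 1}


def flaky_load(record):
    # Hypothetical target that fails once to simulate a network issue.
    if record == "c" and failures["remaining"] > 0:
        failures["remaining"] -= 1
        raise ConnectionError("temporary outage")
    loaded.append(record)


checkpoint = {}
ingest_with_checkpoint(["a", "b", "c", "d"], flaky_load, checkpoint)
```

Because the offset is committed after the load, a crash between the two steps would replay one record on restart. This gives at-least-once delivery, so the target should tolerate duplicates.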

What is Data Integration?

Data integration is a crucial process that involves combining data from different sources to create a unified view of the data. It goes beyond the simple act of data ingestion and includes extracting data from various sources, transforming it into a consistent format, and loading it into a target system such as a data warehouse or data lake. This unified view gives organizations a holistic understanding of their data, facilitating better decision-making and analysis.

Why is Data Integration important?

Data integration is pivotal in enabling organizations to gain valuable insights from their data. By combining data from disparate sources, businesses can break down data silos and create a comprehensive view of their operations, customers, and market trends. This unified view allows for a more accurate analysis of data, leading to informed business strategies, improved efficiency, and better customer experiences.

In addition, data integration helps organizations overcome challenges related to data quality and consistency. By transforming and standardizing data from different sources, organizations can ensure data accuracy and reliability, enhancing the overall quality of their analytics. Furthermore, data integration supports data governance efforts, providing a structured approach to data management and ensuring compliance with regulatory requirements.

Benefits of Data Integration

Data integration offers several key benefits to organizations:

1. Improved decision-making:

By providing a unified view of data, data integration enables organizations to make informed decisions based on accurate and comprehensive insights.

2. Enhanced data quality:

Data integration processes include data cleansing and transformation, resulting in improved data quality and consistency.

3. Streamlined operations:

With a unified view of data, organizations can streamline their operations by eliminating redundant processes and optimizing resource allocation.

4. Better customer experiences:

Data integration allows organizations to gain a deeper understanding of their customers by consolidating data from various touchpoints, enabling personalized and targeted experiences.

5. Increased efficiency:

By automating data integration processes, organizations can save time and resources, freeing up valuable personnel for other tasks.

Data integration plays a vital role in enabling organizations to leverage the full potential of their data. By combining data from multiple sources and creating a unified view, businesses can make more informed decisions, improve operational efficiency, and enhance customer experiences. With the right data integration strategy and tools in place, organizations can unlock the true value of their data and gain a competitive edge in the market.

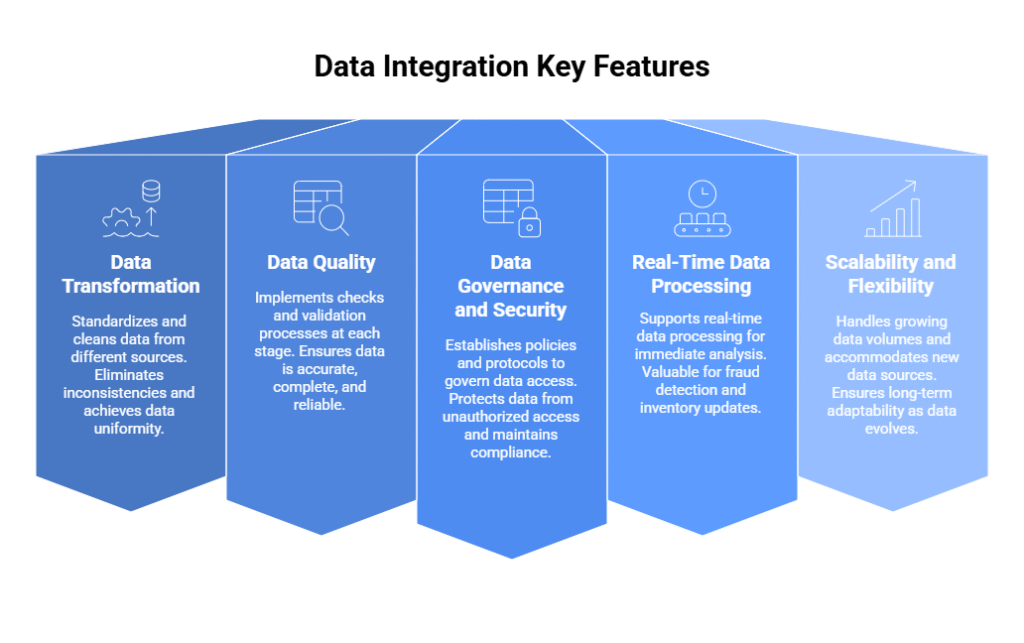

Key Features of Data Integration

Data integration offers several key features that are essential for efficient and effective data management. These features contribute to the smooth integration of data from various sources, ensuring data transformation, quality, and security.

1. Data Transformation

Data transformation is a crucial feature of data integration that facilitates the standardization and cleaning of data from different sources. By transforming data into a consistent format, organizations can eliminate inconsistencies and achieve data uniformity, making it more usable for analysis and decision-making.
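As an illustration, the Python sketch below standardizes a customer record whose email casing, name spacing, and date format differ from the target convention. The list of accepted date formats and the field names are assumptions chosen for this example.

```python
from datetime import datetime

# Date formats assumed to occur in the source systems.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")


def standardize_date(value):
    """Return the date as ISO 8601 (YYYY-MM-DD), trying each known format."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {value!r}")


def standardize_customer(record):
    """Normalize casing, whitespace, and date format for one record."""
    return {
        "email": record["email"].strip().lower(),
        "name": " ".join(record["name"].split()).title(),
        "signup_date": standardize_date(record["signup_date"]),
    }


raw = {
    "email": " Ana@Example.COM ",
    "name": "ana  de souza",
    "signup_date": "03/02/2024",  # day/month/year in this source
}
clean = standardize_customer(raw)
```

Ambiguous inputs such as `03/02/2024` are exactly why the expected format per source has to be agreed on up front rather than guessed.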

2. Data Quality

Data quality is another important aspect of data integration. It involves implementing checks and validation processes at each stage of the integration process to ensure that the data is accurate, complete, and reliable. By maintaining high-quality data, organizations can make informed decisions and avoid costly errors that may arise from using inaccurate or incomplete data.
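A validation step of this kind might look like the following Python sketch. The rules (required fields, a non-negative numeric `amount`) are hypothetical examples. Real checks depend on the dataset.

```python
def validate_record(record, required_fields):
    """Return a list of data quality problems found in one record."""
    problems = []
    for field in required_fields:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    amount = record.get("amount")
    if amount is not None and amount != "":
        try:
            if float(amount) < 0:
                problems.append("negative amount")
        except (TypeError, ValueError):
            problems.append("amount is not numeric")
    return problems


def split_valid_invalid(records, required_fields):
    """Separate clean records from rejected ones, keeping the reasons."""
    valid, rejected = [], []
    for record in records:
        problems = validate_record(record, required_fields)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected


records = [
    {"id": 1, "amount": "10.5"},
    {"id": 2, "amount": "-3"},
    {"id": None, "amount": "abc"},
]
valid, rejected = split_valid_invalid(records, required_fields=["id", "amount"])
```

Keeping the rejection reasons alongside each record makes it possible to report on data quality instead of silently dropping rows.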

3. Data Governance and Security

Data governance and security are paramount in data integration. Organizations must establish policies and protocols to govern the access, storage, and usage of data. This ensures that data is protected from unauthorized access and maintains compliance with privacy regulations. By implementing robust data security measures, organizations can safeguard sensitive information and build trust with their stakeholders.

4. Real-Time Data Processing

Modern data integration tools often support real-time or near-real-time data processing, enabling organizations to access the most up-to-date information for immediate analysis and action. This is especially valuable for use cases like fraud detection, inventory updates, or personalized customer interactions.

5. Scalability and Flexibility

A robust data integration framework should be scalable to handle growing data volumes and flexible enough to accommodate new data sources, formats, and business needs. This ensures long-term adaptability as the organization’s data landscape evolves.

Through the seamless integration of data from various sources, organizations can unlock valuable insights, drive business growth, and gain a competitive edge in today’s data-driven world.

Data Ingestion vs Data Integration: Key Differences

Data ingestion and data integration are two essential processes in data management strategies. While they both involve handling data from multiple sources, they have distinct objectives and approaches. Understanding the key differences between data ingestion and data integration is crucial for organizations to make informed decisions and effectively manage their data.

Data Ingestion:

Data ingestion focuses on efficiently collecting raw data from various sources and loading it into a target storage, such as a database, data warehouse, or data lake. The primary goal is to bring data from different sources into a centralized location, eliminating data silos and enabling a holistic view of the data. Data ingestion can be performed manually or automated using specialized data ingestion tools.

Data Integration:

On the other hand, data integration involves combining data from different sources to create a unified view of the data. It includes extracting data from multiple sources, transforming it into a consistent format, and loading it into a target system, typically a data warehouse or data lake. The objective of data integration is to harmonize data from various sources, ensuring data accuracy and enabling better decision-making and analysis.
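The contrast between the two processes can be sketched in Python. In this simplified example, `ingest` only copies records into a landing zone, while `integrate` merges records that describe the same customer into one view. The source names, fields, and the rule that later non-empty values win are all assumptions made for illustration.

```python
def ingest(landing_zone, source, records):
    """Ingestion: copy records as-is into the landing zone, tagged by source."""
    for record in records:
        landing_zone.append({"source": source, "payload": dict(record)})


def integrate(landing_zone):
    """Integration: merge payloads that share a customer_id into one view."""
    unified = {}
    for entry in landing_zone:
        payload = entry["payload"]
        customer = unified.setdefault(payload["customer_id"], {})
        for key, value in payload.items():
            if value not in (None, ""):
                customer[key] = value  # later non-empty values fill or overwrite
    return unified


landing_zone = []
ingest(landing_zone, "crm", [{"customer_id": 7, "name": "Ana", "phone": ""}])
ingest(landing_zone, "erp", [{"customer_id": 7, "phone": "555-0100", "balance": 120.0}])

unified = integrate(landing_zone)
```

After ingestion the landing zone holds two separate raw records. Only after integration is there a single customer entry combining the name from one system with the phone number and balance from the other.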

Enhance Data Accuracy and Efficiency With Expert Integration Solutions!

Partner with Kanerika Today.

Key Differences Between Data Ingestion and Data Integration

| Aspect | Data Ingestion | Data Integration |
| --- | --- | --- |
| Definition | The process of collecting raw data from various sources | The process of combining data from multiple sources into a unified view |
| Primary Goal | Move data from source to destination quickly | Provide a consistent, clean, and usable dataset |
| Focus Area | Speed, connectivity, and real-time or batch intake | Accuracy, consistency, and harmonization |
| Data State | Often raw or unprocessed | Cleaned, transformed, and standardized |
| Common Tools | Apache Kafka, NiFi, Logstash, AWS Kinesis | Talend, Informatica, Azure Data Factory, dbt |
| Timing | Near real-time or scheduled batch loads | Scheduled, rule-based integration |
| Complexity | Lower complexity: mainly focused on transfer | Higher complexity: includes transformation, mapping, and schema alignment |
| Use Case Example | Streaming IoT data into a data lake | Merging customer data from CRM, ERP, and web platforms |
| Data Quality Handling | Minimal: raw data may contain duplicates or errors | Strong emphasis on validation, cleansing, and enrichment |
| Output Destination | Data lakes, staging areas, message queues | Data warehouses, analytical databases, master data hubs |
| Skill Set Required | Engineers familiar with pipelines and APIs | Data engineers, architects, and integration specialists |
| Goal in Data Stack | Capture data efficiently | Deliver unified, analysis-ready datasets |


Challenges of Data Ingestion and Data Integration

Both data ingestion and data integration processes come with their fair share of challenges.

Let’s explore some of the common hurdles faced in these areas:

1. Limited Data Quality Control in Data Ingestion

Data ingestion involves collecting raw data from various sources, which can sometimes lead to issues with data quality. As the volume of data increases, ensuring the accuracy and consistency of the ingested data becomes crucial. Inaccurate or incomplete data can hinder decision-making and analysis. Therefore, organizations must implement robust data quality control measures to validate and cleanse the ingested data.

2. Data Compatibility and System Integration Challenges in Data Integration

Data integration is a complex process that requires harmonizing data from different sources into a unified view. One significant challenge is ensuring compatibility between various systems and data formats. Integrating data from disparate sources may involve incompatible schemas, data structures, and system constraints. It requires careful mapping and transformation of data to ensure seamless integration and avoid inconsistencies.
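A small Python sketch shows what such mapping can look like. The two source schemas, the `FIELD_MAPS` table, and the choice to store the key as a string are hypothetical. The point is that each source needs an explicit mapping onto the target schema, and unmapped fields should be surfaced rather than ignored.

```python
# Hypothetical per-source mappings from source field names to the target schema.
FIELD_MAPS = {
    "crm": {"CustomerID": "customer_id", "FullName": "name", "EMail": "email"},
    "erp": {"cust_no": "customer_id", "cust_name": "name", "mail_addr": "email"},
}


def map_to_target(source, record):
    """Rename a record's fields to the target schema and align the key type."""
    mapping = FIELD_MAPS[source]
    unmapped = set(record) - set(mapping)
    if unmapped:
        raise KeyError(f"no mapping for fields: {sorted(unmapped)}")
    mapped = {target: record[src] for src, target in mapping.items() if src in record}
    # The CRM stores the key as an integer and the ERP as text; use one type.
    mapped["customer_id"] = str(mapped["customer_id"])
    return mapped


from_crm = map_to_target("crm", {"CustomerID": 42, "FullName": "Ana", "EMail": "a@x.io"})
from_erp = map_to_target("erp", {"cust_no": "42", "cust_name": "Ana", "mail_addr": "a@x.io"})
```

Once both records share field names and key types, they can be compared and merged reliably.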

3. Scalability and Performance Issues

Both data ingestion and data integration can face scalability and performance challenges. As the volume of data grows, the processes need to handle large datasets efficiently without compromising performance. It is crucial that the underlying infrastructure, hardware, and software can scale with that growth. Organizations need to invest in scalable solutions and optimize the performance of their data ingestion and integration pipelines.

4. Real-Time Processing Complexities

Implementing real-time data ingestion and integration is challenging due to the need for low-latency processing, high availability, and synchronization across systems. Handling streaming data from multiple sources while maintaining consistency, deduplication, and order of events requires advanced architecture and monitoring. Without the right tools, organizations may struggle to deliver timely insights or respond quickly to operational changes.
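To illustrate deduplication and event ordering, the Python sketch below buffers incoming events in a min-heap and releases them in timestamp order once they are older than a lateness allowance. It is a simplified model under stated assumptions: events are `(event_id, timestamp)` pairs, timestamps are plain numbers, and the set of seen IDs is kept in memory without expiry.

```python
import heapq


def dedupe_and_order(events, max_lateness):
    """Drop repeated event IDs and emit (timestamp, event_id) in timestamp order.

    An event is held back until the newest timestamp seen so far is at least
    `max_lateness` ahead of it, which gives late arrivals a chance to slot in.
    """
    seen_ids = set()
    buffer = []  # min-heap of (timestamp, event_id)
    newest = None
    for event_id, timestamp in events:
        if event_id in seen_ids:
            continue  # duplicate delivery, ignore
        seen_ids.add(event_id)
        heapq.heappush(buffer, (timestamp, event_id))
        newest = timestamp if newest is None else max(newest, timestamp)
        watermark = newest - max_lateness
        while buffer and buffer[0][0] <= watermark:
            yield heapq.heappop(buffer)
    while buffer:  # flush whatever is left when the stream ends
        yield heapq.heappop(buffer)


# "b" arrives after "c", and "a" is delivered twice.
incoming = [("a", 1), ("c", 3), ("b", 2), ("a", 1), ("d", 6)]
ordered = list(dedupe_and_order(incoming, max_lateness=2))
```

Events that arrive later than the allowance can still come out of order, so the allowance is a trade-off between latency and correctness.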

5. Security and Compliance Risks

When dealing with data ingestion and integration, maintaining data security and regulatory compliance becomes a major concern. Transferring data across networks, platforms, and regions exposes it to potential breaches. Ensuring end-to-end encryption, access controls, and compliance with frameworks like GDPR, HIPAA, or CCPA can be difficult—especially when integrating data from third-party or legacy systems.


Benefits of Automation in Data Management

Automation plays a crucial role in data management, offering numerous benefits for organizations seeking to streamline their processes and improve efficiency. Whether it’s data ingestion, data integration, or overall data management, automation can provide significant advantages for your business.

1. Time and Resource Savings

One of the key benefits of automation in data management is the ability to save time and resources. By automating data ingestion and integration processes, organizations can eliminate manual data entry and repetitive tasks. This allows professionals to focus on higher-value activities that require their expertise, ultimately increasing productivity and freeing up valuable time and resources.

2. Enhanced Accuracy and Data Quality

Automation also improves data accuracy and quality. Manual data entry is prone to human errors, such as typos or data inconsistencies. By automating data management processes, organizations can ensure data consistency and eliminate common mistakes, resulting in more accurate insights and analysis. Automation can also include data validation and cleansing processes, further enhancing the overall quality of the data.

3. Improved Data Security

Data security is a top priority for any organization, and automation can help enhance data protection. With automated data management, organizations can implement robust security measures, such as encryption and secure data transfer protocols, to safeguard sensitive information. Automation also reduces the risk of unauthorized access or data breaches, ensuring that data remains secure throughout the entire data management process.

Data Integration and Data Ingestion Tools

When it comes to data integration and data ingestion, organizations have a range of tools at their disposal. These tools are designed to facilitate the process of combining data from various sources and loading it into a target storage. By choosing the right tool, organizations can streamline their data management processes and improve their overall efficiency.

Popular Data Integration Tools

Data integration tools are instrumental in harmonizing data from multiple sources and providing a unified view. Some popular data integration tools include:

- Informatica PowerCenter: A comprehensive data integration platform that offers end-to-end functionality for data integration.

- Talend Data Integration: A tool, long known for its open-source Talend Open Studio edition (retired in 2024), that allows users to connect, access, and transform data from various sources.

- Microsoft SQL Server Integration Services (SSIS): A powerful ETL (Extract, Transform, Load) tool for data integration within the Microsoft SQL Server environment.

- Apache NiFi: Ideal for real-time data flow automation with a user-friendly drag-and-drop interface.

- Fivetran: Fully managed ELT platform with pre-built connectors and minimal maintenance.

- IBM InfoSphere DataStage: Enterprise-grade integration with high-performance parallel processing for complex data workloads.

Effective Data Ingestion Tools

Data ingestion tools are vital in collecting raw data from different sources and loading it into target storage for further analysis. Some effective data ingestion tools include:

- Apache Kafka: A distributed streaming platform that allows for scalable and reliable data ingestion.

- AWS Glue: A fully managed extract, transform, and load (ETL) service that makes it easy to prepare and load data for analytics.

- Google Cloud Dataflow: A serverless data processing service that allows for easy data ingestion and transformation.

- Azure Data Factory: Microsoft’s cloud-native ETL and data movement service with broad connector support.

- Apache Flume: Specialized in ingesting and transporting large volumes of log data into Hadoop environments.

Choosing the right data integration and data ingestion tools depends on various factors, such as the organization’s technical expertise, budget, and specific business needs. It is essential to evaluate the features, scalability, and compatibility of these tools to ensure they align with your data management requirements.


Making the Right Choice for Your Business

When it comes to managing your data, choosing between data ingestion and data integration can have a significant impact on your business. Understanding your specific needs and goals is crucial in making the right decision. Data ingestion is ideal for quickly transferring data from multiple sources to a central repository. It allows you to collect raw data and load it into target storage, eliminating data silos and providing a holistic view of your data.

On the other hand, data integration focuses on combining data from different sources to create a unified view. It involves data transformation, cleansing, and harmonization processes to ensure data consistency and accuracy. With data integration, you can have a single source of truth, enabling better decision-making and analysis.

When considering whether to choose data ingestion or data integration, it’s important to assess your business needs. If you require immediate access to raw data from various sources, data ingestion may be the right choice. However, if you need a comprehensive and unified view of your data, data integration is the way to go.

To determine which approach suits your business best, ask yourself the following questions:

- What are your data requirements? Consider the volume, variety, and types of data you need to work with.

- Do you need real-time access to raw data? Data ingestion allows you to collect and load data quickly, while data integration might involve additional processing time.

- How scalable does your data solution need to be? Consider the growth of your data and whether your chosen approach can handle increasing volumes efficiently.

- What resources and expertise are available in your organization? Data integration may require more technical knowledge and resources than data ingestion.

By evaluating your business needs and goals, you can make an informed decision on whether to prioritize data ingestion or data integration. Remember, automation tools can also play a significant role in streamlining these processes, improving efficiency, and ensuring data accuracy and security.


Case Studies: Kanerika’s Successful Data Integration Projects

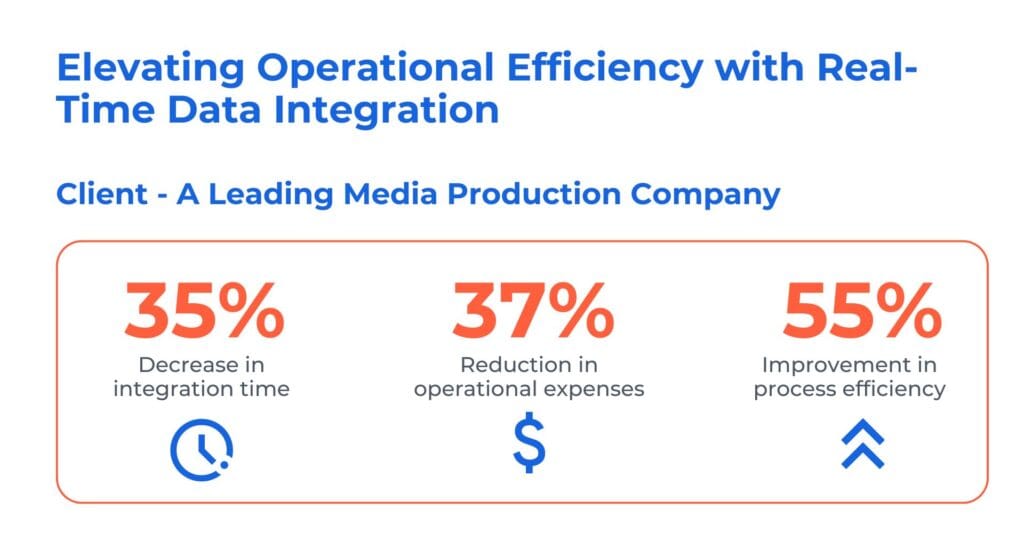

1. Unlocking Operational Efficiency with Real-Time Data Integration

The client is a prominent media production company operating in the global film, television, and streaming industry. The company faced a significant challenge while upgrading its CRM to the new MS Dynamics CRM: the complexity of accessing multiple systems slowed down response times and raised security and efficiency concerns.

Kanerika resolved the problem by leveraging tools like Informatica and Dynamics 365. Here’s how we used our real-time data integration solution to streamline and expedite operations and reduce operating costs while maintaining data security.

- Implemented iPaaS integration with Dynamics 365 connector, ensuring future-ready app integration and reducing pension processing time

- Enhanced Dynamics 365 with real-time data integration to paginated data, guaranteeing compliance with PHI and PCI

- Streamlined exception management, enabled proactive monitoring, and automated third-party integration, driving efficiency

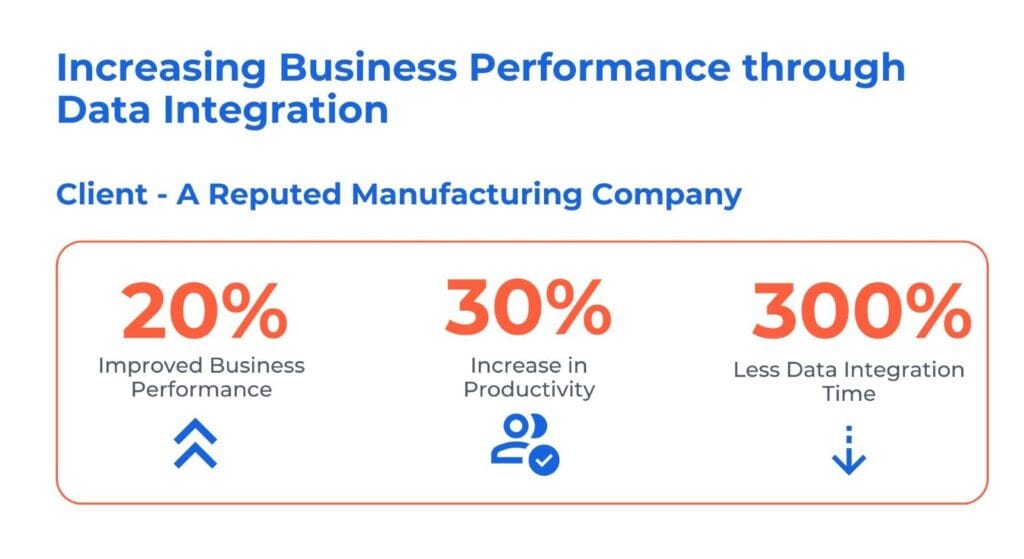

2. Enhancing Business Performance through Data Integration

The client is a prominent edible oil manufacturer and distributor, with a nationwide reach. The usage of both SAP and non-SAP systems led to inconsistent and delayed data insights, affecting precise decision-making. Furthermore, the manual synchronization of financial and HR data introduced both inefficiencies and inaccuracies.

Kanerika addressed the client’s challenges by delivering the following data integration solutions:

- Consolidated and centralized SAP and non-SAP data sources, providing insights for accurate decision-making

- Streamlined integration of financial and HR data, ensuring synchronization and enhancing overall business performance

- Automated integration processes to eliminate manual efforts and minimize error risks, saving cost and improving efficiency

Kanerika: The Trusted Choice for Streamlined and Secure Data Integration

At Kanerika, we excel in unifying your data landscapes, leveraging cutting-edge tools and techniques to create seamless, powerful data ecosystems. Our expertise spans the most advanced data integration platforms, ensuring your information flows efficiently and securely across your entire organization.

With a proven track record of success, we’ve tackled complex data integration challenges for diverse clients in banking, retail, logistics, healthcare, and manufacturing. Our tailored solutions address the unique needs of each industry, driving innovation and fueling growth.

We understand that well-managed data is the cornerstone of informed decision-making and operational excellence. That’s why we’re committed to building and maintaining robust data infrastructures that empower you to extract maximum value from your information assets.

Choose Kanerika for data integration that’s not just about connecting systems, but about unlocking your data’s full potential to propel your business forward.

FAQs

What is the difference between ingestion and integration?

Ingestion is like *consuming* data: getting it into your system. Integration is about making that data *useful*: connecting it with other systems and making it work together. Think of ingestion as eating, and integration as digestion and using the nutrients. They’re distinct steps in a complete data pipeline.

What are the 2 main types of data ingestion?

Data ingestion boils down to two core approaches: batch and streaming. Batch processing gathers data in large chunks at scheduled intervals, like a nightly summary. Streaming ingestion, conversely, handles data in real-time as it arrives, continuously updating systems. The best choice depends on your needs for speed versus processing efficiency.

What is the difference between data acquisition and data integration?

Data acquisition is the process of gathering raw data from various sources. Data integration, on the other hand, focuses on combining and harmonizing that acquired data into a unified, usable form. Think of acquisition as collecting ingredients, while integration is the act of preparing a delicious meal from those ingredients. The result is a coherent and insightful whole, rather than disparate parts.

What is the difference between data integration and data aggregation?

Data integration combines data from diverse sources into a unified view, focusing on consistency and relationships between data points. Data aggregation, conversely, summarizes data from multiple sources into a concise, higher-level representation, often losing granular detail in the process. Think of integration as building a complete puzzle, while aggregation is creating a summary of the puzzle’s overall image. The key difference lies in the level of detail preserved.

Is data ingestion the same as ETL?

No, data ingestion is a narrower concept than ETL. Ingestion simply means getting data into your system; it’s the *first* step. ETL (Extract, Transform, Load) encompasses ingestion *plus* the cleaning, transforming, and loading into a target database. Think of ingestion as bringing the raw ingredients home, while ETL is the whole process of preparing and cooking a meal.

What is another name for data integration?

Data integration goes by many names! You might hear it called data consolidation, where disparate sources are combined. It’s also sometimes referred to as data unification, emphasizing the creation of a single, consistent view. Essentially, it’s all about bringing data together.

What do you mean by data integration?

Data integration is the process of combining data from different sources into a unified view. Think of it as assembling a puzzle: each piece represents a different dataset, and the final picture is a comprehensive, actionable understanding. This eliminates data silos and allows for more efficient analysis and decision-making.

Which tool is used for data ingestion?

Data ingestion tools vary greatly depending on the data source and format. We use a range of tools, from ETL (Extract, Transform, Load) pipelines for structured data to specialized streaming platforms for real-time data. The best choice depends on your specific needs and data volume. Ultimately, it’s about selecting the most efficient way to get your data into the system.

What is ingestion in API?

In APIs, ingestion is the process of receiving and handling data from external sources. Think of it as the API “swallowing” information, like a digital pipeline bringing in raw data. This data then undergoes processing before being used within the API’s system or passed along to other parts of an application. Essentially, it’s the crucial first step in getting data *into* the API.

What is the difference between data ingestion and data extraction?

Data ingestion is like *feeding* data into a system: getting it in from various sources and preparing it for use. Data extraction is the *opposite*: retrieving specific data *from* a system, usually for analysis or transfer elsewhere. Think of ingestion as input and extraction as output. They are two sides of the same data management coin.

What is the difference between data collection and ingestion?

Data collection is the broad process of gathering raw information, like conducting surveys or scraping websites. Ingestion, however, is the *specific* act of loading that collected data into a system for processing and analysis; it’s the pipeline that gets the data *in*. Think of collection as finding gold nuggets, and ingestion as putting them into a refining mill.

Which are the different ways of ingesting the data for integration?

Data integration relies on several ingestion methods. We can pull data directly from sources (like databases or APIs), push data from sources that actively transmit it, or use change data capture to only ingest modified records, optimizing efficiency. The best method depends on the source’s capabilities and your real-time needs.

What is data ingestion?

Data ingestion is the process of getting data from various sources into your system. Think of it as the “feeding” stage: you’re collecting raw materials (data) to be processed later. This involves cleaning, transforming, and loading it into a usable format for analysis or storage, preparing it for its next step in the data lifecycle.

What is the difference between API and integration?

An API is like a restaurant menu: it lists the available services a system offers. Integration is the actual act of ordering and receiving the food (data/functionality) from that menu, connecting different systems. Essentially, an API *enables* integration; integration *utilizes* the API. Think of APIs as the tools, and integration as the process of building something with those tools.

What is data ingestion vs data preparation?

Data ingestion is the process of getting raw data *into* your system; think of it as bringing groceries home from the store. Data preparation, on the other hand, is transforming those raw groceries (data) into a usable meal (clean, organized, and analyzable data). It’s about cleaning, transforming, and enriching the data to fit your needs. Ingestion is the first step; preparation makes it edible.