Snowflake has emerged as a transformative force in data warehousing. Founded in 2012, Snowflake has revolutionized data storage and analysis, offering cloud-based solutions that separate storage and compute functionalities, allowing businesses to scale dynamically based on their needs.

This blog aims to unpack the innovative architecture of Snowflake, which combines elements of both shared-disk and shared-nothing models to provide a flexible, scalable solution for modern data needs. We will explore how each layer of this architecture works and the unique advantages it brings to businesses. From its ability to handle vast amounts of data with ease to its cost-effective scalability options, understanding Snowflake’s architecture will reveal why it stands out as a leading platform in the data warehousing space.

Unveiling Snowflake’s Hybrid Architecture

Snowflake’s design combines two conventional database architectures, shared-disk and shared-nothing, to take advantage of the strengths of each and create an effective, scalable platform for data warehousing.

Shared-Disk vs. Shared-Nothing Architectures

Shared-Disk Architecture: In this model, a central storage repository is accessed by all computing nodes. Data management is simplified because each node can read from or write to the same data source. However, there may be bottlenecks when many nodes try to use the central storage concurrently.

Shared-Nothing Architecture: In this highly scalable and fault-tolerant model, each node has its own CPU, memory, and disk storage, and the data is partitioned across the nodes. Because each node works mainly on its own partition, nodes contend far less for shared resources; however, partitioning requires more sophisticated strategies for distributing data and for routing and coordinating queries.
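To make the shared-nothing trade-off concrete, here is a minimal Python sketch. It is a toy model, not how any particular database routes data: it hash-partitions rows across a hypothetical three-node cluster (`NUM_NODES`) by customer key, lets each node aggregate only its own rows, and then merges the per-node results.

```python
from collections import defaultdict
from zlib import crc32

NUM_NODES = 3  # hypothetical cluster size


def node_for(key: str) -> int:
    """Route a key to a node with a stable hash, so a key always lands on the same node."""
    return crc32(key.encode("utf-8")) % NUM_NODES


def partition(rows):
    """Distribute (customer, amount) rows into node-local storage."""
    nodes = [[] for _ in range(NUM_NODES)]
    for customer, amount in rows:
        nodes[node_for(customer)].append((customer, amount))
    return nodes


def local_totals(node_rows):
    """Each node aggregates only its own partition; nothing is shared."""
    totals = defaultdict(float)
    for customer, amount in node_rows:
        totals[customer] += amount
    return dict(totals)


def total_per_customer(rows):
    # All rows for a given customer live on one node, so the per-node
    # results never overlap and can simply be merged.
    result = {}
    for node_rows in partition(rows):
        result.update(local_totals(node_rows))
    return result


rows = [("alice", 10.0), ("bob", 5.0), ("alice", 2.5), ("carol", 7.0)]
print(dict(sorted(total_per_customer(rows).items())))
# {'alice': 12.5, 'bob': 5.0, 'carol': 7.0}
```

The merge is trivial only because the query groups by the partitioning key. Grouping by any other column would force nodes to exchange (shuffle) rows first, which is the extra coordination that partitioning demands.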

Snowflake’s Hybrid Model

Snowflake fuses these two architectures into one system. Like a shared-disk system, it keeps persisted data in a central repository that all compute nodes can access; like a shared-nothing system, it processes queries on multiple independent compute clusters in which each node stores a portion of the data locally while it works. The design is organized into the following layers:

Database Storage Layer

This is the base layer. Following the shared-disk model, it keeps all data in the cloud provider’s storage, which makes storage flexible and cost-effective. As data is loaded, Snowflake automatically organizes it into compressed micro-partitions and records metadata about each one, which it uses to skip partitions a query does not need.
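The metadata-driven skipping (pruning) described above can be illustrated with a small Python toy. It is a simplified model, not Snowflake’s storage format: rows are split in load order into tiny partitions (`PARTITION_SIZE` is a hypothetical value far smaller than a real micro-partition, and compression is omitted), each partition records the minimum and maximum of a date column, and a range query skips every partition whose range cannot match.

```python
from dataclasses import dataclass

PARTITION_SIZE = 3  # hypothetical rows per toy partition


@dataclass
class MicroPartition:
    rows: list       # stored (order_id, order_date) rows
    min_date: str    # per-partition metadata
    max_date: str


def build_partitions(rows):
    """Split rows, in load order, into fixed-size partitions with min/max metadata."""
    parts = []
    for i in range(0, len(rows), PARTITION_SIZE):
        chunk = rows[i:i + PARTITION_SIZE]
        dates = [d for _, d in chunk]
        parts.append(MicroPartition(chunk, min(dates), max(dates)))
    return parts


def scan(parts, start, end):
    """Return matching rows and how many partitions were actually read.

    A partition whose [min, max] range cannot overlap [start, end] is pruned
    from its metadata alone, without reading its rows.
    """
    matches, scanned = [], 0
    for p in parts:
        if p.max_date < start or p.min_date > end:
            continue  # pruned
        scanned += 1
        matches.extend(r for r in p.rows if start <= r[1] <= end)
    return matches, scanned


rows = [(i, f"2024-01-{i:02d}") for i in range(1, 10)]  # 9 orders, one per day
parts = build_partitions(rows)
result, scanned = scan(parts, "2024-01-04", "2024-01-05")
print(len(parts), scanned, result)  # 3 1 [(4, '2024-01-04'), (5, '2024-01-05')]
```

Only one of the three partitions is read. Pruning works best when related values land in the same partitions, which is why load order and clustering affect query performance.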

Query Processing Layer (Virtual Warehouses)

Each virtual warehouse is an independent compute cluster built on the shared-nothing model. Warehouses do not share compute resources with one another, so a heavy workload on one warehouse does not slow down queries on another, and the contention typical of shared-disk systems is avoided. Within a warehouse, nodes process queries in parallel, and many warehouses can run concurrently against the same data, which enables massive scalability.

Core Features of Snowflake Architecture

Snowflake’s architecture is renowned for its robust features that enhance scalability, enable efficient data sharing, and provide advanced data cloning capabilities. These features collectively contribute to operational efficiency and overall performance, making Snowflake a competitive choice for modern data warehousing needs.

1. Scalability

Elastic Scalability: One of Snowflake’s standout features is its ability to scale computing resources up or down without interrupting service. Because compute is independent of storage, users can resize a virtual warehouse to match workload demand in real time; queries already running finish on the existing resources, while queued and new queries use the new size. This flexibility helps manage costs and maintains performance without over-provisioning resources.
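Resizing decisions are easiest to reason about in credits. The Python sketch below assumes the rates Snowflake has published for standard warehouses at the time of writing (an X-Small uses 1 credit per hour and each larger size doubles the rate) and per-second billing with a 60-second minimum each time a warehouse starts or resumes. Check current pricing before relying on these numbers.

```python
# Credits per hour for standard warehouses, as published at the time of writing.
# Each size doubles the previous one. Verify against current pricing.
CREDITS_PER_HOUR = {
    "X-Small": 1, "Small": 2, "Medium": 4, "Large": 8,
    "X-Large": 16, "2X-Large": 32, "3X-Large": 64, "4X-Large": 128,
}

MINIMUM_BILLED_SECONDS = 60  # per-second billing, 60-second minimum per start/resume


def credits_for_run(size: str, seconds: float) -> float:
    """Credits for one continuous run of a warehouse at `size` lasting `seconds`."""
    billed_seconds = max(seconds, MINIMUM_BILLED_SECONDS)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


print(credits_for_run("Medium", 1800))   # 2.0
print(credits_for_run("Large", 900))     # 2.0 (twice the rate for half the time)
print(credits_for_run("X-Small", 10))    # billed as a full 60 seconds
```

The first two calls show why scaling up need not cost more: if a larger warehouse finishes the same job in proportionally less time, the credits consumed are the same. Real workloads do not always scale linearly, so treat this as an idealized case.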

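Multi-Cluster Shared Data Architecture: Because all compute clusters read the same centrally stored data, multiple clusters can operate on it simultaneously without competing for compute. Multi-cluster warehouses (available in Snowflake’s Enterprise Edition and higher) extend this idea by adding clusters as concurrent demand grows, within a configured minimum and maximum, which supports high concurrency and parallel processing.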
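The scale-out behavior can be sketched as a simple rule in Python. This is a hypothetical policy, not Snowflake’s actual scaling algorithm: `per_cluster_capacity` is an assumed number of queries one cluster handles at once, and the result is clamped to a configured minimum and maximum cluster count.

```python
import math


def clusters_needed(concurrent_queries: int, per_cluster_capacity: int,
                    min_clusters: int, max_clusters: int) -> int:
    """Toy scale-out rule: enough clusters to avoid queuing, clamped to [min, max]."""
    wanted = math.ceil(concurrent_queries / per_cluster_capacity)
    return max(min_clusters, min(max_clusters, wanted))


# Hypothetical workload: each cluster runs 8 queries at once, 1 to 4 clusters allowed.
for load in (0, 5, 20, 100):
    print(load, clusters_needed(load, per_cluster_capacity=8, min_clusters=1, max_clusters=4))
```

At 100 concurrent queries the toy rule hits its maximum of four clusters, so any excess queries would wait in a queue. Because every cluster reads the same stored data, adding one adds compute without copying or repartitioning tables.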

2. Data Sharing

Secure Data Sharing: Snowflake enables secure sharing of live data between accounts. Within the same region, sharing does not require moving or copying data, which strengthens data governance and security. Companies can share selected databases, tables, or views with consumers inside or outside their organization, who can then query that data in real time without any physical transfer.
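The idea that a share grants access by reference rather than by copy can be modeled in a few lines of Python. The `Table` and `Share` classes are hypothetical stand-ins, not Snowflake objects: the consumer holds a read-only handle to the producer’s table, so rows the producer adds are visible immediately without any transfer.

```python
class Table:
    """Hypothetical producer-side table."""

    def __init__(self, rows):
        self.rows = list(rows)


class Share:
    """Toy share: read access to a producer's table by reference, with no copy."""

    def __init__(self, table: Table):
        self._table = table

    def read(self):
        # Return an immutable snapshot of the live data for the consumer.
        return tuple(self._table.rows)


producer_sales = Table([("2024-01-01", 100)])
share = Share(producer_sales)

print(share.read())                          # (('2024-01-01', 100),)
producer_sales.rows.append(("2024-01-02", 250))
print(share.read())                          # new row visible with no reload or copy
```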

Cross-Region and Cross-Cloud Sharing: Snowflake also supports sharing data across geographic regions and even across different cloud providers by replicating the shared data to the consumer’s region. This is a significant advantage for businesses that operate in multiple regions or serve customers in various locations.

3. Data Cloning

Zero-Copy Cloning: Snowflake’s cloning feature lets users create full copies of databases, schemas, or tables without physically duplicating the data. A clone initially references the same micro-partitions as its source; only data that is later changed in either object is written as new storage. This makes cloning well suited to development, testing, and analysis, because teams can experiment on production-sized data at low cost and without affecting the source. Since only metadata is copied, cloning typically completes quickly even for large data sets, which speeds up development cycles and encourages experimentation.
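Copy-on-write is what makes zero-copy cloning cheap. In this hypothetical Python model, a table is a list of references to immutable partitions; cloning copies only that list, and an update writes a new partition for the changed table while every untouched partition stays shared.

```python
class Table:
    """Toy table: an ordered list of references to immutable partitions (tuples)."""

    def __init__(self, partitions):
        self.partitions = list(partitions)  # new list, same partition objects

    def clone(self):
        # Zero-copy: duplicate the list of references, not the partition data.
        return Table(self.partitions)

    def update_partition(self, index, new_rows):
        # Copy-on-write: write a new partition and repoint only this table.
        self.partitions[index] = tuple(new_rows)


prod = Table([(1, 2, 3), (4, 5, 6)])
dev = prod.clone()
print(dev.partitions[0] is prod.partitions[0])  # True: storage is shared

dev.update_partition(1, (4, 5, 60))
print(prod.partitions[1], dev.partitions[1])    # (4, 5, 6) (4, 5, 60)
print(dev.partitions[0] is prod.partitions[0])  # True: untouched partition still shared
```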

Key Layers of Snowflake Architecture

1. Storage Layer

In Snowflake, the storage layer is the core of the architecture: it organizes and manages all the data that users and applications store in the platform, acting as a single central repository. What makes this layer distinctive is its use of micro-partitions, small contiguous units of storage designed for efficient data management.

Micro-partitions: Snowflake automatically divides tables into micro-partitions, each holding between 50 MB and 500 MB of uncompressed data (less once compressed). Within each micro-partition, data is stored by column, so the values of each column are kept and compressed together. This columnar format speeds up analytic queries, which typically read a few specific columns rather than entire rows.
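A quick way to see why columnar storage helps analytics is to count how many values a single-column aggregate touches under each layout. The Python toy below uses "values touched" as a rough stand-in for data read from storage; real engines gain further because similar values stored together compress better.

```python
# The same three-column table stored two ways.
rows = [
    (1, "Alice", 120.0),
    (2, "Bob", 75.5),
    (3, "Carol", 310.25),
]

# Row layout: each record's fields are stored together.
row_store = rows

# Columnar layout: each column's values are stored together.
column_store = {
    "id": [r[0] for r in rows],
    "name": [r[1] for r in rows],
    "amount": [r[2] for r in rows],
}


def sum_amount_row_store(store):
    total, values_touched = 0.0, 0
    for record in store:
        values_touched += len(record)  # the whole record is read
        total += record[2]
    return total, values_touched


def sum_amount_column_store(store):
    amounts = store["amount"]          # only the needed column is read
    return sum(amounts), len(amounts)


print(sum_amount_row_store(row_store))        # (505.75, 9)
print(sum_amount_column_store(column_store))  # (505.75, 3)
```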

2. Compute Layer

The compute layer is decoupled from the storage infrastructure and is made up of virtual warehouses: clusters of computing resources that process queries and perform other computational tasks.

Virtual Warehouses: Warehouses can be created, resized, suspended, and resumed as demand changes. Each warehouse operates independently, so workloads running on different warehouses do not compete for compute resources, and many queries can run at once without slowing one another down.
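The independence of warehouses can be sketched in Python by giving each one a private thread pool that reads from a shared in-memory store. `VirtualWarehouse`, `SHARED_STORAGE`, and the pool sizes are hypothetical; threads stand in for the servers of a real cluster, and `resize` simply routes new work to a pool of a different size while any already-submitted work finishes on the old one.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

# Toy shared storage layer: every warehouse reads the same data.
SHARED_STORAGE = {"orders": list(range(1, 101))}


class VirtualWarehouse:
    """Hypothetical warehouse: a private worker pool over shared storage."""

    def __init__(self, name: str, workers: int):
        self.name = name
        self.pool = ThreadPoolExecutor(max_workers=workers, thread_name_prefix=name)

    def run(self, query):
        # The query executes only on this warehouse's own threads.
        return self.pool.submit(query, SHARED_STORAGE).result()

    def resize(self, workers: int):
        # New work goes to a pool of the new size; the old pool drains, then closes.
        old, self.pool = self.pool, ThreadPoolExecutor(
            max_workers=workers, thread_name_prefix=self.name)
        old.shutdown(wait=True)


def whoami(_storage):
    return threading.current_thread().name


etl = VirtualWarehouse("etl", workers=4)
bi = VirtualWarehouse("bi", workers=2)

total = etl.run(lambda s: sum(s["orders"]))
count = bi.run(lambda s: len(s["orders"]))
print(total, count)                     # 5050 100
etl.resize(8)                           # scale up the ETL warehouse only
print(etl.run(whoami), bi.run(whoami))  # thread names show which pool ran each query
```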

3. Cloud Services Layer

The cloud services layer runs the background services, managed by Snowflake itself, that hold the architecture together. It coordinates activity across the platform through a collection of services ranging from security to query optimization.

Services Provided: This layer handles user authentication, access control, infrastructure management, metadata management, and query parsing and optimization: everything needed to make the other layers work together and to provide a smooth user experience.

Integration of Components: By handling shared operations such as authentication and query optimization centrally, the cloud services layer lets the storage and compute layers work independently without duplicating each other’s roles. This separation improves security and performance and simplifies scaling and maintenance.
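How a services layer can sit between users, metadata, and compute is sketched below as a toy coordinator in Python. Everything here is hypothetical (`CloudServices`, the token check, the metadata dictionary): it authenticates the caller, answers a row count directly from metadata, and dispatches other work to a compute function that reads the stored rows.

```python
from dataclasses import dataclass, field
from typing import Callable

# Toy storage layer: table name -> rows of (order_id, amount).
STORAGE = {"orders": [("o1", 30), ("o2", 45), ("o3", 25)]}


@dataclass
class CloudServices:
    """Hypothetical coordinator between users, metadata, and compute."""
    credentials: dict                  # user -> token (toy auth scheme)
    metadata: dict                     # table -> {"row_count": int}
    query_log: list = field(default_factory=list)

    def execute(self, user: str, token: str, table: str, op: str,
                warehouse: Callable[[list], int]) -> int:
        # 1. Authenticate before touching any data or compute.
        if self.credentials.get(user) != token:
            raise PermissionError(f"authentication failed for {user!r}")
        self.query_log.append((user, table, op))
        # 2. A row count can be answered from metadata alone.
        if op == "count":
            return self.metadata[table]["row_count"]
        # 3. Other work is dispatched to compute over the stored rows.
        return warehouse(STORAGE[table])


def sum_amounts(rows):
    return sum(amount for _, amount in rows)


services = CloudServices(
    credentials={"analyst": "token-123"},
    metadata={"orders": {"row_count": 3}},
)
print(services.execute("analyst", "token-123", "orders", "count", sum_amounts))  # 3
print(services.execute("analyst", "token-123", "orders", "sum", sum_amounts))    # 100
```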

Advantages of Snowflake’s Hybrid Architecture

This design gives users the simple data management of shared-disk models together with the performance and scalability of shared-nothing architectures. Key benefits include:

1. Scalability

The ability to scale storage and compute independently enables organizations to flex their resources up or down as required without significant disruption or loss in productivity.

2. Performance

By giving each workload its own compute and reducing resource contention, Snowflake can deliver faster and more consistent query performance.

3. Cost-effectiveness

Because storage and compute are billed separately, companies pay for the data they store and for the time their warehouses actually run (compute is billed per second while a warehouse is running), which helps them optimize their investment in data infrastructure.
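Because storage and compute appear as separate line items, a monthly estimate is simple arithmetic. The Python sketch below uses hypothetical prices (`PRICE_PER_TB_MONTH`, `PRICE_PER_CREDIT`) and a hypothetical workload of a Medium warehouse (4 credits per hour) running two hours on each of 22 weekdays; actual rates depend on edition, cloud provider, region, and contract.

```python
def monthly_cost(storage_tb: float, price_per_tb_month: float,
                 credits_used: float, price_per_credit: float) -> dict:
    """Estimate a monthly bill with storage and compute priced separately."""
    storage = storage_tb * price_per_tb_month
    compute = credits_used * price_per_credit
    return {"storage": storage, "compute": compute, "total": storage + compute}


# Hypothetical prices for illustration only.
PRICE_PER_TB_MONTH = 23.0
PRICE_PER_CREDIT = 3.0

# Medium warehouse (4 credits/hour), 2 hours per weekday, 22 weekdays.
credits = 4 * 2 * 22  # 176 credits
print(monthly_cost(5, PRICE_PER_TB_MONTH, credits, PRICE_PER_CREDIT))
# {'storage': 115.0, 'compute': 528.0, 'total': 643.0}
```

Suspending the warehouse outside those two hours is what keeps the compute line small, since a suspended warehouse consumes no credits.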

4. Unified Platform

Snowflake supports a variety of data formats and structures, allowing you to consolidate data from various sources into a single platform. This simplifies data management, improves accessibility, and facilitates comprehensive data analysis.

5. Enhanced Security

Snowflake offers robust security features like fine-grained access control. You can manage data access precisely, ensuring sensitive information is protected according to industry standards and regulations.
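Fine-grained access control in Snowflake is role-based: privileges are granted to roles, and roles can be granted to users and to other roles, which then inherit their privileges. The Python toy below models that inheritance with hypothetical roles and objects; it is not Snowflake’s API.

```python
# Hypothetical privileges granted directly to each role.
ROLE_PRIVILEGES = {
    "analyst": {("SELECT", "sales.orders")},
    "finance": {("SELECT", "finance.payroll")},
    "admin": {("UPDATE", "sales.orders")},
}
# Parent role -> roles granted to it (the parent inherits their privileges).
ROLE_GRANTS = {
    "admin": {"analyst", "finance"},
}


def effective_privileges(role, seen=None):
    """Collect a role's own privileges plus those of every role granted to it."""
    seen = set() if seen is None else seen
    if role in seen:  # guard against cycles in the toy grant graph
        return set()
    seen.add(role)
    privs = set(ROLE_PRIVILEGES.get(role, set()))
    for child in ROLE_GRANTS.get(role, set()):
        privs |= effective_privileges(child, seen)
    return privs


def can(role, action, obj):
    return (action, obj) in effective_privileges(role)


print(can("analyst", "SELECT", "sales.orders"))     # True
print(can("analyst", "SELECT", "finance.payroll"))  # False
print(can("admin", "SELECT", "finance.payroll"))    # True (inherited)
```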

6. Streamlined Data Operations

Snowflake can store and query structured and semi-structured data (such as JSON) side by side, for example through its VARIANT data type, which reduces the need for complex transformation processes before analysis. This simplifies data preparation and accelerates insight generation.
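Handling semi-structured data without an up-front schema means reading fields by path at query time. The Python sketch below mimics that idea with plain JSON and a hypothetical `get_path` helper; it is similar in spirit to querying a semi-structured column but is not Snowflake syntax. Missing fields come back as `None` instead of failing, which is what lets records with different shapes live side by side.

```python
import json

# Raw events arrive as JSON text with no predefined schema.
raw_events = [
    '{"id": 1, "customer": {"name": "Alice", "tier": "gold"}, "items": [{"sku": "A1", "qty": 2}]}',
    '{"id": 2, "customer": {"name": "Bob"}, "items": []}',
]


def get_path(doc, path):
    """Follow a dotted path such as 'customer.tier'; return None if any step is missing."""
    node = doc
    for key in path.split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


docs = [json.loads(e) for e in raw_events]
print([get_path(d, "customer.tier") for d in docs])     # ['gold', None]
print([len(get_path(d, "items") or []) for d in docs])  # [1, 0]
```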

7. Improved Query Performance

Snowflake’s architecture is optimized for fast query performance. You can perform complex queries on large datasets efficiently, allowing you to gain valuable insights from your data quickly.

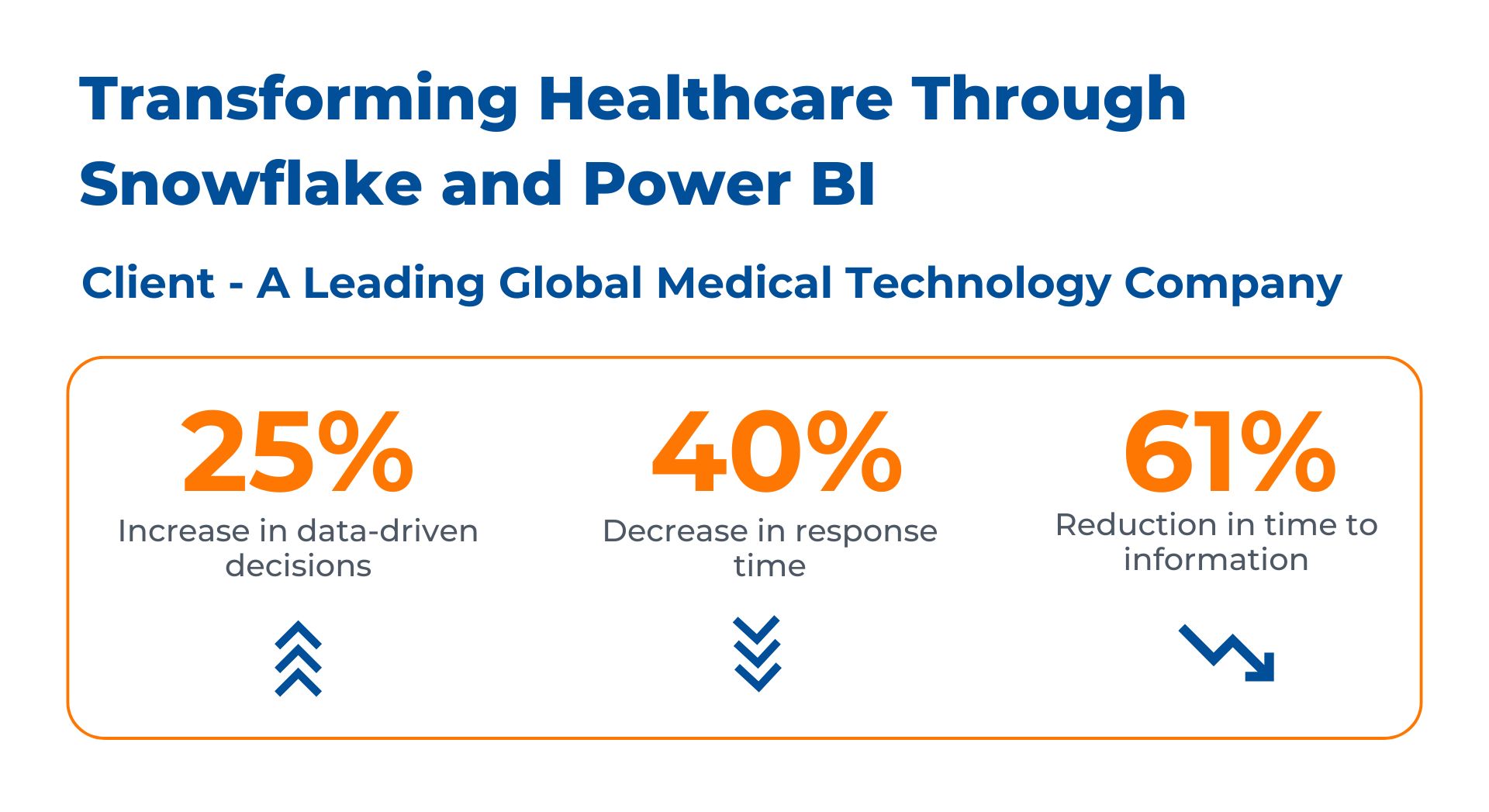

Case Study: Healthcare Giant Streamlines Analytics with Snowflake and Power BI

Challenge: A leading healthcare organization, grappling with a complex and fragmented data landscape, sought help extracting actionable insights from their extensive patient data. The data, scattered across numerous departments and geographical locations, presented a significant hurdle to effective decision-making and the enhancement of patient care.

Solution: Kanerika helped the organization embark on a data modernization project, implementing a two-pronged approach:

- Centralized Data Management with Snowflake: We adopted Snowflake’s cloud-based data warehouse solution. Snowflake’s ability to handle massive datasets and its inherent scalability made it ideal for consolidating data from disparate sources.

- Empowering Data Visualization with Power BI: Once the data was centralized in Snowflake, we leveraged Power BI, a business intelligence tool, to gain insights from the unified data set.

Results:

By leveraging Snowflake and Power BI together, Kanerika helped the healthcare organization achieve significant improvements:

- Faster and More Accurate Insights: Snowflake’s unified data platform and Power BI’s user-friendly interface enabled faster and more accurate analysis of patient data.

- Improved Decision-Making: Data-driven insights from Power BI empowered healthcare professionals to make better decisions regarding patient care, resource allocation, and overall healthcare strategy.

- Enhanced Patient Care: Having a holistic view of patient data allowed for improved care coordination and the development of more personalized treatment plans.

Real-world Use Cases of Snowflake Architecture

Companies like Western Union and Cisco have adopted Snowflake to leverage its powerful data warehousing capabilities, achieving significant operational benefits, including cost reduction and enhanced data governance. These examples illustrate the practical impacts of Snowflake’s innovative architecture in diverse business environments.

1. Western Union

- Scalability and Flexibility: Western Union uses Snowflake to manage its extensive global financial network, handling vast amounts of financial data and transactions. Snowflake’s architecture allows Western Union to scale resources dynamically, accommodating spikes in data processing demand without compromising performance.

- Cost Efficiency: By utilizing Snowflake’s on-demand virtual warehouses, Western Union can optimize its computing costs. This flexibility in scaling ensures that they only pay for the compute resources they use, significantly reducing overhead costs associated with data processing.

- Data Consolidation: Western Union consolidated over 30 different data stores into Snowflake, streamlining data management and improving accessibility. This consolidation has not only reduced infrastructure complexity but also enhanced data analysis capabilities, providing deeper insights at lower costs.

2. Cisco

- Enhanced Data Governance: Cisco utilizes Snowflake’s fine-grained access control features to improve data security and compliance. This capability allows Cisco to manage data access precisely, ensuring that sensitive information is protected according to industry standards and regulations.

- Operational Efficiency: Snowflake’s support for various data structures and formats has enabled Cisco to streamline its data operations. The ability to perform complex queries and analytics on structured and semi-structured data within the same platform reduces the need for data transformation and speeds up insight generation.

- Cost Reduction: Cisco has benefited from Snowflake’s storage and compute separation, which allows for more cost-effective data management. By fine-tuning the storage and compute usage based on actual needs, Cisco has minimized unnecessary expenses, enhancing overall financial efficiency.

Challenges and Considerations in Snowflake’s Architecture

While Snowflake offers a robust data warehousing solution with numerous benefits, it’s not without its challenges and limitations. Companies considering adopting Snowflake should be aware of these potential issues and plan accordingly to mitigate them.

Challenges

- Complexity in Cost Management: Although Snowflake’s pay-as-you-go model is advantageous, it can also lead to unpredictability in costs. Without proper management, costs can escalate, especially if large volumes of data transfers occur or if there is inefficient use of virtual warehouses.

- Data Transfer Costs: While Snowflake provides significant advantages in data storage and access, data transfer costs can be a concern, especially when integrating with data sources across different clouds or regions. This might require additional planning and budgeting to manage effectively.

- Learning Curve: Despite its user-friendly interface, Snowflake has a unique architecture that can be complex to understand and implement effectively. Organizations might face challenges with initial setup and optimization unless they invest in training or hire experienced personnel.

Considerations for Adoption

- Evaluate Data Strategy: Before adopting Snowflake, companies should evaluate their current and future data needs. Understanding the type of data, volume, and analysis requirements can help in designing an architecture that leverages Snowflake’s strengths effectively.

- Budget Planning: Due to the potential variability in costs, it is crucial for companies to plan their budgets carefully. Implementing monitoring tools and setting up alerts for abnormal spikes in usage can help in managing costs effectively.

- Integration with Existing Systems: Companies need to consider how Snowflake will fit into their existing data ecosystem. This includes evaluating how it integrates with existing applications and whether additional tools or services are needed to bridge any gaps.

- Security and Compliance: While Snowflake provides robust security features, companies must ensure that these features align with their security policies and compliance requirements. It’s important to configure these settings correctly to protect sensitive data and meet regulatory standards.
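The budget-planning advice above, monitoring usage and alerting on abnormal spikes, can be sketched in Python as thresholds on a monthly credit quota. The quota, thresholds, and daily readings are hypothetical; the notify and suspend actions loosely echo the triggers that Snowflake resource monitors offer, but this is not their API.

```python
# Hypothetical alert thresholds as percentages of a monthly credit quota.
TRIGGERS = [(75, "notify"), (90, "notify"), (100, "suspend")]


def fired_actions(credits_used, credit_quota, already_fired=frozenset()):
    """Return the (threshold, action) pairs newly crossed at the current usage."""
    pct = 100 * credits_used / credit_quota
    return [(t, a) for t, a in TRIGGERS if pct >= t and t not in already_fired]


quota = 500      # hypothetical monthly credit budget
fired = set()
for used in (300, 380, 460, 510):  # cumulative credits at each daily check
    new = fired_actions(used, quota, fired)
    fired.update(t for t, _ in new)
    print(used, new)
# 300 []
# 380 [(75, 'notify')]
# 460 [(90, 'notify')]
# 510 [(100, 'suspend')]
```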

Kanerika: Your Reliable Partner for Efficient Snowflake Implementations

Kanerika, with its expertise in building efficient enterprises through automated and integrated solutions, plays a crucial role in enhancing the implementation and optimization of Snowflake architectures for global brands.

Strategic Integration and Customization

Kanerika leverages its proprietary digital consulting frameworks to design tailored Snowflake solutions that align with an organization’s specific data strategies and goals. By automating routine data operations and integrations, we can help organizations reduce operational overhead and accelerate data workflows in Snowflake. This includes automating data ingestion, transformation, and the management of virtual warehouses, enhancing the overall efficiency of the data platform.

Enhanced Decision-Making and Market Responsiveness

Leveraging advanced analytics capabilities, we transform Snowflake’s stored data into actionable insights, empowering decision-makers with accurate and timely information to respond swiftly to evolving market conditions. We can develop custom analytics and reporting solutions that integrate seamlessly with Snowflake.

Security and Compliance

We ensure that Snowflake implementations comply with the latest security standards and regulatory requirements. This includes configuring Snowflake’s security settings to match an organization’s specific compliance and governance frameworks.

Through strategic partnership with Kanerika, organizations using Snowflake can enhance their data warehousing capabilities significantly. Kanerika’s role in streamlining integration, optimizing costs, and enabling informed decision-making helps businesses maximize their investment in Snowflake, ensuring they are not only prepared to meet current data challenges but are also positioned to capitalize on future opportunities.

Frequently Asked Questions

What is Snowflake architecture?

Snowflake’s architecture is a cloud-based, massively parallel data platform designed for scalability and performance. It fully separates compute from storage, allowing each to scale independently, and combines a central shared data store with independent multi-cluster compute for efficient query processing. Because you pay for the storage and compute you actually use, costs can be significantly lower than with traditional data warehouses. Essentially, it’s a highly flexible and cost-effective way to manage and analyze massive datasets.

What are the three layers of Snowflake architecture?

Snowflake’s architecture is built on three core layers: the Cloud Services layer handles authentication, security, metadata, and query optimization; the Compute layer executes queries using on-demand virtual warehouses; and the Storage layer persists your data in scalable cloud storage. This separation allows compute and storage to scale independently for optimal efficiency and cost management.

Does Snowflake use ETL or ELT?

Snowflake supports both approaches, but it is commonly used with ELT (Extract, Load, Transform). Instead of transforming data *before* loading it (ETL’s method), you load the raw data into Snowflake first and then transform it there, using its parallel processing and elastic compute for faster, more efficient processing. Think of it as bringing in the raw ingredients first and then cooking them in a super-efficient kitchen.
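The load-then-transform pattern can be shown end to end with Python’s built-in `sqlite3` module, which stands in for Snowflake here only so the sketch runs anywhere. Raw records are loaded untouched as text, and the cleanup (trimming, casting, standardizing country codes) happens afterward with SQL inside the database, the step Snowflake would run on a virtual warehouse. Table and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Extract + Load: land the raw records as-is, all as text.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [("1", " 19.99", "us"), ("2", "5.00 ", "DE"), ("3", "12.50", " us ")],
)

# Transform: clean and type the data inside the database, after loading.
conn.execute("""
    CREATE TABLE orders AS
    SELECT CAST(order_id AS INTEGER)  AS order_id,
           CAST(TRIM(amount) AS REAL) AS amount,
           UPPER(TRIM(country))       AS country
    FROM raw_orders
""")

for row in conn.execute(
    "SELECT country, ROUND(SUM(amount), 2) FROM orders GROUP BY country ORDER BY country"
):
    print(row)
```

Keeping `raw_orders` intact means a transformation can be fixed and rerun later without re-extracting data from the source systems.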

What is Snowflake tool used for?

Snowflake is a cloud-based data warehouse, meaning it’s a system designed for storing and analyzing massive amounts of data quickly and efficiently. Unlike traditional data warehouses, it scales on demand: warehouses can be resized at any time, suspended automatically when idle, and resumed when queries arrive. This makes it flexible and cost-effective for businesses of all sizes that need powerful data analysis capabilities. Essentially, it helps you unlock the insights hidden within your data.

Is Snowflake better than Databricks?

Snowflake and Databricks excel in different areas. Snowflake is often a strong choice for primarily analytical, SQL-centric workloads that need massive scalability and ease of use. Databricks shines when you need a unified platform blending data engineering, machine learning, and analytics, offering greater control and customization. The “better” choice depends entirely on your specific needs and priorities.

What is the role of the Snowflake architect?

A Snowflake architect designs and implements optimal data solutions within the Snowflake cloud data platform. They bridge the gap between business needs and technical execution, ensuring scalability, security, and performance. This includes database design, data modeling, and optimizing queries for efficiency. Essentially, they’re the masterminds behind a company’s Snowflake infrastructure.

What is a Snowflake and why is it used?

Snowflake is a cloud-based data warehouse designed for scalability and ease of use. It separates compute and storage, letting you pay only for what you use, unlike traditional warehouses. This allows for incredibly flexible and cost-effective analysis of massive datasets, making it ideal for large-scale data warehousing and analytics. Essentially, it’s a highly adaptable and efficient way to manage and query your data in the cloud.

What is the difference between Star and Snowflake architecture?

A star schema is a simple, denormalized data warehouse design in which a central fact table is surrounded by dimension tables. A snowflake schema (a data-modeling pattern unrelated to the Snowflake platform despite the name) is a more complex variation that normalizes dimension tables into further sub-tables to reduce redundancy. This saves storage and simplifies updates to dimension data, but queries need more joins and can run slower. The choice depends on your data volume, query frequency, and performance needs.
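The extra join is easiest to see side by side. This Python sketch builds both layouts in an in-memory SQLite database (table names and data are hypothetical) and computes sales by category: the star schema reaches the category in one join, while the snowflake schema needs a second join through the normalized category table, yet both return the same answer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Star: denormalized product dimension (category name repeated per product).
    CREATE TABLE dim_product_star (product_id INTEGER PRIMARY KEY, name TEXT, category_name TEXT);

    -- Snowflake: category attributes normalized into their own table.
    CREATE TABLE dim_category (category_id INTEGER PRIMARY KEY, category_name TEXT);
    CREATE TABLE dim_product_snow (product_id INTEGER PRIMARY KEY, name TEXT,
                                   category_id INTEGER REFERENCES dim_category(category_id));

    CREATE TABLE fact_sales (product_id INTEGER, amount REAL);

    INSERT INTO dim_product_star VALUES (1, 'Laptop', 'Electronics'), (2, 'Phone', 'Electronics'), (3, 'Desk', 'Furniture');
    INSERT INTO dim_category VALUES (10, 'Electronics'), (20, 'Furniture');
    INSERT INTO dim_product_snow VALUES (1, 'Laptop', 10), (2, 'Phone', 10), (3, 'Desk', 20);
    INSERT INTO fact_sales VALUES (1, 1200), (2, 800), (3, 300), (1, 1000);
""")

# Star schema: one join from the fact table to reach the category.
star = conn.execute("""
    SELECT p.category_name, SUM(f.amount)
    FROM fact_sales f JOIN dim_product_star p ON f.product_id = p.product_id
    GROUP BY p.category_name ORDER BY p.category_name
""").fetchall()

# Snowflake schema: an extra join through the normalized category table.
snow = conn.execute("""
    SELECT c.category_name, SUM(f.amount)
    FROM fact_sales f
    JOIN dim_product_snow p ON f.product_id = p.product_id
    JOIN dim_category c ON p.category_id = c.category_id
    GROUP BY c.category_name ORDER BY c.category_name
""").fetchall()

print(star == snow, star)  # True [('Electronics', 3000.0), ('Furniture', 300.0)]
```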

What is a key feature of Snowflake architecture?

Snowflake’s core strength is its massively parallel processing (MPP) architecture. This means it can split up a query into many smaller tasks, processed concurrently across numerous servers, dramatically speeding up even the largest data analyses. Unlike traditional data warehouses, it scales compute and storage independently, optimizing cost and performance based on actual usage. This elasticity is a key differentiator.
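Splitting a query into smaller tasks only works if each task returns something that can be merged. The Python sketch below computes an average in parallel by having each task return a partial `(sum, count)` pair; threads stand in for the separate servers of an MPP cluster, so it illustrates the decomposition rather than real speedup (in standard CPython, threads do not run CPU-bound code in parallel).

```python
from concurrent.futures import ThreadPoolExecutor


def partial_aggregate(chunk):
    """Per-task work: return (sum, count) rather than an average, so results merge correctly."""
    return sum(chunk), len(chunk)


def parallel_avg(values, tasks=4):
    # Split the input into roughly equal chunks, one per task.
    size = max(1, -(-len(values) // tasks))  # ceiling division
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    with ThreadPoolExecutor(max_workers=tasks) as pool:
        partials = list(pool.map(partial_aggregate, chunks))
    # Merge step: combine sums and counts, then divide once.
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count


values = list(range(1, 11))  # 1..10
print(parallel_avg(values))  # 5.5
```

Averaging the per-chunk averages instead would be wrong whenever chunks differ in size: here the chunks are [1, 2, 3], [4, 5, 6], [7, 8, 9], and [10], whose averages (2, 5, 8, 10) have a mean of 6.25 rather than 5.5.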