The explosion of open-source text generation models has created multiple ways to run and serve them efficiently. As the LLM market grows from $6.4 billion in 2024 to a projected $36.1 billion by 2030, developers building LLM-powered applications face an important choice between vLLM and Ollama.

This comparison matters because picking the wrong platform can waste weeks of development time and thousands of dollars in computing costs. Recent benchmarks show “vLLM performed up to 3.23x times faster than Ollama with 128 concurrent requests.” But speed isn’t everything.

One platform focuses on lightweight local serving that works on your own computer, while the other builds high-performance systems for heavy cloud workloads. This guide provides a clear, unbiased comparison covering both technical capabilities and business considerations.

By the end, you’ll know which platform fits your team’s skills and project requirements. Whether you’re building a personal project or enterprise application, this comparison will help you make an informed decision.

Understanding vLLM

vLLM is an open-source library designed to make large language model (LLM) inference faster and more efficient, especially in production environments. Its key innovation is a technique called PagedAttention, which stores the attention key-value (KV) cache in fixed-size blocks rather than in one contiguous buffer per request, much like paged virtual memory in an operating system. This reduces memory fragmentation and lets vLLM handle longer contexts and more concurrent requests with less memory overhead while delivering high-speed performance.
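To make the block-based idea concrete, here is a toy sketch that compares two ways of reserving KV-cache space. It is not vLLM's implementation: the block size of 16 tokens, the 2,048-token reservation, the request lengths, and all function names are illustrative assumptions.

```python
import math

BLOCK_SIZE = 16  # tokens per block (illustrative assumption)


def slots_contiguous(seq_lens, max_len):
    """Token slots reserved when every request preallocates max_len slots."""
    return len(seq_lens) * max_len


def slots_paged(seq_lens, block_size=BLOCK_SIZE):
    """Token slots reserved when each request holds only the blocks it needs."""
    return sum(math.ceil(n / block_size) * block_size for n in seq_lens)


def utilization(seq_lens, reserved):
    """Fraction of reserved slots that actually hold tokens."""
    return sum(seq_lens) / reserved


# Three hypothetical requests with very different lengths.
seq_lens = [37, 512, 130]
contiguous = slots_contiguous(seq_lens, max_len=2048)
paged = slots_paged(seq_lens)

print(f"contiguous: {contiguous} slots, {utilization(seq_lens, contiguous):.1%} used")
print(f"paged:      {paged} slots, {utilization(seq_lens, paged):.1%} used")
```

With these made-up lengths, the contiguous scheme reserves 6,144 slots while the block-based scheme reserves 704, because waste is limited to the unused tail of each request's last block.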

The library is built with throughput and latency optimization in mind. Unlike traditional inference engines that may struggle with large batch requests or memory fragmentation, vLLM ensures smooth and efficient processing, making it well-suited for applications where responsiveness is critical. It also supports distributed inference, enabling multiple GPUs or servers to work together, which is essential for enterprise-scale deployments and high-demand APIs.

Some of vLLM’s notable features include:

- Efficient memory usage through PagedAttention.

- High throughput for concurrent requests.

- Low latency for real-time applications.

- Compatibility with popular models like LLaMA 2, Mistral, Falcon, and others.

vLLM is best suited for scenarios where performance at scale is the priority—such as cloud deployments, AI-powered SaaS platforms, and large enterprise APIs. For example, a company deploying LLaMA 2 or Mistral with extended context lengths can rely on vLLM to serve multiple users simultaneously without hitting memory bottlenecks.

Understanding Ollama

Ollama is a lightweight framework built to make large language models (LLMs) easy to run locally on personal machines such as laptops and desktops. Unlike production-scale inference engines, Ollama is designed with developer-friendliness and accessibility as its core principles, enabling individuals to test and interact with powerful models without the need for cloud infrastructure.

The framework provides a straightforward experience through its simple CLI and configuration files. Developers can install Ollama in minutes—using commands like brew install ollama—and begin running models with a single line, such as ollama run mistral. This simplicity lowers the barrier to entry, making it possible for anyone with a reasonably capable machine to experiment with LLMs.
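Beyond the CLI, a running Ollama instance also serves a local HTTP API. The sketch below builds a request for its generate endpoint using only the Python standard library. It assumes the default local address and that the mistral model has already been pulled; the function names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # default local address


def build_generate_request(model, prompt, url=OLLAMA_URL):
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model, prompt):
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]


request = build_generate_request("mistral", "Why is the sky blue?")
print(request.full_url, request.get_method())
```

Calling generate("mistral", "Why is the sky blue?") sends the request, but only once the Ollama server is running locally.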

Some of Ollama’s key features include:

- Local model downloading and execution, removing the need for constant internet connectivity.

- Offline functionality, which ensures privacy and control over data.

- Developer-focused workflow, with simple customization through a plain-text Modelfile.

Ollama is best suited for individual developers, researchers, and hobbyists who want to quickly prototype, test, or customize models in a local environment. It also supports edge devices, making it relevant for scenarios where internet access is limited or data privacy is paramount.

For example, a developer on a MacBook can easily spin up Mistral or LLaMA 2 using Ollama, test prompts, and iterate on ideas without managing GPU clusters or cloud deployments.

vLLM vs Ollama: Core Differences

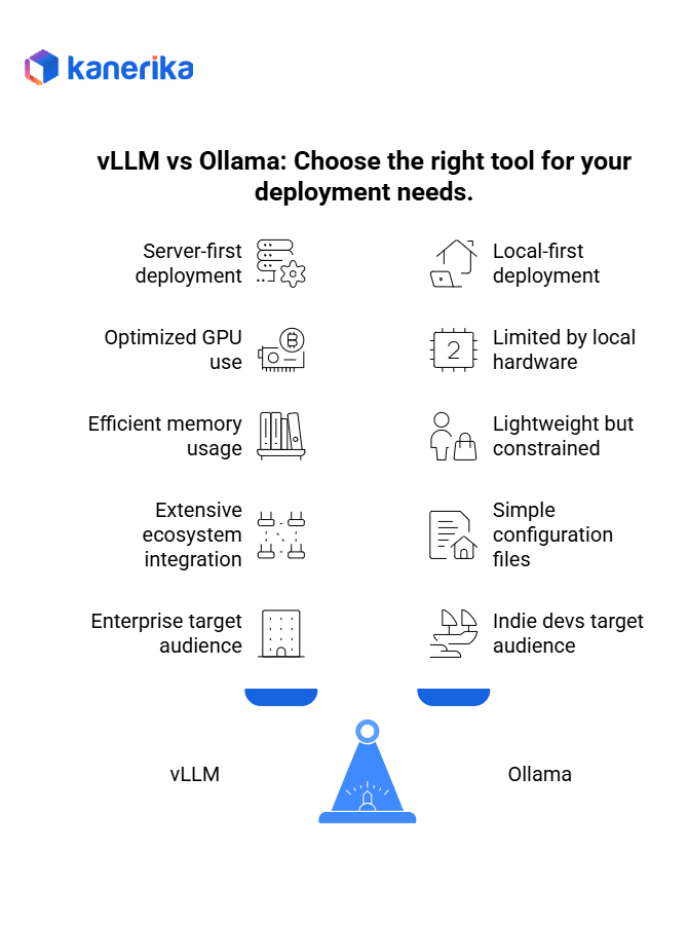

Understanding the fundamental differences between these two platforms helps developers and organizations choose the right tool for their specific language model deployment needs. While both enable running large language models, they serve distinctly different use cases and operational requirements.

| Feature | vLLM | Ollama |
| --- | --- | --- |
| Deployment Focus | Server-first, cloud & distributed environments | Local-first, lightweight, offline operation |
| Performance Target | Optimized GPU usage, large batch processing | Limited by local hardware, small-scale runs |
| Memory Management | Advanced PagedAttention for efficient memory usage | Lightweight but constrained by system RAM/VRAM |
| Integration Style | Rich ecosystem with Hugging Face, Ray Serve, OpenAI APIs | Simple configuration files, minimal dependencies |
| Primary Users | Enterprise teams, production workloads | Independent developers, researchers, hobbyists |
| Scalability | Horizontal scaling across multiple servers | Single machine limitations |
| Setup Complexity | Requires infrastructure planning and configuration | Quick installation with minimal setup |

1. Deployment Model

vLLM: Server-First Architecture

- Designed specifically for API-driven deployments in cloud and distributed environments

- Built to serve multiple concurrent users through web service interfaces

- Optimized for production environments requiring high availability and reliability

- Supports horizontal scaling across multiple servers for enterprise workloads

- Integrates seamlessly with existing cloud infrastructure and container orchestration systems

Ollama: Local-First Simplicity

- Focused on running language models directly on individual computers or local servers

- Operates completely offline without requiring internet connectivity for model inference

- Lightweight installation that doesn’t require complex infrastructure setup

- Well suited to developers who want to experiment privately without cloud dependencies

- Ideal for scenarios where data privacy requires keeping information on local machines

Strategic Implications

- vLLM suits organizations planning to deploy language models as business services

- Ollama works better for individual developers or teams needing local development and testing capabilities

2. Performance Characteristics

vLLM: Enterprise-Grade Performance

- Optimized GPU utilization that maximizes hardware efficiency for large-scale operations

- Handles large batch inference efficiently, processing multiple requests simultaneously

- Advanced memory management reduces computational overhead in high-traffic scenarios

- Designed for sustained high-performance operation under enterprise workloads

- Supports advanced optimization techniques for reducing latency and increasing throughput

Ollama: Hardware-Constrained Efficiency

- Performance limited by local hardware capabilities including available GPU memory and processing power

- Excellent efficiency for small-scale runs and individual user scenarios

- Optimized for running models on consumer-grade hardware without specialized infrastructure

- Provides good performance within hardware constraints but cannot scale beyond single-machine limits

- Focuses on making language models accessible on typical development machines

Performance Reality

- vLLM delivers superior performance for organizations with dedicated hardware and high-volume requirements

- Ollama provides adequate performance for development, testing, and small-scale production use cases

3. Memory & Efficiency Management

vLLM: Advanced Memory Optimization

- Uses PagedAttention technology for highly efficient memory usage during model inference

- Advanced memory pooling and sharing techniques reduce overall resource requirements

- Optimized for serving large models that typically require significant memory resources

- Intelligent memory management allows running larger models on available hardware

- Batch processing capabilities reduce per-request memory overhead

Ollama: Lightweight Resource Management

- Lightweight architecture designed to work within typical system RAM and video memory constraints

- Simple memory management that’s easy to understand and troubleshoot

- Optimized for single-user scenarios where resource sharing isn’t a primary concern

- Efficient use of available local resources without requiring specialized hardware

- Straightforward resource requirements that work on standard development machines

Resource Considerations

- vLLM maximizes efficiency for organizations with dedicated infrastructure and multiple concurrent users

- Ollama optimizes for simplicity and accessibility on standard hardware configurations


4. Ecosystem & Integration Capabilities

vLLM: Rich Integration Ecosystem

- Seamless integration with Hugging Face model repositories for easy model deployment

- Compatible with Ray Serve for distributed serving and scaling capabilities

- Provides OpenAI-compatible APIs making it easy to integrate with existing applications

- Supports various deployment frameworks and cloud platforms

- Extensive tooling and monitoring capabilities for production environments

Ollama: Developer-Focused Simplicity

- Simple configuration files that developers can easily understand and modify

- Minimal dependencies and integration requirements for quick setup

- Less integration-heavy approach that reduces complexity and potential points of failure

- Focused on developer experience rather than enterprise integration needs

- Straightforward command-line interface that works well for individual developers
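Ollama's configuration file is called a Modelfile. As a rough illustration of how small such a file can be, the sketch below renders one from Python. The helper name, the default parameter values, and the model name my-assistant are hypothetical; FROM, PARAMETER, and SYSTEM are Modelfile instructions.

```python
def render_modelfile(base_model, system_prompt, temperature=0.7, num_ctx=4096):
    """Return Modelfile text that customizes a base model."""
    lines = [
        f"FROM {base_model}",
        f"PARAMETER temperature {temperature}",
        f"PARAMETER num_ctx {num_ctx}",
        f'SYSTEM """{system_prompt}"""',
    ]
    return "\n".join(lines) + "\n"


text = render_modelfile("mistral", "You are a concise technical assistant.")
print(text)
# Save the text as `Modelfile`, then build it with:
#   ollama create my-assistant -f Modelfile
```

Keeping the customization in one short text file is what makes it easy to version alongside application code.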

Integration Philosophy

- vLLM provides comprehensive integration options for enterprise environments

- Ollama prioritizes simplicity and ease of use for individual developers and small teams

5. Target Audience & Use Cases

vLLM: Enterprise and Production Focus

- Designed for organizations deploying language models as business-critical services

- Suitable for teams building customer-facing applications requiring high reliability

- Enterprise environments with dedicated infrastructure and technical operations teams

- Production workloads requiring consistent performance and scalability

- Organizations needing comprehensive monitoring, logging, and management capabilities

Ollama: Individual Developers and Research

- Perfect for independent developers experimenting with language models

- Research teams needing flexible local development environments

- Hobbyists exploring language model capabilities without cloud costs

- Scenarios requiring complete data privacy and offline operation

- Educational environments where simplicity and accessibility are priorities

Strategic Alignment

- Organizations should choose based on their deployment scale, technical requirements, and operational complexity

- The right choice depends more on intended use case than technical superiority of either platform

Ollama vs. vLLM: Performance Comparison

| Performance Factor | Ollama | vLLM |
| --- | --- | --- |
| Single Request Speed | Fast | Fast |
| Multiple Users | Slows down significantly | Maintains speed |
| Memory Efficiency | Basic, uses more RAM | Optimized, less waste |
| Hardware Requirements | Regular computers, laptops | Powerful GPUs, cloud servers |
| Concurrent Requests | Limited (1-10 users) | High (100+ users) |
| Response Time Consistency | Varies with load | Stable under load |
| Setup Complexity | Simple | Complex |
| Resource Usage | Higher per request | Lower per request at scale |

1. Speed and Throughput

vLLM dominates when handling multiple requests at once. It can process hundreds of text generation requests simultaneously while maintaining fast response times. Meanwhile, Ollama works better for single users or small teams with occasional requests.

The difference becomes clear under heavy load. vLLM uses advanced memory management and request batching to serve many users efficiently. But Ollama focuses on simplicity over raw speed, which makes it slower when traffic increases.
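One reason request batching helps under load is that a finished request can hand its slot to the next one instead of waiting for the whole batch, an approach usually called continuous batching. The toy model below counts decode steps for fixed batches versus slot-by-slot refilling. It ignores prompt processing and assumes each step costs the same regardless of batch occupancy; the output lengths and function names are made up for illustration and do not describe either tool's scheduler.

```python
import heapq


def static_batching_steps(output_lens, batch_size):
    """Each fixed batch runs until its longest request finishes."""
    return sum(
        max(output_lens[i : i + batch_size])
        for i in range(0, len(output_lens), batch_size)
    )


def continuous_batching_steps(output_lens, batch_size):
    """A finished request frees its slot for the next waiting request."""
    slots = [0] * batch_size  # step at which each slot becomes free
    heapq.heapify(slots)
    for n in output_lens:
        free_at = heapq.heappop(slots)  # earliest available slot
        heapq.heappush(slots, free_at + n)
    return max(slots)


# Hypothetical output lengths (in tokens) for eight queued requests.
output_lens = [20, 200, 30, 25, 180, 40, 35, 15]
static_steps = static_batching_steps(output_lens, batch_size=4)
continuous_steps = continuous_batching_steps(output_lens, batch_size=4)
print(f"static: {static_steps} steps, continuous: {continuous_steps} steps")
```

For this queue, fixed batches need 380 decode steps while slot refilling needs 200, because short requests no longer wait behind the longest request in their batch.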

2. Memory Usage

vLLM optimizes memory usage through techniques like PagedAttention, which reduces wasted GPU memory. This lets you run larger models on the same hardware or serve more users with available resources.

Ollama takes a simpler approach to memory management. It’s easier to understand and debug, but uses more memory per request. This trade-off works fine for local development but becomes expensive when scaling up.

3. Hardware Requirements

vLLM needs powerful GPUs and significant RAM to deliver its best performance. It works well on cloud servers with multiple graphics cards but struggles on basic laptops or desktop computers.

Ollama runs on regular computers, including older hardware and systems without dedicated graphics cards. This makes it accessible to developers who don’t have access to expensive cloud infrastructure.
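A quick way to judge whether a model fits a given machine is to estimate the memory its weights need: parameter count times bits per parameter. The sketch below does that arithmetic for a few common model sizes. It is a lower bound only, since it ignores the KV cache, activations, and the small overhead that real quantization formats add; the function name is hypothetical.

```python
def weight_memory_gb(params_billions, bits_per_param):
    """Approximate memory for model weights alone, in GB (10^9 bytes)."""
    return params_billions * bits_per_param / 8


for name, params in [("7B", 7), ("13B", 13), ("70B", 70)]:
    fp16 = weight_memory_gb(params, 16)
    q4 = weight_memory_gb(params, 4)
    print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

By this estimate a 7B model needs roughly 14 GB at 16-bit precision but about 3.5 GB when quantized to 4 bits, which is why quantized models are the usual choice on laptops.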

4. Latency Differences

For single requests, both platforms respond quickly. But vLLM maintains low latency even when serving many users simultaneously. Ollama’s response time increases noticeably as more requests come in.

The choice depends on your use case. Pick vLLM for production applications where consistent speed matters. Choose Ollama for development, testing, or applications with light usage patterns.
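Latency under load is easy to check on your own hardware before committing to either tool. The harness below is a minimal sketch that issues requests with a fixed number in flight and records each one's wall-clock time. The fake_send function is a stand-in so the example runs without a server; in practice you would replace it with a function that calls your vLLM or Ollama endpoint. All names here are hypothetical.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def timed_call(send, prompt):
    """Return the wall-clock seconds one request takes."""
    start = time.perf_counter()
    send(prompt)
    return time.perf_counter() - start


def measure_latencies(send, prompts, concurrency):
    """Issue all prompts with at most `concurrency` requests in flight."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(lambda p: timed_call(send, p), prompts))


def fake_send(prompt):
    """Stand-in for a real HTTP call so the sketch runs without a server."""
    time.sleep(0.01)


latencies = measure_latencies(fake_send, ["hello"] * 8, concurrency=4)
median_ms = statistics.median(latencies) * 1000
print(f"median {median_ms:.1f} ms over {len(latencies)} requests")
```

Running the same measurement at increasing concurrency levels shows where response times start to climb for a given model and machine.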

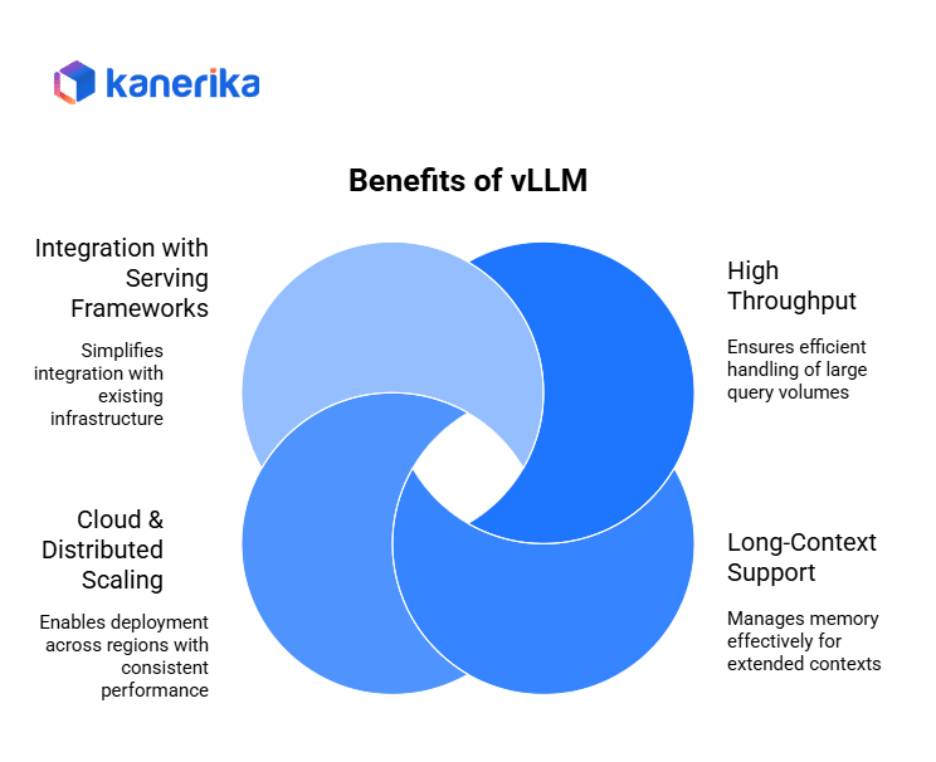

Benefits of vLLM

1. High Throughput for Enterprise Apps

vLLM is designed for performance at scale. It delivers high throughput, handling large volumes of concurrent queries efficiently. This makes it ideal for enterprise applications where responsiveness and consistency are critical, such as SaaS platforms or customer-facing AI products.

2. Long-Context Support

Thanks to its PagedAttention mechanism, vLLM can manage memory efficiently while supporting longer contexts. This allows applications like document summarization, research assistants, or advanced chatbots to maintain context across extended conversations or large text inputs without running into memory bottlenecks.
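To see why long contexts put pressure on memory, it helps to estimate the KV-cache size per sequence: keys and values are stored for every layer, head, and token. The sketch below does the arithmetic for a model shaped like Llama 2 7B (32 layers, 32 key-value heads, head dimension 128, 16-bit values). Treat those shape numbers and the function name as assumptions for illustration; other models, especially those using grouped-query attention, differ.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Bytes of KV cache for one sequence: keys and values for every layer."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Assumed Llama 2 7B-style shape with 16-bit cache values.
per_token = kv_cache_bytes(32, 32, 128, seq_len=1)
full_context = kv_cache_bytes(32, 32, 128, seq_len=4096)

print(per_token / 2**20, "MiB per token")
print(full_context / 2**30, "GiB for a 4,096-token sequence")
```

Under these assumptions each token costs 0.5 MiB of cache and a single 4,096-token sequence costs 2 GiB, so wasted cache space quickly limits how many users one GPU can serve.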

3. Cloud & Distributed Scaling

vLLM supports multi-GPU and distributed inference, enabling it to run in cloud-native environments with ease. This distributed scaling capability ensures that enterprises can deploy LLM workloads across regions and maintain consistent performance, even under heavy traffic.
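As a rough illustration of what multi-GPU serving looks like in practice, the sketch below assembles a launch command for vLLM's server with the model split across GPUs via tensor parallelism. The helper function is hypothetical and the model ID is only an example; available flags and defaults can vary between vLLM versions, so check the documentation for the release you use.

```python
import shlex


def vllm_serve_command(model, tensor_parallel_size=1, max_model_len=None, port=8000):
    """Build the argument list for launching vLLM's OpenAI-compatible server."""
    cmd = ["vllm", "serve", model, "--port", str(port)]
    if tensor_parallel_size > 1:
        # Shard the model's weights across this many GPUs.
        cmd += ["--tensor-parallel-size", str(tensor_parallel_size)]
    if max_model_len is not None:
        # Cap the context length to bound KV-cache memory.
        cmd += ["--max-model-len", str(max_model_len)]
    return cmd


cmd = vllm_serve_command("mistralai/Mistral-7B-Instruct-v0.2", tensor_parallel_size=2)
print(shlex.join(cmd))
```

Building the command as a list makes it straightforward to pass to subprocess.run or to template into a container entrypoint.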

4. Integration with Serving Frameworks

Another major benefit of vLLM is its integration with popular serving frameworks like Hugging Face, Ray Serve, and OpenAI-compatible APIs. This makes it simple to plug vLLM into existing infrastructure without significant re-engineering, reducing adoption barriers for teams already working with these ecosystems.

Example: SaaS Company Deploying Chatbots at Scale

Consider a SaaS company offering AI-powered customer support. With vLLM, they can deploy chatbots capable of handling thousands of simultaneous queries while keeping latency low and responses contextually accurate. This scalability is what sets vLLM apart for production workloads.

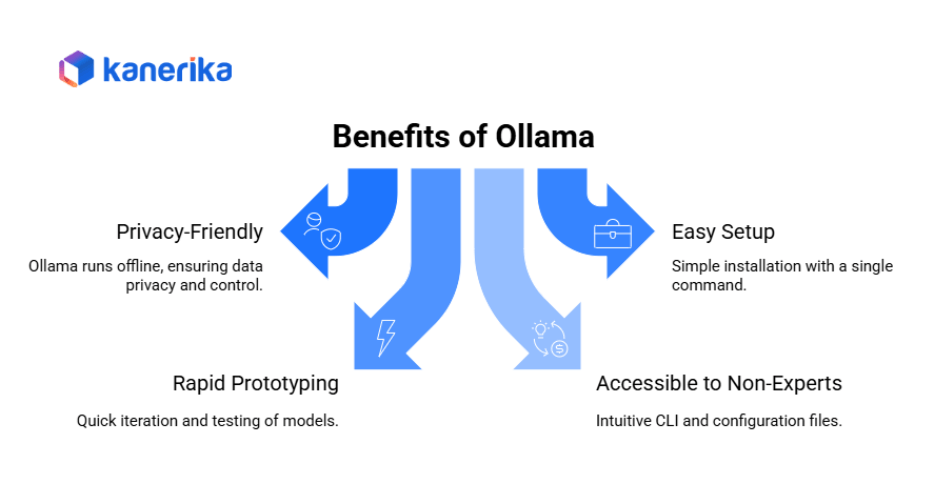

Benefits of Ollama

1. Runs Entirely Offline — Privacy-Friendly

One of Ollama’s strongest advantages is that it runs completely offline. This makes it ideal for developers, researchers, or organizations who need data privacy and control without sending information to the cloud. Sensitive use cases, such as healthcare or legal prototyping, benefit greatly from this local-first approach.

2. Extremely Easy Setup

Ollama is designed with simplicity in mind. Installation is straightforward—developers can get started with a single command (brew install ollama) and begin running models in minutes. This ease of setup lowers barriers to entry, allowing more people to experiment with LLMs without complex infrastructure.

3. Great for Rapid Prototyping

Because Ollama can run models locally with minimal configuration, it’s excellent for rapid prototyping and experimentation. Developers can quickly iterate on prompts, test workflows, and validate ideas before moving to production-scale frameworks like vLLM.

4. Accessible to Non-Experts

Ollama’s intuitive CLI and plain-text Modelfile configuration make it accessible to non-experts. Even those without deep ML or infrastructure knowledge can download a model and start testing. This democratizes access to LLMs for students, indie developers, and small teams.

Example: Researcher Testing Small LLMs on MacBook

Imagine a researcher running Mistral or LLaMA 2 models on a MacBook using Ollama. They can test ideas offline, refine prompts, and explore small-scale applications—all without needing GPUs, servers, or cloud setups.

vLLM Challenges & Limitations

While vLLM is powerful for large-scale deployments, it comes with several challenges:

- Requires Powerful GPUs

vLLM is optimized for GPU performance, meaning teams need access to expensive, high-memory GPUs to achieve its full potential. This raises the barrier to entry for smaller organizations.

- Complex Setup Compared to Ollama

Unlike Ollama’s simple installation, vLLM requires environment setup, GPU drivers, and integration with serving frameworks. This makes it less beginner-friendly.

- Better Suited for Teams with Infra Expertise

Running vLLM effectively often requires DevOps, MLOps, and distributed systems knowledge. It’s not ideal for solo developers or non-technical users.

- High Infrastructure Costs

Beyond GPUs, vLLM deployments involve costs for cloud compute, networking, and scaling infrastructure, which may not be viable for startups or indie projects.

- Overhead in Small-Scale Use Cases

For simple testing or small applications, vLLM can feel over-engineered, adding unnecessary complexity where lighter frameworks (like Ollama) suffice.

Ollama Challenges & Limitations

Ollama simplifies local LLM usage but also comes with limitations:

- Limited by Local Hardware

Performance depends heavily on the RAM/VRAM of laptops or desktops. Running large models may be impractical without high-end machines.

- Not Suitable for Large-Scale Production Serving

Ollama is great for prototyping but cannot handle enterprise-level workloads or high-volume concurrent requests.

- Narrower Ecosystem Integrations

Compared to vLLM’s cloud and API integrations, Ollama offers fewer connectors to tools like Hugging Face or Ray Serve, making it less versatile for enterprise workflows.

- Constrained Context & Model Sizes

Large context windows or massive models may not run efficiently on local setups, limiting experimentation with cutting-edge research models.

- Energy & Battery Consumption

Running LLMs locally can drain laptop batteries quickly and cause overheating, especially during prolonged sessions.

- Less Community & Enterprise Support

Ollama’s enterprise ecosystem, documentation, and commercial support options are still growing and are not as mature as those around frameworks like vLLM.

vLLM vs Ollama: Real-World Use Cases

vLLM Use Cases

1. SaaS Platforms with Concurrent LLM Queries

- Multi-tenant applications serving thousands of simultaneous users

- Customer support chatbots handling peak traffic loads

- Content generation platforms processing bulk requests

Case Study: A legal tech startup uses vLLM to serve their contract analysis platform, handling 500+ concurrent document reviews during business hours while maintaining sub-2-second response times.

2. Cloud-Native AI Products Requiring Scale

- Enterprise AI assistants integrated into productivity suites

- Real-time translation services for global applications

- Automated content moderation systems for social platforms

Case Study: An e-commerce company deployed vLLM to power their product description generator, processing 10,000+ SKUs daily across multiple languages with dynamic batching optimization.

3. Research Labs with Long-Context Experiments

- Academic institutions running large-scale language model studies

- Pharmaceutical companies analyzing extensive research literature

- Financial firms processing lengthy regulatory documents

Case Study: A biotech research lab uses vLLM’s PagedAttention to analyze 50,000+ token research papers, enabling breakthrough drug discovery insights from massive document collections.


Ollama Use Cases

1. Developers Running Models Offline

- Software engineers working in secure, air-gapped environments

- Mobile app developers testing LLM features without internet dependency

- Consultants requiring data privacy for sensitive client work

Case Study: A cybersecurity consultant uses Ollama with Llama 2 locally to analyze confidential network logs, ensuring zero data exposure while maintaining full analytical capabilities.

2. Rapid Application Prototyping

- Startup teams validating AI product concepts quickly

- Hackathon participants building proof-of-concept applications

- Product managers testing LLM integration feasibility

Case Study: A fintech startup prototyped their AI financial advisor using Ollama in just 2 days, testing conversation flows and response quality before committing to cloud infrastructure.

3. Education and Small-Scale Demonstrations

- University professors teaching AI concepts with hands-on examples

- Workshop facilitators demonstrating LLM capabilities

- Students learning prompt engineering and model behavior

Case Study: A computer science professor uses Ollama to run live coding demonstrations with Code Llama, allowing 30 students to experiment simultaneously without cloud costs or API rate limits.

4. Personal Productivity and Learning

- Writers using local models for creative brainstorming

- Researchers summarizing academic papers privately

- Developers generating code snippets for personal projects

Case Study: A freelance technical writer uses Ollama with Mistral 7B on their laptop to brainstorm article outlines and refine drafts during flights, maintaining creative flow without internet connectivity while keeping sensitive client content completely private.


vLLM vs Ollama: Which One Should You Choose?

When to Pick vLLM

Choose vLLM if you need to handle lots of users at once or run complex text generation tasks. It works well when you have cloud servers and want maximum performance from your hardware. Plus, vLLM handles memory efficiently, so you can run larger models without running out of GPU memory.

This platform makes sense for production applications where speed and reliability matter most. It’s also the better choice when you need to integrate with existing cloud infrastructure or serve thousands of requests per day.

When to Pick Ollama

Go with Ollama if you want to run text generation models on your own computer without internet connection. It’s perfect for testing ideas, learning how these models work, or building applications that need to keep data private. Also, Ollama is much easier to set up and use for beginners.

This approach works well for personal projects, prototyping new ideas, or situations where you can’t send data to external servers due to privacy requirements.

Best of Both Worlds

Consider using both platforms in sequence. Start with Ollama to build and test your application locally. This lets you experiment quickly without worrying about cloud costs or complex setup procedures.

Once your application works well, then move to vLLM for production deployment. This hybrid approach gives you the simplicity of Ollama during development plus the performance of vLLM when you need to serve real users.

Many successful projects follow this pattern because it combines fast prototyping with reliable scaling. You get the benefits of both platforms without having to choose just one.
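Moving from one tool to the other is simpler when the application talks to an OpenAI-style chat endpoint, which both tools expose. The sketch below builds the same request for either backend and changes only the base URL and model name. The addresses are the default local ports; the helper, the example model names, and the prompt are assumptions for illustration.

```python
import json
import urllib.request

# Default local addresses; adjust for your deployment.
BACKENDS = {
    "ollama": "http://localhost:11434/v1",
    "vllm": "http://localhost:8000/v1",
}


def build_chat_request(backend, model, user_message):
    """Build an OpenAI-style chat completion request for the chosen backend."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        f"{BACKENDS[backend]}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Same application code, different backend and model identifier.
dev = build_chat_request("ollama", "mistral", "Summarize this ticket.")
prod = build_chat_request("vllm", "mistralai/Mistral-7B-Instruct-v0.2", "Summarize this ticket.")
print(dev.full_url)
print(prod.full_url)
```

Note that model identifiers differ between the two: Ollama uses its own library names, while vLLM is typically given a Hugging Face model ID.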


vLLM vs Ollama: Future Outlook

What’s Coming for vLLM

The vLLM project is working on making the system even faster and more efficient. It is adding support for newer text generation models as they are released, so users can access the latest capabilities. The team is also building better memory management tools that will let people run bigger models on the same hardware.

The platform is also getting better integration with popular cloud services and development frameworks. This means companies will find it easier to build vLLM into their existing systems without major changes.

Ollama’s Development Plans

Ollama is expanding its library to include more types of models beyond text generation, with the aim of supporting image recognition, code generation, and other kinds of applications. Meanwhile, the team is working on better cross-platform support so the software runs smoothly on more devices and operating systems.

The team is also focusing on making installation and setup even simpler. Future versions will require less technical knowledge to get started, which opens up the technology to more people.

Industry Direction

The market is moving toward having both lightweight local tools and powerful cloud systems working together. Companies want the flexibility to run simple tasks locally while having access to enterprise-grade systems for heavy workloads.

This trend benefits users because they get the privacy and control of local processing plus the power and scale of cloud systems. Rather than picking one approach, most organizations will likely use both depending on their specific needs.

The future looks like a world where local and cloud processing complement each other instead of competing.


Kanerika’s Custom AI Agents: Your Companions for Simplifying Workflows

1. DokGPT – Instant Information Retrieval

DokGPT is a custom AI agent that brings enterprise knowledge to everyday communication tools like WhatsApp and Teams. It pulls answers from documents, spreadsheets, videos, and business apps, delivering instant, secure responses in chat. With support for multiple languages, summaries, charts, and tool integrations, DokGPT helps teams work faster across HR, sales, support, onboarding, and healthcare.

2. Karl – AI-powered Data Analysis

Karl is an AI agent built for real-time data analysis, with no coding required. It connects to your databases or spreadsheets, answers natural-language questions, and delivers instant charts, summaries, and insights. Whether you’re tracking sales, analyzing financials, or reviewing healthcare or academic data, Karl helps you explore and share findings with ease through a simple chat interface.


Kanerika: Your Reliable Partner for Efficient LLM-based Solutions

Kanerika offers innovative solutions leveraging Large Language Models (LLMs) to address business challenges effectively. By harnessing the power of LLMs, Kanerika enables intelligent decision-making, enhances customer engagement, and drives business growth. These solutions utilize LLMs to process vast amounts of text data, enabling advanced natural language processing capabilities that can be tailored to specific business needs, ultimately leading to improved operational efficiency and strategic decision-making.

Why Choose Us?

1. Expertise: With extensive experience in AI, machine learning, and data analytics, the team at Kanerika offers exceptional LLM-based solutions. We develop strategies tailored to address your unique business needs and deliver high-quality results.

2. Customization: Kanerika understands that one size does not fit all. So, we offer LLM-based solutions that are fully customized to solve your specific challenges and achieve your business objectives effectively.

3. Ethical AI: Trust in Kanerika’s commitment to ethical AI practices. We prioritize fairness, transparency, and accountability in all our solutions, ensuring ethical compliance and building trust with clients and other stakeholders.

4. Continuous Support: Beyond implementation, Kanerika provides ongoing support and guidance to optimize LLM-based solutions. Our team remains dedicated to your success, helping you navigate complexities and maximize the value of AI technologies.

Elevate your business with Kanerika’s LLM-based solutions. Contact us today to schedule a consultation and explore how our innovative approach can transform your organization.

Visit our website to access informative resources, case studies, and success stories showcasing the real-world impact of Kanerika’s LLM-based solutions.


FAQs

1. What's the main difference between vLLM and Ollama?

vLLM is built for high-performance serving with multiple users, while Ollama focuses on simple local deployment. vLLM excels when you need to handle hundreds of requests per second, but Ollama works better for personal projects or small teams that want easy setup on regular computers.

2. Which one is easier to install and use?

Ollama is much easier to get started with. You can install it with a single command and start running models immediately. vLLM requires more setup steps, GPU drivers, and technical knowledge. If you’re new to running text generation models, start with Ollama.

3. Can I run both on my laptop?

Ollama runs well on most laptops, even older ones without dedicated graphics cards. vLLM needs powerful hardware with good GPUs to perform well. You can technically install vLLM on a laptop, but it won’t show its performance benefits without proper hardware.

4. Which platform costs less to operate?

For small-scale use, Ollama costs less because it runs on your own hardware. For large-scale applications serving many users, vLLM becomes more cost-effective because it uses resources efficiently. The crossover point depends on your usage volume and hardware costs.
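The crossover point can be estimated with simple break-even arithmetic: one option has a low fixed cost but a higher cost per request, the other the reverse. The sketch below solves for the volume where the two are equal. Every number in it is hypothetical and chosen only to make the calculation easy to follow; substitute your own hardware, energy, and cloud prices.

```python
def monthly_cost(fixed_cost, cost_per_1k_requests, requests):
    """Total monthly cost for a given request volume."""
    return fixed_cost + cost_per_1k_requests * requests / 1000


def break_even_requests(fixed_a, per_1k_a, fixed_b, per_1k_b):
    """Volume at which option A and option B cost the same, or None."""
    if per_1k_a == per_1k_b:
        return None
    volume = (fixed_b - fixed_a) * 1000 / (per_1k_a - per_1k_b)
    return volume if volume > 0 else None


# Hypothetical numbers, purely for illustration:
# A = local workstation: low fixed cost, higher cost per request.
# B = GPU server: high fixed cost, lower cost per request at scale.
crossover = break_even_requests(fixed_a=100, per_1k_a=2.0, fixed_b=1500, per_1k_b=0.25)
print(f"break-even at about {crossover:,.0f} requests per month")
```

With these placeholder figures the two options cost the same at 800,000 requests per month; below that the low-fixed-cost option is cheaper, above it the server wins.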

5. Do they support the same models?

Both platforms support popular open-source models like Llama, Mistral, and CodeLlama. However, vLLM often gets support for new models faster and handles larger models better. Ollama focuses on making models easy to download and run rather than supporting every possible option.

6. Which is better for production applications?

vLLM is designed for production serving and fits into infrastructure that provides load balancing, monitoring, and high availability. Ollama works for small production deployments but lacks enterprise features. If you’re building an application for many users, vLLM is the safer choice.

7. Can I switch from one to the other later?

Yes, both platforms use standard model formats, so switching is possible. However, you’ll need to rewrite deployment scripts and possibly change how your application sends requests. It’s better to choose the right platform from the start rather than switching later.

8. Which one should I choose for learning?

Start with Ollama if you want to learn how text generation models work. It’s simpler to set up and lets you focus on understanding the models rather than deployment complexity. Once you’re comfortable, you can explore vLLM for production scenarios.

9. Does vLLM work with Ollama?

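Not directly. vLLM and Ollama are separate serving systems with different default model formats and architectures: vLLM typically loads Hugging Face-format weights, while Ollama packages models as GGUF files described by a Modelfile. Both expose an OpenAI-compatible HTTP API, though, so an application written against that API can usually switch between them by changing the base URL and model name. Some teams use Ollama for development and vLLM for production on that basis.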

10. What is the difference between vLLM and LLM?

LLM refers to the AI models (like Llama, Mistral), while vLLM is the inference engine that runs them. Think of LLMs as the car engine and vLLM as the chassis and systems that make the car drivable. vLLM is a platform that optimizes memory, batching, and GPU utilization to serve LLMs efficiently to applications.

11. Does Ollama support VLMs (Vision Language Models)?

Yes, Ollama supports Vision Language Models such as LLaVA, BakLLaVA, and Moondream. These models can analyze images, answer visual questions, and handle multi-modal conversations combining text and images. VLMs require more memory and computational resources than text-only models, with 16GB+ RAM recommended.