A few months into an AI consulting engagement with a global professional services company, Kanerika’s team hit a wall. They’d built an AI agent that worked exactly as designed — it pulled data from multiple systems, ran analysis, and presented clean recommendations to support associates. The demo was solid. Leadership was impressed.

Then they deployed it.

Associates ignored it. Usage was minimal. The agent sat there, fully functional, while people went back to their old routine of jumping between different tools and spending ten minutes per ticket.

The problem wasn’t the AI. The agent was doing its job. The problem was that it lived in a separate application: one extra click, one extra tab, one extra page load. That was enough for people to skip it entirely.

So the team rebuilt the integration — this time, embedded directly inside the tool associates already had open all day. No new tabs. No new interface. By the time someone opened a ticket, the agent had already done the work and the results were right there on their screen.

Adoption changed overnight.

That single lesson — build AI into existing workflows, never alongside them — became one of four principles that shaped the rest of the engagement. And those principles turned out to be more important than any model selection, architecture decision, or technical capability.

This blog captures those lessons. Not as theory, but as patterns that emerged from real enterprise deployments, backed by industry data that confirms most organizations are hitting the same walls.

Key Takeaways

- Over 80% of enterprise AI projects fail to reach production, and the primary causes are organizational, not technical.

- AI agents embedded into existing tools (Zendesk, Salesforce, Zoho, HubSpot, Marketo, internal dashboards) consistently outperform standalone applications in adoption metrics.

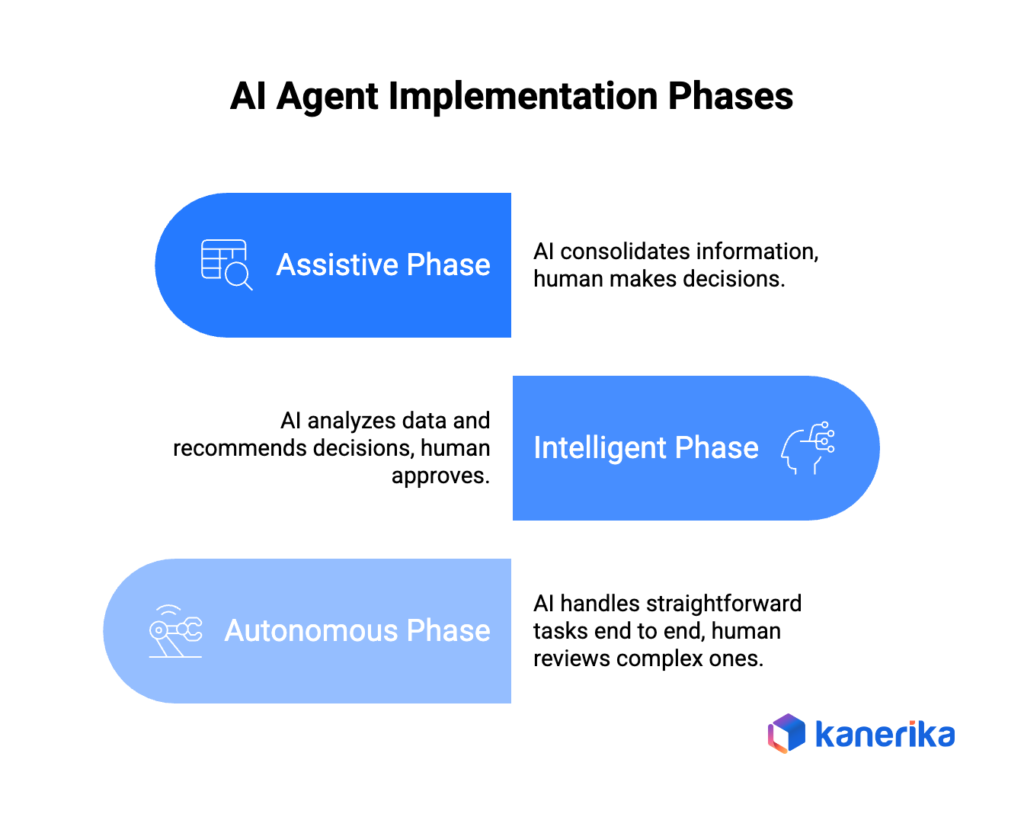

- Phased deployment — assistive, then intelligent, then autonomous — delivers incremental ROI and manages stakeholder expectations at each stage.

- AI implementation forces process standardization, which often delivers more value than the automation itself.

- Leadership buy-in must come before team-level rollout. Without it, frontline resistance stalls adoption regardless of how good the technology is.

The Pilot-to-Production Gap Is Real

Enterprise interest in AI agents has hit a tipping point. A PwC survey from 2025 found that 79% of organizations have adopted AI agents at some level, and 88% of senior executives plan to increase AI-related budgets in the next 12 months. The money and the intent are clearly there.

But the results tell a different story. Research from MIT and Futurum, referenced by Salesforce, showed that enterprises have invested an estimated $30 to $40 billion in generative AI, yet 95% of those investments have produced no financial return. Gartner projects that by 2027, more than 40% of agentic AI projects will be canceled due to unclear business value or insufficient governance.

The gap between pilot and production is where most AI initiatives die. A pilot works in a controlled environment with a small team, clean data, and executive attention. Scaling AI agents to production means hundreds of users, messy workflows, organizational politics, and the need for sustained adoption over months and years.

This guide covers the five areas where enterprise AI deployment breaks down most often — and what actually works to close those gaps, based on patterns observed across real implementations.

If your organization is still evaluating where AI fits, Kanerika’s AI maturity assessment can help identify your starting point before committing to a pilot.

1. The Leadership Alignment Problem

Nearly every discussion of AI adoption treats “executive buy-in” as a checkbox item. In practice, it’s the single biggest predictor of whether an enterprise AI agent deployment succeeds or fails. And yet most guides to AI implementation best practices treat it as a one-time activity rather than an ongoing requirement.

Here’s why it matters more than people think. When an AI consulting team enters an organization and starts mapping workflows, the immediate reaction from operational staff is fear. People assume AI means headcount reduction. Conversations become guarded. Team members downplay how long tasks take or claim their work is too nuanced for automation. This isn’t hypothetical — it’s a pattern that repeats across industries and company sizes.

A 2025 enterprise AI adoption report by Writer found that only 45% of employees believed their organization had successfully adopted AI, compared to 75% of the C-suite. That 30-point perception gap reflects exactly the resistance that stalls real deployments. The same report found that 42% of executives said AI adoption was creating internal tension.

What works in practice:

The deployments that move past this resistance share a common pattern. They start from the top and work down. Before any technology team talks to frontline staff, leadership has already been presented with clear metrics: if 17 people each spend 7 minutes per process and AI can reduce that to 2 minutes, the business case is concrete. Once leadership signs off, the directive flows downward — not as a suggestion, but as a clear mandate for cooperation.
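To make that business case concrete, the arithmetic can be written out as a small sketch. The headcount and per-process minutes come from the example above; the number of runs per person per day is not stated in this article and is a purely illustrative assumption, as are the class and field names.

```python
from dataclasses import dataclass


@dataclass
class ProcessEstimate:
    people: int                    # staff who perform the process
    minutes_before: float          # current handling time per run
    minutes_after: float           # projected handling time with the agent
    runs_per_person_per_day: int   # ASSUMPTION: not given in the article

    def minutes_saved_per_run(self) -> float:
        return self.minutes_before - self.minutes_after

    def hours_saved_per_day(self) -> float:
        total_runs = self.people * self.runs_per_person_per_day
        return total_runs * self.minutes_saved_per_run() / 60


# 17 people, 7 minutes reduced to 2 minutes (from the example above);
# 30 runs per person per day is a hypothetical volume.
estimate = ProcessEstimate(people=17, minutes_before=7, minutes_after=2,
                           runs_per_person_per_day=30)
print(f"Saved per run: {estimate.minutes_saved_per_run():.0f} min")
print(f"Saved per day: {estimate.hours_saved_per_day():.1f} hours")
```

Swapping in measured volumes from discovery turns this into the kind of number leadership can sign off on.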

An important nuance: the technology team should position itself as neutral. Questions about headcount impact, restructuring, or role changes get directed back to business leadership. This keeps the implementation team trusted by the people whose knowledge transfer they depend on. One enterprise deployment team described their approach directly: “We’re a technology team. Our job is to build the application and show you the numbers. What the business decides to do with those numbers is a management decision.” That positioning kept them effective even in politically sensitive environments.

2. The Integration Mistake That Kills Adoption

This is the most expensive lesson organizations learn the hard way, and one of the biggest factors in AI production readiness: standalone AI applications fail.

The pattern looks like this. A team builds an AI agent that consolidates data from multiple systems, runs analysis, and presents recommendations. It works well in demos. So they deploy it as a separate application — maybe a new web dashboard or a standalone tool that users access alongside their existing software.

Adoption drops off a cliff.

People don’t want to leave their primary tool, wait for another page to load, interact with an unfamiliar interface, and then switch back. Even a few seconds of additional friction is enough to make users skip the tool entirely. A 2026 report on AI agent adoption found that 62% of enterprises exploring AI agents lacked a clear starting point, and many that did build solutions struggled with fragmented workflows that didn’t connect to tools people already used.

A real deployment story illustrates this clearly.

A global professional services company built an AI agent for their customer support team. Associates were handling tickets in a cloud-based customer service portal but had to visit 3-4 different systems for each ticket — one for profile data, another for project history, a third for payment records. The AI agent was designed to consolidate all that information and run analysis automatically.

The first version opened in a separate window. Associates clicked a button in their support portal, a new application loaded, and they could see the consolidated data there. Technically, it worked exactly as designed.

Nobody used it.

The rebuild embedded the agent directly within their ecosystem. An API call triggered the agent the moment a ticket was created. By the time an associate opened the ticket, the analysis was already done and displayed in a sidebar — no new tabs, no waiting, no context switching. Adoption went up dramatically.

The same pattern repeated with a compliance screening tool at the same company. The standalone version had low usage. The embedded version — which ran screenings automatically in the background the moment a case was created in Salesforce — saw significantly higher adoption because results were waiting in the analyst’s existing dashboard before they even started working.
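The embedded pattern in both stories (the agent runs when the record is created, and results are waiting when the user opens it) can be sketched roughly as follows. This is a minimal illustration, not the implementation used in the engagement: the fetch functions, the event shape, and the in-memory store are all hypothetical stand-ins for real system integrations.

```python
from typing import Callable, Dict


# Hypothetical stand-ins for the source systems an associate used to visit.
def fetch_profile(member_id: str) -> dict:
    return {"member_id": member_id, "tier": "standard"}


def fetch_project_history(member_id: str) -> dict:
    return {"projects_completed": 12}


def fetch_payment_records(member_id: str) -> dict:
    return {"open_invoices": 1}


SOURCES: Dict[str, Callable[[str], dict]] = {
    "profile": fetch_profile,
    "projects": fetch_project_history,
    "payments": fetch_payment_records,
}

# In-memory store standing in for wherever the sidebar reads results from.
precomputed: Dict[str, dict] = {}


def on_ticket_created(event: dict) -> None:
    """Runs when the support platform reports a new ticket (for example via a webhook)."""
    member_id = event["member_id"]
    summary = {name: fetch(member_id) for name, fetch in SOURCES.items()}
    precomputed[event["ticket_id"]] = summary


def sidebar_payload(ticket_id: str) -> dict:
    """Called when an associate opens the ticket; no fetching happens here."""
    return precomputed.get(ticket_id, {"status": "analysis pending"})


on_ticket_created({"ticket_id": "T-1001", "member_id": "M-42"})
print(sidebar_payload("T-1001"))
```

The design point is that the slow work happens at creation time, so opening the ticket is only a lookup.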

The principle

Every additional click, every new tab, every unfamiliar interface reduces adoption. Build AI into the tools people already use — Zendesk, Salesforce, Microsoft Teams, internal dashboards — rather than asking people to change their workflow to accommodate your technology.

Don’t build standalone applications. Always find a way to plug the AI solution into the existing workflow. When we moved from a separate application to embedding the customer support agent directly inside their platform of choice, adoption increased immediately. People don’t want to leave the tool they’re already in — and they shouldn’t have to.

– Varun Saket, Product Owner, AI Solutions, Kanerika

Transform Your Business with AI-Powered Solutions!

Kanerika builds AI agents that integrate directly into existing enterprise workflows rather than requiring teams to learn new tools.

3. Process Standardization: The Hidden Value of AI Agents

Most discussions about AI agents focus on time savings and automation. But some of the most valuable outcomes happen before the agent even goes live — during the process of codifying business rules.

Here’s what this looks like in practice. Before AI, associates in one enterprise support team handled rate negotiations based on instinct. A member would call asking for a rate increase, and the associate would make a gut-level judgment — “this person sounds serious about leaving, so I’ll approve a higher amount.” No formal rules. No consistency. Different people handling the same situation in completely different ways.

When the AI agent was being built, those business rules had to be made explicit. What’s the maximum rate increase allowed? Under what conditions? What data needs to be checked first? The agent can’t operate on gut feeling — it needs defined parameters.

This codification process forced the organization to look at how work was actually being done versus how it should be done. Leadership discovered that associates were routinely approving rate increases beyond sustainable levels, simply because they felt pressured in the moment. The AI agent enforced actual business rules — it would recommend a rate based on defined criteria, and the associate would approve or override.
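As a rough illustration of what making the rules explicit can look like, the sketch below encodes a rate-increase policy as data plus one function. Every threshold, field name, and condition here is hypothetical; the actual rules used in the deployment are not described in this article.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class RateRule:
    max_increase_pct: float       # hypothetical cap on any single increase
    min_completed_projects: int   # hypothetical eligibility condition
    max_open_invoices: int        # hypothetical eligibility condition


@dataclass
class Recommendation:
    approved_pct: float
    reasons: List[str] = field(default_factory=list)


def recommend_increase(requested_pct: float, completed_projects: int,
                       open_invoices: int, rule: RateRule) -> Recommendation:
    """Return a recommendation; the associate still approves or overrides it."""
    reasons = []
    if completed_projects < rule.min_completed_projects:
        reasons.append("too few completed projects")
    if open_invoices > rule.max_open_invoices:
        reasons.append("too many open invoices")
    if reasons:
        return Recommendation(0.0, reasons)
    approved = min(requested_pct, rule.max_increase_pct)
    if approved < requested_pct:
        reasons.append(f"capped at {rule.max_increase_pct}%")
    return Recommendation(approved, reasons)


rule = RateRule(max_increase_pct=10.0, min_completed_projects=5, max_open_invoices=0)
print(recommend_increase(15.0, completed_projects=8, open_invoices=0, rule=rule))
```

Writing the policy down this way is what exposes the gap between the stated cap and what was being approved in practice.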

PwC’s 2025 survey reinforced this observation: while 79% of organizations reported AI agent adoption, the real challenges were rooted in organizational change — specifically, connecting AI agents across applications and redesigning how work gets done.

The takeaway for organizations moving from pilot to production:

Expect process changes. Building an AI agent requires you to formalize rules that were previously informal. This surfaces inconsistencies, enforces standards, and often delivers value that’s harder to quantify but no less important than the automation itself. Don’t resist it. The process harmonization is a feature, not a side effect.

4. Why Staged Deployment Beats Big-Bang Launches

Every stakeholder wants immediate results. They want to flip a switch and have AI handle everything overnight. Setting realistic expectations upfront is one of the most important things a deployment team can do — and one of the most commonly skipped.

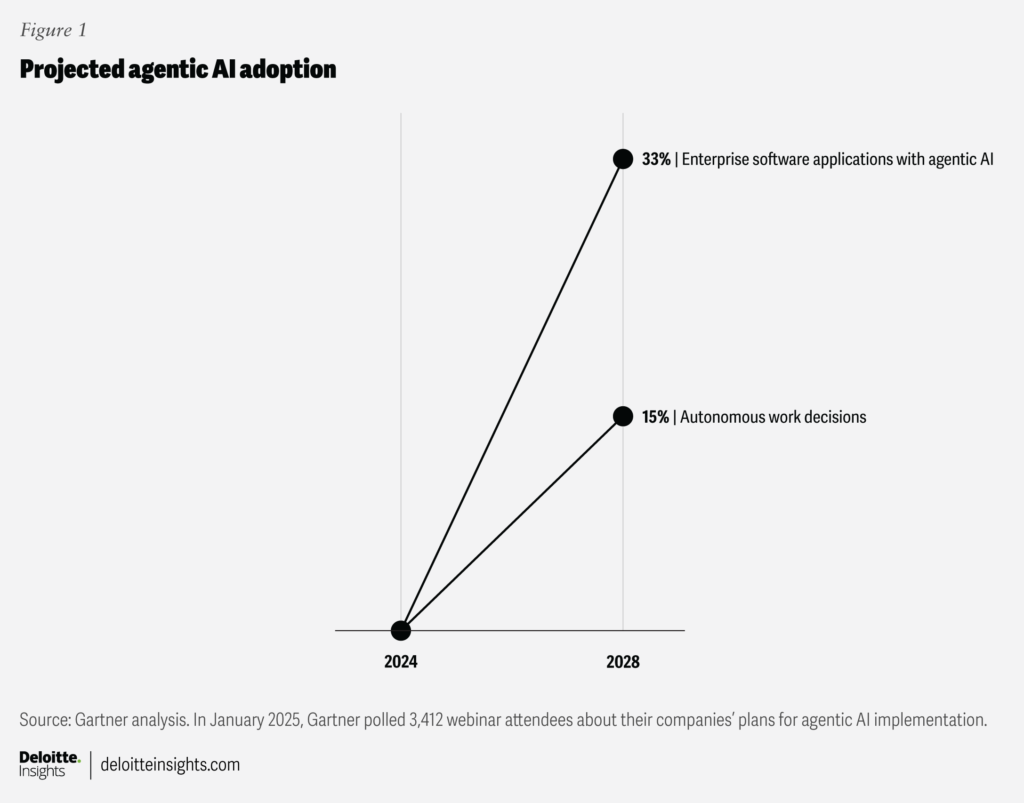

Gartner projects that by 2028, 33% of enterprise software will include agentic AI, up from less than 1% in 2024. But the organizations that get there won’t do it in a single release. They’ll build incrementally.

A phased approach that has proven effective in real deployments follows three stages:

Phase 1 — Assistive

The AI agent gathers and consolidates information from multiple systems into one screen. The human still makes every decision, but instead of spending 10 minutes hunting for data across 3-4 applications, they spend 2 minutes reviewing a summary. At this stage, nobody is being replaced. You’re removing the most tedious, lowest-value part of their job. This is also the easiest phase to get buy-in for because it’s clearly additive.

Phase 2 — Intelligent

The agent analyzes consolidated data against business rules and recommends a decision. The associate reviews and approves or overrides. Instead of reading through payment history and project data to determine a course of action, they see: “Based on these four conditions, the recommended action is X.” Their job shifts from data gathering and analysis to judgment and review.

Phase 3 — Autonomous

For straightforward, rule-based tasks with clear parameters, the agent handles everything end to end. A member asks for their payment status? The agent reads the request, checks the system, composes a response, and sends it. No human involvement. But for sensitive or complex tasks — rate negotiations, escalations, exception handling — a human still reviews.

Not every process reaches full autonomy, and that’s fine. The value of this approach is that each phase delivers measurable ROI. Leadership sees impact incrementally, which builds confidence and justifies continued investment. It also gives the organization time to adapt to new workflows rather than facing a sudden operational shift.
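One way to picture the three phases is as a per-category setting that controls how much the agent is allowed to do. The sketch below assumes a simple lookup table; the category names and their assigned phases are illustrative only, and unknown categories fall back to the most conservative phase.

```python
from enum import Enum


class Phase(Enum):
    ASSISTIVE = 1     # agent consolidates data, human decides
    INTELLIGENT = 2   # agent recommends, human approves or overrides
    AUTONOMOUS = 3    # agent acts end to end


# Hypothetical mapping of ticket categories to their current phase.
CATEGORY_PHASE = {
    "payment_status": Phase.AUTONOMOUS,
    "rate_negotiation": Phase.INTELLIGENT,
    "escalation": Phase.ASSISTIVE,
}


def agent_permissions(category: str) -> dict:
    """Describe what the agent may do for a given ticket category."""
    phase = CATEGORY_PHASE.get(category, Phase.ASSISTIVE)
    return {
        "phase": phase.name,
        "consolidates_data": True,
        "recommends_action": phase in (Phase.INTELLIGENT, Phase.AUTONOMOUS),
        "acts_without_review": phase is Phase.AUTONOMOUS,
    }


for category in ("payment_status", "rate_negotiation", "new_category"):
    print(category, agent_permissions(category))
```

Promoting a category to the next phase then becomes a one-line configuration change, made only once the data supports it.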

One enterprise deployment saw this play out clearly. In phase 1, average handling time per ticket dropped because associates no longer had to visit multiple systems. In phase 2, the time dropped further because the agent was doing the analysis. By the time certain ticket categories were candidates for phase 3 autonomy, the organization had months of data showing the agent’s accuracy and reliability — making the case for full automation straightforward.

5. Targeting the Right Processes First

One of the most common mistakes in AI agent deployment is trying to automate everything at once. Successful deployments start with a focused analysis of where the highest volume and highest impact intersect.

In one enterprise customer support deployment, the team analyzed ticket data across dozens of categories and found that roughly 15 categories accounted for about 60% of total volume. Those became the first automation targets — not because they were the most complex, but because they offered the clearest ROI.
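The volume analysis described above is a cumulative-share calculation: sort categories by ticket count and keep adding them until the target share is covered. A minimal sketch follows, with made-up sample data and a hypothetical function name; the 60% default mirrors the figure mentioned above.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def top_categories(ticket_categories: Iterable[str],
                   target_share: float = 0.60) -> Tuple[List[str], float]:
    """Return the smallest set of top categories covering at least target_share."""
    counts = Counter(ticket_categories)
    total = sum(counts.values())
    if total == 0:
        return [], 0.0
    selected, covered = [], 0
    for category, n in counts.most_common():
        if covered / total >= target_share:
            break
        selected.append(category)
        covered += n
    return selected, covered / total


# Illustrative data only; real input would be one category label per ticket.
tickets = ["billing"] * 40 + ["login"] * 25 + ["nps"] * 20 + ["other"] * 15
targets, share = top_categories(tickets)
print(targets, f"{share:.0%}")
```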

This focus led to some unexpected wins. One category — NPS feedback tickets — was generating hundreds of tickets daily for comments that required zero action. Every time a member wrote any comment on a feedback form, even “the experience was great,” it created a support ticket. A classification engine was built to flag whether each comment was actionable or not. Non-actionable comments (95% of them) were filtered before a ticket was ever created. The result: a 95% reduction in ticket volume for that category alone.
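The filtering step can be sketched as a gate that runs before ticket creation. The article does not describe how the classification engine works, so the keyword check below is only a placeholder for whatever model or rules were actually used; the cue list and function names are hypothetical.

```python
from typing import Iterable, List

# Placeholder cues; a production classifier would likely be a trained model.
ACTION_CUES = ("refund", "complaint", "call me", "not working", "still waiting")


def is_actionable(comment: str) -> bool:
    """Crude stand-in for the classification engine."""
    text = comment.lower()
    return any(cue in text for cue in ACTION_CUES)


def comments_needing_tickets(comments: Iterable[str]) -> List[str]:
    """Only actionable comments go on to become support tickets."""
    return [c for c in comments if is_actionable(c)]


feedback = [
    "The experience was great",
    "Payment still waiting, please call me",
    "Thanks!",
]
print(comments_needing_tickets(feedback))
```

Whatever the classifier, the placement matters: it sits in front of ticket creation, so non-actionable comments never reach an associate's queue.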

Another high-volume target was compliance screening. The manual process took about 20 minutes per person, and the team could only screen about 33% of members before their scheduled engagements. The remaining 67% went unvetted — a compliance and reputational risk. After deploying an AI screening agent (and, critically, embedding it into existing workflows rather than building a standalone tool), coverage went from 33% to 100%, and screening time dropped from 20 minutes to under 5 minutes.

How to identify the right starting points:

Start by mapping your highest-volume processes. For each, measure time spent, number of people involved, number of systems touched, and error rates. Then filter for processes where the rules can be clearly defined — ambiguous, highly subjective processes are poor candidates for early deployment. The best first targets are processes that are high volume, rule-based, and spread across multiple systems. Those will deliver the most visible ROI and build organizational confidence for tackling more complex use cases later.
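The selection steps above can be approximated with a simple filter-then-rank pass. This sketch uses only a subset of the measures listed (volume, time per run, systems touched, and whether rules are clearly defined); the process names, volumes, and the choice to rank by monthly hours are illustrative assumptions rather than a prescribed scoring model.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ProcessProfile:
    name: str
    monthly_volume: int
    minutes_per_run: float
    systems_touched: int
    rules_clearly_defined: bool


def monthly_hours(p: ProcessProfile) -> float:
    return p.monthly_volume * p.minutes_per_run / 60


def rank_candidates(processes: List[ProcessProfile]) -> List[ProcessProfile]:
    """Drop ambiguous processes, then rank by time spent and systems touched."""
    eligible = [p for p in processes if p.rules_clearly_defined]
    return sorted(eligible,
                  key=lambda p: (monthly_hours(p), p.systems_touched),
                  reverse=True)


# Hypothetical inventory; all figures are illustrative.
inventory = [
    ProcessProfile("payment status", 4000, 6, 3, True),
    ProcessProfile("rate negotiation", 800, 15, 4, False),
    ProcessProfile("compliance screening", 1500, 20, 3, True),
]
for p in rank_candidates(inventory):
    print(f"{p.name}: {monthly_hours(p):.0f} hours/month")
```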

For a structured approach to identifying where AI agents can deliver the fastest value, process discovery should come before any technology decisions. This is where many implementations go wrong — they start building before they understand what to build.

What the Data Says About Getting This Right

The organizations that successfully move AI agents from pilot to production share a few characteristics. None of them are about having the best model or the most sophisticated technology.

According to Writer’s 2025 report, enterprises with a formal AI strategy reported 80% success in adoption, compared to just 37% for those without one. The difference isn’t the strategy document itself — it’s the alignment, expectation-setting, and organizational preparation that producing one requires.

Deloitte’s agentic AI analysis noted that many organizations are trying to automate existing processes without rethinking how work should actually be done — and that true value comes from redesigning operations, not just layering agents onto old workflows.

A CIO Dive report quoted one enterprise AI leader saying: “Whatever process you’re trying to automate or make more efficient needs to be a pretty good process. I haven’t seen AI actually fix a lot of bad processes.” This aligns directly with the process standardization pattern described earlier — AI agents work best when they force you to fix the process first, then automate the improved version.

A Quick Summary of What Works

For teams planning or currently executing an AI agent deployment, here’s what the evidence — both from industry research and real enterprise implementations — points to:

Discovery before development. Map processes, gather time-per-task metrics, identify system dependencies. Not every problem needs AI. Some need process fixes. Some need basic automation. AI agents should target high-volume, high-impact processes where the ROI math is clear.

Leadership alignment runs parallel to everything. It’s not a phase you complete and move on from. It’s an ongoing requirement throughout every stage of deployment. Without active executive support, frontline resistance will kill the project.

Embed, don’t isolate. Build AI into existing tools. Every extra click reduces adoption. The best AI agent is one that users don’t have to think about — it’s already there, working inside their existing workflow.

Expect and embrace process change. When you codify rules for an AI agent, you expose how work is actually being done versus how it should be done. That standardization is valuable.

Stage the rollout. Start assistive, move to intelligent, then go autonomous where the risk profile allows it. Each phase should have its own success metrics and deliver measurable value independently.

Why Choose Kanerika

The insights in this guide come from hands-on AI consulting and agent deployment work across global enterprises. Kanerika is a data and AI consulting firm headquartered in Austin, Texas, with operations across the US and India.

A few relevant credentials:

Microsoft Solutions Partner for Data & AI — one of the early implementers of Microsoft Fabric and Copilot, with the Analytics on Microsoft Azure Advanced Specialization and Microsoft Fabric Featured Partner status. This means access to Microsoft co-funded pilots, PoCs, and deployment support for clients.

Certified Databricks Partner — building scalable lakehouse platforms, migrating legacy systems, and implementing governance across enterprise data environments.

Proprietary AI agents in production — Karl (data insights agent, also available as a Microsoft Fabric workload) and DokGPT (document intelligence agent deployable on WhatsApp and Teams) are actively used by enterprise clients across industries.

Industry recognition — named among Forbes’ America’s Best Startup Employers 2025, recognized in Everest Group’s Most Promising Data and AI Specialists, and ISO 27001/27701 certified with SOC II and GDPR compliance.

200+ implementations across healthcare, finance, manufacturing, retail, and professional services — covering AI/ML, data analytics, RPA, data governance, and migration.

The phased deployment model described in this blog (assistive → intelligent → autonomous), the integration-first approach to adoption, and the emphasis on process discovery before technology decisions all come directly from this implementation experience.

FAQs

Why do most AI agent pilots fail to reach production?

The primary causes are organizational, not technical. Most AI implementation best practices focus on technology, but the real blockers are poor leadership alignment, building standalone tools instead of embedding into existing workflows, unrealistic timelines, and skipping process discovery. MIT research estimates that over 80% of enterprise AI projects stall before production.

What's the difference between assistive, intelligent, and autonomous AI agents?

Assistive agents consolidate data from multiple systems into one view — the human still decides and acts. Intelligent agents analyze data against business rules and recommend actions — the human reviews and approves. Autonomous agents handle tasks end to end without human involvement. Most real-world deployments progress through these stages incrementally.

How long does it take to move an AI agent from pilot to production?

It depends on scope, but a phased approach typically delivers first production results within 6-8 weeks for the assistive stage. Moving through all three phases for a single process area usually takes 4-6 months. Organizations that try to skip phases or deploy across too many processes simultaneously tend to take longer and produce worse results.

Should we build AI agents as standalone applications or integrate them into existing tools?

Integrate into existing tools. This is one of the strongest patterns across successful deployments. Standalone applications consistently see lower adoption because they add friction to existing workflows.

How do we identify the right processes to automate first?

Map your highest-volume processes, measure time-per-task and number of systems involved, then filter for rule-based tasks with clear parameters. Avoid highly subjective or ambiguous processes for early deployment. The best first targets deliver visible ROI fast and build organizational confidence for more complex use cases. Kanerika’s consulting approach starts with this discovery phase before recommending any technology decisions.

Does AI implementation require changing our existing processes?

Almost always, yes. Building an AI agent requires codifying business rules that were previously informal or instinct-driven. This surfaces inconsistencies and forces standardization. Organizations that treat this as a benefit rather than a burden get significantly better results from their deployments.

What's the best approach to scale AI agents across an enterprise?

Start with one high-volume, rule-based process. Prove ROI there. Then expand to adjacent processes using the same phased approach (assistive → intelligent → autonomous). Trying to scale across multiple departments simultaneously usually leads to diluted focus and stalled projects. The organizations that scale successfully treat each new process as its own mini-deployment, applying lessons from the previous one.