Legacy Data Migration remains one of the most complex IT challenges facing organizations today, despite years of digital transformation initiatives. According to McKinsey’s 2024 Technology Trends Report, companies spend 60-80% of their IT budgets maintaining outdated systems rather than innovating.

The problem runs deeper than most executives realize. Legacy systems persist because they contain decades of business logic, support critical operations, and integrate with countless other applications. Meanwhile, the hidden costs keep mounting. Organizations lose competitive advantage when data scientists cannot access historical information, compliance teams struggle with fragmented audit trails, and business analysts work with incomplete datasets.

But here’s what makes migration so challenging: it’s never just about moving old data. Each legacy system represents years of customizations, unique business rules, and institutional knowledge that must be preserved during transition. For regulated industries like healthcare and finance, the stakes become even higher with strict compliance requirements and zero tolerance for data loss.

Key Learnings

- Legacy data migration is a modernization initiative, not a data move

Successful enterprises treat legacy migration as a transformation effort that unlocks agility, analytics, and long-term scalability rather than simply relocating old data.

- Automation is essential for scaling legacy migrations

Manual approaches do not scale for large, complex legacy environments; automation ensures consistency, speed, and accuracy while preserving business logic.

- Legacy systems hide critical business logic and risk

Decades of embedded rules, undocumented dependencies, and manual workarounds make legacy migrations high-risk without structured discovery and validation.

- Data quality issues amplify during legacy migration

Historical inconsistencies, duplicates, and broken relationships surface quickly in modern platforms, making early profiling and cleansing essential.

- Governance and compliance must be built in from the start

Audit trails, lineage, and data ownership are critical when migrating legacy systems, especially in regulated industries.

What Is Legacy Data and Why It’s Hard to Migrate?

Legacy data is more than old information: it is critical business data trapped in outdated systems built on obsolete technologies. Understanding these complexities helps organizations plan realistic migration strategies.

1. Defining Legacy Data Beyond Age

Legacy systems are not merely old; they are systems whose replacement poses significant business risk. Many have run core functions for decades. Legacy data is often stored in formats that are no longer supported, produced by programs written in languages that are no longer in common use, or held on hardware its manufacturer no longer supports. The information remains critical even when the technology around it is obsolete.

2. Common Legacy Platforms

Many banking, insurance, and government systems still run on mainframe platforms such as IBM z/OS, a lineage that goes back more than 50 years. Decades of business data sit in on-premises Oracle, SQL Server, or DB2 databases. Proprietary formats from defunct vendors lock data away in files that modern tools cannot read. Custom-built internal systems often lack documentation because the original developers have retired.

3. Embedded Business Logic

Business rules are embedded in old code rather than documented. Interest calculations, pricing algorithms, and approval workflows live in COBOL programs that few people on staff can still read. Data validation logic implemented inside applications also has no direct equivalent on modern platforms. Extracting this hidden knowledge before migration is critical but extremely difficult.

4. Operational and Regulatory Risks

Legacy data risks multiply during migration. Older systems often lack the audit trails regulators now require, and compliance documentation has been lost over the decades. Quality problems accumulated undetected in systems that no one dared to touch. Operational dependencies are frequently undocumented, so breaking an unknown relationship can cause production failures that hit revenue and customer service.

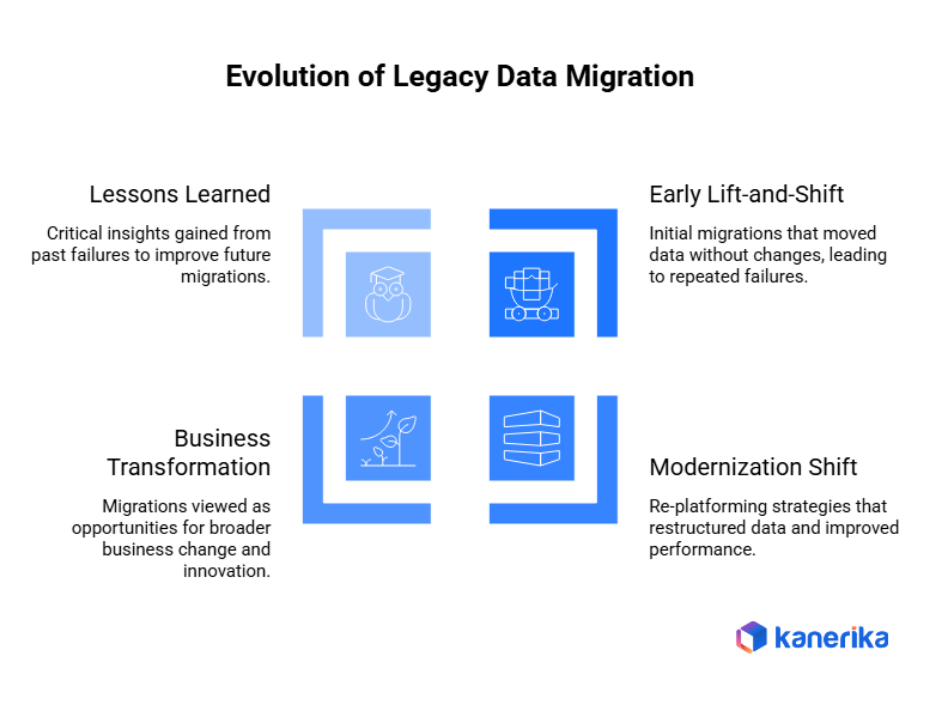

Evolution of Legacy Data Migration Approaches

Legacy migration strategies evolved significantly as organizations learned from expensive failures. Understanding this evolution helps avoid repeating past mistakes.

1. Early Lift-and-Shift Attempts

Initial lift-and-shift migrations simply moved legacy data to modern platforms without changes. Organizations hoped new infrastructure would magically improve performance. However, this approach failed repeatedly. Additionally, migrating bad data faster didn’t solve underlying problems. Legacy data quality issues, inefficient structures, and embedded business logic complications all transferred unchanged. Moreover, new platforms couldn’t optimize poorly designed legacy schemas.

2. Shift Toward Modernization

Organizations recognized migration required data modernization, not just relocation. Re-platforming strategies restructured data while moving it. Teams normalized databases, standardized formats, and cleaned quality issues during transfer. Additionally, business logic got extracted from code into configurable rules. This approach took longer but delivered actual improvements rather than perpetuating legacy problems on expensive new infrastructure.

3. Migration as Business Transformation

Enterprises today treat legacy migration as a transformation opportunity. Migrations align with business strategy, such as integrating acquisitions, enabling digital initiatives, or supporting new business models. Transformation also covers process, organizational, and capability development beyond the technical migration itself. Data movement becomes one component of broader modernization programs.

4. Lessons from Past Failures

Failed migrations taught critical lessons. Underestimating complexity causes timeline disasters. Ignoring data quality creates post-migration chaos. Additionally, insufficient business involvement produces technically successful but operationally useless systems. Furthermore, big-bang cutovers risk catastrophic failures. Organizations now adopt phased approaches, invest in quality upfront, and maintain business continuity throughout migrations.

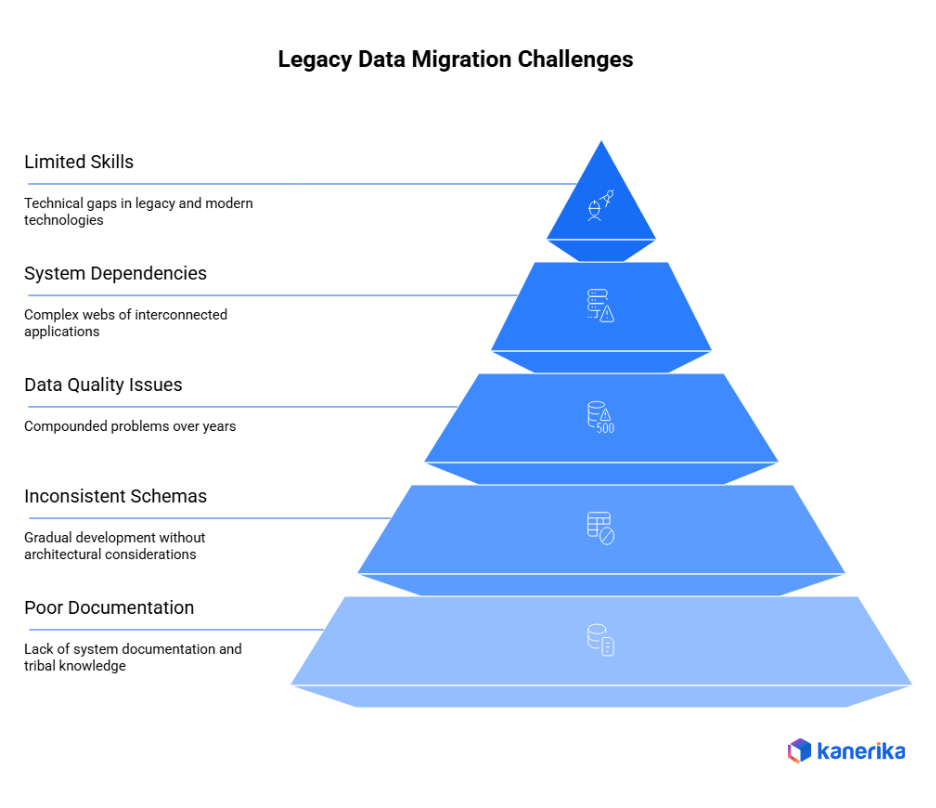

Core Challenges in Legacy Data Migration

Migrating legacy data presents distinct hurdles that demand specialized skills and careful planning. Understanding these challenges helps organizations plan realistically.

1. Poor Documentation and Tribal Knowledge

Legacy system documentation either doesn’t exist or became outdated decades ago. Original developers retired, taking critical knowledge with them. Additionally, tribal knowledge resides in the heads of a few remaining employees rather than in documented processes. System behaviors rest on unwritten rules whose rationale no one remembers. These latent requirements tend to surface only when a migration fails unexpectedly.

2. Inconsistent Schemas and Embedded Business Rules

Database schemas grew over decades without architectural oversight. Tables contain duplicate rows and redundant columns, column names are inconsistent, and relationships between tables are obscure. Additionally, business logic lives embedded within application code rather than databases. COBOL programs contain pricing rules, validation logic, and calculation methods that are nearly impossible to extract without reverse engineering. Furthermore, different systems implement identical business rules differently, creating conflicts during consolidation.

3. Accumulated Data Quality Issues

Legacy data quality problems compound over years. Duplicate records multiply as systems age. Missing values increase as validation rules weaken. Additionally, inconsistent formats proliferate when departments use the same systems in different ways. Old data contains codes referencing discontinued products, deleted customers, or obsolete business units. Moreover, teams avoid cleaning up quality issues for fear of disrupting production systems.

4. High System Dependencies

Legacy system dependencies create complex webs that are difficult to untangle. Applications connect through undocumented interfaces. Batch jobs depend on specific data formats. Additionally, downstream systems expect legacy data structures exactly as they are. Breaking one dependency can cause cascading failures across enterprise operations. Dependencies also tend to cross organizational boundaries, which makes coordination difficult.

5. Limited Modernization Skills

Technical skills gaps slow legacy migrations significantly. Few developers understand mainframe technologies like COBOL, JCL, or CICS. Additionally, modern cloud architects lack experience with legacy platforms. Organizations struggle finding talent bridging both worlds. Moreover, training takes months while migration timelines demand immediate expertise creating resource bottlenecks throughout projects.

Strategic Decisions That Shape Legacy Migration Outcomes

Legacy migration decisions made early determine project success or failure. These critical choices affect timelines, budgets, and final system quality significantly.

1. What Data Should Be Migrated vs Retired

Not all legacy data deserves migration. Data retirement decisions reduce project scope and costs substantially. Should you move 20-year-old customer records nobody accesses? Archive completed transactions beyond regulatory requirements instead of migrating them. Additionally, test data, temporary records, and obsolete product information waste migration resources. Smart organizations analyze data usage patterns before deciding what moves forward versus what gets archived or deleted permanently.
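The migrate-versus-retire decision can be expressed as a simple rule over usage and retention data. The following is a minimal sketch, not a prescribed policy: the function name `classify_record`, the three-year "active" window, and the seven-year retention default are all assumptions chosen for illustration, and real thresholds would come from your own legal and business requirements.

```python
from datetime import date
from typing import Optional


def classify_record(
    last_accessed: date,
    closed_on: Optional[date],
    today: date,
    retention_years: int = 7,       # assumed retention period, not a legal rule
    active_window_years: int = 3,   # assumed definition of "still in use"
) -> str:
    """Return 'migrate', 'archive', or 'retire' for a single record."""

    def years_between(earlier: date, later: date) -> float:
        return (later - earlier).days / 365.25

    # Recently used data moves to the new platform.
    if years_between(last_accessed, today) <= active_window_years:
        return "migrate"
    # Unused data that is still open, or still inside its retention
    # period, is kept in cheaper archive storage.
    if closed_on is None or years_between(closed_on, today) <= retention_years:
        return "archive"
    # Unused, closed, and past retention: candidate for deletion.
    return "retire"
```

Running a rule like this over access logs before scoping the project gives a first estimate of how much data actually needs to move.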

2. Migrating Logic vs Redesigning Logic

Legacy systems contain decades of business logic embedded in code. Should you replicate this logic exactly or redesign it? Additionally, exact replication preserves proven business rules but perpetuates inefficiencies. Redesigning improves processes but risks introducing errors. Moreover, some logic represents competitive advantages worth preserving while other rules became obsolete years ago. This choice dramatically impacts migration complexity and timeline.

3. Phased vs Parallel Migration Approaches

Phased migration moves data incrementally with validation between stages, reducing risk but extending timelines. Conversely, parallel migration runs old and new systems simultaneously until confidence builds, maintaining business continuity but temporarily doubling operational costs. Big-bang approaches cut over completely in a single step, which makes them the fastest but riskiest option. Your choice depends on acceptable downtime, budget constraints, and risk tolerance.

4. Impact on Cost, Risk, and Quality

Early strategic choices cascade throughout projects. Retiring unnecessary data saves millions in transfer and storage costs. Additionally, redesigning logic extends timelines but improves long-term maintainability. Parallel approaches cost more initially but reduce cutover risks substantially. Furthermore, rushed decisions create technical debt requiring expensive fixes later.

5. Technology Platform Selection

Choosing target technology platforms shapes entire migration strategies. Moving to cloud-native databases versus traditional on-premises systems requires different approaches. Additionally, selecting modern data lakes versus conventional warehouses affects architecture design fundamentally. Platform decisions determine which tools work, what skills teams need, and how data gets structured. Moreover, wrong platform choices discovered mid-project cause expensive rework and extended delays.

Data Quality Risks Unique to Legacy Migrations

Legacy data quality issues create serious problems during migration that don’t exist with newer systems. Understanding these unique risks helps organizations prepare appropriate solutions.

1. Duplicate Records and Outdated Reference Data

Legacy systems accumulate duplicates over decades without proper master data management. The same customer appears five times with slight name variations. Additionally, outdated reference data references products discontinued years ago, employees who retired, or organizational units that merged. Old systems tolerate these problems through workarounds. New systems reject invalid references causing migration failures.
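A common first pass at finding such duplicates is to group records by a normalized key. The sketch below assumes records are dictionaries with hypothetical `id`, `name`, and `postcode` fields, and the list of company suffixes is illustrative only. It is a blocking heuristic that produces candidate groups for review, not a full entity-resolution method.

```python
import re
import unicodedata
from collections import defaultdict

# Illustrative list of suffixes to ignore when comparing names.
COMPANY_SUFFIXES = {"inc", "ltd", "llc", "corp", "co"}


def normalize_name(name: str) -> str:
    """Lowercase, strip accents and punctuation, drop suffixes, sort tokens."""
    text = unicodedata.normalize("NFKD", name)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9 ]", " ", text.lower())
    tokens = [t for t in text.split() if t not in COMPANY_SUFFIXES]
    return " ".join(sorted(tokens))


def find_duplicate_groups(records):
    """Group record ids that share a normalized name and postcode."""
    groups = defaultdict(list)
    for rec in records:
        postcode = rec["postcode"].replace(" ", "").upper()
        groups[(normalize_name(rec["name"]), postcode)].append(rec["id"])
    return [ids for ids in groups.values() if len(ids) > 1]


customers = [
    {"id": 1, "name": "Acme Corp.", "postcode": "10001"},
    {"id": 2, "name": "ACME corp", "postcode": "10001"},
    {"id": 3, "name": "Acme, Inc", "postcode": "10001"},
    {"id": 4, "name": "Zenith Ltd", "postcode": "94105"},
]
print(find_duplicate_groups(customers))  # [[1, 2, 3]]
```

Candidate groups still need a human or a stricter matching rule to confirm them before records are merged.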

2. Broken Relationships and Orphan Records

Data relationships break in legacy systems creating orphan records pointing nowhere. Orders reference customers that don’t exist anymore. Transactions link to deleted accounts. Additionally, foreign key relationships lack proper enforcement in old databases. Parent-child connections get severed over time. These broken links hide in legacy systems but surface immediately during migration validation.
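Orphan records can be listed before migration with an anti-join between child and parent tables. This is a minimal in-memory sketch; the column names `customer_id` and `id` are assumptions, and on a real database the same check would usually be a `LEFT JOIN ... WHERE parent.id IS NULL` query.

```python
def find_orphans(child_rows, parent_rows, fk="customer_id", pk="id"):
    """Return child rows whose foreign key points at no existing parent.

    Rows with a null foreign key are skipped: they reference nothing,
    which is a different problem from referencing a missing parent.
    """
    parent_keys = {row[pk] for row in parent_rows}
    return [
        row
        for row in child_rows
        if row.get(fk) is not None and row[fk] not in parent_keys
    ]


customers = [{"id": 1}, {"id": 2}]
orders = [
    {"order_id": "A", "customer_id": 1},
    {"order_id": "B", "customer_id": 7},     # customer 7 was deleted
    {"order_id": "C", "customer_id": None},  # never linked
]
print(find_orphans(orders, customers))
# [{'order_id': 'B', 'customer_id': 7}]
```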

3. Impact on Modern Use Cases

Poor legacy data quality destroys reporting accuracy after migration. Analytics dashboards show wrong totals because duplicate records get counted multiple times. Additionally, AI and machine learning models fail when trained on dirty data containing inconsistent patterns. Business intelligence tools can’t reconcile conflicting information. Data-driven decisions become unreliable when built on flawed foundations.

4. Why Quality Issues Surface Post-Migration

Legacy quality problems remain hidden because old systems accommodate them through custom code and manual workarounds. Users knew which data to ignore. Additionally, validation rules weakened over time as business requirements changed. Modern platforms enforce stricter standards rejecting poor-quality data. Automated processes lack human judgment compensating for errors. Therefore, problems tolerated for years suddenly become critical blockers.

5. Inconsistent Data Formats Across Systems

Format inconsistencies multiply in legacy environments where different departments used systems independently. One system stores dates as MM/DD/YYYY while another uses DD-MM-YYYY. Phone numbers appear with dashes, spaces, or continuously. Additionally, measurement units mix as some fields use pounds, others kilograms. Currency codes vary across international operations. These format variations complicate transformation logic and validation rules during migration.
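The date example shows why format rules have to be declared per source system rather than guessed: a value such as 03/04/2021 is valid under both conventions. The sketch below assumes two hypothetical source systems, `billing` and `crm`, and normalizes their dates to ISO 8601 and their phone numbers to bare digits.

```python
import string
from datetime import datetime

# Assumed mapping from source system to its date convention.
SOURCE_DATE_FORMATS = {
    "billing": "%m/%d/%Y",  # MM/DD/YYYY
    "crm": "%d-%m-%Y",      # DD-MM-YYYY
}


def to_iso_date(raw: str, source_system: str) -> str:
    """Parse a date using the declared format of its source system."""
    fmt = SOURCE_DATE_FORMATS[source_system]
    # strptime raises ValueError on values that do not fit the format,
    # which surfaces bad data instead of silently misreading it.
    return datetime.strptime(raw.strip(), fmt).date().isoformat()


def normalize_phone(raw: str) -> str:
    """Keep only ASCII digits, dropping dashes, spaces, and brackets."""
    return "".join(ch for ch in raw if ch in string.digits)


print(to_iso_date("03/04/2021", "billing"))  # 2021-03-04
print(to_iso_date("03-04-2021", "crm"))      # 2021-04-03
print(normalize_phone("555-123 4567"))       # 5551234567
```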

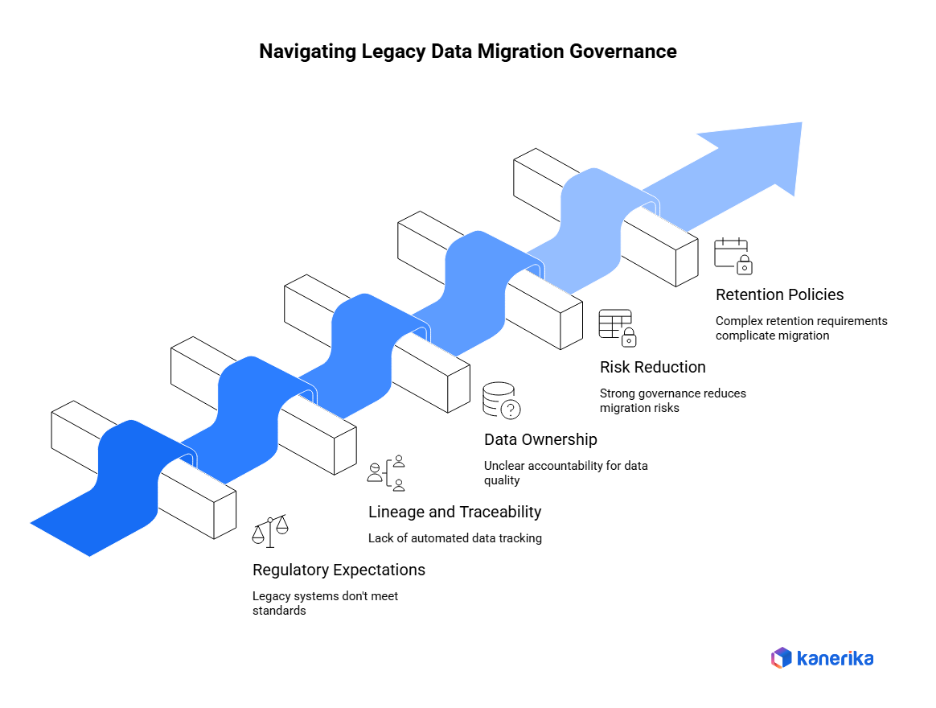

Governance and Compliance in Legacy Data Migration

Legacy migration governance becomes critical when moving decades of regulated data between systems. Poor governance creates compliance violations and operational chaos.

1. Regulatory Expectations

Legacy data often contains information subject to strict regulations. GDPR requires tracking personal data location and usage. SOX demands financial data accuracy and audit trails. Additionally, HIPAA protects healthcare records throughout their lifecycle. PCI-DSS governs payment information handling. Legacy systems built before these regulations may not meet current standards. Migration is therefore an opportunity to implement proper controls while transferring data.

2. Lineage, Audit Trails, and Traceability

Regulators demand complete data lineage showing how information moved and transformed. Audit trails must document who accessed data, when changes occurred, and why modifications happened. Additionally, traceability requirements call for proof of data integrity throughout migration. Legacy systems often lack these capabilities. Modern platforms must capture comprehensive lineage automatically. Furthermore, audit evidence should be generated without manual effort.
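One way to generate that evidence automatically is to emit a small lineage event every time a record is transformed. The sketch below is an assumption about what such an event might contain, not a regulatory schema: the `LineageEvent` fields and the `record_lineage` helper are hypothetical, and a real pipeline would write to an append-only store rather than a Python list.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class LineageEvent:
    record_key: str
    source_system: str
    target_table: str
    transformation: str  # what was done, and why
    actor: str           # job or user that made the change
    source_hash: str     # fingerprint of the record before the change
    target_hash: str     # fingerprint of the record after the change
    occurred_at: str     # UTC timestamp, ISO 8601


def payload_hash(payload: dict) -> str:
    """Stable SHA-256 fingerprint of a record's content."""
    canonical = json.dumps(payload, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def record_lineage(log, record_key, source_system, target_table,
                   transformation, actor, before, after):
    """Append one JSON lineage event to the log and return it."""
    event = LineageEvent(
        record_key=record_key,
        source_system=source_system,
        target_table=target_table,
        transformation=transformation,
        actor=actor,
        source_hash=payload_hash(before),
        target_hash=payload_hash(after),
        occurred_at=datetime.now(timezone.utc).isoformat(),
    )
    log.append(json.dumps(asdict(event)))
    return event
```

Storing hashes rather than full payloads keeps personal data out of the audit log while still letting an auditor verify that a given source record produced a given target record.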

3. Data Ownership Challenges

Legacy environments rarely have clear data ownership. Nobody knows who’s accountable for customer data quality or transaction accuracy. Departments blame each other when problems surface. Additionally, organizational changes over decades obscured original ownership assignments. Migration forces establishing explicit ownership. Business units must claim responsibility for their data domains before migration proceeds.

4. Governance as Risk Reduction

Strong governance frameworks reduce migration risks substantially. Clear policies prevent unauthorized data access during transfers. Quality standards catch errors before production deployment. Additionally, defined approval workflows ensure proper review happens. Change control processes document all decisions. Furthermore, governance provides structure for managing complex legacy migrations systematically.

5. Retention and Archival Policies

Data retention requirements complicate legacy migrations significantly. Different data types have different retention periods: financial records may need to be kept for seven years, medical records for decades longer. Additionally, legacy systems often store data with different retention requirements side by side. Modern platforms need proper archival strategies to separate active data from historical records. Organizations must comply with both minimum retention periods and maximum deletion deadlines at the same time.

Modern Target Platforms for Legacy Data

Legacy data modernization moves information from outdated systems to platforms supporting current business needs. Understanding target options helps organizations choose appropriate destinations.

1. Cloud Data Warehouses and Lakehouses

Cloud warehouses like Snowflake, Azure Synapse, and Google BigQuery provide scalable analytics platforms replacing legacy databases. They handle massive data volumes efficiently. Additionally, lakehouse platforms including Databricks and Microsoft Fabric combine data lake flexibility with warehouse performance. These modern solutions eliminate infrastructure management headaches plaguing legacy systems. Organizations gain automatic scaling, better disaster recovery, and lower operational costs.

2. BI and Analytics Modernization

Modern BI tools such as Power BI, Tableau, and Looker are replacing older reporting systems such as Cognos and Crystal Reports. These tools offer self-service features that the legacy systems could not. Interactive dashboards replace static reports, and real-time data visualization lets businesses make decisions faster. In addition, mobile access lets executives track operations from any location.

3. Supporting Advanced Use Cases

Modern platforms enable real-time analytics on streaming data, something batch-oriented legacy systems cannot do. AI and machine learning also need clean, integrated data, which these platforms are designed to deliver. Fraud detection, predictive models, and personalization engines all rely on current infrastructure. Legacy systems simply cannot support the advanced capabilities that businesses now demand.

4. Platform Choice Impact

Different target platforms create varying migration complexity. Moving to similar relational databases proves simpler than switching to completely different architectures. Additionally, cloud platforms require rethinking security and access patterns. Data lakes need new governance approaches. Furthermore, discovering mid-project that the wrong platform was chosen causes expensive rework.

5. Integration and Ecosystem Considerations

New platforms have to integrate well with existing enterprise systems, so APIs, connectors, and data exchange protocols matter. Additionally, vendor ecosystem strength affects long-term success, because strong communities provide better support and third-party tools. Organizations benefit from platforms offering robust partner networks. Furthermore, interoperability standards prevent future lock-in when business needs evolve again.

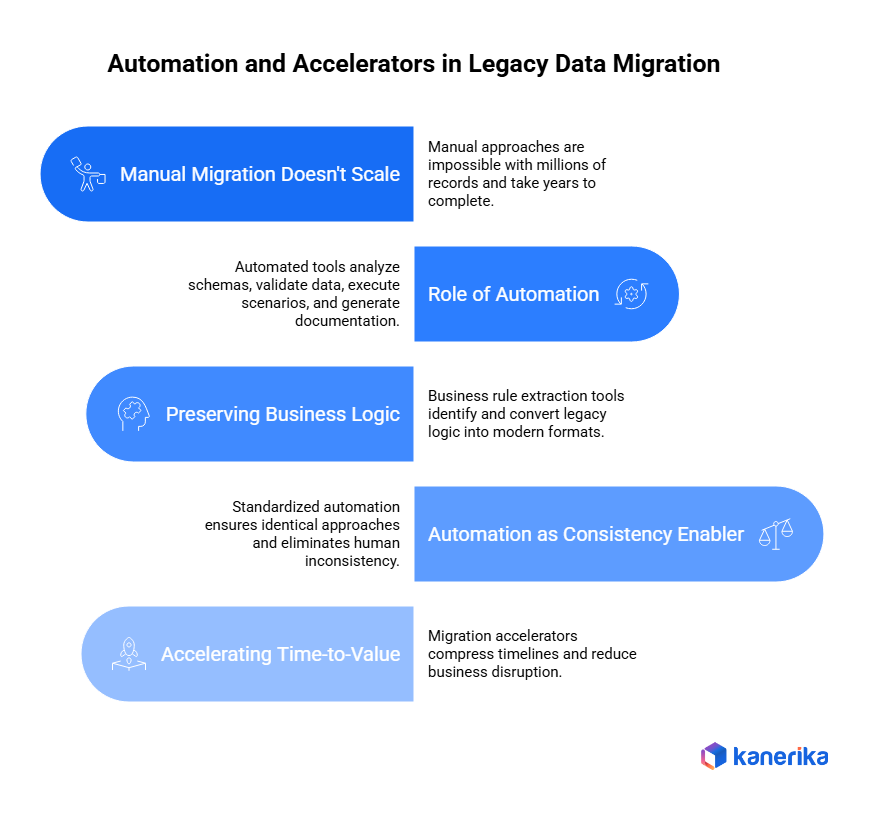

Automation and Accelerators in Legacy Data Migration

Migration automation transforms how organizations handle complex legacy data transfers. Manual approaches simply cannot handle the scale and complexity modern migrations require.

1. Why Manual Migration Doesn’t Scale

Manual legacy migration becomes impossible with millions of records across dozens of systems. Hand-coding transformations for thousands of tables takes years. Additionally, manual validation misses errors human reviewers cannot catch in massive datasets. Testing every scenario manually extends timelines unreasonably. Furthermore, documentation created manually becomes outdated immediately as requirements change continuously.

2. Role of Automation

Automated mapping tools analyze legacy schemas and suggest target structures reducing weeks of manual analysis to hours. Additionally, validation automation compares source and target data continuously catching discrepancies immediately. Testing frameworks execute thousands of scenarios automatically that manual teams never attempt. Furthermore, automated documentation generation maintains current records without manual effort.
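Source-to-target validation is often implemented by fingerprinting each row on both sides and comparing the results by key. The following is a minimal in-memory sketch under stated assumptions: the helper names and column lists are hypothetical, values are compared after converting to stripped strings, and at real volumes the hashing would run inside each database rather than in Python.

```python
import hashlib


def row_fingerprint(row: dict, columns: list) -> str:
    """Hash the chosen columns of a row in a fixed order.

    Note: None and the empty string hash identically here, which is a
    deliberate simplification for this sketch.
    """
    canonical = "\x1f".join(
        "" if row[c] is None else str(row[c]).strip() for c in columns
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare_tables(source_rows, target_rows, key, columns):
    """Report keys that are missing, unexpected, or changed in the target."""
    src = {r[key]: row_fingerprint(r, columns) for r in source_rows}
    tgt = {r[key]: row_fingerprint(r, columns) for r in target_rows}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(
            k for k in src.keys() & tgt.keys() if src[k] != tgt[k]
        ),
    }


source = [
    {"id": 1, "name": "Ann ", "amount": 10},
    {"id": 2, "name": "Bob", "amount": 20},
    {"id": 3, "name": "Cy", "amount": 30},
]
target = [
    {"id": 1, "name": "Ann", "amount": 10},
    {"id": 2, "name": "Bob", "amount": 25},
    {"id": 4, "name": "Di", "amount": 40},
]
print(compare_tables(source, target, key="id", columns=["name", "amount"]))
# {'missing_in_target': [3], 'unexpected_in_target': [4], 'mismatched': [2]}
```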

3. Preserving Business Logic

Business rule extraction tools identify logic embedded in legacy code automatically. Automated analysis discovers calculations, validations, and workflows hidden in COBOL programs. Additionally, these tools convert legacy logic into modern formats without manual rewriting. This preservation maintains proven business processes while reducing migration effort substantially.

4. Automation as Consistency Enabler

Standardized automation ensures identical approaches across all migration workstreams. Manual teams introduce variations based on individual preferences. Additionally, automated processes execute identically every time eliminating human inconsistency. Quality standards apply uniformly rather than varying by team. Furthermore, repeatable automation enables continuous improvement—lessons learned enhance future migrations automatically.

5. Accelerating Time-to-Value

Migration accelerators compress timelines from years to months through pre-built templates and reusable components. Organizations don’t rebuild common patterns repeatedly. Additionally, accelerators include proven transformation logic for typical legacy scenarios. Quick wins early in projects build stakeholder confidence. Furthermore, faster migrations reduce business disruption and opportunity costs significantly.

Managing Business Continuity During Legacy Migration

Migration business continuity ensures that operations do not suffer while systems move to new platforms. Interruptions to essential systems can cost millions and damage customer confidence.

1. Avoiding Downtime in Mission-Critical Systems

Zero-downtime migration keeps the business operating during the transition. Revenue-generating processes running on legacy systems cannot be halted for long periods, and customer-facing services must remain available at all times.

Organizations use techniques such as parallel processing, incremental cutover, and data synchronization to keep operating while migration is under way. Where temporary disruption is unavoidable, schedule the cutover for low-traffic periods to limit its impact.

2. Parallel Run and Reconciliation Strategies

Parallel running operates old and new systems simultaneously until confidence builds. Both platforms process identical transactions allowing comparison. Additionally, reconciliation processes verify results match between systems continuously. Discrepancies get investigated immediately rather than discovered after legacy shutdown. This approach catches problems before they affect customers.
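During a parallel run, reconciliation can start with a comparison of the figures each system produces for the same business key. The sketch below assumes both systems can export end-of-day balances per account as `Decimal` values; the function name and the optional tolerance are illustrative.

```python
from decimal import Decimal


def reconcile_balances(legacy: dict, modern: dict,
                       tolerance: Decimal = Decimal("0.00")):
    """Return (account, legacy_value, modern_value) for every discrepancy.

    An account present in only one system is reported with None on the
    other side.
    """
    discrepancies = []
    for account in sorted(legacy.keys() | modern.keys()):
        old, new = legacy.get(account), modern.get(account)
        if old is None or new is None or abs(old - new) > tolerance:
            discrepancies.append((account, old, new))
    return discrepancies


legacy_balances = {"A": Decimal("100.00"), "B": Decimal("50.10")}
modern_balances = {"A": Decimal("100.00"), "B": Decimal("50.11"),
                   "C": Decimal("1.00")}
for item in reconcile_balances(legacy_balances, modern_balances):
    print(item)
# ('B', Decimal('50.10'), Decimal('50.11'))
# ('C', None, Decimal('1.00'))
```

`Decimal` is used instead of `float` so that one-cent differences are detected exactly rather than masked or invented by binary rounding.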

3. Rollback and Recovery Planning

Every migration needs tested rollback procedures reverting to legacy systems if critical issues arise. Document exact steps, assign responsibilities, and practice rollbacks during testing. Additionally, maintain legacy system capability throughout transition periods. Quick recovery options prevent minor problems becoming disasters. Furthermore, backup strategies ensure data protection if rollbacks require restoring earlier states.

4. Maintaining Stakeholder Confidence

Open communication keeps executives, users, and customers informed throughout a migration. Regular status updates build trust. Address concerns proactively so that minor problems do not cause panic. Clear escalation paths resolve issues quickly and preserve confidence when things go wrong.

Measuring Success in Legacy Data Migration

Can you be sure your legacy data migration delivered anything more than a transfer of files between systems? Many organizations celebrate once the data transfer is complete, yet true success lies in measurable business and operational improvement.

1. Quality and Adoption Matter Most

Success starts with data quality metrics like accuracy rates, completeness scores, and validation results. But equally important are adoption metrics showing how quickly users embrace new systems. High-quality data means nothing if teams still rely on old spreadsheets or shadow systems.

2. Performance and Risk Improvements

A successful legacy data migration should deliver measurable performance improvements. Look for faster query response, quicker report generation, and less system downtime. Also track operational risk reduction: improved backup processes, stronger security controls, and the removal of single points of failure.

3. Long-Term Modernization Benefits

The biggest wins come over the long term. Successful migrations can lower maintenance costs by 30-50 percent and enable new capabilities such as real-time analytics, automated reporting, and advanced data science projects. Compliance also improves through better audit trails and standardized data governance practices.

The Bottom Line

Legacy data migration success extends far beyond technical completion. Organizations that measure quality, adoption, performance, and long-term benefits create sustainable competitive advantages through modern data infrastructure.

Future of Legacy Data Migration

Legacy data migration is no longer a one-time project. It is becoming an ongoing modernization process that adapts as business requirements shift.

1. Continuous Modernization Replaces Big Bang Projects

Mature organizations now view migration as a continuous process rather than a single large project. This ongoing modernization strategy limits risk, keeps the business running, and lets firms upgrade systems gradually as technology changes.

2. AI Changes Everything

AI-assisted discovery and validation are transforming how companies manage legacy systems. With minimal human intervention, machine learning algorithms can detect data relationships, predict migration issues, and validate results. The technology can reduce migration time by as much as 60 percent while improving accuracy.

3. Legacy Data Becomes Strategic Asset

Companies increasingly value legacy data as a source for analytics and AI programs. Historical information that once seemed outdated plays a critical role in machine learning models, predictive analytics, and business intelligence applications.

4. Migration as Core Enterprise Capability

Forward-looking firms are building repeatable migration capabilities rather than treating each project as a one-off. Automated tools, standardized processes, and dedicated teams make future migrations quicker, cheaper, and more dependable, and they support broader digital transformation plans.

Kanerika: Proven Data Migration Excellence

When evaluating partners for your data migration project, Kanerika brings the capabilities and track record enterprises need for successful cloud transitions.

Technical Foundation and Partnerships

Kanerika holds strategic partnerships with major cloud and data platform providers. We are a Microsoft Solutions Partner (Data & AI), a Databricks Implementation Partner, and a Snowflake Consulting Partner.

These partnerships reflect deep technical expertise across leading platforms. Kanerika’s certified professionals maintain current knowledge of platform capabilities, so migrations take advantage of the latest features and optimization techniques.

FLIP Migration Accelerators: Automation at Scale

Kanerika’s proprietary FLIP (Fast, Low-cost, Intelligent, Programmatic) accelerators address common migration challenges through intelligent automation. These tools are available on Azure Marketplace, so organizations can start migration projects quickly with free trial options.

Business Intelligence & Reporting Migrations

The Crystal Reports to Power BI Accelerator automates legacy report migration, delivering 5X faster insights compared to manual conversion. Organizations eliminate outdated reporting infrastructure while maintaining business continuity. This accelerator handles complex report logic, data connections, and visualization transformations automatically.

The Tableau to Microsoft Fabric/Power BI Accelerator reduces migration time by 84% while helping organizations escape rising Tableau licensing costs. The accelerator converts dashboards, data sources, and calculations to Power BI, enabling seamless transitions to Microsoft’s unified analytics platform.

The Cognos to Power BI Accelerator modernizes outdated BI infrastructure by automating the conversion of Cognos reports and dashboards to Power BI. Organizations gain modern analytics capabilities while reducing maintenance overhead.

Data Integration & ETL Migrations

The Informatica to Databricks/Fabric/Talend Accelerator automates workflow conversion from Informatica to modern platforms, reducing manual effort by 50-60%. Organizations slash ETL licensing expenses while becoming AI-ready on platforms like Databricks. This accelerator handles complex transformation logic, job dependencies, and scheduling requirements while optimizing for target platform architecture.

The Azure to Microsoft Fabric Accelerator streamlines migration from various Azure data services to Microsoft Fabric’s unified platform, reducing pipeline maintenance efforts by 50%. Organizations consolidating their analytics ecosystem use this accelerator to simplify data architecture and reduce complexity.

The SSIS/SSAS to Microsoft Fabric Accelerator completes migrations in days rather than weeks. This accelerator converts SQL Server Integration Services packages and Analysis Services models to cloud-native Microsoft Fabric components, maintaining business logic while modernizing infrastructure.

Data Warehouse & Analytics Migrations

The SQL Services to Fabric Accelerator eliminates on-premises costs while boosting overall performance. Organizations migrating SQL Server databases to Microsoft Fabric benefit from automated schema conversion, data transfer, and optimization for cloud-native capabilities.

Process Automation Migrations

The UiPath to Power Automate Accelerator reduces RPA licensing costs by up to 75% while leveraging existing Microsoft licenses. Organizations gain better integration within the Microsoft ecosystem, cutting costs while improving automation capabilities.

Why FLIP Accelerators Transform Migration Economics

These accelerators change how migration works. Instead of months of manual code conversion and testing, organizations complete migrations in weeks. The automation reduces human error, ensures consistency, and frees internal teams to focus on optimization rather than tedious conversion work.

Available through Azure Marketplace with free trial options, FLIP accelerators let organizations validate the approach before committing to full-scale migration. This reduces procurement friction and speeds up time-to-value.

Real-World Impact: Manufacturing Sector Case Study

A global manufacturing organization with operations across 15 countries faced challenges with siloed data across legacy ERP systems, production databases, and supply chain applications. Their goal: consolidate this data on Microsoft Fabric to enable real-time analytics and AI-driven optimization.

Migration Complexity: 12 TB of historical data across Oracle, SQL Server, and SAP systems, 150+ ETL workflows requiring modernization, strict data governance requirements for international operations, and a zero-downtime requirement for production systems.

Kanerika’s Approach:

During the assessment and planning phase (4 weeks), the Kanerika team conducted comprehensive data landscape mapping, identifying dependencies across systems and prioritizing migration waves based on business impact. They designed a phased approach starting with historical data, followed by operational databases, and finally real-time integration.
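One way to turn a dependency map into migration waves is a topological sort, shown here as a minimal sketch. The system names, the dependency map, and the business-impact scores are hypothetical placeholders rather than details from the engagement; the ordering within a wave simply puts higher-impact systems first.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each system lists the systems it depends on,
# which therefore have to be migrated first.
dependencies = {
    "historical_archive": set(),
    "erp_operational_db": {"historical_archive"},
    "production_db": {"historical_archive"},
    "supply_chain_app": {"erp_operational_db"},
    "realtime_integration": {"erp_operational_db", "production_db", "supply_chain_app"},
}

# Hypothetical business-impact scores (higher means migrate earlier within a wave).
impact = {
    "historical_archive": 5,
    "erp_operational_db": 9,
    "production_db": 8,
    "supply_chain_app": 7,
    "realtime_integration": 6,
}


def plan_waves(deps, scores):
    """Group systems into waves; a wave only contains systems whose dependencies are done."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the map contains a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready(), key=lambda name: (-scores[name], name))
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = plan_waves(dependencies, impact)
for number, systems in enumerate(waves, start=1):
    print(f"Wave {number}: {', '.join(systems)}")
```

With this sample map, the archive lands in the first wave and real-time integration in the last, mirroring the phased order described above.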

During execution with FLIP accelerators (12 weeks), Kanerika automated the majority of ETL workflow conversion and data transformation logic. The team implemented parallel processing for historical data migration while establishing incremental sync for operational databases.
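Incremental sync is commonly implemented with a "high-water mark": each run copies only the rows changed since the last recorded timestamp. The sketch below illustrates that general pattern with in-memory SQLite databases standing in for the source and target; the `orders` table, its columns, and the `incremental_sync` function are assumptions made for illustration and do not describe how FLIP works internally.

```python
import sqlite3

SCHEMA = "CREATE TABLE orders (id INTEGER PRIMARY KEY, payload TEXT, updated_at INTEGER)"


def incremental_sync(source, target, watermark):
    """Copy rows changed after `watermark` into the target; return the new watermark."""
    rows = source.execute(
        "SELECT id, payload, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # INSERT OR REPLACE acts as an upsert on the primary key.
    target.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    target.commit()
    return rows[-1][2] if rows else watermark


# In-memory stand-ins for the legacy source and the modern target.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
source.execute(SCHEMA)
target.execute(SCHEMA)

source.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(1, "a", 100), (2, "b", 110)])
watermark = incremental_sync(source, target, 0)  # initial load

# Changes made on the source after the initial load.
source.execute("UPDATE orders SET payload = 'a2', updated_at = 120 WHERE id = 1")
source.execute("INSERT INTO orders VALUES (3, 'c', 125)")
watermark = incremental_sync(source, target, watermark)  # picks up only the two changes

print(watermark, target.execute("SELECT * FROM orders ORDER BY id").fetchall())
```

A strict `>` comparison can miss rows that share the watermark timestamp, and deletes are not captured at all, so production pipelines usually rely on change data capture or an overlapping window instead.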

During validation and optimization (3 weeks), rigorous testing validated data integrity through automated reconciliation. Performance tuning on Microsoft Fabric ensured query response times improved by 40% compared to the legacy environment.
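Automated reconciliation typically compares source and target row by row using keys and content hashes. The following is a minimal sketch of that idea, assuming rows are available as tuples with the primary key in the first position; the sample rows and the `reconcile` function are hypothetical and not taken from the case study.

```python
import hashlib


def row_hashes(rows, key_index=0):
    """Map each row's key to a SHA-256 hash of the full row content."""
    return {
        row[key_index]: hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest()
        for row in rows
    }


def reconcile(source_rows, target_rows, key_index=0):
    """Report keys that are missing, unexpected, or different in the target."""
    src = row_hashes(source_rows, key_index)
    tgt = row_hashes(target_rows, key_index)
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


# Hypothetical sample data: (part_id, name, quantity)
source_rows = [(1, "bolt", 250), (2, "nut", 900), (3, "washer", 40)]
target_rows = [(1, "bolt", 250), (2, "nut", 909), (4, "gear", 12)]

report = reconcile(source_rows, target_rows)
print(report)
```

An empty report for every table is one concrete way to back a "zero data loss" claim. For very large tables, the same comparison is usually pushed down to the databases as per-partition counts and checksums rather than run in application memory.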

Results: Migration completed in 19 weeks against an original 36-week estimate (47% less elapsed time), zero data loss with 100% integrity validation across all datasets, a 35% reduction in ongoing data management costs, a 40% improvement in query performance on the new platform, and real-time analytics capabilities enabling $2.3M in annual cost savings through optimized production scheduling.
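The timeline figures can be checked with a few lines of arithmetic. This sketch sums the three phase durations stated in the case study and computes the reduction relative to the 36-week estimate; the variable names are illustrative only.

```python
# Phase durations in weeks, as stated in the case study.
phase_weeks = {
    "assessment_and_planning": 4,
    "execution": 12,
    "validation_and_optimization": 3,
}

actual_weeks = sum(phase_weeks.values())
estimated_weeks = 36

# Share of the originally estimated timeline that was saved.
reduction = (estimated_weeks - actual_weeks) / estimated_weeks

print(f"{actual_weeks} weeks, {reduction:.0%} shorter than the estimate")
# prints "19 weeks, 47% shorter than the estimate"
```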

This case study shows Kanerika’s ability to handle complex, multi-system migrations while delivering measurable business value. The combination of structured methodology, automation tools, and platform expertise resulted in outcomes exceeding client expectations.

Security During Migration

Data security during the migration process is critical. Kanerika implements enterprise-grade security protocols throughout every migration: end-to-end encryption for data in transit and at rest, role-based access controls limiting data exposure, comprehensive audit trails documenting all migration activities, and compliance with GDPR, HIPAA, SOC 2, and ISO 27001 standards.

These security measures ensure sensitive data remains protected during transfer, meeting regulatory requirements while minimizing risk exposure.

Post-Migration Excellence

Kanerika’s engagement doesn’t end at cutover. Post-migration services include performance monitoring and optimization for 90 days after cutover, knowledge transfer workshops that train internal teams on new platform capabilities, documentation covering architecture, processes, and operational procedures, and ongoing support options for continued platform optimization.

This approach ensures organizations realize full value from their migration investment while building internal capability for long-term management.


Frequently Asked Questions

1. What is legacy data migration in an enterprise context?

Legacy data migration is the process of moving data from outdated or proprietary systems into modern platforms such as cloud, lakehouse, or advanced analytics environments. It often involves complex schemas, embedded business logic, and decades of historical data. Enterprises treat it as a modernization effort rather than a simple data transfer.

2. Why do legacy data migrations require a clear strategy?

Legacy environments are deeply connected to business operations, compliance, and reporting. Without a clear strategy, organizations risk migrating unnecessary data, increasing costs, and introducing quality issues. A structured strategy helps define scope, sequencing, and success criteria upfront.

3. What are the biggest security risks in legacy data migration?

Legacy systems often lack modern encryption, access controls, and monitoring. During migration, sensitive data can be exposed if security is not enforced throughout the process. Enterprises must apply encryption, role-based access, and audit logging from extraction to deployment.

4. How do enterprises ensure data quality when migrating legacy systems?

Data quality is ensured through early profiling, cleansing, and validation before data moves to the target platform. Legacy data often contains duplicates, outdated records, and inconsistent formats. Addressing these issues before migration prevents long-term trust problems.
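As a rough illustration of early profiling, the sketch below counts duplicate keys, empty fields, and dates that do not follow one expected format. The sample records, field names, and the choice of ISO dates as the target format are assumptions for the example only.

```python
import re
from collections import Counter

# Hypothetical legacy records with a duplicate key, an empty field, and a non-ISO date.
records = [
    {"customer_id": "C001", "email": "ana@example.com", "signup_date": "2019-03-14"},
    {"customer_id": "C002", "email": "", "signup_date": "14/03/2019"},
    {"customer_id": "C001", "email": "ana@example.com", "signup_date": "2019-03-14"},
]

ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")


def profile(rows, key, date_field):
    """Summarize basic quality issues before any data is moved."""
    key_counts = Counter(row[key] for row in rows)
    return {
        "row_count": len(rows),
        "duplicate_keys": sorted(k for k, n in key_counts.items() if n > 1),
        "empty_counts": {
            field: sum(1 for row in rows if not row.get(field)) for field in rows[0]
        },
        "non_iso_dates": [
            row[date_field] for row in rows if not ISO_DATE.fullmatch(row[date_field])
        ],
    }


report = profile(records, key="customer_id", date_field="signup_date")
print(report)
```

Running a report like this against each source table before migration gives a baseline that cleansing rules and post-migration validation can be measured against.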

5. Why is scalability a major concern in legacy data migration?

Legacy migrations involve large volumes of data, multiple systems, and cross-team dependencies. Manual approaches do not scale well in such environments. Scalable migrations rely on automation, reusable frameworks, and standardized validation processes.

6. How can enterprises avoid business disruption during legacy migration?

Enterprises minimize disruption by using phased migrations, parallel runs, and continuous reconciliation. These approaches allow legacy and modern systems to operate together during transition. Business continuity planning is essential for mission-critical systems.

7. How do organizations measure the success of legacy data migration?

Success is measured by data accuracy, system performance, reduced maintenance costs, and user trust in reports. Minimal downtime and regulatory readiness are also key indicators. Ultimately, successful migration enables faster insights and future growth.