We live in an age where businesses must manage colossal amounts of data. IDC has forecast that the global datasphere will grow to 175 zettabytes by 2025, underscoring how much information matters today. Amid this rapid growth in data creation, enterprises need robust solutions for quickly managing, integrating, and analyzing large quantities of information. Those solutions must work seamlessly across different environments so they don’t impede workflows or productivity, and that’s what Data Fabric does best! Think of data fabric as the master key that finally unlocks all your data doors at once.

What is Data Fabric?

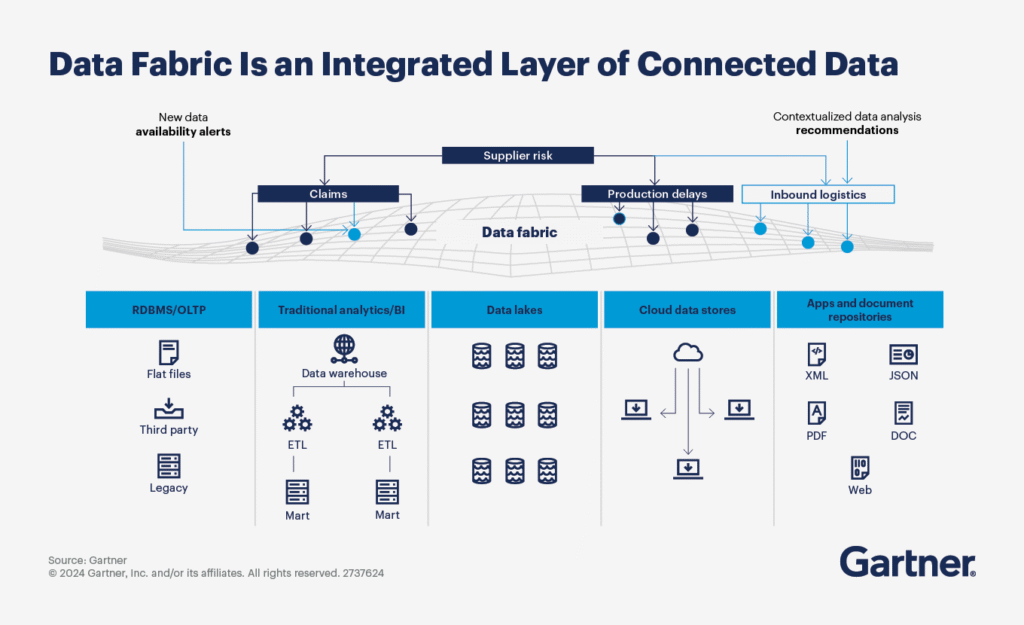

Data Fabric is a single framework for unified data management that brings together diverse data sources and services. It provides consistent capabilities across locations, whether on-premises or in private or public clouds. It also uses technologies such as machine learning and artificial intelligence to automate the work of getting data stores and processing systems ready for use, while keeping data accessible, secure, and usable.

With big data taking over everything around us, companies deal with enormous volumes generated from many sources, including social media channels, sensors, and transactional databases. Traditional methods often fail here because they lack the capacity to handle this complexity. The result is data silos, inconsistent data quality, and slow analytics, which in turn weaken decision-making and reduce overall operational agility. This is where Data Fabric comes into play.

In short, data fabric is a revolutionary data management architecture that weaves together distributed data across multi-cloud and hybrid environments. As a unified data integration platform, it creates a single access layer over your fragmented data sources, enabling real-time analytics and automated data governance without the traditional headaches.

But here’s the kicker – unlike traditional data warehousing solutions that force you to move all your data to one location, data fabric architecture provides seamless data connectivity right where your data lives. Consequently, you get the best of both worlds: unified access without the massive migration projects that keep IT teams up at night.
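To make the “access data where it lives” idea concrete, here is a minimal Python sketch. It assumes each source can be wrapped in a connector that yields rows on demand; the `VirtualAccessLayer` class and the source names are hypothetical, and in-memory lists stand in for real systems. Actual data fabric products add query pushdown, caching, security, and much more.

```python
from typing import Callable, Dict, Iterable, List

# Hypothetical connector type: a callable that yields rows (dicts) from a source on demand.
Connector = Callable[[], Iterable[dict]]


class VirtualAccessLayer:
    """Toy single access layer: data stays in its sources and is read at query time."""

    def __init__(self) -> None:
        self._sources: Dict[str, Connector] = {}

    def register(self, name: str, connector: Connector) -> None:
        # Registering a source stores only how to reach it, not a copy of its data.
        self._sources[name] = connector

    def query(self, predicate: Callable[[dict], bool]) -> List[dict]:
        # Scan every registered source, keep matching rows, and tag each with its origin.
        results: List[dict] = []
        for name, connector in self._sources.items():
            for row in connector():
                if predicate(row):
                    results.append({**row, "_source": name})
        return results


# In-memory lists stand in for an on-premises CRM and a cloud order system.
crm_rows = [{"customer_id": 1, "region": "EU"}, {"customer_id": 2, "region": "US"}]
cloud_orders = [{"customer_id": 1, "amount": 120.0}, {"customer_id": 3, "amount": 75.5}]

fabric = VirtualAccessLayer()
fabric.register("on_prem_crm", lambda: iter(crm_rows))
fabric.register("cloud_orders", lambda: iter(cloud_orders))

rows = fabric.query(lambda r: r.get("customer_id") == 1)
print(rows)
```

The design choice to store connectors rather than data is the point: the query reaches into each source only when it runs, so no migration project is needed before the first question can be answered.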

Take your data to the next-level and be AI ready!

Explore how Kanerika can help you streamline data management for your enterprise.

Data Fabric vs. Traditional Data Management

Here is a table comparing Data Fabric with Traditional Data Management:

| Aspect | Data Fabric | Traditional Data Management |
| --- | --- | --- |
| Data Integration | Unified integration of data from various sources | Often siloed, requiring multiple tools and processes |
| Data Accessibility | Real-time access across environments | Limited, often batch-processed |
| Data Governance | Automated governance policies | Manual and fragmented |
| Scalability | Highly scalable and flexible | Limited scalability, often requires significant reconfiguration |
| Data Processing | Real-time analytics and processing | Mostly batch processing |
| Technology Stack | Utilizes AI and machine learning | Relies on traditional ETL and data warehousing tools |
| Architecture | Supports hybrid, multi-cloud environments | Usually restricted to on-premises or single cloud environments |
| Data Consistency | Ensures consistent data across the organization | Inconsistencies due to data silos |
| Cost Efficiency | Optimizes resources and reduces redundancy | Higher costs due to fragmented infrastructure |
| Security and Compliance | Robust, automated security and compliance measures | Manual processes, prone to human error |
| Implementation Time | Faster deployment with automated tools | Longer due to complex integration processes |
| User Experience | Simplified, user-friendly interface | Often complex and requires specialized knowledge |

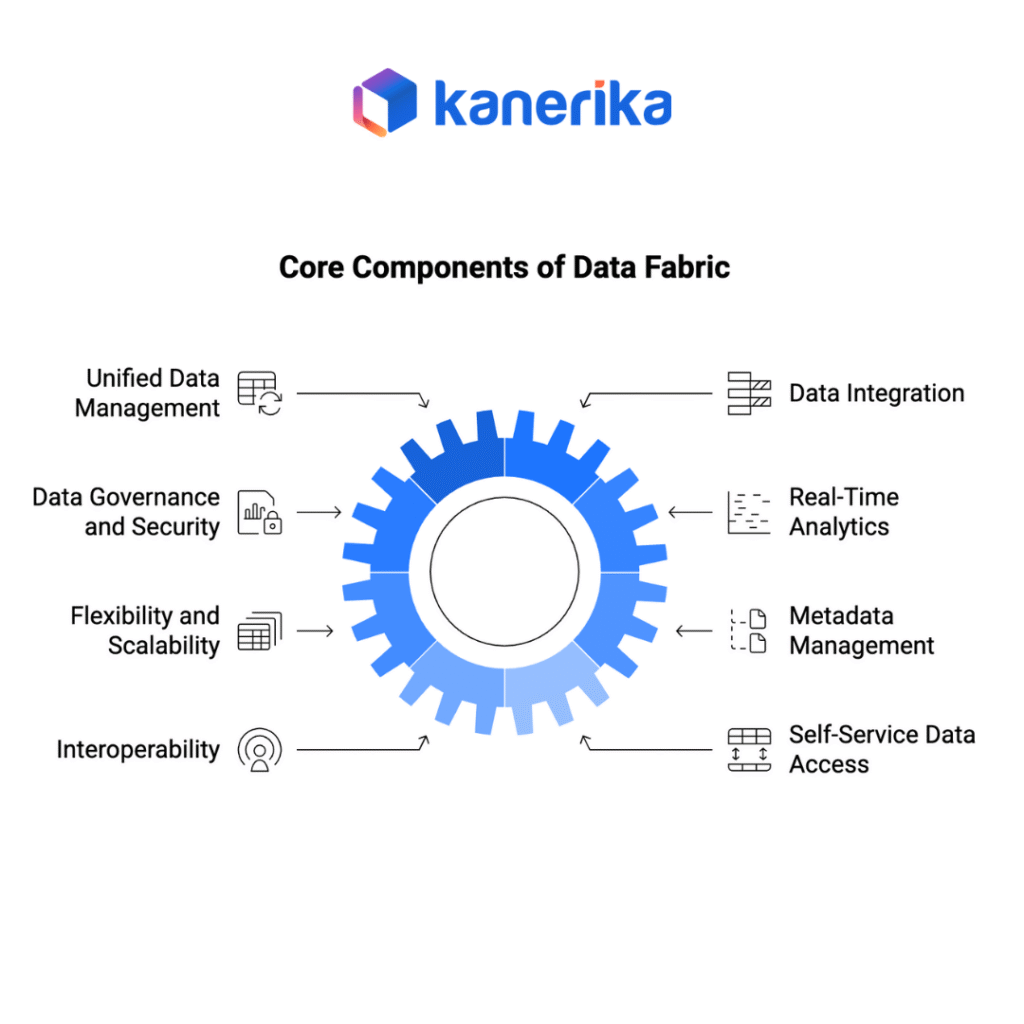

Core Concepts of Data Fabric

Understanding the principles of Data Fabric is essential for appreciating its importance and how it disrupts data management. Here are the main concepts behind data fabric:

1. Unified Data Management

Data fabric offers a comprehensive structure combining information from different sources, whether local, in the cloud, or hybrid environments. This integration allows seamless flow and control over data while eliminating silos and ensuring uniformity.

2. Data Integration

- ETL (Extract, Transform, Load) Processes: Automated processes pull data from various sources, transform it into usable forms, and load it into the target system.

- Data Ingestion Methods: Techniques such as batch processing, real-time streaming, and API integrations bring data into the system efficiently.

- Synchronization: Keeping all platforms and applications up to date with the latest changes so that information stays consistent.
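Synchronization is often done incrementally. The sketch below assumes, hypothetically, that every change from the source carries a monotonically increasing `version` number; each consumer keeps a watermark (the last version it applied) and only pulls newer changes. Deletes and conflict handling are left out, and log-based change data capture is a common alternative to this approach.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Target:
    """A downstream copy of the data plus the version of the last change it applied."""
    rows: Dict[int, dict] = field(default_factory=dict)
    watermark: int = 0


def sync(source_changes: List[dict], target: Target) -> int:
    """Apply only changes newer than the target's watermark, oldest first; return the count applied."""
    pending = sorted(
        (c for c in source_changes if c["version"] > target.watermark),
        key=lambda c: c["version"],
    )
    for change in pending:
        target.rows[change["id"]] = change["data"]  # upsert by primary key
        target.watermark = change["version"]        # remember how far this consumer got
    return len(pending)


# Hypothetical change feed from a source system.
changes = [
    {"id": 1, "version": 1, "data": {"name": "Ada"}},
    {"id": 2, "version": 2, "data": {"name": "Lin"}},
]
bi_replica, search_index = Target(), Target()
first_run = sync(changes, bi_replica)    # applies both changes
changes.append({"id": 1, "version": 3, "data": {"name": "Ada L."}})
second_run = sync(changes, bi_replica)   # applies only the new change
catch_up = sync(changes, search_index)   # a late consumer replays all three
print(first_run, second_run, catch_up)
```

Because each consumer tracks its own watermark, a slow or newly added system can catch up on its own schedule and still end up with the same state as the others.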

3. Data Governance and Security

- Automated Policy Enforcement: Rules covering usage standards, data quality, and access are enforced automatically.

- Data Lineage Tracking: Monitoring where data comes from and how it moves through systems, ensuring traceability and accountability across the organization.

- Robust Security Measures: Advanced protections such as encryption, access controls, and audits keep sensitive data safe from unauthorized access.
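Automated policy enforcement often comes down to evaluating a rule on every read. Here is a minimal sketch assuming a hypothetical column-level policy that maps sensitive columns to the roles allowed to see them; anything else is masked, and every access is appended to an audit log. The `POLICY` table and role names are illustrative only.

```python
from typing import Dict, List, Set

# Hypothetical column-level policy: roles allowed to see each sensitive column in clear text.
POLICY: Dict[str, Set[str]] = {
    "email": {"support", "compliance"},
    "ssn": {"compliance"},
}


def apply_policy(row: dict, role: str, audit_log: List[dict]) -> dict:
    """Return a copy of `row` with disallowed columns masked, and record the access."""
    visible = {}
    for column, value in row.items():
        allowed = POLICY.get(column)  # None means the column is unrestricted
        visible[column] = value if (allowed is None or role in allowed) else "***"
    audit_log.append({"role": role, "columns": sorted(row)})
    return visible


audit: List[dict] = []
record = {"customer_id": 7, "email": "a@example.com", "ssn": "123-45-6789"}
analyst_view = apply_policy(record, "analyst", audit)
support_view = apply_policy(record, "support", audit)
print(analyst_view, support_view)
```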

4. Real-Time Analytics

- Stream Processing: Data is processed continuously as it arrives, rather than waiting for a scheduled batch, whatever its type (text, events, audio, or video).

- Real-time Dashboards and Reports: Instant access to up-to-date information and insights helps businesses make quick decisions and respond in the shortest time possible.

- Predictive Analytics: AI and machine learning models use current and historical data to forecast trends and behavior, helping organizations make better choices quickly.

5. Flexibility & Scalability

- Growing Data Volumes: It should handle large volumes of data without compromising performance.

- Changing Requirements: New business needs, technological advances, and regulatory changes require the fabric to adapt easily, which makes it applicable to a wide range of scenarios.

- Resource Optimization: Compute and storage resources are used cost-effectively, and techniques such as deduplication and compression improve overall system efficiency.
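Deduplication, one of the techniques named above, can be sketched with content hashing: identical payloads produce identical SHA-256 digests, so they only need to be stored once. The `DedupStore` class below is a hypothetical, in-memory illustration; production systems typically deduplicate at the block or chunk level and combine it with compression.

```python
import hashlib
from typing import Dict, List


class DedupStore:
    """Stores each distinct payload once, keyed by its SHA-256 digest."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}  # digest -> content, stored once
        self.refs: List[str] = []          # one entry per logical write

    def put(self, payload: bytes) -> str:
        digest = hashlib.sha256(payload).hexdigest()
        if digest not in self.blobs:  # only previously unseen content uses storage
            self.blobs[digest] = payload
        self.refs.append(digest)
        return digest

    def get(self, digest: str) -> bytes:
        return self.blobs[digest]

    def stored_bytes(self) -> int:
        return sum(len(b) for b in self.blobs.values())


store = DedupStore()
for payload in [b"report-2024", b"report-2024", b"invoice-17"]:
    store.put(payload)
print(len(store.refs), len(store.blobs), store.stored_bytes())
```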

6. Metadata management

- Metadata Repository: A single place where metadata about all data assets is stored, giving a comprehensive view of the whole data landscape.

- Metadata-driven Processing: Using metadata to automate and optimize data processing tasks.

- Enhanced Data Discovery: Making it easier to find and understand data assets within the organization.
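A metadata repository with data discovery can be illustrated by a tiny in-memory catalog. The `DatasetMetadata` fields, dataset names, and plain keyword search below are assumptions made for the sketch; real catalogs also harvest metadata automatically and track schemas, owners, and usage.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class DatasetMetadata:
    name: str
    location: str  # where the data lives; values below are illustrative labels
    owner: str
    tags: Set[str] = field(default_factory=set)
    columns: List[str] = field(default_factory=list)


class MetadataCatalog:
    """Central, in-memory repository of dataset metadata with keyword search."""

    def __init__(self) -> None:
        self._entries: List[DatasetMetadata] = []

    def register(self, meta: DatasetMetadata) -> None:
        self._entries.append(meta)

    def search(self, keyword: str) -> List[str]:
        """Return names of datasets whose name, tags, or column names contain the keyword."""
        kw = keyword.lower()
        hits = []
        for m in self._entries:
            haystack = [m.name, *m.tags, *m.columns]
            if any(kw in item.lower() for item in haystack):
                hits.append(m.name)
        return hits


catalog = MetadataCatalog()
catalog.register(DatasetMetadata("orders", "cloud_warehouse", "sales", {"finance"},
                                 ["order_id", "customer_id", "amount"]))
catalog.register(DatasetMetadata("crm_contacts", "on_prem_crm", "marketing", {"pii", "customer"},
                                 ["customer_id", "email"]))
print(catalog.search("customer"))
```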

7. Interoperability

- Standardized APIs and Protocols: Ensuring different systems and applications can communicate seamlessly.

- Multi-cloud Support: Integrating data across various cloud platforms enables businesses to leverage each provider’s best features.

- Cross-functional Data Sharing: Allowing different departments and teams to access and use the same data efficiently.

8. Self-service Data Access

- User-friendly Interfaces: Simplified tools and dashboards enable non-technical users to access and analyze data without IT support.

- Data Catalogs: Organized collections of data assets, making it easy for users to find and utilize the data they need.

- Automated Data Preparation: Tools that clean, transform, and prepare data for analysis, reducing the time and effort required.


Data Fabric Architecture

The data fabric architecture creates a cohesive, efficient system for integrating data across sources and environments. It consists of several layers and components, each essential to moving, storing, securing, and processing information without interruption.

1. Data Integration Layer

ETL Processes and Tools

- Extract: This takes data from various sources like databases, applications, or external services.

- Transform: At this stage, extracted data is converted into a usable format, which may involve cleaning it up, aggregating it, and enriching it further.

- Load: The transformed data is written to the target storage system.
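The three ETL steps map naturally onto three functions. This minimal sketch uses only the Python standard library: an in-memory CSV string stands in for a source system, and an in-memory SQLite database stands in for the target store. The sample data and the validation rule (drop rows whose amount is not numeric) are hypothetical.

```python
import csv
import io
import sqlite3
from typing import Iterable, List, Tuple

RAW_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,n/a
3,carol,7.25
"""


def extract(text: str) -> Iterable[dict]:
    # Extract: read rows from the source (an in-memory CSV stands in for a real system).
    return csv.DictReader(io.StringIO(text))


def transform(rows: Iterable[dict]) -> List[Tuple[int, str, float]]:
    # Transform: trim and normalise names, parse amounts, drop rows that fail validation.
    clean = []
    for r in rows:
        try:
            amount = float(r["amount"])
        except ValueError:
            continue  # reject rows whose amount cannot be parsed
        clean.append((int(r["order_id"]), r["customer"].strip().title(), amount))
    return clean


def load(rows: List[Tuple[int, str, float]], conn: sqlite3.Connection) -> None:
    # Load: write the cleaned rows into the target store.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
result = conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
print(result)
```

Row 2 is dropped during the transform step because `n/a` is not a valid amount, so only two cleaned rows reach the target table.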

Data Ingestion Techniques

- Batch Processing: Involves collecting large volumes of data for processing at scheduled intervals.

- Real-time Streaming: Continuous processing of arriving data to enable instant analysis and response.

- API Integrations: Using APIs to facilitate real-time data exchange between different systems or applications.
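The difference between batch and streaming ingestion is mostly about when results become available. In the sketch below (the function names and event shape are assumptions), the batch version computes a count and total once all events are collected, while the streaming version updates and emits the same figures after every event.

```python
from typing import Callable, Iterable, List


def batch_ingest(events: List[dict]) -> dict:
    """Batch: wait until the interval's events are all collected, then process them in one pass."""
    return {"count": len(events), "total": sum(e["value"] for e in events)}


def stream_ingest(events: Iterable[dict], on_update: Callable[[dict], None]) -> None:
    """Streaming: update running results as each event arrives and emit a snapshot every time."""
    state = {"count": 0, "total": 0}
    for e in events:
        state["count"] += 1
        state["total"] += e["value"]
        on_update(dict(state))  # copy so later updates don't alter earlier snapshots


events = [{"value": 5}, {"value": 3}, {"value": 2}]
batch_result = batch_ingest(events)

snapshots: List[dict] = []
stream_ingest(iter(events), snapshots.append)
print(batch_result, snapshots)
```

Both approaches end with the same totals; streaming simply makes intermediate results available after each event instead of only at the end of the interval.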

2. Storage Layer

Data Lakes vs Data Warehouses

- Data Lakes: Large repositories that store raw structured, semi-structured, and unstructured data in its original form until it is needed.

- Data Warehouses: Structured storage systems optimized for reporting and analysis, storing processed data and supporting complex queries.

Cloud-based Storage Solutions

- Cloud Storage: Cloud services such as AWS, Azure, and Google Cloud provide scalable, flexible storage.

- Hybrid Storage: Combining on-premises and cloud storage to balance cost, performance, and security.

3. Data Processing Layer

Big Data Technologies

- Hadoop: An open-source framework for distributed storage and batch processing of massive datasets across clusters of machines.

- Spark: A fast, general-purpose engine for large-scale data processing and analytics, known for its speed and ease of use.

Stream Processing

- Kafka: A distributed event streaming platform for building real-time data pipelines and streaming applications.

- Flink: A stream-processing framework for processing bounded and unbounded data streams in real time.
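Stream processors like Flink group unbounded streams into windows before aggregating them. The sketch below shows the idea behind a tumbling (fixed, non-overlapping) window count in plain Python; it is not the Kafka or Flink API, and it ignores out-of-order events, watermarks, and incremental emission, all of which real engines handle.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def tumbling_window_counts(
    events: Iterable[Tuple[int, str]], window_seconds: int
) -> Dict[Tuple[int, str], int]:
    """Count events per (window_start, key) using fixed, non-overlapping windows.

    `events` are (epoch_seconds, key) pairs, for example (timestamp, user_id).
    """
    counts: Dict[Tuple[int, str], int] = defaultdict(int)
    for ts, key in events:
        window_start = ts - (ts % window_seconds)  # align the timestamp to its window
        counts[(window_start, key)] += 1
    return dict(counts)


clicks = [(0, "a"), (10, "a"), (59, "b"), (60, "a"), (61, "a")]
per_minute = tumbling_window_counts(clicks, window_seconds=60)
print(per_minute)
```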

4. Metadata Management Layer

- Metadata Repository: Centralized storage that houses all metadata, providing a holistic view of data assets, their sources, and their usage.

- Metadata-driven Processing: Using metadata to automate data management tasks, improving efficiency and consistency.

- Enhanced Data Discovery: Helps users across the organization quickly find, understand, use, and share relevant data assets.

5. Data Governance Layer

- Automated Governance Policies: Rules and standards for access, usage, and quality are defined once and enforced automatically to maintain compliance and data integrity.

- Data Lineage Tracking: Tracking the origin, movement, and transformation of data to support accountability.

- Security Measures: Advanced security protocols such as encryption, access controls, and regular audits protect sensitive data.
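Lineage tracking can be modeled as a graph in which each dataset points to the datasets it was derived from. This minimal sketch (the `LineageGraph` class and the dataset and job names are hypothetical) records those edges and walks them to answer “what does this report ultimately depend on?”

```python
from collections import defaultdict
from typing import Dict, List, Set


class LineageGraph:
    """Records which datasets each dataset was derived from, and which job produced it."""

    def __init__(self) -> None:
        self.parents: Dict[str, Set[str]] = defaultdict(set)
        self.jobs: Dict[str, str] = {}

    def record(self, output: str, inputs: List[str], job: str) -> None:
        self.parents[output].update(inputs)
        self.jobs[output] = job

    def upstream(self, dataset: str) -> Set[str]:
        """Return every dataset that `dataset` depends on, directly or transitively."""
        seen: Set[str] = set()
        stack = list(self.parents.get(dataset, ()))
        while stack:
            node = stack.pop()
            if node not in seen:
                seen.add(node)
                stack.extend(self.parents.get(node, ()))
        return seen


lineage = LineageGraph()
lineage.record("clean_orders", ["raw_orders"], job="etl_clean_orders")
lineage.record("revenue_report", ["clean_orders", "fx_rates"], job="agg_revenue")
print(sorted(lineage.upstream("revenue_report")))
```

Walking the graph in the other direction (from a source to everything derived from it) answers the complementary audit question of which reports are affected when a source changes.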

6. Interoperability Layer

- Standardized APIs and Protocols: Standard application programming interfaces (APIs) and protocols such as TCP/IP let different applications and systems communicate seamlessly.

- Multi-cloud Support: Integrating information across cloud platforms so businesses can take advantage of the best services each provider offers.

- Cross-functional Data Sharing: Letting departments share and use the same data efficiently, which improves collaboration among teams that regularly rely on shared inputs.

7. Self-service Data Access Layer

- User-friendly Interfaces: Simplified tools and dashboards enable non-technical users to access and analyze data without IT support.

- Data Catalogs: Organized collections of data assets, making it easy for users to find and utilize the data they need.

- Automated Data Preparation: Tools that clean, transform, and prepare data for analysis, reducing the time and effort required.

8. Real-time Analytics Layer

- Stream Processing: Continuous data processing as it arrives, allowing for immediate analysis and action.

- Real-time Dashboards and Reports: Instant access to up-to-date information and insights helps businesses make informed decisions quickly.

- Predictive Analytics: Using AI and machine learning to forecast trends and behaviors based on real-time data.

Benefits of Data Fabric

Data fabric benefits organizations seeking to control and exploit their information more effectively. Here are some key advantages:

1. Better Accessibility and Visibility of Data

Seamless Integration: This system integrates data from multiple sources so that it can be accessed through one platform.

Immediate Access: It allows users to access real-time data, facilitating a quicker decision-making process and timely insights.

2. Improved Quality and Consistency of Data

Automated Cleansing: This feature cleanses and standardizes information automatically, thus reducing errors or discrepancies.

Single View: Ensures all stakeholders see consistent views of business records across the enterprise.

3. Agility and Scalability in Managing Data

Scalable Architecture: It is flexible enough to handle increased volumes of data and accommodate changes in business requirements over time.

Flexible Deployment Options: Supports on-premises, cloud-based, and hybrid deployment models, depending on where you want the system to run.

4. Cost-effectiveness & Resource Optimization

Efficient Resource Utilization: Optimizing storage and processing usage lowers overall operational costs.

Reduced Redundancy: Minimizes data duplication and redundancy, saving storage space and improving performance.

5. Enhanced Data Governance and Security

Automated Compliance: Ensures data compliance with regulations through automated governance policies.

Robust Security Measures: Implements advanced security protocols to protect sensitive data from breaches and unauthorized access.

6. Real-Time Analytics and Insights

Instantaneous Analysis: Promotes fast data processing for analytics, enabling immediate findings.

Predictive Analytics: Uses AI and machine learning to forecast trends and behaviors, helping businesses stay ahead.


Data Fabric Implementation Strategies

Implementing a data fabric calls for well-thought-out preparation and execution to meet organizational requirements and maximize its value. Below are some strategies:

1. Assessing Data Requirements and Goals

- Identify Data Sources: List all data sources, including databases, applications, and cloud services. Understand the types of data involved (structured or unstructured) and their specific needs.

- Set Goals: Define what you want to achieve by implementing the Data Fabric, such as improving access to information, enhancing analytical capabilities, or ensuring compliance. Define measurable success indicators so objectives stay clear and progress can be tracked throughout the implementation process.

2. Designing a Scalable and Flexible Architecture

- Choose Flexible Framework: Choose a Data Fabric framework that can handle different data types, sources, and processing requirements. Ensure horizontal scalability as data volume and complexity increase over time.

- Plan for Future Growth: Design architecture with future expansion in mind, allowing for the integration of new data sources and technologies. Implement modular components that can be easily updated or replaced as needed.

3. Choosing the Right Technology Stack

- Evaluate Tools & Platforms: Assess available tools and platforms for data integration, storage, processing, and governance. Consider factors such as compatibility with existing systems, ease of use, and vendor support.

- Leverage Cloud Technologies: Utilize cloud services for storage and processing to take advantage of scalability, flexibility, and cost-efficiency. Explore hybrid solutions that combine on-premises and cloud resources.

4. Robust Governance & Security Foundation

- Establish Policies: Set clear governance policies that serve as guidelines for meeting regulatory requirements and internal standards.

- Enhance Security: Implement advanced security measures such as encryption, access controls, and regular audits. Monitor for security threats and vulnerabilities and respond promptly to any incidents.

5. Promoting User Adoption & Training

- Provide Training and Support: Offer comprehensive training programs to ensure users understand how to use the Data Fabric effectively. Provide ongoing support to address issues and update users on new features and improvements.

- Encourage Feedback: Create channels through which users can make suggestions or report problems, and use that feedback to improve the platform continuously.

6. Monitoring and Optimization

- Continuous Monitoring: Use monitoring tools to track the fabric’s performance and health over time and identify where improvements are needed.

- Regular Updates and Maintenance: Keep up with the latest technologies and best practices by updating the fabric regularly based on monitoring findings, so it keeps meeting its efficiency and security goals throughout its lifetime.

Real World Use Cases of Data Fabric

Data Fabric’s versatile architecture and capabilities make it suitable for a wide range of applications across different industries. Here are some real-world use cases that demonstrate its impact:

1. Financial Services

Fraud Detection and Prevention

- Challenge: Financial organizations must be able to recognize and stop fraudulent activity instantly.

- Solution: Data Fabric combines information from multiple sources such as transaction records, customer profiles, and external databases. It applies machine learning and real-time analytics to detect abnormal behaviors and patterns, enabling a proactive response to fraud.
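Production fraud systems rely on trained machine learning models, but the core idea of flagging abnormal behavior can be shown with a much simpler statistical stand-in: a z-score test against a customer’s own transaction history. The 3-standard-deviation threshold and the sample amounts are assumptions for illustration only.

```python
import statistics
from typing import List


def flag_anomalies(history: List[float], new_amounts: List[float], threshold: float = 3.0) -> List[float]:
    """Return the new amounts lying more than `threshold` standard deviations from the historical mean."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation; needs at least two values
    if stdev == 0:
        return []  # no variation in history, so a z-score is undefined
    return [a for a in new_amounts if abs(a - mean) / stdev > threshold]


# Hypothetical card history for one customer, then three incoming transactions.
history = [20.0, 25.0, 22.0, 18.0, 24.0, 21.0]
suspicious = flag_anomalies(history, [23.0, 500.0, 19.0])
print(suspicious)
```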

360° Customer View

- Challenge: Banks need a complete understanding of their clients so they can offer personalized services.

- Solution: Data Fabric aggregates data from several systems (CRM, transactional data, and so on) into a single customer profile. Bank employees can then see every interaction with an individual over time, offer tailored financial products and services, and raise customer satisfaction.
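At its simplest, a 360° view means joining records from several systems on a shared key. The sketch below assumes every source already carries the same `customer_id`; in practice, matching records across systems (entity resolution) is often the hardest part. The field names and sample records are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def build_customer_360(crm: List[dict], transactions: List[dict], tickets: List[dict]) -> Dict[int, dict]:
    """Merge records from three sources into one profile per customer_id."""
    profiles: Dict[int, dict] = defaultdict(lambda: {"transactions": [], "tickets": []})
    for c in crm:
        profiles[c["customer_id"]].update(name=c["name"], segment=c["segment"])
    for t in transactions:
        profiles[t["customer_id"]]["transactions"].append(t["amount"])
    for k in tickets:
        profiles[k["customer_id"]]["tickets"].append(k["subject"])
    for p in profiles.values():
        p["total_spend"] = sum(p["transactions"])  # simple derived attribute
    return dict(profiles)


view = build_customer_360(
    crm=[{"customer_id": 1, "name": "Ada", "segment": "retail"}],
    transactions=[{"customer_id": 1, "amount": 100.0}, {"customer_id": 1, "amount": 50.0}],
    tickets=[{"customer_id": 1, "subject": "Card blocked"}],
)
print(view[1])
```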

2. Healthcare

Patient Care and Treatment

- Challenge: Hospitals have vast amounts of patient records, which they must manage efficiently to improve the quality of care they provide.

- Solution: Data Fabric integrates electronic health records (EHR), lab results, imaging data, and wearable device data. It enables real-time access to patient information, supports advanced analytics for personalized treatment plans, and enhances patient outcomes.

Clinical Research and Trials

- Challenge: Effective management, analysis, and reporting of clinical trial datasets are key success factors for any research institution conducting such studies.

- Solution: Data Fabric provides a unified platform for aggregating data from various trial sites, patient records, and research databases. It facilitates real-time analysis and collaboration, accelerating the development of new treatments and therapies.

3. Retail and E-Commerce

Personalized Shopping Experience

- Challenge: Retailers want to create unique shopping experiences that will satisfy customers’ needs and win their loyalty.

- Solution: Data Fabric integrates data from online and offline channels, customer behavior, and purchase history. It uses real-time analytics to provide personalized recommendations, targeted promotions, and improved customer service.

Inventory Management

- Challenge: Businesses find it hard to manage inventory efficiently, because supply must consistently meet demand while avoiding both stockouts and overstocks.

- Solution: Data Fabric consolidates data from sales, supply chain, and warehouse systems. It enables real-time visibility into inventory levels, predictive analytics for demand forecasting, and optimized stock replenishment.

4. Manufacturing

Predictive Maintenance

- Challenge: Factories must reduce downtime and repair and maintenance costs.

- Solution: Data Fabric merges information from internet-connected devices, machines, and service records. It then uses predictive analytics to estimate when machinery is likely to fail, so equipment can be serviced before it breaks down, reducing unplanned downtime.

Optimization of the Supply Chain

- Challenge: Fast manufacturing and delivery depend on efficient management of the supply chain.

- Solution: Data Fabric aggregates data from suppliers, logistics, and production systems. It provides real-time insights into supply chain performance, identifies bottlenecks, and optimizes inventory and logistics operations.

5. Telecommunications

Network Performance Management

- Challenge: Telecom providers need to ensure reliable network performance and service quality.

- Solution: Data Fabric integrates data from network devices, customer interactions, and service logs. It enables real-time monitoring, predictive analytics for network optimization, and proactive issue resolution.

Customer Churn Reduction

- Challenge: Reducing customer churn is vital for maintaining a stable subscriber base.

- Solution: Data Fabric combines data from customer usage patterns, support tickets, and social media interactions. It uses machine learning to identify at-risk customers and develop targeted retention strategies.

Data Fabric ROI

Let’s talk turkey. What’s this going to cost you, and more importantly, what’s it going to save you?

The Investment:

- Software Licensing: $50K-$500K annually (varies by data volume)

- Professional Services: $100K-$300K for implementation

- Training and Change Management: $25K-$75K (because people matter)

- Ongoing Support: 15-20% of license cost annually

The Returns:

- Time-to-Insight Reduction: 60-80% faster analytics delivery (speed kills… the competition)

- Operational Cost Savings: 30-40% reduction in data management overhead

- Revenue Impact: 10-15% increase from better decision-making

- Compliance Cost Reduction: 50% lower audit and governance costs

Typical Payback Period: 12-18 months. Not instant gratification, but pretty close in enterprise software terms.
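To see how a payback period falls out of numbers like these, here is a simple, undiscounted calculation. The cost inputs are picked from within the ranges above, but the $450K annual benefit is an invented figure for illustration, and the model treats the license and support fees as recurring costs netted against benefits.

```python
def payback_months(
    license_per_year: float,
    services_one_time: float,
    training_one_time: float,
    support_rate: float,
    annual_benefit: float,
) -> float:
    """Months until cumulative net benefit covers the one-time costs (simple, undiscounted)."""
    upfront = services_one_time + training_one_time
    recurring = license_per_year * (1 + support_rate)  # license plus support as a % of license
    net_monthly = (annual_benefit - recurring) / 12
    if net_monthly <= 0:
        raise ValueError("recurring costs exceed benefits; the investment never pays back")
    return upfront / net_monthly


# Hypothetical inputs: costs from the ranges above; the benefit figure is invented for illustration.
months = payback_months(200_000, 200_000, 50_000, 0.15, 450_000)
print(f"{months:.1f} months")
```

With these hypothetical inputs, the one-time costs are recovered in roughly 13.6 months; change any input and the payback period moves accordingly.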

Future Trends and Innovations in Data Fabric

1. Deeper Integration with AI and Machine Learning (AI/ML)

Data fabric solutions will become more deeply entrenched in artificial intelligence and machine learning. This will enable:

- Automated Data Management: Automating tasks like data transformation, cleaning, and integration will free people for more strategic work.

- Advanced Analytics: AI-powered analytics will unlock more profound insights from data, leading to more informed decision-making.

- Self-Service Analytics: Empowering business users to explore and analyze data independently through AI-powered intuitive interfaces.

2. Expansion of Edge Computing

Data fabric will expand into edge computing environments where it works seamlessly with devices generating or processing data closer to its source. This means that:

- Real-time Insights: Faster analysis of data captured at the edge, leading to quicker responses and actions.

- Improved Scalability: Data fabric will manage data across distributed edge locations efficiently.

- Reduced Latency: Processing data closer to its source minimizes latency issues.

3. Automated Data Governance

Data fabric will play a more significant role in data governance through automation. This will include:

- Automated Data Lineage Tracking: Automatically tracking data origin, movement, and transformations for improved transparency and auditability.

- Data Access Controls: Automating access controls to ensure data security and compliance with regulations.

- Data Quality Monitoring: Proactive monitoring for data quality issues and automatic remediation.

4. Blockchain for Enhanced Security and Trust

Integration of blockchain technology with data fabric has the potential to:

- Guarantee Data Provenance: Blockchain can create an immutable record of data origin, enhancing trust and security.

- Improved Data Sharing: Secure and transparent data sharing between organizations can be facilitated through blockchain integration.

5. Increased Focus on Interoperability and Open Standards

Data fabric solutions will prioritize interoperability with diverse data sources and platforms. This will ensure:

- Seamless Integration: Effortless integration of data fabric with existing IT infrastructure.

- Vendor Agnosticism: Flexibility in choosing the best tools and technologies regardless of vendor.

- Reduced Vendor Lock-In: Organizations won’t be tied to specific vendors due to open standards.

6. User-Friendly Interfaces and Self-Service Data Access

Data fabric will evolve to offer user-friendly interfaces catering to technical and non-technical users. This will enable:

- Democratization of Data: Empowering users across various departments to access and analyze data independently.

- Improved User Adoption: Intuitive interfaces will encourage broader use of data fabric capabilities.

- Faster Time-to-Insight: Users can access and analyze data efficiently without relying on IT teams.

Ready to Get Started? Your Quick Assessment

Before you jump in, honestly answer these questions:

- Do you have data scattered across multiple cloud platforms?

- Are you spending more than 40% of your time preparing data instead of analyzing it?

- Do business users constantly complain they can’t access the data they need?

- Are your compliance reporting cycles painfully slow?

- Do you need real-time analytics capabilities to stay competitive?

If you checked three or more boxes, data fabric isn’t just a nice-to-have – it’s essential for your survival in today’s data-driven economy.

Why Choose Kanerika for Your Data Engineering Journey?

When it comes to data engineering solutions, experience matters. That’s why leading organizations trust Kanerika to transform their data chaos into competitive advantage.

Proven Track Record: HR Analytics Transformation

Take our recent success with a major client’s HR data modernization. We implemented a common, integrated Data Warehouse on Azure SQL and enabled Power BI dashboards, consolidating HR data and giving the client a comprehensive view of their human resources. The results? Enhanced efficiency and decision-making, improved talent pool engagement through insights into recruitment, tenure, and attrition trends, effective employee policy management, and significant time savings across HR operations.

Enterprise-Scale Platform Modernization

In another transformative project, we helped a major enterprise overhaul their entire data analytics platform. The solution enhanced their decision-making, enabling informed, strategic choices based on real-time insights, and increased operational efficiency by speeding up data retrieval, reducing manual data handling, and boosting productivity across departments. We also strengthened their reporting and analytics capabilities to identify trends and extract valuable insights, while ensuring scalability and future-proofing through agile data architecture frameworks.

What Sets Kanerika Apart:

- Deep Technical Expertise: Our team combines years of data engineering experience with cutting-edge technology knowledge

- Business-First Approach: We don’t just implement technology – we solve business problems

- Proven Methodologies: Our structured implementation approach minimizes risk while maximizing value

- End-to-End Support: From strategy through execution to ongoing optimization, we’re your partner every step of the way

The Bottom Line

Data fabric isn’t just another technology buzzword destined for the graveyard of forgotten trends. It represents the future of how successful organizations will manage, access, and derive value from their data.

The question isn’t whether you need data fabric – it’s whether you can afford to wait while your competitors gain the advantage. In today’s fast-moving business environment, the cost of inaction often exceeds the cost of action.

So, are you ready to turn your data chaos into your competitive advantage? The fabric of your data future awaits.


Frequently Asked Questions

What is the difference between ETL and data fabric?

ETL is about moving data – extracting it, transforming it, and loading it into a target system. A data fabric, however, is a holistic architecture that provides unified access to data residing in diverse sources, without necessarily moving all of it. Think of ETL as a pipeline and a data fabric as a network – one transports, the other connects. The key difference lies in the approach: physically consolidating data versus providing connected access to data where it lives.

What is data fabric vs data mesh?

Data fabric is a unified, integrated approach to managing data across your entire organization, regardless of location or format. Data mesh, conversely, distributes data ownership and management to individual domain teams, creating a decentralized, federated system. The key difference lies in centralization versus decentralization of control and governance. Think of data fabric as a single, powerful engine, while data mesh is a network of smaller, specialized engines working together.

What is data fabric vs data lake?

Data lakes are like raw, unorganized storage – think of a giant pile of unsorted building materials. A data fabric, however, is a managed and connected system that allows you to access and use data from various sources (including lakes!) efficiently and securely, like a sophisticated construction project using those materials effectively. It’s less about where the data is, and more about how you access and use it. The fabric orchestrates access, ensuring consistency and governance.

What is data fabric used for?

Data fabric isn’t just a storage system; it’s a dynamic, unified approach to data management. It’s used to connect and access data from diverse sources—cloud, on-premises, etc.—seamlessly, improving agility and insights. Think of it as a high-speed data highway enabling faster, more informed decision-making. Ultimately, it accelerates digital transformation by making data easily discoverable and usable.

What is data fabric framework?

A data fabric isn’t just a single technology; it’s a *holistic approach* to data management. It seamlessly integrates diverse data sources – from clouds to databases – creating a unified, readily accessible view. This allows for agile, efficient data use across your entire organization, without the limitations of traditional data silos. Think of it as a unified, intelligent network for all your data.

What is data warehouse vs data fabric?

A data warehouse is like a central, pre-organized library of historical data, optimized for specific reporting and analysis needs. A data fabric, however, is a more dynamic, interconnected network of data sources, allowing for agile access and integration across various systems – think of it as a living, adaptable data ecosystem rather than a static repository. The key difference lies in agility and integration: data warehouses are structured; data fabrics are networked.

What is the difference between data fabric and data mesh in Informatica?

Informatica’s Data Fabric is a centralized, governed platform for data integration and management, focusing on a unified view of data. Data Mesh, conversely, distributes data ownership and governance closer to the data producers, creating a more decentralized and agile architecture. Think of Data Fabric as a single, governed layer that connects all your data sources, while Data Mesh is a network of domain-owned data products. The key difference lies in the degree of centralization vs. decentralization.

Is ETL part of data warehouse?

No, ETL is not part of the data warehouse itself; it’s the crucial process leading to the data warehouse. Think of it as the plumbing and construction crew – vital for building the house (data warehouse), but not the house itself. ETL prepares and loads the data, making it usable within the warehouse. The warehouse is the destination, ETL is the journey.

What is Talend data fabric used for?

Talend Data Fabric is a unified platform for managing your entire data lifecycle. It helps you collect, prepare, govern, and analyze data from diverse sources, all within a single, integrated environment. This streamlines data operations, boosts efficiency, and enables better decision-making based on reliable, readily accessible insights. Essentially, it’s a one-stop shop for building a robust and trustworthy data infrastructure.