What happens when supplier delays start costing millions? A global logistics firm faced that exact problem: slow onboarding and missing documents were dragging down operations. Kanerika’s AI agent, DokGPT, addressed it by scanning invoices, contracts, and emails, flagging missing files, and filling gaps using past data. Supplier onboarding time fell by 40%, and compliance errors were cut in half, a practical example of what AI agents can now do.

More companies are now seeing how AI agents can handle complex tasks without constant human input. These agents learn from context, adjust to changing data, and keep improving. Gartner expects 40% of enterprise apps to use AI agents by 2026. The market is growing fast, with more than 85% of large businesses reportedly already using AI agents in some form.

In this blog, we’ll look at how AI agents evolved from simple chatbots to complex systems that plan, act, and learn. We’ll break down the shift from single-function tools to orchestrators, show where they’re making the biggest impact, and explain what this means for businesses trying to stay ahead.

Key Takeaways

- AI agents can think, reason, and act independently to meet defined goals.

- They feature adaptability, autonomy, perception, and goal-driven decision-making.

- AI agent architecture has evolved from basic LLMs to advanced multi-agent systems.

- Various types of agents—reflex, goal-based, learning, and autonomous—serve distinct tasks.

- Kanerika enables enterprises to adopt AI seamlessly through secure, scalable solutions.

- Kanerika’s AI agents, such as DokGPT, Jennifer, Karl, and others, automate real-world workflows.

- Overall, AI agents are revolutionizing automation with intelligence, collaboration, and context awareness.

What Are AI Agents?

AI agents are software programs that can understand goals, make decisions, and take actions to achieve those goals with minimal human intervention. Unlike traditional software that follows fixed rules, AI agents can adapt their behavior based on context, learn from interactions, and handle tasks that require reasoning.

The key difference between an AI agent and a regular AI model is autonomy. A language model gives you an answer when you ask a question. An AI agent can break down a complex task, figure out what steps are needed, use tools to gather information, and work toward a solution without you specifying every detail.

Think of it like the difference between a calculator and a personal assistant. A calculator performs the exact operation you tell it to. A personal assistant understands “I need to prepare for next week’s board meeting” and figures out what that involves: checking your calendar, gathering relevant documents, summarizing key points, and preparing materials. That’s what AI agents do.

Key Features of AI Agents

- Adaptability: Learn and improve performance over time.

- Autonomy: Operate independently without continuous human guidance.

- Perception: Sense and interpret the environment through data inputs.

- Decision-Making: Choose actions based on rules, models, or learned experiences.

- Goal Orientation: Focus on achieving defined objectives efficiently.

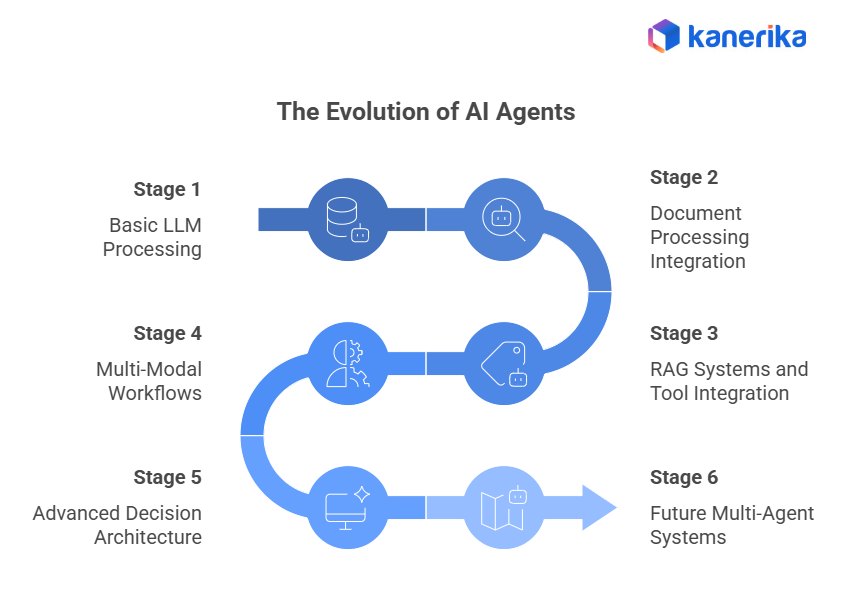

The Six Stages in the Evolution of AI Agent Architecture

Stage 1: Basic LLM Processing

The earliest stage in AI agent development involves Large Language Models (LLMs) functioning in a simple input-output loop. Here, the AI receives text input, processes it using the LLM, and generates text output. This stage primarily focuses on language understanding and generation, forming the foundation of modern AI capabilities.

The main limitation is isolation. The agent can’t access current information, remember previous conversations, or verify facts against external sources. Everything depends on what the model learned during training. Response quality relies entirely on the training data’s relevance to your question.

Example: ChatGPT’s initial release in late 2022 worked this way. You asked a question, it generated an answer from its training data, and that was it. Each new conversation started fresh, with no memory of earlier ones. It couldn’t tell you today’s weather, access your documents, or recall anything from a previous session. But for explaining concepts, writing code, or generating creative content, it worked remarkably well.

Stage 2: Document Processing Integration

This stage adds the ability to read and understand documents you provide. The flow stays linear, but now processes your files alongside your questions. The LLM treats documents as extended context, reading them to generate more relevant answers.

This made agents practical for real work. You could upload contracts, research papers, or reports and get summaries, extract specific information, or ask questions about the content. The challenge became context window size since early systems struggled with long documents. Processing quality mattered too because agents needed to understand document structure and connect information across pages.

Example: Claude’s Projects feature lets you upload multiple documents that persist across conversations. Upload your company’s style guide, previous reports, and data files. Ask questions about any of them or request work that follows your established patterns. The agent reads everything you’ve shared and generates responses informed by those documents.
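To make the context-window constraint concrete, here is a minimal sketch of how uploaded documents might be packed into a prompt. The function name `build_prompt`, the word-based budget, and the prompt layout are illustrative assumptions and do not describe how any particular product works. Real systems count model tokens rather than words.

```python
def build_prompt(question: str, documents: dict[str, str], max_words: int = 50) -> str:
    """Pack documents into a prompt until a (hypothetical) word budget is used up."""
    parts = []
    remaining = max_words
    for name, text in documents.items():
        if remaining <= 0:
            break  # budget exhausted: later documents are dropped entirely
        kept = text.split()[:remaining]  # truncate the document that crosses the limit
        remaining -= len(kept)
        parts.append(f"[{name}]\n{' '.join(kept)}")
    context = "\n\n".join(parts)
    return f"{context}\n\nQuestion: {question}"
```

With a budget of four words and two three-word documents, the second document is cut after its first word. This is the kind of silent loss that made long documents hard for early systems.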

Stage 3: RAG Systems and Tool Integration

RAG (Retrieval-Augmented Generation) transformed the architecture from a linear process into a dynamic loop. Instead of relying solely on training data, agents could search external databases and retrieve relevant information on demand. This solved the problem of keeping models updated without constant retraining.

Tool integration appeared at this stage. Agents could now call calculators, check APIs, query databases, or run code. They became interactive problem-solvers instead of just text generators. The agent decides when it needs external information, fetches it, and incorporates findings into its response.

The challenge was decision-making. Agents had to know when to use tools, format requests correctly, and handle errors when tools returned unexpected data. But the payoff was huge because agents could access current information and perform actions beyond text generation.

Example: Perplexity AI uses RAG to answer questions with current information. Ask about recent news or specific facts, and it searches the web in real time, retrieves relevant content, and synthesizes an answer with citations. You get responses based on the latest available information rather than outdated training data. The agent shows you which sources it used, making answers verifiable.
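The retrieve-or-call-a-tool loop described in this stage can be sketched roughly as follows. Everything here is a simplified assumption: `KNOWLEDGE_BASE`, `retrieve`, and `answer` are hypothetical names, word overlap stands in for real vector search, and a regular expression stands in for the model deciding when a tool is needed.

```python
import operator
import re

KNOWLEDGE_BASE = [
    "Supplier onboarding requires a signed contract and a tax form.",
    "Invoices must be approved within 30 days of receipt.",
    "Compliance reviews run every quarter.",
]

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}
ARITHMETIC = re.compile(r"\s*(-?\d+(?:\.\d+)?)\s*([-+*/])\s*(-?\d+(?:\.\d+)?)\s*")

def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, k: int = 1) -> list[str]:
    """Rank passages by word overlap with the query (stand-in for vector search)."""
    q = tokenize(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda p: len(q & tokenize(p)), reverse=True)
    return ranked[:k]

def answer(query: str) -> str:
    """Decide between the calculator tool and retrieval; handle tool errors."""
    match = ARITHMETIC.fullmatch(query)
    if match:
        a, op, b = match.groups()
        try:
            return f"Tool result: {OPS[op](float(a), float(b))}"
        except ZeroDivisionError:
            return "Tool error: division by zero"
    return f"Based on: {retrieve(query)[0]}"
```

Calling `answer("6 * 7")` routes to the calculator, while `answer("When must invoices be approved?")` falls through to retrieval and cites the invoice passage. The `except` branch shows the error-handling concern mentioned above.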

Stage 4: Multi-Modal Workflows

At this stage, agents became multi-modal, capable of processing a range of data types, including text, images, and audio.

Memory systems improved significantly. Instead of treating each message independently, agents started maintaining conversation context and user preferences. Interactions felt continuous rather than disconnected. Tool use expanded too, with agents generating images, creating visualizations, or editing photos based on your requests.

Processing became more complex and expensive. Handling images requires more computation than text. But capabilities improved enough to justify the tradeoffs for tasks that genuinely needed visual understanding.

Example: GPT-4 with vision, and later GPT-4o, process images and text together. Upload a photo of your fridge contents and ask for recipe suggestions. Share a screenshot of an error message and get debugging help. Show a chart and request analysis. The model can identify objects, read text within images, and reason about spatial relationships.

Stage 5: Advanced Decision Architecture

This stage separates agent functions into specialized components. Memory, decision-making, and execution become distinct modules rather than one monolithic system. When input arrives, the memory module checks context, the decision module evaluates options, and the execution module takes action.

This structure handles complex, multi-step tasks more reliably. Agents can plan workflows, track progress, and adjust strategies when initial approaches fail. Decision-making becomes explicit: before acting, the agent evaluates whether to search, calculate, ask a clarifying question, or answer from existing knowledge.

Memory works differently, too. Instead of just conversation transcripts, agents store structured information about preferences, project details, and task status. This enables continuity across sessions and better personalization over time.

Example: Microsoft’s Copilot in enterprise settings uses this architecture. It maintains memory about your work patterns, frequently accessed documents, and project contexts. When you ask it to “update the Q3 report,” it knows which document you mean, remembers the format you prefer, and recalls what data sources to use. It can start a task, save progress, and continue later while maintaining full context.
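The separation into memory, decision, and execution modules might look something like the sketch below. The class and function names (`Memory`, `decide`, `execute`, `handle`) and the `report_path` key are hypothetical and chosen only for illustration. This is not a description of Copilot’s internals.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Memory:
    """Structured facts kept across sessions (here, just an in-process dict)."""
    facts: dict[str, str] = field(default_factory=dict)

    def recall(self, key: str) -> Optional[str]:
        return self.facts.get(key)

def decide(request: str, memory: Memory) -> str:
    """Decision module: choose an action name before anything is executed."""
    if "report" in request.lower():
        return "update_report" if memory.recall("report_path") else "ask_clarification"
    return "generate_answer"

def execute(action: str, memory: Memory) -> str:
    """Execution module: carry out the action the decision module chose."""
    if action == "update_report":
        return f"Updating {memory.recall('report_path')}"
    if action == "ask_clarification":
        return "Which report do you mean?"
    return "Answering from existing knowledge"

def handle(request: str, memory: Memory) -> str:
    return execute(decide(request, memory), memory)
```

Because the decision is a plain value passed between modules, it can be logged or overridden before execution. The same request produces a clarifying question when memory is empty and a concrete action once `report_path` is known.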

Stage 6: Future Multi-Agent Systems

The next generation uses multiple specialized agents working together. One agent checks safety, another interprets intent, a third executes tasks, and a fourth verifies output. Each has a narrow responsibility rather than one agent handling everything. These multi-agent ecosystems are built as layered architectures. Key features include:

- Safety: Agents monitor every request and block anything risky before it gets executed. This helps prevent harmful actions and keeps systems in check.

- Accountability: You can trace the entire decision-making process. That means you can see why the agent chose a particular path or tool, and understand the logic behind its actions.

- Interpretation: Some agents prioritize understanding user intent clearly before taking any action. They ensure the request is accurate and well-formed before passing it to other agents or tools.

The architecture supports rollback, allowing systems to undo actions and try again when mistakes occur. Coordination happens through structured data formats rather than free-form communication, making behavior more predictable. Human oversight becomes granular, with approval points throughout the workflow, rather than only at the end.

Example: Google’s Gemini team is developing multi-agent systems in which each specialized model handles a specific part of a complex task. For research projects, one agent searches academic databases, another evaluates the credibility of sources, a third synthesizes the findings, and a fourth fact-checks the claims. Each agent excels in one area, rather than a single agent doing everything adequately.
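A safety, interpretation, execution, and verification pipeline with a decision trace and rollback could be sketched as follows. All names (`Message`, `BLOCKED_WORDS`, `run_pipeline`, and the four agent functions) are hypothetical, and each "agent" is a trivial rule standing in for what would be a model call in a real system.

```python
from dataclasses import dataclass, field

@dataclass
class Message:
    """Structured hand-off between agents, instead of free-form text."""
    request: str
    intent: str = ""
    result: str = ""
    trace: list[str] = field(default_factory=list)  # accountability log

BLOCKED_WORDS = {"delete", "drop"}  # hypothetical safety policy

def safety_agent(msg: Message) -> bool:
    ok = not (BLOCKED_WORDS & set(msg.request.lower().split()))
    msg.trace.append(f"safety: {'pass' if ok else 'block'}")
    return ok

def intent_agent(msg: Message) -> None:
    msg.intent = "summarize" if "summar" in msg.request.lower() else "unknown"
    msg.trace.append(f"intent: {msg.intent}")

def execution_agent(msg: Message, store: list[str]) -> None:
    store.append(msg.request)  # the side effect that may need to be undone
    msg.result = f"done:{msg.intent}"
    msg.trace.append("execute: wrote 1 record")

def verification_agent(msg: Message) -> bool:
    ok = msg.intent != "unknown"
    msg.trace.append(f"verify: {'pass' if ok else 'fail'}")
    return ok

def run_pipeline(request: str, store: list[str]) -> Message:
    msg = Message(request)
    if not safety_agent(msg):
        return msg                    # blocked before anything executes
    intent_agent(msg)
    snapshot = list(store)            # checkpoint for rollback
    execution_agent(msg, store)
    if not verification_agent(msg):
        store[:] = snapshot           # rollback: undo the side effect
        msg.result = ""
        msg.trace.append("rollback: restored checkpoint")
    return msg
```

The `trace` list is what makes the run auditable: after any call you can read which agent passed, blocked, or triggered a rollback.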

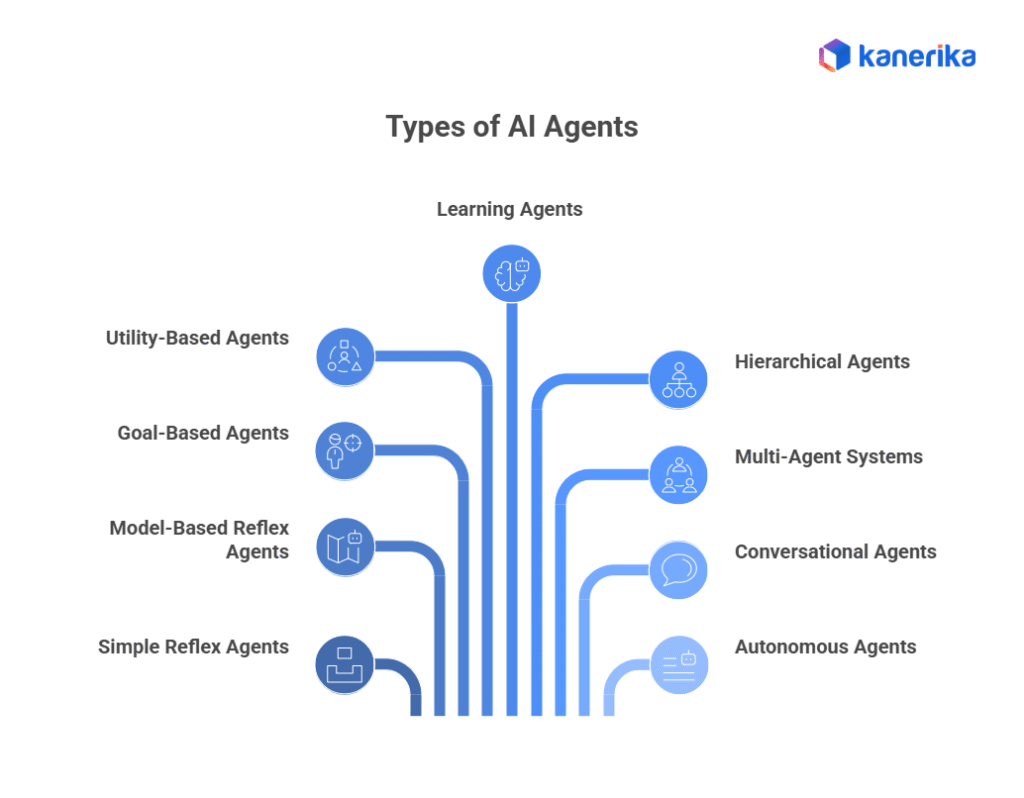

Types of AI Agents

AI agents come in different forms, each designed for specific tasks and complexity levels. Understanding these types helps you choose the right tool for your needs and anticipate how each system will perform in different scenarios.

1. Simple Reflex Agents

Simple reflex agents are the most basic type of AI agents. They act solely based on the current situation using predefined “if-then” rules. They don’t maintain memory or consider past states, nor do they plan for the future. While effective in predictable and static environments, they fail when situations require reasoning, adaptation, or learning.

Example: A spam filter that blocks emails containing certain keywords operates as a simple reflex agent. It sees “free money” in the subject line and marks it as spam. No reasoning, no context, just an immediate reaction based on rules.
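The “if-then” behavior described above fits in a few lines. This is a toy sketch, and the phrase list and function name are made up for illustration.

```python
SPAM_PHRASES = ("free money", "act now")  # hypothetical rule set

def is_spam(subject: str) -> bool:
    """Condition-action rule: reacts to the current input only, with no memory."""
    lowered = subject.lower()
    return any(phrase in lowered for phrase in SPAM_PHRASES)
```

A subject like "Money is free of charge" passes the filter because no exact phrase matches, which illustrates why such agents struggle when a situation calls for reasoning rather than pattern matching.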

2. Model-Based Reflex Agents

Model-based reflex agents improve upon simple reflex agents by maintaining an internal model of the world. They track how the environment changes and how their actions impact it. This allows them to make informed decisions even when current perception alone is insufficient.

Example: A smart thermostat, such as Nest, acts as a model-based reflex agent. It tracks your temperature preferences at various times, learns your schedule, and remembers how long it takes your house to heat or cool. It adjusts preemptively rather than just reacting when you manually change settings.
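As a rough illustration of an internal model, the sketch below tracks how quickly a house heats and uses that estimate to decide when to start heating ahead of time. The class, its averaging rule, and the numbers are assumptions for illustration and are not how Nest or any specific product works.

```python
class Thermostat:
    """Keeps an internal model: how fast the house heats, in degrees per minute."""

    def __init__(self, heat_rate: float = 0.5):
        self.heat_rate = heat_rate

    def observe(self, degrees_gained: float, minutes: float) -> None:
        """Update the model from an observed heating run (simple averaging)."""
        observed = degrees_gained / minutes
        self.heat_rate = 0.5 * self.heat_rate + 0.5 * observed

    def minutes_needed(self, current: float, target: float) -> float:
        return max(0.0, (target - current) / self.heat_rate)

    def should_heat(self, current: float, target: float, minutes_until_needed: float) -> bool:
        """Start early enough that the target is reached on time."""
        return self.minutes_needed(current, target) >= minutes_until_needed
```

The decision depends on state the agent has accumulated (`heat_rate`), not only on the current temperature reading. That is the difference from a simple reflex agent.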

3. Goal-Based Agents

Goal-based agents focus on achieving specific objectives. Instead of reacting blindly, they evaluate potential actions by reasoning about which ones will bring them closer to the desired goal. This ability to plan makes them more adaptable to new or unpredictable situations.

Example: Google Maps operates as a goal-based agent. Your goal is to reach a destination. The agent considers multiple routes, evaluates factors like distance and traffic, and recommends the path most likely to achieve your goal of arriving quickly. If traffic appears, it recalculates because the goal hasn’t changed, even though conditions have.
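Route planning toward a fixed goal can be illustrated with Dijkstra’s shortest-path algorithm. The road graph and travel times below are invented, and production routing engines are far more elaborate. The sketch only shows the "same goal, new conditions, new plan" idea.

```python
import heapq

def fastest_route(graph: dict, start: str, goal: str):
    """Dijkstra's algorithm: returns (total_minutes, path), or None if unreachable."""
    queue = [(0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, minutes in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + minutes, neighbor, path + [neighbor]))
    return None

# Hypothetical road network: travel time in minutes between places.
ROADS = {
    "home": {"highway": 5, "backroad": 8},
    "highway": {"office": 10},
    "backroad": {"office": 12},
}
```

With these numbers the highway route wins at 15 minutes. If the highway leg is raised to 30 minutes to simulate traffic, the same call returns the backroad route at 20 minutes.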

4. Utility-Based Agents

Utility-based agents don’t just aim to achieve goals; they also evaluate multiple outcomes and select the one that maximizes overall benefit. They are particularly useful in complex environments with competing priorities, where simply reaching a goal isn’t enough.

Example: Uber’s pricing and driver-matching system uses utility-based reasoning. It balances multiple competing factors like driver proximity, passenger wait time, driver earnings, and surge pricing. The system optimizes for overall marketplace efficiency rather than just matching the nearest driver to each rider. It considers what produces the best outcome across everyone using the platform.
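Balancing competing priorities usually comes down to scoring each option with a utility function and picking the maximum. The weights and field names below are invented for illustration and say nothing about how Uber actually weighs these factors.

```python
# Hypothetical weights: negative values penalize, positive values reward.
WEIGHTS = {"wait_minutes": -1.0, "driver_earnings": 0.5, "detour_km": -0.8}

def utility(option: dict) -> float:
    """Weighted sum over the factors being traded off."""
    return sum(weight * option[name] for name, weight in WEIGHTS.items())

def best_match(options: list[dict]) -> dict:
    return max(options, key=utility)

CANDIDATES = [
    {"driver": "A", "wait_minutes": 2, "driver_earnings": 6, "detour_km": 5},
    {"driver": "B", "wait_minutes": 4, "driver_earnings": 10, "detour_km": 1},
]
```

Driver A is closer (shorter wait), but `best_match(CANDIDATES)` picks driver B because the lower detour and higher earnings outweigh the extra wait under these weights.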

5. Learning Agents

Learning agents continually adapt and improve over time, based on their experiences and the feedback they receive. They are crucial when environments are dynamic or user preferences vary. Learning agents have several components: a performance element to select actions, a learning element to improve, a critic to provide feedback, and a problem generator to suggest new strategies.

Example: Netflix’s recommendation system is a learning agent. It starts with general preferences but continuously learns what you specifically enjoy. It notes which shows you finish versus abandon, what you rate highly, and what you watch repeatedly. Over time, its recommendations become more personalized and accurate because it is learning your unique taste patterns.
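The four components named above (performance element, learning element, critic, problem generator) can be mapped onto a small class. This is a toy sketch with invented names and a simple running-average update, and it does not describe Netflix’s actual system.

```python
class GenreRecommender:
    def __init__(self, genres: list[str], learning_rate: float = 0.5):
        self.scores = {genre: 0.0 for genre in genres}
        self.plays = {genre: 0 for genre in genres}
        self.learning_rate = learning_rate

    def recommend(self) -> str:
        """Performance element: act on what has been learned so far."""
        return max(self.scores, key=self.scores.get)

    @staticmethod
    def critic(finished: bool) -> float:
        """Critic: turn an observation into a feedback signal."""
        return 1.0 if finished else -1.0

    def learn(self, genre: str, finished: bool) -> None:
        """Learning element: move the score toward the latest feedback."""
        reward = self.critic(finished)
        self.scores[genre] += self.learning_rate * (reward - self.scores[genre])
        self.plays[genre] += 1

    def explore(self) -> str:
        """Problem generator: suggest the least-tried genre to gather new data."""
        return min(self.plays, key=self.plays.get)
```

After one abandoned drama and two finished comedies, `recommend()` returns comedy while `explore()` still proposes the untried documentary genre.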

6. Hierarchical Agents

Hierarchical agents manage complexity by breaking tasks into subtasks. High-level agents set goals, mid-level agents plan actions, and low-level agents execute specific tasks. This layered approach mirrors organizational structures and allows efficient problem-solving.

Example: Tesla’s Autopilot uses hierarchical agency. A high-level planner decides the route and determines when to change lanes. Mid-level controllers manage steering, acceleration, and braking. Low-level systems handle specific motor commands to actuators. Each layer focuses on its scope without getting overwhelmed by details from other levels.
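The layering can be illustrated with three small functions, one per level. The maneuver names, control values, and actuator strings are all invented. This is a schematic of the idea and has no relation to Tesla’s real software.

```python
def plan_route(destination: str) -> list[str]:
    """High level: decide the sequence of maneuvers (hypothetical fixed plan)."""
    return ["keep_lane", "change_lane_left", "keep_lane"]

def to_controls(maneuver: str) -> dict[str, float]:
    """Mid level: translate a maneuver into steering and throttle targets."""
    table = {
        "keep_lane": {"steer": 0.0, "throttle": 0.3},
        "change_lane_left": {"steer": -0.2, "throttle": 0.3},
    }
    return table[maneuver]

def to_actuators(controls: dict[str, float]) -> list[str]:
    """Low level: emit individual motor commands."""
    return [
        f"steering_motor:{controls['steer']:+.1f}",
        f"drive_motor:{controls['throttle']:.1f}",
    ]

def drive(destination: str) -> list[list[str]]:
    return [to_actuators(to_controls(m)) for m in plan_route(destination)]
```

Each function only knows about the level directly beneath it, so the planner can change without touching the actuator code.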

7. Multi-Agent Systems

Multi-agent systems consist of multiple AI agents working together, sometimes collaborating and sometimes competing. Each agent has specialized capabilities, and the system as a whole can solve problems too complex for a single agent. They excel in distributed environments and tasks requiring parallel processing.

Example: Stock market trading platforms use multi-agent systems. Some agents monitor news and social media for sentiment. Others analyze technical patterns in price movements. Risk management agents enforce position limits. Execution agents find optimal times to enter or exit trades. They operate simultaneously, share information, and coordinate decisions.

8. Conversational Agents

Conversational agents interact naturally using human language. They understand queries, maintain context, and respond appropriately across multiple exchanges. They bridge the gap between humans and machines, making AI accessible to non-technical users.

Example: ChatGPT functions as a conversational agent. It understands your questions even when phrased casually, remembers what you discussed earlier in the conversation, asks for clarification when your request is ambiguous, and generates responses that feel natural and contextual.

9. Autonomous Agents

Autonomous agents operate independently for extended periods, handling complex tasks with minimal human intervention. They plan, act, and adapt dynamically, often breaking high-level goals into subtasks and executing them sequentially or in parallel. These agents require robust error handling and decision-making to avoid inefficiencies.

Example: AutoGPT and similar projects attempt autonomous agency. You give them a high-level goal like “research the sustainable packaging market and create a competitor analysis.” The agent breaks this into subtasks, searches the web, visits company websites, extracts information, organizes findings, and produces a report. It runs for minutes or hours without you specifying each step.
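The decompose-execute-recover loop behind such agents can be sketched as below. The names `decompose` and `run_agent`, the fixed three-step plan, and the retry policy are assumptions made for illustration. A real agent would ask a model to produce the plan and would call real tools in `do_step`.

```python
from collections import deque
from typing import Callable

def decompose(goal: str) -> list[str]:
    """Hypothetical planner: a real agent would generate this list with an LLM."""
    return [f"search: {goal}", f"extract: {goal}", f"report: {goal}"]

def run_agent(goal: str, do_step: Callable[[str], str],
              max_steps: int = 10, max_retries: int = 1) -> list[str]:
    queue = deque(decompose(goal))
    log: list[str] = []
    retries: dict[str, int] = {}
    steps = 0
    while queue and steps < max_steps:   # step budget prevents endless loops
        task = queue.popleft()
        steps += 1
        try:
            log.append(do_step(task))
        except RuntimeError as err:
            retries[task] = retries.get(task, 0) + 1
            if retries[task] <= max_retries:
                queue.appendleft(task)   # retry this task before moving on
            else:
                log.append(f"gave up: {task} ({err})")
    return log
```

The step budget and retry cap are the "robust error handling" in miniature: without them, a failing tool could keep the agent looping indefinitely.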

How Kanerika Is Driving Practical AI Adoption in Enterprises

Kanerika builds AI systems that solve real business problems. As a Microsoft Solutions Partner for Data and AI, it uses platforms like Azure, Power BI, and Microsoft Fabric to create secure, scalable solutions for industries such as manufacturing, retail, finance, and healthcare.

Its AI agents work directly with enterprise data — including scanned documents, images, voice inputs, and structured formats. These systems are designed to fit into existing workflows, helping teams automate routine tasks, reduce manual effort, and make faster decisions.

The company is ISO 27701 and 27001 certified, ensuring strict data privacy and security. It also supports enterprise teams with training and deployment services, giving them a clear path to adopting agentic AI without disrupting operations.

Kanerika’s AI Agents: Specialized, Secure, and Workflow-Ready

Kanerika builds AI agents that are task-specific, privacy-aware, and designed to work together. Each agent addresses a specific challenge, ranging from document analysis to voice interaction, and can operate independently or as part of a larger multi-agent system.

Here’s a closer look at the agents:

- DokGPT retrieves answers from scanned documents and PDFs using natural language queries. It’s used in legal, finance, and compliance-heavy environments where document intelligence matters.

- Jennifer handles voice-based tasks like scheduling and call routing. It’s built for customer support and operations teams that rely on phone interactions.

- Karl analyzes structured data and generates visual summaries. It helps analysts and decision-makers spot trends quickly.

- Alan summarizes long legal contracts into short, actionable insights. It’s used by legal teams to reduce review time and improve contract analysis.

- Susan redacts sensitive data to meet GDPR and HIPAA standards. It’s used in healthcare, HR, and compliance workflows.

- Mike checks documents for math errors and formatting issues. It’s built for finance and reporting teams that need accuracy.

- Jarvis acts as a general-purpose orchestrator. It coordinates tasks between agents, manages workflows, and ensures smooth execution across systems. Jarvis is especially useful in multi-agent setups where different agents need to collaborate on a shared goal.

These agents are built with privacy and security in mind. They don’t store data unnecessarily and are designed to operate safely within enterprise environments. Kanerika’s architecture combines real-time insights, predictive analytics, and automation to create a flexible system that adapts to enterprise needs, helping teams move faster without compromising control.

FAQs

1. What is an AI agent and how does it differ from regular AI?

AI agents are autonomous systems that can plan, reason, and act independently to achieve specific goals. Unlike traditional AI models, which only provide responses when prompted, AI agents can make decisions, execute multi-step workflows, and learn from context, making them more like digital assistants than simple tools. This autonomy is a core feature of agentic AI, transforming how businesses automate processes.

2. How do AI agents automate complex business tasks?

AI agents can handle repetitive and multi-step operations across departments, from processing invoices and contracts to managing customer queries. By integrating agentic AI into workflows, businesses can reduce manual work, improve accuracy, and free employees to focus on strategic tasks. Tools like Kanerika’s AI agents or OpenAI’s GPT agents are designed to manage such operations efficiently.

3. What industries benefit the most from agentic AI?

Agentic AI is widely adopted in finance, healthcare, logistics, retail, and manufacturing. For example, banks use AI agents for compliance automation, healthcare providers for document management, and logistics firms for supplier onboarding. Any sector dealing with complex data, repetitive workflows, or large-scale decision-making can leverage AI agents for efficiency and cost reduction.

4. How secure and private are AI agents when handling sensitive data?

Security and privacy are critical in agentic AI deployment. Leading AI agent platforms, such as Kanerika, Microsoft, and Anthropic, are designed to support compliance with standards like GDPR, HIPAA, and ISO 27701. These systems minimize unnecessary data storage, use encryption, and provide controlled access, so businesses can automate processes without compromising data privacy.

5. How do AI agents improve business decision-making?

AI agents analyze large datasets, detect patterns, and provide actionable insights in real time, helping businesses make faster, more informed, and data-driven decisions.