Let’s be real: choosing DataOps tools can feel like shopping for a car when you don’t even have a driver’s license yet. There are too many options, too much tech-speak, and somehow everything costs more than you expected. Meanwhile, poor data management is a serious problem that can disrupt your entire business. IBM has estimated that poor data quality costs businesses in the U.S. alone around $3.1 trillion every year.

The right DataOps setup makes sure your data doesn’t just exist; it works for you without dragging your teams down. So if you’re tired of spreadsheets that lie and dashboards that freeze, it may be time to figure out what to look for. Let’s break it down in a way that actually makes sense.

What is DataOps?

DataOps is a collaborative data management methodology that integrates DevOps principles with data analytics. It streamlines the end-to-end data lifecycle by automating data delivery, ensuring quality, and improving cross-functional collaboration. Think of it as a bridge connecting data engineers, scientists, and business users through automated workflows, continuous integration/deployment, and monitoring practices.

DataOps aims to reduce data friction, accelerate insights delivery, and increase the reliability of data products while maintaining governance and security standards.

Why DataOps Actually Matters in Today’s Data Ecosystem

Data is growing faster than most companies can keep up with. But it’s not just about size — it’s about trust, speed, and teamwork. That’s where DataOps steps in.

Think of DataOps as the behind-the-scenes system that keeps your data clean, your pipelines flowing, and your team in sync. Without it, you get broken dashboards, messy handovers, constant back-and-forth between teams, and worst of all — decisions based on outdated or wrong info.

Here’s why it matters now more than ever:

Data volumes are exploding – IDC says we’ll hit over 180 zettabytes by 2025. That’s too much to handle manually.

More teams rely on data – From marketing to operations, everyone wants fast answers.

Mistakes are expensive – Bad data can cost millions. IBM estimates $3.1 trillion is lost every year in the U.S. due to poor data quality.

Speed is everything – DataOps brings automation, which cuts delays and boosts reliability.

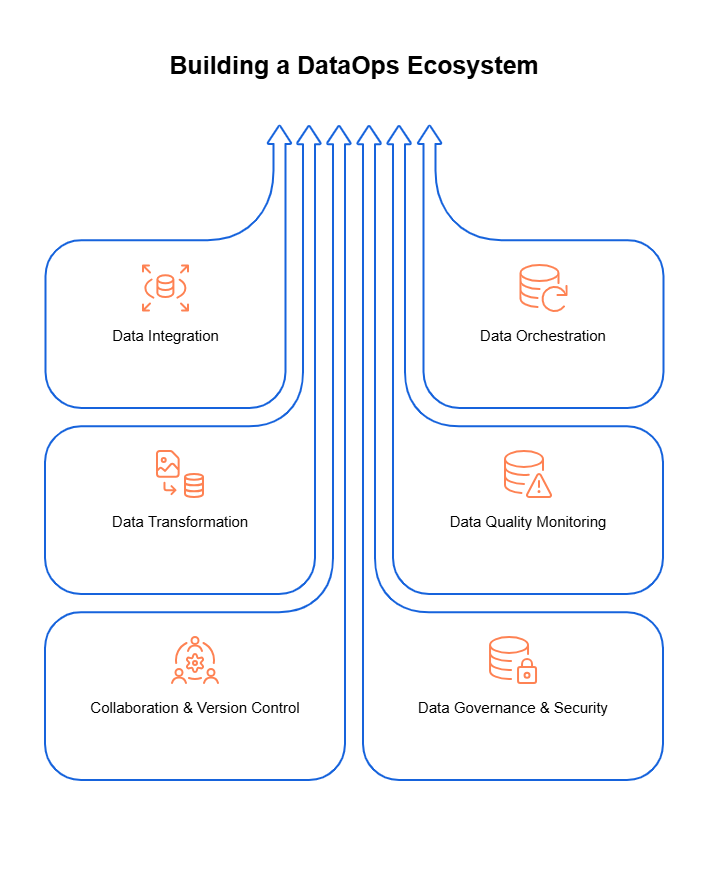

What Are the Core Components of DataOps Tools?

DataOps isn’t a single tool or process — it’s a mix of parts that all work together to make your data reliable, fast, and usable. Here’s what it’s made of:

1. Data Integration

This is where it all starts — pulling data from different sources and bringing it together in one place. Whether it’s a database, a SaaS app, or a CSV file, you need a way to connect it all.

- Tools: Fivetran, Talend, Stitch

- Focus: Automate extraction and loading

- Goal: Avoid manual copy-paste chaos
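Managed connectors usually avoid reloading everything on every run. Instead, they sync incrementally from a saved cursor. The following minimal Python sketch shows that idea. It assumes each source row carries an `updated_at` timestamp. The `extract_incremental` and `load` helpers and the in-memory `warehouse` dict are hypothetical stand-ins, not the API of Fivetran, Talend, or Stitch:

```python
from datetime import datetime

def extract_incremental(source_rows, cursor):
    """Return rows changed after `cursor` plus the new high-water mark."""
    # Strict '>' assumes each change gets a distinct timestamp; real connectors
    # must also handle ties and late-arriving rows.
    new_rows = [r for r in source_rows if r["updated_at"] > cursor]
    new_cursor = max((r["updated_at"] for r in new_rows), default=cursor)
    return new_rows, new_cursor

def load(warehouse, rows, key="id"):
    """Upsert rows into an in-memory 'table' keyed by primary key."""
    for row in rows:
        warehouse[row[key]] = row
    return warehouse

source = [
    {"id": 1, "name": "Ada", "updated_at": datetime(2025, 1, 1)},
    {"id": 2, "name": "Grace", "updated_at": datetime(2025, 1, 2)},
]
warehouse = {}
cursor = datetime.min  # first run: everything is new

rows, cursor = extract_incremental(source, cursor)
load(warehouse, rows)

# Between runs, row 2 is updated and row 3 is added at the source.
source[1] = {"id": 2, "name": "Grace H.", "updated_at": datetime(2025, 1, 3)}
source.append({"id": 3, "name": "Linus", "updated_at": datetime(2025, 1, 4)})

rows, cursor = extract_incremental(source, cursor)
load(warehouse, rows)
print(len(rows), cursor.date())  # 2 2025-01-04
```

The second sync moves only the two changed rows instead of the whole table, because `cursor` is persisted between runs.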

2. Data Orchestration

Think of this as the conductor of the data pipeline — managing when and how data moves through each stage.

- Tools: Apache Airflow, Prefect, Dagster

- Focus: Scheduling, dependencies, failure handling

- Goal: Keep pipelines running like clockwork
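Orchestrators differ in syntax, but the core loop is similar. They resolve dependencies, run tasks in order, retry transient failures, and stop before bad data flows downstream. The following minimal sketch uses Python's standard-library `graphlib` (3.9+). The task names and the `run_pipeline` helper are hypothetical, and this is not how Airflow, Prefect, or Dagster are invoked:

```python
from graphlib import TopologicalSorter

def run_pipeline(tasks, deps, max_retries=2):
    """Run tasks in dependency order, retrying each failure up to max_retries times."""
    order = list(TopologicalSorter(deps).static_order())
    log = []
    for name in order:
        for attempt in range(1, max_retries + 2):
            try:
                tasks[name]()
                log.append((name, attempt, "success"))
                break
            except Exception:
                log.append((name, attempt, "failed"))
        else:
            # All attempts failed: halt so downstream tasks never run on missing data.
            raise RuntimeError(f"{name!r} failed after {max_retries + 1} attempts")
    return order, log

attempts = {"transform": 0}

def extract():
    pass

def transform():
    # Simulate a transient error on the first attempt only.
    attempts["transform"] += 1
    if attempts["transform"] == 1:
        raise ConnectionError("warehouse timeout")

def load():
    pass

tasks = {"extract": extract, "transform": transform, "load": load}
# Each key lists the tasks that must finish before it can start.
deps = {"transform": {"extract"}, "load": {"transform"}}

order, log = run_pipeline(tasks, deps)
print(order)  # ['extract', 'transform', 'load']
print(log)
```

This sketch retries immediately. Real orchestrators typically add delays between retries, timeouts, and alerting on top of this loop.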

3. Data Transformation

Raw data is messy. This step cleans it up and shapes it into something useful — like turning timestamps into actual dates or splitting full names into first/last.

- Tools: dbt, Apache Spark, Dataform

- Focus: Clean, standardize, reshape

- Goal: Get data analytics-ready
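The paragraph above gives two examples: turning timestamps into dates and splitting full names. Both look like this in plain Python. In practice, dbt would express this in SQL and Spark in DataFrame code. The standalone function and its field names (`full_name`, `signup_ts`, `email`) are hypothetical:

```python
from datetime import datetime, timezone

def transform(raw):
    """Clean one raw record into an analytics-ready shape."""
    # Naive split: the first word becomes first_name, everything after it last_name.
    first, _, last = raw["full_name"].strip().partition(" ")
    # Interpret the Unix timestamp as UTC before taking the calendar date.
    signup = datetime.fromtimestamp(raw["signup_ts"], tz=timezone.utc)
    return {
        "first_name": first.title(),
        "last_name": last.strip().title(),
        "signup_date": signup.date().isoformat(),
        "email": raw["email"].strip().lower(),
    }

raw = {"full_name": "  ada lovelace ", "signup_ts": 1735689600, "email": " Ada@Example.COM "}
clean = transform(raw)
print(clean)
```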

4. Data Quality Monitoring

Even with great pipelines, things break. Data might go missing, spike unexpectedly, or show weird trends. This part catches those issues before they mess up your reports.

- Tools: Great Expectations, Monte Carlo, Soda

- Focus: Alerts, validation, anomaly detection

- Goal: Trust your data, not second-guess it
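Two of the most common checks are a validation rule and a volume anomaly test. Both can be sketched with the standard library alone. This is a hand-rolled illustration, not the API of Great Expectations, Monte Carlo, or Soda. The 3-standard-deviation threshold and the sample counts are assumptions:

```python
from statistics import mean, stdev

def check_not_null(rows, column):
    """Return the positions of rows where `column` is missing."""
    return [i for i, row in enumerate(rows) if row.get(column) is None]

def volume_anomaly(history, today, z_threshold=3.0):
    """Flag `today` if it is more than z_threshold standard deviations from the historical mean."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > z_threshold

rows = [{"order_id": 1, "amount": 20.0}, {"order_id": 2, "amount": None}]
daily_counts = [1000, 1040, 980, 1010, 995, 1025, 1005]  # last 7 days of row counts

alerts = []
missing = check_not_null(rows, "amount")
if missing:
    alerts.append(f"amount is null in rows {missing}")
if volume_anomaly(daily_counts, today=310):
    alerts.append("today's row count is far outside the recent range")
print(alerts)
```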

5. Collaboration & Version Control

Multiple teams touch the same data. You need structure — version history, approval flows, and ways to track changes.

- Tools: Git, GitLab, Bitbucket (used with dbt or similar tools)

- Focus: Reproducibility, change tracking

- Goal: Avoid the “who broke it?” blame game

6. Data Governance & Security

Who can access what? Is sensitive data being exposed? This layer adds rules and protection to make sure data use stays responsible and compliant.

- Tools: Alation, Collibra, Immuta

- Focus: Access control, data lineage, compliance

- Goal: Keep regulators happy and avoid leaks
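Access control often comes down to a policy that decides, per role, which sensitive columns are shown or masked. The following minimal sketch shows column-level masking. The `POLICY` mapping, role names, and column names are hypothetical. Dedicated governance tools typically enforce rules like these centrally rather than in ad hoc application code:

```python
# Hypothetical policy: which roles may see each sensitive column unmasked.
POLICY = {
    "email": {"admin", "support"},
    "ssn": {"admin"},
}
MASK = "****"

def apply_policy(row, role):
    """Return a copy of `row` with columns the role may not see replaced by MASK."""
    return {
        column: value if column not in POLICY or role in POLICY[column] else MASK
        for column, value in row.items()
    }

row = {"customer_id": 42, "email": "ada@example.com", "ssn": "123-45-6789"}
print(apply_policy(row, "analyst"))  # {'customer_id': 42, 'email': '****', 'ssn': '****'}
print(apply_policy(row, "support"))  # {'customer_id': 42, 'email': 'ada@example.com', 'ssn': '****'}
```

Columns missing from `POLICY` are visible to everyone here. A stricter setup would deny by default and list allowed columns explicitly.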

FLIP: An AI-powered, Low-Code/No-Code DataOps Platform

FLIP makes extracting actionable insights from data simpler and quicker with its AI-powered low-code/no-code platform. It enables businesses to simplify and automate complex data transformation pipelines, speeding up data operations and decision-making.

1. Pre-built Connectors for Seamless Integration

FLIP offers pre-built connectors that easily integrate with existing workflows, enhancing pipeline efficiency and ensuring smooth data operations across systems. This seamless integration saves valuable time and resources, enabling faster time-to-insight.

2. Powerful Data Manipulation

FLIP’s advanced data manipulation tools empower you to perform complex transformations while maintaining data quality. Whether it’s workflow customization or meeting complex business requirements, FLIP offers flexibility and operational efficiency at scale.

3. Real-time Automation

With real-time processing and automated alerts, FLIP helps you stay ahead of potential issues. The platform monitors your pipeline continuously, providing immediate insights and proactive file monitoring, ensuring that no critical data is missed.

4. Enterprise Security and Compliance

FLIP prioritizes security with robust protocols and supports hybrid data formats, ensuring your data is always compliant and protected.

FLIP is the modern solution to efficient, automated, and secure DataOps.

Other Top DataOps Tools in 2025

1. Fivetran

Fivetran revolutionizes data integration with its zero-maintenance pipelines that automatically adapt to source schema changes, enabling teams to focus on analysis rather than pipeline maintenance. Its cloud-native architecture allows organizations to centralize disparate data sources quickly without writing or maintaining custom code.

Key Features

- 300+ pre-built, fully-managed connectors requiring minimal configuration

- Automatic schema drift handling and self-healing pipelines

- Incremental updates with log-based change data capture

- SOC 2, HIPAA, GDPR, and other compliance certifications

Ideal Use Cases

- Consolidating SaaS application data into a central warehouse

- Building near-real-time analytics dashboards from multiple sources

- Implementing a scalable ELT architecture with minimal engineering overhead

- Supporting global data operations with distributed data sources
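Log-based change data capture reads inserts, updates, and deletes from the source database's change log. It then replays them on the destination in order. The following minimal sketch shows the replay step. The event shape (`lsn`, `op`, `key`, `row`) is a simplified assumption and not Fivetran's internal format:

```python
def apply_changes(target, events):
    """Apply CDC events to `target` (a dict keyed by primary key) in log order."""
    for event in sorted(events, key=lambda e: e["lsn"]):
        if event["op"] == "delete":
            target.pop(event["key"], None)
        elif event["op"] in ("insert", "update"):
            # Treat both as an upsert of the full row image.
            target[event["key"]] = event["row"]
        else:
            raise ValueError(f"unknown op {event['op']!r}")
    return target

target = {1: {"id": 1, "plan": "free"}}
events = [
    {"lsn": 102, "op": "delete", "key": 1},
    {"lsn": 100, "op": "update", "key": 1, "row": {"id": 1, "plan": "pro"}},
    {"lsn": 101, "op": "insert", "key": 2, "row": {"id": 2, "plan": "free"}},
]
result = apply_changes(target, events)
print(result)  # {2: {'id': 2, 'plan': 'free'}}
```

Ordering by log position matters here. Applying the delete before the update would resurrect customer 1.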

2. dbt (Data Build Tool)

dbt transforms how organizations handle data transformation by bringing software engineering best practices to analytics code, enabling analysts to build reliable data models through SQL. It creates a collaborative environment where data models become version-controlled, tested, and documented assets that teams can trust.

Key Features

- Version control integration with Git-based workflows

- Automated testing framework for data validation

- Comprehensive documentation generation

- Modular approach to SQL transformations with reusable code

Ideal Use Cases

- Standardizing transformation logic across data teams

- Implementing CI/CD for analytics code

- Creating a single source of truth for business metrics

- Enabling analysts to contribute to the data pipeline without engineering support
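dbt ships generic tests such as `unique`, `not_null`, and `accepted_values`. A test passes when its query returns zero failing records. The Python sketch below mirrors that logic on in-memory rows purely to show what the tests check. It is not how dbt runs them: dbt compiles tests to SQL and executes them in the warehouse. The `orders` data is hypothetical:

```python
from collections import Counter

def failing_unique(rows, column):
    """Values of `column` that appear more than once (like dbt's `unique` test)."""
    counts = Counter(row[column] for row in rows)
    return sorted(value for value, n in counts.items() if n > 1)

def failing_not_null(rows, column):
    """Rows where `column` is null (like dbt's `not_null` test)."""
    return [row for row in rows if row[column] is None]

def failing_accepted_values(rows, column, allowed):
    """Rows whose `column` falls outside `allowed` (like dbt's `accepted_values` test)."""
    return [row for row in rows if row[column] not in allowed]

orders = [
    {"order_id": 1, "status": "shipped"},
    {"order_id": 2, "status": "pending"},
    {"order_id": 2, "status": "shipped"},
    {"order_id": 3, "status": "lost?"},
]

results = {
    "unique(order_id)": failing_unique(orders, "order_id"),
    "not_null(status)": failing_not_null(orders, "status"),
    "accepted_values(status)": failing_accepted_values(orders, "status", {"shipped", "pending", "returned"}),
}
for name, failures in results.items():
    # A test passes only when it returns zero failing records.
    print(f"{'PASS' if not failures else 'FAIL'} {name}: {failures}")
```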

3. Apache Airflow

Apache Airflow offers a programmatic approach to authoring, scheduling, and monitoring workflows, giving data teams unprecedented control and visibility into their data pipelines. Its Python-based DAGs (Directed Acyclic Graphs) allow engineers to express complex dependencies and execution logic with precision.

Key Features

- Python-based workflow definition for maximum flexibility

- Rich web UI for monitoring and troubleshooting

- Extensible architecture with 200+ ready-to-use operators

- Backfilling capabilities for historical data processing

Ideal Use Cases

- Orchestrating complex multi-system data workflows

- Managing dependencies between various data processing tasks

- Implementing retry logic and failure handling for critical pipelines

- Scaling data operations across cloud environments
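Backfilling means re-running a pipeline for past intervals. It is only safe if each run is idempotent. The following minimal sketch shows the idea in plain Python. The `daily_intervals`, `backfill`, and `run_partition` helpers and the `completed` set are hypothetical. Airflow itself handles this through DAG runs with data intervals rather than code like this:

```python
from datetime import date, timedelta

def daily_intervals(start, end):
    """Yield (interval_start, interval_end) pairs covering [start, end)."""
    day = start
    while day < end:
        yield day, day + timedelta(days=1)
        day += timedelta(days=1)

def backfill(start, end, run_partition, completed):
    """Run `run_partition` for each day not yet completed; return the days that ran."""
    ran = []
    for lo, hi in daily_intervals(start, end):
        if lo in completed:
            continue
        run_partition(lo, hi)
        completed.add(lo)
        ran.append(lo)
    return ran

written = {}

def run_partition(start, end):
    # Overwrite the whole partition for [start, end) so reruns never duplicate data.
    written[start] = f"rows for {start.isoformat()}"

completed = {date(2025, 1, 2)}
ran = backfill(date(2025, 1, 1), date(2025, 1, 5), run_partition, completed)
print([d.isoformat() for d in ran])  # ['2025-01-01', '2025-01-03', '2025-01-04']
```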

4. Monte Carlo

Monte Carlo delivers autonomous data observability that helps teams detect, resolve, and prevent data incidents before they impact downstream consumers. Its machine learning-driven approach continuously monitors data assets for quality issues without requiring manual rule configuration.

Key Features

- Automated anomaly detection using machine learning

- End-to-end data lineage visualization

- Incident management workflow integration

- Field-level data quality monitoring

Ideal Use Cases

- Preventing data downtime in critical analytics environments

- Maintaining SLAs for data delivery and quality

- Tracking upstream and downstream impact of data changes

- Reducing time to detection and resolution for data incidents
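Impact analysis on lineage is a graph traversal. Walking child edges finds everything downstream of a change, and walking the reversed graph finds upstream sources. The following minimal sketch works over a hypothetical lineage map with made-up asset names. Observability tools maintain this graph for you, and the code does not reflect any vendor's API:

```python
from collections import deque

# Hypothetical lineage: each asset maps to the assets built directly from it.
LINEAGE = {
    "raw.orders": ["stg.orders"],
    "stg.orders": ["mart.revenue", "mart.customer_ltv"],
    "mart.revenue": ["dashboard.exec_kpis"],
    "mart.customer_ltv": [],
    "dashboard.exec_kpis": [],
}

def walk_lineage(graph, start):
    """Breadth-first walk returning every asset reachable from `start`."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return sorted(seen)

def invert(graph):
    """Reverse every edge so the walk goes from an asset to its sources."""
    parents = {node: [] for node in graph}
    for node, children in graph.items():
        for child in children:
            parents.setdefault(child, []).append(node)
    return parents

downstream = walk_lineage(LINEAGE, "stg.orders")
upstream = walk_lineage(invert(LINEAGE), "mart.revenue")
print(downstream)  # ['dashboard.exec_kpis', 'mart.customer_ltv', 'mart.revenue']
print(upstream)    # ['raw.orders', 'stg.orders']
```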

5. Alation

Alation combines powerful data cataloging with collaborative features to create a central platform where teams can discover, understand, and trust their data assets. Its active metadata management capabilities help organizations maintain governance while enabling broader data democratization.

Key Features

- Automated data discovery and intelligent metadata extraction

- Built-in data governance and stewardship workflows

- Collaboration tools including conversations and annotations

- Integration with business intelligence and analytics tools

Ideal Use Cases

- Implementing data governance at enterprise scale

- Enabling self-service analytics while maintaining compliance

- Building a searchable inventory of all data assets

- Capturing and preserving institutional knowledge about data
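At its core, a searchable data inventory is an index from metadata terms to assets. The following minimal sketch builds a keyword index over hypothetical catalog entries. The entry fields and the AND-matching behavior are assumptions, and Alation's own search is far richer than this:

```python
import re
from collections import defaultdict

# Hypothetical catalog entries.
CATALOG = [
    {"name": "mart.revenue", "description": "Daily revenue by region", "owner": "finance", "tags": ["kpi", "certified"]},
    {"name": "stg.orders", "description": "Cleaned order events", "owner": "data-eng", "tags": ["staging"]},
    {"name": "mart.customer_ltv", "description": "Customer lifetime value by cohort", "owner": "growth", "tags": ["kpi"]},
]

def tokenize(text):
    """Lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(catalog):
    """Map each token to the set of asset names whose metadata contains it."""
    index = defaultdict(set)
    for entry in catalog:
        text = " ".join([entry["name"], entry["description"], entry["owner"], *entry["tags"]])
        for token in tokenize(text):
            index[token].add(entry["name"])
    return index

def search(index, query):
    """Return assets matching every term in the query (AND semantics)."""
    terms = tokenize(query)
    if not terms:
        return []
    return sorted(set.intersection(*(index.get(t, set()) for t in terms)))

index = build_index(CATALOG)
print(search(index, "kpi revenue"))  # ['mart.revenue']
print(search(index, "kpi"))          # ['mart.customer_ltv', 'mart.revenue']
```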

What Are the Benefits of Using DataOps Tools?

1. Increased Data Pipeline Efficiency and Reliability

DataOps tools automate repetitive tasks and standardize workflows, dramatically reducing manual intervention and human error. Through continuous integration and monitoring, data pipelines become more resilient, with self-healing capabilities that address issues before they cascade.

Some organizations that implement robust DataOps solutions report up to 70% fewer pipeline failures and 3x faster data processing, giving business stakeholders data delivery they can depend on.

2. Reduced Time-to-insight for Business Users

By streamlining the journey from raw data to actionable intelligence, DataOps tools collapse traditional timelines from weeks to hours or minutes. Automated testing and validation eliminate lengthy quality assurance cycles, while self-service capabilities empower business users to access and analyze data without technical bottlenecks.

This acceleration enables organizations to respond to market changes faster, identify opportunities sooner, and make data-driven decisions while information is still relevant.

3. Better Collaboration Between Data Teams

DataOps platforms create a shared workspace where data engineers, scientists, analysts, and business users collaborate using common tools and language. Version control and documentation features preserve institutional knowledge, while standardized processes eliminate silos and “shadow IT.”

Teams gain transparency into the entire data lifecycle, fostering cross-functional problem-solving and innovation, ultimately creating a unified data culture where diverse expertise converges to extract maximum value from data assets.

4. Enhanced Data Quality and Governance

DataOps tools implement automated quality checks throughout the data pipeline, catching anomalies and inconsistencies immediately. Comprehensive data lineage tracking enables root cause analysis and regulatory compliance by documenting every transformation.

Governance policies become executable code rather than static documents, ensuring consistent application across all data processes. The result is higher stakeholder trust in data assets and dramatically reduced compliance risks.

5. Cost Reduction Through Automation and Optimization

By eliminating repetitive manual tasks, DataOps tools reduce labor costs while freeing skilled personnel for higher-value work. Resource optimization features automatically scale computing resources up or down based on actual needs, while predictive analytics prevent costly overprovisioning.

Enhanced visibility into the entire data ecosystem helps identify redundant processes and storage, while standardized approaches reduce technical debt. Some organizations report 30-40% lower operational costs after a mature DataOps implementation.

Best Practices for Implementing DataOps Tools

1. Start Small

Begin your DataOps journey with a focused approach by selecting a single pipeline with measurable business impact. This controlled environment allows your team to learn, adjust methodology, and demonstrate value before tackling more complex data ecosystems. The confidence and experience gained from early wins create momentum for broader adoption.

- Choose a data pipeline that addresses a specific business problem with high visibility

- Document baseline metrics before implementation to quantify improvements

- Create a dedicated cross-functional “DataOps tiger team” to champion initial efforts
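Recording baseline metrics turns the pilot's impact into a simple calculation. The following minimal sketch shows that calculation. The metric names and all numbers are illustrative placeholders, not measured results:

```python
def pct_change(before, after):
    """Percentage change from the baseline; negative values mean a reduction."""
    return round((after - before) / before * 100, 1)

# Illustrative placeholder numbers, not measured results.
baseline = {"failed_runs_per_month": 12, "hours_to_refresh": 6.0, "manual_fixes_per_week": 5}
after_pilot = {"failed_runs_per_month": 4, "hours_to_refresh": 1.5, "manual_fixes_per_week": 2}

report = {metric: pct_change(baseline[metric], after_pilot[metric]) for metric in baseline}
print(report)  # {'failed_runs_per_month': -66.7, 'hours_to_refresh': -75.0, 'manual_fixes_per_week': -60.0}
```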

2. Ensure Data Quality

Data quality forms the bedrock of successful DataOps implementations, requiring systematic validation throughout the entire pipeline. Building quality checks as code directly into your workflows creates a self-monitoring system that prevents bad data from propagating downstream. This proactive approach transforms quality from periodic assessment to continuous assurance.

- Implement automated testing with clearly defined thresholds for data accuracy, completeness, and consistency

- Create data quality SLAs with business stakeholders to align expectations and priorities

- Establish data quality incident response procedures with clear ownership and escalation paths
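Thresholds, SLAs, and ownership can live together as code. The following minimal sketch checks completeness and freshness against an SLA and names who to notify on a breach. The `SLA` values, table name, owner, and `evaluate_sla` helper are all hypothetical:

```python
from datetime import datetime, timedelta, timezone

# Hypothetical SLA agreed with stakeholders for one table.
SLA = {
    "table": "mart.revenue",
    "owner": "finance-data-team",
    "min_completeness": 0.99,  # share of rows with every required field present
    "max_staleness": timedelta(hours=6),
}

def evaluate_sla(rows, required, last_loaded_at, sla, now):
    """Compare completeness and freshness against the SLA and name who to notify."""
    complete = sum(all(row.get(c) is not None for c in required) for row in rows)
    completeness = complete / len(rows) if rows else 0.0
    staleness = now - last_loaded_at
    breaches = []
    if completeness < sla["min_completeness"]:
        breaches.append(f"completeness {completeness:.1%} < {sla['min_completeness']:.0%}")
    if staleness > sla["max_staleness"]:
        breaches.append(f"data is {staleness} old (limit {sla['max_staleness']})")
    return {
        "table": sla["table"],
        "breaches": breaches,
        # Route breaches to a named owner so escalation is never ambiguous.
        "notify": sla["owner"] if breaches else None,
    }

rows = [{"region": "EU", "amount": 10.0}] * 97 + [{"region": None, "amount": 5.0}] * 3
result = evaluate_sla(
    rows,
    required=["region", "amount"],
    last_loaded_at=datetime(2025, 1, 1, 6, 0, tzinfo=timezone.utc),
    sla=SLA,
    now=datetime(2025, 1, 1, 14, 0, tzinfo=timezone.utc),
)
print(result)
```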

3. Promote Collaboration

Breaking down traditional barriers between technical and business teams accelerates value creation in DataOps environments. Shared tools, common vocabularies, and collaborative processes replace siloed approaches and finger-pointing. When diverse perspectives converge around data workflows, organizations discover innovative solutions and build mutual understanding.

- Establish regular cross-functional ceremonies that bring together technical and business stakeholders

- Create shared responsibilities through tools that democratize access while maintaining governance

- Implement collaborative documentation practices where knowledge is continuously refined by all team members

4. Monitor and Iterate

Successful DataOps implementations evolve continuously through systematic observation and refinement. Comprehensive monitoring captures both technical metrics and business outcomes, creating feedback loops that drive ongoing optimization. This culture of continuous improvement transforms DataOps from a static implementation to a dynamic capability.

- Deploy observability solutions that capture both pipeline health metrics and business impact indicators

- Schedule regular retrospectives to identify bottlenecks and prioritize improvements

- Create a formal mechanism for users to suggest workflow enhancements and report issues
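Pipeline health metrics are usually simple aggregates over run history. The following minimal sketch computes a success rate and nearest-rank duration percentiles. The run records are hypothetical, and an observability tool would collect them automatically:

```python
import math

def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of values at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

# Hypothetical run history exported from an orchestrator.
runs = [
    {"status": "success", "seconds": 310},
    {"status": "success", "seconds": 295},
    {"status": "success", "seconds": 300},
    {"status": "success", "seconds": 320},
    {"status": "success", "seconds": 305},
    {"status": "failed", "seconds": 900},
    {"status": "success", "seconds": 298},
    {"status": "success", "seconds": 302},
    {"status": "success", "seconds": 315},
    {"status": "success", "seconds": 299},
]

durations = [run["seconds"] for run in runs]
summary = {
    "runs": len(runs),
    "success_rate": sum(run["status"] == "success" for run in runs) / len(runs),
    "p50_seconds": percentile(durations, 50),
    "p95_seconds": percentile(durations, 95),
}
print(summary)  # {'runs': 10, 'success_rate': 0.9, 'p50_seconds': 302, 'p95_seconds': 900}
```

Tracking p95 alongside the median surfaces slow outliers, like the 900-second run, that an average would blur.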

Kanerika: Transforming Your Data Management with Advanced DataOps Tools and Solutions

As a renowned data and AI services company, Kanerika has been empowering businesses across industries to enhance their data workflows with cutting-edge solutions. From data consolidation and modeling to data transformation and analysis, our custom-built solutions have effectively addressed critical business bottlenecks, driving predictable ROI for our clients.

Leveraging the best of tools and technologies, including FLIP — an AI-powered low-code/no-code DataOps platform — we design and develop robust data and AI solutions that help businesses stay ahead of the competition. DataOps is at the core of our approach, ensuring that your data operations are not only streamlined but also automated for maximum efficiency, security, and compliance.

By partnering with Kanerika, you gain a strategic ally who will simplify your data processes, enhance collaboration across teams, and empower your decision-making with reliable, actionable insights.

Frequently Asked Questions

What are the three pipelines of DataOps?

DataOps doesn’t rigidly adhere to “three pipelines,” but rather emphasizes a continuous flow encompassing three key areas. Think of it as ingestion (getting data in), processing (cleaning, transforming, enriching), and delivery (making data accessible to consumers). These areas interact constantly, creating a dynamic, iterative cycle focused on efficient and reliable data. The exact tools and steps within each vary based on the organization’s needs.
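The three areas can be pictured as three composable functions. The following toy sketch uses only the standard library. The inline CSV and the JSON output are stand-ins for real sources and consumers:

```python
import csv
import io
import json

RAW_CSV = "id,amount\n1,19.99\n2,\n3,5.00\n"

def ingest(text):
    """Ingestion: read raw records from a source (here, an in-memory CSV)."""
    return list(csv.DictReader(io.StringIO(text)))

def process(records):
    """Processing: drop incomplete rows and cast fields to proper types."""
    return [{"id": int(r["id"]), "amount": float(r["amount"])} for r in records if r["amount"]]

def deliver(records):
    """Delivery: publish in a format consumers can use (here, JSON)."""
    return json.dumps(records)

output = deliver(process(ingest(RAW_CSV)))
print(output)  # [{"id": 1, "amount": 19.99}, {"id": 3, "amount": 5.0}]
```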

What is the difference between DataOps vs DevOps?

DataOps focuses on streamlining the entire data lifecycle, from ingestion to analysis and delivery of insights, emphasizing automation and collaboration across teams. DevOps, conversely, centers on software development and deployment, aiming for faster and more reliable releases. While both share principles like automation and CI/CD, DataOps tackles data-centric challenges, whereas DevOps targets software applications. Essentially, DataOps is DevOps applied specifically to data.

What is a DataOps platform?

A DataOps platform streamlines the entire data lifecycle, from ingestion to analysis, automating tasks and improving collaboration. It’s like a central nervous system for your data, ensuring efficient flow and high-quality insights. Think of it as DevOps, but specifically for managing and optimizing your data processes. This boosts speed, reduces errors, and improves the overall reliability of your data-driven decisions.

What are the four primary components of DataOps?

DataOps hinges on four key pillars: Automation, driving efficiency through streamlined processes; Collaboration, fostering seamless teamwork across data teams; Continuous Improvement, embracing iterative development and feedback; and Observability, providing real-time insights into data flow and quality for proactive issue management. These elements work together for faster, more reliable data delivery.

What is an example of DataOps?

DataOps isn’t a single tool, but a collaborative approach. Imagine a streamlined assembly line for data: it automates data processes (like cleaning and transformation) and improves communication between data engineers, scientists, and business users, ensuring faster, higher-quality insights. This collaborative, automated workflow is a DataOps example.

What are data pipelines tools?

Data pipeline tools are like automated assembly lines for your data. They ingest raw data from various sources, clean and transform it, and then deliver it to its final destination (like a database or data warehouse). Think of them as the behind-the-scenes workers ensuring your data is ready for analysis and decision-making. They streamline the entire data flow, saving time and preventing errors.

What are the 3 types of pipelines in Jenkins?

Jenkins doesn’t strictly categorize pipelines into just three types. Instead, it offers flexibility. You’ll see discussions of freestyle jobs (simple jobs configured through the web UI), scripted pipelines (using Groovy for detailed control), and declarative pipelines (using a more structured, readable Groovy-based syntax, not YAML). The best choice depends on your project’s complexity.

What are the two types of pipeline in DevOps?

DevOps pipelines aren’t strictly categorized into just *two* types, but we often see them broadly divided by focus: CI/CD pipelines automate code integration, testing, and deployment. Release pipelines, while overlapping with CI/CD, specifically manage the release process to different environments (e.g., staging, production), often including more nuanced approvals and rollbacks. The distinction highlights the separation of *building* software from *releasing* it.

What is the difference between DataOps and MLOps?

DataOps focuses on streamlining the entire data lifecycle, from ingestion to delivery, ensuring reliable and consistent data for all users. MLOps, a subset, specifically optimizes the *machine learning* lifecycle, building upon DataOps principles but adding a layer of model training, deployment, and monitoring. Think of MLOps as DataOps specialized for AI applications. Essentially, MLOps needs DataOps, but DataOps can exist independently.

What is AWS DataOps?

AWS DataOps is all about streamlining your data workflows on AWS, making them faster, more reliable, and easier to manage. It blends DevOps principles with data engineering to automate processes like data integration, transformation, and delivery. Think of it as applying agile software development to your entire data lifecycle for better insights, faster. Ultimately, it’s about getting data to the right people at the right time, reliably.

What is data pipeline in DevOps?

In DevOps, a data pipeline automates the flow of data from its source to its final destination (like a data warehouse or machine learning model). Think of it as an assembly line for data, ensuring consistent processing, transformation, and delivery. It’s crucial for efficient data analysis, reporting, and driving data-informed decisions within a DevOps workflow. This automation reduces manual errors and speeds up the entire data lifecycle.

What is the DataOps lifecycle?

DataOps is the agile approach to data management, mirroring DevOps’ success in software. It’s a continuous cycle of planning, building, testing, deploying, and monitoring data pipelines and processes to ensure data quality and rapid delivery of insights. This iterative process prioritizes collaboration and automation across teams to achieve faster, more reliable data flows. Think of it as DevOps, but for your data.

What is DataOps in Azure?

DataOps in Azure streamlines the entire data lifecycle, from ingestion to consumption. It’s about automating and improving collaboration between data engineers, scientists, and business users using Azure’s cloud services. This leads to faster data delivery, higher quality insights, and reduced operational costs through continuous integration and continuous delivery (CI/CD) principles. Think of it as DevOps, but specifically tailored for data.