As organizations rethink their data strategy in 2025, many are evaluating how best to unify analytics, governance, and AI-ready architectures. One emerging trend is migrating workloads from Databricks to Microsoft Fabric, driven by Fabric’s promise of a unified platform that combines data engineering, warehousing, real-time analytics, and AI in a single environment. Recent updates to Fabric’s integration tools and OneLake data layer have made it easier for enterprises to consolidate fragmented Databricks pipelines and governance frameworks into a single, cohesive system that supports both SQL and Spark workloads.

According to industry research, over 70% of companies now run hybrid or multi-cloud data setups, and demand for simpler, scalable platforms continues to grow. Businesses that move their data stack to unified platforms report query performance improvements of 30-40%, stronger data governance, and lower costs from simplified infrastructure. As a result, more companies want to move their analytics and engineering work from isolated systems, such as standalone Databricks setups, to integrated platforms like Microsoft Fabric.

Keep reading to explore the practical side of migrating from Databricks to Microsoft Fabric. You’ll learn about the benefits, best practices, and how organizations are moving to a more unified, future-ready data platform.

Power Your Business Intelligence With Microsoft Fabric’s Efficiency!

Partner with Kanerika for Expert Microsoft Fabric Implementation Services

Key Takeaways

- Enterprises are increasingly moving from fragmented Databricks setups to Microsoft Fabric to unify analytics, governance, and AI workloads on a single platform.

- Microsoft Fabric’s OneLake, semantic models, and native Power BI integration simplify data access, improve query performance, and reduce infrastructure complexity.

- Predictable, capacity-based pricing in Fabric helps organizations gain better cost control compared to Databricks’ usage-based model.

- Centralized governance through Purview strengthens security, compliance, and data lineage, which is critical for regulated industries.

- Successful migration requires careful assessment of workloads, dependencies, costs, risks, and stakeholder readiness before execution.

- Kanerika accelerates Databricks-to-Fabric migrations with its FLIP automation platform, delivering faster performance, lower costs, and scalable, future-ready analytics.

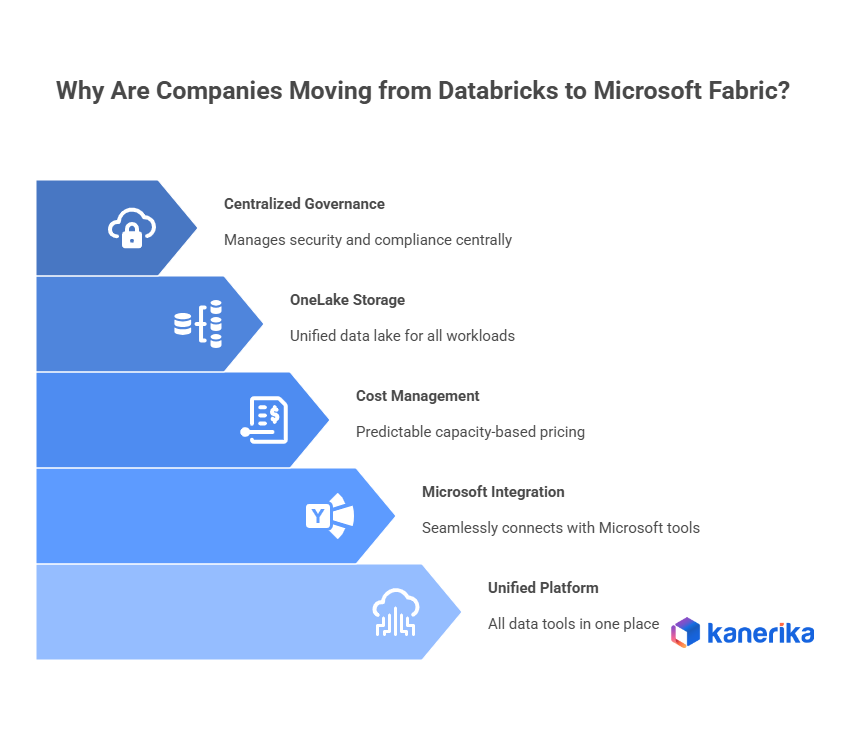

Why Are Companies Moving from Databricks to Microsoft Fabric?

Databricks has long been a solid choice as a data lakehouse platform. It handles analytics well and integrates data lakes and warehouses. But lately, more companies are looking at Microsoft Fabric instead. This isn’t because Databricks stopped working. It’s because Microsoft Fabric offers features that better align with what some businesses actually need.

1. Unified Platform vs Multiple Tools

Databricks does its job, but you usually need to connect it with other tools to cover everything. Separate services handle data integration, orchestration, visualization, and governance. Microsoft Fabric puts all of this in one place. Data engineering, data science, real-time analytics, business intelligence, and governance all work from the same platform. Companies managing multiple vendors and dealing with messy integrations find this simplicity helpful.

2. Native Integration with Microsoft Ecosystem

Many enterprises already use Microsoft products such as Power BI, Azure Synapse, Teams, and Microsoft 365, and Microsoft Fabric integrates natively with them. If your team already builds reports in Power BI or runs cloud services on Azure, Fabric fits in with little setup. Databricks can work with these tools too, but it needs more configuration and the experience is less seamless. Companies heavily invested in Microsoft tools find that Fabric removes a lot of friction.

3. Simplified Cost Management

Databricks pricing can get messy. You pay your cloud provider for compute and storage, plus Databricks Units (DBUs) whose rate varies by workload type. This is flexible but hard to predict, especially as usage grows. Microsoft Fabric uses capacity-based pricing, which is easier to understand. You buy a capacity (an F SKU) sized in capacity units, and every workload draws from that shared pool. Finance teams like this because cloud spending becomes easier to budget.

4. OneLake Storage Architecture

Microsoft Fabric includes OneLake, a single logical data lake shared across the whole platform. All workloads read the same data without copying or moving it, and tables are stored in the open Delta Lake format. Databricks also builds on Delta Lake, which works well, but in many setups data ends up duplicated across workloads or downstream systems. OneLake cuts down on that. You store data once, and every service in Fabric can use it directly. This reduces storage costs and simplifies data management.

5. Centralized Governance and Security

Databricks has strong security features, but governance can get tricky when you’re handling data from multiple sources and managing access across multiple tools. Microsoft Fabric centralizes everything through Purview integration. Data lineage, sensitivity labels, and access controls all get managed from one spot. Industries with strict compliance requirements, such as healthcare or finance, find that this centralized setup reduces risk and makes audits less painful.

Who Should Consider the Move?

Not every company needs to leave Databricks. If your team runs heavily on Spark-based workflows, has already built custom integrations, or depends on the open-source flexibility Databricks gives you, staying there makes sense. But if you already use many Microsoft tools, want everything on one platform, need more predictable costs, or are struggling with fragmented governance, Microsoft Fabric is worth looking into.

This isn’t about one being better than the other in every way. It’s about what works for your situation. Microsoft Fabric fits well when you want simpler integration, governance you can manage from one spot, and a tighter connection to Microsoft’s ecosystem. Databricks still works great when you need heavy customization and want to keep that open-source flexibility. What really matters is knowing what your business needs most and what problems you’re trying to solve.

Databricks vs Microsoft Fabric Key Differences Explained

Databricks and Microsoft Fabric are both data platforms for managing data and analytics, but they are designed for different audiences and offer distinct capabilities. Here are the key differences between these two analytics platforms:

1. Data Flow and Pipeline Management

Microsoft Fabric’s Lakehouse consolidates data storage, transformation, and querying within a single workspace, which simplifies data flow and pipeline management. Databricks is also built around the lakehouse pattern, but ingestion, orchestration, and reporting there often rely on separate services and additional integrations to handle different data types. Because Fabric keeps these steps in one environment, businesses get a more organized data architecture, with less complexity and more efficient pipelines.

2. Pricing Architecture

Fabric bundles compute for all of its workloads into a single capacity, bought as an F SKU and measured in Capacity Units (CUs). You buy capacity, and all workloads draw from that pool, so billing stays simple. OneLake storage is billed separately.

Databricks separates costs. You pay for compute clusters, storage through your cloud provider, and Databricks Units (DBUs) that vary by workload. This gives more control but makes predicting costs harder as usage grows.
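
The difference between the two billing shapes is easiest to see with numbers. Below is a minimal Python sketch. Every rate, workload name, and price in it is a hypothetical placeholder, not a published price, so substitute figures from your own Databricks and Azure agreements.

```python
from dataclasses import dataclass


@dataclass
class DatabricksUsage:
    """Hypothetical monthly Databricks usage; all figures are placeholders."""
    dbu_by_workload: dict[str, tuple[float, float]]  # workload -> (DBUs, $ per DBU)
    vm_cost: float       # cloud-provider VMs, billed outside Databricks
    storage_cost: float  # cloud-provider storage, billed outside Databricks


def databricks_monthly_cost(usage: DatabricksUsage, growth: float = 1.0) -> float:
    """DBU charges and VM costs scale with usage; storage is held flat for simplicity."""
    dbu_total = sum(dbus * rate for dbus, rate in usage.dbu_by_workload.values())
    return growth * (dbu_total + usage.vm_cost) + usage.storage_cost


def fabric_monthly_cost(capacity_price: float, onelake_storage_cost: float) -> float:
    """A fixed capacity price shared by all workloads, plus separately billed OneLake storage."""
    return capacity_price + onelake_storage_cost


usage = DatabricksUsage(
    dbu_by_workload={"jobs": (4000, 0.15), "sql": (1500, 0.22), "all_purpose": (800, 0.40)},
    vm_cost=2500.0,
    storage_cost=300.0,
)
for growth in (1.0, 1.5):
    print(growth, round(databricks_monthly_cost(usage, growth), 2), fabric_monthly_cost(5000.0, 250.0))
```

In this toy example Fabric starts out more expensive. Its figure stays flat as usage grows, however, until the workload outgrows the capacity and you move to a larger SKU. That step change, rather than a continuous slope, is what makes budgeting easier.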

3. Data Querying

Fabric offers significant advantages in data querying through OneLake shortcuts and semantic models. These include Direct Lake mode, in which a Power BI semantic model reads Delta tables in OneLake without importing or duplicating them. In contrast, Databricks typically requires more manual setup to serve complex queries across large datasets to reporting tools. Because Fabric’s semantic models link directly to data tables, organizations get faster access and more streamlined querying, especially for frequently refreshed analytics.

4. Resource Consumption and Costs

Microsoft Fabric provides flexibility in resource management by letting users choose between shortcuts and direct data writes. Shortcuts leave data in the storage that Databricks writes to (for example, an ADLS Gen2 account) while Fabric reads it in place. This avoids duplicate data flows and reduces storage and transfer costs. Alternatively, centralizing operations within Fabric lets businesses consolidate resource usage and avoid running both platforms simultaneously, saving on compute expenses.

5. Integration Flexibility

Fabric excels at cross-platform interoperability, with native integration for Power BI, Azure Synapse, and the broader Microsoft ecosystem. This seamless compatibility provides an advantage for organizations already using Microsoft tools, as it enables effortless data sharing and interaction across platforms. In contrast, Databricks often requires third-party tools or additional configurations to achieve the same level of cross-platform integration. Fabric’s unified environment allows teams to work more collaboratively and efficiently, leveraging Microsoft’s ecosystem for a more cohesive data strategy.

| Aspect | Databricks | Microsoft Fabric |
|---|---|---|
| Platform Architecture | Unified analytics platform built on Apache Spark | All-in-one SaaS analytics platform integrating multiple Microsoft services |
| Data Lake Integration | Delta Lake (open-source optimized storage layer) | OneLake (unified storage that keeps tables in Delta Lake format) |
| Cost Structure | DBU-based pricing with separate compute and storage costs | Capacity-based pricing for compute; OneLake storage billed separately |
| Native Integration | Requires connectors for Microsoft tools | Native integration with the Microsoft ecosystem (Power BI, Azure, Microsoft 365) |
| Development Environment | Notebooks with support for multiple languages (Python, R, SQL, Scala) | Notebooks plus Microsoft-specific tools such as Data Factory and Synapse |
| Machine Learning Capabilities | MLflow integration with extensive ML features | Fabric Data Science (MLflow-based) plus Azure Machine Learning integration and AutoML capabilities |
| Real-time Analytics | Structured Streaming with Delta Live Tables | Real-Time Intelligence (formerly Synapse Real-Time Analytics) |
| Governance & Security | Unity Catalog for data governance | Purview integration for unified data governance |
| Deployment Options | Multi-cloud (AWS, Azure, GCP) | Primarily Azure-focused |
| Collaboration Features | Basic collaboration through workspace sharing | Deep integration with Microsoft Teams and Microsoft 365 |
| Data Warehousing | Databricks SQL warehouses, configured separately | Built-in data warehouse capabilities |
| ETL/ELT Processing | Delta Live Tables and standard Spark ETL | Data Factory pipelines and Dataflow Gen2 |
| Query Performance | Photon engine for SQL acceleration | Distributed SQL engine for the Warehouse and SQL analytics endpoint; Direct Lake mode for Power BI |
| BI Integration | Built-in dashboards; Power BI and other BI tools via connectors | Native Power BI integration |
| Open-source Support | Strong open-source foundation (Apache Spark) | Mix of proprietary and open-source technologies |

Databricks to Microsoft Fabric: Pre-Migration Assessment & Planning

1. Current State Analysis

- Data Inventory and Classification: Start by cataloging all the data in your Databricks setup. Note which types you have, how much space each takes, and which datasets are sensitive. List databases, tables, and files, along with how they link together, and mark what’s critical and what’s not. This helps you decide which datasets to move first and how to lock them down in Fabric.

- Workload Assessment: Review the jobs, notebooks, and pipelines currently running. Check when they run, how much compute they use, and which ones depend on others. Knowing these patterns makes it easier to plan resources in Fabric.

- Dependencies Mapping: Write down everything connected to your Databricks environment: data sources, APIs, scheduling tools, and apps that pull from your data. This map shows where problems might pop up, which connections need rebuilding, and what won’t work in Fabric without changes.

- Resource Utilization Metrics: Pull numbers on compute, storage, and memory usage from Databricks. Check cluster activity, job runtimes, and storage trends. These metrics help you size Fabric correctly without overspending or hitting performance walls.
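
Once dependencies are mapped, they can be turned into migration waves so that no job moves before the jobs it relies on. Below is a minimal sketch using Python’s standard-library `graphlib`. It assumes a hypothetical dependency map that you would export from your own job inventory.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each job lists the jobs it depends on.
dependencies = {
    "ingest_orders": set(),
    "ingest_customers": set(),
    "clean_orders": {"ingest_orders"},
    "customer_dim": {"ingest_customers"},
    "sales_fact": {"clean_orders", "customer_dim"},
    "exec_dashboard_refresh": {"sales_fact"},
}


def migration_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group jobs into waves; every job's dependencies land in an earlier wave."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # jobs whose dependencies are all done
        waves.append(ready)
        sorter.done(*ready)
    return waves


for number, wave in enumerate(migration_waves(dependencies), start=1):
    print(number, wave)
```

Each wave can be moved and validated as a unit. A circular dependency surfaces as a `graphlib.CycleError` before any work starts.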

2. Business Impact Evaluation

- Cost-Benefit Analysis: Compare your current Databricks bill with the projected cost of Fabric, counting licensing, storage, compute, and maintenance for both. Weigh upfront migration costs against long-term savings. This tells you whether the switch makes financial sense.

- ROI Projections: Calculate what you’ll gain back. Faster queries, less maintenance time, easier integrations, better efficiency. Use these to convince decision-makers and set realistic goals for what migration can bring.

- Risk Assessment: List what could go wrong, such as data loss, system downtime, slower performance, and compliance violations. Rate how likely each risk is and how severe it could be. Then build plans to prevent them and keep operations stable during the move.
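
The cost-benefit and risk steps above reduce to simple arithmetic. This sketch assumes a payback model (one-time migration cost divided by monthly savings) and a likelihood × impact risk score on 1-5 scales. Both are common conventions rather than a prescribed method, and all figures are hypothetical.

```python
def payback_months(upfront_cost: float, current_monthly: float, projected_monthly: float) -> float:
    """Months until cumulative monthly savings cover the one-time migration cost."""
    savings = current_monthly - projected_monthly
    if savings <= 0:
        return float("inf")  # the move never pays for itself on cost alone
    return upfront_cost / savings


# Hypothetical risk register: (risk, likelihood 1-5, impact 1-5)
risks = [
    ("Data loss during cutover", 2, 5),
    ("Pipeline downtime", 3, 4),
    ("Query slowdowns after migration", 3, 3),
    ("Compliance gap in access controls", 2, 4),
]


def rank_risks(register: list[tuple[str, int, int]]) -> list[tuple[str, int]]:
    """Score each risk as likelihood x impact and sort highest first."""
    scored = [(name, likelihood * impact) for name, likelihood, impact in register]
    return sorted(scored, key=lambda item: item[1], reverse=True)


print(payback_months(60000, 12000, 9000))  # 20.0
for name, score in rank_risks(risks):
    print(score, name)
```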

3. Stakeholder Alignment

- Migration Team Structure: Define the core migration team, including technical leads, business analysts, data engineers, and subject matter experts. Clarify roles, responsibilities, and decision-making authority so the migration plan runs smoothly.

- Communication Strategy: Develop a clear communication plan to keep all stakeholders informed about migration progress, challenges, and milestones. Establish regular update channels, feedback mechanisms, and escalation procedures for resolving issues.

- Training Needs Analysis: Assess current team skills against the capabilities Microsoft Fabric operations require. This identifies training gaps so you can build learning paths that prepare the team for the new platform.

4. Project Management Framework

- Timeline Development: Create a realistic migration timeline with clear phases, milestones, and dependencies. Account for business cycles, resource availability, and critical business events to minimize disruption during the transition.

- Success Metrics Definition: Establish clear, measurable criteria for migration success, including technical performance benchmarks, user adoption rates, and business impact metrics. These provide objective measures to track progress and validate migration outcomes.

- Budget Planning: Budget in detail for every aspect of the migration, including software licenses, infrastructure costs, consulting services, and training expenses. This ensures adequate resource allocation and helps prevent cost overruns during the migration.

- Change Management: Plan for the organizational impact of the platform transition, including user adoption plans, resistance management, and processes that ensure a smooth operational handover to the new platform.
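
Success metrics are most useful when they are written down as explicit thresholds before cutover. Below is a minimal sketch that checks post-migration measurements against such thresholds. The metric names, targets, and measurements are all hypothetical.

```python
# Hypothetical success criteria agreed before migration: metric -> (direction, threshold)
targets = {
    "p95_query_seconds": ("max", 4.0),
    "pipeline_success_rate": ("min", 0.99),
    "weekly_active_report_users": ("min", 150),
}

# Hypothetical measurements taken after cutover
measured = {
    "p95_query_seconds": 3.2,
    "pipeline_success_rate": 0.985,
    "weekly_active_report_users": 172,
}


def evaluate(targets: dict, measured: dict) -> dict[str, bool]:
    """Pass/fail per metric; a missing measurement counts as a failure."""
    results = {}
    for metric, (direction, threshold) in targets.items():
        value = measured.get(metric)
        if value is None:
            results[metric] = False
        elif direction == "max":
            results[metric] = value <= threshold  # lower is better
        else:
            results[metric] = value >= threshold  # higher is better
    return results


print(evaluate(targets, measured))
```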

Advantages of Migrating to Microsoft Fabric – The Kanerika Solution

As a trusted Microsoft data and AI solutions partner, Kanerika brings expertise to every step of your Fabric migration, ensuring seamless integration and optimized data operations. With Kanerika’s approach, expect enhanced data performance, streamlined costs, and scalable, future-ready analytics that drive real business outcomes.

1. Improved Data Integration and Management

Microsoft Fabric stands out by providing an integrated Lakehouse architecture, which simplifies data storage, access, and integration. Unlike Databricks, which requires setting up multiple data flows and managing complex integrations, Fabric’s Lakehouse enables a more unified approach.

Using Dataflow Gen2, businesses can build data pipelines in Fabric that unify data from diverse sources into a single, manageable Lakehouse environment. This not only improves data integrity but also makes data more accessible to teams that need centralized reporting and analytics, streamlining data management across departments.

2. Enhanced Performance and Scalability

One of the standout features of Microsoft Fabric is its use of semantic models and direct table shortcuts for optimized data querying and reporting. This architecture can significantly reduce query times, especially for complex datasets, because Fabric runs queries directly against its semantic models.

For instance, Kanerika’s Fabric implementation demonstrated the benefit of transitioning existing reports (like the ContainerUtilization_POC) into the semantic model, which enhanced query response and reporting speed. Additionally, Fabric’s scalability ensures that as data needs grow, enterprises can adapt quickly without costly system overhauls.

3. Cost-Efficiency

Fabric’s architecture offers various options to minimize operational costs. By using data shortcuts and direct publishing capabilities, Fabric users can keep storage efficient while reducing the need for additional data flows. For instance, shortcuts let data stay in the storage Databricks writes to while Fabric reads it in place, without incurring high transfer or storage fees.

Moreover, Fabric’s ability to consolidate processes into a single ecosystem cuts down on duplicated resource usage, allowing enterprises to reduce reliance on multiple platforms and lower associated operational expenses.

4. Native Integration with Microsoft Ecosystem

For organizations already invested in Microsoft’s suite of tools, Fabric’s native integration with Power BI, Microsoft 365, and Azure is invaluable. This direct integration enables smoother data flow and sharing across the ecosystem, allowing data from Fabric’s Lakehouse to be readily visualized in Power BI or processed within Azure Synapse for further analytics.

This compatibility reduces the need for third-party integrations and improves usability, as team members can use familiar tools to manage, visualize, and analyze data with little additional training or system adjustment.

5. Optimized Resource Usage

Fabric’s unified environment offers businesses the ability to consolidate data processes, reducing resource consumption and operational overhead. By shifting data operations and execution directly onto Fabric, teams can avoid the need to operate both Fabric and Databricks simultaneously. This approach not only conserves computational resources but also reduces costs associated with running parallel engines.

Additionally, Kanerika’s recommendation to transition code execution to Fabric over time further emphasizes its long-term resource efficiency, as it minimizes the heavy reliance on both platforms for ongoing report queries and data management.

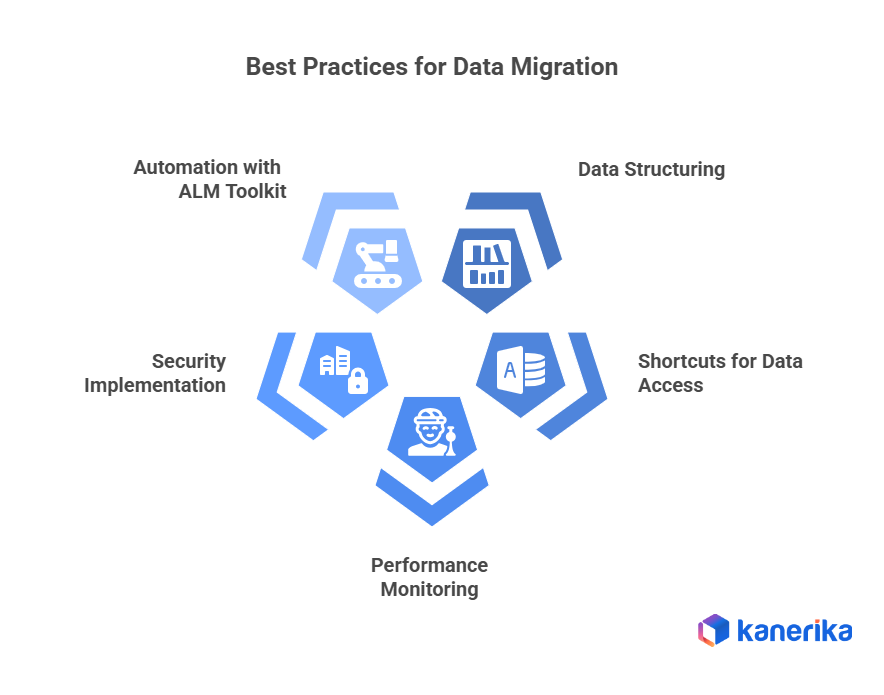

Proven Best Practices for Databricks to Microsoft Fabric Migration

1. Data Structuring and Semantic Model Setup

For effective migration, it’s essential to segment Lakehouses by specific functions (e.g., dimensions or metrics) and create custom semantic models to enable flexibility. This approach allows teams to:

- Keep data organized and easy to manage across different business needs.

- Enable advanced features like calculation groups and field parameters, improving usability.

- Prevent performance slowdowns by minimizing the number of complex joins.
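
One way to apply this segmentation consistently is to route tables by naming convention. The sketch below assumes a hypothetical `dim_`/`fact_` prefix scheme and hypothetical Lakehouse names, so adapt both to your own standards.

```python
from collections import defaultdict

# Hypothetical convention: the table-name prefix decides the target Lakehouse
PREFIX_TO_LAKEHOUSE = {
    "dim_": "lh_dimensions",
    "fact_": "lh_metrics",
}


def plan_lakehouses(tables: list[str], default: str = "lh_staging") -> dict[str, list[str]]:
    """Group source tables by the Lakehouse they should land in."""
    plan = defaultdict(list)
    for table in tables:
        target = next(
            (lakehouse for prefix, lakehouse in PREFIX_TO_LAKEHOUSE.items() if table.startswith(prefix)),
            default,  # anything without a known prefix goes to staging
        )
        plan[target].append(table)
    return dict(plan)


print(plan_lakehouses(["dim_customer", "fact_sales", "dim_date", "raw_events"]))
```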

2. Utilize Shortcuts for Data Access

Shortcuts in Fabric provide direct data access without duplicating data flows, which reduces workload and improves efficiency. Key benefits of using shortcuts include:

- Simplifying the data architecture by minimizing redundant data flows.

- Allowing Fabric to read data directly from the storage Databricks writes to, reducing storage and transfer costs.

- Speeding up data access and ensuring that reports reflect the latest data without a separate copy step.
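
Shortcuts can be created in the Fabric portal or programmatically. The sketch below builds, but does not send, a request to the Fabric REST API’s OneLake create-shortcut endpoint for an ADLS Gen2 target, such as the storage account a Databricks workspace writes Delta tables to. The endpoint path and body fields reflect our reading of the public API and should be checked against current Microsoft documentation. Every ID, name, and token here is a hypothetical placeholder.

```python
import json
import urllib.request

FABRIC_API = "https://api.fabric.microsoft.com/v1"


def build_shortcut_request(
    workspace_id: str,
    lakehouse_id: str,
    shortcut_name: str,
    account_url: str,
    subpath: str,
    connection_id: str,
    token: str,
) -> urllib.request.Request:
    """Prepare (but do not send) a POST that creates an ADLS Gen2 shortcut under Tables/."""
    body = {
        "path": "Tables",
        "name": shortcut_name,
        "target": {
            "adlsGen2": {
                "location": account_url,  # e.g. https://<account>.dfs.core.windows.net
                "subpath": subpath,       # /<container>/<folder> holding the Delta table
                "connectionId": connection_id,
            }
        },
    }
    return urllib.request.Request(
        url=f"{FABRIC_API}/workspaces/{workspace_id}/items/{lakehouse_id}/shortcuts",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


# All identifiers below are hypothetical placeholders.
request = build_shortcut_request(
    workspace_id="my-workspace-id",
    lakehouse_id="my-lakehouse-id",
    shortcut_name="orders",
    account_url="https://mystorageaccount.dfs.core.windows.net",
    subpath="/bronze/orders",
    connection_id="my-connection-id",
    token="<access-token>",
)
print(request.get_method(), request.full_url)
# Sending it would be: urllib.request.urlopen(request)
```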

3. Performance Monitoring and Resource Testing

Regular testing of resource consumption across Databricks and Fabric ensures an optimized and cost-effective migration. To effectively monitor performance:

- Analyze resource usage during report interactions to fine-tune configurations.

- Identify bottlenecks in data flows and apply adjustments for smoother operations.

- Ensure efficient utilization by consolidating processes where possible.
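
Bottleneck identification can start with something as simple as each step’s share of total runtime. Below is a minimal sketch with hypothetical step names and timings. The 40% threshold is an arbitrary assumption.

```python
def find_bottlenecks(step_seconds: dict[str, float], share_threshold: float = 0.4) -> list[tuple[str, float]]:
    """Return steps whose share of total runtime meets the threshold, slowest first."""
    total = sum(step_seconds.values())
    if total == 0:
        return []  # nothing ran, so nothing to flag
    flagged = [
        (step, round(seconds / total, 2))
        for step, seconds in step_seconds.items()
        if seconds / total >= share_threshold
    ]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical step timings (seconds) from one pipeline run
run = {"copy_raw": 120.0, "merge_to_silver": 540.0, "build_gold": 210.0, "refresh_model": 130.0}
print(find_bottlenecks(run))
```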

4. Security Implementation

Securing data is paramount, and Row-Level Security (RLS) within Power BI allows for controlled, user-specific access, maintaining data confidentiality and integrity. Best practices for RLS include:

- Defining user roles whose filters restrict which rows each user can see.

- Applying RLS configurations in Power BI for seamless integration with Fabric.

- Maintaining compliance with data privacy regulations by enforcing access controls.
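
Power BI enforces RLS through DAX filters on roles, often keyed to the signed-in user (dynamic RLS). The Python below does not configure Power BI. It is a conceptual model of a dynamic RLS rule with a hypothetical user-to-region mapping. It can help you write down and test which rows each user should see before you build the roles.

```python
# Hypothetical user-to-region mapping, mirroring a security table in the model
USER_REGIONS = {
    "ana@contoso.com": {"EMEA"},
    "raj@contoso.com": {"APAC", "EMEA"},
}

sales = [
    {"region": "EMEA", "amount": 120},
    {"region": "APAC", "amount": 80},
    {"region": "AMER", "amount": 200},
]


def visible_rows(user: str, rows: list[dict]) -> list[dict]:
    """Rows a user may see; unknown users see nothing (deny by default)."""
    allowed = USER_REGIONS.get(user, set())
    return [row for row in rows if row["region"] in allowed]


print(sum(row["amount"] for row in visible_rows("raj@contoso.com", sales)))  # 200
```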

5. Automate Data Processes with ALM Toolkit

Using the ALM Toolkit simplifies the migration of complex relationships and measures between models, helping avoid manual errors and improving consistency. Key uses of the ALM Toolkit are:

- Migrating relationships, calculations, and hierarchies efficiently between Fabric models.

- Ensuring continuity in data models without losing established relationships.

- Saving time and reducing complexity, especially for large, complex models.
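
Conceptually, ALM Toolkit compares a source model with a target model and lists what to create, update, or remove. The Python below is not ALM Toolkit’s API. It is a hypothetical illustration of that comparison applied to measure definitions, which can be useful for sanity-checking a migration plan.

```python
def diff_measures(source: dict[str, str], target: dict[str, str]) -> dict[str, list[str]]:
    """Classify measures as missing from target, changed, or only present in target."""
    return {
        "create": sorted(source.keys() - target.keys()),
        "update": sorted(name for name in source.keys() & target.keys() if source[name] != target[name]),
        "remove": sorted(target.keys() - source.keys()),
    }


# Hypothetical measure definitions (DAX expressions stored as plain strings)
source_model = {
    "Total Sales": "SUM(Sales[Amount])",
    "Order Count": "COUNTROWS(Sales)",
    "Avg Order": "DIVIDE([Total Sales], [Order Count])",
}
target_model = {
    "Total Sales": "SUM(Sales[Amount])",
    "Avg Order": "[Total Sales] / [Order Count]",
    "Legacy KPI": "SUM(Sales[Margin])",
}
print(diff_measures(source_model, target_model))
```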

Case Study 1: Boosting Data Pipeline Speed and Reducing Cloud Costs with Microsoft Fabric

Client’s challenge:

A global packaging company relied on Azure Data Factory and Synapse pipelines that had become slow, costly, and inconsistent. Parquet conversion steps often failed. Pipeline refreshes took too long. Governance differed across teams, causing repeated work and difficulty maintaining standards. All of this slowed analytics and increased cloud spending.

Kanerika’s Solution:

Kanerika migrated the client’s data engineering setup into Microsoft Fabric using its Fabric migration accelerator. The team rebuilt pipelines for stability, removed unnecessary processing layers, consolidated duplicate workflows, and set up a unified governance model. Fabric dashboards and monitoring made issues easier to detect and fix.

Impact Delivered:

- Around 30% reduction in cloud and data costs

- Roughly 50% faster pipeline execution

- Insights available almost 80% sooner

- Consistent governance across teams

- A simpler and more scalable Fabric-based architecture

Case Study 2: Transforming Data Processes for a Leading Logistics Company with Microsoft Fabric

Client’s challenge:

A global logistics and transportation firm managed supplier, freight, and warehousing data across many disconnected systems. Their Azure data lake was slow, expensive to maintain, and offered limited visibility into supplier performance, delivery delays, and route-level costs. These issues made day-to-day planning difficult and increased operational inefficiencies.

Kanerika’s solution:

Kanerika moved the client’s supplier and logistics data environment into Microsoft Fabric. The team redesigned data models, optimized table storage, automated ingestion pipelines, and applied consistent governance. Fabric replaced scattered workflows with a single platform for transformation, monitoring, and analytics.

Impact delivered:

- About 50% improvement in data infrastructure efficiency

- Around 31% reduction in processing and storage costs

- Faster access to supplier and logistics insights

- A single, scalable platform prepared for forecasting and real-time analytics

Kanerika’s Microsoft Fabric Expertise: Efficient Migration and Implementation Services

Kanerika is a recognized Microsoft Data and AI solutions partner. We are among the first firms to work deeply with Microsoft Fabric. Our experience helps companies shift their data systems to Fabric with smooth execution, strong ROI, and minimal disruption. We support teams as they modernize their full data and analytics setup across platforms, pipelines, reporting tools, cloud environments, and automation systems.

Kanerika makes Microsoft Fabric migration easier by automating most of the work through the FLIP accelerator. Many teams want a single platform for analytics and governance, but moving from tools like Azure Data Factory, Synapse, SSIS, or SSAS can be slow and complex. FLIP cuts this effort, keeps existing logic intact, and helps companies shift to Fabric without disruption.

- ADF and Synapse: FLIP scans current setups, converts activities into Fabric Data Factory workflows, and sets up Fabric workspaces. It keeps pipeline logic stable and improves execution inside Fabric.

- SSIS: Teams upload their SSIS packages, and FLIP extracts all logic and turns it into Power Query dataflows fit for Fabric. It preserves transformations, dependencies, and structure.

- SSAS: FLIP converts SSAS tabular models into Fabric semantic models. It preserves measures, hierarchies, roles, and relationships so analytics behave the same after migration.

Kanerika uses FLIP to reduce manual effort, speed up timelines, and ensure accurate validation. It keeps business rules, dependencies, and data lineage intact while delivering a clean transition to Fabric with immediate compatibility across OneLake, Power BI, and Fabric Data Factory.

Frequently Asked Questions

How to integrate Databricks with Microsoft Fabric?

Connecting Databricks to Microsoft Fabric streamlines your data workflow by letting Databricks serve as a compute engine alongside Fabric’s analytical capabilities. You use Databricks’ processing power for data transformation and analysis, then visualize and share the results within the Fabric workspace. Common approaches include creating OneLake shortcuts to the ADLS Gen2 storage that holds your Databricks Delta tables, or mirroring an Azure Databricks Unity Catalog into Fabric. Another option is to have Databricks read and write OneLake directly through its ABFS endpoint. The specific steps depend on your Fabric and Databricks setup but generally involve minimal coding.
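
For the direct-access route, Databricks addresses Fabric data through OneLake’s ABFS-compatible URIs. Below is a small helper that builds such a path, assuming hypothetical workspace, Lakehouse, and table names. Workspace and item GUIDs can also be used in place of names.

```python
def onelake_table_path(workspace: str, lakehouse: str, table: str) -> str:
    """ABFS URI for a Lakehouse Delta table, following OneLake's URI pattern."""
    return (
        f"abfss://{workspace}@onelake.dfs.fabric.microsoft.com/"
        f"{lakehouse}.Lakehouse/Tables/{table}"
    )


path = onelake_table_path("SalesWorkspace", "SalesLakehouse", "orders")
print(path)
# In a Databricks notebook with OneLake credentials configured, roughly:
# spark.read.format("delta").load(path)
```

Reading the table from Databricks also requires authenticating to OneLake with Microsoft Entra ID credentials, which is omitted here.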

What is the difference between Microsoft Fabric and Databricks?

Microsoft Fabric is a unified analytics platform built entirely within the Azure ecosystem, offering a streamlined, integrated experience for data engineering, analytics, and visualization. Databricks, while also powerful, is a more independent platform offering similar capabilities but with greater flexibility and broader cloud support (beyond Azure). The key difference lies in integration: Fabric prioritizes deep Azure synergy, while Databricks prioritizes flexibility and openness. Ultimately, the “best” choice depends on your existing cloud infrastructure and preference for tightly-coupled vs. loosely-coupled architectures.

How to connect Databricks to HDFS?

Connecting Databricks to HDFS involves configuring your Databricks cluster to access your HDFS storage. This usually means specifying the HDFS namenode address and potentially configuring authentication mechanisms like Kerberos. You’ll then use standard Spark commands within Databricks notebooks to read and write data directly to your HDFS paths. Successful connection requires proper network connectivity and configured access credentials.
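
Below is a minimal sketch of the two pieces this answer describes: a cluster Spark setting that makes HDFS the default filesystem, and a fully qualified path for notebook reads. The host name is hypothetical, and 8020 is a common but not universal namenode port. Kerberos settings are omitted.

```python
def hdfs_spark_conf(namenode_host: str, port: int = 8020) -> dict[str, str]:
    """Cluster Spark config pointing Hadoop's default filesystem at the namenode."""
    # spark.hadoop.* keys are passed through to the Hadoop configuration
    return {"spark.hadoop.fs.defaultFS": f"hdfs://{namenode_host}:{port}"}


def hdfs_uri(namenode_host: str, path: str, port: int = 8020) -> str:
    """Fully qualified HDFS URI for use with spark.read in a notebook."""
    return f"hdfs://{namenode_host}:{port}/{path.lstrip('/')}"


print(hdfs_spark_conf("namenode.internal"))
print(hdfs_uri("namenode.internal", "/data/sales/2024"))
# In a notebook: spark.read.parquet(hdfs_uri("namenode.internal", "/data/sales/2024"))
```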

Why migrate to Microsoft Fabric?

Microsoft Fabric consolidates your data warehousing, analytics, and data engineering needs into a single, unified platform. This simplifies your data stack, reducing complexity and cost associated with managing disparate tools. It offers enhanced collaboration and a modern, intuitive user experience, streamlining your entire data lifecycle. Ultimately, it empowers faster, more insightful data-driven decision-making.

How do I connect Google sheets to Databricks?

Connecting Google Sheets to Databricks leverages Databricks’ ability to read data from various sources. You don’t directly connect; instead, you use Databricks’ built-in connectors (like the JDBC/ODBC driver for Google Sheets, if available, or via a connector library) to import your Google Sheet data into a Databricks table or DataFrame. This imported data can then be processed and analyzed within Databricks. Consider alternatives like exporting the sheet to CSV for simpler import if direct connection proves difficult.

How do I import a library into Databricks?

Importing libraries in Databricks is straightforward. You use the familiar `import` statement in your notebook cells, just as in a standard Python environment. Databricks Runtime ships with many common libraries preinstalled; if you need another, you can install it with `%pip install <package-name>`. This installs a notebook-scoped library, available to the current notebook session on your cluster.

How do I connect Databricks to Airflow?

Connecting Airflow to Databricks lets you orchestrate Databricks jobs within your Airflow workflows. This is typically done with the `DatabricksSubmitRunOperator` or `DatabricksRunNowOperator` from the `apache-airflow-providers-databricks` package. These operators authenticate through an Airflow connection (for example, one holding a Databricks access token) and submit or trigger jobs in your workspace. In effect, Airflow acts as a conductor, triggering and monitoring Databricks tasks as part of a larger data pipeline. This integration offers powerful workflow management capabilities.