Did you know that, by a widely cited estimate, the world generates more than 2.5 quintillion bytes of data every day? With data growing this fast in volume and complexity, traditional databases often struggle to handle the speed, scale, and diversity of information businesses rely on. That’s where Data Lake Implementation comes in: it provides a unified, scalable foundation for storing structured, semi-structured, and unstructured data in its raw form.

A data lake acts as the backbone of modern analytics and AI, enabling real-time insights, self-service BI, and predictive modeling. In this blog, we’ll explore what a data lake is, why implementing one is critical for enterprise success, and how to design it effectively. You’ll also learn best practices, common pitfalls to avoid, and real-world examples from leading organizations that have turned their data lakes into engines of innovation and competitive advantage.

Key Takeaways

- A well-implemented data lake enables faster insights, scalability, and efficiency across analytics and AI workloads.

- Focus on governance, metadata management, and architecture design from the very beginning to ensure long-term success.

- Use modern platforms like the Databricks Lakehouse to unify batch, streaming, and machine learning workloads efficiently.

- Prioritize continuous improvement through automation, monitoring, and optimization of pipelines.

- Maintain close alignment between business and IT teams to drive adoption, data trust, and sustained value.

- Treat the data lake as a strategic asset, not just a storage system — it powers enterprise-wide innovation and growth.

What Is a Data Lake?

A Data Lake is a centralized storage system that allows organizations to store all their data, whether structured, semi-structured, or unstructured, at any scale. It serves as a single repository where raw data from different sources, such as databases, APIs, IoT devices, and applications, is collected and stored in its native format until it is needed for analysis.

Unlike a Data Warehouse, which uses a schema-on-write approach (data must be structured before storage), a Data Lake uses a schema-on-read model. Data can be stored in any format and is given structure only when it is read, offering flexibility for diverse analytics and AI use cases.
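To make schema-on-read concrete, here is a minimal sketch using only the Python standard library. The order records, field names, and the `read_with_schema` helper are hypothetical; in a real lake, an engine such as Spark or Athena applies the schema at query time. The raw lines are stored untouched, and the reader decides which fields to project and how to cast them.

```python
import json

# Raw zone: records kept exactly as they arrived (hypothetical sample data).
# Note the missing "country" in one record and the extra "coupon" in another.
RAW_LINES = [
    '{"order_id": "1001", "amount": "19.99", "country": "US"}',
    '{"order_id": "1002", "amount": "5.00"}',
    '{"order_id": "1003", "amount": "42.50", "country": "DE", "coupon": "SPRING"}',
]

# The schema is chosen by the reader at query time, not enforced by the writer.
ORDER_SCHEMA = {"order_id": int, "amount": float, "country": str}


def read_with_schema(lines, schema):
    """Parse raw JSON lines and project/cast them onto `schema` while reading."""
    rows = []
    for line in lines:
        record = json.loads(line)
        row = {}
        for field, cast in schema.items():
            value = record.get(field)
            # Missing fields become None instead of failing the whole read.
            row[field] = cast(value) if value is not None else None
        rows.append(row)  # fields not in the schema (e.g. "coupon") are ignored
    return rows


orders = read_with_schema(RAW_LINES, ORDER_SCHEMA)
print(orders[1])  # {'order_id': 1002, 'amount': 5.0, 'country': None}
```

Another team could read the same raw lines with a different schema (for example, one that includes `coupon`) without rewriting anything already stored.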

Data Lakes are vital for modern analytics, AI, and machine learning pipelines. They also enable organizations to combine historical and real-time data, supporting advanced use cases such as predictive analytics, fraud detection, and personalized recommendations.

For example, insurance firms use Data Lakes to process IoT and telematics data for risk analysis, while retail companies leverage them for Customer 360 views, combining sales, behavior, and feedback data to improve engagement. Similarly, manufacturers integrate IoT sensor data for predictive maintenance and operational efficiency.

Why Implement a Data Lake?

Enterprises today manage massive amounts of data coming from sensors, applications, customer interactions, and third-party systems. Traditional databases often fail to scale to these volumes or to handle this diversity effectively. Implementing a Data Lake offers a flexible, cost-efficient, and future-ready solution for data storage and analytics.

Business Drivers:

- Rising data volume and variety: Organizations are generating structured, semi-structured, and unstructured data at unprecedented rates, requiring scalable storage.

- Real-time analytics needs: Businesses want immediate insights for decision-making rather than waiting for batch processing cycles.

- Data democratization and self-service BI: Teams across departments need easy access to trusted data for analytics, reporting, and AI use cases.

Technical Benefits:

- Scalability across cloud platforms: Cloud-based solutions like AWS S3, Azure Data Lake Storage, and Google Cloud Storage allow near-infinite scalability and flexibility.

- Cost efficiency via decoupled storage and compute: Separating storage from compute resources lets enterprises optimize performance and reduce costs.

- Foundation for modern architectures: A Data Lake acts as the backbone for data lakehouse frameworks, combining the flexibility of a data lake with the performance of a data warehouse.

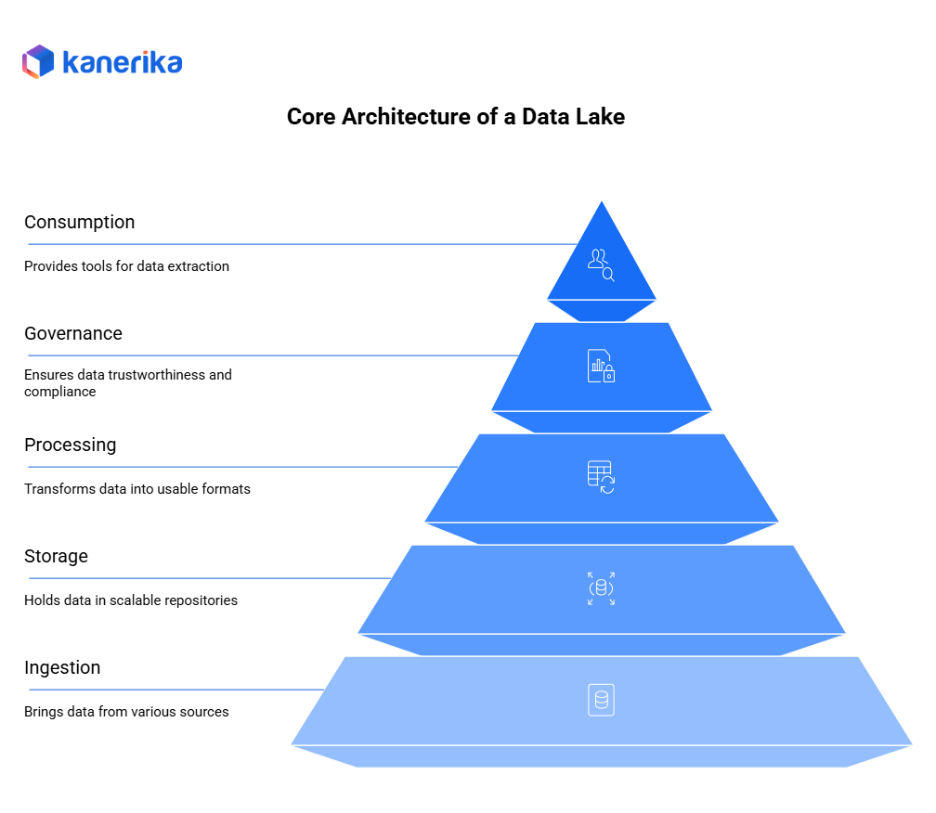

Core Architecture of a Data Lake

A data lake organizes data through multiple layers working together to transform raw information into valuable business insights. Understanding these layers helps organizations design effective data platforms.

Layer 1: Ingestion Layer

The ingestion layer brings data from various sources into the data lake. It handles both batch data arriving on a schedule and streaming data flowing continuously in real time. Common tools include Apache NiFi for flexible data routing, AWS Glue for serverless ETL, and Azure Data Factory for cloud-based orchestration.

The ingestion layer connects to databases, applications, IoT devices, social media feeds, and file systems. Additionally, data arrives in its original format without transformation, preserving complete information for later analysis.
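A minimal sketch of "landing" data without transformation is shown below, assuming a local directory stands in for S3, ADLS, or GCS. The `raw/source=<name>/ingest_date=<date>` layout and the `land_raw` helper are hypothetical conventions (Hive-style `key=value` folders); the point is that bytes are written exactly as received, with a checksum so later layers can confirm the raw copy was never altered.

```python
import hashlib
import tempfile
from datetime import date
from pathlib import Path


def land_raw(lake_root, source, filename, payload, ingest_date):
    """Write payload bytes unchanged into a date-partitioned raw zone (hypothetical layout)."""
    target_dir = (
        Path(lake_root)
        / "raw"
        / f"source={source}"
        / f"ingest_date={ingest_date.isoformat()}"
    )
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / filename
    target.write_bytes(payload)  # no parsing or transformation on the way in
    # SHA-256 of the original bytes, useful for later integrity checks.
    return target, hashlib.sha256(payload).hexdigest()


with tempfile.TemporaryDirectory() as root:
    path, digest = land_raw(root, "crm", "contacts.csv", b"id,name\n1,Ada\n", date(2024, 5, 1))
    print(path.relative_to(root).as_posix())  # raw/source=crm/ingest_date=2024-05-01/contacts.csv
```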

Layer 2: Storage Layer

Once data arrives, the storage layer holds it in scalable, cost-effective repositories. Raw data is stored in cloud object storage such as Amazon S3, Azure Data Lake Storage, or Google Cloud Storage, while some on-premises deployments use Hadoop HDFS. This layer accommodates all data types, including structured records from databases, semi-structured JSON files, and unstructured documents or images.

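Object stores such as Amazon S3 use a flat namespace, where “folders” are simply key prefixes, which keeps storage easy to scale; Azure Data Lake Storage Gen2 additionally offers an optional hierarchical namespace for directory-level operations. This layer also separates storage from compute, allowing organizations to scale each independently based on need.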

Layer 3: Processing Layer

The processing layer transforms raw data into usable formats through cleaning, validation, and enrichment. Apache Spark handles both batch and streaming processing at scale. Databricks provides unified analytics capabilities combining data engineering and data science. Snowflake offers cloud-based processing with automatic scaling.

This layer typically organizes data into zones: bronze for raw data, silver for cleaned and validated data, and gold for business-ready analytics datasets. Additionally, the processing layer applies business rules, removes duplicates, standardizes formats, and creates aggregations.
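The bronze, silver, and gold progression can be sketched in plain Python, with lists of dicts standing in for Spark or Delta tables. The order data and the `to_silver`/`to_gold` helpers are hypothetical; the sketch only shows the kinds of steps described above: validation, de-duplication, standardization, and aggregation.

```python
from collections import defaultdict

# Bronze: rows as ingested, including a duplicate, a missing value, and
# inconsistent region formatting (hypothetical sample data).
bronze = [
    {"order_id": 1, "region": " us-east ", "amount": "100.0"},
    {"order_id": 1, "region": " us-east ", "amount": "100.0"},  # duplicate
    {"order_id": 2, "region": "US-EAST", "amount": "50.5"},
    {"order_id": 3, "region": "eu-west", "amount": None},       # fails validation
    {"order_id": 4, "region": "EU-West", "amount": "25"},
]


def to_silver(rows):
    """Clean, validate, standardize, and de-duplicate bronze rows."""
    seen, silver = set(), []
    for row in rows:
        if row["amount"] is None or row["order_id"] in seen:
            continue  # drop invalid rows and duplicates
        seen.add(row["order_id"])
        silver.append({
            "order_id": row["order_id"],
            "region": row["region"].strip().lower(),  # standardize format
            "amount": float(row["amount"]),           # enforce numeric type
        })
    return silver


def to_gold(rows):
    """Aggregate silver rows into a business-ready revenue-by-region table."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(sorted(totals.items()))


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)  # {'eu-west': 25.0, 'us-east': 150.5}
```

Dropping invalid rows keeps the example short; production pipelines often route them to a quarantine table instead so they can be inspected and reprocessed.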

Layer 4: Governance Layer

Governance ensures data remains trustworthy, secure, and compliant throughout its lifecycle. Data catalogs like Unity Catalog, AWS Glue Data Catalog, or Microsoft Purview (formerly Azure Purview) document what data exists and what it means. Access policies control who can view or modify specific datasets.

Lineage tracking shows where data originates and how it transforms through various processes. Moreover, the governance layer enforces data quality rules, manages metadata, and maintains audit trails for compliance. This layer becomes increasingly important as data lakes grow in size and complexity.
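Catalogs typically capture lineage automatically, but the underlying idea is a dependency graph. The sketch below uses a hand-written, hypothetical lineage map and a hypothetical `upstream` helper to answer the question lineage is meant to answer: "which sources feed this report?"

```python
# Hypothetical lineage edges: each dataset maps to the datasets it is built from.
LINEAGE = {
    "gold.revenue_by_region": ["silver.orders"],
    "silver.orders": ["bronze.orders_raw", "bronze.region_lookup"],
    "bronze.orders_raw": ["source.erp_db"],
    "bronze.region_lookup": ["source.reference_csv"],
}


def upstream(dataset, lineage):
    """Return every dataset that `dataset` depends on, directly or indirectly."""
    found, stack = set(), [dataset]
    while stack:  # depth-first walk over parent edges
        current = stack.pop()
        for parent in lineage.get(current, []):
            if parent not in found:
                found.add(parent)
                stack.append(parent)
    return found


print(sorted(upstream("gold.revenue_by_region", LINEAGE)))
# ['bronze.orders_raw', 'bronze.region_lookup', 'silver.orders', 'source.erp_db', 'source.reference_csv']
```

Reversing the edges gives the opposite view (impact analysis): which downstream tables and reports are affected if a source changes.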

Layer 5: Consumption Layer

Finally, the consumption layer provides tools for users to extract value from data. Business intelligence platforms like Power BI and Tableau connect directly to the data lake for reporting and visualization. Data scientists use notebooks and machine learning frameworks to build predictive models.

SQL users query data through engines like Presto or Amazon Athena. Self-service analytics lets business users explore data without deep technical expertise. Together, these tools democratize data access across the organization while governance controls stay in place.
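From the consumer's side, the curated layer is usually just tables that accept SQL. In the sketch below, an in-memory SQLite database (Python's built-in `sqlite3` module) stands in for a lake query engine such as Presto or Athena; the `gold_sales` table and its rows are hypothetical.

```python
import sqlite3

# In-memory SQLite stands in for a lake query engine; table and data are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE gold_sales (region TEXT, month TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO gold_sales VALUES (?, ?, ?)",
    [
        ("us-east", "2024-01", 1200.0),
        ("us-east", "2024-02", 1350.0),
        ("eu-west", "2024-01", 800.0),
    ],
)

# The kind of ad-hoc aggregate a BI dashboard or SQL analyst might issue.
query = """
    SELECT region, SUM(revenue) AS total_revenue
    FROM gold_sales
    GROUP BY region
    ORDER BY total_revenue DESC
"""
results = conn.execute(query).fetchall()
conn.close()

for region, total in results:
    print(region, total)  # us-east 2550.0, then eu-west 800.0
```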

Data Flow Visualization

The diagram above illustrates how data flows through these layers:

- Sources → Ingestion: Data enters from databases, applications, sensors, and files

- Ingestion → Raw Storage: Original data lands in the storage layer unchanged

- Raw → Processing: Data moves through bronze, silver, and gold zones with increasing quality

- Processing → Governance: Metadata, lineage, and access controls track all transformations

- Curated → Analytics: Business-ready data becomes available for BI tools and ML models

Key Architecture Principles

- Schema-on-read: Unlike traditional warehouses requiring predefined schemas, data lakes store information first and apply structure when reading data. This flexibility accommodates diverse data types and changing business needs.

- Separation of concerns: Each layer handles specific responsibilities without interfering with others. This modular approach allows replacing individual components without redesigning the entire architecture.

- Scalability: Cloud-based storage and compute resources scale independently based on demand. Organizations pay only for resources they actually use.

- Multi-purpose platform: The same data lake serves data scientists exploring patterns, analysts creating reports, and applications consuming processed data. This unified platform reduces the data silos that would otherwise require expensive synchronization.

Modern data lake architectures provide organizations with flexible, scalable platforms that support diverse analytics needs while maintaining governance and security. Properly implemented, these five layers work together to deliver trustworthy insights from vast amounts of diverse data.

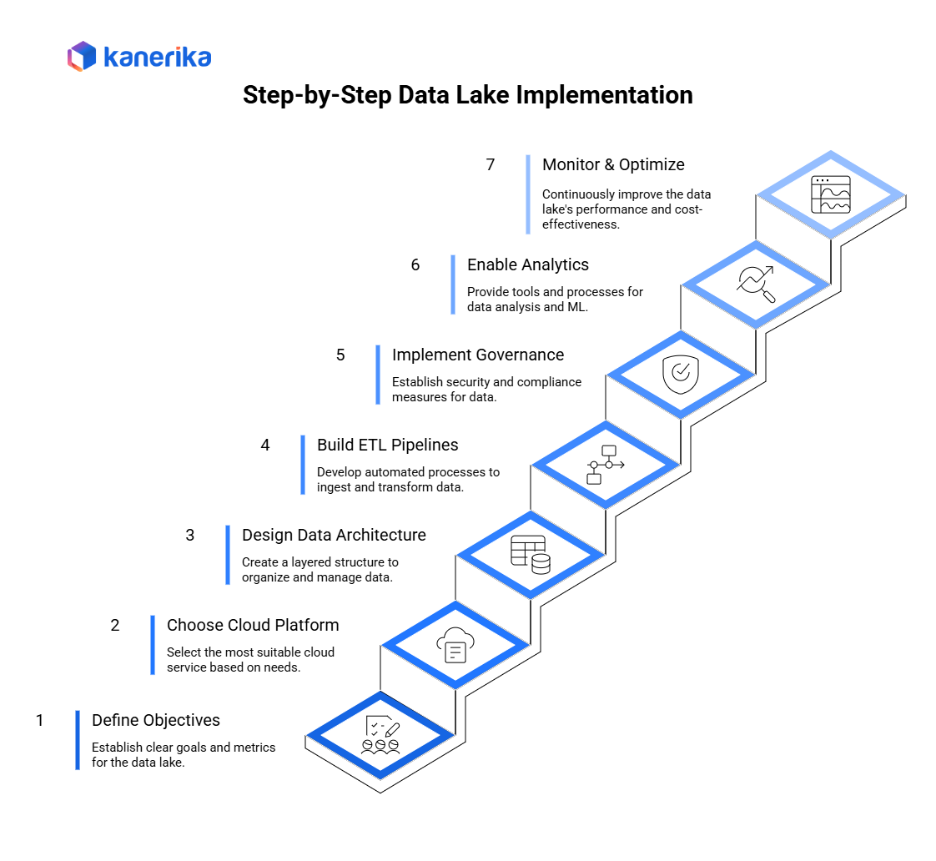

Step-by-Step Data Lake Implementation

Step 1: Define Objectives

Start with the “why.” List your priority use cases (e.g., customer churn analytics, IoT device monitoring, fraud alerts). Convert them into KPIs and success metrics such as time-to-insight, data freshness, and cost per query. Map data sources, users, compliance needs, and expected data growth for the next 12–24 months.
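Estimating growth for the next 12–24 months can start with simple compound arithmetic. The sketch below assumes a constant monthly growth rate, which real workloads rarely follow exactly; the 50 TB starting size and 5% monthly rate are hypothetical planning inputs, not benchmarks.

```python
def project_storage_tb(current_tb, monthly_growth, months):
    """Project lake size assuming constant compound monthly growth."""
    return current_tb * (1 + monthly_growth) ** months


# Hypothetical planning inputs: 50 TB today, growing 5% per month.
for horizon in (12, 24):
    print(f"{horizon} months: {project_storage_tb(50, 0.05, horizon):.1f} TB")
# 12 months: 89.8 TB
# 24 months: 161.3 TB
```

Even a rough projection like this helps size storage budgets and decide early whether tiering and retention rules are needed.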

Step 2: Choose the Cloud Platform

Select a primary cloud based on skills, tools, and integrations:

- AWS: Amazon S3 for storage, AWS Glue for metadata/ETL, Athena/EMR for querying.

- Azure: ADLS Gen2 for storage, Synapse/Fabric for analytics, Purview for governance.

- GCP: Cloud Storage for data, BigQuery for analytics, Dataflow/Dataproc for processing.

Consider data residency, networking, pricing models, and native ecosystem fit.

Step 3: Design Data Architecture

Adopt a layered (Medallion) design to keep data organized and trustworthy:

- Raw/Bronze: Land data in native format for traceability.

- Refined/Silver: Clean, de-duplicate, standardize schemas, and enrich with reference data.

- Curated/Gold: Business-ready tables optimized for BI/ML.

Define naming conventions, partitioning, file formats (Parquet/Delta), and retention rules.
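Naming conventions and retention rules are easiest to keep when they are checked automatically. The sketch below assumes a hypothetical `<layer>_<domain>_<entity>` table-naming pattern and hypothetical per-layer retention periods; your own convention and values will differ.

```python
import re

# Hypothetical convention: <layer>_<domain>_<entity>, all lowercase snake_case.
TABLE_NAME = re.compile(r"^(bronze|silver|gold)_[a-z][a-z0-9]*_[a-z][a-z0-9_]*$")

# Hypothetical retention rules per layer, in days.
RETENTION_DAYS = {"bronze": 365, "silver": 730, "gold": 1825}


def check_table(name):
    """Validate a table name and return the retention period its layer implies."""
    if not TABLE_NAME.match(name):
        raise ValueError(f"{name!r} does not follow <layer>_<domain>_<entity>")
    layer = name.split("_", 1)[0]
    return RETENTION_DAYS[layer]


print(check_table("silver_sales_orders"))  # 730
```

A check like this can run in CI or at table-registration time so that non-conforming names never reach the catalog.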

Step 4: Build ETL/ELT Pipelines

Ingest data from APIs, databases, apps, and IoT streams. Use change data capture (CDC) where possible. Validate schemas, set quality checks (nulls, ranges, referential rules), and add metadata (source, load time, version). For ELT, push heavy transforms to the lake engine (e.g., Spark/SQL). Automate runs with schedulers and event triggers.
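The quality checks and load metadata described above can be sketched as follows. The rule set (null checks, a 1 to 1000 quantity range, a reference list of customer IDs), the `_source`/`_loaded_at`/`_version` column names, and the quarantine pattern are all hypothetical choices made for illustration.

```python
from datetime import datetime, timezone

VALID_CUSTOMER_IDS = {"C1", "C2", "C3"}  # hypothetical reference (dimension) data


def validate(row):
    """Return a list of rule violations for one incoming order row."""
    errors = []
    for field in ("order_id", "customer_id", "quantity"):  # null checks
        if row.get(field) is None:
            errors.append(f"{field} is null")
    qty = row.get("quantity")
    if qty is not None and not 1 <= qty <= 1000:  # range check
        errors.append("quantity out of range 1-1000")
    cust = row.get("customer_id")
    if cust is not None and cust not in VALID_CUSTOMER_IDS:  # referential check
        errors.append(f"unknown customer_id {cust}")
    return errors


def load_batch(rows, source, version):
    """Split rows into accepted (with load metadata) and quarantined (with errors)."""
    loaded_at = datetime.now(timezone.utc).isoformat()
    accepted, quarantined = [], []
    for row in rows:
        errors = validate(row)
        if errors:
            quarantined.append({**row, "_errors": errors})
        else:
            accepted.append({**row, "_source": source, "_loaded_at": loaded_at, "_version": version})
    return accepted, quarantined


batch = [
    {"order_id": 1, "customer_id": "C1", "quantity": 3},
    {"order_id": 2, "customer_id": "C9", "quantity": 3},
    {"order_id": 3, "customer_id": "C2", "quantity": 0},
]
ok, bad = load_batch(batch, source="erp", version=1)
print(len(ok), [r["_errors"] for r in bad])
# 1 [['unknown customer_id C9'], ['quantity out of range 1-1000']]
```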

Step 5: Implement Governance & Security

Assign data owners and stewards. Register datasets in a catalog with business glossary terms. Track lineage from source to report. Enforce IAM roles (reader, engineer, owner), row/column-level security, encryption at rest and in transit, and private networking. Log access and changes for audits.
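Row- and column-level security is normally enforced by the platform's catalog or policy engine rather than by application code, but the logic is easy to illustrate. In this sketch the roles, grants, row filter, and the `secure_read` helper are hypothetical; access is denied by default (least privilege), rows are filtered per user, and ungranted columns are masked.

```python
# Hypothetical role grants: which columns each role may read, per dataset.
GRANTS = {
    "reader": {"gold.customers": {"customer_id", "region"}},
    "engineer": {"gold.customers": {"customer_id", "region", "email"}},
}
# Hypothetical row-level rule: readers only see rows for their own region.
ROW_FILTERS = {"reader": lambda row, user: row["region"] == user["region"]}


def secure_read(user, dataset, rows):
    """Apply least-privilege access, row filtering, and column masking."""
    role = user["role"]
    allowed = GRANTS.get(role, {}).get(dataset)
    if allowed is None:
        raise PermissionError(f"{role} has no access to {dataset}")  # deny by default
    keep = ROW_FILTERS.get(role, lambda row, user: True)
    return [
        {col: (val if col in allowed else "***") for col, val in row.items()}
        for row in rows
        if keep(row, user)
    ]


rows = [
    {"customer_id": 1, "region": "emea", "email": "a@example.com"},
    {"customer_id": 2, "region": "apac", "email": "b@example.com"},
]
print(secure_read({"role": "reader", "region": "emea"}, "gold.customers", rows))
# [{'customer_id': 1, 'region': 'emea', 'email': '***'}]
```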

Step 6: Enable Analytics & ML

Expose curated data to BI tools (Power BI, Tableau, Looker). Enable query federation if you must join across systems. Stand up notebooks and ML pipelines for feature engineering and model training. Store features and models with versioning; set up MLOps for deployment and monitoring.

Step 7: Monitor, Scale, Optimize

Create dashboards for pipeline health, data freshness, failure rates, and cost. Tune partitioning, compression, and caching. Use lifecycle policies to tier cold data to cheaper storage. Right-size compute and autoscale for spikes. Review usage quarterly; archive unused datasets and retire stale pipelines.
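Cloud lifecycle policies are declared in each provider's lifecycle configuration rather than written as code, but the decision rule behind tiering looks roughly like this. The idle-day thresholds, tier names, and file names below are hypothetical.

```python
from datetime import date

# Hypothetical thresholds: days since last access before moving down a tier.
# Ordered from the longest threshold to the shortest.
TIER_RULES = [(365, "archive"), (90, "cold"), (30, "warm")]


def choose_tier(last_accessed, today):
    """Pick a storage tier from how long ago an object was last read."""
    idle_days = (today - last_accessed).days
    for threshold, tier in TIER_RULES:
        if idle_days >= threshold:
            return tier
    return "hot"


today = date(2024, 6, 30)
objects = [
    ("a.parquet", date(2024, 6, 20)),  # 10 days idle
    ("b.parquet", date(2024, 2, 1)),   # 150 days idle
    ("c.parquet", date(2023, 1, 5)),   # over a year idle
]
for name, last in objects:
    print(name, choose_tier(last, today))
# a.parquet hot
# b.parquet cold
# c.parquet archive
```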

Best Practices for Data Lake Implementation

Building a successful Data Lake requires more than just storage—it demands planning, governance, and continuous optimization. Here are key best practices to ensure long-term success.

1. Start Small with Clear Use Cases

Begin with defined, high-value use cases instead of trying to migrate everything at once. Pilot projects such as customer analytics, IoT monitoring, or fraud detection help validate the architecture and ROI before scaling.

2. Enforce Naming Conventions and Metadata Standards

Use consistent dataset naming rules and maintain detailed metadata. Standardized naming improves searchability, helps automation, and supports governance tools like data catalogs.

3. Enable Data Quality Checks and Lineage Tracking Early

Build data validation, anomaly detection, and lineage capture into pipelines from the start. Tracking how data flows improves accuracy and transparency and makes debugging and audits easier.

4. Implement Role-Based Access Control and Encryption

Apply least-privilege access and encrypt data both at rest and in transit. Use IAM policies to control permissions and prevent unauthorized access.

5. Integrate Data Catalog Tools

Adopt catalog and governance tools such as AWS Glue Data Catalog, Microsoft Purview (formerly Azure Purview), or Google Cloud Data Catalog to improve discoverability, lineage visibility, and compliance management.

6. Optimize Storage with Partitioning and Tiering

Partition large datasets by date, region, or category for faster queries. Use compressed columnar formats such as Parquet, along with storage tiering (hot, warm, cold), to reduce cost and improve performance.
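Partitioning speeds up queries because engines can skip entire directories whose partition values fall outside a filter, a technique known as partition pruning. Engines such as Spark or Athena do this automatically for Hive-style `key=value` layouts; the sketch below reproduces the idea by hand over a hypothetical list of file paths.

```python
from datetime import date

# Hypothetical Hive-style layout: one directory per event_date partition.
PARTITIONS = [
    "sales/event_date=2024-05-30/part-0.parquet",
    "sales/event_date=2024-05-31/part-0.parquet",
    "sales/event_date=2024-06-01/part-0.parquet",
    "sales/event_date=2024-06-02/part-0.parquet",
]


def prune(paths, start, end):
    """Keep only files whose event_date partition falls within [start, end]."""
    selected = []
    for path in paths:
        key, value = path.split("/")[1].split("=")  # e.g. "event_date", "2024-06-01"
        if key == "event_date" and start <= date.fromisoformat(value) <= end:
            selected.append(path)
    return selected


hits = prune(PARTITIONS, date(2024, 6, 1), date(2024, 6, 30))
print(f"scanning {len(hits)} of {len(PARTITIONS)} files")  # scanning 2 of 4 files
```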

7. Continuously Document and Test Pipelines

Maintain technical and business documentation for every data process. Also, schedule regular testing of ingestion and transformation pipelines to catch issues early.

By following these best practices, enterprises can ensure their Data Lake implementation remains scalable, governed, and ready to support analytics and AI workloads efficiently.

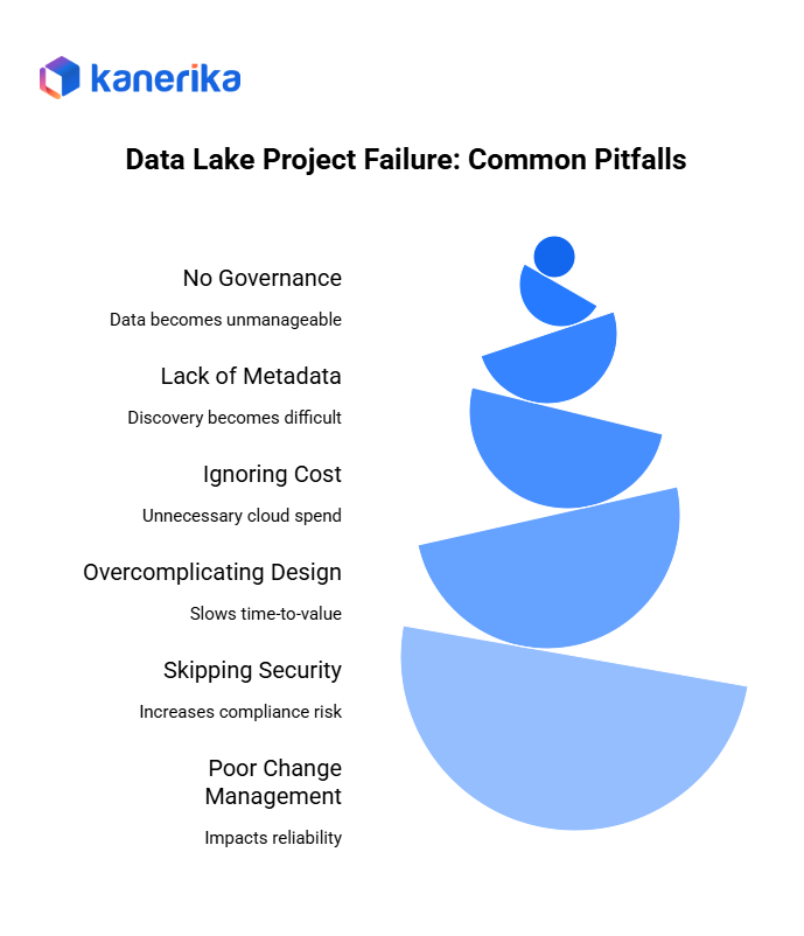

Common Pitfalls and How to Avoid Them

Even with the right tools and planning, many Data Lake projects fail to deliver their full potential because of overlooked challenges. Below are common pitfalls and how to prevent them.

1. No Governance – Leads to a “Data Swamp”

Without clear ownership, standards, and governance frameworks, data lakes become unmanageable over time. To prevent this, define data stewards, enforce retention policies, and use cataloging tools from the start to keep data organized and discoverable.

2. Lack of Metadata Management – Makes Discovery Difficult

When metadata isn’t captured or maintained, teams struggle to find relevant datasets. Implement automated metadata extraction and tagging to ensure datasets are searchable, well-documented, and contextualized.
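Automated metadata extraction can start as simply as profiling a sample of each new dataset. This sketch infers per-field types and null counts from JSON records and applies a tag using a hypothetical name-based PII heuristic; the sample records and the `profile` helper are hypothetical, and production catalogs use far richer classifiers.

```python
import json

# Hypothetical naming heuristics used to tag likely personal data.
PII_HINTS = ("email", "phone", "ssn")


def profile(lines):
    """Infer types, null/missing counts, and tags for each field in sampled JSON records."""
    records = [json.loads(line) for line in lines]
    fields = sorted({field for record in records for field in record})
    catalog_entry = {}
    for field in fields:
        values = [record.get(field) for record in records]  # missing -> None
        present = [v for v in values if v is not None]
        catalog_entry[field] = {
            "types": sorted({type(v).__name__ for v in present}),
            "null_or_missing": len(values) - len(present),
            "tags": ["pii"] if any(h in field.lower() for h in PII_HINTS) else [],
        }
    return catalog_entry


sample = [
    '{"id": 1, "email": "a@example.com", "score": 0.9}',
    '{"id": 2, "email": null, "score": 1}',
    '{"id": 3, "score": 0.4}',
]
print(profile(sample)["email"])  # {'types': ['str'], 'null_or_missing': 2, 'tags': ['pii']}
```

Mixed types such as `score` appearing as both `int` and `float` are exactly the kind of detail worth surfacing in a catalog before analysts trip over it.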

3. Ignoring Cost Optimization – Causes Unnecessary Cloud Spend

Cloud storage is inexpensive, but unmanaged compute, frequent queries, and redundant copies can escalate costs. Apply lifecycle management, automate tiering for cold data, and monitor spend using native cloud cost dashboards.

4. Overcomplicating Early Design – Slows Time-to-Value

Starting with overly complex architectures can delay ROI. Begin with simple, modular pipelines and scale up as maturity grows. Use standardized frameworks like the Medallion Architecture for structure.

5. Skipping Security Controls – Increases Compliance Risk

Neglecting encryption, IAM policies, and audit logging exposes sensitive data. Enable encryption at rest/in transit, apply least-privilege access, and integrate with your identity provider for strong authentication.

6. Poor Change Management – Impacts Reliability

Frequent, untracked schema or pipeline changes can break downstream analytics. Establish version control, change approval workflows, and automated testing to maintain stability.
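One piece of that automated testing can be a schema compatibility check that blocks a deployment when a change would break consumers. In this sketch the type strings are hypothetical, and the rule that added columns are non-breaking while dropped or retyped columns are breaking is an assumption; your compatibility policy may be stricter.

```python
def breaking_changes(old_schema, new_schema):
    """List changes that would break downstream consumers of a table."""
    problems = []
    for column, old_type in old_schema.items():
        if column not in new_schema:
            problems.append(f"dropped column: {column}")
        elif new_schema[column] != old_type:
            problems.append(f"type change: {column} {old_type} -> {new_schema[column]}")
    return problems  # newly added columns are treated as non-breaking


old = {"order_id": "bigint", "amount": "decimal(10,2)", "region": "string"}
new = {"order_id": "bigint", "amount": "double", "channel": "string"}

issues = breaking_changes(old, new)
if issues:
    print("blocking deploy:", issues)
# blocking deploy: ['type change: amount decimal(10,2) -> double', 'dropped column: region']
```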

By addressing these pitfalls early, enterprises can ensure their Data Lake implementation remains governed, cost-efficient, secure, and scalable—delivering real business value.

Real-World Examples of Data Lakes

Real-world data lake projects demonstrate how leading organizations are transforming analytics, decision-making, and efficiency with cloud data lake solutions.

Example 1: Shell Energy — Azure Data Lake for Unified IoT and Operations Data

Shell Energy implemented a data lake on Microsoft Azure to integrate IoT, operational, and energy management data across its global network. This modernized data foundation helped the company reduce data preparation time by 60%, enabling faster insights and improved predictive maintenance. The project also enhanced collaboration between data scientists and business teams by providing a single, trusted data environment.

Example 2: Comcast — Databricks Data Lake for Predictive Analytics

Comcast used Databricks Lakehouse to unify customer interaction, billing, and service data. The new data lake supports large-scale predictive models that identify service degradation risks and improve customer retention. The transformation enabled near real-time analytics, accelerating the company’s move toward proactive customer service and reducing churn through better insights.

Example 3: HSBC — Cloud Data Lake for Risk and Compliance Analytics

HSBC adopted a cloud-based data lake to modernize its risk management and compliance frameworks. The platform consolidated risk, transaction, and regulatory data, allowing advanced analytics for stress testing and anti-money laundering (AML). This move improved regulatory reporting accuracy and transparency across regions.

Kanerika: Your Reliable Partner for End-to-End Data Analytics and Management Services

Kanerika is a leading IT services and consulting company offering advanced data and AI solutions designed to elevate enterprise operations. We help businesses make better decisions, move faster, and operate smarter. Our services span data analytics, integration, governance, and full-scale data management, covering every part of your data journey.

Whether you’re struggling with fragmented systems, slow reporting, or scaling issues, we deliver solutions that solve real problems. Additionally, our team blends deep expertise with the latest technologies to build systems that improve performance, cut waste, and support long-term growth.

By partnering with Kanerika, you’re not just adopting tools—you’re gaining a reliable team focused on outcomes that matter. From strategy to execution, we work closely with your teams to ensure success at every step.

Let us help you turn complex data into clear insights and real impact. Partner with Kanerika to make your data work harder, smarter, and faster for your business.

All our implementations comply with global standards, including ISO 27001, ISO 27701, SOC 2, and GDPR, ensuring security and compliance throughout the implementation process. With deep expertise in both traditional and modern systems, Kanerika helps organizations move from fragmented data silos to unified, intelligent platforms, unlocking real-time insights and accelerating digital transformation without compromise.

FAQs

1. What is Data Lake Implementation?

Data Lake Implementation refers to building a centralized, scalable repository that stores all types of data — structured, semi-structured, and unstructured — for analytics, AI, and machine learning.

2. How is a Data Lake different from a Data Warehouse?

A data lake uses a schema-on-read approach (data is structured when accessed), while a warehouse uses schema-on-write (data structured before loading). This makes lakes more flexible and cost-efficient.

3. What are the key steps in implementing a data lake?

Define business goals, select a cloud platform, design architecture (Bronze–Silver–Gold layers), implement ETL pipelines, add governance, and optimize performance.

4. Which technologies are used in Data Lake Implementation?

Popular platforms include AWS S3 + Glue, Azure Data Lake Storage + Synapse, Google Cloud Storage + BigQuery, and Databricks Lakehouse for unified analytics.

5. How do you ensure data governance and security in a data lake?

Use encryption (at rest and in transit), role-based access control, lineage tracking, and catalog tools like AWS Glue Data Catalog or Microsoft Purview.

6. What are the biggest challenges in Data Lake Implementation?

Common issues include lack of governance, poor metadata management, cost overruns, and security misconfigurations — leading to “data swamps.”

7. What are the benefits of a well-implemented data lake?

A successful data lake enables faster analytics, cost-efficient scalability, real-time insights, and a strong foundation for AI and predictive modeling.