Power BI Deployment Pipelines have become essential for enterprise organizations as Microsoft’s business intelligence platform continues its rapid expansion across global markets. AI adoption in enterprise organizations surged to 72% in 2025, with Power BI serving as a critical component in this digital transformation journey.

This powerful, built-in feature empowers teams to seamlessly manage the lifecycle of reports and datasets across environments, all within a consistent, governed, and secure framework. Rather than relying on manual exports, copy-paste methods, or risky overwrites, deployment pipelines provide a visual, intuitive approach to publishing, validating, and promoting content across the Power BI landscape.

For data teams juggling multiple workspaces, environments, and stakeholders, Power BI Deployment Pipelines enable faster releases, better version control, and fewer errors, which makes them essential for any enterprise looking to scale BI workflows efficiently.

What Are Power BI Deployment Pipelines?

Power BI Deployment Pipelines provide a structured framework for managing the lifecycle of business intelligence content from development through production. This feature enables organizations to systematically promote reports, dashboards, and datasets through different stages while maintaining version control and ensuring quality standards.

The primary purpose is to eliminate the chaos of ad-hoc report publishing and establish enterprise-grade governance over BI content. Teams can collaborate more effectively while reducing the risk of publishing incomplete or incorrect reports to business users.


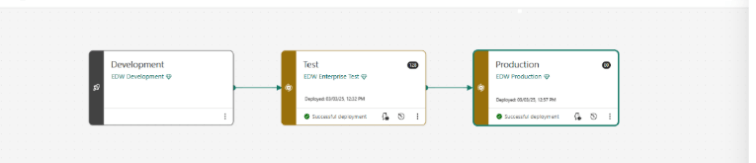

Key Components and Workspace Stages

1. Development Stage

The starting point where BI developers create new reports, modify datasets, and experiment with different visualizations. This workspace allows for iterative development without affecting business users or production data.

2. Test Stage

A controlled environment where stakeholders review content before it reaches end users. Quality assurance testing, user acceptance validation, and performance optimization occur at this stage.

3. Production Stage

The live environment where business users access finalized reports and dashboards. This stage represents the official, approved version of BI content that drives business decisions.

Each stage operates as an isolated workspace with its own security permissions, data connections, and user access controls.

Supported Items in Deployment Pipelines

When deploying content from one pipeline stage to another, the copied content can contain the following items:

- Activator

- Dashboards

- Data pipelines (Preview)

- Dataflows Gen2 (Preview)

- Datamarts (Preview)

- Environment (Preview)

- Eventhouse and KQL Database

- EventStream (Preview)

- KQL Queryset

- Lakehouse (Preview)

- Mirrored Database (Preview)

- Notebooks

- Organizational Apps (Preview)

- Paginated Reports

- Power BI Dataflows

- Real-time Dashboards

- Reports

- Semantic Models

- SQL Database (Preview)

- Warehouses (Preview)

Role in Enterprise BI Lifecycle

Deployment pipelines support mature BI practices by enforcing consistent development processes across teams. They enable proper testing cycles, stakeholder approvals, and rollback capabilities when issues arise.

Organizations can implement change management procedures, maintain audit trails of content modifications, and ensure compliance with data governance policies. This structured approach scales BI development from individual projects to enterprise-wide initiatives.

Manual Publishing vs Automated Pipeline Approach

- Manual publishing requires developers to individually promote content between workspaces, often leading to inconsistencies, forgotten dependencies, and human errors. Version tracking becomes difficult, and coordinating releases across multiple team members creates bottlenecks.

- Automated pipelines streamline the promotion process through one-click deployments that maintain relationships between datasets, reports, and dashboards. Dependencies are preserved automatically, reducing deployment errors and ensuring consistent environments across all stages.

Automated approaches also integrate with approval workflows, allowing designated reviewers to gate content promotion and maintain quality standards without manual intervention.

How Deployment Pipelines Facilitate Data and Metadata Movement

Deployment pipelines help in data and metadata movement across different stages by:

- Automating Content Migration: Ensuring that datasets, reports, and dashboards transition smoothly between environments.

- Reducing Manual Effort: Eliminating the need for manual intervention during content promotion.

- Maintaining Data Consistency: Ensuring that semantic models, parameters, and configurations remain aligned across environments.

- Allowing Selective Deployments: Enabling users to deploy only specific content while leaving other elements unchanged.

Item Pairing and Content Synchronization

What Is Item Pairing?

Item pairing is the process of associating an item (report, dataset, dashboard) in one stage with its counterpart in the adjacent stage. When items are paired, deploying an item overwrites its paired counterpart in the next stage instead of creating a duplicate.

How Pairing Works

- When an item is assigned to a deployment stage, it is automatically paired with the corresponding item in the adjacent stage.

- If an unpaired item is deployed to the next stage, a new instance is created instead of overwriting existing content.

- Paired items maintain synchronization, while unpaired items appear separately in the pipeline view.
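The pairing rules above can be illustrated with a toy model. This is only a sketch of the behavior described in this section, not the service's implementation; the `Stage` class and `deploy` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Stage:
    """Hypothetical stand-in for one pipeline stage (one workspace)."""
    name: str
    items: dict = field(default_factory=dict)        # item id -> content
    paired_from: dict = field(default_factory=dict)  # source item id -> id of its pair in this stage


def deploy(source: Stage, target: Stage, item_id: str) -> str:
    """Copy one item to the next stage, overwriting its pair if one exists."""
    content = source.items[item_id]
    paired_id = target.paired_from.get(item_id)
    if paired_id is not None:
        # Paired: the existing target item is overwritten, no duplicate is created.
        target.items[paired_id] = content
        return "overwrote paired item"
    # Unpaired: a new item is created in the target stage and paired from now on.
    new_id = f"{target.name}-{len(target.items) + 1}"
    target.items[new_id] = content
    target.paired_from[item_id] = new_id
    return "created new item"


dev = Stage("dev", {"dev-1": "Sales report v1"})
test_stage = Stage("test")
print(deploy(dev, test_stage, "dev-1"))  # created new item
dev.items["dev-1"] = "Sales report v2"
print(deploy(dev, test_stage, "dev-1"))  # overwrote paired item
```

The second deployment leaves a single item in the test stage, which is the point of pairing: repeated promotions update content in place.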


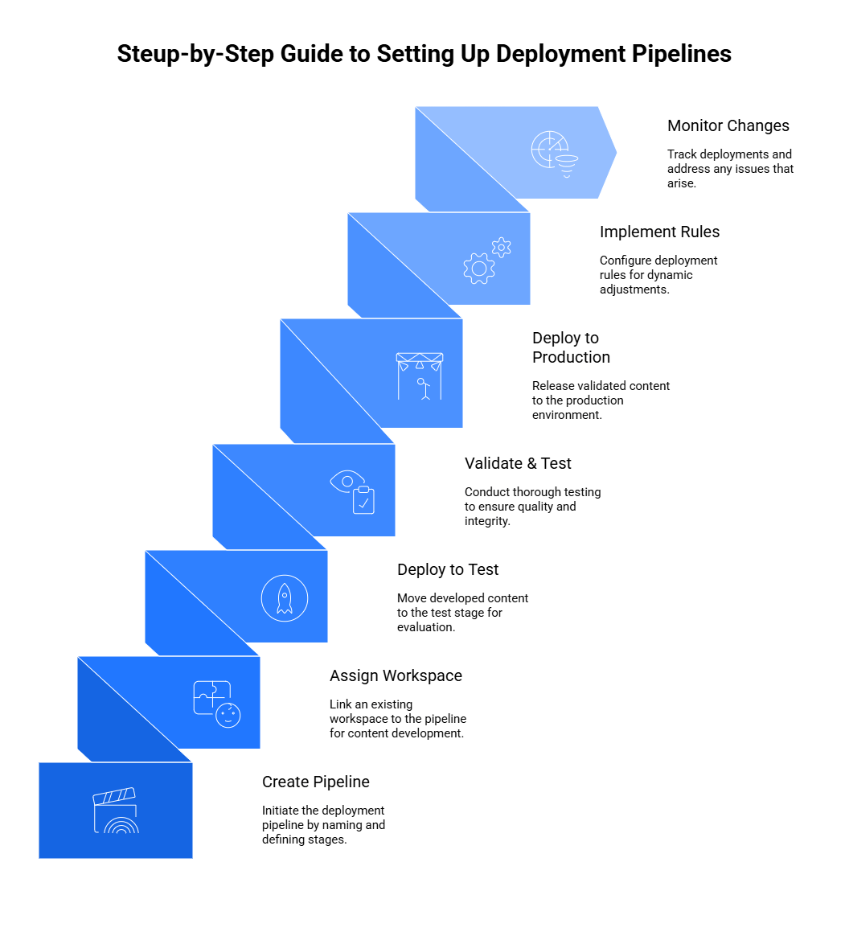

Step-by-Step Guide to Setting Up Deployment Pipelines

Before creating your first deployment pipeline, ensure you have the necessary licensing and workspace structure in place. The workspaces in a pipeline need to run on Power BI Premium capacity, Microsoft Fabric capacity, or a Premium Per User (PPU) license to access deployment pipeline functionality. Additionally, prepare your workspace architecture by creating three separate workspaces that will serve as your development, test, and production environments.

Step 1 – Create a Deployment Pipeline

- Navigate to Power BI Service and select Deployment Pipelines from the workspace list.

- Click on Create Pipeline or New Pipeline.

- Provide a name and description for the pipeline.

- Define the number of stages (default is three: Development, Test, and Production).

- Customize the stages as required.

Step 2 – Assign a Workspace

- Assign an existing workspace to the deployment pipeline.

- This workspace will serve as the initial stage for content development.
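Steps 1 and 2 can also be scripted. The sketch below only builds the HTTP requests and does not send them. It assumes the Power BI REST API "Pipelines" operations (`POST /pipelines` and `POST /pipelines/{pipelineId}/stages/{stageOrder}/assignWorkspace`) and their `displayName`, `description`, and `workspaceId` fields as I understand them, so check the paths and field names against the current API reference. The token and IDs are placeholders.

```python
import json
import urllib.request

API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_request(method, path, token, body=None):
    """Build (but do not send) an authenticated JSON request."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    return urllib.request.Request(
        url=f"{API_ROOT}{path}",
        data=data,
        method=method,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def create_pipeline_request(token, name, description=""):
    # Assumed body fields: displayName, description.
    return build_request(
        "POST", "/pipelines", token,
        {"displayName": name, "description": description},
    )


def assign_workspace_request(token, pipeline_id, stage_order, workspace_id):
    # Assumed stage order: 0 = Development, 1 = Test, 2 = Production.
    path = f"/pipelines/{pipeline_id}/stages/{stage_order}/assignWorkspace"
    return build_request("POST", path, token, {"workspaceId": workspace_id})


request = assign_workspace_request(
    "<access-token>", "<pipeline-id>", 0, "<workspace-id>"
)
print(request.get_method(), request.full_url)
# To send it: urllib.request.urlopen(request)
```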

Step 3 – Deploy Content to the Next Stage

- After content is developed, deploy it to the Test stage.

- Use the comparison tool to identify differences between stages before deployment.

Step 4 – Validate and Test

- Conduct performance tests, data validation, and quality checks in the Test stage.

- Ensure that business rules and data integrity are maintained.

Step 5 – Deploy to Production

- Once testing is complete, deploy the content to the Production stage.

- Ensure that only validated reports and datasets are accessible to business users.

Step 6 – Implement Deployment Rules (Optional)

- Configure deployment rules to modify parameters and connections dynamically.

- Ensure that development environments use sample datasets while production environments connect to live databases.

Step 7 – Monitor Changes and Manage Rules

- Establish deployment rules for automatic data source connections and parameter configurations.

- Monitor deployment history through the pipeline interface.

- Track successful deployments and identify any issues.

- Address problems that arise during the content promotion process.

Deployment Options

Deployment pipelines provide the following options:

1. Full Deployment

- Moves all content from one stage to the next.

- Suitable for major updates and new content rollouts.

2. Selective Deployment

- Allows users to choose specific reports, datasets, or dashboards for deployment.

- Useful when only a few changes need to be pushed to production.

3. Backward Deployment

- Moves content from a later stage (e.g., Production) back to an earlier stage (e.g., Test).

- Helps in rollback scenarios or when issues are identified post-deployment.
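When deployments are triggered through the REST API, the three options above roughly correspond to different request bodies. The sketch below assumes the `deployAll` and `deploy` (selective) operations and field names such as `sourceStageOrder`, `isBackwardDeployment`, and `sourceId` as I understand them from the Power BI REST API; treat them as assumptions to verify. The helper function names are hypothetical.

```python
def deploy_all_body(source_stage_order, backward=False, note=None):
    """Body for POST /pipelines/{pipelineId}/deployAll (full deployment)."""
    body = {
        "sourceStageOrder": source_stage_order,  # 0 = Development, 1 = Test, 2 = Production
        "options": {
            "allowCreateArtifact": True,     # create items missing in the target
            "allowOverwriteArtifact": True,  # overwrite paired items in the target
        },
    }
    if backward:
        # Deploy from a later stage to the previous one.
        body["isBackwardDeployment"] = True
    if note:
        body["note"] = note
    return body


def selective_deploy_body(source_stage_order, reports=(), datasets=(), dashboards=()):
    """Body for POST /pipelines/{pipelineId}/deploy (selective deployment)."""
    body = deploy_all_body(source_stage_order)
    selections = (("reports", reports), ("datasets", datasets), ("dashboards", dashboards))
    for key, ids in selections:
        if ids:
            body[key] = [{"sourceId": item_id} for item_id in ids]
    return body


print(deploy_all_body(0, note="Monthly release"))
print(selective_deploy_body(1, reports=["<report-id>"]))
print(deploy_all_body(2, backward=True))
```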


Automating with APIs and PowerShell for Power BI Deployment Pipelines

1. Power BI REST API for Triggering Deployments

The Power BI REST API provides comprehensive automation capabilities for deployment pipeline management. Use the Pipelines API endpoints to programmatically trigger deployments between pipeline stages without manual intervention.

Key API operations include:

- Deploy Pipeline Stage: Automatically move content from Development to Test or Test to Production environments

- Get Pipeline Operations: Monitor deployment status and retrieve operation details for tracking progress

- Update Deployment Rules: Programmatically configure parameter mappings and data source connections for different environments

- List Pipeline Stages: Query current pipeline configuration and assigned workspaces for validation

Authentication requires service principal setup with appropriate Power BI permissions. Store credentials securely using Azure Key Vault or similar secret management services to maintain security best practices.
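As a rough sketch of the authentication and monitoring pieces, the code below builds a client-credentials token request for a service principal and polls a deployment operation until it finishes. It assumes the Microsoft identity platform v2.0 token endpoint, the Power BI `.default` scope, and the operation status values `NotStarted`, `Executing`, `Succeeded`, and `Failed`. The `get_operation` callable is a hypothetical hook that you would implement with a GET on the pipeline's operation endpoint.

```python
import time
import urllib.parse
import urllib.request

SCOPE = "https://analysis.windows.net/powerbi/api/.default"
TERMINAL_STATUSES = {"Succeeded", "Failed"}  # assumed terminal status values


def token_request(tenant_id, client_id, client_secret):
    """Build (but do not send) an OAuth2 client-credentials token request."""
    form = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,  # load from a secret store, never hard-code
        "scope": SCOPE,
    }).encode("utf-8")
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    return urllib.request.Request(url, data=form, method="POST")


def wait_for_operation(get_operation, interval_seconds=5.0, max_polls=60, sleep=time.sleep):
    """Call get_operation() until the operation reaches a terminal status."""
    for _ in range(max_polls):
        operation = get_operation()
        if operation.get("status") in TERMINAL_STATUSES:
            return operation
        sleep(interval_seconds)
    raise TimeoutError("deployment operation did not finish in time")


# Simulated responses stand in for real API calls in this example.
responses = iter([{"status": "NotStarted"}, {"status": "Executing"}, {"status": "Succeeded"}])
result = wait_for_operation(lambda: next(responses), sleep=lambda seconds: None)
print(result["status"])  # Succeeded
```

Injecting `get_operation` and `sleep` keeps the polling logic testable without network access.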

2. CI/CD Integration with Azure DevOps and GitHub Actions

Azure DevOps Integration

Create YAML pipeline definitions that incorporate Power BI deployment steps into your existing development workflows:

- Build Stage: Validate Power BI files and run automated tests on report functionality

- Release Stage: Deploy content through pipeline stages using REST API calls or PowerShell scripts

- Approval Gates: Configure manual approval steps before production deployments

- Rollback Procedures: Implement automated rollback mechanisms for failed deployments

Use Azure DevOps service connections to securely authenticate with Power BI services and manage deployment credentials.

GitHub Actions Integration

Implement GitHub Actions workflows that trigger on repository changes:

- Automated Testing: Run Power BI file validation and data source connectivity tests

- Staged Deployment: Deploy changes through Development, Test, and Production environments sequentially

- Notification Systems: Send deployment status updates to team channels via Slack or Microsoft Teams

- Environment Protection: Configure branch protection rules to prevent unauthorized production deployments

Both platforms support scheduling, conditional deployments, and integration with popular notification systems for comprehensive deployment automation.
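The staged deployment and approval gate ideas above can be sketched independently of any CI/CD platform. In this minimal example, `deploy_stage` and `approve` are hypothetical callables: in a real workflow the first would trigger a pipeline deployment and wait for it, and the second would be replaced by the platform's own approval mechanism.

```python
STAGES = ["Development", "Test", "Production"]


def promote(deploy_stage, approve, stages=STAGES):
    """Promote content stage by stage, stopping at the first refusal or failure.

    deploy_stage(source_order) -> bool: True when the deployment succeeded.
    approve(target_name) -> bool: True when promotion to that stage is approved.
    Returns the names of the stages that were successfully deployed to.
    """
    completed = []
    for source_order in range(len(stages) - 1):
        target = stages[source_order + 1]
        if not approve(target):
            break  # approval gate closed: stop before deploying
        if not deploy_stage(source_order):
            break  # deployment failed: do not continue to later stages
        completed.append(target)
    return completed


# Example: every deployment succeeds, but Production is not yet approved.
print(promote(lambda order: True, lambda target: target != "Production"))
# ['Test']
```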

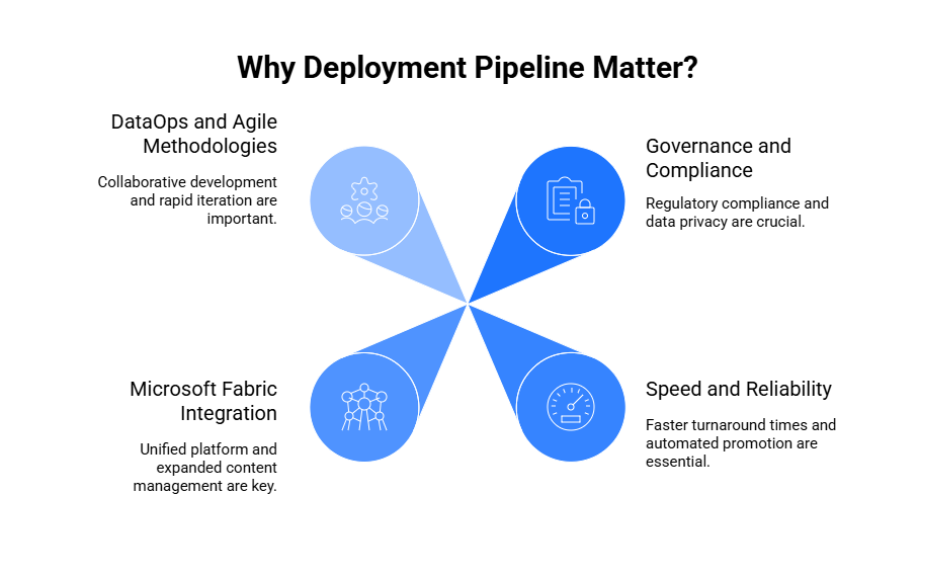

How Are Deployment Pipelines Going to Make a Difference?

1. Governance and Compliance Drive Enterprise Adoption

- Regulatory scrutiny increases: Organizations face heightened requirements for data usage and reporting accuracy documentation

- Audit trail requirements: Deployment pipelines provide comprehensive tracking of content changes, including who modified what and when

- Executive governance expectations: Leadership demands the same level of control for BI content as financial reporting systems

- Clear approval processes: Pipeline workflows establish documented approval gates that satisfy compliance teams

- Data privacy compliance: GDPR and similar regulations require demonstrable control over data transformation and presentation

- Version control necessity: Organizations must show how reports evolved from development through production release

2. Speed and Reliability Transform Business Expectations

- Faster turnaround times: Business stakeholders expect report modifications and new analytics requests completed in hours, not days

- Automated promotion benefits: Manual publishing processes are replaced by streamlined pipeline promotion workflows

- Error reduction: Automation removes the human mistakes that, in manual deployments, frequently break functionality or corrupt data connections

- Relationship preservation: Automated pipelines maintain connections between datasets, reports, and dashboards during environment transitions

- Continuous integration adoption: Development teams implement CI practices for BI content similar to software development workflows

- Bottleneck elimination: Automated processes remove manual delays and enable more responsive analytics delivery

3. Microsoft Fabric Integration Changes Everything

- Unified platform convergence: Power BI integration with Microsoft Fabric creates comprehensive data and analytics environments

- Expanded content management: Deployment pipelines now handle data warehouses, machine learning models, and real-time analytics

- Integrated workflow governance: Organizations manage entire data engineering processes through unified pipeline systems

- Sophisticated promotion requirements: Fabric’s capabilities demand more advanced governance and deployment processes

- End-to-end lifecycle management: Pipelines control not just reports but complete analytical ecosystems

- Enhanced integration capabilities: Unified platforms require coordinated deployment across multiple analytical components

4. DataOps and Agile Methodologies Become Standard

- Collaborative development practices: Modern BI teams adopt DataOps emphasizing collaboration, automation, and continuous improvement

- Rapid iteration support: Deployment pipelines enable quick iteration cycles while maintaining quality and governance standards

- Experimentation workflows: Teams test new features in development environments and promote successful innovations quickly

- Production deployment safety: Improvements reach production without disrupting existing business workflows

- Agile responsiveness: Organizations respond faster to changing business requirements and market conditions

- Quality maintenance: Agile practices maintain governance standards despite accelerated development cycles

- Continuous improvement cycles: Teams implement feedback loops and iterative enhancements through structured pipeline processes

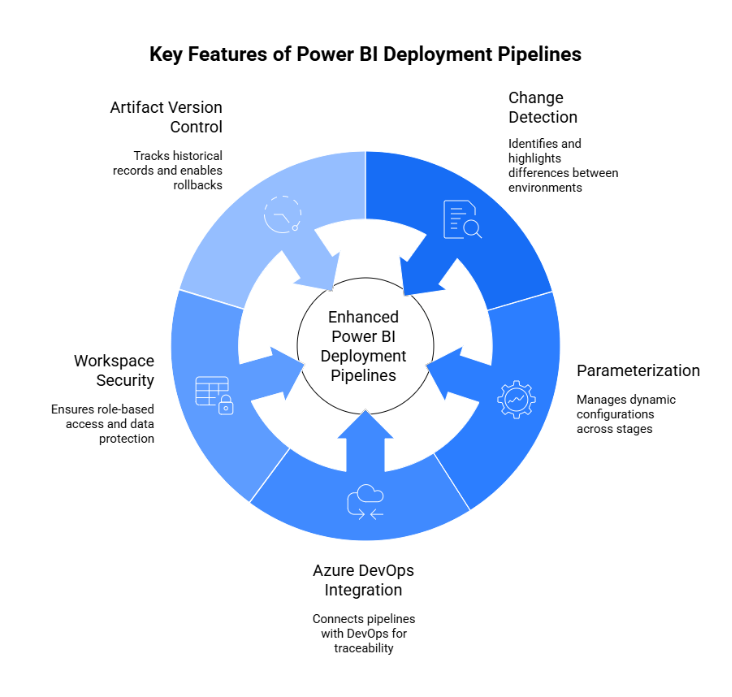

Essential Functions of Power BI Deployment Pipelines for Scalable BI Workflows

1. Change Detection and Comparison

Power BI pipelines automatically identify differences between environments through intelligent change detection. The interface highlights modified reports, updated datasets, and new dashboards with visual indicators that make it easy to spot what needs attention.

Detailed comparison views show specific changes within individual artifacts. Report modifications display side-by-side visualizations, while dataset changes reveal schema updates, new calculated columns, or modified relationships. This granular visibility helps teams understand exactly what will change during deployment.

The system tracks modification timestamps and user information, creating a comprehensive audit trail that supports compliance requirements and troubleshooting efforts.
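Conceptually, change detection comes down to comparing the items in two stages. The sketch below is a simplified illustration and not how the service implements it: each item definition is reduced to a hash, and item names are classified by comparing the hashes. The `fingerprint` and `compare_stages` functions and the status labels are hypothetical.

```python
import hashlib
import json


def fingerprint(definition: dict) -> str:
    """Stable hash of an item definition (keys sorted for determinism)."""
    canonical = json.dumps(definition, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare_stages(source: dict, target: dict) -> dict:
    """Classify items by name; both inputs map item name -> fingerprint."""
    result = {}
    for name in sorted(source.keys() | target.keys()):
        if name not in target:
            result[name] = "new"                  # exists only in the source stage
        elif name not in source:
            result[name] = "missing from source"  # exists only in the target stage
        elif source[name] != target[name]:
            result[name] = "different"
        else:
            result[name] = "same"
    return result


dev = {
    "Sales report": fingerprint({"pages": 3}),
    "Churn report": fingerprint({"pages": 1}),
}
test_stage = {
    "Sales report": fingerprint({"pages": 2}),
    "Legacy report": fingerprint({"pages": 5}),
}
print(compare_stages(dev, test_stage))
```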

2. Parameterization Support

Pipeline rules enable dynamic configuration management across different environments. Organizations can maintain separate database connections, API endpoints, or security settings for each stage without duplicating content.

For example, development workspaces connect to test databases while production uses live data sources. The same report automatically adapts to the appropriate environment during deployment. Parameter management extends to refresh schedules, capacity allocations, and performance optimization settings.

This flexibility eliminates the need to maintain multiple versions of identical content with different configuration settings.
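The idea can be pictured as a base set of parameter values with per-stage overrides. The sketch below is a conceptual model only; in the service, rules are configured on the pipeline stage rather than in code, and the parameter names, server names, and stage names here are made-up examples.

```python
# Values baked into the model as developed (all names are illustrative).
BASE_PARAMETERS = {
    "ServerName": "dev-sql.example.com",
    "DatabaseName": "SalesDev",
}

# Per-stage overrides, playing the role of deployment rules.
STAGE_RULES = {
    "Test": {"ServerName": "test-sql.example.com", "DatabaseName": "SalesTest"},
    "Production": {"ServerName": "prod-sql.example.com", "DatabaseName": "Sales"},
}


def resolve_parameters(stage: str) -> dict:
    """Return the effective parameter values for a stage."""
    return {**BASE_PARAMETERS, **STAGE_RULES.get(stage, {})}


for stage in ("Development", "Test", "Production"):
    print(stage, resolve_parameters(stage))
```

One report definition is enough; only the resolved values differ per stage.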

3. Azure DevOps and Git Integration

Modern development practices integrate seamlessly with Power BI through Azure DevOps connectivity. Teams can link pipeline deployments to DevOps work items, creating traceability between business requirements and BI deliverables.

Git integration supports version control for Power BI Desktop files, enabling collaborative development workflows similar to software development practices. Developers can branch, merge, and track changes to report definitions while maintaining pipeline synchronization.

Automated deployment triggers can initiate pipeline promotions based on DevOps release schedules or code repository changes, creating end-to-end automation.

4. Workspace Security and Role-Based Access

Each pipeline stage maintains independent security configurations appropriate for its intended audience. Development workspaces restrict access to BI developers and technical stakeholders. Test environments include business analysts and subject matter experts for validation. Production workspaces serve end users with read-only permissions.

Role-based access controls determine who can view, modify, or deploy content at each stage. Pipeline administrators manage overall workflow permissions while workspace contributors focus on content development within their assigned environments.

Security inheritance rules ensure that sensitive data remains protected while enabling appropriate collaboration across development teams.

5. Artifact Version Control

Comprehensive version tracking maintains historical records of all pipeline artifacts. Teams can view previous versions of reports, compare changes over time, and restore earlier configurations if needed.

Deployment history shows exactly when content moved between stages, who initiated the deployment, and what specific changes were included. This information supports change management processes and helps troubleshoot issues that arise after release.

When problems occur in production, teams can restore a previous version from source control or saved files, minimizing business disruption and maintaining user confidence in BI systems.

Best Practices for Using Power BI Deployment Pipelines

1. Maintain Environment Parity

- Standardize workspace structures across Dev, Test, and Production with consistent naming conventions and folder organization

- Mirror security settings and capacity allocations to ensure testing accurately reflects production behavior

- Document environment differences and establish clear rationale for any necessary variations

- Keep data structures identical while using parameters for environment-specific connections

2. Implement Dynamic Dataset Configuration

- Use parameter rules for database connections, API endpoints, and file paths that vary by environment

- Configure stage-appropriate refresh schedules – hourly for development, daily for production

- Apply parameterization to security contexts and performance settings

- Avoid duplicating content by leveraging dynamic configuration instead of multiple versions

3. Establish Clear Documentation Standards

- Create deployment runbooks with pre-deployment checklists and validation procedures

- Document approval workflows including who has authority at each stage and escalation paths

- Maintain contact lists for key stakeholders and technical resources needed during deployments

- Record rollback procedures for quick issue resolution when problems occur

4. Build Quality Assurance Into Every Stage

- Require business validation in Test environment before production deployment

- Implement technical reviews covering performance, security, and data quality standards

- Create standardized testing procedures for functionality, performance, and user experience

- Include regression testing to ensure new changes don’t break existing functionality

- Document test results and approval decisions for audit purposes

5. Time Deployments Strategically

- Schedule during low-usage periods to minimize business disruption

- Coordinate with business users to identify optimal deployment windows

- Plan maintenance windows that accommodate deployment and data refresh operations

- Communicate schedules in advance and provide alternative access during maintenance

6. Maintain Comprehensive Audit Trails

- Log all deployment activities including initiator, content changes, and timestamps

- Track approval decisions with stakeholder names and any noted conditions

- Retain deployment history for compliance requirements and troubleshooting

- Document rollback actions when they occur for future reference
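A team-maintained audit log can be as simple as one JSON record per deployment. The following is a minimal sketch with hypothetical field names, separate from whatever history the service itself keeps; `io.StringIO` stands in for a real log file.

```python
import io
import json
from datetime import datetime, timezone


def audit_record(initiator, source_stage, target_stage, items, note="", when=None):
    """Build one audit entry describing a deployment."""
    when = when or datetime.now(timezone.utc)
    return {
        "timestamp": when.isoformat(),
        "initiator": initiator,
        "source_stage": source_stage,
        "target_stage": target_stage,
        "items": sorted(items),
        "note": note,
    }


def append_record(log_file, record):
    """Append the record as a single JSON line."""
    log_file.write(json.dumps(record, sort_keys=True) + "\n")


log = io.StringIO()  # in practice, a file opened in append mode
append_record(log, audit_record(
    "release.manager@example.com", "Test", "Production",
    ["Executive dashboard", "Finance model"],
    note="Approved by compliance",
))
print(log.getvalue(), end="")
```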

7. Integrate with Enterprise DevOps Practices

- Connect with CI/CD pipelines for seamless automation from development through production

- Link to work items in Azure DevOps or project management tools for traceability

- Automate deployment triggers based on related application or data pipeline updates

- Set up automated notifications for deployment status and issue alerts

- Coordinate with system changes to keep BI content synchronized with underlying updates

Real-World Use Cases of Power BI Deployment Pipelines

Example 1: Financial Institution Executive Dashboards

A regional bank uses deployment pipelines to manage monthly executive dashboards that consolidate data from loan systems, deposit accounts, and risk management platforms. The development team creates updated reports with new regulatory metrics each month, then promotes them through testing where compliance officers verify accuracy against federal reporting requirements.

The pipeline automatically handles connections to different database environments – development uses masked data while production connects to live financial systems. Deployment timing aligns with month-end closing procedures, ensuring executives receive updated dashboards within 48 hours of period close. Version control maintains historical dashboard versions for auditing purposes, and rollback capabilities provide immediate recovery if data quality issues emerge.

Example 2: Retail Performance Reports Across 100+ Regions

A national retail chain manages store-level performance reports across 120 locations using automated deployment pipelines. Regional managers need consistent reporting formats but with store-specific data connections and localized metrics.

The development team builds standardized templates in the development workspace, then uses parameterization to automatically configure store-specific database connections and regional performance benchmarks during deployment. The test environment validates report accuracy using sample stores from each region before promoting to production.

Scheduled deployments occur weekly during off-peak hours to minimize impact on store operations. Each region maintains separate workspace security, ensuring store managers only access their location’s data while district managers see aggregated regional views.

Example 3: Government Agency Public Data KPIs

A state transportation department tracks public infrastructure KPIs through Power BI pipelines that handle sensitive data classifications and role-based access requirements. Development workspaces use anonymized datasets while production connects to classified traffic and safety databases.

The pipeline enforces strict approval workflows where department heads must sign off on any public-facing dashboard changes. Test environments allow policy analysts to validate data accuracy and ensure compliance with public information disclosure requirements.

Role-based security automatically configures access levels during deployment – public dashboards show aggregate statistics while internal reports include detailed project information restricted to authorized personnel. Audit logging tracks all access and modifications for government transparency requirements.

Common Challenges and How to Overcome Them

1. Sync Issues Between Workspaces

Challenge: Content becomes misaligned between environments when manual changes occur outside the pipeline workflow.

Solution: Establish strict governance requiring that all changes flow through the deployment pipeline. Use the comparison feature regularly to identify drift between environments. Create workspace access policies that prevent direct production modifications. Schedule weekly sync checks to catch discrepancies early, and document any emergency changes that bypass the pipeline.

2. Broken Parameters or Credentials During Deployment

Challenge: Database connections fail or authentication errors occur when content moves between stages.

Solution: Set up parameter rules before initial deployment and test connections in each environment. Use service principals instead of individual user credentials for automated processes. Create connection validation checklists that verify data source accessibility before deploying. Maintain separate credential stores for each environment and document connection string formats.

3. Misconfigured Row-Level Security (RLS)

Challenge: Security rules don’t apply correctly after deployment, exposing unauthorized data or blocking legitimate access.

Solution: Test RLS configurations thoroughly in the test environment with representative user accounts from each security group. Document security requirements clearly and create validation scripts that verify proper access controls. Use consistent security group naming across environments and maintain separate test user accounts that mirror production security scenarios.
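A validation script of the kind mentioned above might compare what each test user is expected to see with what they can actually see. In this sketch, `visible_rows_for` is a hypothetical callable that you would back with a query executed as that user; here it is faked with a dictionary, and the accounts and store names are made up.

```python
# Expected access per test account (illustrative values).
EXPECTED_ACCESS = {
    "store.manager@example.com": {"Store 17"},
    "district.manager@example.com": {"Store 17", "Store 18"},
}


def find_rls_violations(expected, visible_rows_for):
    """Return, per user, values that leaked or were wrongly blocked."""
    violations = {}
    for user, allowed in expected.items():
        seen = set(visible_rows_for(user))
        leaked = seen - allowed   # visible but should not be
        blocked = allowed - seen  # should be visible but is not
        if leaked or blocked:
            violations[user] = {"leaked": sorted(leaked), "blocked": sorted(blocked)}
    return violations


# Fake query results: the store manager can wrongly see Store 18.
observed = {
    "store.manager@example.com": ["Store 17", "Store 18"],
    "district.manager@example.com": ["Store 17", "Store 18"],
}
print(find_rls_violations(EXPECTED_ACCESS, lambda user: observed[user]))
```

An empty result means the observed access matches the expectations for every test account.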

4. Large Dataset Refresh Issues

Challenge: Deployment timeouts or memory errors occur when processing large datasets during promotion.

Solution: Schedule deployments during low-usage periods with extended timeout settings. Break large datasets into smaller, related tables where possible. Use incremental refresh configurations that only process changed data. Monitor capacity utilization and consider premium capacity allocation for complex datasets. Implement staged deployment approaches that handle datasets separately from reports.

5. Troubleshooting Best Practices

- Monitor deployment logs actively and set up alerts for common failure patterns

- Create rollback procedures with specific steps for each type of deployment failure

- Maintain emergency contacts for quick resolution during business-critical deployment issues

- Document common error codes and their proven solutions for faster troubleshooting

- Test deployment processes in non-production environments before implementing changes


Power BI Pipelines vs Custom DevOps Pipelines

| Aspect | Power BI Deployment Pipelines | Custom DevOps Pipelines (e.g., Azure DevOps, GitHub Actions) |
| --- | --- | --- |
| Ease of Use | Simple UI-based, no coding required | Requires YAML scripts or PowerShell; steeper learning curve |
| Integration | Native to Power BI; built into the service | Broader integration with CI/CD tools, testing, and deployment ecosystems |
| Automation | Limited automation; manual promotion unless integrated with API | Fully automated with version control, triggers, and testing pipelines |
| Flexibility | Predefined stages (Dev, Test, Prod) | Highly customizable stages and workflows |
| Monitoring | Basic deployment logs and comparison view | Detailed logs, alerts, approval workflows, rollback features |
| Governance | Supports workspace roles and access controls | Can enforce policies via scripts and external governance tools |
| Setup Time | Quick setup; ideal for BI and business teams | Slower setup; requires technical expertise |
| Best For | Citizen developers, business users, Power BI-focused teams | Data engineers, DevOps teams, large-scale enterprise deployments |
| Version Control | Limited versioning without Git integration | Full version control with Git integration |
| Cost & Maintenance | Low maintenance; included with Power BI Premium/Fabric | May incur extra DevOps tooling cost and maintenance overhead |

Future Outlook and Latest Updates of Power BI Deployment Pipelines

1. Enhanced Fabric Integration

Microsoft Fabric’s unified data platform is transforming how deployment pipelines operate across the entire analytics ecosystem. Future updates will enable seamless promotion of data warehouses, lakehouses, and semantic models alongside Power BI reports through a single pipeline interface.

Cross-workload dependencies will be automatically managed, ensuring that data engineering changes deploy before dependent BI content. This integration eliminates the current complexity of coordinating separate deployment processes across different Fabric workloads.

2. Advanced GitOps Support

Enhanced Git integration will support branch-based development workflows similar to modern software development practices. Teams will merge feature branches into main branches, automatically triggering pipeline deployments through pull request approvals.

Version control capabilities will extend beyond Power BI Desktop files to include dataset configurations, security settings, and workspace metadata. This comprehensive source control enables better collaboration and change tracking across development teams.

3. Intelligent Automation and Monitoring

AI-powered deployment recommendations will analyze usage patterns and suggest optimal deployment schedules. Predictive monitoring will identify potential issues before they impact users, automatically adjusting capacity allocation during high-demand periods.

Smart rollback capabilities will automatically revert problematic deployments based on performance degradation or error rate increases. Enhanced monitoring dashboards will provide real-time visibility into deployment health across all environments.

4. Enterprise BI Governance Evolution

Deployment pipelines are becoming central to enterprise BI governance frameworks, with enhanced policy enforcement and compliance reporting capabilities. Organizations will define governance rules that automatically validate content against corporate standards before deployment approval.

Integration with Microsoft Purview will provide comprehensive data lineage tracking from source systems through deployed reports, supporting regulatory compliance and impact analysis requirements across the entire data estate.

Kanerika: Elevating Your Reporting and Analytics with Expert Power BI Solutions

Kanerika stands at the forefront of technological innovation, delivering cutting-edge solutions that redefine how businesses harness their data potential. As a premier Microsoft-certified Data and AI Solutions Partner, we specialize in transforming complex data challenges into strategic opportunities through advanced Power BI and Microsoft Fabric technologies.

Our approach goes beyond traditional analytics. We craft bespoke solutions that seamlessly integrate sophisticated data visualization, predictive analytics, and intelligent insights across diverse industry sectors. From manufacturing to finance, healthcare to retail, Kanerika empowers organizations to unlock the hidden value within their data ecosystems.

With a deep understanding of Microsoft’s sophisticated analytics tools, we design intelligent dashboards, implement robust data strategies, and enable businesses to make data-driven decisions with unprecedented precision. Our team of expert analysts and data scientists work collaboratively to drive operational excellence, accelerate growth, and spark innovation through the power of intelligent data solutions.


FAQs

1. What are Power BI Deployment Pipelines?

Power BI Deployment Pipelines are a built-in feature that helps users manage and automate the release of content (datasets, reports, dashboards) across development (Dev), test (UAT), and production (Prod) environments in a structured and governed way.

2. Do I need Power BI Premium to use deployment pipelines?

Yes, deployment pipelines require a Power BI Premium license (either capacity-based or PPU – Premium Per User). This feature is not available in the standard Pro license.

3. Can I deploy multiple reports and datasets at once?

Yes, deployment pipelines support batch deployment, allowing users to push multiple artifacts (like datasets, reports, dashboards) simultaneously across stages.

4. How do deployment pipelines handle data source credentials?

Credentials are not automatically transferred between environments. You need to reconfigure data source credentials manually for each stage unless using a shared gateway or parameterized connections.

5. Can I roll back changes using deployment pipelines?

Power BI Deployment Pipelines do not offer native rollback. However, version control can be managed manually by saving PBIX files or integrating with external DevOps tools for backup and restore.

6. Are parameter values supported across pipeline stages?

Yes. You can define and change parameter values per environment, which is helpful for switching data sources or configurations between Dev, Test, and Prod.

7. What are the limitations of Power BI Deployment Pipelines?

Some limitations include:

- Not supporting all content types, with several supported item types still in preview

- No built-in automatic triggers; integration with Azure DevOps requires the REST API or scripts

- Manual gateway configuration per stage