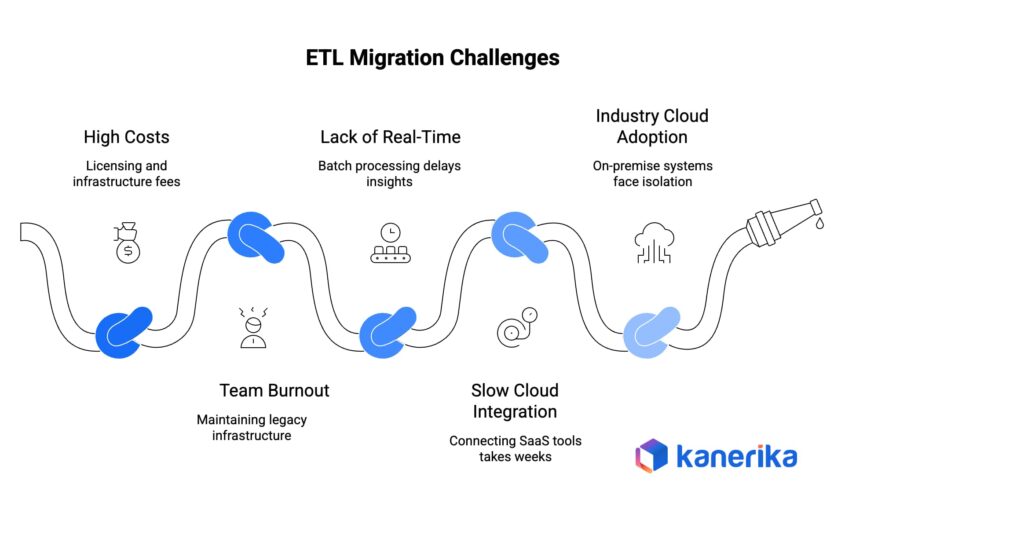

Your finance team closes the quarter at 11 PM instead of 6 PM. Or, your data engineers wait weeks to connect a new cloud data source. Or, your infrastructure team renews legacy ETL licenses at escalating costs. Meanwhile, competitors are running real-time dashboards and shipping analytics in weeks.

Gaps like these can decide whether a business stays competitive. Modern businesses rely heavily on ETL pipelines to extract, transform, and load data across applications, databases, and analytics systems. ETL migration, meaning the move from legacy systems like Informatica, SSIS, or DataStage to cloud platforms, has become essential for enterprises trying to keep pace. But it’s also the project that keeps CTOs awake: complex, high-stakes, and easy to get wrong.

This guide walks through what’s driving migration urgency, what goes wrong, and how organizations have successfully modernized without breaking operations.

TL;DR

- Legacy ETL platforms (Informatica, SSIS, DataStage) are now a business bottleneck—driving high costs, slow integrations, and blocking real-time and cloud initiatives.

- ETL migration is risky mainly because of hidden business logic, performance surprises on cloud platforms, complex validation, tight cutover windows, and skills gaps on the target tools.

- Successful programs follow a pattern: inventory and metadata extraction, automated code conversion, rigorous reconciliation testing, phased cutover, and strong governance on the new platform.

- Automation (tools like FIRE for metadata and FLIP for conversion) lets teams migrate hundreds of workflows faster and more safely by focusing engineers on validation and optimization instead of manual recoding.

- Kanerika brings verified cloud and data engineering expertise plus proprietary accelerators to help enterprises modernize ETL with minimal disruption and a clear, phased roadmap.

From Legacy to Modern Systems—We Migrate Seamlessly!

Partner with Kanerika for proven migration expertise.

Why ETL Migration Is Now Essential

Licensing and infrastructure costs are no longer sustainable

Legacy ETL platforms charge significant annual fees that grow faster than budgets. Vendors are actively pushing cloud-only versions at higher price points.

Your team is burning out maintaining legacy infrastructure

Data engineers spend half their time patching workflows and managing servers. Innovation work gets the leftovers. This capacity drain is hard to recover.

Real-time capability is becoming a competitive requirement

Batch processing delivers insights 24+ hours late. Competitors run real-time dashboards and detect issues before your team even knows they exist. In retail, this means inventory adjustments by the minute. In financial services, it means fraud detection before transactions settle.

Cloud integrations are painfully slow in legacy systems

Connecting a new SaaS tool takes weeks in legacy ETL versus days in modern platforms. One healthcare organization needed dozens of integrations annually but could only deliver a handful because each required months of specialized work.

The industry is moving to the cloud

Gartner predicts a significant portion of global workloads will be cloud-ready by 2027. Systems still on-premise will face growing isolation and shrinking vendor support.

Sadly, most organizations don’t move until a crisis forces them to: a critical job fails during month-end close, a cloud initiative stalls, or compliance requirements demand capabilities the legacy system can’t provide.

What Actually Goes Wrong During ETL Migration

Migration risks are genuine. Understanding them prevents expensive failures.

Data Discrepancies

Legacy systems accumulate decades of undocumented business logic: custom scripts, stored procedures, lookup overrides nobody remembers. A field calculates slightly differently in the new system. Nobody notices for months, until compliance discovers a significant discrepancy in revenue figures or other calculations. These silent failures damage trust more than dramatic crashes.

Performance Mismatch

Cloud platforms behave fundamentally differently from on-premise infrastructure. A job that runs in two hours on dedicated hardware might run much longer on cloud infrastructure if it is not designed for it. Teams often discover this only after go-live, when stakeholders are watching and confidence is fragile.

Longer Validation Cycle

You’re validating transformation logic, calculation accuracy, error handling, and scheduling dependencies. A typical organization has hundreds of workflows and thousands of mappings. Validating all of it becomes the project bottleneck that extends timelines significantly.

Risk to Business Continuity

Global operations migrate in narrow windows. Miss your window and you’re either extending cutover into business hours or forcing emergency rollback. Industry research shows most migration projects run over time or budget—not because teams aren’t trying hard, but because migrations are deceptively complex.

Skills Gaps

Your team knows the legacy platform. They don’t know the target. During migration—when expertise is most critical—your team is learning on the job. This combination of high stakes and steep learning curves is where small mistakes compound into big problems.

Key Drivers for Successful ETL Migration

Successful migrations follow a consistent pattern: comprehensive discovery, intelligent automation, rigorous validation, and phased execution.

Start with discovery and inventory.

Map all active jobs, workflows, and scripts. Identify dependencies: which jobs feed which downstream systems? One organization discovered a “rarely used” job was actually feeding three critical reporting systems nobody remembered. Assess complexity to prioritize migration order—simple jobs first (confidence-building), complex jobs last.
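
The inventory step above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the job names, complexity scores, and the `INVENTORY` structure are all hypothetical, and ordering waves by dependency first and complexity second is just one reasonable choice.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical inventory: job name -> (complexity score, upstream jobs it reads from).
INVENTORY = {
    "load_customers": (1, []),
    "load_orders": (2, ["load_customers"]),
    "daily_revenue": (5, ["load_orders"]),
    "rarely_used_export": (1, ["load_orders"]),
    "exec_dashboard": (3, ["daily_revenue", "rarely_used_export"]),
}

def downstream_consumers(inventory):
    """Invert the dependency map: for each job, list the jobs that read its output."""
    consumers = {job: [] for job in inventory}
    for job, (_, upstream) in inventory.items():
        for parent in upstream:
            consumers[parent].append(job)
    return consumers

def migration_waves(inventory):
    """Group jobs into waves where each wave depends only on earlier waves.

    Within a wave, lower-complexity jobs come first.
    """
    sorter = TopologicalSorter({job: deps for job, (_, deps) in inventory.items()})
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready(), key=lambda j: (inventory[j][0], j))
        waves.append(ready)
        sorter.done(*ready)
    return waves

if __name__ == "__main__":
    print(downstream_consumers(INVENTORY)["rarely_used_export"])
    for number, wave in enumerate(migration_waves(INVENTORY), start=1):
        print(number, wave)
```

Inverting the dependency map is what surfaces cases like the "rarely used" job: a job with a low run frequency can still have downstream consumers that make it critical.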

Extract metadata automatically.

Automated extraction captures what manual documentation misses. Extract data mappings, transformation rules, workflow orchestration, connection strings, and error handling logic using tools that read native metadata structures directly from your legacy system. Manual documentation typically misses 15-25% of actual logic.
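
As a rough sketch of what reading metadata directly can look like, the Python below parses an XML export into a list of mappings with their sources, targets, and field expressions. The XML layout in `SAMPLE_EXPORT` is a simplified, hypothetical format invented for illustration; real repository exports differ by vendor and version, and this is not how any specific tool works.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified export format (an assumption, not a real vendor schema).
SAMPLE_EXPORT = """
<repository>
  <mapping name="m_load_orders">
    <source table="stg.orders"/>
    <target table="dw.fact_orders"/>
    <field name="net_amount" expression="gross_amount - discount"/>
    <field name="order_date" expression="TO_DATE(order_ts)"/>
  </mapping>
  <mapping name="m_load_customers">
    <source table="stg.customers"/>
    <target table="dw.dim_customer"/>
    <field name="full_name" expression="first_name || ' ' || last_name"/>
  </mapping>
</repository>
"""

def extract_mappings(xml_text):
    """Return one dict per mapping: name, source tables, target tables, expressions."""
    root = ET.fromstring(xml_text.strip())
    mappings = []
    for mapping in root.iter("mapping"):
        mappings.append({
            "name": mapping.get("name"),
            "sources": [s.get("table") for s in mapping.findall("source")],
            "targets": [t.get("table") for t in mapping.findall("target")],
            "expressions": {
                f.get("name"): f.get("expression") for f in mapping.findall("field")
            },
        })
    return mappings

if __name__ == "__main__":
    for item in extract_mappings(SAMPLE_EXPORT):
        print(item["name"], item["sources"], "->", item["targets"])
        for field, expression in item["expressions"].items():
            print("   ", field, "=", expression)
```

The point of the sketch is that the expressions come from the system's own definitions rather than from documentation that may be out of date.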

Automate code conversion.

Instead of hand-coding new jobs, use conversion tools to generate them. Modern migration tools automatically parse extracted metadata, translate workflows, apply platform-specific rules, and optimize for cloud patterns. Automation typically handles much of the conversion effort, with the remaining work requiring human expertise for custom logic and performance tuning. This approach converts hundreds of workflows in months instead of a year or more of manual engineering.

Validate with reconciliation frameworks.

Automated reconciliation compares old and new system outputs before any irreversible decisions. Run the legacy job and capture output. Run the new job on the same input. Compare results automatically and flag discrepancies for investigation. This work consumes a meaningful portion of project time but is non-negotiable for production cutover.
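
The compare-and-flag step described above might look like the following Python sketch. The `reconcile` function, the `order_id` key, and the sample rows are all hypothetical; a real framework would read both outputs from the legacy and target systems rather than from in-memory lists.

```python
from decimal import Decimal

def reconcile(legacy_rows, new_rows, key, tolerance=Decimal("0")):
    """Compare two job outputs keyed by `key` and report discrepancies.

    Only columns present in the legacy output are compared.
    """
    legacy = {row[key]: row for row in legacy_rows}
    new = {row[key]: row for row in new_rows}
    report = {
        "missing_in_new": sorted(legacy.keys() - new.keys()),
        "unexpected_in_new": sorted(new.keys() - legacy.keys()),
        "value_mismatches": [],
    }
    for k in sorted(legacy.keys() & new.keys()):
        for column, old_value in legacy[k].items():
            new_value = new[k].get(column)
            if isinstance(old_value, Decimal) and isinstance(new_value, Decimal):
                # Numeric columns may be allowed a small, explicitly chosen tolerance.
                same = abs(old_value - new_value) <= tolerance
            else:
                same = old_value == new_value
            if not same:
                report["value_mismatches"].append((k, column, old_value, new_value))
    return report

# Illustrative sample data only.
LEGACY_OUTPUT = [
    {"order_id": 1, "revenue": Decimal("100.00")},
    {"order_id": 2, "revenue": Decimal("250.10")},
    {"order_id": 3, "revenue": Decimal("75.50")},
]
NEW_OUTPUT = [
    {"order_id": 1, "revenue": Decimal("100.00")},
    {"order_id": 2, "revenue": Decimal("250.15")},
    {"order_id": 4, "revenue": Decimal("10.00")},
]

if __name__ == "__main__":
    print(reconcile(LEGACY_OUTPUT, NEW_OUTPUT, key="order_id"))
```

Keeping the tolerance at zero by default is deliberate in this sketch: any allowed rounding difference should be a conscious decision, since small calculation drift is exactly the kind of silent failure reconciliation is meant to catch.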

Deploy with safeguards.

Migrate low-risk workflows first to build confidence and operational experience. Move important but non-critical processes with 1-2 weeks of parallel running for validation. Mission-critical jobs run in parallel for several weeks before legacy shutdown. Deploy monitoring dashboards, document procedures, test rollback capabilities, and train teams thoroughly.
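
One way to make the parallel-running rule enforceable is a simple cutover gate, sketched below in Python. The tier names and day counts in `REQUIRED_CLEAN_DAYS` are assumptions chosen to roughly mirror the guidance above (two weeks for important jobs, three weeks standing in for "several weeks" on mission-critical ones), not fixed recommendations.

```python
# Assumed thresholds: consecutive days of matching output required before cutover.
REQUIRED_CLEAN_DAYS = {
    "low": 1,         # assumption: at least one clean comparison
    "important": 14,  # upper end of the 1-2 week parallel run
    "critical": 21,   # assumption: "several weeks" taken as three
}

def ready_for_cutover(tier, daily_parity_results):
    """Return True when the latest unbroken streak of matches meets the tier threshold.

    `daily_parity_results` is ordered oldest to newest; True means the legacy and
    new outputs matched on that day.
    """
    required = REQUIRED_CLEAN_DAYS[tier]
    streak = 0
    for matched in reversed(daily_parity_results):
        if not matched:
            break  # a mismatch resets the clock
        streak += 1
    return streak >= required

if __name__ == "__main__":
    print(ready_for_cutover("important", [True] * 14))
    print(ready_for_cutover("critical", [True] * 20))
```

Counting only the most recent unbroken streak means a single mismatch restarts the parallel period, which matches the intent of verifying parity before legacy shutdown.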

Implement governance and long-term maintainability.

Set up role-based access control, audit trails logging all changes and access, performance monitoring, and data quality rules. Create technical documentation including architecture diagrams and data lineage maps. Integrate with version control for collaborative development.

Why Automation Matters

Manual ETL migration fails because it’s slow, error-prone, demotivating, and nearly impossible to validate comprehensively. Automated migration with human oversight flips this model. The best migration platforms extract comprehensive metadata, convert logic intelligently with built-in knowledge of both source and target systems, generate test cases automatically, and provide detailed conversion reports.

Converting hundreds of workflows manually takes a year or more. Using automated tools, most organizations move faster through the conversion phase, freeing engineers to focus on validation and optimization. This speed builds momentum and executive confidence.

ETL Migration Examples

Manufacturing company modernizing legacy Informatica jobs.

This organization had 450+ jobs built over 15 years, month-end close running 6+ hours, and new integrations taking weeks. Using automated metadata extraction and code conversion, they completed migration in several months. Month-end close improved significantly. New integrations dropped from weeks to days. The team reclaimed time previously spent on infrastructure maintenance and could focus on analytics instead.

Financial services accelerating compliance reporting.

This organization had 600+ SSIS packages spread across its data warehouse and analytics environments, and the finance team spent days manually verifying compliance reports. After a structured migration with automated validation, compliance reports run in a fraction of the previous time. Data lineage became visible through automated governance. The organization deployed new real-time capabilities for use cases that were previously infeasible. Infrastructure costs decreased meaningfully.

Both examples illustrate the same pattern: phased migration with rigorous validation, enabled by automation, prevents the silent failures that plague rushed approaches.

Kanerika’s Approach to ETL Migration

Kanerika has guided many enterprises through ETL modernization. Here’s what’s different in our approach:

Verified expertise. Microsoft Data Warehouse Migration to Azure Specialization. Databricks Certified Partner. ISO 27001, 27701, SOC 2 Type II, and GDPR certified. These are verified by third parties, not marketing claims.

Proprietary automation. FIRE extracts complete metadata from legacy repositories (not documentation). FLIP intelligently converts code with built-in knowledge of both source and target platforms, preserving business logic while optimizing for cloud.

Methodology focused on governance and business continuity. Every migration includes built-in audit trails, role-based access control, data quality monitoring, and long-term maintainability from day one. Speed is important, but we optimize for data integrity and operational safety first.

Phased execution. We start with low-risk workloads to build confidence. Mission-critical jobs run in parallel for weeks before cutover. Rollback procedures are tested and ready.

Most migration projects chase speed and cut corners on validation. We do the opposite. This approach takes slightly longer upfront but prevents the costly failures that plague rushed migrations.

Are You Ready to Modernize?

Modernization is urgent if your team spends most of its capacity on maintenance rather than innovation, new integrations take weeks to deliver, real-time analytics capability would create competitive advantage, compliance requirements demand audit trails your current system can’t provide, or team morale is suffering.

Next Steps

If you’re evaluating whether to modernize: Start with a structured assessment of your ETL landscape. Understand what you have, what it costs, and what constraints exist. Kanerika’s Migration Assessment covers your current environment, identifies quick wins and high-risk areas, and outlines what an automation-first roadmap looks like.

If you’re planning a migration: Develop a detailed project roadmap with phased sequence, automation versus manual allocation, skill development plan, and validation strategy. Kanerika’s Readiness Workshop brings together business stakeholders, IT leadership, and engineering teams to define realistic timelines and success metrics.

If you’re ready to execute: Partner with experienced guides who’ve done this at scale. Kanerika combines FIRE and FLIP platforms with structured methodology: metadata extraction, intelligent conversion, comprehensive validation, governance implementation, and operational readiness.

FAQs

1. What is ETL migration?

ETL migration is the process of transferring extract–transform–load (ETL) workflows, logic, and data pipelines from one platform or environment to another. It helps modernize legacy systems, improve scalability, and enable cloud integration.

2. Why do organizations need ETL migration?

Companies migrate ETL systems to reduce maintenance costs, enhance performance, and leverage cloud-native features like automation, elasticity, and AI/ML integration. It’s a key enabler of digital transformation and analytics modernization.

3. What are common types of ETL migration?

Typical migrations include on-premise to cloud, legacy ETL to modern tools, and batch to streaming pipelines, depending on business needs and data processing requirements.

4. What challenges occur during ETL migration?

Common challenges include data loss, logic inconsistencies, integration failures, and extended downtime. Proper validation, automation, and phased execution mitigate these risks.

5. How does automation improve ETL migration?

Automation tools like Kanerika FLIP convert workflows, mappings, and logic automatically—reducing manual effort, preserving accuracy, and accelerating migration timelines.

6. How can companies ensure data accuracy during migration?

By implementing checksum validation, reconciliation reports, and automated testing frameworks, organizations can maintain complete data integrity throughout the migration process.
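
A minimal Python sketch of checksum validation follows. The function names, the `NULL_MARKER` placeholder, and the choice of SHA-256 are assumptions for illustration; the idea is simply that both systems produce a row count and an order-independent fingerprint that can be compared without moving full datasets.

```python
import hashlib

NULL_MARKER = "\\N"  # assumed placeholder so NULL and empty string hash differently
FIELD_SEPARATOR = "\x1f"  # unit separator, unlikely to appear in real values

def row_checksum(row, columns):
    """Hash the selected columns of one row in a fixed column order."""
    parts = [NULL_MARKER if row[c] is None else str(row[c]) for c in columns]
    return hashlib.sha256(FIELD_SEPARATOR.join(parts).encode("utf-8")).hexdigest()

def table_fingerprint(rows, columns):
    """Return (row count, checksum) where the checksum ignores row order."""
    digests = sorted(row_checksum(r, columns) for r in rows)
    combined = hashlib.sha256("".join(digests).encode("ascii")).hexdigest()
    return len(digests), combined

if __name__ == "__main__":
    columns = ["id", "amount"]
    legacy = [{"id": 1, "amount": "10.00"}, {"id": 2, "amount": None}]
    migrated = [{"id": 2, "amount": None}, {"id": 1, "amount": "10.00"}]
    print(table_fingerprint(legacy, columns) == table_fingerprint(migrated, columns))
```

Values should be normalized before hashing (for example, consistent decimal and date formatting), because two systems that render the same value differently will otherwise produce different checksums.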

7. Which ETL tools are most popular for modern data pipelines?

Modern ETL and ELT tools include Talend, Azure Data Factory, Databricks, Snowflake, and AWS Glue, which offer cloud scalability, automation, and seamless integration capabilities.

8. How long does migration take?

Using automated tools, most organizations complete a mid-sized migration in several months to a year, depending on complexity, team size, and business constraints. Manual approaches take significantly longer. Kanerika’s FLIP-powered migration tooling can significantly reduce migration time through AI-enabled automation.

9. Can we migrate without downtime?

Yes, through parallel running and phased deployment. Critical workflows run on both systems simultaneously until data parity is verified. This lengthens the timeline but greatly reduces cutover risk. A parallel period of several weeks is typical for mission-critical jobs.

10. Should we redesign workflows or recreate as-is?

Recreate first for speed and risk reduction. After migration stabilizes, redesign specific workflows to leverage cloud-native capabilities like streaming and event-driven processing. This two-phase approach is safer than trying to redesign and migrate simultaneously.

11. How do we ensure data accuracy during ETL migration?

Use automated reconciliation frameworks that compare old and new system outputs before cutover. Testing covers row counts, column values, calculations, and edge cases. This work consumes a meaningful portion of project time but is non-negotiable for production cutover.

12. What if new systems don't perform as expected after ETL migration?

Proper testing during validation catches this before production. Cloud platforms require different optimization strategies than on-premise infrastructure: partitioning for distributed processing, resource allocation for elastic compute, optimal data formats. Migration tools apply cloud-optimized patterns automatically, but complex custom logic needs human tuning.