Spotify runs more than 38,000 actively scheduled pipelines, processing vast volumes of listening and user-preference data to create the playlists you love. But here’s what most people don’t know: their success didn’t come from choosing between data operations and software development practices; it came from combining them. By prioritizing data, Spotify built an automated data collection platform that supports data-driven decision-making in DevOps and improves developer productivity.

Most companies still run their data teams and development teams in separate lanes, missing major opportunities. Meanwhile, organizations that invest in strong engineering operations are seeing real results: Amazon went from monthly releases to 23,000 deployments per day, roughly one every four seconds (86,400 seconds ÷ 23,000 ≈ 3.8 seconds). Applying the same discipline to data means treating software and data as one problem rather than two. Your competitors may still be debating which approach to adopt first, but the stronger bet is to build both capabilities in parallel. The question is: how quickly can you start?

What is DevOps?

DevOps is a way of working that brings together software development teams (who write code) and operations teams (who manage systems) to collaborate better. Instead of these teams working separately and passing projects back and forth, DevOps creates a shared approach where everyone works together throughout the entire process—from writing code to releasing it to users.

Think of it like a relay race where runners used to hand off the baton and walk away. DevOps is like having the whole team run together, helping each other reach the finish line faster and with fewer dropped batons.

The main goal is to deliver software updates and new features to users more quickly, reliably, and safely. DevOps achieves this through automation, better communication, and shared responsibility for both building and maintaining applications.

5 Key Components of DevOps

1. Continuous Integration/Continuous Deployment (CI/CD)

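Developers merge their code changes into a shared repository frequently, and automated builds and tests run on every merge. In continuous deployment, changes that pass the tests are released to production automatically; in continuous delivery, they are kept release-ready and deployed after a single approval step. Either way, manual handoffs shrink and fewer errors slip through.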
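The gate at the heart of CI/CD can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how Jenkins or GitHub Actions work internally: each stage is modeled as a hypothetical function returning True on success, the pipeline stops at the first failure, and an `auto_deploy` flag distinguishes continuous deployment (ship automatically) from continuous delivery (wait for a human approval).

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]  # hypothetical stage action: True means the stage passed


def run_pipeline(stages: List[Stage], auto_deploy: bool) -> str:
    """Run stages in order and stop at the first failure (the CI 'gate')."""
    for stage in stages:
        if not stage.run():
            return f"failed at {stage.name}"  # later stages never run
    # Every gate passed: continuous deployment ships immediately,
    # continuous delivery leaves a release-ready build awaiting approval.
    return "deployed" if auto_deploy else "awaiting approval"


stages = [
    Stage("build", lambda: True),
    Stage("unit-tests", lambda: True),
    Stage("integration-tests", lambda: False),  # simulate a failing test suite
    Stage("package", lambda: True),
]
print(run_pipeline(stages, auto_deploy=True))  # failed at integration-tests
```

Because the failing stage short-circuits the loop, nothing downstream of a broken build can reach users, which is the property that makes frequent merges safe.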

2. Infrastructure as Code (IaC)

Server configurations, networks, and computing resources are defined using code files instead of manual setup. This approach makes infrastructure reproducible, version-controlled, and consistent across different environments like development and production.
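Infrastructure as Code tools typically compare the declared configuration with what actually exists and compute the changes needed to close the gap. The sketch below illustrates that idea in plain Python. It is loosely modeled on the plan step of tools like Terraform, but the resource names and dict-based configuration are hypothetical, and real tools also track dependencies and persisted state.

```python
from typing import Dict, List, Tuple

Resources = Dict[str, dict]  # resource name -> configuration (hypothetical format)


def plan(desired: Resources, actual: Resources) -> List[Tuple[str, str]]:
    """List the actions needed to make the actual environment match the declared one."""
    actions = []
    for name, config in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))  # declared but missing
        elif actual[name] != config:
            actions.append(("update", name))  # exists but has drifted
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))  # exists but no longer declared
    return actions


desired = {
    "web-server": {"size": "medium", "count": 3},
    "database": {"engine": "postgres", "version": "16"},
}
actual = {
    "web-server": {"size": "small", "count": 3},
    "old-cache": {"engine": "redis"},
}
for action, name in plan(desired, actual):
    print(action, name)
# create database
# update web-server
# delete old-cache
```

Once the changes are applied, `plan` returns an empty list, which is what makes declarative infrastructure reproducible: the same files always converge to the same environment.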

3. Automated Testing

Software tests run automatically at multiple stages without human intervention: unit tests check individual pieces of code, integration tests check how components interact, and performance tests check behavior under load. Running them on every change catches bugs early, when they are cheapest to fix.

4. Monitoring and Observability

Real-time tracking of application performance, user behavior, and system health through dashboards and alerts. Teams can quickly identify issues, understand user impact, and make data-driven decisions for improvements.
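Alerting rules usually compare a metric against a threshold over a time or sample window. As a hedged sketch (the window size, threshold, and class name are illustrative assumptions, not any tool’s defaults), an error-rate alert over the most recent requests might look like this:

```python
from collections import deque


class ErrorRateAlert:
    """Fire when the error rate over the last `window` requests exceeds `threshold`."""

    def __init__(self, window: int = 100, threshold: float = 0.05):
        self.window = window
        self.threshold = threshold
        self.results = deque(maxlen=window)  # True = request failed; old entries drop off

    def record(self, failed: bool) -> bool:
        self.results.append(failed)
        # Only evaluate once the window is full, to avoid noisy alerts at startup.
        if len(self.results) < self.window:
            return False
        error_rate = sum(self.results) / len(self.results)
        return error_rate > self.threshold


alert = ErrorRateAlert(window=10, threshold=0.2)
outcomes = [False] * 7 + [True] * 3  # 3 failures in the last 10 requests
fired = [alert.record(failed) for failed in outcomes]
print(fired[-1])  # True: 30% errors exceeds the 20% threshold
```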

5. Collaboration and Communication

Cross-functional teams share knowledge, tools, and responsibilities through regular meetings, shared documentation, and unified communication platforms. This breaks down traditional silos between development, operations, and security teams.

What is DataOps?

DataOps is a way of working that brings together everyone involved in handling data—data engineers, data scientists, analysts, and business teams—to collaborate more effectively. Instead of these teams working in isolation where data projects take months to deliver insights, DataOps creates a streamlined approach where everyone works together to get reliable data into the hands of decision-makers quickly.

Think of it like a restaurant kitchen. Traditional data work is like having the chef, prep cook, and servers all working separately without talking. DataOps is like having a well-coordinated kitchen where everyone communicates, follows the same recipes (processes), and works together to serve customers faster with consistent quality.

The main goal is to reduce the time it takes to go from “we need this data insight” to “here’s the answer that helps our business.” DataOps achieves this through automated data pipelines, quality checks, and shared responsibility for making sure data is accurate, accessible, and actionable for business decisions.

5 Key Components of DataOps

1. Automated Data Pipelines

Data automatically flows from various sources through processing steps to final destinations without manual intervention. These pipelines handle data collection, cleaning, transformation, and delivery, ensuring consistent and timely data availability.
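A minimal pipeline following the collect, clean, transform, and deliver steps above can be sketched in standard-library Python. The CSV data, column names, and the dict standing in for a warehouse are hypothetical; in practice an orchestrator would schedule these steps and the destination would be a database or warehouse table.

```python
import csv
import io
from typing import Dict, Iterable, List

# Hypothetical raw export from a source system.
RAW_CSV = """user_id,country,minutes_played
1,us,30
2,,45
3,DE,not_a_number
4,de,15
"""


def extract(text: str) -> List[Dict[str, str]]:
    return list(csv.DictReader(io.StringIO(text)))


def clean(rows: Iterable[Dict[str, str]]) -> List[Dict]:
    cleaned = []
    for row in rows:
        if not row["country"]:
            continue  # drop rows missing a required field
        try:
            minutes = int(row["minutes_played"])
        except ValueError:
            continue  # drop rows with unparseable numbers
        cleaned.append({"country": row["country"].upper(), "minutes": minutes})
    return cleaned


def transform(rows: Iterable[Dict]) -> Dict[str, int]:
    totals: Dict[str, int] = {}
    for row in rows:
        totals[row["country"]] = totals.get(row["country"], 0) + row["minutes"]
    return totals


def load(totals: Dict[str, int], destination: Dict[str, int]) -> None:
    destination.update(totals)  # stand-in for a warehouse write


warehouse: Dict[str, int] = {}
load(transform(clean(extract(RAW_CSV))), warehouse)
print(warehouse)  # {'US': 30, 'DE': 15}
```

Keeping each step a separate function makes every stage independently testable and lets a scheduler rerun just the step that failed.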

2. Data Quality Management

Continuous monitoring and validation of data accuracy, completeness, and consistency through automated checks. When data quality issues arise, alerts notify teams immediately, preventing bad data from reaching business users.
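Automated quality checks are often expressed as named rules that must all pass before a batch is published. The sketch below assumes three common rule types (completeness, uniqueness, and value range); the table, columns, and thresholds are hypothetical, and the `print` call stands in for a real alerting channel. Dedicated tools such as Great Expectations or dbt tests provide richer versions of the same idea.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]


def check_completeness(rows: List[Row], column: str) -> bool:
    return all(row.get(column) is not None for row in rows)


def check_unique(rows: List[Row], column: str) -> bool:
    values = [row.get(column) for row in rows]
    return len(values) == len(set(values))


def check_range(rows: List[Row], column: str, low: float, high: float) -> bool:
    # Missing values are the completeness check's job, so skip them here.
    return all(low <= row[column] <= high for row in rows if row.get(column) is not None)


def run_quality_gate(rows: List[Row], checks: Dict[str, Callable[[List[Row]], bool]]) -> List[str]:
    """Return the names of failed checks; an empty list means the batch may be published."""
    return [name for name, check in checks.items() if not check(rows)]


batch = [
    {"order_id": 1, "amount": 25.0},
    {"order_id": 2, "amount": None},
    {"order_id": 2, "amount": -10.0},
]
checks = {
    "order_id is unique": lambda r: check_unique(r, "order_id"),
    "amount is complete": lambda r: check_completeness(r, "amount"),
    "amount in [0, 10000]": lambda r: check_range(r, "amount", 0, 10_000),
}
failures = run_quality_gate(batch, checks)
if failures:
    print("ALERT: blocking publish:", failures)
```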

3. Data Governance and Compliance

Standardized policies, procedures, and controls ensure data security, privacy, and regulatory compliance. This includes access controls, data lineage tracking, and documentation that maintain trust and satisfy legal requirements.
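Lineage tracking comes down to recording which datasets each dataset is built from, then walking that graph. A minimal sketch, assuming a hypothetical acyclic lineage map: `upstream` answers “where did this number come from?”, and `downstream` answers “what is affected if this source changes?”.

```python
from typing import Dict, List, Set

# Hypothetical lineage graph: each dataset maps to the datasets it is built from.
LINEAGE: Dict[str, List[str]] = {
    "revenue_dashboard": ["daily_revenue"],
    "daily_revenue": ["orders_clean", "fx_rates"],
    "orders_clean": ["orders_raw"],
}


def upstream(dataset: str, lineage: Dict[str, List[str]]) -> Set[str]:
    """Every dataset that `dataset` depends on, directly or indirectly."""
    seen: Set[str] = set()
    stack = list(lineage.get(dataset, []))
    while stack:
        parent = stack.pop()
        if parent not in seen:
            seen.add(parent)
            stack.extend(lineage.get(parent, []))
    return seen


def downstream(dataset: str, lineage: Dict[str, List[str]]) -> Set[str]:
    """Every dataset built, directly or indirectly, from `dataset` (assumes no cycles)."""
    children = {name for name, parents in lineage.items() if dataset in parents}
    result = set(children)
    for child in children:
        result |= downstream(child, lineage)
    return result


print(sorted(upstream("revenue_dashboard", LINEAGE)))
# ['daily_revenue', 'fx_rates', 'orders_clean', 'orders_raw']
```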

4. Collaborative Data Teams

Cross-functional teams including data engineers, scientists, analysts, and business stakeholders work together using shared tools and processes. Regular communication ensures data projects align with business needs and technical capabilities.

5. Analytics and Insights Generation

Automated creation and delivery of reports, dashboards, and analytical models that provide actionable business insights. Self-service analytics tools enable business users to explore data independently while maintaining quality standards.

DataOps Vs DevOps: 7 Critical Differences You Need to Know

1. Core Focus: Software vs. Data as Product

DevOps treats software applications as the main product, focusing on building, testing, and deploying code that users interact with directly. DataOps treats data itself as the product, emphasizing the creation of reliable, high-quality datasets and insights that drive business decisions. While DevOps asks “how do we build better software faster?”, DataOps asks “how do we deliver better data insights faster?”

DevOps Focus:

- Building and deploying software applications

- User experience and application performance

- Code quality and system reliability

- Feature delivery and bug fixes

DataOps Focus:

- Creating and managing data pipelines

- Data quality and accuracy

- Analytics and business insights

- Data accessibility and usability

2. Team Structure and Roles: Who’s on Your Team?

DevOps teams center around software development skills, bringing together people who write code, test applications, and manage systems. DataOps teams focus on data expertise, combining people who understand data engineering, analysis, and business context. The skill sets are quite different—DevOps teams think in terms of applications and user interfaces, while DataOps teams think in terms of data flows and business insights.

DevOps Team Members:

- Software developers and programmers

- Quality assurance (QA) engineers

- System administrators and operations engineers

- DevOps/Platform engineers

DataOps Team Members:

- Data engineers and architects

- Data scientists and analysts

- Business intelligence developers

- Data governance specialists

3. Lifecycle Management: SDLC vs. ADLC

DevOps follows the Software Development Lifecycle (SDLC), which moves from planning and coding to testing and deployment in a continuous loop. DataOps uses the Analytics Data Lifecycle (ADLC), which focuses on data discovery, preparation, model building, and insight delivery. While both use iterative approaches, DevOps cycles around software releases, whereas DataOps cycles around data insights and analytics outcomes.

SDLC (DevOps) Stages:

- Plan → Code → Build → Test

- Release → Deploy → Operate → Monitor

- Continuous feedback loop for software improvement

ADLC (DataOps) Stages:

- Discover → Access → Prepare → Model

- Validate → Deploy → Monitor → Insights

- Continuous feedback loop for data quality and relevance

4. Success Metrics: How Do You Measure Victory?

DevOps measures success through software delivery speed and system reliability, tracking how quickly teams can ship features without breaking things. DataOps measures success through data quality and business impact, focusing on how quickly teams can deliver accurate insights that drive decisions. DevOps cares about uptime and deployment frequency, while DataOps cares about data accuracy and time-to-insight.

DevOps Success Metrics:

- Deployment frequency and lead time

- Mean time to recovery (MTTR)

- Change failure rate

- System uptime and performance

DataOps Success Metrics:

- Data quality scores and accuracy

- Time from data request to insight delivery

- Data pipeline reliability and performance

- Business impact of data-driven decisions
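The DevOps metrics listed above can be computed from basic deployment records. This is a simplified sketch under stated assumptions: each record is a hypothetical `Deployment` with commit, deploy, and (for failures) restore timestamps; lead time runs from commit to deploy; and recovery time runs from the failed deployment to restoration. Organizations define these metrics in different ways, so treat the formulas as a starting point.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool
    restored_at: Optional[datetime] = None  # when service recovered, if the change failed


def delivery_metrics(deploys: List[Deployment], period_days: int) -> dict:
    if not deploys:
        raise ValueError("need at least one deployment")
    lead_times = [d.deployed_at - d.committed_at for d in deploys]
    failures = [d for d in deploys if d.failed]
    recoveries = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deploys_per_day": len(deploys) / period_days,
        "avg_lead_time": sum(lead_times, timedelta()) / len(lead_times),
        "change_failure_rate": len(failures) / len(deploys),
        "mttr": sum(recoveries, timedelta()) / len(recoveries) if recoveries else None,
    }


t0 = datetime(2024, 1, 1, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=2), failed=False),
    Deployment(t0, t0 + timedelta(hours=4), failed=True,
               restored_at=t0 + timedelta(hours=4, minutes=30)),
    Deployment(t0, t0 + timedelta(hours=6), failed=False),
    Deployment(t0, t0 + timedelta(hours=8), failed=False),
]
print(delivery_metrics(deploys, period_days=2))
```

Here four deployments over two days give 2 deploys per day, an average lead time of 5 hours, a 25% change failure rate, and a 30-minute recovery time.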

5. Automation Focus: Different Tools, Different Goals

DevOps automation centers on software deployment and infrastructure management, using tools that build, test, and deploy applications automatically. DataOps automation focuses on data processing and quality assurance, using tools that move, clean, and validate data automatically. Both aim to reduce manual work, but DevOps automates software processes while DataOps automates data processes.

DevOps Automation Tools:

- CI/CD pipelines (Jenkins, GitHub Actions)

- Infrastructure as Code (Terraform, Ansible)

- Containerization and orchestration (Docker, Kubernetes)

- Application monitoring (Prometheus, New Relic)

DataOps Automation Tools:

- Data pipeline orchestration (Apache Airflow, Prefect)

- Data quality testing (Great Expectations, dbt)

- Data integration platforms (Fivetran, Stitch)

- Data observability (Monte Carlo, Datadog)

6. Risk Management: What Keeps Teams Awake at Night?

DevOps teams worry most about system outages, security breaches, and failed deployments that could bring down applications and impact users directly. DataOps teams lose sleep over data quality issues, compliance violations, and incorrect insights that could lead to bad business decisions. Both face serious consequences, but DevOps risks are usually immediate and visible, while DataOps risks can be subtle and accumulate over time.

DevOps Risk Concerns:

- System downtime and service outages

- Security vulnerabilities and data breaches

- Failed deployments and rollback scenarios

- Performance degradation under load

DataOps Risk Concerns:

- Poor data quality affecting business decisions

- Data privacy and compliance violations

- Incorrect analytics leading to wrong conclusions

- Data pipeline failures causing information gaps

7. Stakeholder Expectations: Who Are You Serving?

DevOps primarily serves end users and internal technical teams, focusing on delivering software experiences that work smoothly and reliably. DataOps serves business decision-makers and analysts, focusing on providing accurate, timely data that supports strategic choices. DevOps stakeholders want applications that function well, while DataOps stakeholders want insights they can trust and act upon.

DevOps Stakeholders:

- End users and customers

- Product managers and business owners

- Internal development teams

- System administrators and IT operations

DataOps Stakeholders:

- Business executives and decision-makers

- Data analysts and business intelligence teams

- Compliance and governance teams

- Data scientists and researchers

| Aspect | DevOps | DataOps |
|---|---|---|
| Core Focus | Building and deploying software applications | Creating and delivering data insights and analytics |
| Team Structure | Software developers, QA engineers, system administrators | Data engineers, data scientists, business analysts |
| Lifecycle Management | Software Development Lifecycle (SDLC) with code-to-deployment cycles | Analytics Data Lifecycle (ADLC) with data-to-insights cycles |
| Success Metrics | Deployment frequency, system uptime, mean time to recovery | Data quality scores, time-to-insight, pipeline reliability |
| Automation Focus | CI/CD pipelines, infrastructure provisioning, application deployment | Data pipeline orchestration, quality testing, insight generation |
| Risk Management | System outages, security breaches, failed deployments | Data quality issues, compliance violations, incorrect insights |
| Stakeholder Expectations | End users, product managers, technical teams | Business executives, analysts, decision-makers |

How Do DataOps and DevOps Complement Each Other?

1. Shared Infrastructure and Platform Management

Both methodologies benefit from the same underlying infrastructure technologies like cloud platforms, containers, and orchestration tools. DevOps teams can manage the infrastructure that DataOps teams use for their data pipelines, creating efficiency and consistency across the organization.

- Reduced infrastructure costs through shared resources

- Consistent security and compliance standards

- Simplified maintenance with unified platform management

- Common tooling and expertise across teams

2. Unified CI/CD Pipeline Integration

Data workflows can be integrated into the same continuous integration and deployment pipelines that handle software releases. When application code changes, data schemas and analytics models can be automatically updated and tested together, ensuring synchronization.

- Automated data pipeline deployment alongside application releases

- Synchronized testing of code changes and data transformations

- Reduced integration conflicts between software and data components

- Streamlined release management for complete solutions
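One concrete form of this integration is a schema-compatibility check that runs in the same CI pipeline as the application tests. In the sketch below, the application’s expected columns and the pipeline’s proposed output schema are hypothetical dicts; additive changes (a new column) pass, while removed columns or changed types are flagged. In a real CI job, a non-zero exit status would fail the build.

```python
from typing import Dict, List

# Hypothetical contract: columns (and types) the application reads from a table.
APP_EXPECTS: Dict[str, str] = {"user_id": "int", "plan": "str", "renewal_date": "date"}


def schema_breaks(expected: Dict[str, str], produced: Dict[str, str]) -> List[str]:
    """List incompatibilities between what the app expects and what the pipeline produces."""
    problems = []
    for column, col_type in expected.items():
        if column not in produced:
            problems.append(f"missing column: {column}")
        elif produced[column] != col_type:
            problems.append(f"type change: {column} {col_type} -> {produced[column]}")
    return problems  # extra columns in `produced` are treated as compatible


# Schema emitted by the data pipeline after a proposed change (also hypothetical).
pipeline_output = {"user_id": "int", "plan": "str", "renewal_date": "str", "region": "str"}

problems = schema_breaks(APP_EXPECTS, pipeline_output)
exit_status = 1 if problems else 0  # a CI step would exit with this; non-zero fails the build
print(exit_status, problems)  # 1 ['type change: renewal_date date -> str']
```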

3. Cross-Functional Team Collaboration

Teams can share knowledge, tools, and best practices across both domains, creating a more versatile workforce. DevOps engineers can help DataOps teams with automation and infrastructure, while data professionals can help developers understand analytics requirements.

- Knowledge sharing between technical and analytical teams

- Cross-training opportunities for skill development

- Reduced silos between development and data operations

- More well-rounded technical capabilities organization-wide

4. Common Monitoring and Observability Framework

Both methodologies benefit from integrated monitoring that tracks application performance alongside data pipeline health. A unified dashboard can show how application issues relate to data quality problems, providing a complete system health picture.

- Holistic view of system and data health

- Faster root cause identification across domains

- Integrated alerting for both application and data issues

- Correlation analysis between software performance and data quality

5. Standardized Version Control and Change Management

Using the same Git workflows and change management processes for both code and data artifacts creates consistency and reduces learning curves. Data scientists can use familiar development processes, and data pipeline changes follow the same review procedures as code changes.

- Consistent workflows across all technical teams

- Familiar tools and procedures for all team members

- Standardized review and approval processes

- Unified change tracking and rollback capabilities

6. Shared Security and Compliance Practices

Both domains need similar security controls, access management, and compliance frameworks. Implementing unified security practices that cover both software systems and data operations is more efficient than managing separate frameworks.

- Consistent protection across all organizational assets

- Reduced complexity in security management

- Unified access controls and audit trails

- Streamlined compliance reporting and documentation

7. End-to-End Business Value Delivery

Applications and data work together to create complete business solutions that serve customers effectively. When teams collaborate on integrated solutions, they can optimize the entire value chain rather than just individual components.

- Complete business solutions rather than isolated components

- Optimized customer experiences through integrated systems

- Faster delivery of data-driven features and insights

- Enhanced product capabilities through combined expertise

8. Resource Optimization and Cost Efficiency

Sharing computing resources, storage systems, and tooling between both methodologies reduces overall infrastructure costs. Organizations can create efficient, multi-purpose platforms that serve both software development and data processing needs.

- Maximized utilization of computing and storage resources

- Reduced licensing costs through shared tooling

- Efficient platform management with unified operations

- Lower total cost of ownership for technical infrastructure

9. Accelerated Innovation Cycles

When software features and data insights are developed together, organizations can innovate faster. New product ideas can be prototyped with both technical implementation and supporting analytics considered from the start.

- Faster time-to-market for data-driven products

- More robust solutions with integrated design approach

- Rapid prototyping with both software and data components

- Enhanced competitive advantage through faster delivery

10. Consistent Quality Standards

Applying similar quality gates, testing frameworks, and review processes across both software and data ensures consistent standards organization-wide. This creates a unified culture of quality that spans all technical deliverables.

- Uniform quality standards across all technical work

- Integrated testing approaches for software and data

- Consistent review processes and approval workflows

- Organization-wide culture of quality and excellence

DataOps vs DevOps: Top Tools and Tech Stack

DevOps Tool Ecosystem

1. Jenkins

Open-source automation server that orchestrates continuous integration and deployment pipelines. Jenkins connects code repositories to production environments, automatically building, testing, and deploying applications while providing detailed feedback on build status and failures.

2. Docker

Containerization platform that packages applications with their dependencies into lightweight, portable containers. Docker ensures applications run consistently across different environments, from development laptops to production servers, eliminating “it works on my machine” problems.

3. Kubernetes

Container orchestration platform that manages, scales, and deploys containerized applications across clusters of machines. Kubernetes automates load balancing, resource allocation, and failover, ensuring applications remain available and performant under varying workloads.

4. Terraform

Infrastructure as Code tool that defines and provisions cloud resources using declarative configuration files. Terraform enables teams to create reproducible, version-controlled infrastructure deployments across multiple cloud providers with consistent, predictable results.

5. Prometheus

Monitoring and alerting toolkit that scrapes metrics from applications and infrastructure at regular intervals. Prometheus stores them as time-series data, offers a powerful query language (PromQL), and integrates with visualization tools such as Grafana to help teams understand system performance and health.

DataOps Tool Landscape

1. Apache Airflow

Workflow orchestration platform that schedules and monitors complex data pipelines using Python code. Airflow provides visual pipeline management, dependency handling, and retry mechanisms, ensuring reliable execution of data processing tasks across distributed systems.
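The dependency handling and retry behavior described for Airflow can be illustrated without Airflow itself. This standard-library sketch (Python 3.9+ for `graphlib`) runs hypothetical tasks in dependency order and retries failures; it is not Airflow’s API. In Airflow, dependencies are declared between operators with `>>`, and retries are configured with the operator’s `retries` argument.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_dag(tasks: Dict[str, Callable[[], None]],
            deps: Dict[str, Set[str]],
            max_retries: int = 2) -> List[str]:
    """Run tasks in dependency order, retrying each failed task up to max_retries times."""
    completed = []
    for name in TopologicalSorter(deps).static_order():  # upstream tasks come first
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                completed.append(name)
                break
            except Exception:
                if attempt == max_retries:
                    raise  # give up: downstream tasks never run
    return completed


calls = {"count": 0}


def flaky_extract() -> None:
    # Hypothetical source that fails once, then succeeds.
    calls["count"] += 1
    if calls["count"] < 2:
        raise ConnectionError("source temporarily unavailable")


tasks = {"extract": flaky_extract, "transform": lambda: None, "load": lambda: None}
deps = {"transform": {"extract"}, "load": {"transform"}}  # task -> its upstream tasks
order = run_dag(tasks, deps)
print(order)  # ['extract', 'transform', 'load']
```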

2. dbt (Data Build Tool)

Analytics engineering tool that transforms raw data into analysis-ready datasets using SQL and version control. dbt enables data teams to apply software engineering practices like testing, documentation, and collaboration to data transformation workflows.

3. Great Expectations

Data quality framework that validates data accuracy, completeness, and consistency through automated testing. Great Expectations helps teams catch data issues early, maintain data quality standards, and build confidence in analytical datasets and business reports.

4. Apache Kafka

Distributed streaming platform that handles real-time data feeds between applications and systems. Kafka enables high-throughput data ingestion, processing, and distribution, supporting both batch and streaming data architectures for modern analytics platforms.
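Kafka preserves ordering within a partition, so producers commonly route every event with the same key to the same partition. A minimal sketch of key-based partitioning, assuming CRC32 as the hash (Kafka’s Java client uses murmur2 by default) and a hypothetical stream of user events:

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple


def partition_for(key: str, num_partitions: int) -> int:
    # Deterministic hash, so the same key always lands on the same partition.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(events: List[Tuple[str, str]], num_partitions: int) -> Dict[int, List[Tuple[str, str]]]:
    """Append each (key, value) event to its key's partition, preserving arrival order."""
    partitions: Dict[int, List[Tuple[str, str]]] = defaultdict(list)
    for key, value in events:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return dict(partitions)


events = [("user-1", "play"), ("user-2", "skip"), ("user-1", "pause"), ("user-1", "play")]
for partition, records in sorted(route(events, num_partitions=3).items()):
    print(partition, records)
```

Consumers reading a single partition therefore see all of one user’s events in the order they were produced, while different users can still be processed in parallel across partitions.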

5. Looker

Business intelligence platform that creates self-service analytics through modeling and visualization tools. Looker enables business users to explore data independently while maintaining governance and consistency through centralized data definitions and access controls.

FLIP – Redefining DataOps with AI-Driven Automation

FLIP transforms traditional data operations by integrating artificial intelligence directly into your data pipelines. It automates complex workflows, reduces manual effort, and helps teams get faster, clearer insights—without getting buried in code. From data integration to transformation, it brings speed, accuracy, and control to every step.

With its intuitive drag-and-drop interface and ready-to-use templates, FLIP allows users to automate routine data tasks, freeing up time for more strategic initiatives. Additionally, FLIP enhances data quality by implementing sophisticated validation and cleansing rules, ensuring accuracy and completeness. Its secure, unified platform also improves data accessibility, offering role-based access to ensure that the right data is available to the right people.

Case Studies: FLIP’s Accounts Payable Automation Capabilities

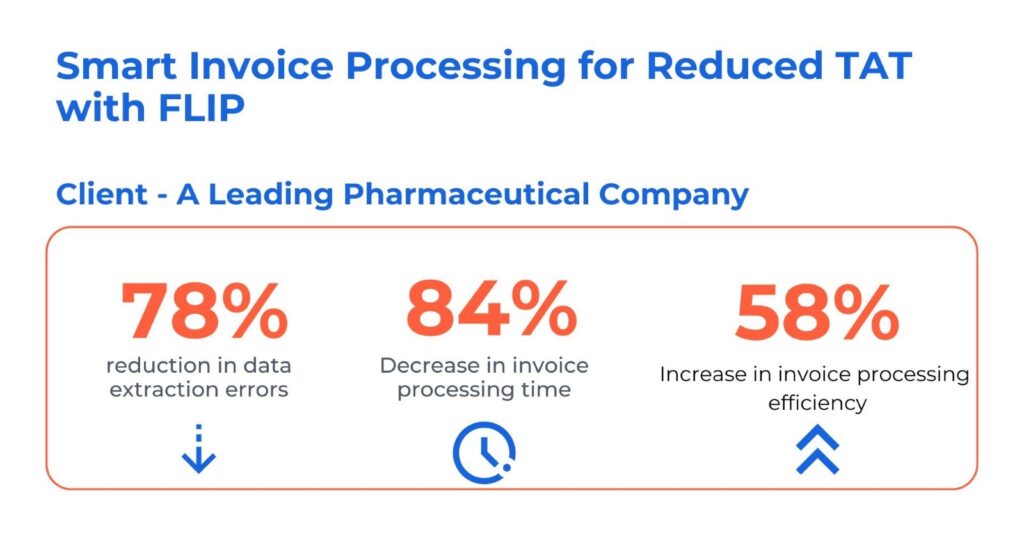

1. Smart Invoice Processing for Reduced TAT

The client is a leading pharmaceutical company in the USA. They faced challenges with a complex invoice tracking system spanning 20 disparate portals and email channels. The diverse document formats and manual data extraction processes significantly increased error rates and financial risks across their invoice management workflow.

By leveraging the capabilities of FLIP, the team at Kanerika helped resolve the client’s challenges by providing the following solutions:

- Centralized invoice collection from various state portals and emails, enhancing payment efficiency and financial tracking

- Standardized diverse invoice formats into a unified data structure, streamlining processing

- Instituted format-specific validation checks for each invoice, ensuring accuracy and reducing financial errors

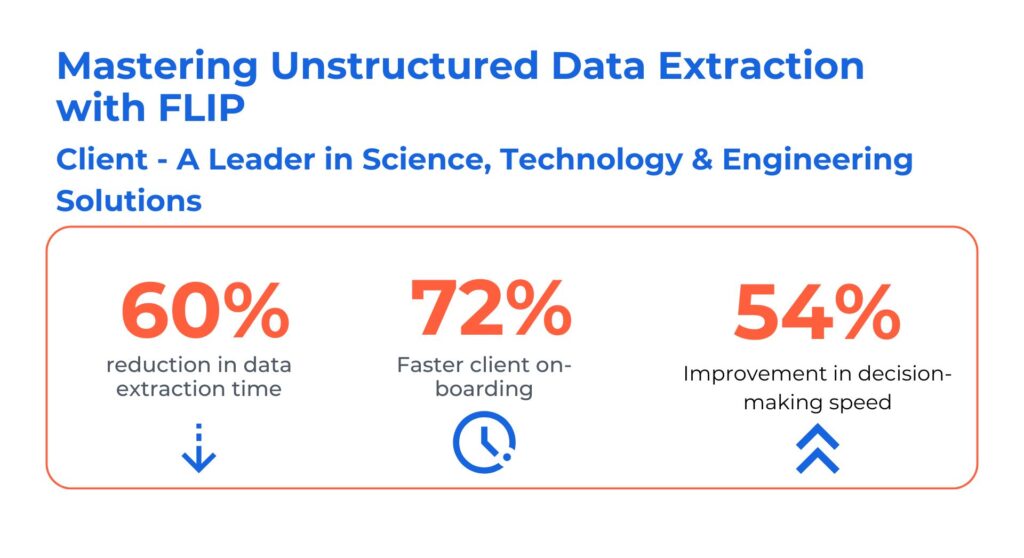

2. Mastering Unstructured Data Extraction with FLIP

The client is a global leader in science, technology, and engineering solutions, providing services to customers in over 80 countries. The company faced challenges with streamlining operations and improving efficiency. They needed a state-of-the-art AI-driven data extraction solution to automate data processing from complex, unstructured documents.

By leveraging FLIP’s advanced capabilities, Kanerika offered the following solutions:

- FLIP efficiently extracted content from PDFs and Excel files, including complex tabular data and AI-generated summaries, meeting the client’s specific requirements.

- The extracted data was stored in structured formats (XLS, JSON, SQL tables), enabling easy analysis and integration with the client’s existing systems.

- FLIP’s intuitive drag-and-drop interface and auto-mapping features allowed their team to create data pipelines easily, while the Monitor Page streamlined exception handling and error management.

Kanerika: Driving Business Growth with Data and AI Excellence

Kanerika helps businesses turn their data into real results using the latest in agentic AI, machine learning, and advanced data analytics. With deep expertise in data governance, we support industries like manufacturing, retail, finance, and healthcare to improve productivity, reduce costs, and make smarter decisions.

Whether it’s optimizing supply chains, improving customer experiences, or boosting operational efficiency, our AI-driven solutions are designed to meet practical needs across sectors.

We also place a strong focus on quality and security. With certifications like CMMI, ISO, and SOC, we follow the highest standards in project delivery and data protection—ensuring reliable and secure implementation at every step.

At Kanerika, we help businesses move forward with confidence, using data and AI the smart way.

FAQs

What is the salary of DataOps vs DevOps?

DataOps and DevOps engineer salaries are broadly comparable and vary widely with experience, location, and specific skills. Strong demand for data expertise can push some DataOps salaries slightly higher, although senior DevOps engineers often earn more than their DataOps counterparts. In the end, compensation depends on the individual’s skill set and local market conditions more than on the job title.

What is the difference between DataOps and CI/CD?

DataOps focuses on streamlining the entire data lifecycle, from ingestion to analysis, emphasizing collaboration and automation to ensure data quality and timely insights. CI/CD, conversely, centers on rapidly deploying and updating *software* applications, automating the build, test, and release processes. While both boost automation, DataOps tackles data challenges while CI/CD tackles software deployment. They can work together, with CI/CD pipelines supporting DataOps workflows.

What is meant by DataOps?

DataOps is all about streamlining the entire data lifecycle, from collection to analysis. It uses Agile and DevOps principles to automate processes, improve collaboration, and boost the speed and reliability of delivering data-driven insights. Think of it as DevOps, but specifically for data. The goal is faster, better, and more trustworthy data for decision-making.

What is the difference between DevSecOps and DataOps?

DevSecOps integrates security throughout the *entire* software development lifecycle, ensuring applications are secure from the start. DataOps, conversely, focuses on the *reliable and rapid delivery of data*, emphasizing automation and collaboration to improve data quality and accessibility. Think of DevSecOps as securing the *how* of software and DataOps as optimizing the *what* of data. They’re distinct but can complement each other in a holistic approach to secure and efficient digital operations.

Are DataOps and DevOps the same?

No, DataOps and DevOps aren’t the same, though they share a common philosophy. DevOps focuses on the speed and reliability of software development and deployment. DataOps applies that same agile, collaborative approach to data pipelines and analytics processes, emphasizing data quality and accessibility throughout. Essentially, DataOps is DevOps adapted to the particular challenges of data and analytics work.

Is DevOps more dev or ops?

DevOps isn’t strictly “more” dev or ops; it’s the bridge between them. It emphasizes collaboration and shared responsibility, blurring traditional boundaries. The balance shifts depending on the specific organization and project needs, but the goal is unified, efficient software delivery.

What is the role of DataOps?

DataOps is all about streamlining the entire data lifecycle, from collection to insights. It uses agile principles and automation to ensure faster, more reliable data delivery. Think of it as DevOps, but specifically for data – aiming for continuous integration and delivery of data products. Ultimately, it’s about getting trustworthy data into the hands of those who need it, quickly and efficiently.