One widely cited statistic holds that data-driven companies are 23 times more likely to acquire customers, which underscores the value of using data effectively in business operations. Given the enormous volumes of data that businesses handle today, the ability to clean, organize, and transform raw data into actionable insights can be the difference between leading the market and lagging behind. Data preprocessing covers all of these tasks.

It is the crucial first step in the data analysis pipeline, ensuring that the quality and structure of data are optimized for better decision-making. By refining raw data into a format suitable for analysis, businesses can uncover the maximum value of their data assets, leading to improved outcomes and strategic advantages.

What is Data Preprocessing?

Data preprocessing refers to the process of cleaning, transforming, and organizing raw data into a structured format suitable for analysis. It involves steps like removing duplicates, handling missing values, scaling numerical features, encoding categorical variables, and more. The goal is to enhance data quality, making it easier to extract meaningful insights and patterns during analysis. Effective data preprocessing is crucial for accurate modeling and decision-making in various fields like machine learning, data mining, and statistical analysis.

The Role of Data Preprocessing in Machine Learning and Data Analysis

Data preprocessing is a crucial early stage of any data science project, where insights must be extracted from raw data. To build accurate and dependable machine learning models and analyses, raw data must first be transformed into a usable format. Here is why preprocessing matters:

1. Improved Data Quality

Real-world data is rarely pristine. Missing values, inconsistencies, and outliers can all skew results and hinder analysis. Data preprocessing addresses these issues by cleaning and refining the data, ensuring its accuracy and consistency. This foundation of high-quality data allows subsequent analysis and models to function optimally.

2. Enhanced Model Performance

Machine learning models rely on patterns within the data for learning and prediction. However, inconsistencies and noise can mislead the model. Data preprocessing removes these roadblocks, allowing the model to focus on the true underlying relationships within the data. This translates to improved model performance, with more accurate predictions and reliable results.

3. Efficient Data Processing

Unprocessed data can be cumbersome and time-consuming for algorithms to handle. Data preprocessing techniques like scaling and dimensionality reduction streamline the data, making it easier for models to process and analyze. This translates to faster training times and improved computational efficiency.

4. Meaningful Feature Extraction

Raw data often contains irrelevant or redundant information that can cloud the true insights. Data preprocessing, through techniques like feature selection and engineering, helps identify the most relevant features that contribute to the analysis or prediction. This targeted focus allows models to extract the most meaningful insights from the data.

5. Interpretability and Generalizability

Well-preprocessed data leads to models that are easier to interpret. By understanding the features and transformations applied, data scientists can gain deeper insights into the model’s decision-making process. Additionally, data preprocessing helps models generalize better to unseen data, ensuring their predictions are relevant beyond the training set.

6. Reduced Overfitting

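Preprocessing steps such as removing noise, selecting relevant features, and reducing dimensionality help reduce overfitting, where the model performs well on training data but poorly on new, unseen data. Strictly speaking, regularization and cross-validation are modeling and evaluation techniques rather than preprocessing steps, but they build on well-prepared, properly split data to detect and limit overfitting. Together, these improve the generalization of the model.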

7. Faster Processing

Preprocessing optimizes data for faster processing by removing redundancies, transforming data types, and organizing the data in a format that is efficient for machine learning algorithms.

8. Compatibility

Different algorithms have specific requirements regarding data format and structure. Preprocessing ensures that the data is compatible with the chosen algorithms, maximizing their effectiveness.

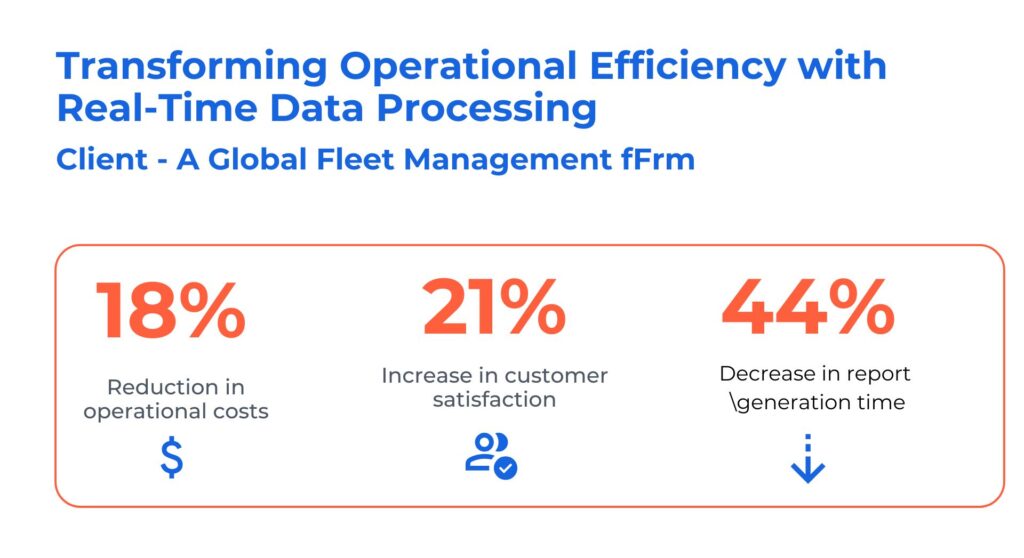

Case Study 1. Transforming Operational Efficiency with Real-time Data Processing

Business Context

The client is a leading provider of GPS fleet tracking and management solutions. They faced challenges due to the intricate nature of real-time data integration issues associated with receiving vehicle data from various partners. They sought solutions to bolster their fleet management capabilities and increase operational efficiency.

Kanerika helped them address their problems using advanced tools and technologies like Power BI, Microsoft Azure, and Informatica. Here are the solutions we offered:

- Developed self-service analytics for proactive insights, streamlining operations, and enhancing decision-making

- Built an intuitive “Report Builder” for custom KPI reports, boosting adaptability and empowering users with real-time data processing

- Reduced engineering dependency and increased process efficiency with new report-generation capabilities

The 7-Step Process for Data Preprocessing

Step 1: Data Collection

Gathering Raw Data: This entails getting information from a range of sources, including files, databases, APIs, sensors, surveys, and more. The information gathered may be structured (spreadsheets, databases) or unstructured (text, photos).

Sources of Data: Data sources include company databases, CRM systems, and external sources including social media, public datasets, and third-party APIs. Choosing trustworthy sources is essential to guaranteeing the authenticity and caliber of the information.

Importance of Data Quality at the Collection Stage: The entire process of data analysis is impacted by the quality of the data at the collection stage. Inaccurate findings, defective models, and skewed insights can emerge from poor data quality. As soon as data is collected, it is crucial to make sure it is correct, comprehensive, consistent, and pertinent.

Step 2: Data Cleaning

Handling Missing Values: Missing values are common, and they must be handled to prevent bias in the analysis. You can delete the rows or columns that contain missing data, fill in the gaps with the mean, median, or mode, or use interpolation to estimate missing values from existing data patterns.
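
The three options described here can be sketched with pandas. This is a minimal illustration, not a recommended recipe: the column names and values are made up, and the right choice depends on how much data is missing and why.

```python
import numpy as np
import pandas as pd

# Hypothetical sample data with gaps in both columns.
df = pd.DataFrame({
    "age": [25.0, np.nan, 35.0, 40.0],
    "city": ["Pune", "Delhi", None, "Delhi"],
})

# Option 1: drop every row that has at least one missing value.
dropped = df.dropna()

# Option 2: fill numeric gaps with the median and categorical gaps with the mode.
filled = df.copy()
filled["age"] = filled["age"].fillna(filled["age"].median())
filled["city"] = filled["city"].fillna(filled["city"].mode()[0])

# Option 3: estimate numeric gaps by linear interpolation between neighbours.
interpolated = df["age"].interpolate(method="linear")
```

Dropping rows is the simplest option but discards information, so it tends to suit cases where only a small share of rows is affected.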

Dealing with Noisy Data: Noisy data contains outliers or errors that can skew analysis. Techniques like binning (grouping nearby values into bins and smoothing each bin), regression (fitting a function to the data and replacing values with the fitted ones), or clustering (grouping similar data points so that outliers stand apart) can help in handling noisy data effectively.
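
As a rough sketch of the binning approach, the snippet below splits a made-up series into three equal-frequency bins and replaces each value with the mean of its bin. The data and the choice of three bins are assumptions for illustration only.

```python
import pandas as pd

# Hypothetical noisy readings, already sorted for readability.
values = pd.Series([4, 8, 9, 15, 21, 21, 24, 25, 26])

# Equal-frequency binning: three bins with three values each.
bins = pd.qcut(values, q=3, labels=False)

# Smoothing by bin means: replace each value with the mean of its bin.
smoothed = values.groupby(bins).transform("mean")
```

Here the nine readings collapse to three distinct values (7, 19, and 25), which removes small fluctuations at the cost of some detail.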

Eliminating Duplicates: Duplicate records can distort analysis results. Identifying and removing duplicate entries ensures data accuracy and prevents redundancy in analysis.
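
A minimal pandas sketch of duplicate removal follows. The customer table is hypothetical; the point it illustrates is that formatting differences often hide duplicates, so it can help to normalize values before comparing rows.

```python
import pandas as pd

# Hypothetical customer records; the second and third rows differ only in formatting.
df = pd.DataFrame({
    "customer_id": [101, 102, 102, 103],
    "email": ["a@example.com", "b@example.com", " B@Example.com", "c@example.com"],
})

# Normalize formatting first so near-identical rows compare as equal.
df["email"] = df["email"].str.strip().str.lower()

# Count, then drop, exact duplicates, keeping the first occurrence.
n_duplicates = int(df.duplicated().sum())
deduped = df.drop_duplicates(keep="first").reset_index(drop=True)
```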

Step 3: Data Integration

Combining Data from Multiple Sources: Data integration involves merging datasets from different sources to create a unified dataset for analysis. This step requires handling schema integration (matching data schemas) and addressing redundancy to avoid data conflicts.

Ensuring Consistency: Standardizing data formats, resolving naming conflicts, and addressing inconsistencies in data values ensures data consistency across integrated datasets.
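
To make schema integration and consistency checks concrete, here is a small pandas sketch that merges two made-up sources. The table names, column names, and values are all assumptions; real integrations usually involve many more conflicts than one mismatched key.

```python
import pandas as pd

# Two hypothetical sources describing the same customers with different schemas.
crm = pd.DataFrame({
    "CustomerID": [1, 2, 3],
    "signup_date": ["2024-01-05", "2024-02-05", "2024-03-01"],
})
billing = pd.DataFrame({
    "cust_id": [1, 2, 4],
    "amount_usd": [120.0, 80.5, 42.0],
})

# Schema integration: map both sources onto one shared key name.
crm = crm.rename(columns={"CustomerID": "customer_id"})
billing = billing.rename(columns={"cust_id": "customer_id"})

# Consistency: parse date strings into a single datetime type.
crm["signup_date"] = pd.to_datetime(crm["signup_date"])

# An outer join keeps unmatched rows; the indicator column records each row's origin.
merged = crm.merge(billing, on="customer_id", how="outer", indicator=True)
```

The `_merge` column produced by `indicator=True` makes it easy to spot records that exist in only one source, which is often the first sign of an integration problem.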

Step 4: Data Transformation

Normalization: Normalization rescales data to a common range or distribution, which improves the performance of many machine learning algorithms. Common techniques are Min-Max scaling (rescaling data to a range between 0 and 1), Z-Score scaling (subtracting the mean and dividing by the standard deviation), and Decimal Scaling (dividing by a power of 10 to shift the decimal point).
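
The three normalization techniques can be written out directly in NumPy. The sketch below uses a made-up feature vector and the population standard deviation for the z-score; both are assumptions for illustration.

```python
import numpy as np

# Hypothetical feature values.
x = np.array([150.0, 300.0, 450.0, 600.0, 750.0])

# Min-Max scaling: map the smallest value to 0 and the largest to 1.
min_max = (x - x.min()) / (x.max() - x.min())

# Z-score scaling: subtract the mean and divide by the standard deviation.
z_score = (x - x.mean()) / x.std()

# Decimal scaling: divide by the smallest power of 10 that brings every |value| below 1.
j = int(np.floor(np.log10(np.abs(x).max()))) + 1
decimal_scaled = x / 10 ** j
```

For this input, Min-Max scaling yields values from 0 to 1 in steps of 0.25, and decimal scaling divides by 1000 to give values between 0.15 and 0.75.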

Aggregation: Aggregating data involves summarizing information to a higher level (e.g., calculating totals, averages) for easier analysis and interpretation.
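
As a small illustration of aggregation, the pandas sketch below rolls hypothetical transaction rows up to one summary row per region. The column names and figures are invented for the example.

```python
import pandas as pd

# Hypothetical transaction-level sales data.
sales = pd.DataFrame({
    "region": ["North", "North", "South", "South", "South"],
    "amount": [100.0, 150.0, 80.0, 120.0, 100.0],
})

# Summarize individual transactions into one row per region.
summary = sales.groupby("region")["amount"].agg(
    total="sum", average="mean", orders="count"
)
```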

Feature Engineering: Creating new features or variables from existing data enhances model performance and uncovers hidden patterns. Feature engineering involves transforming data, combining features, or creating new variables based on domain knowledge.
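
Two common kinds of feature engineering, combining columns and decomposing a timestamp, might look like this in pandas. The order table is hypothetical, and which derived features are actually useful depends on domain knowledge.

```python
import pandas as pd

# Hypothetical order records.
orders = pd.DataFrame({
    "order_date": pd.to_datetime(["2024-03-01", "2024-03-02", "2024-03-04"]),
    "price": [20.0, 35.0, 12.5],
    "quantity": [3, 1, 4],
})

# Combine existing features: revenue per order.
orders["revenue"] = orders["price"] * orders["quantity"]

# Decompose a timestamp into parts a model can use directly.
orders["day_of_week"] = orders["order_date"].dt.dayofweek  # Monday = 0
orders["is_weekend"] = orders["day_of_week"] >= 5
```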

Step 5: Data Reduction

Reducing Dataset Size: Large datasets can be computationally intensive. Data reduction techniques like Principal Component Analysis (PCA) reduce the dimensionality of data while retaining essential information, making analysis faster and more manageable.
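
To show what PCA does under the hood, here is a minimal NumPy sketch based on the singular value decomposition. The synthetic data is an assumption, built so that the third feature is nearly redundant; in practice a library implementation such as the PCA class in scikit-learn would typically be used instead.

```python
import numpy as np

# Hypothetical data: 100 samples, 3 features, where the third feature is
# almost a linear combination of the first two (so it adds little information).
rng = np.random.default_rng(0)
base = rng.normal(size=(100, 2))
third = base @ np.array([0.5, -0.3]) + rng.normal(scale=0.01, size=100)
X = np.column_stack([base, third])

# Center the data, then take the SVD; the rows of Vt are the principal directions.
X_centered = X - X.mean(axis=0)
U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)

# Fraction of total variance explained by each component.
explained_ratio = S**2 / np.sum(S**2)

# Keep the top two components and project the data onto them.
k = 2
X_reduced = X_centered @ Vt[:k].T
```

Because the third feature is almost fully determined by the other two, the first two components capture nearly all of the variance, and the dataset shrinks from three columns to two with very little loss.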

Balancing Variables: Balancing variables ensures that features on very different scales contribute comparably to the analysis. Scalers such as scikit-learn’s StandardScaler, RobustScaler, and MaxAbsScaler put variables on comparable scales, preventing certain features from dominating the analysis purely because of their magnitude.
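
The three scalers differ mainly in how they react to extreme values. The NumPy sketch below reproduces the arithmetic that, to our understanding, scikit-learn's StandardScaler, RobustScaler (with its default quartile range), and MaxAbsScaler apply with default settings; the input vector with one outlier is made up.

```python
import numpy as np

# Hypothetical feature with one extreme value.
x = np.array([1.0, 2.0, 3.0, 4.0, 100.0])

# Standard scaling: zero mean, unit variance (sensitive to the outlier).
standard = (x - x.mean()) / x.std()

# Robust scaling: center on the median and divide by the interquartile range.
q1, median, q3 = np.percentile(x, [25, 50, 75])
robust = (x - median) / (q3 - q1)

# Max-abs scaling: divide by the largest absolute value, giving a range of [-1, 1].
max_abs = x / np.abs(x).max()
```

With robust scaling, the four ordinary values land between -1 and 0.5 and only the outlier stays far away, whereas max-abs scaling squeezes the ordinary values into the range 0.01 to 0.04.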

Step 6: Encoding Categorical Variables

Converting Categories into Numerical Values: Machine learning algorithms often require numerical data. Techniques like Label Encoding (assigning numerical labels to categories) and One-Hot Encoding (creating binary columns for each category) transform categorical variables into numerical format.
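
Both encodings are available in pandas. The sketch below uses a made-up color column; note that the integer codes come from alphabetical ordering of the categories here, which is an artifact of the example rather than a meaningful ranking.

```python
import pandas as pd

# Hypothetical categorical column.
df = pd.DataFrame({"color": ["red", "green", "blue", "green"]})

# Label encoding: one integer per category (categories are sorted alphabetically here).
df["color_label"] = df["color"].astype("category").cat.codes

# One-hot encoding: one binary column per category.
one_hot = pd.get_dummies(df["color"], prefix="color", dtype=int)
```

Label encoding is compact but implies an order (blue < green < red) that many models will treat as real, so one-hot encoding is often the safer choice for categories with no natural order.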

Handling Imbalanced Data: Imbalanced datasets where one class is significantly more prevalent than others can lead to biased models. Strategies like Oversampling (increasing minority class samples), Undersampling (decreasing majority class samples), or Hybrid Methods (combining oversampling and undersampling) balance data distribution for more accurate model training.
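
Random oversampling and undersampling can be sketched with plain pandas. The dataset below is hypothetical, and dedicated libraries offer more sophisticated resampling strategies than the simple random draws shown here.

```python
import pandas as pd

# Hypothetical imbalanced dataset: 8 negatives, 2 positives.
df = pd.DataFrame({
    "feature": range(10),
    "label": [0] * 8 + [1] * 2,
})

majority = df[df["label"] == 0]
minority = df[df["label"] == 1]

# Random oversampling: draw minority rows with replacement until the classes match.
minority_upsampled = minority.sample(n=len(majority), replace=True, random_state=42)
balanced = pd.concat([majority, minority_upsampled]).reset_index(drop=True)

# Random undersampling: keep only as many majority rows as there are minority rows.
majority_downsampled = majority.sample(n=len(minority), random_state=42)
undersampled = pd.concat([majority_downsampled, minority]).reset_index(drop=True)
```

Resampling is usually applied to the training set only, so that the validation and test sets still reflect the real class distribution.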

Step 7: Splitting the Dataset

Creating Training, Validation, and Test Sets: Splitting the dataset into training, validation, and test sets is crucial for evaluating model performance. The training set is used to train the model, the validation set for tuning hyperparameters, and the test set for final evaluation on unseen data.

Importance of Each Set: The training set helps the model learn patterns, the validation set helps optimize model performance, and the test set assesses the model’s generalization to new data.

Methods for Splitting Data Effectively: Techniques like random splitting, stratified splitting (maintaining class distribution), and cross-validation (repeatedly splitting data for validation) ensure effective dataset splitting for robust model evaluation.
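
A stratified three-way split can be sketched in pandas by sampling within each class. The 60/20/20 proportions, the column names, and the data are assumptions for illustration; libraries such as scikit-learn provide ready-made helpers for the same job.

```python
import pandas as pd

# Hypothetical labelled dataset: 80 rows of class 0 and 20 rows of class 1.
df = pd.DataFrame({
    "feature": range(100),
    "label": [0] * 80 + [1] * 20,
})

# Stratified 60/20/20 split: sample within each class so every subset
# keeps the original 80:20 class ratio.
train = df.groupby("label").sample(frac=0.6, random_state=0)
rest = df.drop(train.index)
validation = rest.groupby("label").sample(frac=0.5, random_state=0)
test = rest.drop(validation.index)
```

Each subset ends up with 20% of its rows in class 1, matching the full dataset, and no row appears in more than one subset.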

8 Best Tools and Libraries for Data Preprocessing

1. Pandas (Python)

Pandas is a powerful and versatile open-source library in Python specifically designed for data manipulation and analysis. It offers efficient data structures like DataFrames (tabular data) and Series (one-dimensional data) for easy data wrangling. Pandas provides a rich set of functions for cleaning, transforming, and exploring data.

2. NumPy (Python)

NumPy serves as the foundation for scientific computing in Python. It provides high-performance multidimensional arrays and efficient mathematical operations. While not primarily focused on data preprocessing itself, NumPy underpins many data manipulation functionalities within Pandas and other libraries.

3. Scikit-learn (Python)

Scikit-learn is a popular machine learning library in Python that offers a comprehensive suite of tools for data preprocessing tasks. It provides functionalities for handling missing values, encoding categorical variables, feature scaling, and dimensionality reduction techniques like PCA (Principal Component Analysis).

4. TensorFlow Data Validation (TFDV) (Python)

TensorFlow Data Validation (TFDV) is a TensorFlow library specifically designed for data exploration, validation, and analysis. It helps identify data quality issues like missing values, outliers, and schema inconsistencies. While not as widely used as the others on this list, TFDV can be a valuable tool for projects built on TensorFlow.

5. Apache Spark (Scala/Java/Python)

For large-scale data processing, Apache Spark is a powerful distributed computing framework. It allows parallel processing of data across clusters of machines. While Spark itself isn’t solely focused on preprocessing, the pandas API on Spark (originally developed as the Koalas project and bundled with PySpark since Spark 3.2) provides functionality similar to Pandas, enabling efficient data cleaning and transformation on massive datasets.

6. OpenRefine

OpenRefine (formerly Google Refine) is a free, open-source tool for data cleaning and transformation. It provides features for exploring data, reconciling data discrepancies, and transforming data formats.

7. Dask

Dask is a parallel computing library in Python that allows for efficient handling of large datasets. It provides capabilities for parallel execution of data preprocessing tasks, making it suitable for big data processing.

8. Apache NiFi

Apache NiFi is a data integration and processing tool that offers visual design capabilities for building data flows. It supports data routing, transformation, and enrichment, making it useful for data preprocessing pipelines.

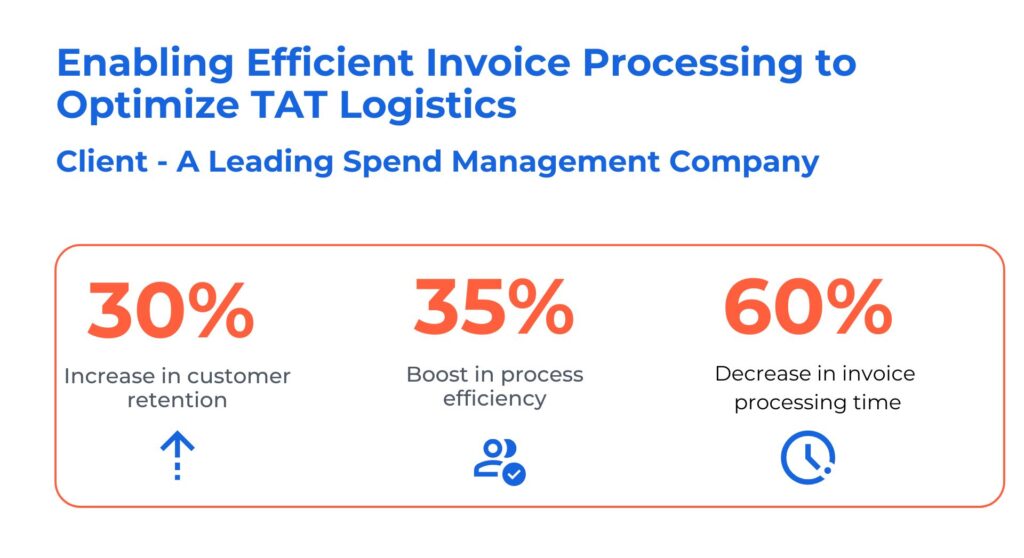

Case Study 2. Enabling Efficient Invoice Processing to Optimize TAT Logistics

The client is a global leader in Spend Management. They faced issues with delays in invoice processing which affected their service delivery, impacting the overall revenue.

Kanerika offered efficient solutions to facilitate faster invoice processing and increase their overall efficiency.

- Leveraged Informatica B2B and PowerCenter to segment large files for efficient processing, mitigating memory overflow and network timeouts

- Implemented FileSplitter to create manageable output files by splitting based on record count and size, facilitating downstream processing

- Achieved rapid processing, completing tasks in under a day, and maintained efficiency by creating separate queues for different file sizes

Choose Kanerika for Expert Data Processing, Analysis, and Management Solutions

At Kanerika, a renowned data and AI services company, we specialize in helping businesses across various sectors streamline their data workflows. From data consolidation and modeling to transformation and advanced analysis, our innovative solutions are designed to address critical business challenges and unlock actionable insights. Our custom-built strategies have successfully resolved bottlenecks, enabling our clients to achieve predictable ROI and drive growth.

By leveraging powerful tools like Power BI and Microsoft Fabric, along with cutting-edge technologies, we deliver tailored data and AI solutions that give you a competitive edge. Whether it’s enhancing data management processes or optimizing data analysis, Kanerika ensures that your business is equipped with the tools and insights needed to thrive. Partner with us to unlock the full potential of your data and stay ahead of the curve in an increasingly data-driven world.

Frequently Asked Questions

What is meant by data preprocessing?

Data preprocessing is like cleaning and preparing your ingredients before cooking. It involves transforming raw data into a format suitable for analysis, removing inconsistencies and errors. This ensures your analysis is accurate and reliable, giving you better insights. Think of it as laying the foundation for a strong analytical model.

What are the 5 major steps of data preprocessing?

Data preprocessing cleans and prepares your raw data for analysis. The five key steps involve: 1) data cleaning (handling missing values and outliers); 2) data transformation (scaling, normalization); 3) data reduction (dimensionality reduction techniques); 4) data integration (combining datasets); and 5) data discretization (converting continuous data into categories). These steps ensure your data is consistent, relevant, and ready for modeling.
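
Discretization, the last step in this list, can be illustrated with a short pandas sketch. The age values, bin edges, and labels are made up for the example.

```python
import pandas as pd

# Hypothetical ages.
ages = pd.Series([5, 17, 30, 45, 70])

# Convert a continuous variable into labelled categories using fixed bin edges.
# Each bin includes its right edge: (0, 18], (18, 65], (65, 120].
age_group = pd.cut(
    ages,
    bins=[0, 18, 65, 120],
    labels=["child", "adult", "senior"],
)
```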

What are the 4 major tasks in data preprocessing?

Data preprocessing cleans and prepares your data for analysis. The four main tasks are: data cleaning (handling missing values and outliers), data transformation (scaling, normalization), data reduction (dimensionality reduction), and data integration (combining data from different sources). Essentially, it’s about making your data accurate, consistent, and usable for your models.

What are the 5 steps in data preparation?

Data prep isn’t a rigid five-step process, but key stages always include: gathering & cleaning your raw data (handling missing values and inconsistencies); exploring its structure and identifying relevant features; transforming it (e.g., scaling, encoding); potentially reducing dimensionality for efficiency; and finally, validating your prepared data to ensure it’s fit for your model. The exact order and emphasis may vary based on your project needs.

What are the data preprocessing methods?

Data preprocessing cleans and prepares your raw data for analysis. This involves handling missing values (filling or removing), transforming variables (like scaling or encoding categorical data), and potentially reducing dimensionality to improve model performance and accuracy. Essentially, it’s about making your data usable and reliable.

How to handle missing data?

Missing data is a common problem in datasets. Strategies depend on the *type* of missingness (random or systematic) and the *amount* missing. Common approaches include deleting the affected rows or columns, imputation (filling in missing values), or using models that are robust to missing data. Always document your chosen method and its potential impact on results.

What is data preparation or preprocessing?

Data preparation, or preprocessing, is like cleaning and organizing your ingredients before cooking. It transforms raw data into a usable format for analysis, handling missing values, inconsistencies, and outliers. This crucial step ensures your analysis is accurate and reliable, yielding meaningful results. Think of it as laying the foundation for a strong analytical structure.