According to Gartner, poor-quality data costs organizations an average of nearly $15 million per year. Understanding good data practices, and having the tools to produce good data, is crucial for any data-dependent organization. Data quality refers to the condition of data based on factors such as accuracy, consistency, completeness, reliability, and relevance. It is essential to your business operations because it ensures that the data you rely on for decision-making, strategy development, and day-to-day functions is dependable and actionable. When data is of high quality, your organization can expect better operational efficiency, improved decision-making, and higher customer satisfaction.

What is Data Quality

Data quality refers to the overall fitness of data for its intended purpose. It’s not just about how accurate the data is—it’s about how complete, consistent, timely, valid, and trustworthy it is within the context of how it’s being used. In today’s digital world, data drives everything from strategic decisions to real-time automation, and its quality can make or break outcomes.

Many organizations mistakenly equate data quality solely with accuracy, but that’s only one piece of the puzzle. High-quality data must be reliable, usable, and relevant across the systems and teams that depend on it.

The difference between raw data and high-quality data is like the difference between unrefined oil and clean fuel. Raw data may contain duplicates, missing fields, outdated entries, or formatting issues—while high-quality data is clean, organized, and optimized for insight and action.

Ultimately, strong data quality ensures that teams across the business can trust the data they’re using, leading to better decisions, reduced risk, and stronger performance.

Impact of Data Quality on Business

- Decision-Making: Reliable data supports sound business decisions

- Reporting: Accurate reporting is necessary for compliance and decision-making

- Insights: High-quality data yields valid business insights and intelligence

- Reputation: Consistent and trustworthy data upholds your organization’s reputation

Quality Dimensions

Understanding the multiple dimensions of data quality can give you a holistic view of your data’s condition and can guide you in enhancing its quality. Each of these dimensions supports a facet of data quality necessary for overall data reliability and relevance.

Dimensions of Data Quality:

- Accessibility: Data should be easily retrievable and usable when needed.

- Relevance: All data collected should be appropriate for the context of use.

- Reliability: Data should come from stable, consistent sources.

The 6 Core Dimensions of Data Quality

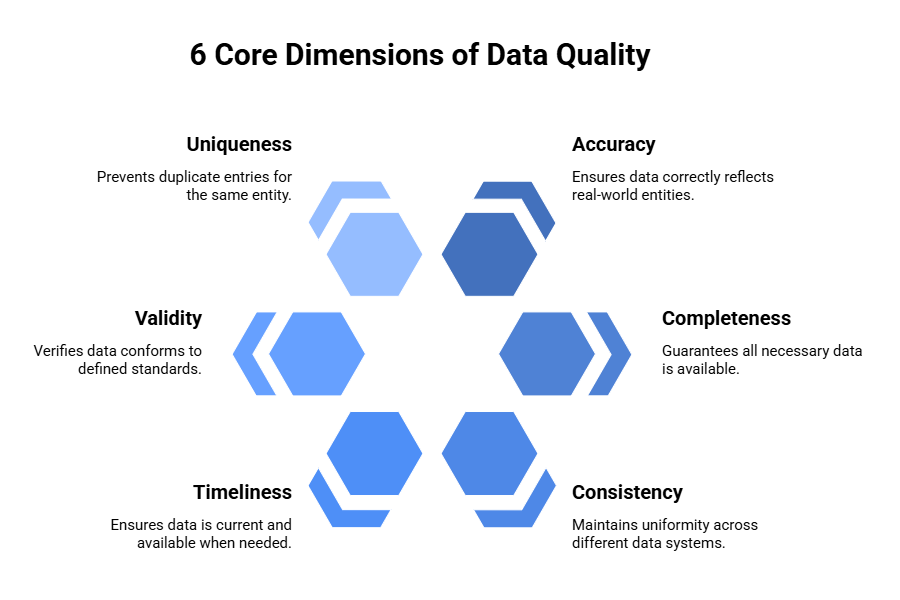

Ensuring high data quality means evaluating data across multiple dimensions—not just accuracy. These six core dimensions help organizations assess, manage, and improve the reliability and usability of their data.

1. Accuracy

Accuracy refers to whether the data correctly reflects the real-world entities or events it’s intended to describe. For example, a customer’s billing address must match their actual location. Inaccurate data leads to poor decisions, financial loss, or compliance risks.

2. Completeness

Completeness measures whether all required data is available. A missing customer phone number or transaction ID could disrupt downstream processes. Completeness doesn’t mean collecting every possible field—it means having enough relevant data to support the task at hand.

3. Consistency

Consistency ensures that data is uniform across different systems and sources. If a customer’s status is marked “active” in one system and “inactive” in another, it creates confusion and undermines trust. Standardized definitions and synchronization are key to consistency.

4. Timeliness

Timeliness assesses whether the data is up to date and available when needed. Outdated or delayed data can negatively impact real-time analytics, forecasting, and operational decisions. Timely data is especially critical in sectors like finance, healthcare, and supply chain.

5. Validity

Validity checks whether data values conform to defined formats, rules, or standards. For instance, a date of birth field should follow a standard date format, and email addresses should contain “@”. Invalid data may pass unnoticed but cause major issues during analysis or automation.

6. Uniqueness

Uniqueness ensures there are no duplicate entries for the same entity. Duplicate records can inflate metrics, confuse reporting, and increase costs in marketing and outreach efforts.
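
Three of these dimensions (completeness, validity, and uniqueness) can be checked mechanically. The following is a minimal sketch in plain Python, not a definitive implementation: the sample records, the field names, and the deliberately loose email pattern are all assumptions made for illustration.

```python
import re
from collections import Counter

# Hypothetical customer records; field names are illustrative assumptions.
records = [
    {"id": 1, "email": "ana@example.com", "phone": "555-0100"},
    {"id": 2, "email": "bob.example.com", "phone": "555-0101"},  # invalid email
    {"id": 3, "email": "cy@example.com", "phone": None},         # missing phone
    {"id": 3, "email": "cy@example.com", "phone": "555-0102"},   # duplicate id
]

REQUIRED_FIELDS = ("id", "email", "phone")
# Deliberately loose pattern (an assumption): something@something.tld
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(rows, required=REQUIRED_FIELDS):
    """Share of rows in which every required field is populated."""
    complete = sum(
        all(row.get(field) not in (None, "") for field in required) for row in rows
    )
    return complete / len(rows)


def validity(rows):
    """Share of rows whose email matches the expected format."""
    valid = sum(bool(EMAIL_PATTERN.match(row.get("email") or "")) for row in rows)
    return valid / len(rows)


def uniqueness(rows, key="id"):
    """Share of rows whose key value appears exactly once."""
    counts = Counter(row[key] for row in rows)
    return sum(1 for row in rows if counts[row[key]] == 1) / len(rows)


print(f"completeness: {completeness(records):.2f}")
print(f"validity:     {validity(records):.2f}")
print(f"uniqueness:   {uniqueness(records):.2f}")
```

Accuracy, consistency, and timeliness need outside context (a trusted reference, a second system, or a freshness requirement), so they are left out of this sketch.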

Why Data Quality Matters

High-quality data is essential for reliable decision-making, operational efficiency, and business growth. Here’s why it matters:

- Informed Decision-Making

Accurate and trustworthy data enables leaders to make strategic decisions with confidence. Poor data can lead to misinformed actions, missed opportunities, and financial losses.

- Reliable Reporting

Organizations rely on consistent data to generate reports for stakeholders, investors, and internal teams. Inconsistent or incorrect data can undermine trust and create confusion.

- Enhanced Customer Experience

Clean, consistent customer data ensures smooth interactions: correct billing, timely communications, and personalized service. Poor data often leads to errors that frustrate users and damage brand loyalty.

- AI and Machine Learning Performance

Data quality is critical for training accurate and unbiased AI/ML models. Models built on flawed data may produce misleading or harmful predictions, especially in sensitive domains like healthcare or finance.

- Regulatory Compliance

Regulatory bodies demand accurate and complete records. Poor data quality can result in compliance violations, penalties, or audits, damaging both reputation and revenue.

Managing Data Quality

Effective data quality management (DQM) is essential for your organization to maintain the integrity, usefulness, and accuracy of its data. By focusing on systems, strategies, and governance, you can ensure that your data supports critical business decisions and operations.

Data Quality Management Systems

Your data quality management system (DQMS) serves as the technological backbone for your data quality initiatives. It often integrates with your Enterprise Resource Planning (ERP) systems to ensure a smooth data lifecycle. Within a DQMS, metadata management tools are crucial as they provide a detailed dictionary of your data, its origins, and uses, which contributes to higher transparency and better usability.

Key Components of a DQMS:

- Metadata Management

- Control Measures

- Continuous Monitoring & Reporting Tools

- Integration with ERP and Other Systems

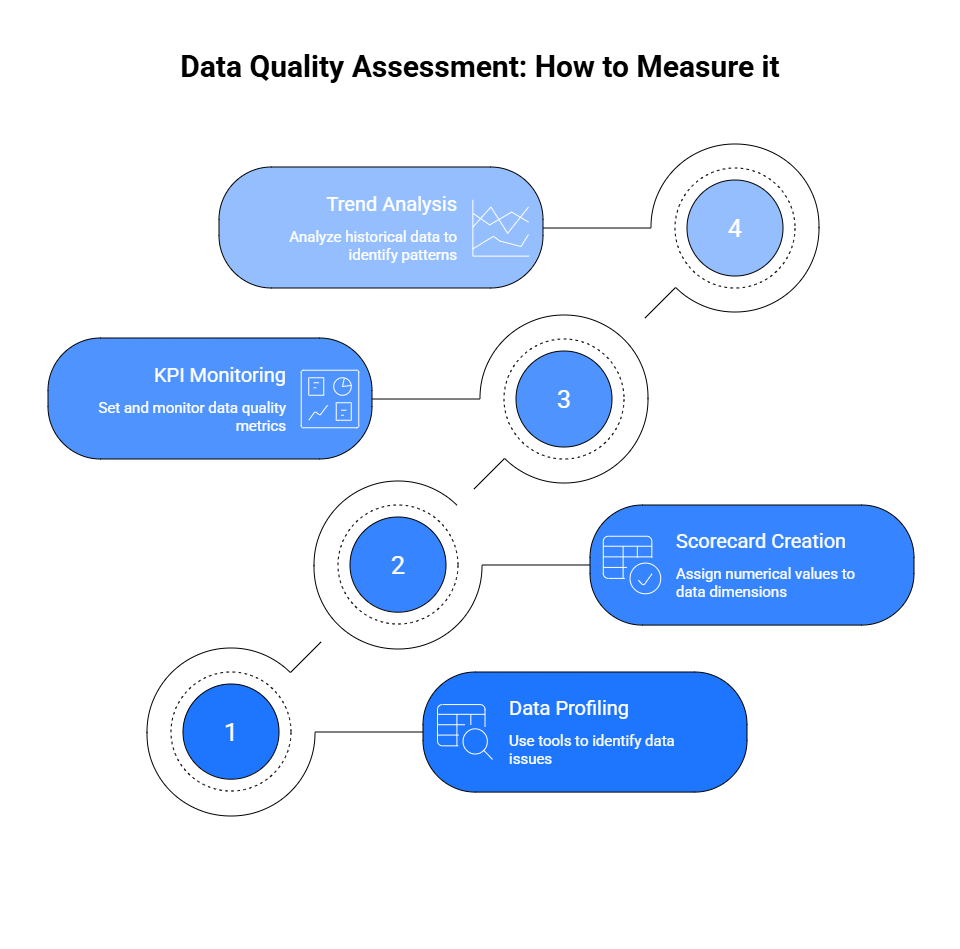

Data Quality Assessment: How to Measure It

Measuring data quality is essential to understanding how trustworthy and usable your data really is. A structured assessment process helps identify gaps and maintain data health over time. Here are key methods to measure data quality:

- Use Data Profiling Tools

Data profiling tools scan datasets to uncover issues like missing values, duplicates, outliers, and inconsistencies. Tools like Great Expectations, Informatica Data Quality, and Microsoft Purview help automate these checks across sources.

- Create a Data Quality Scorecard

Scorecards assign numerical values to key dimensions like accuracy, completeness, and consistency. These scores help teams quantify quality and track improvement over time.

- Monitor KPIs and Thresholds

Set clear thresholds for acceptable data quality metrics (e.g., <2% missing values, 98% format validity). Regularly monitor KPIs using dashboards to detect early warning signs before they impact operations.

- Track Error Trends Over Time

Historical analysis of recurring issues (e.g., duplicate records or failed validations) reveals patterns and helps identify root causes. This insight supports proactive data governance.

Regular assessments ensure that your data remains a reliable foundation for decision-making, reporting, and AI initiatives.
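
A scorecard with thresholds can be as simple as a table of measured values compared against limits. The sketch below is one possible shape, not a prescribed one: the measurements are made-up numbers, the first two limits mirror the examples above, and the duplicate-rate limit is an assumed value.

```python
# Hypothetical measurements for one dataset, expressed as fractions.
measurements = {
    "missing_values": 0.013,   # 1.3% of values are missing
    "format_validity": 0.971,  # 97.1% of values match their expected format
    "duplicate_rate": 0.004,
}

# Each threshold is (direction, limit). "max" means the value must stay
# below the limit; "min" means it must reach the limit.
thresholds = {
    "missing_values": ("max", 0.02),
    "format_validity": ("min", 0.98),
    "duplicate_rate": ("max", 0.01),  # assumed limit, not from the article
}


def build_scorecard(measured, limits):
    """Return one row per metric with its value, limit, and pass/fail flag."""
    rows = []
    for metric, (direction, limit) in limits.items():
        value = measured[metric]
        passed = value < limit if direction == "max" else value >= limit
        rows.append({"metric": metric, "value": value, "limit": limit, "passed": passed})
    return rows


scorecard = build_scorecard(measurements, thresholds)
for row in scorecard:
    status = "OK" if row["passed"] else "ALERT"
    print(f"{row['metric']:<16} {row['value']:.3f} (limit {row['limit']:.3f}) {status}")

# Metrics that breached their threshold and need attention.
failing = [row["metric"] for row in scorecard if not row["passed"]]
```

Storing one scorecard per run, for example keyed by date, is what makes the trend tracking described above possible.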

Implementing Data Quality Standards

Effective data quality implementation transforms your raw data into reliable information that supports decision-making. You’ll utilize specific tools and techniques, establish precise metrics, and adhere to rigorous standards to ensure compliance and enhance the value of your data assets.

Tools and Techniques:

1. Open-Source Tools

Great Expectations

- Python-based framework for creating data validation rules and generating documentation

- Excels at profiling datasets and creating expectation suites for CI/CD workflows

- Offers cost-effective solutions with strong community support and extensive customization capabilities

- Integrates seamlessly into existing data pipelines and development workflows

Deequ

- Developed by Amazon for data quality verification in large-scale datasets

- Focuses specifically on Apache Spark environments for big data processing

- Provides automated data profiling and anomaly detection capabilities

- Offers programmatic approach to data quality testing and monitoring

2. Enterprise Tools

Informatica

- Delivers end-to-end data quality solutions including profiling, cleansing, and monitoring

- Provides comprehensive data governance and lineage tracking capabilities

- Offers advanced matching and deduplication algorithms for data consolidation

Talend

- Integrates data quality within broader data integration platform

- Provides visual interface for building data quality rules and workflows

- Supports both on-premise and cloud deployment options

Ataccama

- Specializes in data quality with machine learning-enhanced profiling

- Offers automated issue detection and remediation suggestions

- Provides advanced data matching and entity resolution capabilities

Microsoft Purview

- Combines data quality with comprehensive governance features

- Integrates natively with Microsoft ecosystem and Azure services

- Offers automated data discovery and classification capabilities

Collibra

- Provides data quality within broader data governance framework

- Offers business glossary and data lineage visualization

- Focuses on collaborative data stewardship and policy management

3. Cloud-Native Options

AWS Glue DataBrew

- Offers visual data preparation with built-in quality profiling

- Provides no-code interface for data transformation and validation

- Integrates seamlessly with other AWS data services and storage solutions

Google Cloud Data Quality

- Provides automated monitoring and validation capabilities

- Offers real-time data quality scoring and alerting

- Integrates with BigQuery and other Google Cloud Platform services

Databricks Delta Live Tables with Expectations

- Combines streaming data pipelines with quality constraints

- Enables real-time data quality enforcement across modern data architectures

- Provides declarative approach to building reliable data pipelines with built-in quality checks

Establishing Metrics

Measuring data quality is vital for monitoring and improvement. You must establish clear metrics that may include, but are not limited to, accuracy, completeness, consistency, and timeliness:

- Accuracy: Percentage of data without errors.

- Completeness: Ratio of populated data fields to the total number of fields.

- Consistency: Lack of variance in data formats or values across datasets.

- Timeliness: Currency of data with respect to your business needs.
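
As a rough illustration, three of these metrics can be computed as follows. This is a sketch under stated assumptions: the reference data, the sample rows, and the 30-day freshness window are hypothetical, and accuracy is only measurable here because a trusted reference is assumed to exist. Consistency is omitted because it requires a second dataset to compare against.

```python
from datetime import date

# Hypothetical data: a trusted reference and the records under review.
reference = {101: "Berlin", 102: "Madrid", 103: "Oslo", 104: "Rome"}
observed = [
    {"id": 101, "city": "Berlin", "updated": date(2024, 5, 30)},
    {"id": 102, "city": "Madird", "updated": date(2024, 5, 28)},  # typo
    {"id": 103, "city": None, "updated": date(2024, 1, 15)},      # missing and stale
    {"id": 104, "city": "Rome", "updated": date(2024, 5, 31)},
]


def accuracy(rows, truth):
    """Percentage of rows whose city matches the trusted reference."""
    correct = sum(row["city"] == truth[row["id"]] for row in rows)
    return 100 * correct / len(rows)


def field_completeness(rows):
    """Ratio of populated fields to the total number of fields."""
    total = sum(len(row) for row in rows)
    populated = sum(value is not None for row in rows for value in row.values())
    return populated / total


def timeliness(rows, as_of, max_age_days):
    """Share of rows updated within the allowed age (an assumed business rule)."""
    fresh = sum((as_of - row["updated"]).days <= max_age_days for row in rows)
    return fresh / len(rows)


today = date(2024, 6, 1)  # fixed date so the example is reproducible
print(f"accuracy:     {accuracy(observed, reference):.1f}%")
print(f"completeness: {field_completeness(observed):.3f}")
print(f"timeliness:   {timeliness(observed, today, max_age_days=30):.2f}")
```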

Data Quality Standards and Compliance

Adhering to data quality standards ensures your data meets benchmarks for effectiveness and efficiency. Compliance with regulations such as the General Data Protection Regulation (GDPR) is not optional; it’s critical. Your organization must:

- Understand relevant regulatory compliance requirements and integrate them into your data management practices.

- Develop protocols that align with DQ (data quality) frameworks to maintain a high level of data integrity.

By focusing on these areas, you build a robust foundation for data quality that supports your organization’s goals and maintains trust in your data-driven decisions.

Overcoming Data Quality Challenges

Your foremost task involves identifying and rectifying common issues. These typically include duplicate data, which skews analytics, and outliers that may indicate either data entry errors or genuine anomalies. Additionally, bad data encompasses a range of problems, from missing values to incorrect data entries. To tackle these:

- Audit your data to identify these issues.

- Implement validation rules to prevent the future entry of bad data

- Use data cleaning tools to remove duplicates and to review outliers, correcting those that turn out to be errors
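
The audit step for duplicates and outliers might look like the sketch below. It assumes that `order_id` identifies a record and uses the common 1.5 × IQR rule to flag outliers; both are illustrative choices, and the sample orders are invented. Flagged rows are returned for review rather than deleted, since an outlier may be a genuine value.

```python
import statistics

# Hypothetical order records; the duplicate and the 9500.0 amount are planted.
orders = [
    {"order_id": "A1", "amount": 120.0},
    {"order_id": "A2", "amount": 95.5},
    {"order_id": "A2", "amount": 95.5},    # duplicate
    {"order_id": "A3", "amount": 110.0},
    {"order_id": "A4", "amount": 9500.0},  # suspicious
    {"order_id": "A5", "amount": 101.0},
    {"order_id": "A6", "amount": 130.0},
]


def drop_duplicates(rows, key):
    """Keep the first row seen for each key value."""
    seen, kept = set(), []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            kept.append(row)
    return kept


def flag_outliers(rows, field, k=1.5):
    """Return rows outside [Q1 - k*IQR, Q3 + k*IQR] for manual review."""
    values = [row[field] for row in rows]
    q1, _, q3 = statistics.quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [row for row in rows if not low <= row[field] <= high]


clean = drop_duplicates(orders, "order_id")
suspects = flag_outliers(clean, "amount")
print("rows after de-duplication:", len(clean))
print("flagged for review:", [row["order_id"] for row in suspects])
```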

The Financial Impact

Data quality has direct financial implications for your business. Poor data quality can lead to financial loss due to misguided business strategies or decisions based on erroneous information. The costs of rectifying data quality issues (known as data quality costs) can balloon if not managed proactively. To mitigate these financial risks:

- Quantify the potential cost of poor data quality by assessing past incidents

- Justify investments in data quality by linking them to reduced risk of financial loss

Recover from Poor Data Quality

Once poor data quality is identified, the next step is data remediation. This can involve intricate data integration and migration processes, particularly if you're merging datasets from different sources or transitioning to a new system.

- Develop a data restoration plan to correct or recover lost or corrupted data

- Engage in precision cleaning to ensure data is accurate and useful post-recovery

Data Quality in Business Context

Decision-Making

As you evaluate your business decisions, you quickly realize that the trust you place in your data is paramount. Accurate, consistent, and complete data sets form the foundation for trustworthy analyses that allow for informed decision-making. For instance, supply chain management decisions depend on high-quality supply chain and transactional data to detect inefficiencies and recognize opportunities for improvement.

Operational Excellence

Operational excellence hinges on the reliability of business operations data. When your data is precise and relevant, you can expect a significant boost in productivity. High-quality data is instrumental in reducing errors and streamlining processes, ensuring that every function, from inventory management to customer service, operates at its peak.

Marketing and Sales

Your marketing campaigns and sales strategies depend greatly on the quality of customer relationship management (CRM) data. Personalized and targeted advertising only works when the underlying data correctly reflects your customer base. With good information at your fingertips, you can craft sales strategies and marketing material that are both engaging and effective, resonating with the target audience you’re trying to reach.

Best Practices for Data Quality

To ensure your data is reliable and actionable, it’s essential to adopt best practices. They involve implementing a solid framework, standardized processes, and consistent maintenance.

Developing an Effective Framework

Your journey to high-quality data begins with an assessment framework. This framework should include comprehensive criteria for measuring the accuracy, completeness, consistency, and relevance of your data assets. You must establish clear standards and metrics for assessing the data at each phase of its lifecycle, facilitating a trusted foundation for your data-related decisions.

Best Practices

Best practices are imperative for extracting the maximum value from your assets. Start by implementing data standardization protocols to ensure uniformity and ease data consolidation from various sources. Focus on:

- Data Validation: Regularly verify both the data and the metadata to maintain quality

- Regular Audits: Conduct audits to detect issues like duplicates or inconsistencies

- Feedback Loops: Incorporate feedback mechanisms for continuous improvement of data processes

Your aim should be to create a trusted source of data for analysis and decision-making.
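
Standardization protocols usually come down to mapping many input variants onto one canonical form. Here is a minimal sketch for dates and country names. The accepted date formats and the alias table are assumptions; in particular, reading `05/03/2024` as day/month/year is a choice you would need to confirm for each source.

```python
from datetime import datetime

# Assumed input formats; extend this tuple for the sources you actually have.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")

# Hypothetical alias table mapping free-text names to two-letter codes.
COUNTRY_ALIASES = {
    "usa": "US",
    "united states": "US",
    "germany": "DE",
    "deutschland": "DE",
}


def standardize_date(text):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def standardize_country(text):
    """Map known aliases to a code; otherwise return the trimmed, upper-cased input."""
    cleaned = text.strip()
    return COUNTRY_ALIASES.get(cleaned.lower(), cleaned.upper())


for raw in ("2024-03-05", "05/03/2024", "20240305", "soon"):
    print(f"{raw!r:>14} -> {standardize_date(raw)}")
print(standardize_country(" USA "), standardize_country("Deutschland"))
```

Returning `None` for unparseable dates, instead of guessing, keeps the failures visible so they can be counted during validation and audits.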

Maintaining Data Quality

Data quality is not a one-time initiative but a continuous improvement process. It requires ongoing data maintenance to adapt to new data sources and changing business needs. Make sure to:

- Monitor and Update: Regularly review your data and quality rules, and update them as necessary

- Leverage Technology: Use modern tools and solutions to automate quality controls where possible

- Engage Stakeholders: Involve users by establishing a culture that values data-driven feedback

Measuring and Reporting Data Quality

Evaluating and communicating the standard of your data are critical activities that ensure you trust the foundation upon which your business decisions rest. To measure and report effectively, you must track specific Key Performance Indicators (KPIs) and document these findings for transparency and accountability.

Key Performance Indicators

Key Performance Indicators provide you with measurable values that reflect how well the data conforms to defined standards.

Consider these essential KPIs:

- Accuracy: Verifying that data correctly represents real-world values or events.

- Completeness: Ensuring all necessary data is present without gaps.

- Consistency: Checking that data is the same across different sources or systems.

- Uniqueness: Confirming that entries are not duplicated unless allowed by your data model.

- Timeliness: Maintaining data that is up-to-date and relevant to its intended use case.
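
The consistency KPI is the one that needs two sources. A minimal sketch of a cross-system comparison follows, assuming each system can be exported as a mapping from customer id to status; the system names and values are hypothetical.

```python
# Hypothetical extracts from two systems, keyed by customer id.
crm = {"c1": "active", "c2": "inactive", "c3": "active"}
billing = {"c1": "active", "c2": "active", "c4": "active"}


def compare_sources(left, right):
    """Report conflicting values on shared keys and keys found in only one source."""
    shared = left.keys() & right.keys()
    conflicts = {
        key: (left[key], right[key]) for key in sorted(shared) if left[key] != right[key]
    }
    # Consistency rate: share of shared keys whose values agree.
    rate = 1 - len(conflicts) / len(shared) if shared else 1.0
    return {
        "consistency": rate,
        "conflicts": conflicts,
        "only_left": sorted(left.keys() - right.keys()),
        "only_right": sorted(right.keys() - left.keys()),
    }


report = compare_sources(crm, billing)
print(f"consistency on shared ids: {report['consistency']:.2f}")
print("conflicts:", report["conflicts"])
print("only in CRM:", report["only_left"], "| only in billing:", report["only_right"])
```

Keys present in only one system are reported separately because they are closer to a completeness problem than to a consistency one.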

Documentation and Reporting

Documentation means keeping records of your metrics, of the processes used to assess them, and of data lineage, which shows where data originated and how it was transformed. Reporting means compiling this information and presenting it to stakeholders.

- Track and Record Metrics:

  - Provide detailed logs and descriptions for each data quality metric.

  - Use tables to organize metrics and display trends over time.

- Include Data Lineage:

  - Detail the data’s journey from origin to destination, offering transparency.

  - Represent complex lineage visually, using flowcharts or diagrams.

- Foster Transparency and Accountability:

  - Share comprehensive reports with relevant stakeholders.

  - Implement a clear system for addressing and rectifying issues.

How to Determine Data Quality: 5 Standards to Maintain

1. Accuracy: Your data should reflect real-world facts or conditions as closely and as correctly as possible. To achieve high accuracy, ensure your data collection methods are reliable, and regularly validate your data against trusted sources.

- Validation techniques:

  - Cross-reference checks

  - Error tracking

2. Completeness: Data is not always complete, but you should aim for datasets that are sufficiently comprehensive for the task at hand. Inspect your data for missing entries and consider the criticality of these gaps.

- Completeness checks:

  - Null or empty data field counts

  - Mandatory field analysis

3. Consistency: Your data should be consistent across various datasets and not contradict itself. Consistency is crucial for comparative analysis and accurate reporting.

- Consistency verification:

  - Cross-dataset comparisons

  - Data version controls

4. Timeliness: Ensure your data is updated and relevant to the current time frame you are analyzing. Timeliness impacts decision-making and the ability to identify trends accurately.

- Timeliness assessment:

  - Date stamping of records

  - Update frequency analysis

5. Reliability: Your data should be collected from dependable sources and through sound methodologies. This increases the trust in the data for analysis and business decisions.

- Reliability measures:

  - Source credibility review

  - Data collection method audits

Enhancing Business Efficiency with Kanerika’s Advanced Data Management Solutions

Partnering with Kanerika could revolutionize businesses through advanced data management solutions. Our expertise in data integration, advanced analytics, AI-based tools, and deep domain knowledge allows organizations to harness the full potential of their data for improved outcomes.

Our proactive data management solutions empower businesses to address data quality and integrity challenges and transition from reactive to proactive strategies. By optimizing data flows and resource allocation, companies can better anticipate needs, ensure smooth operations, and prevent inefficiencies.

Additionally, our AI tools enable real-time data analysis alongside advanced data management practices, providing actionable insights that drive informed decision-making. This goes beyond basic data integration by incorporating continuous monitoring systems, which enable early identification of trends and potential issues, improving outcomes at lower costs.

FAQs

1. What is data quality?

Data quality refers to how well data is suited for its intended use. High-quality data is accurate, complete, consistent, timely, valid, and free of duplicates—making it trustworthy for decision-making, reporting, and automation.

2. Why is data quality important for businesses?

Poor data quality can lead to bad decisions, regulatory risks, lost revenue, and customer dissatisfaction. High data quality ensures better insights, efficient operations, and stronger customer trust.

3. What are the main dimensions of data quality?

The six core dimensions are:

- Accuracy

- Completeness

- Consistency

- Timeliness

- Validity

- Uniqueness

4. How do you measure data quality?

Data quality is measured using profiling tools, scorecards, KPIs, and monitoring trends over time. These tools help identify errors, track progress, and enforce quality standards.

5. What causes poor data quality?

Common causes include manual data entry errors, siloed systems, lack of validation rules, outdated information, and inconsistent data definitions.

6. How does data quality affect AI and machine learning?

AI models depend on high-quality input data. Poor data leads to biased, inaccurate, or unreliable predictions. Feature quality is essential for model accuracy and fairness.

7. What tools help improve data quality?

Popular tools include Great Expectations, Informatica Data Quality, Talend, Microsoft Purview, and Databricks Delta Live Tables. These automate profiling, validation, and cleansing tasks.