Insurance companies are sitting on a data goldmine, yet nearly 70% still struggle to turn that data into actionable insights, according to PwC. Rising customer expectations, regulatory demands, and new risks are forcing insurers to modernize how they manage and analyze information.

This is where insurance analytics on Databricks changes the game. Legacy systems and siloed databases often slow down claims processing, hinder underwriting accuracy, and limit fraud detection. Databricks addresses this challenge with its Lakehouse architecture, which unifies all data (structured, unstructured, and streaming) in a single, governed platform.

With Databricks, insurers can power real-time decision-making, automate claims workflows, enhance risk modeling, and deliver hyper-personalized customer experiences.

In this blog, we’ll explore what insurance analytics on Databricks means, why it’s crucial for modern insurers, its architecture, implementation steps, and best practices for scalable, compliant success.


Key Takeaways

- Insurance analytics on Databricks empowers insurers to unify data across systems, apply AI at scale, and deliver faster insights with stronger governance.

- The Databricks Lakehouse architecture integrates structured, unstructured, and streaming data into a single source of truth, enabling advanced analytics, fraud detection, and real-time decision-making.

- By leveraging pre-built accelerators and governance tools, insurers reduce operational costs and achieve compliance while improving customer experience.

- Ultimately, success with Databricks insurance analytics depends on aligning business goals, building a solid data foundation, enforcing governance early, and operationalizing machine learning models for continuous value creation.

What Is Insurance Analytics on Databricks?

Insurance analytics refers to the process of using data from multiple sources—such as claims, policies, underwriting, customer interactions, and external market signals—to generate insights that guide business decisions. For insurers, analytics is no longer limited to retrospective reporting; it now drives proactive risk management, fraud prevention, and personalized policy offerings.

The Databricks Lakehouse Platform provides the ideal foundation for modern insurance analytics. It unifies structured and unstructured data—from transactional databases, documents, and IoT devices—within a single platform. By combining the best elements of data lakes and data warehouses, Databricks supports both real-time streaming and batch processing, enabling insurers to perform machine learning (ML), predictive modeling, and business intelligence (BI) at scale. This architecture ensures flexibility for diverse insurance workloads, from policy management to regulatory reporting. (Source: Databricks eBook – Insurers: Innovate with Data & AI)

Common use cases include claims automation to reduce processing times, fraud detection through anomaly detection models, usage-based pricing using telematics and IoT data, Customer 360 insights for personalized service, and underwriting risk modeling that improves accuracy and compliance.

Insurers adopt Databricks for its ability to speed up analytics, lower operational costs, and improve decision precision. The platform’s scalability, open ecosystem, and built-in governance allow teams to move from reactive reporting to real-time analytics, enabling data-driven decision-making that strengthens profitability, compliance, and customer trust.

Why It Matters for Insurers

The insurance industry is changing rapidly. Companies now handle massive volumes of data from telematics devices, IoT sensors, claim documents, and customer records—all under increased regulatory scrutiny and competitive pricing pressure. Traditional systems struggle to integrate and analyze this growing complexity, leading to slow decisions, higher costs, and missed opportunities.

Databricks addresses these challenges by providing insurers with a unified, scalable analytics platform that simplifies data management and accelerates decision-making.

With Databricks, insurers gain:

- Unified access to all data sources: Seamlessly connect data from legacy systems, third-party providers, and IoT devices, eliminating silos and improving visibility across operations.

- Faster time to insight and lower total cost of ownership (TCO): The Lakehouse architecture combines storage, analytics, and AI in one environment—reducing infrastructure overhead and improving efficiency. Source

- Support for complex AI and ML models: Build and deploy predictive models for underwriting, pricing, and claims automation at scale, enhancing accuracy and consistency.

Business Value for Insurers

Databricks empowers insurers to make smarter, faster decisions—leading to better risk selection, faster claims resolution, and an improved customer experience. By integrating data and AI under one platform, insurers not only strengthen compliance and governance but also gain a clear competitive advantage in an industry defined by speed, trust, and precision.

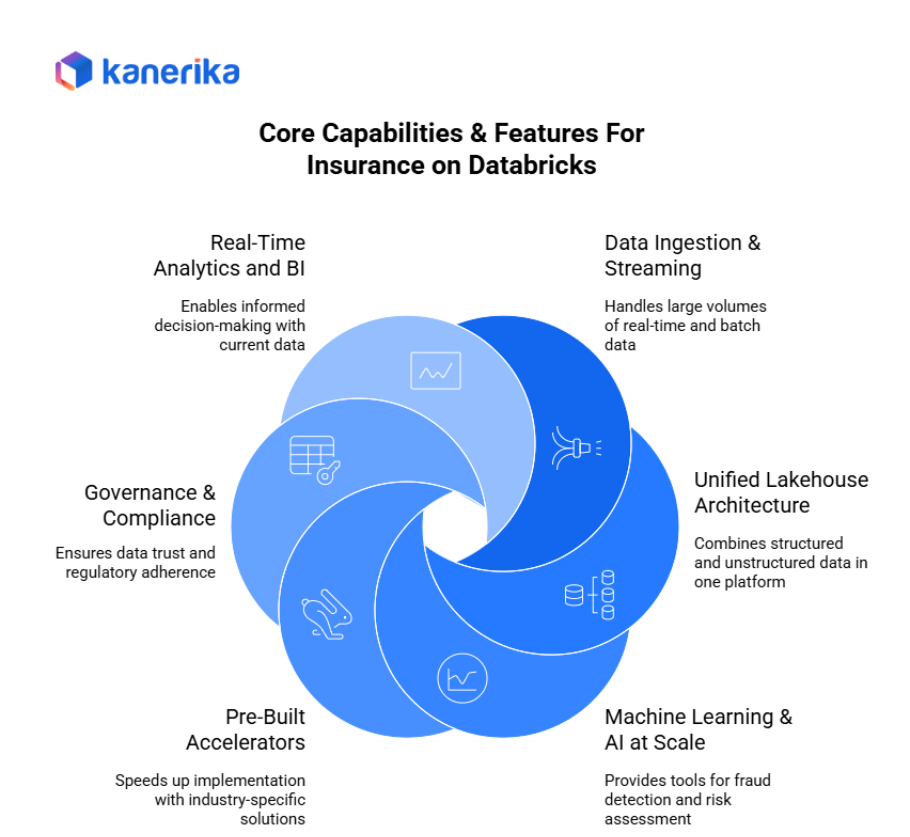

Core Capabilities & Features for Insurance on Databricks

Databricks provides insurance companies with powerful tools to handle complex data challenges while improving operations and customer service. These capabilities address the unique needs of the insurance industry.

1. Data Ingestion & Streaming

Insurance companies generate massive amounts of data from multiple sources. Databricks handles large volumes of policy information, claims records, customer interactions, and telematics data from connected devices. The platform processes both batch data that arrives periodically and streaming data that flows continuously.

For example, telematics sensors in vehicles send driving behavior data that Databricks can ingest and analyze in near real time. This capability enables insurers to work with current information rather than outdated batch reports.
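To make the batch-versus-streaming distinction concrete, here is a minimal sketch in plain Python of incremental processing: each micro-batch of telematics events updates running per-vehicle statistics instead of recomputing everything from scratch. The event fields (`vehicle_id`, `speed_kmh`, `harsh_brake`) and the `RunningDriverStats` class are hypothetical; on Databricks this logic would typically be written with Spark Structured Streaming, which manages aggregation state for you.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class TelematicsEvent:
    # Hypothetical event shape; real telematics feeds vary by vendor.
    vehicle_id: str
    speed_kmh: float
    harsh_brake: bool


class RunningDriverStats:
    """Per-vehicle running totals, updated one micro-batch at a time."""

    def __init__(self):
        self.events = defaultdict(int)
        self.harsh_brakes = defaultdict(int)
        self.speed_sum = defaultdict(float)

    def update(self, batch):
        # Fold a new micro-batch into the existing state instead of reprocessing history.
        for e in batch:
            self.events[e.vehicle_id] += 1
            self.speed_sum[e.vehicle_id] += e.speed_kmh
            if e.harsh_brake:
                self.harsh_brakes[e.vehicle_id] += 1

    def snapshot(self):
        # Current view per vehicle: average speed and share of harsh-braking events.
        return {
            vid: {
                "avg_speed_kmh": self.speed_sum[vid] / n,
                "harsh_brake_rate": self.harsh_brakes.get(vid, 0) / n,
            }
            for vid, n in self.events.items()
        }
```

For example, after `stats.update([TelematicsEvent("V1", 60.0, False), TelematicsEvent("V1", 80.0, True)])`, `stats.snapshot()["V1"]` reports an average speed of 70.0 km/h and a harsh-braking rate of 0.5. Keeping state per vehicle means each batch costs time proportional to its own size, not to the full history.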

2. Unified Lakehouse Architecture

Traditional insurance systems separate data warehouses for structured reporting from data lakes for raw information storage. In contrast, Databricks combines both approaches into a single lakehouse architecture.

This means policy data, claims history, customer profiles, and unstructured documents all live in one unified platform. As a result, analysts access complete information without copying data between systems or dealing with inconsistencies.

3. Machine Learning & AI at Scale

Insurance companies use machine learning for fraud detection, risk assessment, pricing optimization, and claims prediction. Databricks includes managed MLflow for tracking experiments and managing model deployments.

Additionally, the Databricks Runtime for Machine Learning ships with popular deep learning frameworks such as PyTorch and TensorFlow, enabling advanced AI applications. Insurers can build models that predict claim likelihood, detect fraudulent patterns, or recommend personalized policy options at scale across millions of customers.

4. Pre-Built Accelerators

Databricks offers industry-specific accelerators that speed up implementation. The “Smart Claims for Insurance” accelerator automates the claims workflow by processing documents, extracting key information, routing claims appropriately, and flagging anomalies for review. Pre-built solutions like this can cut development time from months to weeks. Reference: Databricks Solution Accelerators for Insurance
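The routing step can be pictured as a small set of ordered rules applied after information has been extracted from claim documents. The sketch below is illustrative only and is not the accelerator's code: the `Claim` fields, the `anomaly_score` (assumed to come from an upstream model), the queue names, and both thresholds are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical thresholds, for illustration only.
FAST_TRACK_LIMIT = 2_000.0
REVIEW_SCORE = 0.8


@dataclass
class Claim:
    claim_id: str
    amount: float
    documents_complete: bool
    anomaly_score: float  # assumed output of an upstream model, between 0.0 and 1.0


def route_claim(claim: Claim) -> str:
    """Return the name of the work queue a claim should go to."""
    # Check anomalies first so a small but suspicious claim is never fast-tracked.
    if claim.anomaly_score >= REVIEW_SCORE:
        return "fraud_review"
    # Low-value, low-risk claims skip manual handling.
    if claim.amount <= FAST_TRACK_LIMIT:
        return "fast_track"
    # Larger claims need complete paperwork before an adjuster picks them up.
    if not claim.documents_complete:
        return "needs_documents"
    return "adjuster"
```

Rule order is the main design decision here: evaluating the anomaly check first ensures fraud review takes precedence over the fast-track path.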

5. Governance & Compliance

Insurance regulations require strict data handling and audit capabilities. Unity Catalog ensures data trust through comprehensive governance features. The system tracks data lineage showing where information originates and how it transforms through various processes.

Audit trails record who accesses what data and when, meeting regulatory requirements. Furthermore, fine-grained access controls ensure employees see only the data necessary for their roles.
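Conceptually, fine-grained access control and audit trails combine two ideas: filter what a role may see, and record every read. The following plain-Python sketch shows both. The role names, column lists, and `read_claims` function are hypothetical; in Unity Catalog the same intent would be expressed through privileges, column masks, and the platform's own audit logs rather than application code.

```python
from datetime import datetime, timezone

# Hypothetical role-to-column policy.
VISIBLE_COLUMNS = {
    "claims_adjuster": {"claim_id", "policy_id", "amount", "status", "claimant_name"},
    "actuary": {"claim_id", "amount", "region"},
}

audit_log = []


def read_claims(user, role, rows):
    """Return only the columns a role may see, and log the access."""
    allowed = VISIBLE_COLUMNS.get(role, set())  # unknown roles see nothing
    # Record who accessed which columns and when, before any data is returned.
    audit_log.append({
        "user": user,
        "role": role,
        "columns": sorted(allowed),
        "accessed_at": datetime.now(timezone.utc).isoformat(),
    })
    return [{k: v for k, v in row.items() if k in allowed} for row in rows]
```

An actuary reading a row that contains `claimant_name` receives only `claim_id`, `amount`, and `region`, and the read is still logged.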

6. Real-Time Analytics and BI

Decision-makers need current information rather than yesterday’s reports. Databricks enables real-time dashboards for underwriting teams assessing risk, claims adjusters tracking case status, and executives monitoring business performance.

Analysts create interactive visualizations using SQL, Python, or BI tools connected directly to the lakehouse. Consequently, stakeholders make informed decisions based on the latest data rather than waiting for scheduled reports.

Databricks Insurance Analytics Architecture

The Databricks Lakehouse architecture provides insurers with a unified foundation to manage structured, semi-structured, and unstructured data efficiently. It ensures consistent governance, scalability, and performance for complex insurance workflows — from claims and underwriting to customer analytics.

1. High-Level Architecture Flow

Insurance data flows through a layered Medallion architecture that simplifies transformation and governance:

- Ingestion Layer: Raw data enters from policy administration systems, claims management platforms, brokers, and third-party data sources. Databricks supports both batch and streaming ingestion, allowing real-time data collection from IoT and telematics devices.

- Medallion Architecture (Bronze–Silver–Gold):

  - Bronze Layer: Stores raw, unprocessed data for traceability.

  - Silver Layer: Cleansed, enriched data for analytics and model training.

  - Gold Layer: Curated data ready for business consumption, reports, and dashboards.

- Consumption Layer: Data feeds ML models, BI dashboards, and real-time applications for underwriting, claims optimization, and risk assessment.
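The Bronze–Silver–Gold flow can be sketched with plain Python lists standing in for tables. This is a conceptual illustration under simplifying assumptions: the claim fields are hypothetical, bad rows are simply skipped, and on Databricks each layer would usually be a Delta table with the transformations written in Spark SQL or PySpark.

```python
# Bronze: raw records as received, including bad values and duplicates.
bronze = [
    {"claim_id": "C1", "amount": "1200.50", "line": "auto"},
    {"claim_id": "C2", "amount": "n/a", "line": "home"},
    {"claim_id": "C1", "amount": "1200.50", "line": "auto"},
    {"claim_id": "C3", "amount": "800", "line": " AUTO "},
]


def to_silver(rows):
    """Cleanse: parse amounts, normalize labels, and drop bad rows and duplicates."""
    seen, clean = set(), []
    for r in rows:
        try:
            amount = float(r["amount"])
        except ValueError:
            continue  # a real pipeline would quarantine this row, not discard it
        if r["claim_id"] in seen:
            continue
        seen.add(r["claim_id"])
        clean.append({**r, "amount": amount, "line": r["line"].strip().lower()})
    return clean


def to_gold(rows):
    """Aggregate: total claim amount per line of business, ready for reporting."""
    totals = {}
    for r in rows:
        totals[r["line"]] = totals.get(r["line"], 0.0) + r["amount"]
    return totals
```

Here `to_gold(to_silver(bronze))` returns `{"auto": 2000.5}`: the unparseable "n/a" row and the duplicate C1 are removed in Silver, while the Bronze list is left untouched for traceability.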

2. Integration with Insurance Systems

Databricks integrates with policy admin systems, broker and reinsurer platforms, IoT data streams, and regulatory data providers. Through APIs, it can also pull in external enrichment datasets such as weather, geolocation, or credit data.
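Enriching claims with an external dataset is essentially a left join on shared keys. In this sketch the weather feed, its `(postcode, loss date)` key, the field names, and the 90 km/h severe-wind threshold are all hypothetical; a real provider's API and schema will differ.

```python
# Hypothetical external feed keyed by (postcode, loss date).
WEATHER = {
    ("10001", "2024-03-02"): {"max_wind_kmh": 95, "precip_mm": 40},
    ("10001", "2024-03-03"): {"max_wind_kmh": 20, "precip_mm": 0},
}
SEVERE_WIND_KMH = 90  # hypothetical threshold


def enrich_claims(claims, weather_by_key):
    """Left-join claims to weather readings; unmatched claims keep None values."""
    enriched = []
    for c in claims:
        w = weather_by_key.get((c["postcode"], c["loss_date"]))
        enriched.append({
            **c,
            "max_wind_kmh": w["max_wind_kmh"] if w else None,
            "severe_weather": w is not None and w["max_wind_kmh"] >= SEVERE_WIND_KMH,
        })
    return enriched
```

Keeping unmatched claims with `None`, rather than dropping them, avoids silently losing claims when the external feed has gaps.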

3. Governance and Security

Built-in data classification, access control, lineage tracking, and audit logging help insurers meet regulatory requirements such as GDPR and HIPAA and align with security frameworks such as SOC 2.

4. Scalability and Cloud Flexibility

Databricks supports multi-cloud and multi-region deployment (AWS, Azure, GCP), enabling global insurers to standardize analytics while maintaining regional compliance. This flexible architecture allows insurers to scale seamlessly as data and regulatory requirements evolve.


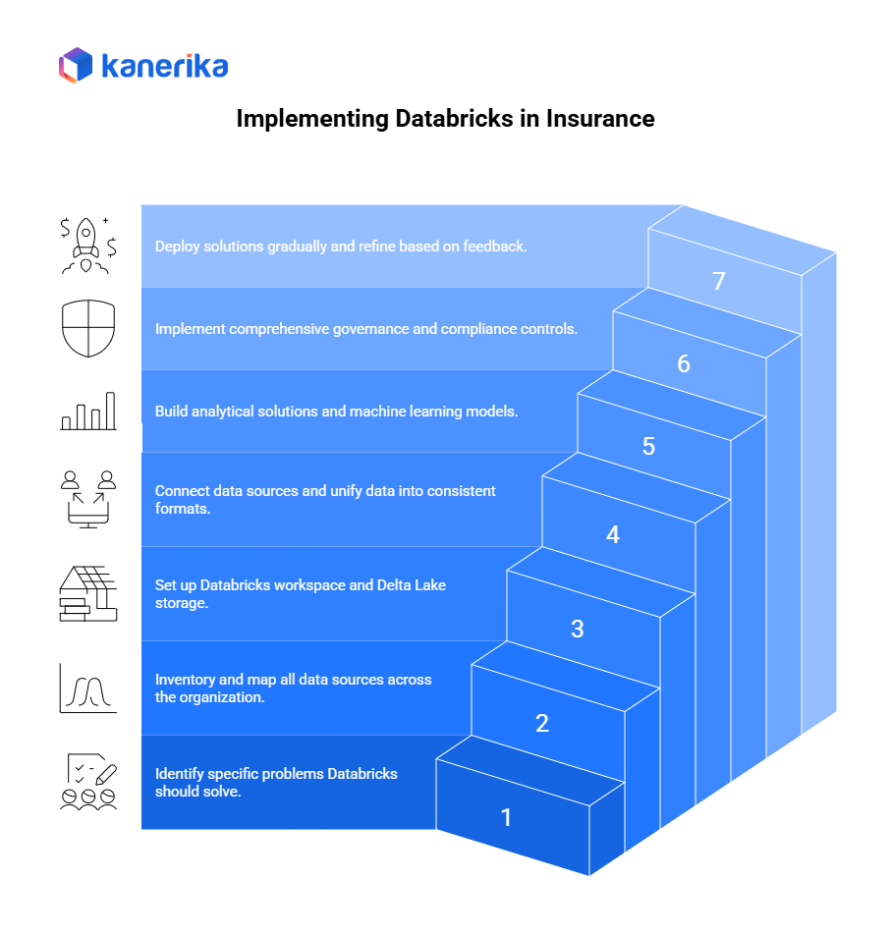

Implementation Roadmap for Insurers

Successfully implementing Databricks in insurance organizations requires a structured approach. Following this roadmap helps ensure smooth deployment while delivering measurable business value.

Step 1: Define Business Use Cases

Start by identifying specific problems Databricks should solve. Common use cases include fraud detection to catch suspicious claims, claim automation to reduce processing time, and pricing optimization to improve underwriting accuracy.

Additionally, consider customer retention prediction, risk assessment improvement, and operational cost reduction. Prioritize use cases based on potential business impact and implementation complexity. Focus on areas where data and analytics can create competitive advantages.
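One simple way to prioritize by impact and complexity is a weighted score. The ratings, weights, and `prioritize` function below are hypothetical placeholders for the results of stakeholder workshops; integer weights keep the arithmetic exact.

```python
# Hypothetical 1-5 ratings gathered from stakeholders.
USE_CASES = {
    "fraud_detection": {"impact": 5, "complexity": 3},
    "claims_automation": {"impact": 4, "complexity": 2},
    "pricing_optimization": {"impact": 5, "complexity": 5},
    "retention_prediction": {"impact": 3, "complexity": 3},
}


def prioritize(cases, impact_weight=3, ease_weight=2):
    """Rank use cases so high impact and low complexity come first."""
    def score(c):
        ease = 6 - c["complexity"]  # turn complexity 1-5 into ease 5-1
        return impact_weight * c["impact"] + ease_weight * ease
    return sorted(cases, key=lambda name: score(cases[name]), reverse=True)
```

With the default weights, the ranking is fraud_detection (21), claims_automation (20), pricing_optimization (17), and retention_prediction (15). Shifting weight toward ease moves quicker wins such as claims automation to the top.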

Step 2: Assess Data Landscape

Next, inventory all data sources across your organization. Map legacy systems storing policy and claims information. Identify external data sources like weather data, credit scores, and public records. Document telematics data from connected vehicles and IoT devices.

Catalog image data including claim photos, property images, and damage assessments. Understanding your data landscape reveals integration challenges and opportunities. Furthermore, this assessment identifies data quality issues requiring attention before migration.

Step 3: Build Lakehouse Foundation

Begin technical implementation by setting up your Databricks workspace. Configure Delta Lake as your storage foundation for reliable, high-performance data management. Then, establish Medallion architecture layers: bronze for raw data, silver for cleaned and validated data, and gold for business-ready analytics datasets.

This layered approach organizes data logically while maintaining data quality at each stage. Set up Unity Catalog for centralized governance from the start.

Step 4: Ingest and Unify Data

Connect data sources to Databricks using appropriate ingestion methods. Implement batch processing for historical data and periodic updates. Moreover, configure streaming pipelines for real-time data like telematics feeds and digital interactions.

Unify data from disparate systems into consistent formats. Apply data quality rules during ingestion to catch problems early. Then create unified customer, policy, and claims views that combine information from multiple legacy systems.
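Data quality rules applied at ingestion can be modeled as named predicates, with failing records quarantined for review instead of silently dropped. This is a minimal sketch, similar in spirit to expectations in Delta Live Tables but not using that API; the rule names, fields, and allowed policy statuses are hypothetical.

```python
# Hypothetical rules: each name maps to a check that a valid record must pass.
RULES = {
    "policy_id_present": lambda r: bool(r.get("policy_id")),
    "premium_positive": lambda r: isinstance(r.get("premium"), (int, float)) and r["premium"] > 0,
    "known_status": lambda r: r.get("status") in {"active", "lapsed", "cancelled"},
}


def apply_rules(records, rules=RULES):
    """Split records into valid rows and quarantined rows tagged with the rules they failed."""
    valid, quarantined = [], []
    for r in records:
        failed = [name for name, check in rules.items() if not check(r)]
        if failed:
            quarantined.append({**r, "_failed_rules": failed})
        else:
            valid.append(r)
    return valid, quarantined
```

Tagging each quarantined record with the rules it failed makes it straightforward to report quality issues back to the owners of the source systems.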

Step 5: Develop Analytics, ML Models, and Dashboards

Build analytical solutions addressing your prioritized use cases. Develop machine learning models for fraud detection using historical claims patterns. Create pricing optimization models that consider multiple risk factors. Leverage pre-built accelerators like Smart Claims to speed development.

The accelerator provides templates for document processing, information extraction, and automated routing. Design interactive dashboards for underwriters, claims adjusters, and executives. Finally, enable self-service analytics so business users can explore data without IT assistance.
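Production fraud models are usually trained on labeled historical claims. As a simpler, unsupervised baseline (a swapped-in technique, not the one this step describes), the sketch below flags claim amounts with a high modified z-score based on the median and the median absolute deviation (MAD). The 3.5 cutoff is a commonly cited rule of thumb, not a tuned value.

```python
from statistics import median


def flag_anomalous_claims(amounts, threshold=3.5):
    """Flag claims whose modified z-score (median/MAD based) exceeds the threshold."""
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return [False] * len(amounts)  # no spread to measure against
    # 0.6745 scales MAD so scores are comparable to standard z-scores for normal data.
    return [abs(0.6745 * (a - med) / mad) > threshold for a in amounts]
```

Using the median and MAD instead of the mean and standard deviation keeps a single extreme claim from inflating the baseline it is measured against.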

Step 6: Establish Governance and Compliance Controls

Implement comprehensive governance before rolling out to users. Configure access controls ensuring employees see only appropriate data. Additionally, enable audit logging to track data access and usage. Set up data lineage tracking showing how information flows through systems.

Document compliance procedures meeting insurance regulations like HIPAA for health insurance or state-specific requirements. Also, create data retention policies aligned with legal obligations. Test security controls thoroughly before production deployment.
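A retention policy ultimately reduces to a date comparison per record. The retention periods, record types, and legal-hold flag in this sketch are hypothetical; actual periods depend on jurisdiction, line of business, and legal advice.

```python
from datetime import date

# Hypothetical retention periods, in years, per record type.
RETENTION_YEARS = {"claim": 7, "quote": 2}


def is_due_for_deletion(record_type: str, closed_on: date, today: date, legal_hold: bool = False) -> bool:
    """Return True once a closed record has passed its retention period."""
    if legal_hold:
        return False  # records under legal hold are never deleted automatically
    years = RETENTION_YEARS[record_type]
    try:
        expiry = closed_on.replace(year=closed_on.year + years)
    except ValueError:
        # closed_on is Feb 29 and the target year is not a leap year.
        expiry = closed_on.replace(year=closed_on.year + years, day=28)
    return today >= expiry
```

Checking the legal hold before any date arithmetic ensures that an active hold always overrides the retention schedule.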

Step 7: Roll Out to Business, Monitor, and Iterate

Deploy solutions gradually starting with pilot user groups. Gather feedback and refine based on real-world usage. Moreover, monitor system performance, user adoption, and business outcomes. Track metrics like fraud detection rates, claims processing times, and pricing accuracy improvements.

Schedule regular reviews to identify optimization opportunities. Expand successful pilots to broader audiences while continuously improving based on lessons learned.
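Tracking metrics like claims processing time and fraud detection rate starts with clear definitions. This sketch assumes hypothetical claim records with `opened_on`, `closed_on`, `flagged`, and `confirmed_fraud` fields, and defines the fraud detection rate as the share of confirmed fraud cases that were flagged (recall). Your organization may define these KPIs differently.

```python
from datetime import date

# Hypothetical sample records for illustration.
SAMPLE = [
    {"opened_on": date(2024, 1, 1), "closed_on": date(2024, 1, 11), "flagged": False, "confirmed_fraud": False},
    {"opened_on": date(2024, 1, 5), "closed_on": date(2024, 1, 25), "flagged": True, "confirmed_fraud": True},
    {"opened_on": date(2024, 1, 10), "closed_on": None, "flagged": False, "confirmed_fraud": True},
]


def claims_kpis(claims):
    """Average cycle time (days) of closed claims and recall of fraud flags."""
    closed = [c for c in claims if c["closed_on"] is not None]
    avg_cycle_days = (
        sum((c["closed_on"] - c["opened_on"]).days for c in closed) / len(closed)
        if closed else None
    )
    # Of the claims later confirmed as fraud, what share had been flagged?
    fraud = [c for c in claims if c["confirmed_fraud"]]
    detection_rate = sum(c["flagged"] for c in fraud) / len(fraud) if fraud else None
    return {"avg_cycle_days": avg_cycle_days, "fraud_detection_rate": detection_rate}
```

For the sample, `claims_kpis(SAMPLE)` returns an average cycle time of 15.0 days and a fraud detection rate of 0.5; open claims are excluded from cycle time but still count toward detection.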

Implementation Tips

- Focus on Quick Wins: Choose initial projects delivering visible results within 90 days. Early successes build organizational confidence and secure ongoing support.

- Start with Pilot Projects: Test approaches with small teams before enterprise-wide rollout. Pilots reveal issues in controlled environments where fixes are easier.

- Engage Stakeholders Early: Involve business users, IT teams, compliance officers, and executives from the beginning. Their early input prevents costly redesigns later. Regular communication keeps stakeholders informed and invested.

- Plan Change Management: Technical implementation alone doesn’t guarantee success. Train users on new tools and processes. Address concerns about workflow changes. Moreover, celebrate wins publicly to build momentum. Create support resources like documentation, training videos, and help desk channels.

- Measure and Communicate Value: Track business metrics showing ROI. Share success stories demonstrating tangible benefits. Use data to justify continued investment and expansion.

Following this roadmap systematically builds a robust Databricks environment while managing risk and delivering continuous business value throughout the insurance organization.

Best Practices & Pitfalls to Avoid

Implementing insurance analytics on Databricks requires a balance of strategy, governance, and execution discipline. Successful insurers adopt structured approaches while avoiding common traps that slow value realization.

Best Practices

- Align analytics strategy with business goals: Start by linking analytics use cases—like claims prediction or fraud detection—to measurable business outcomes such as reduced loss ratio or faster claims cycle time.

- Start with high-impact use cases: Focus first on areas where automation or AI can deliver quick wins, such as claims triage or underwriting scoring.

- Build a strong data foundation first (Medallion architecture): Establish a reliable data pipeline across Bronze, Silver, and Gold layers to ensure clean, high-quality data for analytics and modeling.

- Ensure governance from day one: Implement data quality checks, lineage tracking, and access controls early to maintain trust and compliance across sensitive insurance datasets.

- Use pre-built accelerators and frameworks: Leverage Databricks Solution Accelerators for insurance to shorten time-to-value and reduce implementation complexity.

Pitfalls to Avoid

- Starting with too many use cases: Avoid diluting focus and resources—prioritize use cases based on business value and data readiness.

- Ignoring unstructured data: Overlooking documents, forms, and images leads to incomplete insights and model inaccuracies.

- Underestimating data quality and integration complexity: Poor data mapping and cleansing can delay results and reduce model accuracy.

- Deploying ML models without operationalizing them: Without MLOps and monitoring, models quickly lose relevance, reducing their impact on real-time decision-making.
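One common way to monitor a deployed model is to compare the distribution of a key input or score against its training baseline using the Population Stability Index (PSI). The sketch below is a generic implementation, not a Databricks API. The bin edges are supplied by the caller, values outside them are ignored, and the usual reading (below 0.1 stable, above 0.25 significant drift) is an industry rule of thumb rather than a standard.

```python
import math


def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI between a baseline and a recent sample; values outside the edges are ignored."""
    n_bins = len(edges) - 1

    def shares(values):
        counts = [0] * n_bins
        for v in values:
            for i in range(n_bins):
                # Bins are [lo, hi); the last bin also includes its upper edge.
                in_bin = edges[i] <= v < edges[i + 1]
                if in_bin or (i == n_bins - 1 and v == edges[-1]):
                    counts[i] += 1
                    break
        total = sum(counts) or 1
        # Floor each share at eps so empty bins do not cause log(0) or division by zero.
        return [max(c / total, eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

For example, a baseline split evenly across two bins compared with a recent sample split 25/75 gives a PSI of about 0.27, which the rule of thumb would treat as significant drift worth investigating or retraining for.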

Real-World Case Studies

Here are two real-world examples of how insurers are using Databricks Lakehouse and allied platforms for insurance analytics transformation.

1. Milliman MedInsight

Milliman MedInsight, a provider of healthcare analytics and insurance risk solutions, adopted Databricks to modernize its platform and accelerate insight delivery. The company reports a 5×–7× increase in insight delivery into health insurance risk, which it attributes to the lakehouse architecture. By unifying data assets, reducing duplication, and improving governance, the firm also improved performance and compliance across its analytics environment.

Key lessons:

- Early consolidation of data assets helped accelerate analytics.

- A modern, AI-ready infrastructure supports both speed and scale.

- Strong governance and trust frameworks are essential for regulated contexts.

2. Usage-Based Insurance with MongoDB Atlas + Databricks

In a joint initiative described by MongoDB and Databricks, insurers leveraged real-time telematics, operational data and analytics to build usage-based insurance (UBI) models. The platform allowed streaming ingestion, fusion of structured and unstructured data, and real-time pricing and underwriting insights.

Key lessons:

- Integrating operational and analytical platforms removes silos and improves insight latency.

- Real-time data and AI drive differentiated usage-based products.

- Governance and data trust remain foundational—especially in new models like UBI.

Overall takeaway: In both cases, insurers benefited significantly when they:

- Consolidated and standardized their data landscape early.

- Adopted analytics platforms built for AI, scale, and governance.

- Embedded trust mechanisms—such as lineage, access control and auditability—into their operations.

Kanerika: Your Trusted Databricks Partner for Advanced Data Analytics

At Kanerika, we help enterprises unlock the full potential of Databricks Data Analytics by building intelligent, scalable architectures that align with business goals and analytical maturity. Modern organizations generate massive volumes of structured and unstructured data — and turning that data into actionable insights requires a unified, AI-ready platform. That’s where Databricks excels.

As a Databricks Partner, Kanerika leverages the Lakehouse Platform to enable end-to-end data analytics — from ingestion and transformation to advanced machine learning and predictive intelligence. Moreover, we use Delta Lake for reliable data storage, Unity Catalog for secure governance, and Mosaic AI for deploying and managing models efficiently.

Our team designs solutions that empower businesses to uncover trends, improve decision-making, and accelerate innovation with real-time analytics and automated reporting. All our implementations adhere to global compliance frameworks including ISO 27001, ISO 27701, SOC 2, and GDPR, ensuring data privacy and regulatory readiness.

By combining our deep expertise in data engineering, analytics, and AI with the power of Databricks, Kanerika helps organizations transform raw data into a strategic asset — driving measurable growth, operational efficiency, and competitive advantage.


FAQs

1. What is Databricks Insurance Analytics?

Databricks insurance analytics refers to using the Databricks unified data and AI platform to integrate insurers' data from policies, claims, telematics, and customer interactions. It enables real-time insights, predictive modeling, and automation using the Databricks Lakehouse architecture.

2. How does Databricks improve insurance operations?

By consolidating siloed data and supporting both structured and unstructured sources, Databricks allows insurers to automate claims, detect fraud, personalize pricing, and accelerate underwriting decisions.

3. What kind of data can be analyzed on Databricks?

Insurers can analyze diverse datasets — policy information, IoT and telematics data, claims documents, risk assessments, customer interactions, and third-party data — all within one unified platform.

4. How does Databricks ensure compliance and data security?

Databricks supports enterprise-grade governance through Unity Catalog, role-based access control, encryption, and audit logs, helping insurers meet regulations such as GDPR and HIPAA and align with frameworks such as SOC 2.

5. What are common use cases of Databricks Insurance Analytics?

Typical use cases include claims automation, fraud detection, customer retention analysis, pricing optimization, and risk scoring through AI/ML models.

6. How is Databricks different from traditional insurance data platforms?

Unlike legacy systems, Databricks offers an open, cloud-native Lakehouse architecture that combines the scalability of data lakes with the performance of data warehouses — enabling faster insights and AI innovation.