When deploying large language models, selecting the right inference engine can save time and money. Two popular options, SGLang and vLLM, are built for different jobs.

In a test using DeepSeek-R1 on dual H100 GPUs, SGLang was 10-20% faster than vLLM in multi-turn conversations with a large context. That matters for apps like customer support, tutoring, or coding assistants, where context builds over time. SGLang’s RadixAttention automatically caches and reuses the computation for prompt prefixes that requests share, which reduces compute costs.
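To make the prefix-reuse idea concrete, here is a toy sketch in Python. It is not SGLang's implementation: `PrefixCache` is a hypothetical class that stores token sequences in a trie and reports how many leading tokens of a new request were already seen, which is the portion whose cached computation could be reused.

```python
from dataclasses import dataclass, field


@dataclass
class _Node:
    children: dict = field(default_factory=dict)


class PrefixCache:
    """Toy token-level trie; a stand-in for a radix-tree cache index (hypothetical)."""

    def __init__(self) -> None:
        self.root = _Node()

    def match_and_insert(self, tokens: list[str]) -> int:
        """Return how many leading tokens were already cached, then cache the rest."""
        node = self.root
        matched = 0
        for tok in tokens:
            child = node.children.get(tok)
            if child is None:
                # First miss: everything from here on is new and gets inserted.
                child = _Node()
                node.children[tok] = child
            else:
                matched += 1
            node = child
        return matched


cache = PrefixCache()
turn1 = "system: be brief | user: hi".split()
turn2 = turn1 + "| assistant: hello | user: thanks".split()

first = cache.match_and_insert(turn1)   # nothing cached yet
second = cache.match_and_insert(turn2)  # the whole first turn is a shared prefix
print(first, second, len(turn2))
```

In a multi-turn chat each new request repeats the earlier turns, so the matched prefix grows with the conversation while only the newest turn needs fresh computation.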

vLLM, on the other hand, is built for batch jobs. It handles templated prompts effectively and supports high-throughput tasks, such as generating thousands of summaries or answers simultaneously. In single-shot prompts, vLLM was 1.1 times faster than SGLang.

Both engines hit over 5000 tokens per second in offline tests with short inputs. However, SGLang held up better under load, maintaining low latency even with increased requests. That makes it a better fit for real-time apps.

If your use case is chat-heavy and context-driven, SGLang might be the better pick. If you’re running structured, repeatable tasks, vLLM could be faster and more efficient. The rest of this blog breaks down how each engine works, where they shine, and what to watch out for when choosing one for your setup.

Key Takeaways

- SGLang excels at structured generation, multi-turn conversations, and complex workflows.

- vLLM focuses on high throughput, memory-efficient text completion, and large-scale deployments.

- vLLM is faster for simple tasks; SGLang performs better for structured outputs by reducing retries.

- SGLang allows custom logic and workflow integration; vLLM is simpler but less flexible.

- Use SGLang for interactive apps, RAG pipelines, and JSON outputs; use vLLM for batch jobs and high-traffic APIs.

- Many enterprises combine both, leveraging vLLM for bulk processing and SGLang for complex, structured tasks.

Overview of SGLang

What is SGLang?

SGLang (Structured Generation Language) is an open-source framework for serving LLMs with complex generation requirements. It was designed to handle scenarios where standard text completion is insufficient.

The framework excels at tasks that need structured output, multi-turn conversations, and integration with external tools or APIs.

Getting Started with SGLang

Installation is straightforward with pip:

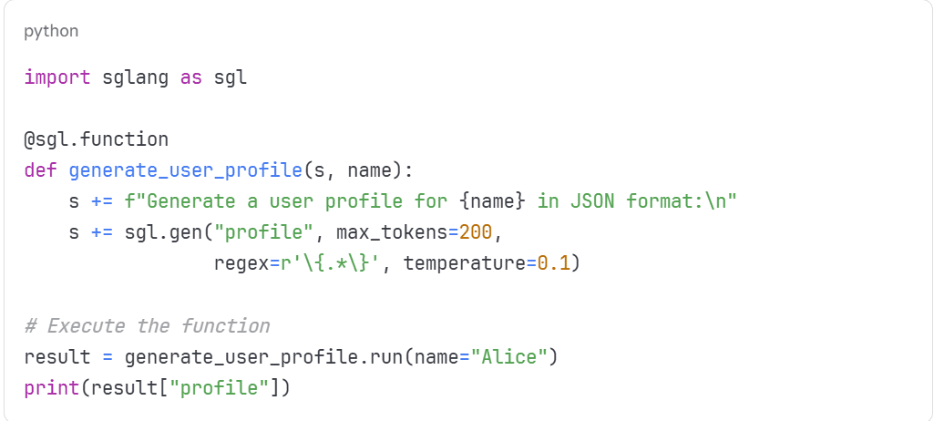

Here’s a basic example of structured JSON generation:
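As a minimal sketch, the snippet below builds a schema-constrained request for an SGLang server's native `/generate` endpoint using only the standard library. The server address, prompt, and schema are assumptions for illustration, and the server is assumed to be launched separately. Nothing is sent until `generate()` is called.

```python
import json
import urllib.request

# Assumed local address; adjust to wherever your SGLang server listens.
SGLANG_URL = "http://localhost:30000/generate"

# Illustrative schema: the keys and types are made up for this example.
PERSON_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "age": {"type": "integer"},
    },
    "required": ["name", "age"],
}


def build_request(prompt: str, schema: dict, max_new_tokens: int = 64) -> dict:
    """Build the request body; the schema is passed as a JSON string."""
    return {
        "text": prompt,
        "sampling_params": {
            "temperature": 0,
            "max_new_tokens": max_new_tokens,
            "json_schema": json.dumps(schema),
        },
    }


def generate(prompt: str, schema: dict) -> dict:
    """POST the request and parse the constrained output as JSON."""
    body = json.dumps(build_request(prompt, schema)).encode("utf-8")
    req = urllib.request.Request(
        SGLANG_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(json.loads(resp.read())["text"])


payload = build_request("Describe a fictional person as JSON.", PERSON_SCHEMA)
print(json.dumps(payload, indent=2))
```

Because the schema is enforced during decoding, the caller can parse the response directly instead of validating and retrying.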

Core Features and Design Philosophy

SGLang’s design centers around three main ideas:

1. Structured Generation: The framework can enforce JSON schemas, regex patterns, and other output constraints during generation. This means you receive valid, structured data without the need for post-processing.

2. Stateful Sessions: Unlike stateless serving, SGLang maintains conversation state across multiple requests. This makes it perfect for chatbots and interactive applications.

3. Flexible Programming Model: You can write complex generation logic using Python-like syntax. This includes loops, conditions, and function calls within your prompts.
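The idea behind structured generation can be sketched with a toy decoder. This is not SGLang's code: it uses a simple "choice" constraint (the output must be one of a fixed set of strings) rather than a JSON schema or regex, and `score` is a hypothetical stand-in for model logits. At each step, tokens that cannot lead to an allowed output are masked out before the best-scoring token is picked.

```python
def constrained_greedy_decode(score, vocab, allowed, max_steps=32):
    """Greedy decoding that masks tokens which cannot lead to an allowed string.

    score(prefix, token) -> float stands in for model logits (hypothetical).
    Decoding stops at the first complete allowed string.
    """
    out = ""
    for _ in range(max_steps):
        if out in allowed:
            break
        # Keep only tokens that extend `out` toward at least one allowed string.
        valid = [t for t in vocab if any(a.startswith(out + t) for a in allowed)]
        if not valid:
            break
        out += max(valid, key=lambda t: score(out, t))
    return out


vocab = ["y", "e", "s", "n", "o", "x", "!"]
allowed = {"yes", "no"}

# Toy scores: left unconstrained, this "model" would start with "x".
preferences = {"x": 5.0, "!": 4.0, "n": 3.0, "y": 2.0}


def toy_score(prefix, token):
    return preferences.get(token, 1.0)


unconstrained_first = max(vocab, key=lambda t: toy_score("", t))
result = constrained_greedy_decode(toy_score, vocab, allowed)
print(unconstrained_first, result)
```

The same masking principle extends to richer constraints, where a grammar or automaton decides which tokens are valid at each step.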

Supported Models, Integrations, and Ecosystem

SGLang works with most popular open-source models, including Llama, Mistral, and CodeLlama. It integrates well with Hugging Face transformers and supports both CPU and GPU inference.

The framework also connects with popular vector databases and can handle retrieval-augmented generation (RAG) workflows out of the box.

Pros and Limitations

Pros:

- Excellent for structured generation tasks

- Built-in support for complex workflows

- Good integration with existing Python codebases

- Active development and responsive community

Limitations:

- Smaller user base compared to vLLM

- It can be overkill for simple text generation

- Learning curve for the structured generation syntax

Overview of vLLM

What is vLLM?

vLLM is a high-performance inference engine designed to maximize throughput when serving large language models. It’s become the standard choice for production deployments where speed matters most.

The framework focuses on efficient memory management and request batching to serve more users with less hardware.

Getting Started with vLLM

Install vLLM with CUDA support:

Basic serving example:
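As a minimal sketch, assuming a vLLM OpenAI-compatible server is already running locally, the snippet below builds and sends a completion request with the standard library. The address, model name, and prompt are placeholders for illustration, and nothing is sent until `complete()` is called.

```python
import json
import urllib.request

# Assumed local address of a vLLM OpenAI-compatible server.
VLLM_URL = "http://localhost:8000/v1/completions"


def build_completion_request(prompt: str, model: str, max_tokens: int = 128) -> dict:
    """Build an OpenAI-style completion request body."""
    return {
        "model": model,
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": 0.7,
    }


def complete(prompt: str, model: str) -> str:
    """POST the request to the server and return the first completion's text."""
    body = json.dumps(build_completion_request(prompt, model)).encode("utf-8")
    req = urllib.request.Request(
        VLLM_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["choices"][0]["text"]


# "my-model" is a placeholder; use the model name your server was started with.
payload = build_completion_request("Summarize: vLLM is an inference engine.", "my-model")
print(json.dumps(payload, indent=2))
```

Since the endpoint follows the OpenAI request format, existing OpenAI-style clients can usually be pointed at it by changing only the base URL.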

Core Features and Unique Capabilities

1. PagedAttention: This is vLLM’s key innovation. Instead of allocating memory for the maximum possible sequence length, it allocates memory in pages as needed. This reduces memory waste by up to 4x.

2. Dynamic Batching: vLLM can efficiently batch requests of different lengths together. This results in improved GPU utilization and increased throughput.

3. Streaming Support: The framework supports streaming responses, allowing users to see output as it’s generated, rather than waiting for the complete response.

4. Multiple Sampling Methods: vLLM supports various decoding strategies, including beam search, nucleus sampling, and temperature sampling.
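The paging idea in item 1 can be illustrated with a toy allocator. This is a simplified sketch, not vLLM's implementation: `BlockAllocator` and its methods are hypothetical names, and the block size is chosen only to keep the example small. Memory is reserved one fixed-size block at a time as a sequence grows, instead of reserving the maximum sequence length up front.

```python
class BlockAllocator:
    """Toy paged allocator: cache slots are handed out one fixed-size block at a time."""

    def __init__(self, num_blocks: int, block_size: int = 16) -> None:
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables: dict[str, list[int]] = {}  # request id -> physical block ids
        self.lengths: dict[str, int] = {}             # request id -> tokens stored

    def append_token(self, req_id: str) -> None:
        """Reserve a new block only when the request's last block is full."""
        n = self.lengths.get(req_id, 0)
        if n % self.block_size == 0:
            if not self.free_blocks:
                raise MemoryError("no free blocks left")
            self.block_tables.setdefault(req_id, []).append(self.free_blocks.pop())
        self.lengths[req_id] = n + 1

    def release(self, req_id: str) -> None:
        """Return all of a finished request's blocks to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(req_id, []))
        self.lengths.pop(req_id, None)


allocator = BlockAllocator(num_blocks=8, block_size=4)
for _ in range(5):  # a 5-token sequence needs ceil(5 / 4) = 2 blocks
    allocator.append_token("req-a")

blocks_used = len(allocator.block_tables["req-a"])
free_after_alloc = len(allocator.free_blocks)
allocator.release("req-a")
free_after_release = len(allocator.free_blocks)
print(blocks_used, free_after_alloc, free_after_release)
```

With this scheme the only unused slots are those in a sequence's last, partially filled block, and freed blocks become available to other requests immediately.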

Ecosystem and Adoption

vLLM has gained broad adoption across research labs, startups, and enterprises. Major cloud providers offer vLLM-based serving options. The framework integrates with popular deployment tools, such as Ray Serve and Kubernetes.

The community is large and active, with frequent updates and comprehensive documentation.

Pros and Limitations

Pros:

- Exceptional throughput and latency performance

- Mature and stable codebase

- Large community and extensive documentation

- Good integration with cloud platforms

Limitations:

- Less flexible for complex generation patterns

- Focused primarily on completion tasks

- May require more setup for specialized use cases

SGLang vs vLLM: Side-by-Side Comparison

1. Performance

Throughput: vLLM typically wins in raw throughput benchmarks. Its PagedAttention and batching optimizations can serve 2-4x more requests per second than traditional serving methods.
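The effect of batching on throughput can be illustrated with a toy simulation. It is a simplified model under stated assumptions, not a benchmark: each request needs a fixed number of decode steps, the GPU runs `batch_size` requests per step, and the function names are hypothetical. Static batching waits for the longest request in each batch, while the continuous variant refills a slot as soon as a request finishes.

```python
def static_batching_steps(lengths, batch_size):
    """Each batch runs until its longest request finishes."""
    return sum(
        max(lengths[i:i + batch_size]) for i in range(0, len(lengths), batch_size)
    )


def continuous_batching_steps(lengths, batch_size):
    """A finished request's slot is refilled from the queue before the next step."""
    queue = list(lengths)
    running = []  # remaining decode steps for each in-flight request
    steps = 0
    while queue or running:
        while queue and len(running) < batch_size:
            running.append(queue.pop(0))
        steps += 1
        # Every running request emits one token; those with one step left finish.
        running = [r - 1 for r in running if r > 1]
    return steps


lengths = [2, 8, 3, 8, 2, 2]  # decode steps needed per request (made-up values)
static_steps = static_batching_steps(lengths, batch_size=2)
continuous_steps = continuous_batching_steps(lengths, batch_size=2)
print(static_steps, continuous_steps)
```

In this example short requests no longer wait behind long ones, so the same work completes in fewer total steps.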

SGLang’s throughput depends heavily on the complexity of your generation tasks. For simple completions, it’s slower than vLLM. For structured generation, the gap narrows because SGLang avoids the retry loops other frameworks need.

Latency: Both frameworks offer competitive latency for their target use cases. vLLM has lower latency for straightforward text generation. SGLang can achieve better end-to-end latency for structured tasks because it produces the correct output format on the first attempt.
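The retry argument can be put into rough numbers. The sketch below assumes each unconstrained attempt independently yields a valid output with probability `p_valid`, so the expected number of attempts is `1 / p_valid`. The latencies, validity rate, and constraint overhead are made-up values for illustration, not measurements of either engine.

```python
def expected_latency_with_retries(latency_per_attempt: float, p_valid: float) -> float:
    """Expected end-to-end latency when invalid outputs are regenerated."""
    if not 0 < p_valid <= 1:
        raise ValueError("p_valid must be in (0, 1]")
    return latency_per_attempt / p_valid


def constrained_latency(latency_per_attempt: float, overhead: float = 0.0) -> float:
    """Single attempt whose output is valid by construction, plus constraint overhead."""
    return latency_per_attempt * (1 + overhead)


# Hypothetical numbers: 1.0 s per attempt, 80% of free-form outputs parse correctly,
# and constrained decoding adds an assumed 10% overhead per request.
retry_latency = expected_latency_with_retries(1.0, p_valid=0.8)
first_try_latency = constrained_latency(1.0, overhead=0.10)
print(round(retry_latency, 2), round(first_try_latency, 2))
```

Under these assumptions the constrained path wins whenever its overhead is smaller than the expected cost of retries, which depends on how often free-form output fails validation.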

2. Scalability

Multi-GPU Support: Both frameworks support multi-GPU deployments. vLLM has more mature distributed serving capabilities and can handle larger model sizes across multiple GPUs.

SGLang is catching up, but it currently works better for smaller deployments or single-GPU setups.

Distributed Serving: vLLM integrates well with container orchestration and service mesh architectures. It’s easier to deploy vLLM in cloud-native environments.

3. Flexibility

Model Types: Both frameworks support similar model architectures. vLLM has broader model support and receives updates for new architectures more quickly.

Fine-tuning Compatibility: Both work with fine-tuned models from Hugging Face and other sources.

Integration Options: SGLang offers more flexibility for complex workflows and custom logic. vLLM is more straightforward but less customizable.

4. Ease of Use & Developer Experience

Learning Curve: vLLM is easier to get started with if you just need fast text completion. The API is simple and well-documented.

SGLang requires learning its structured generation syntax, but this pays off for complex use cases.

Documentation: vLLM has more comprehensive documentation and examples. SGLang’s documentation is improving, but it still has some catching up to do.

5. Community Support

vLLM has a larger, more established community. You’ll find more tutorials, blog posts, and Stack Overflow answers for vLLM-related questions.

SGLang has a smaller but engaged community, with responsive maintainers who actively help users.

Use Cases and Deployment Scenarios

1. When to Use SGLang

Choose SGLang when you need:

- Structured Output: JSON APIs, database queries, or any format-constrained generation

- Complex Workflows: Multi-step reasoning, tool calling, or conditional logic

- Interactive Applications: Chatbots or assistants that maintain conversation state

- RAG Pipelines: Applications that combine retrieval with generation

2. When to Use vLLM

Choose vLLM when you need:

- Maximum Throughput: High-traffic applications or API endpoints

- Simple Text Generation: Completion, summarization, or basic Q&A

- Production Stability: Mature deployments with proven reliability

- Cloud Integration: Easy deployment on managed platforms

3. Hybrid or Combined Approaches

Some teams use both frameworks for different parts of their application. For example, vLLM for high-throughput completion tasks and SGLang for structured generation workflows.
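A hybrid setup needs some rule for deciding which engine handles a request. The sketch below shows one possible rule. The `Request` fields, backend URLs, and routing criteria are all hypothetical: requests that carry an output schema or belong to an ongoing session go to SGLang, and everything else goes to vLLM.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical internal endpoints for the two serving backends.
BACKENDS = {
    "sglang": "http://sglang.internal:30000",
    "vllm": "http://vllm.internal:8000",
}


@dataclass
class Request:
    prompt: str
    json_schema: Optional[dict] = None  # set when structured output is required
    session_id: Optional[str] = None    # set for multi-turn conversations


def pick_backend(req: Request) -> str:
    """Route structured or stateful requests to SGLang, the rest to vLLM."""
    if req.json_schema is not None or req.session_id is not None:
        return "sglang"
    return "vllm"


bulk = Request(prompt="Summarize this article.")
structured = Request(prompt="Extract the invoice fields.", json_schema={"type": "object"})
chat = Request(prompt="And what about tomorrow?", session_id="abc123")

routes = [pick_backend(r) for r in (bulk, structured, chat)]
print(routes)
```

Keeping the rule in one small function makes it easy to adjust as workloads change, for example by adding a prompt-length threshold.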

Alternatives Beyond SGLang vs vLLM

1. TensorRT-LLM: NVIDIA’s optimized inference engine with the best performance on NVIDIA GPUs, but limited to CUDA environments.

2. Text Generation Inference (TGI): Hugging Face’s serving solution with good model support but generally lower throughput than vLLM.

3. Ray Serve: A more general-purpose serving framework that can work with multiple inference engines.

4. FasterTransformer: NVIDIA’s earlier framework, now largely superseded by TensorRT-LLM.

Summary: SGLang vs vLLM Feature Comparison

| Feature | SGLang | vLLM |
| --- | --- | --- |
| Core Strength | Multi-turn dialogue, structured output, complex task optimization | High-throughput single-round inference, memory-efficient management |
| Key Technology | RadixAttention, compiler-inspired design | PagedAttention, Continuous Batching |
| Performance | Optimized for complex workflows | 2-4x higher throughput for simple tasks |
| Memory Efficiency | Standard memory allocation | Up to 4x better memory utilization |
| Latency | Better end-to-end for structured tasks | Lower latency for text completion |
| Suitable Models | General LLMs/VLMs (LLaMA, DeepSeek, Qwen) | Ultra-large-scale open-weight LLMs (Mixtral, Llama 70B+) |
| Learning Curve | Higher (requires custom DSL) | Lower (ready-to-use APIs) |
| Model Support | Good coverage of popular models | Excellent, fastest to support new architectures |
| Structured Generation | Native support with constraints | Limited, requires post-processing |
| Multi-GPU Support | Basic distributed serving | Advanced multi-GPU and tensor parallelism |
| Streaming | Supports real-time streaming | Excellent streaming capabilities |
| Community Size | Growing, responsive maintainers | Large, established ecosystem |
| Documentation | Improving, focused examples | Comprehensive guides and tutorials |
| Cloud Integration | Basic deployment options | Excellent cloud-native support |
| Production Readiness | Good for specialized use cases | Battle-tested for high-scale deployments |
| Best Use Cases | JSON APIs, chatbots, RAG workflows, tool calling | High-traffic serving, completion APIs, simple Q&A |
| Pricing/Resource Cost | Higher cost per request, lower total cost for complex tasks | Lower cost per request, efficient hardware utilization |

How Kanerika Powers Enterprise AI with LLMs and Automation

At Kanerika, we design AI systems that solve real problems for enterprises. Our work spans various industries, including finance, retail, and manufacturing. We use AI and ML systems to detect fraud, automate vendor onboarding, and predict equipment issues. Our goal is to make data useful—whether it’s speeding up decisions or reducing manual work.

LLMs are a core part of our solutions. We train and fine-tune models to match each client’s domain. This enables us to deliver accurate summaries, structured outputs, and prompt responses. We build private, secure setups that protect sensitive data and support scalable training. Our approach is built around control, performance, and cost-efficiency.

We also focus heavily on automation. Our agentic AI systems combine LLMs with smart triggers and business logic. These systems handle repetitive tasks, route decisions, and adapt to changing inputs. This enables teams to move faster, reduce errors, and focus on strategy rather than routine work.

Conclusion

The choice between SGLang and vLLM ultimately depends on your specific needs. If you’re building applications that require structured output or complex generation workflows, SGLang offers unique capabilities that simplify development.

For high-throughput serving of traditional text completion tasks, vLLM remains the better choice. Its maturity, performance optimizations, and large community make it the safer bet for production deployments.

Many successful AI applications use both frameworks for different parts of their infrastructure. Start with your most critical use case, then expand as your needs grow. In the ongoing debate of SGLang vs vLLM, the best decision comes down to balancing speed, flexibility, and long-term scalability.

FAQs

1. What is the main difference between SGLang and vLLM?

The key difference lies in their focus areas. vLLM is built for raw speed and high throughput, making it ideal for handling a large number of text generation requests. SGLang, on the other hand, focuses on structured generation, ensuring that complex workflows produce the correct format on the first attempt.

2. Which is faster: SGLang or vLLM?

In most benchmarks, vLLM is faster due to its PagedAttention and batching optimizations, often serving 2–4x more requests per second than traditional serving methods. However, SGLang can outperform vLLM in structured tasks because it avoids repeated retries, which helps improve overall end-to-end latency in those cases.

3. Is SGLang or vLLM better for large-scale deployments?

For large-scale, cloud-native deployments, vLLM is generally the stronger option. It has more mature multi-GPU and distributed serving capabilities, making it easier to scale across clusters. SGLang currently performs best in smaller setups or single-GPU environments but is steadily improving.

4. Which framework is easier to use, SGLang or vLLM?

vLLM offers a simpler setup and a beginner-friendly API, so developers can start quickly if they only need fast text completions. SGLang requires learning its structured generation syntax, which adds some complexity at the start but provides long-term benefits for custom workflows and advanced tasks.

5. Who has better community support: SGLang or vLLM?

vLLM has a larger and more established user base, with plenty of tutorials, blogs, and community discussions available online. SGLang’s community is smaller but highly engaged, with maintainers who are very responsive and actively support users with troubleshooting and new feature requests.