Businesses are increasingly adopting real-time data processing and artificial intelligence (AI) to enhance decision-making and operational efficiency. A recent Deloitte report highlights the growing importance of real-time data, with companies building advanced data pipelines to make swift, data-driven decisions.

To meet these evolving demands, Microsoft has enhanced Microsoft Fabric, a cloud-native platform that streamlines data analytics. Fabric Runtime 1.3, released in September 2024, integrates Apache Spark 3.5, offering improved performance and scalability for data processing tasks.

This blog will guide you through loading data into a Lakehouse using Spark Notebooks within Microsoft Fabric. Additionally, we’ll demonstrate how to leverage Spark’s distributed processing power with Python-based tools to manipulate data and securely store it in the Lakehouse for further analysis.

What is Microsoft Fabric?

Microsoft Fabric is an integrated, end-to-end platform that simplifies and automates the entire data lifecycle. It brings together the tools and services a business needs to integrate, transform, analyze, and visualize data in a single ecosystem, so organizations no longer have to manage separate platforms or services for data management and analytics. The entire process, from data storage to advanced analytics and business intelligence, can be handled in one place.

What are Spark Notebooks in Microsoft Fabric?

One of the standout features of Microsoft Fabric is its integration of Spark Notebooks. These interactive notebooks are designed for large-scale data processing, which is essential for modern data analytics, especially when working with big data.

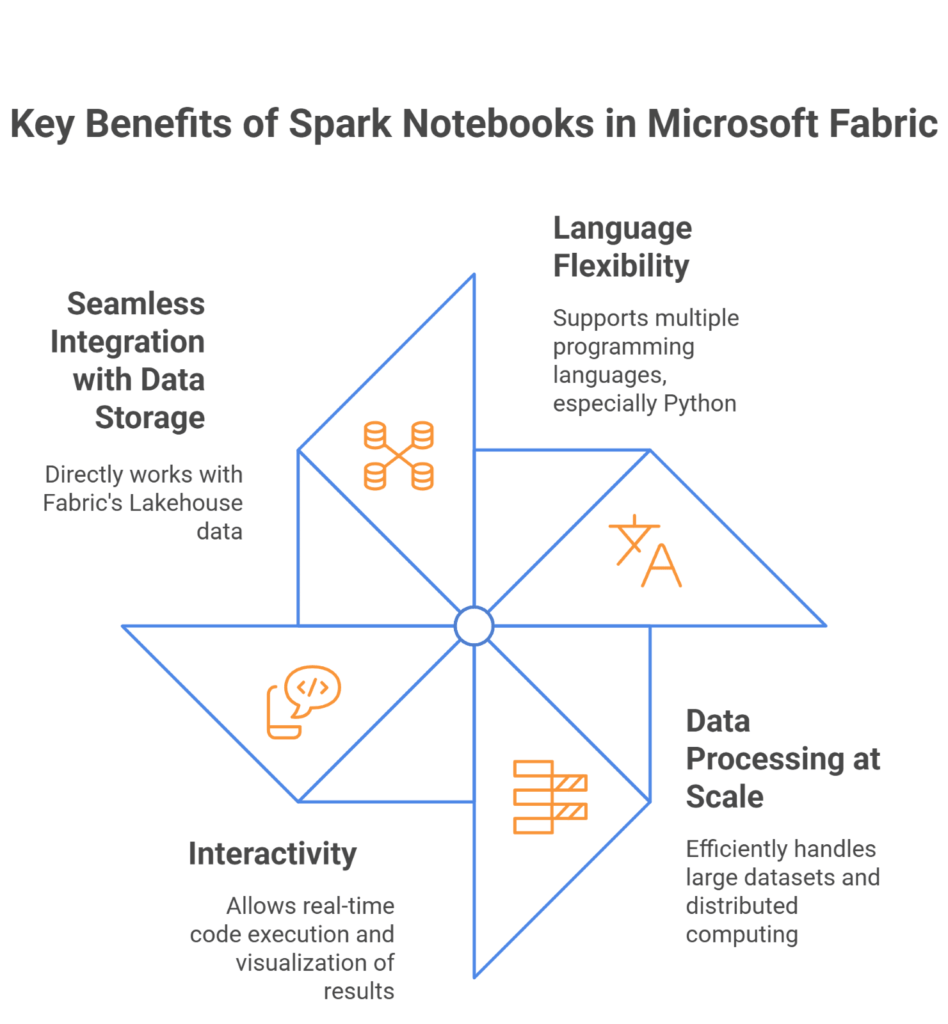

Key Benefits of Spark Notebooks in Fabric:

- Language Flexibility: Spark Notebooks in Microsoft Fabric support multiple languages, including PySpark (Python), Spark (Scala), Spark SQL, and SparkR. Python is the most common choice, particularly in the data science and analytics community.

- Data Processing at Scale: The underlying engine, which is Apache Spark, is built for large datasets and distributed computing. Spark Notebooks allow users to write code to quickly load, process and analyze large volumes of data. This is particularly useful for businesses dealing with Big Data that need to process it without delays.

- Interactivity: Spark Notebooks are highly interactive. Users write code in cells and run them one at a time, seeing the output immediately. This lets users inspect intermediate results, test different approaches, and iterate quickly on their work.

- Seamless Integration with Data Storage: One of the major advantages of Spark Notebooks in Microsoft Fabric is their built-in integration with the underlying data storage solutions, like the Lakehouse. This integration allows users to work directly with data from Fabric’s Lakehouse, efficiently manipulating and transforming data without cross-platform data movement.

How to Set Up Microsoft Fabric Workspace

Step 1: Sign in to Microsoft Fabric

Access Microsoft Fabric

- Open a web browser and go to app.powerbi.com.

- Sign in with your work or school Microsoft account. If you're new to Microsoft Fabric, you may need to create an account or sign up for a free trial.

Navigate to Your Workspace

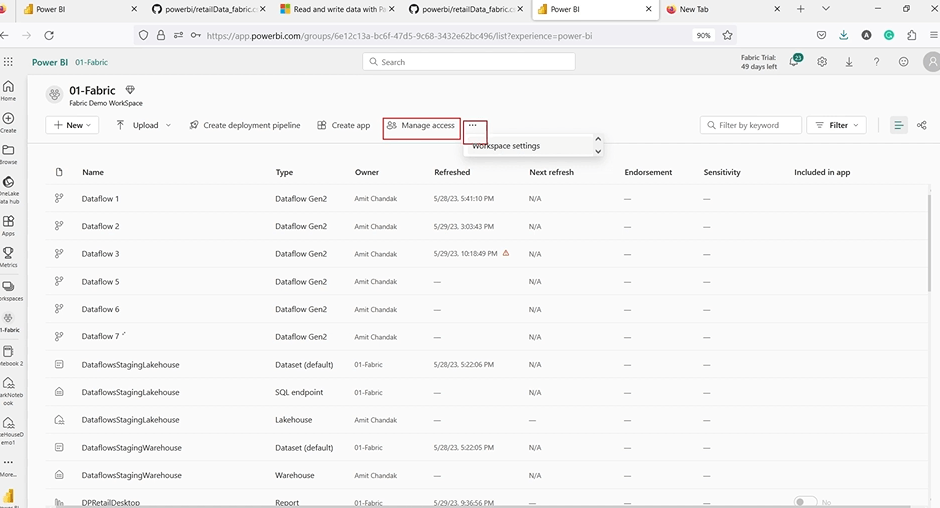

After logging in, you will be directed to the Microsoft Fabric workspace. This is where all your projects, datasets, and tools are housed.

Step 2: Create a New Lakehouse

Why Create a New Lakehouse?

Creating a fresh Lakehouse ensures your current project remains separate from any previous data experiments or projects. It’s a clean slate that makes managing your data and processes more efficient.

Navigate to the Workspace

In your workspace, find the option to create a new Lakehouse. Microsoft Fabric offers an easy-to-use interface that guides you through the creation process.

Ensure the Lakehouse is Empty

When creating a new Lakehouse, make sure it’s set up as an empty environment. This ensures that you don’t have any legacy data or configurations interfering with your current data processing tasks.

Step 3: Configure Your Spark Pool

Setup Spark in Fabric

Before you can use Spark Notebooks for data manipulation, you need a Spark pool in your Fabric environment. Spark pools allocate the compute resources used for distributed data processing.

Check Available Options

- Trial users get a starter pool with default settings. On a paid plan, you may also be able to customize the Spark pool configuration, for example by adjusting the node size or selecting the Spark version.

- Verify that the settings are correct for your workload and ensure that Spark is ready for use in your Lakehouse.

Step 4: Start Your First Notebook

Create a New Notebook

- Once the Spark pool is configured, open a Spark Notebook from your Lakehouse workspace.

- Notebooks in Microsoft Fabric allow you to run code interactively. You’ll use this notebook to perform tasks like data cleaning, transformation, and analysis, all within the same environment.

Select the Language and Spark Session

- Choose Python (or another supported language) for your Spark Notebook, depending on your preference and the tasks you plan to perform.

- Start the Spark session by running your first code cell. Fabric connects the notebook to the configured Spark pool automatically and exposes the session to Python as the predefined spark object, so you don't need to build a session yourself.

Step 5: Verify Environment and Test Setup

- After setting up your environment and creating your Lakehouse, it’s important to test that everything is working as expected.

- Try loading a sample dataset into the Lakehouse and running a basic query or transformation in your notebook. This verifies that your notebook is connected to the Spark pool and that your data loads correctly.
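A quick check along these lines can confirm that the session is live before you load real data. This is a minimal sketch: it assumes the spark object that Fabric notebooks predefine, and the helper name run_smoke_test and its sample rows are hypothetical.

```python
def run_smoke_test(spark) -> int:
    """Run a tiny job on the attached Spark pool and return its row count.

    `spark` is the SparkSession predefined in Fabric notebooks; the helper
    name and the sample rows are illustrative only.
    """
    sample = spark.createDataFrame(
        [(1, "Widget", 3), (2, "Gadget", 5)],
        ["id", "item", "quantity"],
    )
    # count() is an action, so it forces Spark to actually execute a job
    return sample.count()


# In a Fabric notebook cell:
# print(spark.version)          # shows which Spark version the runtime provides
# print(run_smoke_test(spark))  # prints 2 if the pool is running jobs
```

If the cell hangs or fails here, the problem lies in the session or pool configuration rather than in your data.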

How to Configure Spark in Microsoft Fabric

Spark Settings

Spark runs on clusters, and in Microsoft Fabric, you have the option to configure the Spark pool for your environment. If you’re using a trial account, you’ll have limited configurations available. Make sure you’re aware of the available options.

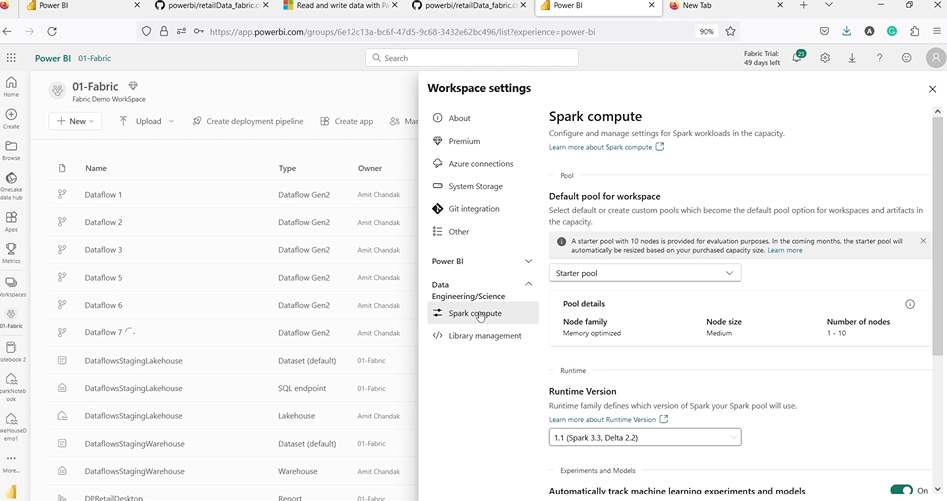

Step 1: Access Spark Settings

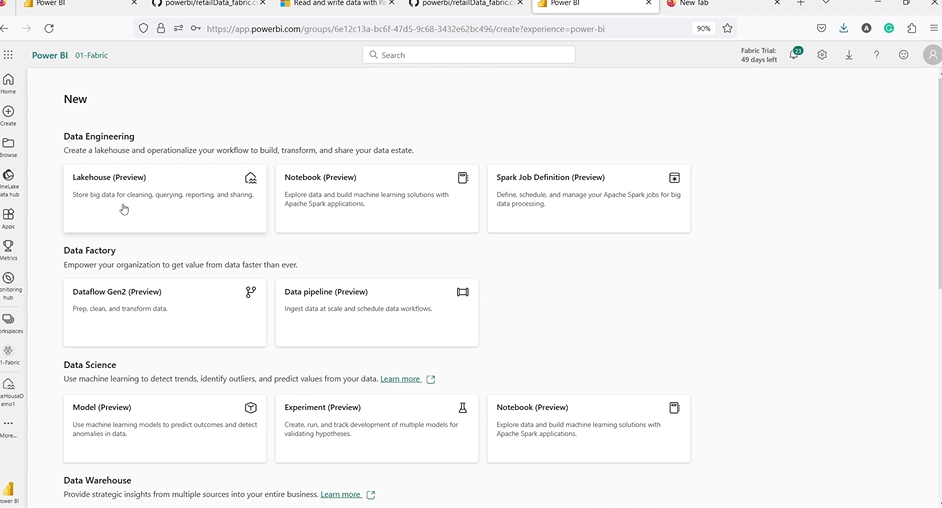

To configure Spark in Microsoft Fabric, you’ll need to access the workspace settings where you can modify the Spark configuration. Here’s how you can do it:

Go to Your Workspace: Navigate to the Microsoft Fabric workspace where your data resides.

Click the Three Dots Next to Your Workspace Name: In the workspace menu, click on the three vertical dots (also known as the ellipsis).

Select Workspace Settings: From the dropdown menu, choose Workspace Settings to access all available configuration options for your workspace.

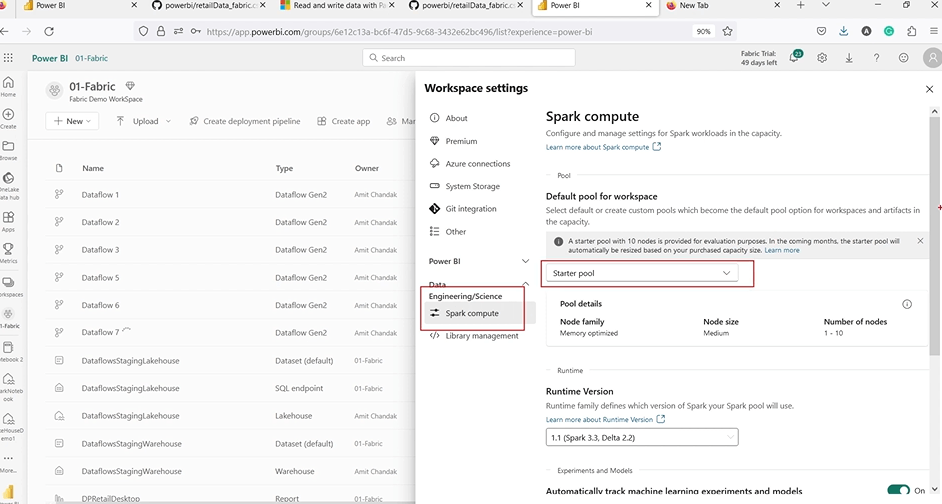

Find the Spark Compute Section

- Inside the workspace settings, locate the Spark Compute section. This is where you can configure the Spark clusters that will run your data processing tasks.

- The Spark Compute section allows you to create, manage, and monitor the Spark pools available in your workspace.

Step 2: Selecting the Spark Pool

Once you’re in the Spark Compute section of the workspace settings, you can configure and select the Spark pool that suits your needs. Here’s what you can do:

Choose the Appropriate Spark Pool

If you’re a trial user, you’ll typically have access to a starter pool. This default pool is fine for smaller data processing tasks and experiments but has limited resources compared to a full Spark pool. You can select the starter pool for testing purposes or for small-scale operations.

Configure Spark Version and Properties

- In the Spark settings, you can choose the Fabric runtime version, which determines the Spark version you get (for example, Runtime 1.3 includes Apache Spark 3.5). Different versions offer different performance improvements, features, and compatibility with specific tools or libraries.

- Additionally, you can adjust other properties of the Spark pool, such as memory allocation, number of workers, and compute power. These configurations are critical for larger datasets or more complex processing tasks, although they are restricted on trial accounts.

Creating and Opening Spark Notebooks in Microsoft Fabric

Step 1: Open a New Notebook

Navigating to the Notebook Interface

- Within Microsoft Fabric, you can create a new Spark Notebook by first navigating to your Lakehouse or Workspace.

- Once there, locate the option to create a new notebook. The platform provides an intuitive interface for creating and managing notebooks, which will allow you to execute code and interact with your data.

Choosing Between a New or Existing Notebook

You can choose to start a new notebook from scratch, which is ideal for starting fresh and writing new code, or you can open an existing notebook if you want to continue with previous work or use pre-written code for your analysis.

Setting Up the Environment

- When creating a new notebook, you’ll select the Spark pool where the code will be executed. If you’re just testing or running smaller workloads, you can use the default starter pool available for trial users.

- The notebook interface allows you to write and execute code cells interactively, making it easier to test snippets of code and see results instantly.

Step 2: Writing Python Code

Spark and Python Integration

- Once you set up your notebook, you write code directly within it using Python. While Spark Notebooks support multiple languages, Python is commonly used for its simplicity and powerful data manipulation libraries.

- In this notebook, you'll use Pandas, a popular Python library, to read, manipulate, and process data. Pandas is convenient for data that fits in memory on one machine, while Spark handles large-scale distributed processing; combining the two makes the notebook a flexible tool for analytics.

Python Code Execution

- Code that works with Spark DataFrames (PySpark) runs on Spark's distributed compute infrastructure, so it can handle large datasets efficiently. Plain Pandas code, by contrast, runs in memory on the driver node of the Spark session and is not distributed.

- Each time you execute a code cell, the notebook runs it on the attached session and shows the result directly below the cell.

Steps to Load Data Using Spark Notebooks in Microsoft Fabric

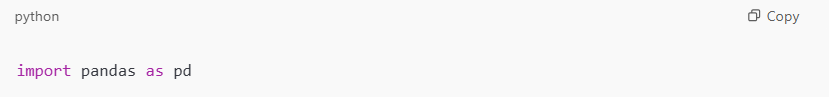

Step 1: Importing Pandas

- To begin working with data in Python, you need to import Pandas. This library provides easy-to-use data structures, such as DataFrames, that are perfect for working with tabular data.

- Importing Pandas allows you to load, clean, and manipulate the data efficiently within the notebook.

Step 2: Reading Data from an External Source

- Often your data won't reside within the Microsoft Fabric environment yet, so you'll need to load it from an external source, such as a CSV file hosted on GitHub or a file in another cloud storage location.

- Pandas can read a CSV file directly from a URL with pd.read_csv().
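A minimal sketch of this step follows. The URL is a hypothetical placeholder (substitute the raw URL of your own file), and the in-memory sample only stands in for a real download so the snippet runs anywhere. The Quantity and UnitPrice column names match the ones used later in this walkthrough.

```python
import io

import pandas as pd

# Hypothetical placeholder: replace with the raw URL of your own CSV file
CSV_URL = "https://example.com/path/to/sales.csv"


def load_csv(source) -> pd.DataFrame:
    """Read a CSV from a URL, a local path, or a file-like object."""
    # pd.read_csv accepts http(s) URLs, file paths, and buffers alike
    return pd.read_csv(source)


# In the notebook you would call: df = load_csv(CSV_URL)
# Here an in-memory sample (illustrative values) stands in for the download:
sample_csv = io.StringIO(
    "Item,Quantity,UnitPrice\n"
    "Road Bike,2,1200.50\n"
    "Helmet,5,35.00\n"
)
df = load_csv(sample_csv)
print(df.head())
```

In a Fabric notebook, display(df) renders the same data as an interactive table.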

pd.read_csv() returns a Pandas DataFrame (conventionally named df), a Python object that holds the data in tabular form and makes it easy to manipulate.

Step 3: Running Spark Jobs

- Executing Code on Spark: After loading your data, you can run code to process and analyze it. Operations on Spark DataFrames are executed in memory across the Spark pool's distributed compute resources, which is what lets Spark handle large datasets. A Pandas DataFrame, however, is processed on the driver node only; to distribute the work, convert it with spark.createDataFrame() or use the pandas API on Spark.

- Real-Time Execution: Running a cell shows its results in the notebook as soon as it finishes. When you transform a Spark DataFrame, Spark plans and distributes the heavy lifting behind the scenes.
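One way to move pandas data onto the Spark pool is to convert it with spark.createDataFrame() and express the aggregation in Spark. This is a minimal sketch, assuming the predefined spark session and Item and Quantity columns; the helper name total_quantity_by_item is hypothetical.

```python
import pandas as pd


def total_quantity_by_item(spark, pdf: pd.DataFrame):
    """Distribute a pandas DataFrame and aggregate it on the Spark pool.

    Returns a Spark DataFrame. Spark evaluates lazily, so no job runs
    until an action such as show(), collect(), or a write is called.
    """
    # createDataFrame accepts a pandas DataFrame and infers the schema from it
    sdf = spark.createDataFrame(pdf)
    # groupBy().sum() is executed across the pool's executors
    return sdf.groupBy("Item").sum("Quantity")


# In a Fabric notebook cell (df being the pandas DataFrame loaded earlier):
# total_quantity_by_item(spark, df).show()
```

Another option is the pandas API on Spark (the pyspark.pandas module), which keeps pandas-style syntax while executing on Spark.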

How to Transform Data into Spark in Microsoft Fabric

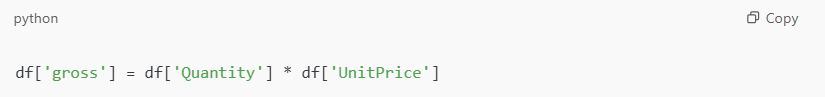

Adding a New Column

Data Transformation Example

One common task in data manipulation is adding new columns, or features, to a dataset. For instance, to create a column that holds the total gross amount for each row (quantity multiplied by unit price), you can multiply two columns in Pandas.
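A minimal sketch, assuming df has numeric Quantity and UnitPrice columns; the helper function and sample values are illustrative. The core of it is the single vectorized assignment df["gross"] = df["Quantity"] * df["UnitPrice"].

```python
import pandas as pd


def add_gross_column(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of df with gross = Quantity * UnitPrice for each row."""
    out = df.copy()  # avoid mutating the caller's DataFrame
    out["gross"] = out["Quantity"] * out["UnitPrice"]  # element-wise product
    return out


# Small illustrative sample (hypothetical values)
df = pd.DataFrame(
    {"Item": ["Road Bike", "Helmet"], "Quantity": [2, 5], "UnitPrice": [1200.5, 35.0]}
)
df = add_gross_column(df)
print(df)
```

Returning a copy keeps the original DataFrame intact, which makes it easy to rerun a cell without compounding changes; in a quick notebook session, assigning the column in place works just as well.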

The product of the Quantity and UnitPrice columns is stored in a new column called gross.

Verifying the Transformation

After transforming the data, display the DataFrame again (for example with df.head() or Fabric's display(df)) to confirm that the new column was added correctly.
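Beyond eyeballing the output, a small programmatic check can confirm the column holds what you expect. This is a minimal sketch; the helper name check_gross and the sample data are hypothetical.

```python
import pandas as pd


def check_gross(df: pd.DataFrame, n: int = 5) -> pd.DataFrame:
    """Verify gross == Quantity * UnitPrice and return a preview of n rows."""
    if "gross" not in df.columns:
        raise KeyError("gross column not found; run the transformation first")
    expected = df["Quantity"] * df["UnitPrice"]
    # Series.equals treats NaNs in the same position as equal
    if not expected.equals(df["gross"]):
        raise ValueError("gross does not equal Quantity * UnitPrice")
    return df[["Quantity", "UnitPrice", "gross"]].head(n)


# Illustrative sample data
df = pd.DataFrame({"Quantity": [2, 5], "UnitPrice": [1200.5, 35.0]})
df["gross"] = df["Quantity"] * df["UnitPrice"]
preview = check_gross(df)
print(preview)  # in a Fabric notebook, display(preview) renders a table
```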

Steps to Save Transformed Data in Microsoft Fabric

Step 1: Save Data as CSV

After manipulating the data, you may want to save the transformed DataFrame for later use. Pandas' .to_csv() method writes it out as a CSV file.
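A minimal sketch, assuming a default Lakehouse is attached to the notebook, in which case Fabric exposes its Files area at the local path /lakehouse/default/Files/. The file name sales_transformed.csv and the helper save_csv are hypothetical.

```python
from pathlib import Path

import pandas as pd

# Assumes a default Lakehouse is attached; the file name is hypothetical
LAKEHOUSE_CSV = "/lakehouse/default/Files/sales_transformed.csv"


def save_csv(df: pd.DataFrame, path: str = LAKEHOUSE_CSV) -> Path:
    """Write df to CSV without the pandas index and return the path written."""
    target = Path(path)
    target.parent.mkdir(parents=True, exist_ok=True)  # create folders if needed
    df.to_csv(target, index=False)  # index=False keeps the row index out of the file
    return target


# In the notebook: save_csv(df)
```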

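If the target path points to the Lakehouse's Files area (with a default Lakehouse attached, Fabric mounts it at /lakehouse/default/Files/), the file is stored in your Lakehouse and is available for future analysis or reporting.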

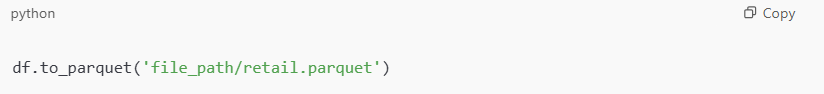

Step 2: Save Data as Parquet

- For larger datasets, the Parquet format is more efficient than CSV as it is a columnar storage format optimized for analytics.

- Pandas writes Parquet files with the .to_parquet() method, which requires the pyarrow or fastparquet package.
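A minimal sketch, assuming a default Lakehouse is attached (mounted at /lakehouse/default/) and that pyarrow is available in the runtime; the file name and the helper save_parquet are hypothetical.

```python
from pathlib import Path

import pandas as pd

# Assumes a default Lakehouse is attached; the file name is hypothetical
LAKEHOUSE_PARQUET = "/lakehouse/default/Files/sales_transformed.parquet"


def save_parquet(df: pd.DataFrame, path: str = LAKEHOUSE_PARQUET) -> Path:
    """Write df as a single Parquet file (needs pyarrow or fastparquet)."""
    target = Path(path)
    target.parent.mkdir(parents=True, exist_ok=True)  # create folders if needed
    df.to_parquet(target, index=False)  # columnar, compressed, keeps dtypes
    return target


# In the notebook: save_parquet(df)
```

Unlike CSV, Parquet stores column types with the data, so numeric columns such as gross come back as numbers rather than being re-parsed from text.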

Parquet is especially well suited to big data workloads because engines such as Spark can read only the columns a query needs, which speeds up processing.

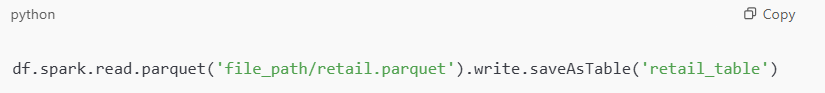

Converting Parquet Data into a Lakehouse Table

- After you save your data as a Parquet file, you can convert it into a table in the Lakehouse. Lakehouse tables are stored in Delta format and can be queried with Spark or through the Lakehouse's SQL analytics endpoint.

- To convert the file with Spark code, read the Parquet file into a Spark DataFrame and write it out with saveAsTable().
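A minimal sketch, assuming the predefined spark session, a default Lakehouse (against which Spark resolves relative paths such as Files/...), and hypothetical file and table names.

```python
def parquet_to_table(spark,
                     parquet_path: str = "Files/sales_transformed.parquet",
                     table_name: str = "sales_transformed"):
    """Load a Parquet file from the Lakehouse Files area and save it as a Delta table.

    The default path and table name are hypothetical.
    """
    df = spark.read.parquet(parquet_path)
    (
        df.write.mode("overwrite")  # replace the table if it already exists
        .format("delta")            # Delta is the Lakehouse table format
        .saveAsTable(table_name)
    )
    return table_name


# In a Fabric notebook cell:
# parquet_to_table(spark)
# spark.sql("SELECT COUNT(*) FROM sales_transformed").show()
```

Using mode("overwrite") makes the cell safe to rerun; switch to "append" if each run should add rows instead.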

Alternatively, you can right-click the Parquet file in your Lakehouse and select “Load to Tables” from the context menu.

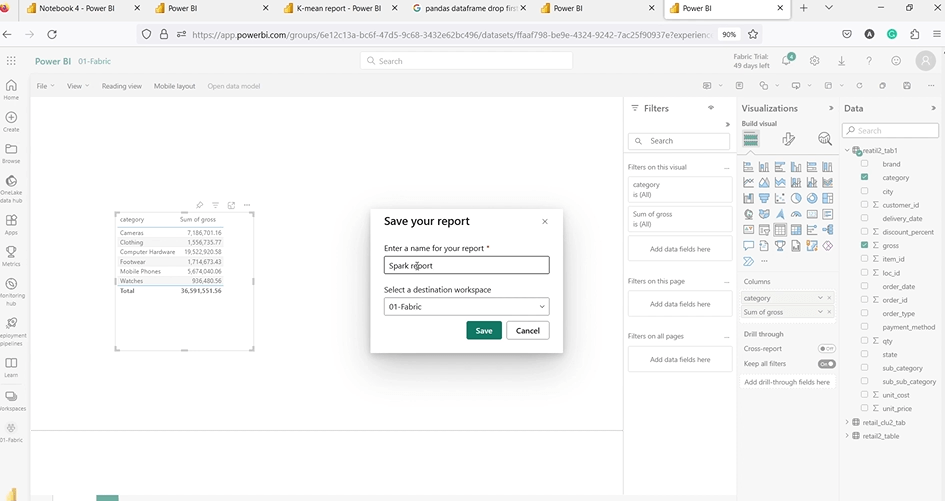

Analyzing Data in Power BI

Step 1: Create a Power BI Report

- Once your data is transformed and stored in the Lakehouse as a table, you can easily connect it to Power BI for visualization.

- Power BI allows you to create reports and dashboards based on the data stored in the Lakehouse, helping you analyze trends, create charts, and share insights with stakeholders.

Step 2: Visualize and Explore

- Inside Power BI, you can use different visuals like bar charts, line graphs, and tables to explore your data. The tool lets you build interactive dashboards where users can filter information, drill into specific sections, and uncover more detailed insights.

- These features make it easier to spot trends, track performance, and understand what’s really going on with your data.

Partner with Kanerika to Unlock the Full Potential of Microsoft Fabric for Data Analytics

Kanerika is a leading provider of data and AI solutions, specializing in maximizing the power of Microsoft Fabric for businesses. With our deep expertise, we help organizations seamlessly integrate Microsoft Fabric into their data workflows, enabling them to gain valuable insights, optimize operations, and make data-driven decisions faster.

As a certified Microsoft Data and AI solutions partner, Kanerika leverages the unified features of Microsoft Fabric to create tailored solutions that transform raw data into actionable business insights.

By adopting Microsoft Fabric early in the process, businesses across various industries have achieved real results. Kanerika’s hands-on experience with the platform has helped companies accelerate their digital transformation, boost efficiency, and uncover new opportunities for growth.

Partner with Kanerika today to elevate your data capabilities and take your analytics to the next level with Microsoft Fabric!

Frequently Asked Questions

How to load data into Lakehouse?

Step-by-step guide to preparing and loading data into your Lakehouse with Dataflow Gen2:

Step 1: Open Your Lakehouse.

Step 2: Create a New Dataflow Gen2.

Step 3: Import from Power BI Query Template.

Step 4: Choose the Query Template.

Step 5: Configure Authentication.

Step 6: Establish Connection.

Step 7: Familiarize with the Interface.

How do I load data into spark?

Step 1: Define variables and load CSV file

Step 2: Create a DataFrame

Step 3: Load data into a DataFrame from CSV file

Step 4: View and interact with your DataFrame

Step 5: Save the DataFrame
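These steps map onto a few PySpark calls. This is a minimal sketch, assuming the predefined spark session in a Fabric notebook and a hypothetical file at Files/sales.csv in the default Lakehouse; the helper name is also hypothetical.

```python
def load_csv_with_spark(spark, path: str = "Files/sales.csv"):
    """Read a CSV from the Lakehouse into a Spark DataFrame (path is hypothetical)."""
    return (
        spark.read
        .option("header", True)       # first line holds column names
        .option("inferSchema", True)  # sample the data to guess column types
        .csv(path)
    )


# In a Fabric notebook cell:
# sdf = load_csv_with_spark(spark)                     # load into a DataFrame
# sdf.printSchema(); sdf.show(5)                       # view and interact
# sdf.write.mode("overwrite").saveAsTable("sales")     # save the DataFrame
```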

How to use lakehouse in fabric?

Create a lakehouse

1. In Fabric, select Workspaces from the navigation bar.

2. To open your workspace, enter its name in the search box located at the top and select it from the search results.

3. From the workspace, select New item, then select Lakehouse.

4. In the New lakehouse dialog box, enter wwilakehouse in the Name field.

What is the abfs path in fabric?

You can use an ABFS (Azure Blob File System) path to read and write data in any Lakehouse you have access to. For example, a notebook in workspace A can use the ABFS path to read or write data in a Lakehouse in workspace B without mounting that Lakehouse or setting it as the default.
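A minimal sketch of how such a path is put together, assuming the name-based OneLake form; the workspace, Lakehouse, and file names are hypothetical, as is the helper onelake_abfss_path.

```python
def onelake_abfss_path(workspace: str, lakehouse: str, relative_path: str) -> str:
    """Build a name-based ABFS URI for an item in a Fabric Lakehouse.

    Workspace and Lakehouse GUIDs can be used instead of names, in which
    case the '.Lakehouse' suffix is dropped.
    """
    relative_path = relative_path.lstrip("/")  # avoid a double slash
    return (
        f"abfss://{workspace}@onelake.dfs.fabric.microsoft.com/"
        f"{lakehouse}.Lakehouse/{relative_path}"
    )


path = onelake_abfss_path("WorkspaceB", "SalesLakehouse", "Files/sales.csv")
print(path)
# abfss://WorkspaceB@onelake.dfs.fabric.microsoft.com/SalesLakehouse.Lakehouse/Files/sales.csv
# From a notebook in another workspace: spark.read.option("header", True).csv(path)
```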

How to load JSON data in Spark?

Spark SQL can automatically infer the schema of a JSON dataset and load it as a DataFrame using spark.read.json(). By default it expects JSON Lines input, where each line of each file is a separate JSON object; the path can point to a single file or a directory of files.
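A minimal sketch, assuming the predefined spark session; the helper load_json and the example path are hypothetical. The multiLine option covers files that hold one JSON document or array spread over several lines.

```python
def load_json(spark, path: str, multiline: bool = False):
    """Read JSON into a Spark DataFrame, letting Spark infer the schema.

    By default Spark expects JSON Lines (one object per line); set
    multiline=True for files holding a single multi-line JSON document.
    """
    return spark.read.option("multiLine", multiline).json(path)


# In a Fabric notebook cell (hypothetical folder of JSON Lines files):
# events = load_json(spark, "Files/events/")
# events.printSchema()
```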

How to connect Spark with database?

Spark connects to relational databases over JDBC, so PySpark code needs the same connection properties as any JDBC client: url (the JDBC URL of the database), the table to read, and the user and password. Avoid writing the password in plain text in your notebook or a properties file; retrieve it from a secret store such as Azure Key Vault instead. Hashing the password does not help here, because the database needs the actual password to authenticate.
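A minimal sketch of a JDBC read, assuming the predefined spark session and that the matching JDBC driver is available to the Spark pool; the helper name, the example URL, and the table name are hypothetical placeholders.

```python
def read_jdbc_table(spark, url: str, table: str, user: str, password: str):
    """Read one database table into a Spark DataFrame over JDBC.

    Pass in a password fetched from a secret store; never hard-code it.
    """
    return (
        spark.read.format("jdbc")
        .option("url", url)  # e.g. jdbc:sqlserver://<host>:1433;databaseName=<db>
        .option("dbtable", table)
        .option("user", user)
        .option("password", password)
        .load()
    )


# In a Fabric notebook cell (all values are placeholders):
# sales = read_jdbc_table(spark, jdbc_url, "dbo.Sales", db_user, db_password)
```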

How to drop a table in fabric Lakehouse?

To drop a table, run the DROP TABLE command, for example through Spark SQL in a notebook. If you also need the Lakehouse's object ID (for example, to build a GUID-based ABFS path), open the Lakehouse in your workspace; the ID is the value that follows /lakehouses/ in the browser URL.
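A minimal sketch using Spark SQL, assuming the predefined spark session; the helper name and table name are hypothetical. IF EXISTS keeps the statement from failing when the table is already gone.

```python
import re

# Plain identifiers only; loosen this if you use schema-qualified names
_SAFE_NAME = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def drop_lakehouse_table(spark, table_name: str) -> None:
    """Drop a Lakehouse table if it exists, using Spark SQL."""
    # Simple guard because the name is interpolated into SQL text
    if not _SAFE_NAME.match(table_name):
        raise ValueError(f"Unexpected table name: {table_name!r}")
    spark.sql(f"DROP TABLE IF EXISTS {table_name}")


# In a Fabric notebook cell:
# drop_lakehouse_table(spark, "sales_transformed")
```

In a %%sql cell, the equivalent is simply DROP TABLE IF EXISTS sales_transformed.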