What if your smartphone could instantly recognize objects, translate languages, and detect potential health issues, all without an internet connection? What if industrial robots could make split-second decisions without relying on a distant cloud? That is the promise of Edge AI, a technology that is transforming the devices we use every day. With over 75% of enterprise-generated data expected to be processed at the edge by 2025, Edge AI is emerging as a key technology behind smarter, more responsive devices across industries.

According to a report by Grand View Research, the global edge AI software market is valued at USD 14,787.5 million and is projected to grow at a CAGR of 21% from 2023 to 2030. This growth underscores the rising importance of Edge AI in an increasingly connected world. Edge AI is poised to redefine how we interact with technology, offering speed, privacy, and capabilities that once required constant cloud connectivity.

What is Edge AI?

Edge AI refers to the deployment of artificial intelligence (AI) algorithms directly on devices or at the edge of a network, rather than relying on centralized cloud servers. This allows for data processing and decision-making to occur locally, reducing latency and improving real-time responsiveness.

For example, in autonomous vehicles, Edge AI enables the car to process sensor data on the spot, allowing it to make instant decisions about braking or avoiding obstacles without needing to communicate with a cloud server. This localized processing not only speeds up operations but also enhances data privacy by keeping sensitive information on the device itself.

Transform Your Business with Innovative Edge AI Solutions!

Partner with Kanerika Today!

How Does Edge AI Differ from Traditional AI?

Edge AI and traditional AI differ primarily in where data processing occurs, which has significant implications for latency, security, and scalability.

Traditional AI

Traditional AI typically relies on cloud computing: devices send data to centralized servers for processing. These servers have powerful computational resources that analyze large datasets and return results to the device. This model works well for tasks that require intensive processing power, but it has drawbacks. The round trip to the cloud introduces latency, which makes real-time decision-making difficult. The reliance on constant internet connectivity and the transfer of data to remote servers can also pose privacy and security risks, especially for sensitive information.

Edge AI

Edge AI brings the processing power closer to the data source, either on the device itself or on a local server within the network. By processing data locally, Edge AI significantly reduces latency, enabling real-time or near-real-time responses. This is critical in applications like autonomous vehicles, industrial automation, and healthcare monitoring, where a delay of even a fraction of a second can have serious consequences. Because data does not need to be transmitted to the cloud, Edge AI also enhances privacy and security by keeping sensitive information within the local network.

For example, in smart cameras used for security, traditional AI might upload video footage to the cloud for analysis, which could take several seconds and expose the data to potential breaches. In contrast, an Edge AI-enabled camera can analyze video footage on the spot, identifying threats immediately and reducing the risk of data exposure.

| Aspect | Edge AI | Traditional AI |
| --- | --- | --- |
| Data Processing Location | On the device or local network | Centralized cloud servers |
| Latency | Low latency due to local processing | Higher latency due to data transfer to and from the cloud |
| Real-Time Decision-Making | Supports real-time decision-making | May experience delays, less ideal for time-sensitive tasks |
| Internet Connectivity | Often operates without continuous internet connection | Requires a stable internet connection for data transfer |
| Privacy and Security | Enhanced, as data is processed locally | Data may be exposed during transmission to the cloud |
| Scalability | Limited by local hardware capabilities | Highly scalable with cloud resources |
| Processing Power | Limited by on-device hardware | Access to vast computational resources |
| Ideal Use Cases | Autonomous vehicles, smart cameras, industrial IoT | Big data analysis, complex AI models, non-time-sensitive tasks |

What Are the Key Components of Edge AI Systems?

1. Edge Devices (Hardware)

Edge devices are the physical units where AI processing takes place locally. These devices are equipped with specialized hardware designed to handle AI computations efficiently at the edge of the network. They are optimized for low power consumption and high performance to support real-time data processing.

Components:

- Microprocessors: CPUs, GPUs, TPUs

- Sensors: Cameras, microphones, IoT sensors

- AI Accelerators: NVIDIA Jetson, Google Edge TPU

2. AI/ML Models

AI and machine learning models form the core intelligence of Edge AI systems, enabling tasks like image recognition, natural language processing, and predictive analytics directly on the device. These models are often optimized for efficiency to run on limited hardware resources without compromising performance.

Features:

- Lightweight Architectures: Designed for resource-constrained environments

- Model Compression: Techniques like quantization and pruning

- Pre-trained Models: Fine-tuned for specific edge applications

3. Data Processing and Analytics

This component manages the collection, processing, and analysis of data generated by edge devices. It ensures that data is processed in real-time, allowing for immediate decision-making and actions without the need to send data to centralized cloud servers.

Functions:

- Real-Time Filtering: Removing irrelevant data on the fly

- Local Analytics: Generating insights directly on the device

- Event-Driven Processing: Responding to specific triggers instantly
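The filtering and event-driven functions listed above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the `Reading` type, the `-999.0` failed-read sentinel, and the 80-degree threshold are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Reading:
    sensor_id: str
    value: float  # assumed unit: degrees Celsius


def process_stream(
    readings: Iterable[Reading],
    is_relevant: Callable[[Reading], bool],
    is_event: Callable[[Reading], bool],
    on_event: Callable[[Reading], None],
) -> List[Reading]:
    """Filter readings on the fly and fire a handler for trigger events."""
    kept = []
    for reading in readings:
        if not is_relevant(reading):
            continue  # real-time filtering: drop irrelevant data immediately
        kept.append(reading)
        if is_event(reading):
            on_event(reading)  # event-driven processing: react on the device
    return kept


alerts: List[str] = []
stream = [
    Reading("t1", 21.5),
    Reading("t1", -999.0),  # hypothetical sentinel for a failed read
    Reading("t1", 85.2),
]
kept = process_stream(
    stream,
    is_relevant=lambda r: r.value > -100.0,
    is_event=lambda r: r.value > 80.0,
    on_event=lambda r: alerts.append(f"{r.sensor_id} overheating: {r.value}"),
)
```

Passing the predicates in as functions keeps the loop generic, so the same code could serve a camera, a vibration sensor, or a thermometer with different rules.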

4. Connectivity and Networking

Connectivity components enable edge devices to communicate with each other and with central servers or the cloud when necessary. Reliable and fast network connections are crucial for synchronizing data, updating AI models, and ensuring seamless integration with broader systems.

Technologies:

- Wireless Protocols: 5G, Wi-Fi, Bluetooth, Zigbee

- Wired Connections: Ethernet, USB

- Network Protocols: MQTT, CoAP for efficient communication

5. Local Data Storage

Local storage solutions are essential for temporarily or permanently storing data processed at the edge. This storage capability allows devices to manage data without relying on constant cloud connectivity, ensuring data availability and reducing latency.

Storage Options:

- Solid-State Drives (SSDs): Fast and reliable storage

- Flash Memory: Compact and energy-efficient

- Local Databases: SQLite, LevelDB for structured data storage
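As one possible illustration of local storage, the sketch below uses Python's built-in `sqlite3` module to buffer readings on the device and track which ones have been uploaded. The table layout and the `synced` flag are assumptions made for this example, not a prescribed schema.

```python
import sqlite3
import time


def open_buffer(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a local buffer for sensor readings."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "ts REAL NOT NULL, "
        "sensor TEXT NOT NULL, "
        "value REAL NOT NULL, "
        "synced INTEGER NOT NULL DEFAULT 0)"
    )
    return conn


def store(conn: sqlite3.Connection, sensor: str, value: float) -> None:
    """Persist one reading locally; no network access is needed."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO readings (ts, sensor, value) VALUES (?, ?, ?)",
            (time.time(), sensor, value),
        )


def pending(conn: sqlite3.Connection) -> list:
    """Return readings that have not yet been uploaded."""
    return conn.execute(
        "SELECT id, sensor, value FROM readings WHERE synced = 0 ORDER BY id"
    ).fetchall()


def mark_synced(conn: sqlite3.Connection, ids: list) -> None:
    """Flag readings as uploaded once connectivity has returned."""
    with conn:
        conn.executemany(
            "UPDATE readings SET synced = 1 WHERE id = ?", [(i,) for i in ids]
        )


conn = open_buffer()  # in-memory here; a real device would pass a file path
store(conn, "temp", 21.5)
store(conn, "temp", 22.0)
rows = pending(conn)
mark_synced(conn, [rows[0][0]])
remaining = pending(conn)
```

Keeping a `synced` flag rather than deleting rows lets the device retry uploads safely after a connection drops.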

6. Power Management

Efficient power management ensures that edge devices operate reliably, especially in remote or mobile environments. This component focuses on optimizing energy consumption and utilizing power-efficient hardware to extend device uptime.

Strategies:

- Battery Optimization: Extending battery life through efficient power use

- Energy-Efficient Design: Low-power hardware and components

- Power Harvesting: Utilizing ambient energy sources like solar or kinetic energy

7. Security and Privacy

Security components protect data and AI processes at the edge from unauthorized access and cyber threats. Ensuring data privacy is critical, particularly when handling sensitive information locally on the device.

Security Measures:

- Data Encryption: Protecting data both at rest and in transit

- Secure Boot: Ensuring only trusted software runs on the device

- Access Controls: Implementing authentication and authorization mechanisms

8. Software and Middleware

Middleware serves as an intermediary layer that manages communication between hardware and applications. It facilitates data flow, device management, and the deployment of AI models, ensuring seamless operation of Edge AI systems.

Components:

- Operating Systems: Optimized for edge computing (e.g., Linux-based OS)

- Device Management Software: Tools for monitoring and maintaining devices

- Middleware Platforms: Edge computing frameworks like Kubernetes for orchestration

9. Development and Deployment Tools

These tools support the creation, testing, and deployment of Edge AI applications. They include software development kits (SDKs), integrated development environments (IDEs), and platforms tailored for edge computing, enhancing productivity and ensuring compatibility across different devices.

Tools:

- AI Frameworks: TensorFlow Lite, PyTorch Mobile

- Deployment Platforms: AWS IoT Greengrass, Azure IoT Edge

- Monitoring Tools: Tools for tracking performance and managing updates

The Need for Edge AI in Smart Devices

Limitations of Cloud-Based Processing

1. Latency Issues

Cloud-based processing often involves sending data from a device to a remote server, where it is processed and then sent back to the device. This round-trip can introduce significant latency, making it unsuitable for applications that require real-time responses, such as autonomous driving or industrial automation. Delays in decision-making could lead to safety risks or operational inefficiencies.
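To make the safety implication concrete, a quick calculation shows how far a vehicle travels while waiting for a decision. The 100 ms cloud round trip and 10 ms on-device figure below are illustrative assumptions, not measurements.

```python
def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Metres travelled while waiting delay_ms for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * delay_ms / 1000


# Assumed, illustrative latencies for a car moving at 100 km/h.
cloud_m = distance_during_delay(100, 100)  # about 2.78 m
edge_m = distance_during_delay(100, 10)    # about 0.28 m
```

Under these assumptions, the cloud round trip costs roughly two and a half metres of additional travel before the vehicle can react.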

2. Bandwidth Constraints

Sending large volumes of data to the cloud for processing can strain network bandwidth, especially in environments with many connected devices or in remote areas with limited connectivity. High data transfer requirements can lead to network congestion and increased costs, limiting the scalability of cloud-based solutions.

3. Privacy Concerns

Transmitting sensitive data over the internet to a cloud server poses privacy risks, as data can be intercepted or accessed by unauthorized parties. Additionally, storing personal or confidential data on remote servers increases the risk of data breaches, making it difficult to comply with stringent data protection regulations.

Benefits of Edge AI

1. Real-Time Processing

Edge AI processes data directly on the device or close to the source, significantly reducing latency. This capability is crucial for applications like video surveillance, where immediate action is required based on real-time data analysis. By processing data locally, devices can make split-second decisions without relying on a remote server.

2. Reduced Data Transfer

With Edge AI, the system processes most data locally and transmits only the most relevant information to the cloud. This approach conserves bandwidth and lowers operational costs by reducing the amount of data sent over the network.
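A rough sketch of this idea: instead of uploading every raw sample, the device could send a compact summary of each time window. The window size, the JSON encoding, and the choice of statistics are assumptions for illustration.

```python
import json
import statistics


def summarize(window: list) -> dict:
    """Reduce a window of raw samples to a few summary statistics."""
    return {
        "count": len(window),
        "min": min(window),
        "max": max(window),
        "mean": round(statistics.fmean(window), 2),
    }


# 600 synthetic samples, e.g. one minute of data at an assumed 10 Hz.
raw_window = [20.0 + 0.01 * i for i in range(600)]
summary = summarize(raw_window)

raw_bytes = len(json.dumps(raw_window).encode())
summary_bytes = len(json.dumps(summary).encode())
```

Comparing `raw_bytes` with `summary_bytes` gives a feel for the bandwidth saved, at the cost of losing per-sample detail in the cloud.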

3. Enhanced Privacy and Security

By keeping data processing and storage local, Edge AI reduces the risk of data exposure. Sensitive information stays on the device, which limits the chance of interception during transmission or compromise in a cloud-based breach. This is crucial in industries like healthcare, where patient data must be protected.

4. Improved Reliability

Edge AI systems are less dependent on constant internet connectivity, making them more reliable in environments with unstable or limited network access. Devices can continue to operate and process data even when offline, ensuring consistent performance and reducing the risk of downtime in critical applications.

Edge AI Technologies and Frameworks

A. Hardware Solutions

Edge AI Chips and Processors

NVIDIA Jetson, Google Edge TPU, and Intel Movidius are specialized edge AI chips and processors designed to handle AI workloads directly on devices. These processors are optimized to consume low power while providing enough computational power to run AI models efficiently.

Key Features:

- Low power consumption

- High efficiency for AI workloads

- Real-time processing capabilities

FPGA and ASIC Implementations

Field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs) are custom hardware options that execute edge AI tasks energy-efficiently. FPGAs are adaptable and can be reprogrammed for different AI models, while ASICs are fixed-function chips that excel in performance and efficiency for specific computations, making them well suited to mass production.

Advantages:

- Customizable processing capabilities (FPGAs)

- High efficiency and low latency (ASICs)

- Suitable for specific AI workloads

B. Software Frameworks

TensorFlow Lite

TensorFlow Lite is a lightweight version of Google’s TensorFlow framework, built for low-power targets such as mobile and edge devices. It lets developers run machine learning models efficiently without high-powered hardware. TensorFlow Lite runs on many platforms, including Android, iOS, and embedded devices, which makes it a good candidate for edge AI applications.

Benefits:

- Optimized for mobile and embedded devices

- Supports a wide range of hardware platforms

- Easy integration with existing TensorFlow models

ONNX Runtime

ONNX Runtime is a cross-platform, high-performance scoring engine for Open Neural Network Exchange (ONNX) models. Developed by Microsoft, it supports models trained in various frameworks like PyTorch, TensorFlow, and Scikit-learn. ONNX Runtime is optimized for performance on edge devices, making it a preferred choice for deploying AI models in resource-constrained environments.

Key Features:

- Cross-platform support

- High performance and low latency

- Compatible with multiple AI frameworks

Edge Impulse

Edge Impulse is a platform designed to simplify the development and deployment of machine learning models on edge devices. It provides tools for collecting data, training models, and deploying them on edge hardware like microcontrollers and FPGAs. Edge Impulse is particularly useful for IoT applications, enabling developers to create custom AI models without extensive expertise in machine learning.

Highlights:

- User-friendly interface for model training and deployment

- Supports a variety of edge devices

- Ideal for IoT and embedded applications

C. Edge AI Platforms and Services

Edge AI platforms and services, such as the AWS IoT Greengrass and Azure IoT Edge deployment platforms mentioned earlier, manage AI workloads across fleets of edge devices. They handle deploying models, monitoring devices, and coordinating with cloud services, so teams can run time-sensitive inference locally while keeping heavier processing in the cloud.

Core Features:

- Centralized management and deployment of AI models

- Integration with cloud services for hybrid processing

- Scalable solutions for large-scale edge deployments

What Are the Important Applications of Edge AI?

- Autonomous Vehicles

By analyzing sensor data, such as camera images and lidar readings, inside the car, Edge AI allows autonomous cars to make decisions in real time. This enables quick maneuvers, like braking or steering, which are essential for navigation and safety in dynamic conditions.

- Healthcare

In the healthcare industry, Edge AI powers medical devices for real-time patient monitoring and diagnostics. For example, wearable technology instantly assesses vital signs and notifies healthcare providers of any irregularities without sending data to the cloud.

- Smart Cities

By optimizing public safety, energy distribution, and traffic management systems, Edge AI plays a crucial role in smart cities. Traffic cameras equipped with Edge AI can analyze vehicle flow in real time and adjust traffic signals without relying on cloud processing, which helps reduce congestion.

- Industrial IoT (IIoT)

Through the analysis of sensor data from on-site machines, Edge AI facilitates quality control and predictive maintenance in industrial environments. This allows businesses to resolve problems before they lead to failures, which decreases downtime and boosts efficiency.

- Retail

Edge AI enhances the retail experience by enabling personalized in-store shopping through real-time data analysis. Smart mirrors and sensors can provide customized product recommendations and streamline checkout processes without sending customer data to remote servers.

- Security and Surveillance

By processing video feeds locally, Edge AI enhances security systems by enabling the quick identification of suspicious activity. This not only speeds up reaction times but also ensures that private video footage is not sent over the internet.

- Telecommunications

Edge AI in telecoms processes data at the network’s edge to maximize network performance. This makes it possible to use bandwidth more effectively and respond to network demands more quickly, guaranteeing more dependable and seamless communication services.

Implementing Edge AI: Best Practices

1. Choosing the Right Hardware

Selecting the appropriate hardware is crucial for the success of Edge AI deployment. The hardware should be powerful enough to run AI models efficiently while being energy-efficient and compatible with the specific requirements of your application. Considerations include processing power, form factor, and environmental robustness.

Key Considerations:

- Processing Power: Choose hardware with sufficient computational capability (e.g., GPUs, TPUs).

- Energy Efficiency: Opt for low-power chips to extend battery life in mobile or remote devices.

- Environmental Suitability: Ensure the hardware can withstand operational conditions (e.g., temperature, vibration).

2. Optimizing AI Models for Edge Deployment

AI models designed for edge deployment need to be optimized to perform efficiently on limited resources. This involves reducing model size and complexity while maintaining accuracy. Techniques such as model quantization, pruning, and using lightweight architectures like MobileNet are essential for optimizing models for edge environments.

Optimization Techniques:

- Quantization: Reduces the precision of the model’s weights to lower memory usage.

- Pruning: Removes unnecessary parameters to reduce model size.

- Lightweight Architectures: Utilize models specifically designed for edge devices (e.g., MobileNet, SqueezeNet).
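The first two techniques can be illustrated with a toy example in plain Python. This is a simplified sketch: it applies symmetric int8 quantization and magnitude-based pruning to a short list of made-up weights, whereas real toolchains work on whole tensors and typically calibrate against sample data.

```python
def quantize_int8(weights: list) -> tuple:
    """Symmetric linear quantization: floats to int8 values plus a scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list, scale: float) -> list:
    """Recover approximate float weights from the int8 representation."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights: list, fraction: float) -> list:
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * fraction)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(by_magnitude[:k])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


weights = [0.82, -0.03, 0.40, -1.27, 0.01, 0.65]  # made-up example weights

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
pruned = prune_by_magnitude(weights, 0.5)
```

Each quantized weight fits in one byte instead of four for a 32-bit float, and the rounding error per weight is at most half of `scale`. Pruning trades a different kind of accuracy loss for sparsity, which is why both techniques usually need validation against a test set.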

3. Ensuring Data Privacy and Security

Protecting data processed at the edge is critical, as these devices often handle sensitive information. Implement robust encryption methods, secure boot processes, and access controls to safeguard data. Additionally, regular updates and security patches are necessary to protect against evolving threats.

Security Measures:

- Data Encryption: Encrypt data both at rest and in transit to prevent unauthorized access.

- Secure Boot: Ensure only trusted software runs on edge devices.

- Access Controls: Implement authentication and authorization protocols.
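As a small illustration of the "only trusted software" idea, the sketch below checks a model file's SHA-256 digest before it is loaded. This is only an analogy for the integrity-check step: real secure boot relies on a hardware root of trust and cryptographic signatures, and a bare hash is useful only if the expected digest arrives through a trusted channel. The byte strings are placeholders.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of an artifact."""
    return hashlib.sha256(data).hexdigest()


def is_trusted(artifact: bytes, expected_digest: str) -> bool:
    """Compare digests in constant time to avoid timing side channels."""
    return hmac.compare_digest(sha256_hex(artifact), expected_digest)


model_bytes = b"hypothetical model weights"  # placeholder for a model file
expected = sha256_hex(model_bytes)           # assumed to come from a trusted source
tampered = model_bytes + b"\x00"

load_original = is_trusted(model_bytes, expected)
load_tampered = is_trusted(tampered, expected)
```

A device following this pattern would refuse to load any artifact for which the check returns `False`.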

4. Balancing Edge and Cloud Processing

Striking the right balance between edge and cloud processing is key to maximizing the efficiency and effectiveness of your Edge AI system. While the edge handles real-time, critical tasks, the cloud can be used for more complex analyses and model training. This hybrid approach ensures optimal performance and resource utilization.

Balancing Strategies:

- Real-Time Processing at the Edge: Handle time-sensitive tasks locally to reduce latency.

- Cloud for Intensive Tasks: Offload heavy computations and long-term storage to the cloud.

- Data Synchronization: Ensure seamless data transfer between edge devices and the cloud for consistency.
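One way to picture these strategies is a simple placement rule that decides, per task, whether to run at the edge or in the cloud. The `Task` fields, the capacity units, and the thresholds below are hypothetical; a real system would weigh more factors, such as cost and data sensitivity.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    latency_budget_ms: float  # how long the caller can wait for a result
    compute_cost: float       # arbitrary units, assumed for illustration


def choose_target(
    task: Task,
    cloud_round_trip_ms: float,
    edge_capacity: float,
    online: bool = True,
) -> str:
    """Return "edge" or "cloud" for a task under a simple placement rule."""
    # Offline, or a deadline shorter than the network round trip:
    # the cloud cannot answer in time, so the task must run locally.
    if not online or task.latency_budget_ms < cloud_round_trip_ms:
        return "edge"
    # Too heavy for the device and the deadline allows it: offload.
    if task.compute_cost > edge_capacity:
        return "cloud"
    return "edge"


detect = Task("obstacle detection", latency_budget_ms=20, compute_cost=5)
retrain = Task("model retraining", latency_budget_ms=60_000, compute_cost=500)

detect_target = choose_target(detect, cloud_round_trip_ms=120, edge_capacity=10)
retrain_target = choose_target(retrain, cloud_round_trip_ms=120, edge_capacity=10)
offline_target = choose_target(retrain, 120, 10, online=False)
```

Note that this rule keeps a heavy task on the device when the deadline is tight or the network is down; a real deployment might instead defer it or fall back to a smaller model.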

Challenges and Considerations in Implementing Edge AI

1. Hardware Limitations

Edge AI requires hardware that is both powerful and compact enough to fit into edge devices like cameras, sensors, and mobile phones. These devices have limited computational capabilities compared to cloud servers, which restricts the complexity of the AI models that can be deployed.

2. Power Consumption

Edge devices are typically battery-powered or have limited energy resources, making power consumption a critical consideration. Running AI models locally demands significant computational resources, which can drain batteries quickly. Designing energy-efficient hardware and optimizing AI models to reduce power usage without compromising performance is a key challenge.
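A back-of-the-envelope estimate shows why duty cycling matters. The battery capacity and current draws below are assumed round numbers for illustration, not figures for any specific device.

```python
def average_current_ma(active_ma: float, sleep_ma: float, duty_cycle: float) -> float:
    """Average draw when the device is active for duty_cycle of the time."""
    return active_ma * duty_cycle + sleep_ma * (1 - duty_cycle)


def battery_life_hours(capacity_mah: float, avg_ma: float) -> float:
    """Idealized battery life; ignores self-discharge and conversion losses."""
    return capacity_mah / avg_ma


# Assumed: 2000 mAh battery, 200 mA during inference, 1 mA asleep.
always_on = battery_life_hours(2000, average_current_ma(200, 1, 1.0))
duty_cycled = battery_life_hours(2000, average_current_ma(200, 1, 0.05))
```

Under these assumptions, running inference continuously drains the battery in about 10 hours, while waking for inference 5% of the time stretches it to roughly 183 hours.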

3. Model Optimization

AI models must be tailored to run on edge devices with limited resources. This means shrinking the model with techniques such as quantization and pruning so that it delivers results without excessive computation. Finding a configuration that preserves accuracy within those resource constraints is painstaking and requires careful tuning.

4. Security and Privacy Concerns

Implementing Edge AI involves processing and storing data locally, which raises security and privacy concerns. Devices must be equipped with robust encryption and security protocols to protect sensitive data from unauthorized access. Additionally, ensuring that AI models themselves are secure from tampering or exploitation is a critical consideration.

5. Scalability and Management

Deploying and managing AI across a large number of edge devices presents significant scalability challenges. Updates to AI models, monitoring device performance, and managing data synchronization across a distributed network can be complex and resource-intensive. Solutions must be developed to streamline these processes to ensure seamless operation at scale.
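One common way to tame fleet-wide updates is a staged rollout, where a new model reaches a small percentage of devices first. The sketch below is one possible approach, assuming each device has a stable string ID: it hashes the ID into a bucket from 0 to 99 so that the same devices stay in the early group as the percentage grows.

```python
import hashlib


def rollout_bucket(device_id: str) -> int:
    """Map a device ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(device_id.encode()).digest()
    return int.from_bytes(digest[:4], "big") % 100


def should_update(device_id: str, rollout_percent: int) -> bool:
    """True if this device falls inside the current rollout percentage."""
    return rollout_bucket(device_id) < rollout_percent


fleet = [f"device-{i:04d}" for i in range(1000)]  # hypothetical device IDs
canary = [d for d in fleet if should_update(d, 10)]
half = [d for d in fleet if should_update(d, 50)]
```

Because the bucket depends only on the ID, no central list of "canary" devices needs to be stored or synchronized.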

Future Trends in Edge AI for IoT Devices

1. AI-Specific Hardware Advancements

The future of Edge AI will see continued advancements in AI-specific hardware, such as next-generation GPUs, TPUs, and dedicated AI chips. These components will become more powerful, energy-efficient, and compact, enabling more sophisticated AI models to be deployed at the edge. This trend will allow for the development of even smarter and more capable edge devices.

2. 5G and Edge AI Integration

The integration of 5G technology with Edge AI is set to revolutionize how IoT devices operate. With 5G’s ultra-low latency and high bandwidth, edge devices can communicate more efficiently, enabling faster data processing and real-time decision-making. This will enhance applications such as autonomous vehicles, smart cities, and industrial automation, where speed and reliability are paramount.

3. Federated Learning

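Federated learning is an emerging approach that trains AI models across multiple devices without centralizing the data. Each device trains on its own local data and shares only model updates, which are combined into a shared model, so raw data never leaves the device.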
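A minimal sketch of the aggregation step, assuming the commonly used federated averaging rule: each device sends its locally trained weights along with the number of samples it trained on, and the server computes a sample-weighted average. The weight vectors and sample counts are made up for illustration.

```python
def federated_average(client_updates: list) -> list:
    """Sample-weighted average of client weight vectors.

    client_updates is a list of (weights, num_samples) pairs, where every
    weights list has the same length.
    """
    total_samples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(dim)
    ]


# Two hypothetical devices; only weights and counts are shared, not raw data.
updates = [
    ([1.0, 2.0], 10),
    ([3.0, 4.0], 30),
]
global_weights = federated_average(updates)
```

The device with more training samples pulls the average toward its weights, giving `[2.5, 3.5]` here rather than the unweighted midpoint.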

4. Neuromorphic Computing

Neuromorphic computing, inspired by the human brain’s neural architecture, represents the next frontier in Edge AI. These processors mimic the way neurons work, allowing for more efficient and adaptive AI processing. Neuromorphic chips can perform complex tasks with lower power consumption, making them ideal for edge devices that need to operate continuously and autonomously.

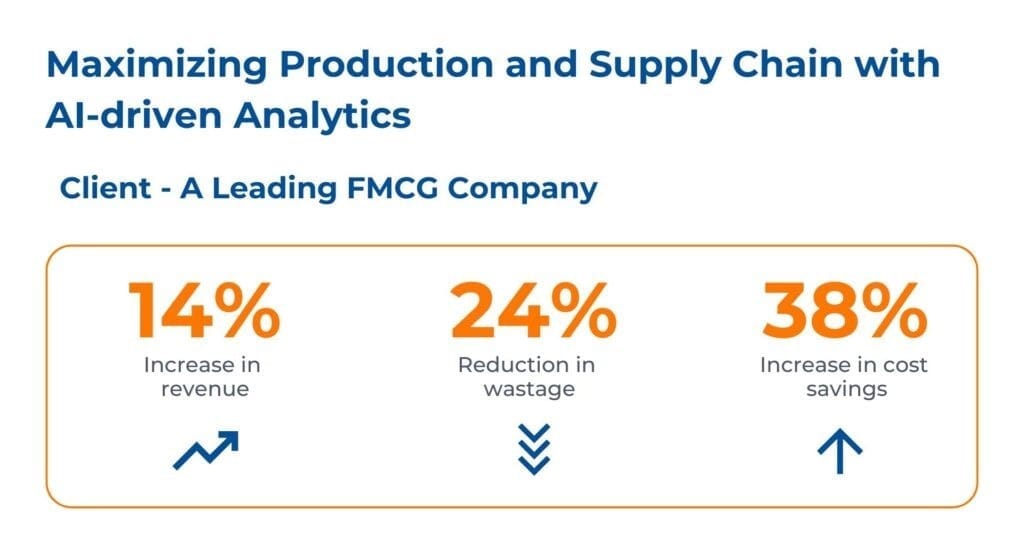

Optimizing Production and Supply Chain through AI Implementation

The client is a frontrunner in the USA’s perishable food production domain.

The client faced challenges with inaccurate production due to a lack of AI implementation and sole reliance on historical data for demand forecasting, leading to customer dissatisfaction. Additionally, production planning and scheduling issues across vendors caused delays, quality problems, and revenue loss.

Kanerika solved their challenges by:

- Implementing AI and ML algorithms, factoring in weather and seasonal changes, to improve demand accuracy and enhance decision-making.

- Utilizing AI in production planning to reduce wastage and maximize revenues.

- Integrating an AI-based demand forecasting engine with the client’s ERP system, enabling seamless real-time decision-making.

Transform Your Business with Kanerika’s Efficient Edge AI Implementation Services

Kanerika’s expertise in data and AI positions us as a leader in delivering cutting-edge solutions tailored to your business needs. We leverage the latest AI tools and technologies, crafting solutions like Generative AI, Predictive AI, or Edge AI based on your specific requirements. Our approach is designed to address unique business challenges, enhancing operations, and driving efficiency and growth.

By choosing Kanerika, you partner with a team that understands the intricacies of AI and data integration. We don’t just implement AI; we ensure it aligns with your business goals, providing measurable results. Whether you need real-time decision-making capabilities, advanced analytics, or streamlined processes, our solutions are engineered to deliver. Trust Kanerika to bring innovation, reliability, and expertise to your AI journey, helping your business achieve new levels of success.

FAQs

What is edge AI?

Edge AI brings the power of artificial intelligence directly to the device generating the data (think smart cameras or phones, not a distant cloud server). This reduces latency, enhances privacy by keeping data local, and lowers bandwidth needs. It’s about processing intelligence where it’s created, making systems faster and more responsive. Essentially, it’s AI at the source, not in the cloud.

What is Microsoft edge AI?

Microsoft edge AI isn’t a single product, and it should not be confused with the Microsoft Edge browser. The term generally refers to Microsoft’s tools for running AI on local devices instead of cloud servers, such as Azure IoT Edge and ONNX Runtime. The goal is faster, more private processing of AI tasks like image recognition on the device itself, without sending all your data to the cloud.

What is the difference between cloud AI and edge AI?

Cloud AI uses powerful remote servers for processing, offering scalability but relying on network connectivity and potentially causing latency. Edge AI processes data locally on devices like smartphones or IoT sensors, providing faster responses and offline functionality, but with limitations on processing power. Essentially, it’s the difference between processing in the cloud versus processing at the “edge” of the network, closer to the data source. The best choice depends on the application’s needs for speed, connectivity, and processing power.

What is the difference between IoT and edge AI?

IoT is about connecting devices to the internet to collect data; think of it as the “nervous system” of a smart home. Edge AI processes that data *locally* on the device itself, rather than sending it to the cloud, making responses faster and more efficient. Essentially, IoT provides the data, while edge AI provides the immediate, intelligent action based on that data.

How much does edge AI cost?

The cost of edge AI solutions varies wildly. It depends heavily on the hardware needed (from simple sensors to powerful embedded systems), the software complexity (custom models vs. off-the-shelf), and the level of integration required. Think of it like building a house – a tiny shed costs far less than a mansion. Get multiple quotes to compare.

What is edge AI in banking?

Edge AI in banking means bringing artificial intelligence processing closer to the data source – your phone or branch terminal, for example – instead of relying solely on distant servers. This allows for faster, more efficient processing of transactions and fraud detection, minimizing latency and enhancing security. Essentially, it’s AI that acts locally and instantly, improving the customer experience and reducing operational costs.

What is the scope of edge AI?

Edge AI’s scope encompasses processing data closer to its source – think sensors in a factory or cameras in a smart city. This contrasts with cloud-based AI, offering faster processing, reduced latency, and enhanced privacy by minimizing data transfer. Its applications span diverse fields, from predictive maintenance to real-time object detection, essentially wherever immediate action based on local data is crucial. Ultimately, it’s about bringing the intelligence to the edge of the network.

How to access edge AI?

Accessing edge AI depends on your needs. For developers, it involves using specialized hardware (like AI accelerators) and software frameworks (like TensorFlow Lite) to build and deploy models. For users, it’s about utilizing devices and applications that *already* incorporate edge AI – smart cameras, IoT devices, or apps with on-device processing. Essentially, it’s either building it or benefiting from it, depending on your technical skillset.

What are the disadvantages of edge AI?

Edge AI, while powerful, faces limitations. Data privacy concerns arise from processing sensitive information locally, potentially vulnerable to breaches. The computational power at the edge is often restricted, limiting the complexity of models you can deploy. Finally, maintaining and updating numerous edge devices can be a logistical nightmare, requiring significant ongoing management.

What is edge software used for?

Edge software runs directly on devices like sensors or cameras, processing data locally instead of sending it to a central server. This speeds up responses, reduces bandwidth needs, and improves privacy by keeping sensitive information closer to its source. It’s crucial for applications needing immediate action or dealing with limited connectivity.

What are the challenges of edge AI?

Edge AI faces hurdles in balancing processing power with limited device resources like battery life and memory. Data security and privacy are paramount concerns when processing sensitive information locally. Furthermore, deploying and maintaining AI models across diverse edge devices requires robust and scalable management solutions. Finally, achieving consistent accuracy across varying hardware and network conditions presents a significant challenge.

What are the use cases of edge AI?

Edge AI shines where immediate action is needed and connectivity is limited or unreliable. Think self-driving cars reacting instantly to obstacles, industrial robots performing precise tasks without network delays, or remote medical devices making diagnoses without internet access. Essentially, it brings the power of AI directly to the data source for faster, more efficient, and often more private processing.

What is the edge AI?

Edge AI refers to artificial intelligence that processes data and makes decisions at the edge of a network, on devices such as smartphones, smart home devices, or industrial sensors. Processing locally reduces latency and improves real-time decision-making, which enhances device performance and user experience.

Who is the CEO of edge AI?

There is no single CEO of edge AI, as it’s a technology implemented by various companies. Leading edge AI companies include NVIDIA, Qualcomm, and Google, with CEOs like Jensen Huang, Cristiano Amon, and Sundar Pichai driving innovation in artificial intelligence, machine learning, and IoT device management.

What is the difference between AI and edge AI?

Edge AI differs from traditional AI as it processes data locally on devices, reducing latency and improving real-time decision-making for applications like smart home devices, industrial automation, and autonomous vehicles, enabling more efficient and secure data analysis and machine learning capabilities.

What are examples of edge AI?

Examples of edge AI include smart home security systems, autonomous vehicles, and industrial automation devices, which process data locally to enable real-time decision-making, improving performance, and reducing latency, ultimately enhancing user experience and operational efficiency in various industries.

Is edge AI the future?

Edge AI is likely to play a growing role in device intelligence because it enables real-time data processing and decision-making on the device itself. It is driving innovation in areas like IoT, robotics, and smart homes, and it can improve both customer experience and operational efficiency.