Weak data quality and poor observability can have serious consequences, as recent high-profile incidents show. In an incident that came to light in February 2025, Citigroup mistakenly credited a customer’s account with $81 trillion instead of the intended $280. Although the error was identified and reversed within hours without financial loss, it exposed significant operational vulnerabilities in the bank’s systems.

Similarly, a UK Treasury committee investigation revealed that major banks and building societies experienced over 33 days of IT failures between January 2023 and February 2025. These outages disrupted millions of customers’ access to banking services, emphasizing the need for enhanced data observability to prevent such occurrences.

These events highlight the growing need for both data quality and observability to ensure accurate, reliable, and continuously monitored data in modern business operations.

What is Data Quality?

Data quality is the degree to which data is accurate, complete, consistent, reliable, and timely. It determines whether data is fit for use in decision-making, reporting, and analytics. Poor data quality can lead to financial losses, incorrect insights, and compliance risks.

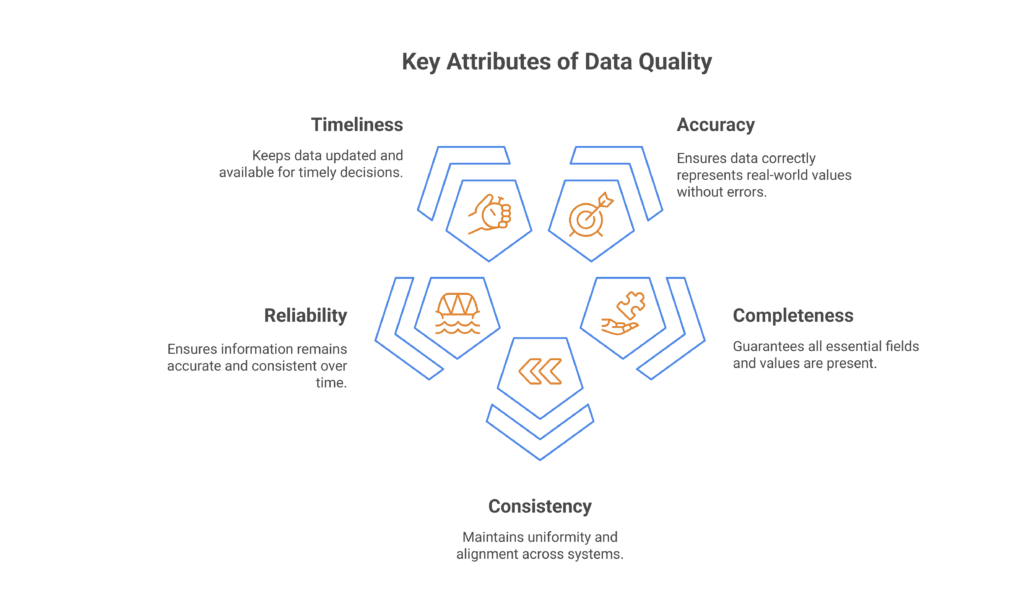

Key Attributes of Data Quality

- Accuracy: Data correctly represents real-world values without errors.

- Completeness: All essential fields and values are present without missing information.

- Consistency: Data remains uniform and aligned across different systems and sources.

- Reliability: Information can be trusted to stay accurate and consistent over time.

- Timeliness: Data is updated and available when required for decision-making.
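
Several of these attributes can be measured directly. The following is a minimal sketch, assuming records are held as Python dictionaries; the field names (`id`, `email`, `updated_at`), the sample data, and the one-day timeliness window are illustrative assumptions, not part of any specific tool.

```python
from datetime import datetime, timedelta

def completeness(records, required_fields):
    """Share of records in which every required field is present and non-empty."""
    if not records:
        return 0.0
    complete = sum(
        all(rec.get(f) not in (None, "") for f in required_fields)
        for rec in records
    )
    return complete / len(records)

def duplicate_count(records, key_fields):
    """Number of records whose key repeats one that was already seen."""
    seen = set()
    duplicates = 0
    for rec in records:
        key = tuple(rec.get(f) for f in key_fields)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return duplicates

def timeliness(records, ts_field, now, max_age):
    """Share of records updated within max_age of now."""
    if not records:
        return 0.0
    fresh = sum(now - rec[ts_field] <= max_age for rec in records)
    return fresh / len(records)

# Hypothetical sample data for illustration only.
now = datetime(2025, 3, 1, 12, 0)
customers = [
    {"id": 1, "email": "a@example.com", "updated_at": now - timedelta(hours=2)},
    {"id": 2, "email": "", "updated_at": now - timedelta(days=3)},
    {"id": 1, "email": "a@example.com", "updated_at": now - timedelta(hours=2)},
    {"id": 3, "email": "c@example.com", "updated_at": now - timedelta(hours=30)},
]

print("completeness:", completeness(customers, ["id", "email"]))
print("duplicates:", duplicate_count(customers, ["id"]))
print("timeliness:", timeliness(customers, "updated_at", now, timedelta(days=1)))
```

Accuracy and reliability are harder to score this way, because they usually require comparison against a trusted reference source or tracking over time.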

What is Data Observability?

Data observability provides real-time monitoring of data pipelines to keep accurate, reliable data flowing smoothly. It enables teams to detect, troubleshoot, and resolve issues before they affect business operations or decision-making. By continuously tracking data health, observability minimizes disruptions and improves trust in data systems.

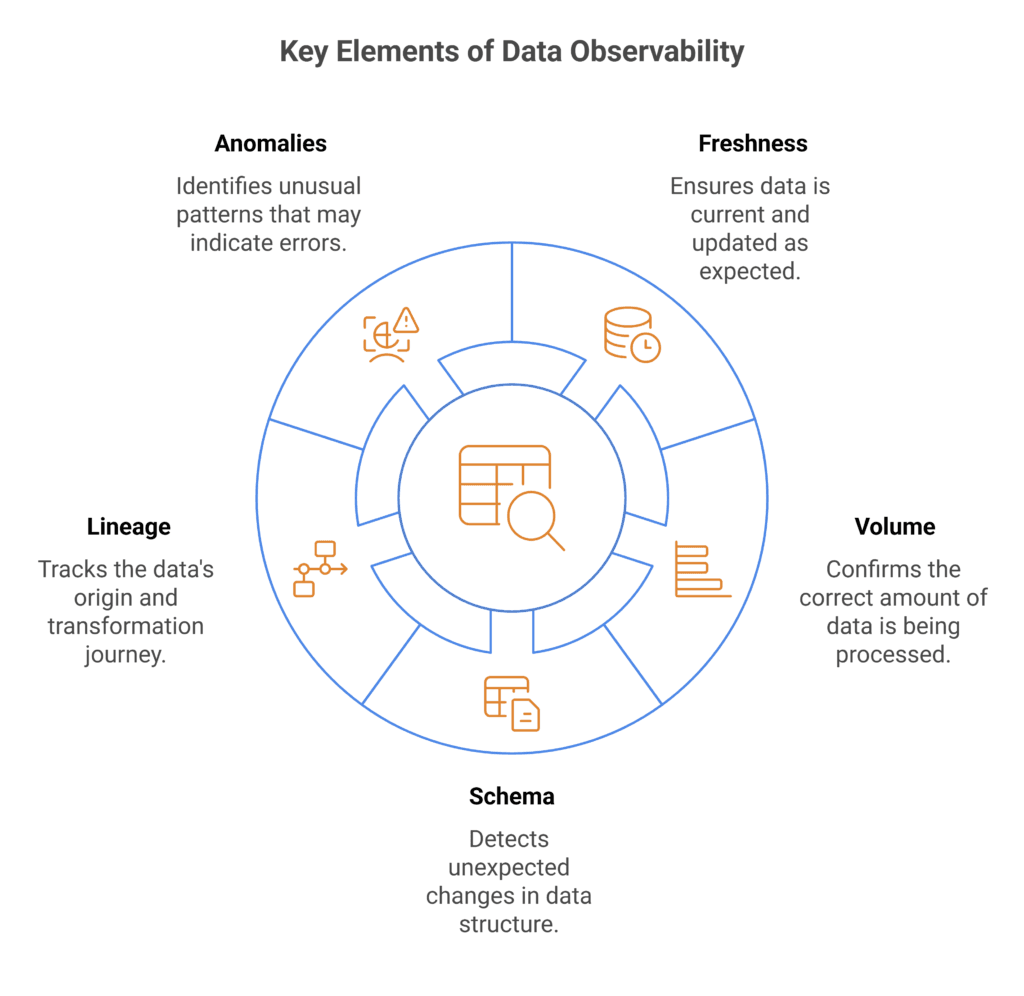

Key Elements of Data Observability

- Freshness: Ensures data is current and updated as expected.

- Volume: Confirms the correct amount of data is being processed.

- Schema: Detects unexpected changes in data structure.

- Lineage: Tracks the data’s origin and transformation journey.

- Anomalies: Identifies unusual patterns that may indicate errors.
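
The freshness, volume, and schema elements lend themselves to simple automated checks. Below is a minimal sketch, assuming each batch arrives as a list of dictionaries; the function names, thresholds, and column names are hypothetical and chosen only for illustration.

```python
from datetime import datetime, timedelta

def check_freshness(last_loaded_at, now, max_delay):
    """True when the latest load happened within the allowed delay."""
    return now - last_loaded_at <= max_delay

def check_volume(row_count, expected_min, expected_max):
    """True when the batch size falls inside the expected range."""
    return expected_min <= row_count <= expected_max

def check_schema(rows, expected_columns):
    """Return (missing, unexpected) column names observed across the batch."""
    observed = set()
    for row in rows:
        observed.update(row.keys())
    expected = set(expected_columns)
    return sorted(expected - observed), sorted(observed - expected)

# Hypothetical batch in which an upstream team renamed `currency`.
now = datetime(2025, 3, 1, 12, 0)
batch = [
    {"order_id": 1, "amount": 25.0, "currency_code": "USD"},
    {"order_id": 2, "amount": 40.0, "currency_code": "EUR"},
]

print("fresh:", check_freshness(now - timedelta(hours=5), now, timedelta(hours=1)))
print("volume ok:", check_volume(len(batch), 1_000, 5_000))
print("schema diff:", check_schema(batch, ["order_id", "amount", "currency"]))
```

In practice these checks would run on a schedule and feed an alerting channel; commercial observability platforms typically learn the thresholds from history rather than hard-coding them.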

Data Observability vs Data Quality: Key Differences

| Aspect | Data Quality | Data Observability |
| --- | --- | --- |
| Definition | Ensures data is accurate, complete, consistent, reliable, and timely. | Continuously monitors data pipelines to detect issues in real time. |
| Focus | Measures the condition of stored data. | Tracks how data moves through systems and identifies issues early. |
| Approach | Rule-based validation, cleansing, and enrichment. | Automated monitoring, anomaly detection, and alerts. |
| Timing | Applied after data is collected or stored. | Works in real-time to prevent or quickly resolve issues. |
| Scope | Concerned with the final quality of data at rest. | Observes data as it flows through pipelines, ensuring reliability. |
| Common Issues Detected | Duplicate records, missing values, incorrect formatting. | Schema changes, delays, unexpected data volume drops, and anomalies. |
| End Goal | Produces high-quality, usable data for analysis and operations. | Reduces data downtime by proactively identifying pipeline failures. |
| Best For | Batch data processing, regulatory compliance, structured data management. | Real-time analytics, machine learning pipelines, and large-scale data ecosystems. |
| Example Use Case | Cleaning and standardizing customer records before a marketing campaign. | Detecting a sudden drop in transaction records in an e-commerce system before it impacts sales reports. |

When to Choose Data Observability?

Data observability is ideal when continuous monitoring and real-time issue detection are needed to ensure data reliability. It helps teams detect problems before they impact business operations and ensures smooth data flow across multiple systems.

Choose observability when working with real-time data, AI/ML models, cloud-based storage, or event-driven architectures. It helps proactively detect and resolve issues, ensuring business-critical data remains accurate and actionable.

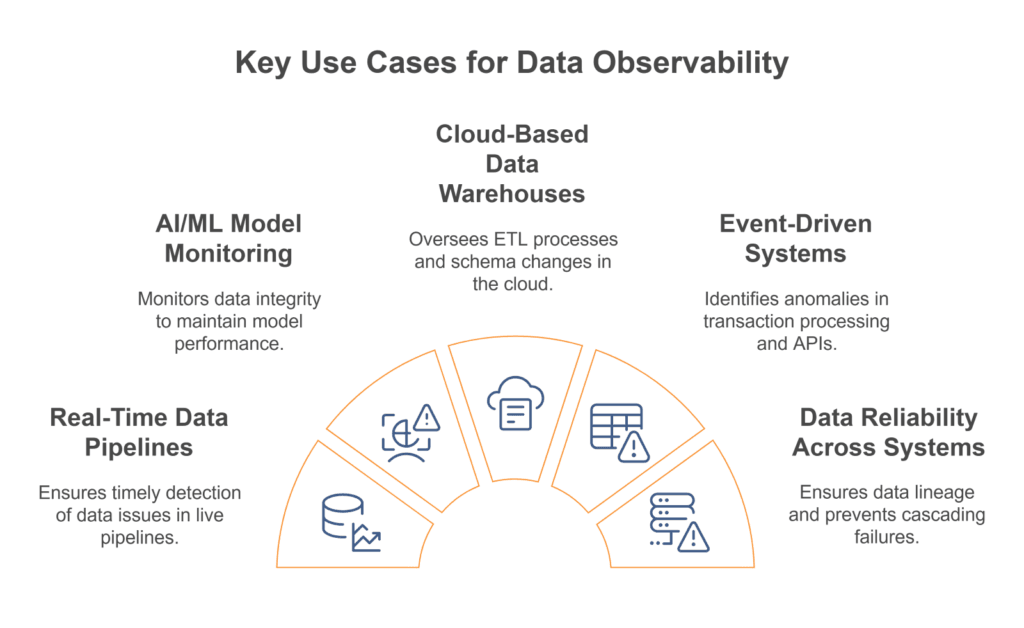

Key Use Cases for Data Observability

- Real-Time Data Pipelines: Essential for industries like e-commerce, finance, and IoT, where live data must be monitored for delays, missing records, or sudden changes.

- AI/ML Model Monitoring: Prevents data drift, pipeline failures, and missing values that could affect model performance and predictions.

- Cloud-Based Data Warehouses: Ensures ETL jobs run correctly, tracks schema changes, and prevents stale or incomplete data in distributed environments.

- Event-Driven Systems: Detects anomalies in transaction processing, API calls, or message queues, ensuring data flows as expected.

- Data Reliability Across Systems: Monitors data lineage, alerts teams to upstream failures, and prevents cascading issues across pipelines.
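
Lineage is what makes upstream failure alerts possible: once the dependencies between datasets are known, finding everything affected by a failure is a graph traversal. The sketch below assumes lineage is stored as a simple adjacency mapping; the dataset names are hypothetical.

```python
from collections import deque

# Hypothetical lineage: each dataset maps to the datasets built directly from it.
LINEAGE = {
    "raw_orders": ["clean_orders"],
    "raw_customers": ["clean_customers"],
    "clean_orders": ["daily_sales", "churn_features"],
    "clean_customers": ["churn_features"],
    "daily_sales": ["revenue_dashboard"],
    "churn_features": [],
    "revenue_dashboard": [],
}

def downstream_of(lineage, failed):
    """Breadth-first walk returning every dataset that depends on `failed`."""
    affected = []
    seen = {failed}
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:
                seen.add(child)
                affected.append(child)
                queue.append(child)
    return affected

print(downstream_of(LINEAGE, "raw_orders"))
```

A failure in `raw_orders` here would flag the cleaned table, the sales aggregate, the churn features, and the dashboard, which is the list a team would want to notify before stale numbers reach users.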

When to Choose Data Quality?

Data quality solutions are useful when accuracy, consistency, and compliance are critical. These solutions are intended to provide clean, standard, and trusted data for business operations, reporting, and analytics.

Choose data quality solutions when working with structured data whose accuracy matters for legal obligations, reporting, or business decisions. Doing so keeps data reliable, minimizes risk, and improves operational efficiency.

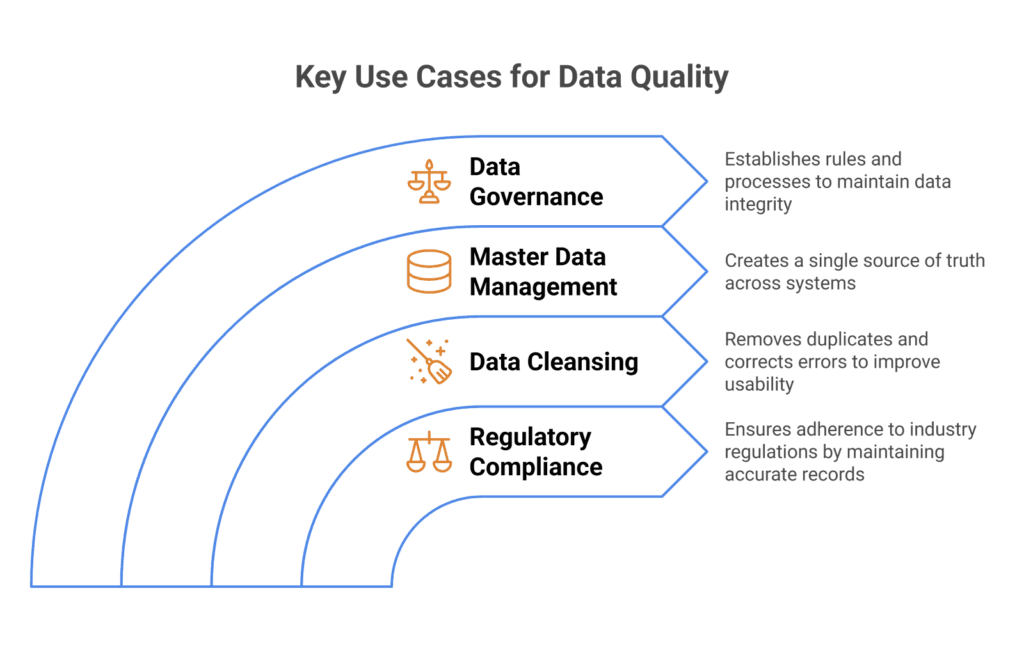

Key Use Cases for Data Quality

- Regulatory Compliance: Ensures adherence to industry regulations (e.g., finance, healthcare) by maintaining accurate and complete records.

- Data Cleansing: Removes duplicates, corrects errors, and standardizes formats to improve data usability.

- Master Data Management: Creates a single source of truth for customer, product, and transaction records across systems.

- Data Governance: Establishes rules, ownership, and validation processes to maintain data integrity.
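
The data cleansing use case above can be sketched in a few lines. This example assumes customer records with `name`, `email`, and `phone` fields and treats the normalized email as the deduplication key; both choices are illustrative assumptions, and real master data management relies on far more robust matching.

```python
def standardize(record):
    """Collapse whitespace and title-case the name, lower-case the email,
    and keep only the digits of the phone number."""
    return {
        "name": " ".join(record.get("name", "").split()).title(),
        "email": record.get("email", "").strip().lower(),
        "phone": "".join(ch for ch in record.get("phone", "") if ch.isdigit()),
    }

def deduplicate(records, key="email"):
    """Keep the first record seen for each key value."""
    seen = set()
    unique = []
    for rec in records:
        if rec[key] not in seen:
            seen.add(rec[key])
            unique.append(rec)
    return unique

# Hypothetical raw input: the first two rows describe the same person.
raw = [
    {"name": "  ada   lovelace", "email": "Ada@Example.com ", "phone": "(555) 010-0001"},
    {"name": "Ada Lovelace", "email": "ada@example.com", "phone": "555-010-0001"},
    {"name": "grace hopper", "email": "grace@example.com", "phone": "555 010 0002"},
]

cleaned = deduplicate([standardize(r) for r in raw])
for row in cleaned:
    print(row)
```

Standardizing before deduplicating matters: the two Ada Lovelace rows only match once casing and whitespace have been normalized.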

How Data Quality and Data Observability Work Together

Organizations need both data quality and data observability to build a strong, dependable data ecosystem. Data quality measures whether stored data is correct, while observability verifies that data pipelines keep working under all conditions and do not fail unexpectedly. Here’s how they complement each other:

1. Data Quality as the Foundation

Data quality provides the foundation for reliable analytics, reporting, and compliance. Businesses that rely on cleansed, structured data avoid basing their decisions on flawed inputs. Quality management processes (standardization, deduplication, and validation) ensure that imported data is accurate, complete, and appropriately formatted before it is used.

For example, in financial reporting, ensuring consistent account numbers, transaction details, and timestamps is essential for compliance with regulations like SOX or IFRS. Without high data quality, reports could contain discrepancies that lead to audits or financial penalties.

2. Data Observability as the Watchdog

Even when data is clean, issues can arise while it moves through pipelines. Data observability acts as a real-time monitoring system, continuously tracking data freshness, volume, and schema changes to detect problems before they cause damage.

For example, an e-commerce platform relies on real-time order tracking. If a data pipeline suddenly fails, order status updates may not reflect correctly, causing confusion for both customers and support teams. Observability catches these failures immediately, allowing teams to fix them before they escalate.

3. Proactive vs. Reactive Approach

- Data quality is reactive: it detects and resolves known problems such as missing values, duplicate records, and formatting discrepancies.

- Data observability is proactive: it continuously monitors systems and catches unexpected problems, such as a sudden dip in data volume, schema mismatches, or latency spikes, before they affect downstream business operations.

For instance, a machine learning model predicting customer churn may rely on clean customer data (ensured by data quality) but could fail if a data pipeline silently drops 30% of customer records. Observability catches the issue in real time, preventing flawed model outputs.
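
A silent drop like this is detectable by comparing each run’s row count with a recent baseline. The following is a minimal sketch, assuming row counts from previous runs are available; the median baseline and the 20% alert threshold are assumptions chosen for illustration.

```python
from statistics import median

def volume_drop(history, latest, threshold=0.2):
    """Compare the latest row count with the median of recent runs.

    Returns (drop_fraction, alert): drop_fraction is the share of rows missing
    relative to the baseline, and alert is True at or above the threshold.
    """
    baseline = median(history)
    if baseline <= 0:
        return 0.0, False
    drop = max(0.0, (baseline - latest) / baseline)
    return drop, drop >= threshold

# Hypothetical row counts from the last five pipeline runs.
recent_counts = [10_000, 10_200, 9_900, 10_100, 10_000]

drop, alert = volume_drop(recent_counts, latest=7_000)
print(f"drop={drop:.0%} alert={alert}")
```

Using the median rather than the mean keeps one earlier bad run from dragging the baseline down and hiding the next failure.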

4. End-to-End Data Coverage

Data quality ensures that historical and stored data remains correct, while observability guarantees that live data flows correctly between systems. Together, they provide end-to-end data trust:

- Quality checks stored data for accuracy before analysis.

- Observability monitors real-time processing and delivery, ensuring new data enters the system without corruption or delay.

For example, a company tracking sales data must ensure both that historical purchase records are correct (data quality) and that new transactions are processed in real time without failure (data observability).

5. Preventing Data Downtime

Data downtime refers to periods when data is missing, inaccurate, or unreliable, and it can lead to business disruption, revenue loss, and poor customer experiences. Observability reduces downtime by alerting teams as soon as problems occur, whereas data quality focuses on long-term correctness. Consider a cloud-based CRM system:

- If data quality isn’t enforced, sales reps may deal with duplicate leads or incomplete customer profiles.

- If observability isn’t in place, a sudden data pipeline failure could mean that new customer interactions aren’t logged, leading to missed follow-ups and lost revenue.

By combining both, businesses can prevent data failures before they become costly problems.

6. Better Decision-Making

Reliable data leads to better business decisions, more accurate AI models, and improved operational efficiency. When data is both high quality and continuously monitored, organizations can trust it for:

- Customer insights and personalization in marketing.

- Fraud detection and risk assessment in finance.

- Predictive maintenance and IoT analytics in manufacturing.

For instance, an airline using AI to predict aircraft maintenance needs must ensure that:

- Historical maintenance data is accurate and standardized (data quality).

- Real-time sensor data from aircraft engines is captured correctly without delays (data observability).

Data Observability vs Data Quality: Use Cases

1. Financial Services – Fraud Detection and Risk Management

- Data Quality: Ensures that customer transaction data is accurate, complete, and standardized to detect fraudulent patterns effectively.

- Data Observability: Monitors real-time transaction flows to detect sudden spikes or anomalies that could indicate fraud.

- Use Case: A bank monitoring credit card transactions uses data quality processes to remove duplicate or incorrect entries while observability tracks unexpected transaction surges to flag potential fraud in real-time.
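
One simple way to flag an unexpected transaction surge is a z-score against recent history. This is a sketch under stated assumptions, not how any particular bank or vendor does it: the per-minute counts are invented, and the threshold of three standard deviations is a common but arbitrary starting point.

```python
from statistics import mean, stdev

def is_spike(history, latest, z_threshold=3.0):
    """Flag `latest` when it sits more than z_threshold sample standard
    deviations above the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest > mu
    return (latest - mu) / sigma > z_threshold

# Hypothetical card transactions per minute for one merchant.
per_minute = [120, 118, 125, 130, 122, 119, 127, 124]

print("410/min:", is_spike(per_minute, 410))
print("131/min:", is_spike(per_minute, 131))
```

A surge alone does not prove fraud; a check like this only narrows attention to windows worth investigating, which is why clean, deduplicated history matters for the baseline.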

2. E-Commerce – Real-Time Order Processing and Inventory Management

- Data Quality: Cleans and standardizes product information, ensuring consistent SKUs, pricing, and descriptions across platforms.

- Data Observability: Monitors real-time order tracking, alerting teams if transaction data is missing or delayed.

- Use Case: An online retailer ensures that product catalog data remains accurate while using observability to track real-time order processing failures, preventing customer service disruptions.

3. Healthcare – Patient Data Integrity and Compliance

- Data Quality: Ensures that patient records are complete, structured, and meet regulatory compliance standards such as HIPAA.

- Data Observability: Monitors data pipelines to prevent delays in transferring lab results or medical history updates.

- Use Case: A hospital maintains accurate patient records through data quality processes while using observability to track real-time data transfers between healthcare providers, ensuring timely medical decisions.

4. AI and Machine Learning – Data Pipeline Reliability for Model Training

- Data Quality: Prepares clean, accurate datasets for training machine learning models.

- Data Observability: Monitors data drift, missing values, or anomalies that could affect model performance.

- Use Case: A predictive analytics system in retail ensures that historical sales data is accurate while using observability to monitor real-time data feeds that update demand forecasts.
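
Data drift can be quantified by comparing the distribution of a feature in the training data with its distribution in the live feed. The sketch below uses the Population Stability Index (PSI), a technique chosen here for illustration rather than one named in the article; the bin count, the floor for empty bins, and the sample values are all assumptions.

```python
import math

def psi(expected, actual, bins=5):
    """Population Stability Index between a reference and a live sample.

    Bins are equal-width over the range of `expected`; live values outside
    that range are counted in the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values: the live feed is shifted upward by 40 units.
training = list(range(100))
live = [v + 40 for v in training]

print("no drift:", psi(training, training))
print("shifted:", round(psi(training, live), 2))
```

A commonly quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but suitable thresholds depend on the feature and should be validated against the model in question.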

Top 5 Data Quality Tools

These tools help businesses clean, validate, and standardize data to ensure accuracy and reliability.

1. Talend Data Quality

A powerful tool that enables data profiling, cleansing, and standardization to maintain high data integrity. It helps organizations identify and fix errors before data is used for analytics.

- Provides data profiling, standardization, deduplication, and validation.

- Ensures regulatory compliance by enforcing governance policies.

- Integrates with various databases, cloud platforms, and enterprise applications.

2. Informatica Data Quality

An enterprise-grade solution that uses AI-driven automation to maintain high-quality data across multiple systems. It offers robust rule-based validation and real-time monitoring.

- Detects, cleans, and enriches data with AI-powered automation.

- Supports rule-based validation to maintain data accuracy.

- Best for industries with strict compliance standards, like healthcare and finance.

3. IBM InfoSphere QualityStage

A data quality solution designed for large-scale enterprises to maintain a single source of truth across different systems.

- Cleanses, standardizes, and links data across multiple sources.

- Helps organizations build a unified, accurate master data system.

- Strong focus on data governance and regulatory compliance.

4. Ataccama ONE

An all-in-one platform that combines data quality, governance, and metadata management for real-time data integrity.

- Provides automated data profiling and anomaly detection.

- Best for large enterprises needing real-time data monitoring.

5. Trifacta

A user-friendly tool, now part of Alteryx, that specializes in data wrangling and transformation for analytics and reporting.

- Helps clean and structure messy datasets for accurate insights.

- Works well with cloud data warehouses and big data environments.

- Ideal for data teams needing self-service data preparation.

Top 5 Data Observability Tools

1. Monte Carlo

A leading data observability platform that automates anomaly detection and minimizes data downtime.

- Monitors freshness, volume, schema changes, and data lineage.

- Detects issues before they impact analytics or AI models.

- Helps teams reduce pipeline failures and data downtime.

2. Databand (by IBM)

A data observability tool designed for tracking pipeline health and identifying missing or delayed data.

- Monitors data pipeline performance and missing data.

- Works with Apache Airflow, Spark, and cloud platforms.

- Best suited for data engineers handling large-scale ETL workflows.

3. Bigeye

An AI-powered tool that continuously monitors data quality and reliability in cloud-based environments.

- Uses AI-driven anomaly detection for schema drift, freshness, and volume issues.

- Provides real-time alerts on data inconsistencies.

- Integrates with Snowflake, Redshift, BigQuery, and other cloud warehouses.

4. Soda.io

A no-code data observability tool that allows businesses to monitor data health without deep technical expertise.

- Supports custom rule-based validation and automated alerts.

- Tracks data freshness, completeness, and schema integrity.

- Ideal for organizations that need a simple, easy-to-implement monitoring solution.

5. Great Expectations

An open-source framework that enables teams to define and enforce data validation rules across pipelines.

- Allows teams to set “expectations” for data quality and get alerts when rules are violated.

- Provides detailed reports and audits for tracking pipeline health.

- Best for organizations that need a customizable observability solution.

Choosing the Right Tool

- For organizations focused on regulatory compliance and structured data → Talend, Informatica, IBM InfoSphere.

- For businesses with complex data pipelines that require real-time monitoring → Monte Carlo, Databand, Bigeye.

- For teams looking for open-source and customizable solutions → Great Expectations, Soda.io.

Enhancing Business Efficiency with Kanerika’s Advanced Data Management Solutions

Partnering with Kanerika could revolutionize businesses through advanced data management solutions. Our expertise in data integration, advanced analytics, AI-based tools, and deep domain knowledge allows organizations to harness the full potential of their data for improved outcomes.

Our proactive data management solutions empower businesses to address data quality and integrity challenges and transition from reactive to proactive strategies. By optimizing data flows and resource allocation, companies can better anticipate needs, ensure smooth operations, and prevent inefficiencies.

Additionally, our AI tools enable real-time data analysis alongside advanced data management practices, providing actionable insights that drive informed decision-making. This goes beyond basic data integration by incorporating continuous monitoring systems, which enable early identification of trends and potential issues, improving outcomes at lower costs.

Frequently Asked Questions

What is the difference between data quality and data observability?

Data quality focuses on the intrinsic characteristics of data, ensuring it is accurate, complete, and reliable. Data observability, on the other hand, focuses on monitoring and understanding data pipelines and workflows in real time, allowing organizations to detect and respond to issues promptly.

What are the 5 pillars of data observability?

The five pillars of data observability are:

- Freshness.

- Quality.

- Volume.

- Schema.

- Lineage.

What is the difference between data quality and data monitoring?

Data quality focuses on the accuracy, completeness, and consistency of the data itself. Data monitoring, in the form of data observability, takes it a step further by providing real-time insights into data flows. This proactive approach enables organizations to detect anomalies, validate data, and perform root cause analysis promptly.

What are the 5 pillars of data quality?

The five pillars of data quality management are team composition, data profiling, data quality, data reporting, and data resolution and repair. These pillars form the foundation for maintaining and improving the quality of data within an organization.

What is KPI in observability?

In observability, key performance indicators (KPIs) are the metrics that teams track. With this data, teams can understand an application’s real-time condition or performance. The three most common metric types are system, application, and business metrics, each of which is valuable in different analysis contexts.

How to monitor data quality?

Traditional Data Quality Monitoring Approaches

- Manual Data Validation: Writing SQL queries or using spreadsheets to validate data.

- Rule-Based Checks: Implementing rules for data consistency, completeness, and accuracy.

- Regular Audits: Periodic data audits to identify and correct issues.
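
The manual SQL and rule-based approaches above can be combined by keeping each rule as a named query. The following is a minimal sketch using Python’s built-in `sqlite3` module with an in-memory database; the `orders` table, its columns, and the three rules are hypothetical.

```python
import sqlite3

# In-memory database with a small, deliberately flawed sample table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, customer_email TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, "a@example.com", 25.0),
        (2, None, 40.0),             # missing email
        (2, "b@example.com", 40.0),  # repeated order_id
        (3, "c@example.com", -5.0),  # negative amount
    ],
)

# Each rule is a query that counts violations; zero means the rule passes.
CHECKS = {
    "missing_email": "SELECT COUNT(*) FROM orders WHERE customer_email IS NULL",
    "duplicate_order_id": (
        "SELECT COUNT(*) FROM ("
        "SELECT order_id FROM orders GROUP BY order_id HAVING COUNT(*) > 1)"
    ),
    "negative_amount": "SELECT COUNT(*) FROM orders WHERE amount < 0",
}

def run_checks(connection, checks):
    """Run each query and return the number of violations per rule."""
    return {name: connection.execute(sql).fetchone()[0] for name, sql in checks.items()}

results = run_checks(conn, CHECKS)
for name, violations in results.items():
    status = "PASS" if violations == 0 else "FAIL"
    print(f"{name}: {violations} violation(s) [{status}]")
```

Running the same set of queries on a schedule and recording the results over time turns a one-off audit into a basic form of monitoring.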

What is a NoSQL database?

The term NoSQL, short for “not only SQL,” refers to non-relational databases that store data in a non-tabular format, rather than in the fixed-schema tables that relational databases use.

What are the 6 C's of data quality?

The six C’s describe data that is Clean, Complete, Comprehensive, Chosen, Credible, and Calculable.