“With data collection, ‘the sooner the better’ is always the best answer.” Marissa Mayer’s quote on data is as relevant today as it was a few years ago. In 2025, global data creation is projected to reach 181 zettabytes, a massive jump from 79 zettabytes in 2021. The debate around data lake vs. data warehouse has never been more critical.

Take Tesla, for example: the company processes vast amounts of real-time sensor data from its vehicles, which calls for a data lake to store unstructured data such as video feeds and telemetry. Its financial reports, sales metrics, and supply chain data, on the other hand, are structured and neatly stored in a data warehouse for business intelligence.

In short, data lakes offer flexible storage for raw data of every kind, while data warehouses provide structured, processed data optimized for quick decision-making. Understanding the strengths of each helps businesses harness the full potential of their data strategy.

Key Learnings

- A data warehouse is built for trusted analytics – It centralizes structured data from multiple business systems, providing a single source of truth for reporting and decision-making.

- Schema-on-write ensures consistency and performance – By structuring data before storage, data warehouses deliver faster queries and reliable, repeatable reports.

- Data warehouses are optimized for BI and reporting – They are best suited for dashboards, financial reporting, and operational analytics that require accuracy and governance.

- Strong governance is a core advantage – Built-in controls for data quality, security, and compliance make data warehouses ideal for regulated enterprise environments.

- Data warehouses remain critical in modern data architectures – Even with data lakes and lakehouses, warehouses continue to play a key role in enterprise analytics strategies.

What is a Data Lake?

A data lake is a centralized storage system that can house a vast amount of structured, semi-structured, and unstructured data. It’s designed to store data in its raw format, with no initial processing required.

This design allows various types of analytics, such as big data processing, real-time analytics, and machine learning, to run directly on the data within the lake. A typical Data Lake architecture includes layers such as the Unified Operations Tier, Processing Tier, Distillation Tier, and HDFS.

The primary goal of a Data Lake is to give data scientists and analysts a comprehensive view of an organization’s data, enabling them to derive insights and make informed decisions. It’s a flexible solution that supports the exploration and analysis of large datasets from multiple sources.

What is a Data Warehouse?

A data warehouse is a central repository designed to store, organize, and analyze large amounts of structured business information. It integrates data from various sources, such as ERP, CRM, finance, and operations systems, into one trusted place for reporting and analytics. As a result, organizations have consistent, reliable data to support their decision-making.

Unlike some other data platforms, a data warehouse uses schema-on-write. This means data is cleaned, transformed, and structured before it is stored. As a result, queries run faster and reports stay consistent. Data warehouses are designed primarily for structured data (tables, rows, and columns), which makes them well suited to business intelligence workloads.
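To make schema-on-write concrete, here is a minimal Python sketch. It assumes a hypothetical sales table whose columns and types (`order_id`, `region`, `amount`, `order_date`) are invented for illustration; a real warehouse enforces its schema in the database engine and load tooling rather than in a hand-written function like `conform`. What it shows is the ordering: records are cleaned and type-checked before storage, and anything that does not fit is rejected instead of stored.

```python
from datetime import date

# Hypothetical target schema: column name -> function that cleans/coerces the value.
SCHEMA = {
    "order_id": int,
    "region": lambda v: str(v).strip().upper(),
    "amount": float,
    "order_date": date.fromisoformat,
}


def conform(record: dict) -> dict:
    """Schema-on-write: clean and type-check a record *before* it is stored.

    Raises ValueError if the record cannot be made to fit the schema,
    so malformed data never reaches the table.
    """
    missing = SCHEMA.keys() - record.keys()
    if missing:
        raise ValueError(f"missing columns: {sorted(missing)}")
    try:
        return {col: convert(record[col]) for col, convert in SCHEMA.items()}
    except (TypeError, ValueError) as exc:
        raise ValueError(f"record does not fit schema: {exc}") from exc


table = []     # stand-in for the warehouse table
rejected = []  # rows that failed validation at write time

incoming = [
    {"order_id": "1001", "region": " emea", "amount": "250.00", "order_date": "2025-01-15"},
    {"order_id": "1002", "region": "APAC", "amount": "n/a", "order_date": "2025-01-16"},
    {"order_id": "1003", "region": "amer"},  # missing columns
]

for rec in incoming:
    try:
        table.append(conform(rec))
    except ValueError:
        rejected.append(rec)

print(len(table), len(rejected))  # 1 2
```

Because rejection happens at write time, every row that reaches the table is already consistent, which is what makes downstream queries and reports repeatable.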

The basic data warehouse architecture consists of data sources, ETL/ELT pipelines, a centralized storage tier, and analytics or BI tools on top. Together, these components deliver data accuracy, performance, and governance.

Enterprises often use data warehouses for reporting, dashboards, financial analysis, and operational insights. Altogether, a data warehouse offers a reliable, governed foundation for analytics that helps companies monitor their performance, meet compliance requirements, and support data-driven decision-making.

Data Lake vs Data Warehouse: Process and Strategy

Data Lakes are flexible and suited for raw, expansive data exploration, while Data Warehouses are structured and optimized for specific, routine business intelligence tasks.

Both play crucial roles in a comprehensive data strategy, often complementing each other within an organization’s data ecosystem.

Data Lake Process and Strategy

Data Lakes are ideal for data scientists and analysts who need to perform in-depth analysis, such as predictive modeling and exploring large datasets in their raw form.

- The process begins with ingesting raw data from various sources. These include transactional databases, social media, IoT devices, logs, and more.

- Data ingestion tools such as Apache Kafka, Apache NiFi or custom scripts are employed to receive and transfer data to the data lake.

- The raw data is stored in its native format in a distributed file system or object store, e.g., Hadoop Distributed File System (HDFS), Amazon S3, or Azure Data Lake Storage (ADLS). No schema is imposed up front, so a wide variety of data can be stored.

- Data analysts, data scientists, and other stakeholders then explore and analyze the data in the lake using a range of tools and frameworks, including SQL engines, machine learning libraries, and visualization tools.
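The ingestion and storage steps above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a production ingestion tool: a local temporary directory stands in for HDFS, Amazon S3, or ADLS, and the function name `land` and the `source=.../ingest_date=...` folder layout are hypothetical conventions. Note that events of different shapes are written unchanged; no schema is applied on the way in.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path

# A local temp directory stands in for an object store such as Amazon S3 or ADLS.
LAKE_ROOT = Path(tempfile.mkdtemp()) / "raw"


def land(source: str, event: dict) -> Path:
    """Append one event, unchanged, to the raw zone of the lake.

    No schema is enforced: the event is stored exactly as received,
    partitioned by source system and ingestion date (a common layout
    convention, assumed here for illustration).
    """
    now = datetime.now(timezone.utc)
    partition = LAKE_ROOT / f"source={source}" / f"ingest_date={now:%Y-%m-%d}"
    partition.mkdir(parents=True, exist_ok=True)
    path = partition / "events.jsonl"
    with path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(event) + "\n")
    return path


# Events with different shapes land side by side without any upfront modeling.
land("iot", {"device": "sensor-7", "temp_c": 21.4})
land("iot", {"device": "sensor-9", "temp_c": 19.8, "battery": 0.62})
land("clickstream", {"user": "u42", "page": "/pricing"})

for f in sorted(LAKE_ROOT.rglob("*.jsonl")):
    line_count = len(f.read_text(encoding="utf-8").splitlines())
    print(f.relative_to(LAKE_ROOT), line_count)
```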

Data Warehouse Process and Strategy

Data Warehouses are best suited for business professionals and decision-makers who need operational data in a structured system for analytics and easy querying.

- Data is extracted from multiple operational systems, such as CRM, ERP, and financial systems, using ETL (Extract, Transform, Load) processes. This involves identifying relevant data sources, extracting data using predefined queries or APIs, and moving it to the data warehouse.

- Extracted data undergoes transformation and cleansing processes to ensure consistency, integrity, and quality. Data is standardized, normalized, and aggregated to make it suitable for analysis and reporting.

- Transformed data is loaded into the data warehouse, typically using batch processing techniques. The data is organized into dimensional models, which optimize query performance and facilitate analytical processing.

- Business users, analysts, and decision-makers query the data warehouse using BI (Business Intelligence) tools, SQL queries, or reporting interfaces. They can generate reports, dashboards, and visualizations to gain better insights.
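The extract, transform, load, and query steps above can be sketched end to end with Python’s built-in `sqlite3` module standing in for the warehouse. The source records, table names (`dim_customer`, `fact_sales`), and columns are hypothetical; the example shows a simple dimensional (star) model with one dimension and one fact table, followed by the kind of aggregate query a BI tool would issue.

```python
import sqlite3

# Extract: hypothetical records pulled from two operational systems (CRM and ERP).
crm_customers = [
    {"id": "C1", "name": " Acme Corp ", "segment": "enterprise"},
    {"id": "C2", "name": "Globex", "segment": "SMB"},
]
erp_orders = [
    {"order_no": 1, "customer": "C1", "total": "1200.50", "date": "2025-03-01"},
    {"order_no": 2, "customer": "C2", "total": "300", "date": "2025-03-02"},
    {"order_no": 3, "customer": "C1", "total": "799.50", "date": "2025-03-05"},
]

conn = sqlite3.connect(":memory:")  # stand-in for the warehouse
conn.executescript("""
    CREATE TABLE dim_customer (
        customer_key INTEGER PRIMARY KEY,
        source_id    TEXT UNIQUE NOT NULL,
        name         TEXT NOT NULL,
        segment      TEXT NOT NULL
    );
    CREATE TABLE fact_sales (
        order_no     INTEGER PRIMARY KEY,
        customer_key INTEGER NOT NULL REFERENCES dim_customer(customer_key),
        order_date   TEXT NOT NULL,
        amount       REAL NOT NULL
    );
""")

# Transform + load the dimension: trim names, standardize segment labels.
conn.executemany(
    "INSERT INTO dim_customer (source_id, name, segment) VALUES (?, ?, ?)",
    [(c["id"], c["name"].strip(), c["segment"].lower()) for c in crm_customers],
)
keys = dict(conn.execute("SELECT source_id, customer_key FROM dim_customer"))

# Transform + load the facts: cast amounts and resolve surrogate keys.
conn.executemany(
    "INSERT INTO fact_sales VALUES (?, ?, ?, ?)",
    [(o["order_no"], keys[o["customer"]], o["date"], float(o["total"])) for o in erp_orders],
)
conn.commit()

# Query: a typical BI question, revenue by customer segment.
report = conn.execute("""
    SELECT d.segment, ROUND(SUM(f.amount), 2) AS revenue
    FROM fact_sales f JOIN dim_customer d USING (customer_key)
    GROUP BY d.segment
    ORDER BY revenue DESC
""").fetchall()
print(report)  # [('enterprise', 2000.0), ('smb', 300.0)]
```

The surrogate key lookup (`keys`) is the step that links facts to dimensions; in practice this is often handled by the ETL tool or by SQL joins during the load.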

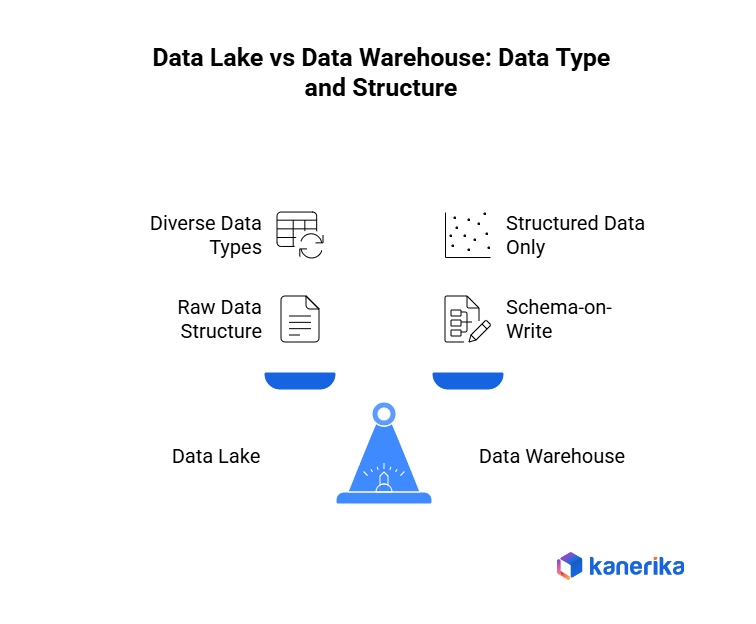

Data Lake vs Data Warehouse: Data Type and Structure Used

Data lakes are well suited to managing diverse and unstructured data for exploration and advanced analytics. Data warehouses, by contrast, are best suited to storing and analyzing structured information to support operational reporting and business intelligence.

Data Lake Data Type and Structure Used

Data Type: Data Lakes are designed to support a wide range of data types, including structured, semi-structured, and unstructured data. This means they can store traditional database records, JSON files, and multimedia files alike.

Data Structure: Within a Data Lake, data is often stored in its raw form without a predefined schema. It uses a flat structure in which every piece of data is given a unique identifier and tagged with a set of extended metadata. When a business question arises, the relevant data can be transformed and shaped to suit the analysis.
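The flat, tag-based layout, and the idea of shaping data only when a question arises, can be illustrated with a toy in-memory store. This Python sketch is hypothetical: `put`, `find`, and `read_reviews` are invented names, and the dictionary merely mimics how object stores assign identifiers and metadata. The structure (field names, types, defaults) is applied only inside `read_reviews`, at read time, which is the schema-on-read approach.

```python
import json
import uuid

# Toy flat store: object ID -> (raw bytes, metadata tags). No folders, no tables.
# This only mimics the idea; it is not the API of any specific product.
store = {}


def put(payload: bytes, **tags: str) -> str:
    """Store raw bytes as-is under a new unique ID, with metadata tags."""
    object_id = str(uuid.uuid4())
    store[object_id] = (payload, tags)
    return object_id


def find(**criteria: str) -> list:
    """Return IDs of objects whose tags match every key/value in criteria."""
    return [oid for oid, (_, tags) in store.items()
            if all(tags.get(k) == v for k, v in criteria.items())]


# Raw data goes in unchanged; records need not share a shape.
put(b'{"user": "u1", "rating": 5, "text": "Great"}', source="reviews", fmt="json")
put(b'{"user": "u2", "rating": "4"}', source="reviews", fmt="json")
put(b"\x89PNG...", source="product_images", fmt="png")


def read_reviews() -> list:
    """Schema-on-read: structure is imposed only when a question is asked."""
    rows = []
    for oid in find(source="reviews", fmt="json"):
        raw = json.loads(store[oid][0])
        rows.append({
            "user": raw["user"],
            "rating": int(raw["rating"]),  # coerce types at read time
            "text": raw.get("text", ""),   # tolerate missing fields
        })
    return rows


reviews = read_reviews()
print(sum(r["rating"] for r in reviews) / len(reviews))  # 4.5
```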

Data Warehouse Data Type and Structure Used

Data Type: Data Warehouses mainly contain well-structured, processed, and formatted data, usually sourced from transactional systems and other relational databases.

Data Structure: Data in a Data Warehouse is highly structured and organized on a schema-on-write basis. This means the schema (data model) is defined before data is loaded into the warehouse. Data is organized into tables and columns and is usually aggregated, summarized, and indexed to support efficient querying and reporting.
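Here is a short sketch of what “aggregated, summarized, and indexed” can look like in practice, again using Python’s `sqlite3` as a stand-in for a warehouse engine. The table names, columns, and values are hypothetical; the pattern shown is a predefined detail table, a pre-aggregated monthly summary built from it, and an index on the columns a report filters by.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a warehouse engine

# Detail table whose schema is defined before any data arrives.
conn.execute("""
    CREATE TABLE sales (
        sale_date TEXT NOT NULL,   -- ISO 8601 date
        region    TEXT NOT NULL,
        product   TEXT NOT NULL,
        amount    REAL NOT NULL
    )
""")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?, ?)", [
    ("2025-01-03", "EMEA", "A", 100.0),
    ("2025-01-17", "EMEA", "B", 50.0),
    ("2025-01-09", "APAC", "A", 80.0),
    ("2025-02-02", "EMEA", "A", 120.0),
])

# Summarize: a pre-aggregated monthly revenue table for reporting.
conn.execute("""
    CREATE TABLE sales_monthly AS
    SELECT substr(sale_date, 1, 7) AS month, region, SUM(amount) AS revenue
    FROM sales
    GROUP BY month, region
""")

# Index the columns that dashboards typically filter and sort on.
conn.execute("CREATE INDEX idx_monthly_region ON sales_monthly (region, month)")

rows = conn.execute(
    "SELECT month, revenue FROM sales_monthly WHERE region = ? ORDER BY month",
    ("EMEA",),
).fetchall()
print(rows)  # [('2025-01', 150.0), ('2025-02', 120.0)]
```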

Data Lake vs Data Warehouse: Cost, Security and Accessibility

Although data lakes and data warehouses are both useful for managing data, they address different requirements, which has implications for cost, security, and accessibility. The main contrasts are the following:

Cost

- Data Lake: Storing data in a data lake is normally cheaper than in a data warehouse, particularly when large amounts of raw and unstructured data must be kept. Data is stored in its raw format, which avoids the expensive upfront steps of pre-processing and schema definition. Data lakes can also hold large volumes of unstructured data at a lower cost than a data warehouse.

- Data Warehouse: Typically involves higher upfront costs for infrastructure setup, licensing, and maintenance, particularly for on-premises deployments. Storing data in an organized, structured form often requires more processing power, which can increase compute costs. Although data warehouses are generally more expensive than data lakes for storage, they can still be more cost-effective for structured data that is queried and reported on regularly.

Security

- Data Lake: Because data lakes hold large volumes of varied, often sensitive data, organizations need robust safeguards against misuse and unauthorized access. Data lakes typically provide granular access controls and security mechanisms, such as encryption, to protect data at rest and in transit.

- Data Warehouse: Generally offers stronger security. Access controls and user permissions are easier to implement because the schema is structured and predefined, and security is usually designed in from the start. Built-in features such as role-based access control (RBAC) and row-level security (RLS) provide fine-grained control over who can see which data.
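To illustrate how RBAC and RLS combine, here is a minimal Python sketch. It is an application-level model of the concepts only: warehouse platforms implement these controls inside the engine (for example through grants and row-level policies), and the roles, tables, and `query` function here are hypothetical. RBAC decides whether a role may read a table at all; RLS then filters which rows that user sees.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical grants: role -> tables that role may read (RBAC).
ROLE_GRANTS = {
    "finance_analyst": {"revenue"},
    "regional_manager": {"revenue"},
    "hr_partner": {"headcount"},
}

# Hypothetical warehouse tables, as lists of rows.
TABLES = {
    "revenue": [
        {"region": "EMEA", "amount": 1200},
        {"region": "APAC", "amount": 800},
    ],
    "headcount": [{"region": "EMEA", "employees": 40}],
}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    region: Optional[str] = None  # None means no row-level restriction


def query(user: User, table: str) -> list:
    # RBAC: may this role read the table at all?
    if table not in ROLE_GRANTS.get(user.role, set()):
        raise PermissionError(f"role {user.role!r} may not read {table!r}")
    rows = TABLES[table]
    # RLS: users scoped to a region only see that region's rows.
    if user.region is not None:
        rows = [r for r in rows if r["region"] == user.region]
    return rows


cfo = User("dana", "finance_analyst")
emea_mgr = User("li", "regional_manager", region="EMEA")
print(len(query(cfo, "revenue")))  # 2
print(query(emea_mgr, "revenue"))  # [{'region': 'EMEA', 'amount': 1200}]
```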

Accessibility

- Data Lake: Well suited to users who are comfortable working with raw data, such as data scientists and analysts, who can explore the information without being constrained by predefined formats. However, using and interpreting raw data typically requires more technical skill.

- Data Warehouse: Designed to be easy to use for business users and analysts who may not have deep technical expertise. Its structured format and predefined queries make it fast to retrieve specific data points for reporting and analysis. However, this upfront organization can make it harder to discover unexpected relationships in the data.

Use Cases: Data Lake vs Data Warehouse

Data Lakes offer a more flexible setup suited to storing and analyzing large amounts of varied data in its original form, which is useful for machine learning and real-time analytics. Data Warehouses, by contrast, are more structured and focused on standard, recurring, and complex queries, as well as regular operational reporting.

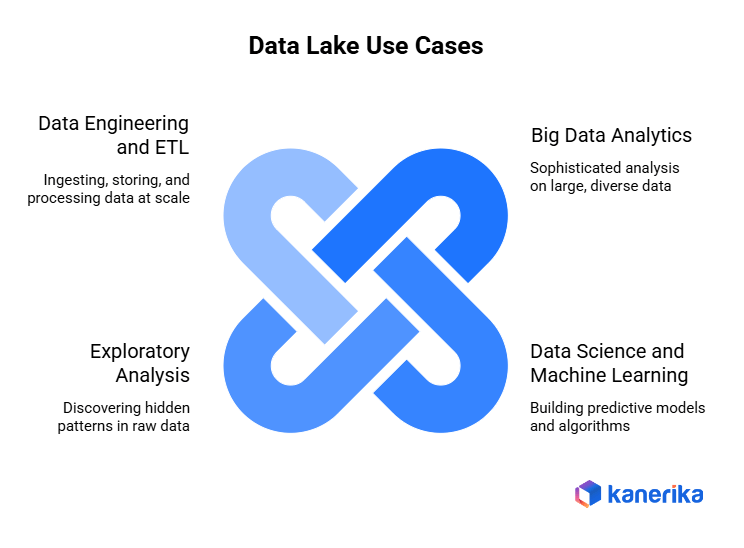

Data Lake Use Cases

- Big Data Analytics: Data lakes support sophisticated analytics on very large volumes of varied and unstructured data. Examples include customer behavior analysis, sentiment analysis of social media data, and pattern detection in IoT sensor data.

- Data Science and Machine Learning: Data lakes provide a rich source of raw data for data scientists and machine learning engineers to build predictive models, perform clustering analysis, and conduct feature engineering tasks. Use cases include predictive maintenance, fraud detection, and recommendation systems.

- Exploratory Analysis: Data lakes let data analysts and researchers explore raw data without predefined schemas or models. Use cases include exploratory data analysis, data visualization, and hypothesis testing, where investigators look for hidden patterns and correlations in the data.

- Data Engineering and ETL: Lakes serve as a foundational component for data engineering workflows, allowing organizations to ingest, store, and process data at scale. Use cases include data ingestion from various sources, data transformation pipelines, and real-time stream processing.

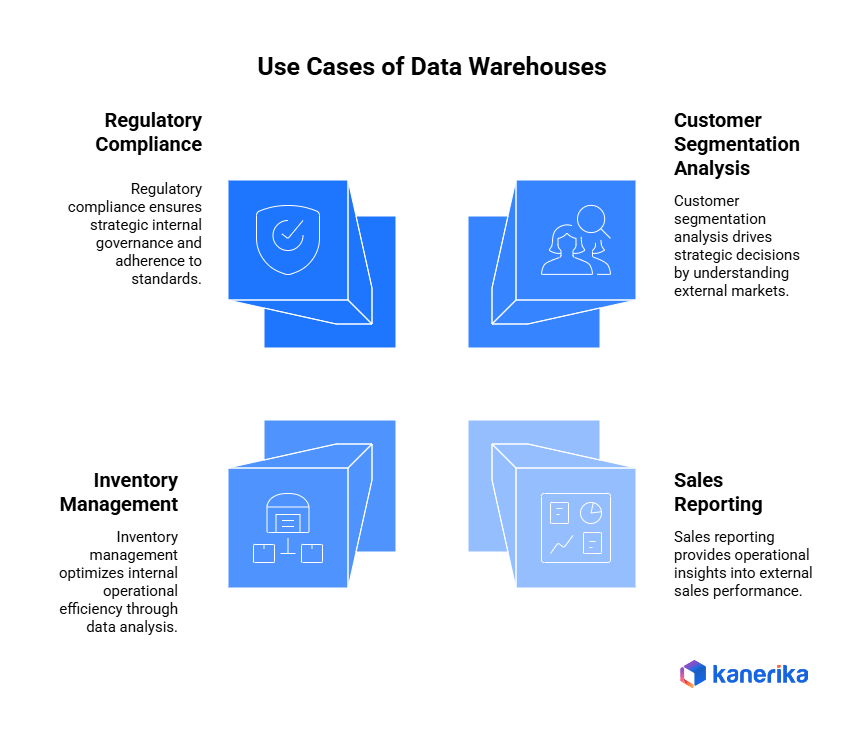

Use Cases of Data Warehouses

- Business Intelligence (BI) and Reporting: Warehouses are well suited to producing standardized reports, dashboards, and KPIs for business users and executives. Examples include sales reporting, financial analysis, and operational performance monitoring.

- Operational Analytics: Data warehouses support ad hoc and interactive querying of historical data to identify trends in operational metrics. Examples include inventory management, supply chain optimization, and workforce planning.

- Regulatory Compliance and Governance: Data warehouses provide centralized control and auditing capabilities that help organizations adhere to regulatory requirements, data governance policies, and industry standards. Use cases include GDPR and HIPAA compliance and internal auditing.

- Data-driven Decision Making: Data warehouses enable stakeholders to make informed decisions based on accurate, consistent data. Use cases include customer and market segmentation analysis and churn prediction, which help shape business strategy and speed up decision-making.

Data Lake vs Data Warehouse: Comparison Summary

This table provides a concise comparison of key aspects of Data Lakes and Data Warehouses. It helps to understand their differences and suitability for various applications.

| Aspect | Data Lake | Data Warehouse |
| --- | --- | --- |
| Definition | A storage repository for raw data in its native format. | A system for reporting and analysis, storing processed data. |
| Data Types | Structured, semi-structured, unstructured. | Primarily structured data. |
| Data Structure | No predefined schema (schema-on-read). | Predefined schema (schema-on-write). |
| Cost | More cost-effective for large volumes of diverse data. | Higher due to sophisticated hardware/software requirements. |
| Security | Can be challenging due to the variety/volume of data. | Generally easier due to structured nature. |
| Accessibility | Flexible, ideal for data scientists/analysts. | User-friendly for business users with standard reporting tools. |
| Primary Use Cases | Big data analytics, machine learning, data discovery. | Structured business reporting, business intelligence. |
| Data Processing | Suitable for real-time data processing and analytics. | Optimized for batch processing and complex queries. |
| Storage Approach | Cost-effective, scalable for large data volumes. | Structured, requires data transformation and cleaning. |
| User Skill Level | Requires more advanced analytical skills. | More accessible for non-technical users. |
| Maintenance | Requires robust management to avoid becoming a data swamp. | Easier to maintain due to structured nature. |

Data Lake vs Data Warehouse: Which One is Right for You?

Choosing between a Data Lake and a Data Warehouse depends heavily on your business needs and intended applications.

Here’s a breakdown to assist you:

Data Lake: Excels at handling vast quantities of diverse data types, including raw and unstructured data. Its scalability and support for advanced analytics and machine learning make it an attractive option. Considerations:

- Opt for a Data Lake if your business demands flexibility in data processing and exploration.

- It’s suitable for scenarios where storing data in its native format is essential for potential future use cases.

- Choose a Data Lake for analytics requiring intricate, non-standardized data processing.

Data Warehouse: Specializes in managing structured, processed data, supporting routine and complex queries with stable, fast data retrieval and analysis. Considerations:

- If your business heavily relies on consistent, structured data for reporting and business intelligence, a Data Warehouse is ideal.

- Data Warehouses offer quick, dependable data access for operational insights and decision-making processes.

- Opt for a Data Warehouse if your data strategy prioritizes structured data analysis and historical reporting.

Case Study 1: Transforming Enterprise Data with Automated Migration from Informatica to Talend

Client Challenge

The client used a large Informatica setup that became costly and slow to manage. Licensing fees kept rising. Workflows were complex, and updates needed heavy manual work. Modernization stalled because migrations took too long.

Kanerika’s Solution

Kanerika used FLIP to automate the conversion of Informatica mappings and logic into Talend. FIRE extracted repository metadata so the team could generate Talend jobs with minimal manual rework. Outputs were validated through controlled test runs and prepared for a cloud-ready environment.

Impact Delivered

• 70% reduction in manual migration effort

• 60% faster time to delivery

• 45% lower migration cost

• Better stability through accurate logic preservation and smooth cutover

Case Study 2: Migrating Data Pipelines from SSIS to Microsoft Fabric

Client Challenge

The client’s SSIS-based pipelines were slow, expensive, and hard to maintain. Reports refreshed slowly, and the platform needed too much manual intervention.

Kanerika’s Solution

Kanerika rebuilt the client’s pipelines using PySpark and Power Query inside Microsoft Fabric. SSIS logic was mapped to the right Fabric components and transformed into Dataflows and PySpark notebooks. A unified Lakehouse structure improved performance and simplified monitoring.

Impact Delivered

• 30% faster data processing

• 40% reduction in operational cost

• 25% less manual maintenance

• Improved scalability through a modern Fabric-based architecture

Kanerika: Empowering Seamless Data Warehouse to Data Lake Migration

At Kanerika, we help enterprises modernize their data landscape by choosing the right setup for their operational needs, data complexity, and long-term analytics goals. Traditional data warehouses are effective for managing structured, historical data used in reporting and business intelligence, but they often fall short in today’s dynamic, real-time environments. This is where data lakes and data fabric setups come into play, offering the flexibility to efficiently handle diverse, unstructured, and streaming data sources.

As a Microsoft Solutions Partner for Data & AI and an early user of Microsoft Fabric, Kanerika delivers unified, future-ready data platforms. Furthermore, we focus on designing intelligent setups that combine the strengths of data warehouses and data lakes. For clients focused on structured analytics and reporting, we establish robust warehouse models. For those managing distributed, real-time, or unstructured data, we create scalable data lake and fabric layers that ensure easy access, automated governance, and AI readiness.

All our implementations comply with global standards, including ISO 27001, ISO 27701, SOC 2, and GDPR, ensuring security and compliance throughout the migration process. Moreover, with our deep expertise in both traditional and modern systems, Kanerika helps organizations transition from fragmented data silos to unified, intelligent platforms, unlocking real-time insights and accelerating digital transformation without compromise.

FAQs

What is the difference between a data lake and a data factory?

A data lake is a massive, raw data repository; think of it as a vast reservoir holding all sorts of information. A data factory, on the other hand, is the processing plant: it structures and prepares data from the lake (or other sources) for analysis and use. Essentially, the lake *stores* and the factory *processes* data. They are complementary, not competing, technologies.

Do you need a data warehouse if you have a data lake?

Not necessarily. A data lake stores raw data; a data warehouse organizes it for analysis. If your needs are purely exploratory or you’re comfortable querying raw data, a data warehouse might be redundant. However, for faster, more efficient reporting and business intelligence, a data warehouse offers significant advantages even with a data lake.

What is the difference between a data lake and a data warehouse in GCP?

On GCP, a data lake (typically built on Cloud Storage) stores raw, unstructured data in its native format, emphasizing volume and variety. A data warehouse (such as BigQuery) focuses on structured, curated data optimized for analytical querying and reporting, prioritizing speed and efficiency. Think of a data lake as a raw material repository, while a data warehouse is a refined, finished product ready for analysis. They often work together: the lake provides the source for the warehouse.

Is Snowflake a data lake or data warehouse?

Snowflake isn’t strictly one or the other; it’s a cloud-based data platform that blends the best of both. It offers the scalability and flexibility of a data lake for storing diverse data types, yet provides the structured query and analytical capabilities of a data warehouse for efficient querying and reporting. Think of it as a unified solution, bridging the gap between the two traditional approaches.

What is the main difference between data warehouse and data lake?

A data warehouse is a structured, curated collection of data ready for analysis, like a neatly organized library. A data lake, in contrast, is a repository of raw, unprocessed data kept in its native format, like a natural lake fed by many streams. The key difference lies in the level of processing and structure applied before the data is used.

What is ETL in a data warehouse?

ETL stands for Extract, Transform, Load – it’s the vital process that gets data ready for your data warehouse. Think of it as a data plumber: it extracts raw data from various sources, cleans and shapes it (transforms), and then loads it neatly into the warehouse for analysis. Essentially, ETL makes messy data usable for insightful reporting.

What is the difference between data lake and data lakehouse?

A data lake is a raw, loosely organized repository of all your data in various formats. A data lakehouse adds warehouse-style structure and management on top of the lake, using technologies such as open table formats and ACID transactions for more reliable querying and analysis. Think of it as upgrading a messy storage room into a well-organized, easily accessible archive. The key difference is the level of governance and management applied.

What is the difference between Azure Data Factory, Azure Data Lake, and Databricks?

Azure Data Factory orchestrates data movement and transformation, acting like a workflow manager. Azure Data Lake Storage is your raw data repository – think of it as a massive, scalable hard drive. Databricks provides a managed Apache Spark environment for processing and analyzing that data, enabling powerful analytics on the data in the lake. They work together: ADF moves data *into* the Data Lake, then Databricks analyzes it.

What is the difference between ETL and ELT?

ETL (Extract, Transform, Load) cleans and transforms data *before* loading it into the target system, like pre-cooking a meal. ELT (Extract, Load, Transform) loads the raw data first, then transforms it inside the target system, like cooking the meal after it’s already in the serving dish. The choice affects speed and storage needs, and the best option depends on your data volume and transformation complexity.
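The difference is mostly about *where* the transform step runs. The Python sketch below uses `sqlite3` as a stand-in target system and hypothetical order data, and runs the same cleanup both ways: ETL cleans the rows in application code before loading, while ELT loads the raw text first and transforms it with SQL inside the target. Both produce the same result.

```python
import sqlite3

# Hypothetical raw extract: everything arrives as text, with messy region labels.
raw_orders = [("1", " emea ", "250.0"), ("2", "APAC", "80"), ("3", "emea", "120.5")]


def etl(conn: sqlite3.Connection) -> None:
    """ETL: transform in application code, then load only the cleaned rows."""
    conn.execute("CREATE TABLE orders (id INTEGER, region TEXT, amount REAL)")
    cleaned = [(int(i), r.strip().upper(), float(a)) for i, r, a in raw_orders]
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", cleaned)


def elt(conn: sqlite3.Connection) -> None:
    """ELT: load the raw text as-is, then transform with SQL inside the target."""
    conn.execute("CREATE TABLE raw_orders (id TEXT, region TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_orders)
    conn.execute("""
        CREATE TABLE orders AS
        SELECT CAST(id AS INTEGER)    AS id,
               UPPER(TRIM(region))    AS region,
               CAST(amount AS REAL)   AS amount
        FROM raw_orders
    """)


QUERY = "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
results = {}
for name, pipeline in (("etl", etl), ("elt", elt)):
    conn = sqlite3.connect(":memory:")
    pipeline(conn)
    results[name] = conn.execute(QUERY).fetchall()

print(results["etl"] == results["elt"], results["etl"])
# True [('APAC', 80.0), ('EMEA', 370.5)]
```

The ELT version leaves the untouched `raw_orders` table in the target, so data can be re-transformed later without re-extracting it, at the cost of extra storage.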

What is the difference between data lake and data lab?

A data lake is a vast, raw storage repository for all types of data, regardless of structure; think of it as a giant storeroom of raw materials. A data lab, conversely, is a curated, structured environment for data analysis and experimentation: a refined workshop built *on top of* the data lake (or other data sources). Essentially, the lake holds the materials, and the lab is where you build with them.