In January 2025, OpenAI, Meta, and Google faced the U.S. Congress over AI-driven election misinformation. Days later, the EU began enforcing its new AI Act, which carries fines of up to €35 million or 7% of global turnover for the most serious violations. These events show how fast AI regulation is becoming a global priority.

According to PwC, 73% of executives now cite regulatory risk as their top concern in adopting AI, yet most enterprises still lack clear governance frameworks. From biased algorithms to data misuse, the stakes include not just compliance fines but also lawsuits and lasting reputational damage.

In this guide, we’ll break down the most important AI regulations shaping 2025, the common compliance pitfalls, and practical steps enterprises can take to stay ahead—turning regulation into a source of trust and competitive advantage.

Why AI Regulation Matters to Modern Businesses

Artificial intelligence has rapidly evolved from a novel research experiment to a mission-critical enterprise tool. According to the McKinsey Global AI Survey (2024), nearly 78% of businesses report AI adoption in at least one business function, ranging from customer support chatbots to complex risk assessment models in the banking sector. While AI adoption once carried the mindset of “move fast and break things,” regulators and boards now emphasize “move fast but stay compliant.”

This shift reflects the recognition that AI, if left unregulated, can generate significant risks for businesses—legal, financial, and reputational. Governance frameworks now serve as enablers of innovation, ensuring that AI adoption remains sustainable and resilient in the long run.

Real-World Business Risks Without Compliance

Ignoring AI regulation can expose businesses to multiple layers of risk:

- Algorithmic Bias → Biased hiring algorithms have already led to class-action lawsuits in the U.S. In 2023, the U.S. Equal Employment Opportunity Commission (EEOC) received a surge of complaints related to automated recruitment systems showing discriminatory outcomes.

- Deepfakes & Intellectual Property Theft → High-profile cases of AI-generated content misusing celebrity likenesses underscore the potential for unchecked generative AI to cause reputational harm and lead to lawsuits.

- Data Privacy Violations → In 2023, Meta was fined €1.2 billion under the GDPR for data transfer violations. AI systems that mishandle or inadequately anonymize personal data can expose businesses to similar penalties.

- Opaque AI Decisions → Black-box models undermine trust. A Deloitte survey found that 62% of executives cite “lack of explainability” as the biggest obstacle to wider AI adoption in regulated industries.

Key Drivers Behind AI Regulation

The growing wave of AI regulation is driven by several key factors that shape how governments and businesses approach the adoption of responsible AI.

- Consumer Protection – AI now influences high-stakes decisions such as loan approvals, medical diagnoses, and hiring. Regulators demand transparency and fairness in these outcomes.

- Trust Building – Large-scale AI adoption depends on public trust. Frameworks are being designed to address concerns about bias, privacy, and fairness, aiming to avoid consumer backlash.

- Accountability – Authorities are closing gaps where companies previously excused opaque decisions by claiming algorithms were “too complex” to explain.

- Global Coordination – With 70+ countries drafting AI laws, alignment is essential to prevent regulatory arbitrage, where firms exploit weaker legal environments.

Key AI Regulation Laws Enterprises Must Track

1. EU AI Act: Global Benchmark

The EU AI Act, adopted in 2024 with obligations phasing in from 2025, is widely regarded as the global “gold standard” for AI regulation. It introduces a risk-based classification model:

- Minimal-risk AI: Allowed with no restrictions (e.g., spam filters).

- Limited-risk AI: Requires transparency disclosures (e.g., chatbots must identify themselves as AI).

- High-risk AI: Requires conformity assessments, ongoing monitoring, and data governance (e.g., AI in hiring, education, and credit scoring).

- Unacceptable-risk AI: Outright banned (e.g., social scoring, manipulative biometric systems).
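
As a rough illustration, the four tiers above can be encoded as a lookup that an internal compliance tool might consult. This is a minimal sketch, not legal text: the obligation strings paraphrase the list above, and the `USE_CASE_TIERS` mapping and `obligations_for` helper are hypothetical names introduced only for this example.

```python
from enum import Enum


class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Obligations per tier, paraphrased from the list above (illustrative only).
OBLIGATIONS = {
    RiskTier.MINIMAL: [],
    RiskTier.LIMITED: ["transparency disclosure"],
    RiskTier.HIGH: ["conformity assessment", "ongoing monitoring", "data governance"],
    RiskTier.UNACCEPTABLE: None,  # None marks a banned use case
}

# Hypothetical mapping of use cases to tiers, based on the examples above.
USE_CASE_TIERS = {
    "spam_filter": RiskTier.MINIMAL,
    "chatbot": RiskTier.LIMITED,
    "hiring": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "social_scoring": RiskTier.UNACCEPTABLE,
}


def obligations_for(use_case: str) -> list:
    """Return the obligations for a known use case, or raise if it is banned."""
    tier = USE_CASE_TIERS[use_case]
    duties = OBLIGATIONS[tier]
    if duties is None:
        raise ValueError(f"{use_case} is in the unacceptable-risk tier and is banned")
    return duties
```

In practice the tier for a given system would come from legal review rather than a hard-coded dictionary; the point of the sketch is that each tier maps to a distinct set of duties.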

Penalties are severe: up to €35 million or 7% of the company’s global annual turnover, whichever is higher, for the most serious violations. General-purpose AI (GPAI) providers, such as OpenAI, Anthropic, or Google DeepMind, face additional transparency obligations regarding training data, model usage, and safety protocols.
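
The "whichever is higher" rule is simple arithmetic, and a short sketch makes the exposure concrete. The function name `max_fine_eur` and its parameters are assumptions made for this example; the €35 million and 7% figures are the ones quoted above.

```python
def max_fine_eur(global_annual_turnover_eur: float,
                 fixed_cap_eur: float = 35_000_000,
                 turnover_percent: int = 7) -> float:
    """Upper bound of the fine: the higher of the fixed cap and the turnover share."""
    turnover_based = global_annual_turnover_eur * turnover_percent / 100
    return max(fixed_cap_eur, turnover_based)


# Example: compare a mid-sized firm with a large multinational.
small = max_fine_eur(100_000_000)      # 7% is EUR 7 million, so the fixed cap applies
large = max_fine_eur(2_000_000_000)    # 7% is EUR 140 million, so the turnover share applies
```

Under these figures the turnover-based amount overtakes the fixed cap once global annual turnover exceeds €500 million, which is why large enterprises tend to think about the percentage rather than the fixed amount.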

2. U.S. State-Led Patchwork

Unlike the EU, the U.S. has no single federal AI law. Instead, states are leading the charge:

- In 2024, 40 states introduced AI-related bills; six states—including California, Colorado, and Connecticut—enacted legislation.

- The California Privacy Protection Agency (CPPA) gained expanded enforcement powers over AI use in consumer data processing.

- Several states are introducing AI watermarking requirements for generative AI systems to combat misinformation.

- Federal debate continues on whether a national framework should preempt state laws. Until then, businesses must navigate a fragmented compliance map similar to how they manage CCPA vs. GDPR.

3. Asia-Pacific Approaches

- China: Requires mandatory algorithm registration with the Cyberspace Administration of China and content labeling for AI-generated media.

- Singapore: Promotes the Model AI Governance Framework, a voluntary yet influential guideline emphasizing transparency and fairness.

- Japan: Focuses on AI in manufacturing and robotics, with a sector-specific regulatory approach.

- South Korea: Drafting AI laws focusing on data protection and algorithm fairness in consumer applications.

- Australia: Running consultations on AI safety and ethics, likely leading to legislation by 2026.

4. UK’s Principles-Based Framework

The UK diverges from the EU’s prescriptive model by adopting a principles-based approach. Its 2025 plan includes:

- Making voluntary AI safety agreements legally binding.

- Establishing the AI Safety Institute with independent authority to audit AI models.

- Delegating oversight to sector-specific regulators (e.g., FCA for finance, MHRA for healthcare).

This flexible framework allows innovation while holding businesses accountable.

5. Global Momentum

- According to the Stanford AI Index Report (2025), over 70 countries are actively drafting or enforcing AI regulations.

- Organizations such as the OECD, UNESCO, and G7 are pushing for global alignment.

- Standards bodies like ISO and IEEE are formalizing AI governance benchmarks. For instance, ISO/IEC 42001 (2023) became the first global standard for AI Management Systems.

AI Security Framework: How Enterprises Can Protect Their AI Systems

Learn how to secure AI systems, manage risks, and implement a robust AI security framework.

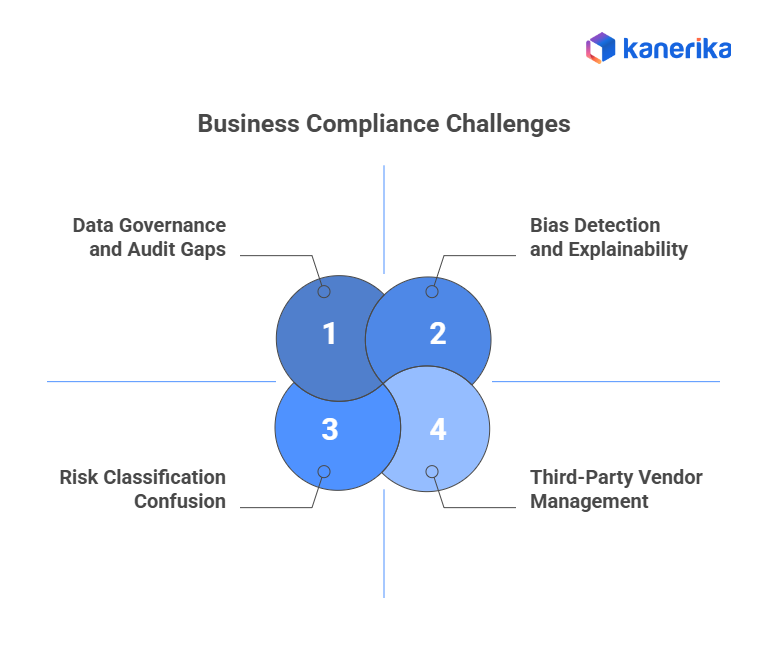

Core Compliance Challenges Businesses Face

1. Risk Classification Confusion

The same AI system may be classified differently depending on jurisdiction. For example, an AI-powered recruitment tool might be “high-risk” in the EU (due to employment impact) but “low-risk” in the U.S. The challenge grows as AI regulation changes, requiring frequent updates and creating uncertainty for global businesses.

2. Data Governance and Audit Gaps

AI models depend heavily on large datasets. Regulators increasingly demand evidence of data provenance, quality, and retention policies. However, many businesses lack systems that track data through its entire lifecycle. Ensuring compliance when third-party datasets or vendors are involved adds another layer of complexity.

3. Bias Detection and Explainability

AI explainability remains a key challenge:

- Deep learning models often lack interpretable decision pathways.

- Businesses must balance accuracy with explainability, particularly in regulated sectors.

- Without consistent industry-wide bias testing methods, compliance audits can become subjective.

4. Third-Party Vendor Management

Most enterprises rely on third-party AI vendors. Yet, these vendors may not meet compliance requirements, leaving the enterprise liable. Contracts increasingly need compliance clauses, shared risk agreements, and audit rights. Vendor risk management becomes a critical component of AI governance.

Tools and Frameworks Supporting Compliance

1. International Standards for AI Governance

- ISO/IEC 42001 (2023): Provides a structured framework for creating and maintaining AI Management Systems (AIMS). Focuses on ethics, fairness, and transparency.

- NIST AI Risk Management Framework (2023): Widely adopted in the U.S., it helps organizations identify, assess, and mitigate AI risks.

- Alignment between ISO, NIST, and the EU AI Act provides enterprises with a roadmap for harmonized compliance.

2. Commercial Compliance Platforms

- IBM watsonx.governance: Offers monitoring of model risks, drift, and explainability.

- Credo AI: Provides compliance dashboards with continuous regulatory updates.

- Microsoft Responsible AI Toolbox: Open-source tools for bias detection and explainability.

- AWS AI Governance Tools: Built-in compliance features for audit trails and data security.

3. Internal Monitoring Systems

- Real-time dashboards track model performance and drift.

- Automated audit trails ensure readiness for regulatory inspections.

- Incident response workflows handle compliance breaches quickly.

- Model versioning helps enterprises prove accountability during audits.
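
One way to combine the audit-trail and model-versioning points above is an append-only log in which each entry's hash covers the previous entry, so later edits become detectable. This is a minimal sketch using only the Python standard library; the function names and the entry fields are assumptions for illustration, not a format any regulator prescribes.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def append_audit_event(log: list, model_version: str, event: str, details: dict) -> dict:
    """Append an event whose hash chains to the previous entry."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "event": event,
        "details": details,
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    entry["hash"] = hashlib.sha256(payload).hexdigest()
    log.append(entry)
    return entry


def verify_audit_log(log: list) -> bool:
    """Recompute every hash and check that the chain is unbroken."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if entry["prev_hash"] != prev_hash:
            return False
        if hashlib.sha256(payload).hexdigest() != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True
```

A production system would typically write these entries to write-once storage as well; the hash chain only shows that tampering occurred, it does not prevent it.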

How Businesses Can Prepare for AI Compliance

1. Audit and Inventory AI Systems

- Create a full registry of AI tools, models, and their functions.

- Map data flows across training, deployment, and monitoring.

- Assign each system to regulatory risk categories.

- Conduct recurring audits as systems evolve.
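
The registry described above can start as a very small data structure. The sketch below assumes a hypothetical `AISystem` record and a one-year audit interval; both are illustrative choices, not requirements drawn from any specific regulation.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class AISystem:
    name: str
    purpose: str
    risk_category: str                      # e.g. "minimal", "limited", "high"
    data_sources: List[str] = field(default_factory=list)
    last_audit: Optional[date] = None       # None means never audited


def audits_due(registry: List[AISystem], today: date, interval_days: int = 365) -> List[str]:
    """Return names of systems never audited or audited longer ago than the interval."""
    due = []
    for system in registry:
        if system.last_audit is None or today - system.last_audit > timedelta(days=interval_days):
            due.append(system.name)
    return due


registry = [
    AISystem("resume_screener", "candidate ranking", "high", ["ats_export"], date(2024, 1, 10)),
    AISystem("spam_filter", "email filtering", "minimal", [], date(2025, 3, 1)),
    AISystem("support_chatbot", "customer support", "limited"),
]
overdue = audits_due(registry, today=date(2025, 6, 1))
```

Keeping the data sources on each record also gives a starting point for mapping data flows, since every system lists where its inputs come from.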

2. Strengthen Data and Privacy Governance

- Ensure compliance with GDPR, CCPA, and sector-specific laws.

- Track data lineage from collection to deletion.

- Verify compliance of external data sources and vendors.

- Disclose data usage transparently to customers.

3. Establish AI Governance Structures

- Form ethics committees, including legal, technical, and business teams.

- Appoint compliance officers with AI-specific expertise.

- Define escalation paths for high-risk AI deployments.

4. Embed Human Oversight

- Define thresholds where human-in-the-loop is mandatory.

- Train reviewers to detect AI errors, bias, and anomalies.

- Maintain override mechanisms for automated systems.
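
The oversight steps above can be sketched as a routing rule plus an override field. The 0.9 confidence threshold, the `Decision` class, and the `needs_human_review` helper are all assumptions chosen for illustration; real thresholds would be set per use case by the governance team.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    model_output: str
    confidence: float
    human_override: Optional[str] = None    # set by a reviewer to overrule the model

    @property
    def final(self) -> str:
        """The reviewer's decision wins whenever one has been recorded."""
        if self.human_override is not None:
            return self.human_override
        return self.model_output


def needs_human_review(decision: Decision, high_risk: bool, min_confidence: float = 0.9) -> bool:
    """Mandatory review for high-risk systems or low-confidence outputs."""
    return high_risk or decision.confidence < min_confidence


decision = Decision(model_output="approve", confidence=0.72)
if needs_human_review(decision, high_risk=False):
    decision.human_override = "reject"      # a reviewer disagrees with the model
```

Storing the override next to the original model output, rather than overwriting it, keeps a record of how often reviewers disagree with the system.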

5. Develop AI Literacy and Training Programs

- Train leadership and staff on AI risks and compliance.

- Promote awareness of fairness, accountability, and transparency.

- Encourage organization-wide adoption of ethical AI practices.

6. Stay Ahead of Global Regulations

- Monitor evolving laws in the EU, U.S., UK, and APAC.

- Adjust governance frameworks proactively.

- Participate in global AI standard-setting initiatives.

Sector-Specific Compliance Requirements

1. Financial Services

- Model Risk Management: Banks must validate AI systems like traditional models.

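- GDPR Article 22: Restricts solely automated decisions with legal or similarly significant effects, such as credit decisions; consumers can request human review and contest the outcome.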

- Equal Credit Opportunity Act (ECOA): Requires testing lending algorithms for bias.

- Stress Testing: AI systems must be evaluated under adverse economic scenarios.

2. Healthcare

- FDA Regulation: By 2024, over 690 AI-enabled medical devices had received FDA marketing authorization. Class III devices require premarket approval, typically supported by clinical data.

- HIPAA Compliance: AI tools must protect patient health information.

- Informed Consent: Patients must be informed when AI contributes to diagnosis or treatment.

- Liability Concerns: Insurers may exclude malpractice claims if AI systems aren’t properly validated.

3. Human Resources

- Bias Audits: New York City’s Local Law 144 mandates annual bias audits for hiring AI.

- Candidate Consent: Required before applying AI-powered recruitment tools.

- Transparency Protocols: Employees must be able to appeal AI-driven decisions.
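
A bias audit of a hiring tool usually starts from selection rates per group. One common metric is the impact ratio: each group's selection rate divided by the highest group's rate. The sketch below is illustrative only; the group labels, the counts, and the 0.8 flagging threshold (borrowed from the widely used four-fifths rule of thumb) are assumptions, not figures from this article or a statement of what any law requires.

```python
def impact_ratios(outcomes: dict) -> dict:
    """Map each group to its selection rate divided by the highest group's rate.

    `outcomes` maps a group label to a (selected, total_applicants) pair.
    """
    rates = {group: selected / total for group, (selected, total) in outcomes.items()}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selections, so ratios are undefined")
    return {group: rate / best for group, rate in rates.items()}


def flagged_groups(outcomes: dict, threshold: float = 0.8) -> list:
    """Return groups whose impact ratio falls below the chosen threshold."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)


# Hypothetical audit data: (candidates advanced, candidates screened) per group.
audit_data = {"group_a": (50, 100), "group_b": (30, 100), "group_c": (45, 100)}
flags = flagged_groups(audit_data)
```

A flagged group is a prompt for closer investigation rather than proof of discrimination, since small sample sizes and job-related factors can also move the ratio.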

4. Marketing & Advertising

- Disclosure Rules: AI-generated content must be clearly labeled.

- Data Protection: Personalization tools must comply with GDPR/CCPA.

- IP Concerns: AI-generated creative assets require legal protections.

- Cross-Border Transfers: Marketing data must comply with regional transfer rules.

How Kanerika Helps Enterprises Stay Ahead of AI Regulation

At Kanerika, we help enterprises secure their AI systems while staying compliant with global regulations. Our AI security framework protects models, data, and workflows from emerging threats using a layered strategy that combines governance, risk detection, and automation.

We use tools like Microsoft Purview to classify sensitive data, detect insider risks, and enforce policies in real time. Our framework aligns with AI TRiSM principles—ensuring every model is transparent, accountable, and ethically deployed.

Kanerika’s solutions support compliance with laws like GDPR, HIPAA, and the EU AI Act, and are backed by certifications including ISO 27701, SOC 2, and CMMI Level 3. Whether you’re working with LLMs, RPA bots, or autonomous agents, our platform adapts to your needs and scales with your enterprise.

Through partnerships with Microsoft, Databricks, and AWS, we deliver enterprise-grade AI security that’s built for trust, compliance, and growth.

FAQs

1. What is AI regulation, and why is it important?

AI regulation involves creating laws and guidelines to ensure artificial intelligence is developed and used ethically, safely, and transparently. It’s crucial to prevent misuse, protect individual rights, and foster public trust in AI technologies.

2. Which countries have implemented AI regulations?

Jurisdictions such as the European Union, the United States, and India have introduced AI regulations. The EU’s AI Act is one of the most comprehensive, categorizing AI systems by risk level and imposing stricter rules on higher-risk applications. In India, the government has issued advisories and is developing frameworks to balance innovation with responsibility.

3. What are the key components of the EU AI Act?

The EU AI Act classifies AI systems into categories based on their risk to health, safety, and fundamental rights. High-risk AI systems face stricter obligations, including transparency, accountability, and data governance measures.

4. How do AI regulations impact businesses?

Businesses must comply with AI regulations to avoid penalties, ensure ethical use of AI, and maintain consumer trust. This includes implementing transparency measures, conducting risk assessments, and ensuring data privacy.

5. What challenges do regulators face in AI governance?

Regulators face challenges such as keeping pace with rapid technological advancements, ensuring international cooperation, and addressing ethical concerns like bias and accountability in AI systems.

6. How can individuals and organizations stay informed about AI regulations?

Individuals and organizations can stay informed by following updates from regulatory bodies, participating in industry forums, and engaging with educational resources on AI ethics and governance.