Large companies today work with data coming from dozens of apps, old systems, and cloud tools. Keeping everything connected and usable is one of the hardest parts of data work. This is where Databricks Enterprise Integration helps. Databricks lets organizations bring data from many sources into a single platform so teams can clean, process, and use it for analytics without jumping between multiple systems.

Demand for this kind of integration is growing. Databricks announced 70% year-over-year growth in its EMEA enterprise business, driven by demand for AI and data integration solutions. The global data intelligence and integration software market was estimated at 18.85 billion dollars in 2024 and is expected to reach 71.74 billion dollars by 2033, a compound annual growth rate of about 16.4%. These figures show why enterprise-scale integration is more than a back-office task; it is a strategic move for data-driven companies.

In this blog, you will learn how Databricks Enterprise Integration works, why large organizations choose it, and how it helps create faster insight across departments.


Key Takeaways

- Databricks unifies data from ERP, CRM, cloud, and legacy systems into a single lakehouse for faster insights.

- Delta Lake, Auto Loader, and Unity Catalog simplify ingestion, governance, and real-time analytics.

- Enterprises use Databricks to reduce engineering costs, improve ML deployment speed, and scale across clouds.

- Major brands like Ahold Delhaize, Shell, NAB, GM, Danone, and H&M rely on Databricks for AI, analytics, and data modernization.

- Kanerika helps organizations implement Databricks for migration, governance, AI integration, and end-to-end data transformation.

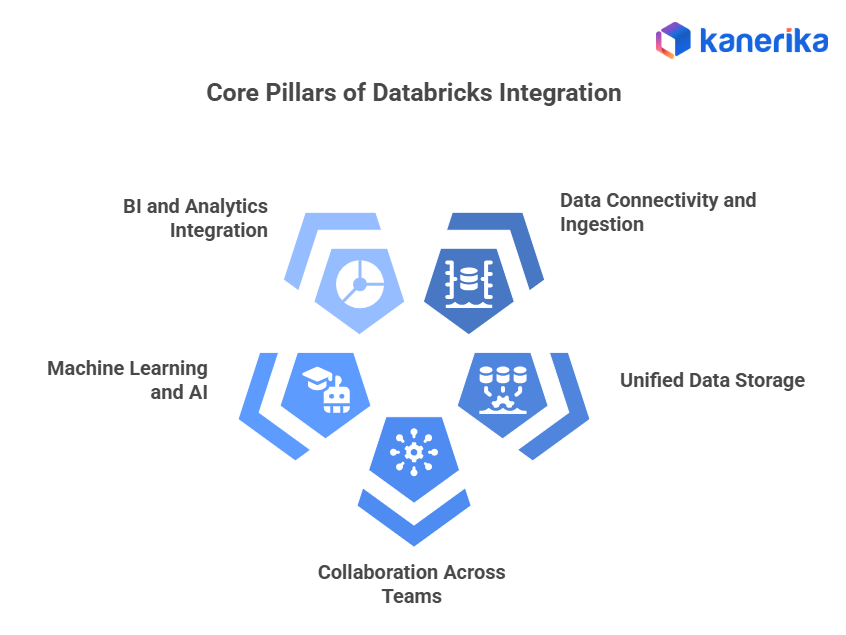

Core Pillars of Integration in Databricks

a. Data Connectivity and Ingestion

Databricks is known for its ability to connect with almost any enterprise data source. Whether your organization uses AWS, Azure, GCP, Salesforce, Oracle, SAP, or traditional on-premises databases, Databricks offers connectors that handle large-scale data ingestion. Auto Loader and Delta Live Tables let you set up pipelines that continuously pull data from multiple systems into the lakehouse. These pipelines track the source schema and adapt as it changes, so they stay reliable over time.

Real-life example: One global company streams customer data from Salesforce and order details from their ERP into Delta Lake. Now they have a real-time view of sales activity. This helps them make faster decisions and automate their reporting.
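As a rough illustration of the Auto Loader pattern described above, here is a minimal PySpark sketch. It assumes a Databricks runtime where a `spark` session exists; the function names, paths, and table name are hypothetical and chosen only for this example.

```python
def auto_loader_options(file_format: str, schema_location: str) -> dict:
    """Build the reader options for an Auto Loader (cloudFiles) stream."""
    return {
        "cloudFiles.format": file_format,
        # Where Auto Loader keeps the schema it has inferred so far.
        "cloudFiles.schemaLocation": schema_location,
        # Add newly seen columns to the schema instead of dropping them.
        "cloudFiles.schemaEvolutionMode": "addNewColumns",
    }


def start_ingest(spark, source_path: str, target_table: str, checkpoint_path: str):
    """Incrementally load new JSON files into a Delta table.

    Only works where `spark` is a live session with Auto Loader available,
    for example inside a Databricks notebook or job.
    """
    options = auto_loader_options("json", checkpoint_path + "/_schema")
    stream = (
        spark.readStream.format("cloudFiles")
        .options(**options)
        .load(source_path)
    )
    return (
        stream.writeStream
        .option("checkpointLocation", checkpoint_path)
        # Process everything that is currently available, then stop.
        .trigger(availableNow=True)
        .toTable(target_table)
    )
```

Keeping the options in a separate function makes them easy to review and reuse across sources. Removing the `trigger` call would leave the stream running continuously instead of in scheduled incremental runs.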

b. Unified Data Storage with Delta Lake

Delta Lake is where all your enterprise data lives. It handles ACID transactions, lets your schema change over time, supports time travel, and tracks versions. This makes your analytics more predictable. You avoid problems like broken pipelines or reports showing old data.

Example: A retail brand with more than 500 stores uses Delta Lake to maintain up-to-date transaction records. This helps with forecasting, store performance analysis, and accurate inventory management.
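The versioning, time travel, and schema evolution features mentioned above can be sketched with a few small helpers. This is an illustration rather than a reference implementation: the table name `sales.transactions` is hypothetical, and the statements are meant to be run through `spark.sql(...)` on a Databricks cluster.

```python
def history_sql(table: str) -> str:
    """SQL that lists the versions recorded for a Delta table."""
    return f"DESCRIBE HISTORY {table}"


def time_travel_sql(table: str, version: int) -> str:
    """SQL that reads a Delta table as it was at a given version."""
    return f"SELECT * FROM {table} VERSION AS OF {version}"


def append_with_schema_evolution(df, table: str) -> None:
    """Append a DataFrame and let new columns be added to the table schema."""
    (
        df.write.format("delta")
        .mode("append")
        .option("mergeSchema", "true")
        .saveAsTable(table)
    )


# Hypothetical table name, used only for illustration.
print(history_sql("sales.transactions"))
print(time_travel_sql("sales.transactions", 12))
```

On a cluster, `spark.sql(time_travel_sql("sales.transactions", 12))` would return the table as of version 12, which is useful for reproducing an old report or auditing a change.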

c. Collaboration Across Data Teams

Databricks helps data engineers, analysts, ML teams, and business users work together in a shared environment. Notebooks, Repos, cluster permissions, and Unity Catalog make it easy for teams to collaborate. They can share code, track versions, and access properly governed datasets. This cuts down on teams working in isolation. Analytics get delivered faster across the organization.

d. Machine Learning and AI Integration

Databricks supports the complete lifecycle of machine learning and AI. MLflow gives teams the ability to track experiments, manage models, deploy them, and monitor performance in production environments.

Data scientists can work on large datasets and build advanced models. They can push results into business applications without dealing with infrastructure headaches.

Example: A manufacturing company uses IoT sensor data to train predictive maintenance models in Databricks. This helps detect failures early and reduces operational downtime.
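Experiment tracking with MLflow might look roughly like the sketch below. The helper `log_training_run` and its `tracker` parameter are hypothetical names introduced for this example; by default the function uses the `mlflow` package, which is assumed to be installed, as it is on Databricks ML runtimes.

```python
def log_training_run(params: dict, metrics: dict, run_name: str, tracker=None) -> None:
    """Record the parameters and metrics of one training run.

    `tracker` defaults to the mlflow module. Passing another object with the
    same three methods makes the function easy to test without MLflow.
    """
    if tracker is None:
        import mlflow  # imported lazily so the sketch loads without MLflow

        tracker = mlflow
    with tracker.start_run(run_name=run_name):
        tracker.log_params(params)
        tracker.log_metrics(metrics)


# Example call (the values are made up for illustration):
# log_training_run({"max_depth": 5}, {"rmse": 0.42}, run_name="maintenance-model-v1")
```

Each call creates one run that shows up in the MLflow experiment UI, so teams can compare parameter choices side by side before promoting a model.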

e. BI, Analytics and Business Application Integration

Databricks works with the main BI tools: Power BI, Tableau, Looker, Sigma, and Qlik all connect. Direct access to lakehouse tables and fast SQL performance mean teams can build interactive dashboards on fresh data instead of waiting for scheduled refreshes. Business users across departments get real-time analytics, self-service reporting, and AI-driven insights.

Databricks Integration with Enterprise Systems

a. ERP Systems (SAP, Oracle, Microsoft Dynamics)

Enterprises rely on ERP systems for critical operations. Databricks simplifies insight creation by connecting ERP data with operational, customer, and financial datasets. It supports connectors, ingestion pipelines, and transformation layers that make ERP analytics faster and more accurate.

This helps improve supply chain visibility, inventory accuracy, financial forecasting, and manufacturing insights. AI and ML models built on ERP data can also automate demand planning and procurement processes.

b. CRM Systems (Salesforce, HubSpot)

Databricks brings together CRM, marketing, sales, and service data into a single customer 360 analytics model. Organizations gain deeper visibility into customer lifetime value, churn signals, lead quality, and behaviour patterns.

ML models can personalize customer journeys, score leads, and strengthen retention strategies based on predicted customer needs.

c. Cloud and Hybrid Environments

Databricks works across multiple cloud providers, including AWS, Azure, and GCP, which makes it a good fit for enterprises that operate in hybrid or multi-cloud environments.

Delta Sharing allows organizations to share data securely with vendors, partners, and customers without moving or duplicating files. This supports collaborative data systems, cross-cloud analytics, and regulatory compliance across industries such as banking, healthcare, and insurance.
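On the recipient side, reading a shared table could look like the sketch below. It assumes the open-source `delta-sharing` Python package is installed and that the data provider has sent a profile file; the share, schema, and table names are hypothetical.

```python
def shared_table_url(profile_path: str, share: str, schema: str, table: str) -> str:
    """Build a Delta Sharing table URL: <profile-file>#<share>.<schema>.<table>."""
    return f"{profile_path}#{share}.{schema}.{table}"


def load_shared_table(profile_path: str, share: str, schema: str, table: str):
    """Load a shared table into a pandas DataFrame."""
    import delta_sharing  # imported lazily; requires the delta-sharing package

    url = shared_table_url(profile_path, share, schema, table)
    return delta_sharing.load_as_pandas(url)


# Hypothetical names, used only for illustration.
print(shared_table_url("config.share", "retail", "sales", "orders"))
```

The recipient reads the provider's data in place through the sharing server, so no export files need to be produced or copied between organizations.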

d. Legacy Modernization

Many enterprises continue to rely on legacy tools like SSIS, Hadoop, and on-prem SQL pipelines. Databricks helps modernize these systems by migrating them to scalable lakehouse pipelines that support both ELT and ETL workflows.

This reduces infrastructure cost, speeds up processing, improves governance, and prepares the organization for AI-driven use cases. It is a critical step toward building a modern, future-ready data setup.

Architecture: How Enterprise Integration on Databricks Works

Enterprise integration on Databricks follows a simple but powerful setup that brings together data from multiple systems and prepares it for analytics and AI. The typical flow looks like this:

Source systems → Ingestion layer → Delta Lake → Workflows → ML and BI applications

- Source systems include ERP platforms, CRM tools, cloud storage, IoT sensors, marketing systems, on-prem databases, and legacy applications. Databricks connects to these sources through ready-made connectors and ingestion frameworks.

- The ingestion layer uses tools like Auto Loader and Delta Live Tables to ingest data in real time or in batch mode. This ensures continuous, reliable, and schema-aware ingestion across structured and unstructured data.

- Delta Lake acts as the unified storage layer. It stores all data in a consistent, version-controlled format that supports ACID transactions, time travel, and schema evolution. This becomes the single source of truth for analytics, machine learning, and reporting.

- Workflows built on Databricks manage data transformation, cleansing, enrichment, and aggregation. These pipelines prepare the data for downstream use while ensuring accuracy, lineage tracking, and reliability.

- ML and BI applications access this prepared data through Databricks SQL warehouses (formerly called SQL endpoints) and direct access to lakehouse tables. Teams can run interactive dashboards, build machine learning models, deploy AI solutions, or create data-driven business applications without delays.
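One way to picture the flow above is as a multi-task job in which each stage depends on the one before it. The sketch below builds a request body in the general shape used by the Databricks Jobs API (`tasks`, `task_key`, `depends_on`, `notebook_task`). The job name and notebook paths are hypothetical, and compute settings are left out to keep the example short.

```python
import json


def notebook_task(task_key: str, notebook_path: str, depends_on=()) -> dict:
    """Describe one notebook task and the tasks it has to wait for."""
    task = {
        "task_key": task_key,
        "notebook_task": {"notebook_path": notebook_path},
    }
    if depends_on:
        task["depends_on"] = [{"task_key": key} for key in depends_on]
    return task


def build_job(name: str) -> dict:
    """Chain ingest, transform, and publish so each step waits for the last."""
    return {
        "name": name,
        "tasks": [
            notebook_task("ingest", "/Pipelines/ingest"),
            notebook_task("transform", "/Pipelines/transform", depends_on=["ingest"]),
            notebook_task("publish", "/Pipelines/publish", depends_on=["transform"]),
        ],
    }


print(json.dumps(build_job("enterprise_integration"), indent=2))
```

Declaring dependencies rather than fixed start times means a late ingestion run delays the downstream steps instead of letting them process stale data.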

Throughout this setup, Unity Catalog provides governance and security. It manages permissions, access control, audit logs, data lineage, and compliance policies across all users and workloads. This ensures that data is consistently governed across the entire lakehouse environment, even in multi-cloud deployments.
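As a small illustration of how permissions are expressed in Unity Catalog, the sketch below generates the `GRANT` statements that would give a group read access to a single table. The catalog, schema, table, and group names are hypothetical; on a cluster, each statement would be run with `spark.sql(...)` or in a SQL editor by someone allowed to grant those privileges.

```python
def grant_sql(privilege: str, securable_type: str, name: str, principal: str) -> str:
    """Build a GRANT statement for a Unity Catalog securable object."""
    return f"GRANT {privilege} ON {securable_type} {name} TO `{principal}`"


# Reading a table also requires usage rights on its parent catalog and schema.
statements = [
    grant_sql("USE CATALOG", "CATALOG", "main", "analysts"),
    grant_sql("USE SCHEMA", "SCHEMA", "main.sales", "analysts"),
    grant_sql("SELECT", "TABLE", "main.sales.orders", "analysts"),
]

for statement in statements:
    print(statement)
```

Granting to groups rather than individual users keeps the rules short and makes audits easier as people join or leave teams.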


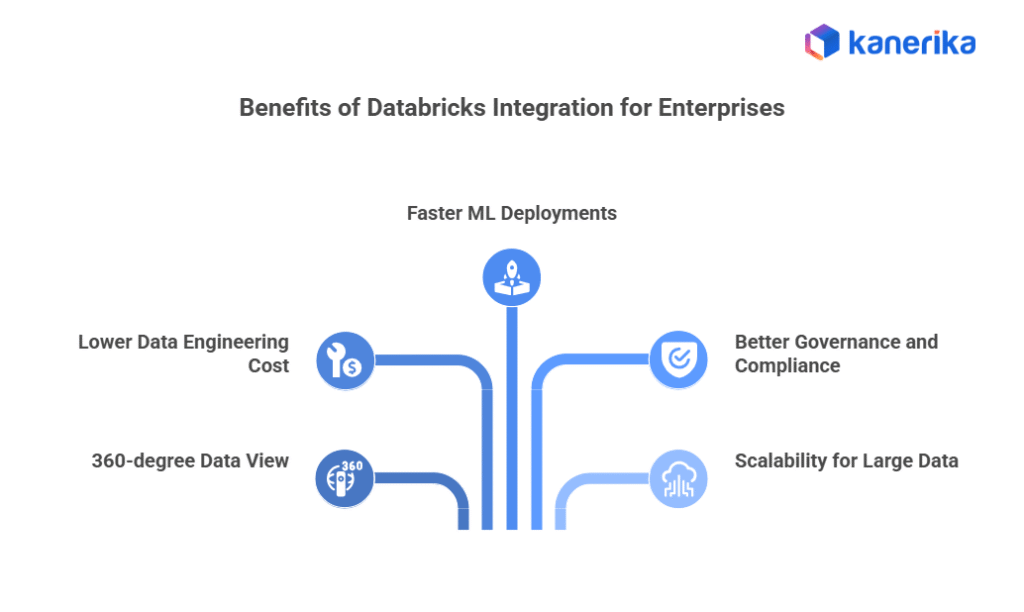

Benefits of Databricks Integration for Enterprises

1. 360-degree data view

Databricks brings all enterprise data into a single platform. You get a complete view of customers, operations, finance, supply chain, and business performance. Teams across functions work with the same trusted data. This reduces misalignment and improves decision-making.

2. Lower data engineering cost

The platform automates ingestion, transformation, workflow management, and scaling. This reduces manual engineering effort and lowers both operational and infrastructure costs. Companies spend less time fixing pipelines and more time delivering insights.

3. Faster machine learning deployments

Databricks brings together data, experimentation, model management, and deployment into one environment. MLflow speeds up the lifecycle from development to production, helping enterprises roll out AI solutions faster and more reliably.

4. Better governance and compliance

Unity Catalog centralizes governance, audit logs, fine-grained access control, and lineage. Enterprises can maintain strict regulatory compliance while supporting controlled data sharing across teams and external partners.

5. Scalability for large data volumes

Databricks is built to handle massive datasets, streaming data, global deployments, and high-compute workloads. The lakehouse setup scales elastically, allowing enterprises to manage everything from IoT pipelines to real-time analytics without performance issues.

Common Challenges and How Databricks Solves Them

Most enterprises struggle with disconnected systems, slow data processing, and complex governance requirements. Databricks helps address these challenges through a unified, scalable lakehouse setup.

1. Data silos

Companies store data everywhere: CRM, ERP, cloud systems, and on-prem servers. This makes it hard to see the full picture. Databricks brings everything together in Delta Lake, so teams can work with the same clean data for analytics, reports, and AI.

2. Batch delays

Old data warehouses process in batches, so your insights are always a day or a week behind. With Auto Loader and Delta Live Tables, Databricks ingests data incrementally or as a continuous stream. You go from weekly updates to near-real-time analytics.

3. Security and compliance

Managing access across different tools gets messy fast. Unity Catalog handles all the permissions, tracking, and audit logs in one place. You can meet governance requirements across any cloud.

4. Multi-cloud complexity

Enterprises working across Azure, AWS, and GCP often face integration issues. Databricks provides a consistent platform across all major clouds, and Delta Sharing lets you share data securely without copying files around. This makes hybrid setups much simpler.

5. Skill gaps

Different teams use different tools and languages, which slows down collaboration. Databricks supports SQL, Python, R, and Scala in a single workspace, making it easier for engineers, analysts, and data scientists to collaborate without learning a new toolset.


Major Enterprises Integrated with Databricks

Databricks supports a wide range of industry applications by combining unified data storage, scalable compute, and connected AI capabilities.

1. Retail

- Ahold Delhaize: Uses Databricks for real-time analytics, AI/ML, customer personalization, inventory forecasting, and loyalty programs.

- H&M Group: Reported as a Databricks customer for inventory management and data-driven customer insights.

2. Banking / Financial Services

- National Australia Bank (NAB): Centralizes hundreds of data sources on Databricks. They use it for fraud detection, chat assistants, and data governance.

- Mastercard: Applies Databricks for AI-driven commerce, data governance, and enterprise insights.

- HSBC: Listed among major Databricks customers, especially in the financial services space.

3. Energy / Industrial

- Shell: Uses Databricks for large-scale analytics, ML, and unified data strategy, particularly for energy operations, risk, and governance.

- Bosch: Uses Databricks in its supply chain and manufacturing data platform to drive IoT analytics and operational optimization.

4. Healthcare

Databricks is used by 9 of the 10 largest US health insurers and 8 of the 10 largest healthcare companies.

- Amgen: Uses Databricks to bring together structured data, improve governance, and enable traceable analytics.

- Hinge Health: Uses Unity Catalog (Databricks) for fine-grained access control and HIPAA compliance.

5. Transportation / Automotive

- Rivian: The EV manufacturer uses Databricks for real-time data ingestion, analytics, and AI to power their product development and operations.

- General Motors (GM): Uses Databricks for customer-360 analytics and data science workloads.

6. Consumer Goods

- Danone: Works with Databricks to scale AI, improve data quality, and speed up decision-making across global teams.

- Bayer (Consumer Health): Uses Databricks to build reusable data products, scalable analytics, and a governed data setup.

Kanerika + Databricks: Building Intelligent Data Ecosystems for Enterprises

Kanerika helps enterprises modernize their data infrastructure through advanced analytics and AI-driven automation. We deliver complete data, AI, and cloud transformation services for industries such as healthcare, fintech, manufacturing, retail, education, and public services. Our work covers data migration, engineering, business intelligence, and automation, with a focus on helping organizations achieve measurable outcomes.

As a Databricks Partner, we use the Lakehouse Platform to bring together data management and analytics. Our approach includes Delta Lake for reliable storage, Unity Catalog for governance, and Mosaic AI for model lifecycle management. This enables businesses to move from fragmented big data systems to a single, cost-efficient platform that supports ingestion, processing, machine learning, and real-time analytics.

Kanerika ensures security and compliance with global standards, including ISO 27001, ISO 27701, SOC 2, and GDPR. With deep experience in Databricks migration, optimization, and AI integration, we help enterprises turn complex data into useful insights and speed up innovation.


FAQs

What is Databricks enterprise integration?

It is the process of linking Databricks with other tools your company uses each day. This can include storage tools, workflow tools, analytics tools, and data management systems. With these links in place, data moves between systems smoothly, and teams can work in one shared environment without slow manual steps.

Why do companies need Databricks enterprise integration?

Most companies have many tools spread across cloud and on-site setups. When these tools do not connect well, data becomes messy and slow to use. Integration helps teams pull data into one place, clean it, and use it for reports or models. It also cuts the time spent on repeated tasks and lowers the chance of mistakes.

How complex is Databricks enterprise integration?

The setup depends on the tools you use, but most teams find the steps easy once access rules are ready. You will need service accounts, clear folder paths, and basic setup inside Databricks. Many cloud tools already provide built-in connectors, which makes the setup lighter and faster.

What systems can connect during Databricks enterprise integration?

Databricks can link with CRMs, data warehouses, data lakes, BI tools, workflow tools, and storage systems. It also works well with API-based tools. This wide support lets companies build a clean data path from source to model training to reports.

Does Databricks enterprise integration improve security?

Yes. It puts access rules, logs, and data checks in one place. Instead of managing rights in many systems, teams can use one central setup. This reduces risk and makes it easier to track who accessed or changed data. It also helps companies follow internal and external rules.