Microsoft’s $10 billion investment in OpenAI’s GPT models has reshaped its entire product suite, with GitHub Copilot alone reportedly helping developers code 55% faster and driving a 50% increase in developer satisfaction. But what exactly makes GPT models so transformative?

GPT models, or Generative Pre-trained Transformers, represent the cutting edge of artificial intelligence, powering everything from customer service chatbots to advanced content creation tools. These neural networks have redefined how machines understand and generate human language, processing context and nuance in ways that seemed impossible just a few years ago.

Whether you’re a business leader exploring AI integration, a developer looking to harness language models, or simply curious about this technology reshaping our digital landscape, understanding GPT models has become crucial. From their fundamental architecture to real-world applications, this guide breaks down everything you need to know about these powerful AI systems that are silently transforming how we work, communicate, and create.

Transform Your Business with AI-Powered Solutions!

Partner with Kanerika for Expert AI implementation Services

What is a GPT Model?

A GPT (Generative Pre-trained Transformer) model is a type of artificial intelligence that uses deep learning to process and generate human-like text. These models are trained on vast amounts of text data to understand patterns in language and context.

In today’s tech-driven world, GPT models have become transformative tools across various sectors. They power chatbots, virtual assistants, and content generation systems, helping businesses automate customer service and content creation. They assist in code generation, language translation, and educational tutoring. Their ability to understand and respond to natural language has made them valuable in research, writing, and data analysis.

Overview of GPT Models

Architectural Components

Built on the Transformer architecture, these models consist of multiple layers of transformer blocks, each containing self-attention mechanisms and feed-forward neural networks. This design enables GPT models to capture long-range dependencies in the text, making them highly effective for a variety of NLP tasks.

Features and Capabilities

One of the standout features of GPT models is their ability to generate human-like text. This is achieved through a mechanism known as “autoregression,” where the model generates one token at a time and uses its previous outputs as inputs for future predictions. This sequential generation process allows for the creation of coherent and contextually relevant text, setting GPT models apart from many other machine learning models in the NLP domain.
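
The autoregressive loop described above can be sketched with a toy example. This is only an illustration: `BIGRAM_PROBS` is a made-up lookup table standing in for a trained model, and a real GPT conditions on the whole preceding context rather than just the last token.

```python
import random

# Hypothetical toy "model": maps the previous token to next-token probabilities.
BIGRAM_PROBS = {
    "<start>": {"the": 1.0},
    "the": {"cat": 0.5, "dog": 0.5},
    "cat": {"sat": 1.0},
    "dog": {"sat": 1.0},
    "sat": {"<end>": 1.0},
}

def generate(max_tokens=10, seed=0):
    """Generate tokens one at a time, feeding each output back in as input."""
    rng = random.Random(seed)
    tokens = ["<start>"]
    for _ in range(max_tokens):
        dist = BIGRAM_PROBS[tokens[-1]]  # prediction depends on previous output
        next_token = rng.choices(list(dist), weights=list(dist.values()))[0]
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the <start> marker

print(" ".join(generate()))
```

Each pass through the loop appends one token and then uses the extended sequence for the next prediction, which is the essence of autoregression.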

Another remarkable capability of GPT models is their versatility. Due to their pre-training on diverse and extensive text corpora, these models can be fine-tuned to excel in specific tasks or industries. Whether it’s generating creative writing, answering questions in a customer service chatbot, or even composing music, the possibilities are virtually endless.

The evolution from GPT-1 to the latest versions has also seen significant improvements in efficiency and performance. Advanced optimization techniques and hardware accelerators like GPUs have made it feasible to train your own GPT model and deploy it, opening up new avenues for research and application.

Partner with Kanerika to Modernize Your Enterprise Operations with High-Impact Data & AI Solutions

The Evolution of GPT Models: From GPT-1 to GPT-4o

1. GPT-1

Architecture & Features

GPT-1 applied the Transformer architecture, introduced a year earlier in the 2017 paper “Attention Is All You Need,” to generative pre-training, relying on attention mechanisms instead of traditional recurrent models. With 117 million parameters, it demonstrated the ability to generate coherent text but was limited in scale and depth.

Capabilities

- Basic text generation and summarization.

- Limited understanding of context beyond a few sentences.

Applications

- Foundation for advanced NLP research.

- Simple chatbot implementations and document summarizers.

2. GPT-2

Architecture & Features

GPT-2 brought a significant leap in model size with 1.5 billion parameters, making it capable of generating more coherent and contextually relevant outputs. Its unsupervised learning on diverse datasets expanded its understanding of language patterns.

Capabilities

- Better contextual understanding across longer texts.

- Creative writing, poetry, and idea generation.

Applications

- Enhanced conversational agents and content generation.

- Early applications in automated storytelling.

Improvements Over GPT-1

- More than a tenfold increase in parameters (117 million to 1.5 billion) for greater sophistication.

- Ability to handle more complex and nuanced tasks.

3. GPT-3

Architecture & Features

With 175 billion parameters, GPT-3 redefined language models by providing human-like responses across various domains. It utilized few-shot and zero-shot learning, enabling users to prompt the model with minimal examples for accurate results.

Capabilities

- Advanced text comprehension and generation.

- Ability to code, translate languages, and generate detailed reports.

Applications

- Chatbots, virtual assistants, and creative writing tools.

- Coding support for developers and educational content generation.

Improvements Over GPT-2

- More than a 100x increase in parameters (1.5 billion to 175 billion), resulting in improved precision and versatility.

- Ability to adapt to different tasks with limited input.

4. GPT-4

Architecture & Features

GPT-4 built on the foundation of GPT-3, introducing multimodal capabilities, allowing it to process both text and image inputs. With improved parameter efficiency and enhanced fine-tuning techniques, GPT-4 achieved greater precision in language understanding and generation. The model focused on delivering human-like reasoning and a deeper understanding of nuanced contexts.

Key Features

- Ability to interpret images alongside text inputs.

- Support for extended conversation history for seamless contextual understanding.

- Enhanced safety and alignment with human values through iterative fine-tuning.

Capabilities

- Advanced Language Understanding: Handles complex queries with improved reasoning and coherence. Understands and generates nuanced, contextually relevant content.

- Multimodal Processing: Interprets image-based data, making it suitable for tasks like image captioning, visual Q&A, and more. Enables integration with OCR (Optical Character Recognition) tools for text extraction from visuals.

- Creative Outputs: Generates high-quality creative writing, from poetry to technical documentation. Assists with coding, debugging, and creating structured workflows.

Improvements Over GPT-3

- Multimodal Input Processing: A leap beyond GPT-3’s text-only capabilities, addressing a broader range of use cases.

- Deeper Contextual Understanding: Maintains coherence across longer conversations or documents.

- Enhanced Safety Features: Reduced potential for generating harmful or biased content through improved alignment techniques.

5. GPT-4o

GPT-4o (“o” for “omni”) represents a significant stride in achieving seamless human-computer interaction. The model accepts a diverse range of inputs, including text, audio, image, and video, and generates outputs in text, audio, or image formats, making it highly versatile.

GPT-4o can respond to audio inputs in as little as 232 milliseconds, averaging 320 milliseconds, which is close to human response times in conversation. It matches GPT-4 Turbo’s performance in English text and coding tasks, while offering substantial improvements in non-English languages.

Key Enhancements

- Cost-Effectiveness: Faster and 50% cheaper in the API than GPT-4 Turbo.

- Multimodal Capabilities: Processes and generates across multiple data formats.

- Superior Vision and Audio Understanding: Excels in interpreting visual and auditory inputs.

6. o1 Series

The o1 series introduces advanced reasoning models designed to tackle complex problem-solving tasks with human-like thought processes.

o1-Preview

This model emphasizes deep reasoning, allowing it to handle intricate problems in mathematics, coding, and scientific analysis. By allocating more time to deliberate before responding, o1-Preview processes multi-step problems to reach accurate conclusions.

Key Features

- Advanced Reasoning: Simulates human-like step-by-step thinking for complex problem-solving.

- Enhanced Accuracy: Excels in tasks requiring precision, such as advanced mathematics and coding.

- Versatility: Applicable across various domains, including scientific research and strategic planning.

Capabilities

- Solves intricate mathematical problems with high accuracy.

- Generates detailed coding solutions and assists in debugging.

- Provides comprehensive analysis for scientific research tasks.

o1-Mini

A streamlined version of o1-Preview, o1-Mini offers efficient reasoning capabilities at a reduced computational cost. It is approximately 80% cheaper than o1-Preview, making it an attractive option for users with budget constraints.

Key Features

- Cost-Effective: Delivers powerful reasoning abilities with lower computational expenses.

- Efficiency: Processes tasks swiftly, suitable for applications requiring quick responses.

- Accessibility: Designed to be more accessible to a broader range of users and developers.

Capabilities

- Performs quick coding tasks efficiently.

- Handles basic reasoning tasks without extensive computational resources.

- Suitable for applications needing rapid yet accurate problem-solving.

Working Mechanism of GPT Models

GPT models, built on the Transformer architecture, represent a groundbreaking approach to natural language processing. Their working mechanism involves a sophisticated interplay of multiple components, starting from processing raw text input to generating human-like responses. Through pre-training on massive datasets and subsequent fine-tuning, these models develop a deep understanding of language patterns, context, and relationships.

1. Pre-training Phase

- Model learns by predicting next words in sequences

- Processes billions of text examples to understand patterns

- Develops foundational knowledge of grammar, facts, and context
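
As a rough sketch of what "predicting next words" means numerically, the snippet below computes the cross-entropy loss for a single next-token prediction. The probabilities are invented for illustration; in a real model they come from the network's output layer.

```python
import math

def next_token_loss(predicted_probs, target_token):
    """Cross-entropy for one prediction: -log p(correct next token)."""
    return -math.log(predicted_probs[target_token])

# Hypothetical model output for the context "the cat sat on the".
probs = {"mat": 0.7, "roof": 0.2, "moon": 0.1}

print(next_token_loss(probs, "mat"))   # lower loss: the true token got high probability
print(next_token_loss(probs, "moon"))  # higher loss: the true token got low probability
```

Pre-training repeats this over billions of positions and adjusts the weights to push the average loss down.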

2. Input Processing

- Breaks text into tokens (word pieces or characters)

- Converts tokens into numerical embeddings

- Adds positional encodings to maintain sequence order
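
The three input-processing steps can be sketched in a few lines. Several simplifications are assumed here: a whitespace tokenizer stands in for the subword tokenizers GPT models actually use, the embedding table is random rather than learned, and the sinusoidal encoding comes from the original Transformer paper (GPT-2, for example, learns its position embeddings instead).

```python
import math
import random

def tokenize(text, vocab):
    """Whitespace tokenizer for illustration only."""
    return [vocab[word] for word in text.lower().split()]

def positional_encoding(position, d_model):
    """Sinusoidal encoding: sine on even dimensions, cosine on odd ones."""
    return [
        math.sin(position / 10000 ** (i / d_model)) if i % 2 == 0
        else math.cos(position / 10000 ** ((i - 1) / d_model))
        for i in range(d_model)
    ]

vocab = {"gpt": 0, "models": 1, "generate": 2, "text": 3}  # hypothetical vocabulary
d_model = 4
rng = random.Random(0)
# Randomly initialised embedding table; in a real model these values are learned.
embedding_table = [[rng.uniform(-1, 1) for _ in range(d_model)] for _ in vocab]

token_ids = tokenize("GPT models generate text", vocab)
# Model input = token embedding + positional encoding, element by element.
inputs = [
    [e + p for e, p in zip(embedding_table[tok], positional_encoding(pos, d_model))]
    for pos, tok in enumerate(token_ids)
]
print(token_ids)
print(len(inputs), len(inputs[0]))
```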

3. Attention Mechanism

- Evaluates relationships between all words in the input

- Uses multiple attention heads for parallel processing

- Weighs the importance of different contexts simultaneously

- Creates a comprehensive contextual understanding
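
A minimal sketch of the attention computation follows. It shows a single head of scaled dot-product attention with the causal mask that GPT-style decoders apply, so each position attends only to itself and earlier positions. Real models first project the input into separate query, key, and value vectors and run many heads in parallel; both are omitted here for brevity.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Single-head attention; position i may only attend to positions 0..i."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Similarity of this query to every visible key, scaled by sqrt(d_k).
        scores = [
            sum(qj * kj for qj, kj in zip(q, keys[j])) / math.sqrt(d_k)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible values.
        out = [
            sum(w * values[j][d] for j, w in enumerate(weights))
            for d in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
outputs, weights = causal_self_attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row sums to 1 and is one entry longer than the previous row, reflecting the growing context visible to later tokens.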

4. Feed-Forward Networks

- Processes attention outputs through neural layers

- Transforms information into complex representations

- Combines different aspects of language understanding

- Builds deeper semantic connections

5. Output Generation

- Creates probability distributions for possible next tokens

- Uses sampling techniques to control randomness

- Generates coherent text based on learned patterns

- Maintains consistency with previous context
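
The sampling step can be illustrated as follows. This sketch assumes two common controls, temperature and top-k filtering; the logits are invented, and `sample_next_token` is a hypothetical helper rather than any library's API.

```python
import math
import random

def sample_next_token(logits, temperature=1.0, top_k=None, rng=random):
    """Turn raw scores into probabilities, then sample one token."""
    items = sorted(logits.items(), key=lambda kv: kv[1], reverse=True)
    if top_k is not None:
        items = items[:top_k]  # keep only the k highest-scoring tokens
    scaled = [score / temperature for _, score in items]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    tokens = [tok for tok, _ in items]
    choice = rng.choices(tokens, weights=probs)[0]
    return choice, dict(zip(tokens, probs))

logits = {"mat": 2.0, "roof": 1.0, "moon": 0.1}  # hypothetical model scores
token, probs = sample_next_token(logits, temperature=0.7, top_k=2, rng=random.Random(0))
print(token, probs)
```

Lower temperatures concentrate probability on the top token and make output more predictable; higher temperatures flatten the distribution and increase variety.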

6. Fine-tuning and Alignment

- Optimizes for specific use cases and applications

- Trains on specific tasks for specialized performance

- Implements RLHF for better human alignment

- Adds safety measures and bias reduction

How to Choose the Right GPT Model for Your Needs

Factors to Consider

Choosing the right GPT model for your specific needs involves a careful evaluation of several factors. The first and perhaps most obvious is the size of the model, usually indicated by the number of parameters it contains. Larger models like GPT-3 offer stronger performance but come with increased computational requirements and costs. If your task is relatively simple or you’re constrained by resources, smaller versions like GPT-2 or even DistilGPT2 may suffice.

Fun Fact – The “Distil” in DistilGPT2 refers to “knowledge distillation,” where the model plays the role of a “student” learning from a larger “teacher” model, in this case GPT-2. Even though it’s like a mini-me version of the original, DistilGPT2 can still deliver impressively accurate results while being faster and more efficient!

Comparative Analysis of Different Versions

It’s also beneficial to compare different versions of GPT models to understand their strengths and limitations. For instance, while GPT-3 excels in tasks that require a deep understanding of context, GPT-2 might be more than adequate for simpler tasks like text generation or summarization. Some specialized versions are optimized for specific tasks, offering a middle ground between performance and computational efficiency.

What Makes a Good GPT Model?

The quality of a GPT model can be evaluated based on several criteria. Accuracy is often the most straightforward metric, but it’s not the only one. Other factors like computational efficiency, scalability, and the ability to generalize across different tasks are also crucial. Moreover, the interpretability of the model’s predictions can be vital in applications where understanding the reasoning behind decisions is important.

Factors to Consider

1. Model Size: A larger model with more parameters often means better performance, as it can capture more complex patterns and dependencies in the data. However, there is a trade-off with computational efficiency.

2. Training Data: The quality and diversity of the training data play a crucial role. Data should be well-curated, diverse, and representative of the tasks the model can perform.

3. Architecture: The underlying architecture, like the Transformer structure in the case of GPT, should be capable of handling the complexities involved in natural language understanding and generation.

4. Self-Attention Mechanism: The self-attention mechanisms should effectively weigh the importance of the input, capturing long-range dependencies and relationships between words or phrases.

5. Training Algorithms: Effective optimization algorithms and training procedures are essential for the model to learn efficiently.

6. Fine-Tuning Capabilities: The ability to fine-tune the model on specific tasks or datasets can enhance its performance and applicability.

7. Low Latency & High Throughput: For real-world applications, the model should be able to provide results quickly and handle multiple requests simultaneously.

8. Robustness: A good GPT model should be robust to variations in input, including handling ambiguous queries or coping with grammatical errors in the text.

9. Coherence and Contextuality: The model should generate outputs that are coherent and contextually appropriate, especially in tasks that require generating long-form text.

10. Ethical and Fair: It should minimize biases and not generate harmful or misleading information.

Prerequisites to Build a GPT Model

Hardware and Software Requirements

Before you can start building a GPT model, you’ll need to ensure that you have the necessary hardware and software. High-performance GPUs are generally recommended for training these models, as they can significantly speed up the process. NVIDIA’s data-center GPUs, such as the A100 or H100, are popular choices, and the older Tesla, Titan, and Quadro lines have also been widely used. Alternatively, cloud-based solutions offer scalable computing resources and are a viable option for those who do not wish to invest in dedicated hardware.

On the software side, you’ll need a machine-learning framework that supports the Transformer architecture. TensorFlow and PyTorch are the most commonly used frameworks for this purpose. Additionally, you’ll need various libraries and tools for tasks like data preprocessing, model evaluation, and deployment.

Introduction to Datasets

The quality of your dataset is a critical factor that can make or break your GPT model. For pre-training, a large and diverse text corpus is ideal. This could range from Wikipedia articles and news stories to books and scientific papers. The goal is to expose the model to a wide variety of language constructs and topics.

For fine-tuning, the dataset should be highly specific to the task you want the model to perform. For instance, if you’re building a chatbot, transcripts of customer service interactions would be valuable. If it’s a medical diagnosis tool, then clinical notes and medical records would be more appropriate.

How to Create a GPT Model

Steps to Initialize a Model

Creating a GPT model from scratch is a complex but rewarding process. The first step is to initialize your GPT model architecture. This involves specifying the number of layers, the size of the hidden units, and other hyperparameters like learning rate and batch size. These settings can significantly impact the model’s performance, so it’s crucial to choose them carefully based on your specific needs and the resources available to you.
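
One way to keep these settings together is a small configuration object. The sketch below is illustrative: `GPTConfig` is a hypothetical class, the architecture defaults are in the range of the smallest GPT-2 model, and the learning rate and batch size are placeholder values. The parameter estimate uses the common approximation of about 12 × d_model² weights per transformer block and ignores biases and normalization parameters.

```python
from dataclasses import dataclass

@dataclass
class GPTConfig:
    n_layers: int = 12           # number of transformer blocks
    d_model: int = 768           # size of the hidden units
    n_heads: int = 12            # attention heads per block
    vocab_size: int = 50257
    context_length: int = 1024
    learning_rate: float = 3e-4  # placeholder value
    batch_size: int = 32         # placeholder value

    def approx_parameters(self) -> int:
        """Rough count: ~12 * d_model^2 per block, plus token and position embeddings."""
        blocks = 12 * self.n_layers * self.d_model ** 2
        embeddings = (self.vocab_size + self.context_length) * self.d_model
        return blocks + embeddings

config = GPTConfig()
# The hidden size must split evenly across attention heads.
assert config.d_model % config.n_heads == 0
print(f"{config.approx_parameters():,} parameters (approx.)")
```

With these defaults the estimate comes to roughly 124 million parameters, which gives a quick sense of how layer count and hidden size drive model size before any training begins.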

It’s not just about the architecture; it’s also about planning and domain-specific data. Before you even start initializing your model, you need to have a clear plan and a dataset that aligns with your specific needs. This planning stage is often overlooked but is crucial for the success of your model.

Setting Up the Environment and Required Tools

Before you start the training process, you’ll need to set up your development environment. This involves installing the necessary machine learning frameworks and libraries, such as TensorFlow or PyTorch, and setting up your hardware or cloud resources. You’ll also need various data preprocessing tools to prepare your dataset for training. These could include text tokenizers, data augmentation libraries, and batch generators.

Setting up the environment is not just about installing the right tools; it’s also about ensuring you have the computational resources to handle the training process. Whether it’s robust computing infrastructure or cloud-based solutions, make sure you have the resources to meet the computational demands of training and fine-tuning your model.

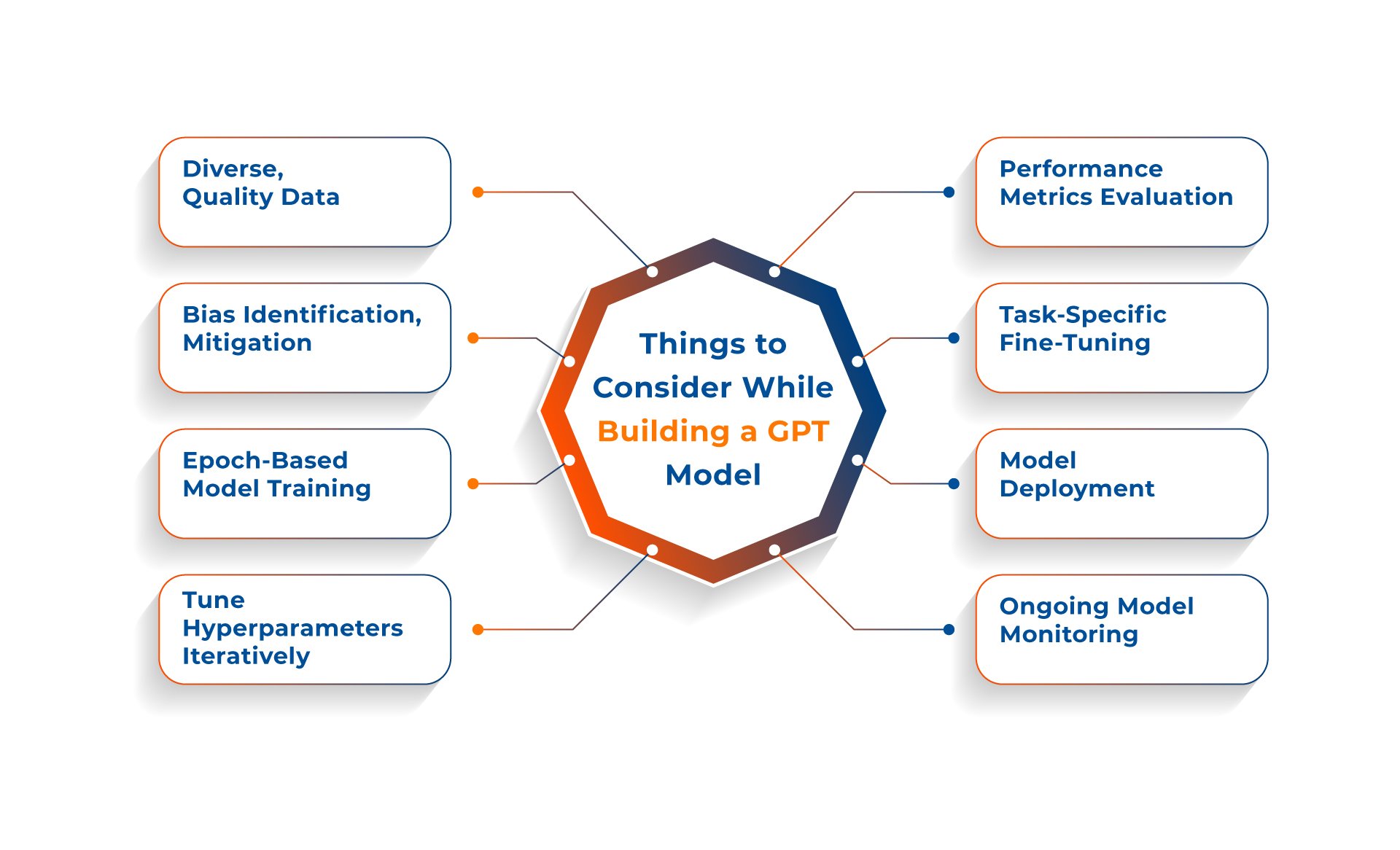

Things to Consider While Building a GPT Model

1. Data Quality and Diversity

The quality and diversity of your training data can significantly impact your model’s performance. It’s crucial to use a dataset that is both large and diverse enough to capture the various nuances and complexities of the language. This is especially important for tasks that require a deep understanding of context or specialized knowledge.

2. Handling Biases in the Data

Another critical consideration is the potential for biases in your training data. Biases can manifest in many forms, such as gender bias, racial bias, or even bias toward certain topics or viewpoints. It’s essential to identify and mitigate these biases during the data preprocessing stage to ensure that your model performs fairly and accurately across diverse inputs.

3. Training the Model

Once your environment is set up, and you have your domain-specific data ready, the next step is to train your model. Training involves feeding your prepared dataset into the initialized model architecture and allowing the model to adjust its internal parameters based on the data. This is usually done in epochs, where one epoch represents one forward and backward pass of all the training examples.

4. Hyperparameter Tuning

While the model is training, you may need to fine-tune hyperparameters like learning rate, dropout rate, or weight decay to improve its performance. This process can be iterative and may involve running multiple training sessions with different settings to find the optimal combination of hyperparameters.

5. Model Evaluation

After training is complete, you should evaluate your model’s performance using metrics suited to your application: accuracy, F1-score, or precision and recall for classification-style tasks, and perplexity for open-ended text generation. It’s also crucial to run your model on a separate held-out test set that it has not seen during training or tuning to gauge its generalization capability.
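
For classification-style uses of a model, such as intent detection in a chatbot, precision, recall, and F1 can be computed directly from predictions. The labels below are made up for illustration.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

y_true = [1, 0, 1, 1, 0, 1]  # hypothetical held-out labels
y_pred = [1, 0, 0, 1, 1, 1]  # hypothetical model predictions
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)
```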

6. Fine-Tuning for Specific Tasks

If you’ve built a generic GPT model, you may need to fine-tune it on a more specialized dataset to perform specific tasks effectively. Fine-tuning involves running additional training cycles on a smaller, domain-specific dataset.

7. Deployment

The final step is deploying your trained and fine-tuned GPT model into a real-world application. This could range from integrating it into a chatbot to using it for data analysis or natural language processing tasks. Ensure that your deployment environment meets the computational demands of your model.

8. Monitoring and Maintenance

After deployment, continuously monitor the model’s performance and make adjustments as needed. Data drift, where the distribution of the data changes over time, may require you to retrain or fine-tune your model periodically.

Pre-Training Your GPT Model

Pre-training serves as the foundational phase for GPT models, where the model is trained on a large, general-purpose dataset. This allows the model to learn the basic structures of the language, such as syntax, semantics, and even some world knowledge. The significance of pre-training cannot be overstated; it sets the stage for the model’s later specialization through fine-tuning. Essentially, pre-training imbues the model with a broad understanding of language, making it capable of generating and understanding text in a coherent manner.

The quality of the dataset used for pre-training is crucial. It must be diverse and extensive enough to cover various aspects of language. The data should also be clean and well-preprocessed to ensure that the model learns effectively.

Methods and Strategies for Effective Pre-Training

Effective pre-training is not just about feeding data into the model; it’s about doing so in a way that maximizes the model’s ability to learn and generalize. Here are some strategies to make pre-training more effective:

1. Data Augmentation

Data augmentation involves modifying the existing data to create new examples. This can be done through techniques like back translation, synonym replacement, or sentence shuffling. Augmenting the data can enhance the model’s ability to generalize to new, unseen data.

2. Layer Normalization and Gradient Clipping

These are techniques used to stabilize the training process. Layer normalization involves normalizing the inputs in each layer to have a mean of zero and a standard deviation of one, which can speed up training and improve generalization. Gradient clipping involves limiting the value of gradients during backpropagation to prevent “exploding gradients,” which can destabilize the training process.
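
Both techniques are short enough to sketch directly. The layer normalization below omits the learned scale and shift parameters that real implementations include, and the clipping function shows one common variant, rescaling by the overall gradient norm.

```python
import math

def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and (approximately) unit standard deviation."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def clip_by_global_norm(grads, max_norm):
    """Rescale gradients so their overall L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]

normed = layer_norm([1.0, 2.0, 3.0, 4.0])
clipped = clip_by_global_norm([3.0, 4.0], max_norm=1.0)  # original norm is 5
print(normed)
print(clipped)
```

Clipping by norm preserves the direction of the gradient and only shrinks its length, which is why it is often preferred over clipping each value independently.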

3. Use of Attention Mechanisms

Attention mechanisms, particularly the self-attention at the heart of the Transformer architecture, have been pivotal in the success of GPT models. They allow the model to weigh different parts of the input text differently, enabling it to capture long-range dependencies and relationships in the data.

4. Monitoring Metrics

Just like in the fine-tuning phase, monitoring metrics like loss and accuracy during pre-training is crucial. This helps in understanding how well the GPT model is learning and whether any adjustments to the training process are needed.

By employing these methods and strategies, you can ensure that the pre-training phase is as effective as possible, setting a strong foundation for subsequent fine-tuning and application-specific training.

Steps to Train a GPT Model

1. Detailed Walkthrough of the Training Process

Training a GPT model is not a trivial task; it requires a well-thought-out approach and meticulous execution. Once you’ve preprocessed your data and configured your model architecture, you’re ready to begin the training phase. Typically, the data is divided into batches, which are fed into the model one at a time: each batch is one iteration, and one full pass over the dataset is an epoch. After each iteration, the model’s parameters are updated based on a loss function, which quantifies how well the model’s predictions align with the actual data.
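
The batch-and-update cycle can be seen in miniature below. This is a deliberately tiny stand-in, assuming a one-weight linear model and mean squared error instead of a transformer and cross-entropy; only the loop structure carries over.

```python
import random

def train(data, epochs=50, batch_size=4, lr=0.05, seed=0):
    """Mini-batch gradient descent for the one-parameter model y = w * x."""
    rng = random.Random(seed)
    data = list(data)
    w = 0.0
    history = []
    for _ in range(epochs):
        rng.shuffle(data)  # new batch composition every epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradient of the batch's mean squared error with respect to w.
            grad = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)
            w -= lr * grad  # parameter update after each batch
        loss = sum((w * x - y) ** 2 for x, y in data) / len(data)
        history.append(loss)
    return w, history

data = [(k / 4, 2 * k / 4) for k in range(1, 9)]  # points on the line y = 2x
w, history = train(data)
print(w, history[0], history[-1])
```

The learned weight approaches 2 and the per-epoch loss shrinks, mirroring what a loss curve should look like during healthy training.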

2. Batch Size and Learning Rate

Choosing the right batch size and learning rate is crucial. A smaller batch size often provides a regularizing effect and lower generalization error. On the other hand, a larger batch size gives more stable gradient estimates and makes better use of hardware, but may generalize less well. The learning rate controls how much the model parameters are updated at each step. A high learning rate might make the model converge faster but can overshoot the optimal solution, while a low learning rate could make the training process extremely slow.
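
The learning-rate trade-off is easy to see on a toy problem. The sketch below minimizes f(x) = x², a stand-in chosen for simplicity, with three different learning rates.

```python
def gradient_descent(lr, steps=20, x0=1.0):
    """Minimize f(x) = x^2, whose gradient is 2x; the minimum is at x = 0."""
    x = x0
    for _ in range(steps):
        x -= lr * 2 * x
    return x

for lr in (0.01, 0.1, 1.1):
    print(lr, gradient_descent(lr))
```

With the smallest rate the result is still far from zero after 20 steps, the middle rate gets close, and the largest rate overshoots on every step so the value grows instead of shrinking.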

3. Use of Optimizers

Optimizers like Adam or RMSprop adapt the effective step size for each parameter during training, which can lead to faster convergence and lower training costs.
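
To show what "adjusting dynamically" means, here is a sketch of the Adam update rule for a single parameter. The hyperparameter defaults are the commonly used ones except for the learning rate, which is enlarged for this toy problem; the objective (x − 3)² is an arbitrary example.

```python
import math

def adam_minimize(grad_fn, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=200):
    """Minimize a one-dimensional function with the Adam update rule."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias correction for early steps
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

# Gradient of f(x) = (x - 3)^2 is 2 * (x - 3); the minimum is at x = 3.
x_min = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
print(x_min)
```

Dividing by the running root-mean-square of the gradient is what gives each parameter its own effective step size.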

4. Monitoring and Managing the Training Phase

Effective management of the training phase can make or break your model’s performance. This involves not just monitoring key metrics like loss, accuracy, and validation scores, but also making real-time adjustments to your training strategy based on these metrics. Tools like TensorBoard can be invaluable for this, offering real-time visualizations that can help you make data-driven decisions.

5. Early Stopping and Checkpointing

If you notice that your model’s validation loss has stopped improving (or is worsening), it might be time to employ techniques like early stopping. This involves halting the training process if the model’s performance ceases to improve on a held-out validation set. Checkpointing, or saving the model’s parameters at regular intervals, can also be beneficial. This allows you to revert to a ‘good’ state if the model starts to overfit.
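
A minimal sketch of early stopping combined with checkpointing is shown below. `EarlyStopping` is a hypothetical helper, the validation losses are invented, and a small dictionary stands in for real model weights.

```python
import copy

class EarlyStopping:
    """Stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=2):
        self.patience = patience
        self.best_loss = float("inf")
        self.best_state = None
        self.bad_epochs = 0

    def step(self, val_loss, model_state):
        """Record one epoch; return True when training should stop."""
        if val_loss < self.best_loss:
            self.best_loss = val_loss
            self.best_state = copy.deepcopy(model_state)  # checkpoint the best model
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

val_losses = [0.90, 0.70, 0.65, 0.68, 0.72, 0.80]  # made-up validation curve
stopper = EarlyStopping(patience=2)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    state = {"epoch": epoch}  # stand-in for real model weights
    if stopper.step(loss, state):
        stopped_at = epoch
        break

print(stopped_at, stopper.best_state)
```

Training halts after two epochs without improvement, and the saved checkpoint is the state from the epoch with the lowest validation loss.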

6. Hyperparameter Tuning

Once you have a baseline model, you can perform hyperparameter tuning to optimize its performance. Techniques like grid search or random search can be used to find the optimal set of hyperparameters for your specific problem.
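
Grid search and random search differ only in how candidate settings are chosen. In the sketch below, `validation_loss` is a made-up formula standing in for "train a model with these settings and measure its validation loss", which in practice is the expensive part.

```python
import itertools
import random

def validation_loss(lr, batch_size):
    """Hypothetical stand-in for training a model and returning its validation loss."""
    return (lr - 0.01) ** 2 * 1e4 + abs(batch_size - 32) / 32

grid = {"lr": [0.001, 0.01, 0.1], "batch_size": [16, 32, 64]}

# Grid search: try every combination of the listed values.
best_grid = min(
    (dict(zip(grid, combo)) for combo in itertools.product(*grid.values())),
    key=lambda params: validation_loss(**params),
)

# Random search: sample settings, here with the learning rate on a log scale.
rng = random.Random(0)
candidates = [
    {"lr": 10 ** rng.uniform(-3, -1), "batch_size": rng.choice([16, 32, 64])}
    for _ in range(20)
]
best_random = min(candidates, key=lambda params: validation_loss(**params))

print(best_grid)
print(best_random)
```

Grid search is exhaustive but grows quickly with the number of hyperparameters; random search covers continuous ranges with a fixed budget of trials.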

By carefully monitoring and managing each aspect of the training process, you can ensure that your GPT model is as accurate and efficient as possible, making it a valuable asset for whatever application you have in mind.

Tips for Generating and Optimizing GPT Models

1. Enhancing the Efficiency and Performance

The journey doesn’t end with your GPT model’s training. There are several avenues to explore for optimizing its performance further. One such method is model pruning, which involves removing insignificant parameters to make the model more efficient without a significant loss in performance. Another technique is quantization, which involves converting the model’s parameters to a lower bit-width. This not only reduces the model’s size but can also improve its speed, making it more deployable in real-world applications.
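
Both ideas can be sketched on a plain list of weights. The example assumes magnitude-based pruning and symmetric 8-bit quantization, which are common choices but not the only ones; the weights are invented.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitudes."""
    n_prune = int(len(weights) * sparsity)
    if n_prune == 0:
        return list(weights)
    threshold = sorted(abs(w) for w in weights)[n_prune - 1]
    pruned, removed = [], 0
    for w in weights:
        if abs(w) <= threshold and removed < n_prune:
            pruned.append(0.0)
            removed += 1
        else:
            pruned.append(w)
    return pruned

def quantize_int8(weights):
    """Symmetric quantization: integers in [-127, 127] plus one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(q_weights, scale):
    return [q * scale for q in q_weights]

weights = [0.02, -0.75, 0.31, -0.01, 1.27, 0.05]  # hypothetical layer weights
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(pruned)
print(q, scale)
```

The restored values differ from the originals by at most half a quantization step, which is the precision traded away for the smaller storage format.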

2. Model Compression and Pruning Techniques

Model compression techniques like knowledge distillation can also be employed. In this method, a smaller model, often referred to as the “student,” is trained to replicate the behavior of the larger, trained “teacher” model. This student model is then more computationally efficient and easier to deploy, making it ideal for resource-constrained environments.
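
The core of distillation is a loss that rewards the student for matching the teacher's output distribution. The sketch below uses a simplified form, the KL divergence between temperature-softened distributions; practical recipes usually combine this with the ordinary training loss, and the logits here are invented.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's predictions
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

teacher = [4.0, 1.0, 0.5]
good_student = [3.8, 1.1, 0.4]  # close to the teacher
poor_student = [0.5, 1.0, 4.0]  # ranks the tokens the opposite way

print(distillation_loss(good_student, teacher))
print(distillation_loss(poor_student, teacher))
```

A temperature above 1 softens both distributions so the student also learns from the teacher's relative preferences among less likely tokens.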

3. Dynamic Inline Values and Directives

Dynamic inline values and directives can be used to give GPT inline instructions. For example, you can use custom markup such as brackets to introduce some variety to the output. This is particularly useful when you are using GPT in an exploratory way, similar to how you would use a Jupyter Notebook.

4. Optimizing for Specific Tasks

GPT can be carefully adjusted for specific purposes such as text generation, code completion, text summarization, and text transformation. For instance, if you’re using GPT for code generation, you can use the directive “Optimize for space/time complexity” to get more efficient code.

Fine-Tuning GPT Models

1. Customizing Pre-Trained Models for Specific Tasks

After the initial phase of pre-training, a GPT model can be fine-tuned to specialize in a particular task or domain. This involves training the model on a smaller, task-specific dataset. The fine-tuning process allows the model to apply the general language understanding it gained during pre-training to the specialized task, thereby improving its performance. This is particularly useful in scenarios like customer service chatbots, medical diagnosis, and legal document summarization where domain-specific knowledge is crucial.

2. Strategies for Successful Fine-Tuning

Successful fine-tuning involves more than just running the model on a new dataset. It’s crucial to adjust the learning rate to prevent the model from unlearning the valuable information it gained during pre-training. Techniques like gradual unfreezing, where layers are unfrozen gradually during the fine-tuning process, can also be beneficial. Another strategy is to use a smaller learning rate for the earlier layers and a larger one for the later layers, as the earlier layers capture more general features that are usually transferable to other tasks.
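
Both strategies reduce to simple schedules. In the sketch below, `layerwise_learning_rates` and `trainable_layers` are hypothetical helpers, layer 0 is taken to be the earliest layer, and the rate and decay values are placeholders.

```python
def layerwise_learning_rates(n_layers, top_lr=1e-4, decay=0.8):
    """Give the last layer top_lr and each earlier layer a progressively smaller rate."""
    return [top_lr * decay ** (n_layers - 1 - i) for i in range(n_layers)]

def trainable_layers(n_layers, epoch):
    """Gradual unfreezing: unfreeze one more layer per epoch, starting from the last."""
    n_unfrozen = min(n_layers, epoch + 1)
    return list(range(n_layers - n_unfrozen, n_layers))

rates = layerwise_learning_rates(4)
print(rates)
for epoch in range(4):
    print(epoch, trainable_layers(4, epoch))
```

Early layers change slowly or not at all at first, which protects the general features learned during pre-training while the later layers adapt to the new task.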

3. Hyperparameter Tuning for Fine-Tuning

Hyperparameter tuning is another essential aspect of fine-tuning. Parameters like batch size, learning rate, and the number of epochs can significantly impact the model’s performance. Automated hyperparameter tuning tools like Optuna or Hyperopt can be used to find the optimal set of hyperparameters for your specific task.

4. Monitoring and Evaluation During Fine-Tuning

It’s essential to continuously monitor the model’s performance during the fine-tuning process. Tools like TensorBoard can be used for real-time monitoring of various metrics like loss and accuracy. This helps in making informed decisions on when to stop the fine-tuning process to prevent overfitting.

Deploying GPT Models

1. Integration into Applications

Once your GPT model is trained and fine-tuned, the next step is deployment. This involves integrating the model into your application or system. Depending on your needs, this could be a web service, a mobile app, or even an embedded system. Tools like TensorFlow Serving or PyTorch’s TorchServe can simplify this process, allowing for easy deployment and scaling.

2. Scalability and Latency Considerations

When deploying a GPT model, it’s essential to consider factors like scalability and latency. The model should be able to handle multiple requests simultaneously without a significant drop in performance. Caching strategies and load balancing can help manage high demand, ensuring that the model remains responsive even under heavy load.
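
A simple form of caching is to memoize responses for repeated prompts. The sketch below uses Python's `functools.lru_cache`; `run_model` is a hypothetical placeholder for the real inference call, and this approach only makes sense when generation is deterministic, for example with sampling disabled.

```python
from functools import lru_cache

calls = {"count": 0}  # tracks how often the expensive model is actually invoked

def run_model(prompt):
    """Hypothetical stand-in for an expensive model call."""
    calls["count"] += 1
    return f"response to: {prompt}"

@lru_cache(maxsize=1024)
def cached_generate(prompt):
    return run_model(prompt)

prompts = ["reset my password", "opening hours", "reset my password"]
responses = [cached_generate(p) for p in prompts]
print(calls["count"], cached_generate.cache_info().hits)
```

The repeated prompt is served from the cache, so the model runs only for the two distinct prompts.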

3. Security and Compliance

Security is another critical consideration when deploying GPT models, especially in regulated industries like healthcare and finance. Ensure that your deployment strategy complies with relevant laws and regulations, such as GDPR for data protection or HIPAA for healthcare information.

Best Practices for Maintaining GPT Model Performance

1. Continuous Monitoring and Updating

After deploying your GPT model, it’s crucial to keep an eye on its performance metrics. Tools like Prometheus or Grafana can be invaluable for this, allowing you to monitor aspects like request latency, error rates, and throughput in real-time. But monitoring is just the first step. You should update your model based on these metrics and any new data that comes in. OpenAI suggests that the quality of a GPT model’s output largely depends on the clarity of the instructions it receives. Therefore, you may need to refine your prompts or instructions to the model based on the performance metrics you observe.

2. Advanced Monitoring Techniques

In addition to basic metrics, consider setting up custom alerts for specific events, such as a sudden spike in error rates or a drop in throughput. These can serve as early warning signs that something is amiss, allowing you to take corrective action before it becomes a significant issue.

3. Handling Drift in Data and Model Performance

Data drift is an often overlooked aspect of machine learning model maintenance. It refers to the phenomenon where the distribution of the incoming data changes over time, which can adversely affect your model’s performance. OpenAI recommends breaking down complex tasks into simpler subtasks to lower error rates. This can be particularly useful in combating data drift. By isolating specific subtasks, you can more easily identify where the drift is occurring and take targeted action.
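One common way to quantify drift is to compare the distribution of a simple input feature, such as prompt length, between a reference period and the current period. The sketch below uses the Population Stability Index (PSI), a technique chosen here for illustration rather than one named in the guidance above; the bucket edges and the choice of feature are assumptions.

```python
import math
from collections import Counter


def bucket_shares(values, edges):
    """Fraction of values falling in each bucket defined by ascending edges."""
    if not values:
        raise ValueError("values must not be empty")
    counts = Counter()
    for v in values:
        idx = sum(v >= e for e in edges)  # number of edges at or below v
        counts[idx] += 1
    return [counts[i] / len(values) for i in range(len(edges) + 1)]


def population_stability_index(reference, current, edges, eps=1e-6):
    """PSI = sum over buckets of (cur - ref) * ln(cur / ref)."""
    ref = bucket_shares(reference, edges)
    cur = bucket_shares(current, edges)
    psi = 0.0
    for r, c in zip(ref, cur):
        r, c = max(r, eps), max(c, eps)   # guard against empty buckets
        psi += (c - r) * math.log(c / r)
    return psi
```

A frequently quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but these cut-offs are conventions and should be checked against your own data.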

4. Strategies for Managing Data Drift

Re-sampling the Training Data: Periodically update your training data to better reflect the current data distribution.

Parameter Tuning: Adjust hyperparameters, or fine-tune the model’s weights on recent data, so that the model fits the new data distribution.

Model Re-training: In extreme cases, you may need to re-train your model entirely.

Systematic Testing: Any changes you make should be tested systematically to ensure they result in a net improvement. OpenAI emphasizes the importance of this, suggesting the development of a comprehensive test suite to measure the impact of modifications made to prompts or other aspects of the model.
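The systematic testing described above can be sketched as a small regression suite that scores a baseline and a candidate (for example, an old and a new prompt template) on the same cases. All names here, including `PromptCase` and `is_net_improvement`, are hypothetical, and the models are plain callables standing in for real model calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class PromptCase:
    name: str
    prompt: str
    check: Callable[[str], bool]  # returns True when the output is acceptable


def pass_rate(model: Callable[[str], str], cases: list) -> float:
    """Share of test cases whose output passes its check."""
    if not cases:
        raise ValueError("cases must not be empty")
    passed = sum(1 for case in cases if case.check(model(case.prompt)))
    return passed / len(cases)


def is_net_improvement(baseline, candidate, cases, min_gain: float = 0.0) -> bool:
    """True when the candidate beats the baseline by more than min_gain."""
    return pass_rate(candidate, cases) - pass_rate(baseline, cases) > min_gain
```

Because model output can vary between runs, a real suite would typically run each case several times and use enough cases for the difference in pass rate to be meaningful.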

By implementing these best practices, you can significantly enhance the effectiveness of your interactions with GPT models, ensuring that they remain a valuable asset for your team or project.

Challenges and Limitations of GPT Models

Computational Costs

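GPT models perform strongly across many language tasks, but they come with high computational costs, especially the larger versions like GPT-3 and GPT-4. This can be a limiting factor for small organizations or individual developers. For models you host yourself, techniques like model pruning (removing weights that contribute little to the output) and quantization (storing weights at lower numerical precision) can reduce memory and compute requirements, usually at the cost of a small loss in accuracy.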
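To show what quantization does at the smallest scale, the sketch below applies symmetric 8-bit quantization to a short list of weights in plain Python. It is a toy illustration with invented function names; real toolchains quantize whole tensors, often per channel, and use more careful calibration.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0   # float value of one integer step
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]
```

Storing each weight as an 8-bit integer instead of a 32-bit float cuts weight memory to about a quarter, and the rounding error per weight is at most half of `scale`.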

Ethical and Societal Implications

The ethical considerations of GPT models are critical, especially as they become more integrated into daily life. These concerns include the risk of perpetuating biases, spreading misinformation, and generating misleading or harmful content. To address these issues, transparency in training data and methods is essential, as is the development of guidelines and safeguards for responsible use.

Kanerika: Transforming Businesses with Tailored AI Solutions

At Kanerika, we champion innovation by delivering tailored AI solutions that redefine business operations across industries. Our expert team develops custom AI technologies designed to tackle unique challenges and unlock transformative potential.

From finance and manufacturing to healthcare and logistics, we craft intelligent, industry-specific strategies that enhance efficiency, deliver actionable insights, and drive a competitive edge. Our solutions go beyond generic AI, focusing on practical, impactful outcomes.

By leveraging cutting-edge AI technologies like predictive analytics, intelligent automation, and machine learning, we turn complex business challenges into strategic opportunities. With a global track record of success and partnerships with renowned clients, Kanerika is your trusted ally in digital transformation.

We don’t just implement AI; we empower businesses to reimagine their future.

FAQs

What is a GPT model?

A GPT model is a language model that predicts the next token (roughly, the next word or word fragment) in a sequence, building up coherent and contextually relevant text one step at a time. Think of it as a far more capable form of autocomplete, trained on massive amounts of data to understand and generate human-like language. By learning patterns in language, it can write text, translate, and answer questions. A released model does not keep learning on its own; its capabilities change when a new version is trained and released.

What does GPT stand for?

GPT stands for Generative Pre-trained Transformer. “Generative” means it creates new text, “pre-trained” indicates that it learned from massive datasets beforehand, and “transformer” names its underlying architecture, a neural network design built around self-attention.

What is a GPT-3 model?

GPT-3 (Generative Pre-trained Transformer 3) is a language model released by OpenAI in 2020, with 175 billion parameters. It learns patterns from massive datasets to complete sentences, answer questions, and produce creative formats such as poems or scripts. It is sometimes described as a statistical parrot, but one that tracks context well enough to produce nuanced and coherent output.

How many types of GPT are there?

There isn’t a fixed number of “types” of GPT. Think of it as a family of models; GPT-3, GPT-3.5-turbo, and GPT-4 are prominent examples, each with different capabilities and training data. New models are constantly being developed, so any specific count is quickly outdated. The key difference lies in their size, training, and resulting performance.

What is the GPT-4 model?

GPT-4 is a massively powerful language model, far exceeding its predecessors in understanding and generating human-like text. It’s essentially a sophisticated pattern-matching machine, predicting the next word in a sequence with remarkable accuracy based on its immense training data. This allows it to perform diverse tasks, from answering questions to creating stories and translating languages. Ultimately, it’s a leap forward in AI’s ability to understand and interact with the world through language.

Who invented ChatGPT?

ChatGPT wasn’t invented by a single person but is a product of OpenAI, a research company. Think of it like a collaborative masterpiece, built by a team of AI researchers and engineers. Their work leveraged advancements in deep learning and massive datasets to bring this conversational AI to life. Essentially, it’s a collective achievement rather than a solo creation.

What is the full form of ChatGPT?

ChatGPT doesn’t have a formal “full form” like an acronym. It’s a name, a brand, combining “Chat” to describe its conversational ability and “GPT” which stands for Generative Pre-trained Transformer – the underlying technology. Think of it like “Google” – it’s a name, not an acronym needing expansion.

Is GPT an NLP model?

Yes, GPT (Generative Pre-trained Transformer) is fundamentally an NLP model. It leverages deep learning techniques to understand and generate human-like text, a core function of Natural Language Processing. Its ability to process and create coherent language is precisely what defines it as an NLP model. Essentially, GPT’s power stems directly from its NLP capabilities.

What is the difference between the GPT-3 and GPT-4 models?

GPT-4 improves on GPT-3 in reasoning ability and context length. The original GPT-3 handled 2,048 tokens of context, while GPT-4 launched with 8,192-token and 32,768-token variants, so it can follow more complex instructions and longer conversations. GPT-4 also accepts images as input, whereas GPT-3 is text-only. In practice, this shows up as more accurate and more nuanced responses.

What is the difference between GPT-4 and GPT-4 Turbo?

GPT-4 Turbo is a later, lower-cost variant of GPT-4 that OpenAI announced in November 2023. Its main advantages are a much larger context window of 128,000 tokens, a more recent knowledge cutoff, and lower per-token API pricing. It can therefore take in far more material in a single request than the original GPT-4.

How to get GPT-4 Turbo?

GPT-4 Turbo isn’t sold as a standalone product. Access comes through a paid ChatGPT plan such as ChatGPT Plus or through the OpenAI API, which is billed by usage. Model availability changes over time, so check OpenAI’s website for the most up-to-date access options.

What is the difference between GPT-4 and GPT-4o?

GPT-4 and GPT-4o are different models. GPT-4, released in March 2023, accepts text and image input and produces text. GPT-4o (the “o” stands for “omni”) was released by OpenAI in May 2024 as a natively multimodal model that handles text, audio, and images, and it was introduced as faster and cheaper to use through the API than GPT-4 Turbo. GPT-4o is therefore a newer model in the GPT-4 family, not a label for the initial GPT-4 release.

Is GPT a deep learning model?

Yes, GPT (Generative Pre-trained Transformer) is fundamentally a deep learning model. It utilizes a complex, multi-layered neural network architecture – the transformer – to process and generate text. This architecture allows it to learn intricate patterns and relationships within language data, enabling its impressive text generation capabilities. Essentially, its “deepness” lies in its many interconnected layers processing information.

What is the full form of GPT?

GPT stands for Generative Pre-trained Transformer. It’s a type of AI model that learns from massive datasets to generate human-like text. Essentially, it’s a sophisticated word-prediction engine, capable of writing, translating, and answering questions. Think of it as a highly advanced autocomplete on steroids.