Big data has become the backbone of modern business strategy and the foundation on which modern AI implementations stand. According to Statista, 75% of businesses worldwide use data to drive innovation, while 50% report that data helps them compete in the market. But with great data come great challenges.

According to Research and Markets, the big data and analytics services market is projected to grow to $365.42 billion by 2029 at a compound annual growth rate of 21.3%.

Enterprise data volumes grow by an average of 63% per month, with 12% of organizations reporting increases of 100% or more, according to a survey by Matillion and IDG. This explosive growth brings both opportunities and obstacles that can make or break your data strategy. The opportunities range from implementing agentic AI to gaining a major market advantage.

Bottom Line: While big data offers tremendous potential for driving innovation and business growth, most organizations struggle with 11 core challenges that prevent them from extracting real value from their data investments.

What is Big Data?

Big data refers to datasets that are too large, complex, or fast-moving for traditional data processing tools to handle effectively. It’s characterized by the “5 Vs”:

- Volume: Petabytes or exabytes of information

- Velocity: Real-time or near real-time data streams

- Variety: Structured, semi-structured, and unstructured data types

- Veracity: Data quality and accuracy concerns

- Value: The ability to extract meaningful insights

Transform Your Business with Data & AI-Powered Solutions!

Partner with Kanerika for Expert Data & AI Implementation Services

The 11 Biggest Big Data Challenges

1. Data Volume Explosion

The Challenge:

Organizations are drowning in data. Companies now handle petabytes or even exabytes of information, growing faster than their infrastructure can support.

Real Impact:

- Storage costs can reach millions annually

- Query performance degrades as datasets expand

- Infrastructure struggles under massive data loads

Solutions:

- Cloud storage adoption: AWS S3, Azure Blob, Google Cloud Storage offer scalable solutions

- Data compression: Reduce storage needs by 50-80%

- Automated lifecycle management: Archive old data, delete unnecessary files

- Data lakes: Store raw data cheaply until needed
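Automated lifecycle management can be sketched as a simple rule that maps how recently an object was accessed to a storage tier. The tier names and the 30/180/730-day thresholds below are assumptions chosen for illustration; real policies depend on your retention requirements and your provider's tiering options.

```python
from datetime import date

def choose_tier(last_accessed: date, today: date) -> str:
    """Pick a storage tier from the number of days since last access.

    Thresholds are hypothetical; tune them to your own retention policy.
    """
    age_days = (today - last_accessed).days
    if age_days <= 30:
        return "hot"      # frequently used, keep on fast storage
    if age_days <= 180:
        return "cool"     # occasionally used, cheaper storage
    if age_days <= 730:
        return "archive"  # rarely used, cheapest storage
    return "delete"       # past the assumed retention window

today = date(2024, 1, 15)
tiers = {
    "recent_logs": choose_tier(date(2024, 1, 1), today),
    "last_quarter": choose_tier(date(2023, 10, 1), today),
    "last_year": choose_tier(date(2023, 1, 1), today),
    "legacy_dump": choose_tier(date(2020, 1, 1), today),
}
```

In practice a rule like this would usually be expressed as a lifecycle policy in the storage service itself rather than in application code.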

Example:

Netflix processes over 15 petabytes of data daily but uses cloud-native solutions and intelligent data tiering to manage costs effectively.

2. Data Integration Complexity

The Challenge:

Data comes from everywhere – databases, APIs, IoT devices, social media, third-party sources. Integrating these disparate systems creates massive headaches.

Pain Points:

- Data silos prevent comprehensive analysis

- Complex ETL processes increase costs and delays

- Inconsistent formats make data unusable

- Integration projects can take months or years

Solutions:

- Modern integration platforms: Apache Kafka, Snowflake, Talend

- API-first architecture: Standardize data access methods

- Data virtualization: Query data without moving it

- Schema-on-read approaches: Handle diverse data types flexibly
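To make the schema-on-read idea concrete, here is a minimal sketch in which raw JSON lines are stored as-is and a schema is applied only when the data is read. The field names, the sample records, and the `read_with_schema` helper are all hypothetical.

```python
import json

# Raw events from two different sources, stored without a fixed schema.
RAW_EVENTS = [
    '{"user_id": "42", "amount": "19.99", "source": "web"}',
    '{"user_id": 7, "amount": 5, "device": "sensor-a"}',
]

def read_with_schema(line, schema):
    """Parse one raw JSON line and coerce only the fields the reader needs.

    Fields missing from the record become None; extra fields are ignored.
    """
    record = json.loads(line)
    return {
        name: cast(record[name]) if name in record else None
        for name, cast in schema.items()
    }

# The schema lives with the reader, not with the stored data.
schema = {"user_id": int, "amount": float, "source": str}
rows = [read_with_schema(line, schema) for line in RAW_EVENTS]
```

Different consumers can apply different schemas to the same raw data, which is the main flexibility this approach offers over enforcing one schema at write time.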

Success Story:

Walmart integrated over 200 data sources using Apache Kafka, reducing integration time from months to weeks while improving data freshness.

3. Data Quality Issues

The Challenge:

“Garbage in, garbage out” is the biggest threat to big data success. Poor quality data leads to wrong decisions and wasted resources.

Common Problems:

- Inconsistent data formats across systems

- Missing or incomplete records

- Duplicate entries inflating datasets

- Outdated information skewing analysis

Solutions:

- Automated data validation: Real-time quality checks

- Data profiling tools: Identify quality issues automatically

- Master data management: Single source of truth for key entities

- Data cleansing pipelines: Fix issues before analysis
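A basic validation step might look like the sketch below, which rejects records with missing required fields or duplicate IDs before they reach analysis. The `id` and `email` field names and the sample records are assumptions for illustration; production pipelines typically use a dedicated data quality tool.

```python
def validate(records, required=("id", "email")):
    """Split records into clean rows and rejected rows with a reason."""
    seen_ids = set()
    clean, rejected = [], []
    for rec in records:
        # A field counts as missing if it is absent, None, or an empty string.
        missing = [f for f in required if rec.get(f) in (None, "")]
        if missing:
            rejected.append((rec, "missing: " + ", ".join(missing)))
        elif rec["id"] in seen_ids:
            rejected.append((rec, "duplicate id"))
        else:
            seen_ids.add(rec["id"])
            clean.append(rec)
    return clean, rejected

records = [
    {"id": 1, "email": "a@example.com"},
    {"id": 1, "email": "b@example.com"},  # duplicate id
    {"id": 2, "email": ""},               # incomplete record
    {"id": 3, "email": "c@example.com"},
]
clean, rejected = validate(records)
```

Keeping the rejected rows together with a reason makes it easier to report quality issues back to the source system instead of silently dropping data.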

Impact:

IBM has estimated that poor data quality costs the US economy $3.1 trillion annually. Separately, widely cited research on data-driven organizations reports that they are 23x more likely to acquire customers and 6x more likely to retain them.

4. Real-Time Processing Demands

The Challenge:

Business moves at the speed of light. Organizations need insights immediately, not hours or days later.

Industry Requirements:

- E-commerce: Real-time recommendations and fraud detection

- Finance: Millisecond trading decisions and risk monitoring

- Healthcare: Patient monitoring and emergency alerts

- Manufacturing: Predictive maintenance and quality control

Solutions:

- Stream processing: Apache Flink, Apache Storm, Amazon Kinesis

- Edge computing: Process data closer to the source

- In-memory databases: Redis, SAP HANA for faster queries

- Event-driven architecture: React to data changes instantly
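Much of stream processing comes down to grouping events into time windows as they arrive. The sketch below shows a tumbling (fixed, non-overlapping) window count in plain Python; the event format and the 60-second window are assumptions, and engines such as Apache Flink additionally handle out-of-order events, state, and fault tolerance.

```python
from collections import defaultdict

def tumbling_window_counts(events, window_seconds=60):
    """Count events per (window_start, key).

    events: iterable of (timestamp_in_seconds, key) pairs.
    """
    counts = defaultdict(int)
    for ts, key in events:
        # Align the timestamp to the start of its window.
        window_start = (ts // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)

events = [(0, "login"), (59, "login"), (60, "login"), (61, "purchase")]
counts = tumbling_window_counts(events)
```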

Case Study:

Capital One processes over 1 billion events daily in real-time using Apache Kafka and custom streaming applications to detect fraud and improve customer experience.

5. Scalability and Performance Bottlenecks

The Challenge:

Systems that work fine with small datasets crash when scaled up. Performance degrades as data and users increase.

Scaling Problems:

- Slow query response times frustrate users

- System crashes during peak usage

- Adding more hardware doesn’t always help

- Legacy systems can’t handle modern workloads

Solutions:

- Distributed computing: Apache Spark, Hadoop clusters

- Query optimization: Indexing, partitioning, caching

- Auto-scaling infrastructure: Cloud-native solutions that grow with demand

- Load balancing: Distribute work across multiple servers
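Partitioning and load distribution both rely on assigning each record to a worker in a stable way. One common approach, sketched here with a hypothetical `partition_for` helper, is to hash the record key and take the result modulo the number of partitions.

```python
import hashlib

def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition index in [0, num_partitions).

    Uses SHA-256 rather than Python's built-in hash() so the mapping
    is stable across processes and restarts.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions

keys = ["trip-1001", "trip-1002", "trip-1003", "trip-1001"]
assignments = [partition_for(k, 8) for k in keys]
```

Note that simple modulo hashing reshuffles most keys when the partition count changes; systems that resize often tend to use consistent hashing instead.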

Technology Stack:

Companies like Uber use Apache Spark clusters with thousands of nodes to process billions of trips and deliver insights in seconds.

6. Data Security and Privacy Concerns

The Challenge:

Big data is a big target for cybercriminals. Data breaches can cost millions and destroy reputations.

Security Threats:

- Healthcare data breaches cost $10.93 million on average

- GDPR fines can reach €20 million or 4% of global annual turnover, whichever is higher

- Ransomware attacks target data-rich companies

- Insider threats from employees and contractors

Compliance Requirements:

- GDPR: European data protection laws

- CCPA: California privacy regulations

- HIPAA: Healthcare data protection

- SOX: Financial reporting standards

Solutions:

- Encryption: Protect data at rest and in transit

- Access controls: Role-based permissions and multi-factor authentication

- Data masking: Hide sensitive information in non-production environments

- Regular audits: Monitor access and detect suspicious activity

- Privacy by design: Build security into systems from the start
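As an illustration of data masking, the sketch below shows two simple techniques: partially hiding an email address for display, and replacing a value with a keyed hash so records can still be joined without exposing the original. The function names and the sample key are hypothetical, and a real deployment would load the key from a secrets manager.

```python
import hashlib
import hmac

def mask_email(email: str) -> str:
    """Keep the first character and the domain, hide the rest."""
    local, _, domain = email.partition("@")
    return local[:1] + "***@" + domain

def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace a value with a keyed SHA-256 hash (HMAC).

    The same input and key always give the same token, so joins still work.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()

SECRET_KEY = b"example-key-do-not-use"  # placeholder for illustration only
masked = mask_email("alice@example.com")
token = pseudonymize("alice@example.com", SECRET_KEY)
```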

7. Cost Management and ROI Challenges

The Challenge:

Big data projects can quickly spiral out of control financially. Cloud costs, infrastructure investments, and staffing expenses add up fast.

Cost Drivers:

- Cloud storage and compute expenses growing 50-100% annually

- Specialized talent commands premium salaries

- Infrastructure over-provisioning wastes resources

- Failed projects provide no return on investment

Cost Optimization Strategies:

- Right-sizing resources: Match capacity to actual needs

- Serverless architectures: Pay only for what you use

- Reserved instances: Commit to long-term usage for discounts

- Cost monitoring tools: AWS Cost Explorer, Azure Cost Management

- Data compression and deduplication: Reduce storage requirements
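The effect of right-sizing storage can be estimated with simple arithmetic. The per-GB prices below are hypothetical placeholders, not quotes from any provider; the point is only to show how moving cold data to cheaper tiers changes the monthly bill.

```python
# Hypothetical prices in USD per GB per month; replace with your provider's rates.
PRICE_PER_GB_MONTH = {"hot": 0.023, "cool": 0.010, "archive": 0.002}

def monthly_storage_cost(gb_by_tier):
    """Sum the monthly cost for a mapping of tier name -> stored GB."""
    return sum(PRICE_PER_GB_MONTH[tier] * gb for tier, gb in gb_by_tier.items())

# 100 TB kept entirely on hot storage versus the same data spread across tiers.
all_hot = monthly_storage_cost({"hot": 100_000})
tiered = monthly_storage_cost({"hot": 20_000, "cool": 30_000, "archive": 50_000})
savings = all_hot - tiered
```

Under these assumed prices, tiering cuts the monthly storage cost from about $2,300 to about $860. Retrieval and request fees, which this sketch ignores, can offset part of that saving.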

Budget Planning:

Successful organizations allocate 15-20% of their IT budget to data and analytics initiatives, with clear ROI metrics defined upfront.

8. Skills Gap and Talent Shortage

The Challenge:

Demand for data professionals far exceeds supply. Finding qualified people to implement and manage big data systems is extremely difficult.

Talent Shortage Statistics:

- 85% of organizations report difficulty finding qualified data professionals

- Data scientist salaries have increased 30-50% in recent years

- Average time to fill data roles: 4-6 months

- 60% of data teams are understaffed

Solutions:

- Internal training programs: Upskill existing employees

- Low-code/no-code platforms: Enable business users to work with data

- Outsourcing and partnerships: Work with specialized firms

- University partnerships: Build talent pipelines

- Remote work options: Access global talent pools

Training Investment:

Companies that invest in employee data training see 5x higher productivity and 20% lower turnover rates.

9. Data Governance and Compliance

The Challenge:

Without proper governance, data becomes chaotic. Teams work with conflicting information, security risks increase, and compliance violations occur.

Governance Problems:

- Different departments using different definitions for the same metrics

- No clear data ownership or accountability

- Inconsistent security policies across systems

- Lack of data lineage and audit trails

Governance Framework:

- Data catalog: Inventory of all data assets

- Data stewardship: Clear ownership and responsibilities

- Policy enforcement: Automated compliance monitoring

- Metadata management: Documentation and lineage tracking

- Quality monitoring: Continuous data health checks
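A data catalog with ownership and lineage can be modeled, at its simplest, as entries that record an owner and their upstream sources. The sketch below uses hypothetical dataset and team names and walks the upstream links to answer the question of where a report's data comes from.

```python
from dataclasses import dataclass, field

@dataclass
class CatalogEntry:
    name: str
    owner: str                                    # accountable data steward
    upstream: list = field(default_factory=list)  # names of source datasets

def lineage(catalog, name):
    """Return all transitive upstream dataset names, nearest first."""
    seen, queue = [], list(catalog[name].upstream)
    while queue:
        current = queue.pop(0)
        if current not in seen:
            seen.append(current)
            queue.extend(catalog[current].upstream)
    return seen

catalog = {
    e.name: e
    for e in [
        CatalogEntry("raw_orders", "ingestion-team"),
        CatalogEntry("fx_rates", "finance-team"),
        CatalogEntry("clean_orders", "data-eng", ["raw_orders"]),
        CatalogEntry("revenue_report", "analytics", ["clean_orders", "fx_rates"]),
    ]
}
sources = lineage(catalog, "revenue_report")
```

Dedicated catalog tools capture this metadata automatically, but the underlying structure is the same: named assets, owners, and links between them.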

Success Metric:

Organizations with strong data governance report 23% higher data quality scores and 47% fewer compliance issues.

10. Technology Selection and Integration

The Challenge:

The big data technology landscape changes constantly. Choosing the right tools and making them work together is complex and risky.

Technology Overload:

- Hundreds of vendors and solutions to evaluate

- Open source vs. commercial tool decisions

- Integration complexity between different platforms

- Risk of vendor lock-in

Selection Criteria:

- Scalability: Can it handle future growth?

- Performance: Does it meet speed requirements?

- Integration: How well does it work with existing systems?

- Support: What level of vendor/community support is available?

- Cost: What’s the total cost of ownership?
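One way to apply these criteria consistently is a weighted scoring matrix. The weights, the 1-5 ratings, and the tool names below are made up for illustration; each organization would set its own.

```python
# Hypothetical weights for the five criteria above; they should sum to 1.
WEIGHTS = {
    "scalability": 0.30,
    "performance": 0.25,
    "integration": 0.20,
    "support": 0.10,
    "cost": 0.15,
}

def weighted_score(ratings):
    """Combine 1-5 ratings per criterion into a single weighted score."""
    return sum(WEIGHTS[criterion] * ratings[criterion] for criterion in WEIGHTS)

# Illustrative ratings for two fictional candidates.
candidates = {
    "tool_a": {"scalability": 5, "performance": 4, "integration": 3, "support": 4, "cost": 2},
    "tool_b": {"scalability": 3, "performance": 3, "integration": 4, "support": 3, "cost": 4},
}
ranked = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
```

A scoring matrix does not replace a proof of concept, but it makes the trade-offs explicit and easier to discuss with stakeholders.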

Best Practices:

- Start with proof-of-concept projects

- Choose platforms with strong ecosystems

- Prioritize open standards and APIs

- Plan for multi-cloud and hybrid deployments

11. Organizational Change Management

The Challenge:

Technology is often the easy part. Getting people to change how they work and make data-driven decisions is much harder.

Change Resistance:

- Employees comfortable with existing processes

- Fear of job displacement due to automation

- Lack of understanding about data benefits

- Cultural preference for gut-feeling decisions

Change Management Strategy:

- Executive sponsorship: Leadership must champion data initiatives

- Training and education: Help employees understand benefits

- Quick wins: Demonstrate value early and often

- Incentive alignment: Reward data-driven decision making

- Communication: Regular updates on progress and success stories

Culture Transformation:

Data-driven organizations are 23x more likely to acquire customers and 19x more likely to be profitable.

Industry-Specific Big Data Challenges

Healthcare

- Patient privacy compliance (HIPAA)

- Integrating electronic health records

- Real-time patient monitoring

- Drug discovery and clinical trials

Financial Services

- Fraud detection in milliseconds

- Regulatory reporting requirements

- Risk management and stress testing

- Algorithmic trading systems

Retail and E-commerce

- Real-time personalization

- Inventory optimization

- Supply chain visibility

- Customer journey analytics

Manufacturing

- Predictive maintenance

- Quality control automation

- Supply chain optimization

- Energy efficiency monitoring

Gen AI Paradox

The Gen AI Paradox defines the current era of AI adoption—where the technology’s greatest strengths are inseparably linked to its greatest risks.

Why Choose Kanerika to Solve Your Big Data Challenges

At Kanerika, we understand that big data challenges aren’t just technical problems – they’re business transformation opportunities. Here’s why leading organizations trust us with their most critical data initiatives:

Proven Track Record Across Industries

We’ve successfully delivered big data & generative AI solutions for healthcare systems, financial institutions, manufacturing companies, and technology firms. Our cross-industry experience means we understand both technical requirements and business contexts.

Client Results:

- 70% reduction in data processing time for a major healthcare provider

- $2.3M annual cost savings through cloud optimization for a manufacturing client

- 90% improvement in real-time analytics performance for a financial services firm

End-to-End Big Data Expertise

Unlike vendors who specialize in just one area, Kanerika provides comprehensive solutions covering:

- Data Strategy and Assessment: We evaluate your current state and design a roadmap for success

- Architecture and Implementation: Cloud-native solutions built for scale and performance

- Data Integration: Seamlessly connect all your data sources

- Analytics and AI/ML: Turn raw data into actionable insights and get a clear roadmap with our AI assessment

- Governance and Security: Ensure compliance and protect sensitive information

Technology-Agnostic Approach

We’re not tied to any single vendor or platform. Our experts work with:

- Cloud Platforms: AWS, Azure, Google Cloud

- Big Data Technologies: Spark, Hadoop, Kafka, Snowflake

- Analytics Tools: Power BI, Tableau, Qlik

- AI/ML Frameworks: TensorFlow, PyTorch, scikit-learn

This flexibility ensures you get the best solution for your specific needs, not what’s best for our profit margins.


Accelerated Time-to-Value

Our proven methodologies and pre-built accelerators mean faster deployments and quicker ROI:

- 30-60 day proof-of-concepts to demonstrate value quickly

- Reusable frameworks that reduce development time by 40-60%

- Best practice templates for governance, security, and compliance

- Change management support to ensure user adoption

Scalable Solutions Built for Growth

We design systems that grow with your business:

- Cloud-native architectures that scale automatically

- Microservices approach for flexibility and maintainability

- API-first design for easy integration with new systems

- Future-ready platforms that adapt to changing requirements

Dedicated Success Partnership

Your success is our success. We provide:

- 24/7 support for critical systems

- Continuous optimization to improve performance and reduce costs

- Regular health checks to prevent issues before they occur

- Training and knowledge transfer to build internal capabilities

- Strategic consulting to align technology with business goals

Our Big Data Services

Data Strategy and Consulting

- Current state assessment and gap analysis

- Future state architecture design

- Technology selection and roadmap planning

- ROI modeling and business case development

Data Engineering and Integration

- ETL/ELT pipeline development

- Real-time streaming data processing

- Data lake and warehouse implementation

- API development and integration

Analytics and Business Intelligence

- Interactive dashboards and reports

- Self-service analytics platforms

- Predictive modeling and machine learning

- Natural language query interfaces

Data Governance and Security

- Data catalog and metadata management

- Access control and security implementation

- Compliance automation (GDPR, HIPAA, SOX)

- Data quality monitoring and remediation

Cloud Migration and Optimization

- Multi-cloud strategy and implementation

- Legacy system modernization

- Cost optimization and performance tuning

- Disaster recovery and business continuity

Case Studies of Successful Big Data Implementations in Healthcare

At Kanerika, we have led big data implementation for global healthcare organizations. Our clients have approached us with requirements for data transformation, data analytics, and data management.

Leading Clinical Research Company – Improved Data Analytics for Better Research Outcomes

This clinical research company struggled with manual SAS programming delays and inefficient data cleaning, which affected productivity and research outcomes.

By adopting Trifacta, Kanerika’s data team was able to transform complex datasets more efficiently and streamline reporting and data preparation.

This led to a 40% increase in operational efficiency, a 45% improvement in user productivity, and a 60% faster data integration process.

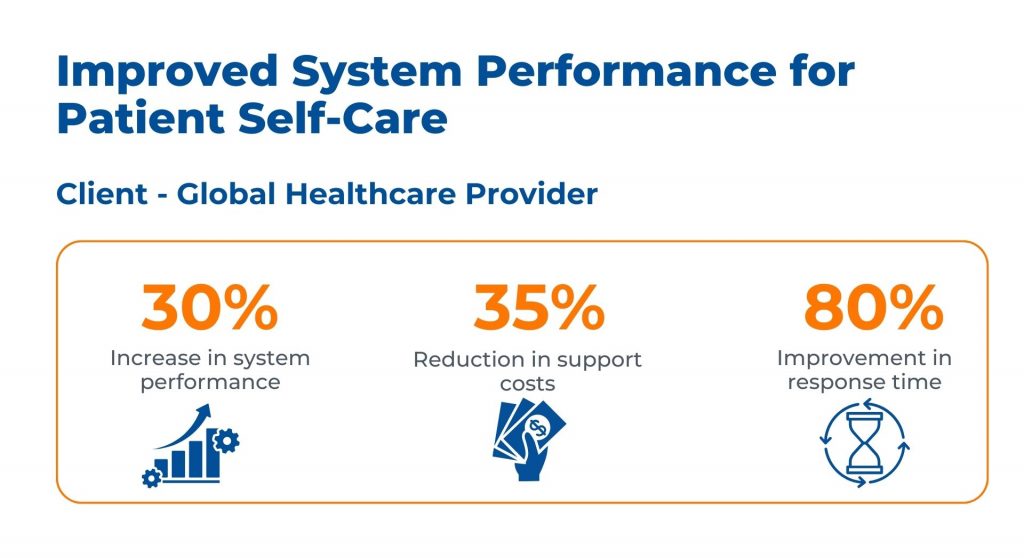

Global Healthcare Provider – Improved System Performance for Patient Self-Care

Inadequate system architecture was impeding patient self-care and system performance for this healthcare provider.

Kanerika’s team re-architected the system on Microsoft Azure to improve response time and scalability. The results were a 30% increase in system performance, a 35% reduction in support costs, and an 80% improvement in response time.

These summaries highlight the key challenges, solutions, and outcomes for each case study, focusing on the significant improvements made post-intervention.


FAQs

What are the various challenges of big data?

Big data’s biggest hurdles aren’t just about sheer volume; they also include managing velocity (speed of influx) and variety (different data types) effectively. This leads to difficulties in storage, processing, and extracting meaningful insights quickly and cost-effectively. Finally, ensuring data accuracy, security, and privacy across such massive, diverse datasets is a constant struggle.

What are the 4 V challenges of big data?

Big data’s core challenges revolve around its sheer Volume (the massive amount of data), its diverse Variety (different formats and structures), its rapid Velocity (speed of data generation and processing), and the need for Veracity (ensuring data accuracy and reliability). These four V’s demand innovative solutions for storage, processing, analysis, and validation. Overcoming them is crucial for effective data utilization.

What are the main challenges of big data?

Big data’s main challenges revolve around its sheer volume, velocity, and variety. This makes storage, processing, and analysis incredibly complex and costly. Furthermore, ensuring data quality, security, and privacy amidst this scale presents significant hurdles. Ultimately, extracting meaningful insights from this raw data requires sophisticated tools and expertise.

What are the challenges of data growth?

Data growth presents several hurdles. It strains storage capacity and increases costs exponentially, demanding constant upgrades. Processing and analyzing this massive volume becomes increasingly complex and time-consuming, potentially slowing down insights. Finally, managing data security and ensuring its integrity across expanding datasets is a significant ongoing challenge.

How to overcome big data challenges?

Conquering big data hurdles involves strategically choosing the right tools and technologies – from cloud platforms to specialized databases – to manage the sheer volume and velocity of data. Effective data governance is crucial, ensuring data quality and accessibility. Finally, cultivating a data-driven culture within your organization, focusing on skills development and clear communication, is key to utilizing insights effectively.

What are the 5 V's of big data?

Big data’s “5 Vs” describe its defining characteristics: Volume (sheer size), Velocity (speed of creation), Variety (different data types), Veracity (accuracy and trustworthiness), and Value (the potential insights it offers). Essentially, it’s about dealing with massive, diverse, rapidly flowing data that needs careful validation to unlock meaningful information. Understanding these Vs is crucial for effective big data management.

What are data challenges?

Data challenges are hurdles preventing us from effectively using data. These can range from poor data quality (inaccurate, incomplete, inconsistent) to issues with accessibility (finding, storing, and sharing the data). Ultimately, these challenges hinder analysis and informed decision-making. Successfully navigating these obstacles is key to unlocking the true value of data.

What are big data types?

Big data isn’t just about *size*; it’s about the *variety* of information. We categorize big data types as structured (like databases), semi-structured (like XML files), and unstructured (like text and images). These differences dictate how we store, process, and analyze the data, leading to different insights. Ultimately, understanding these types is key to effectively leveraging big data’s potential.

What are the challenges of variety in big data?

Big data’s variety presents a huge challenge because it involves handling vastly different data formats and structures (text, images, sensor readings etc.). This necessitates flexible and adaptable processing tools, as one-size-fits-all solutions often fail. Inconsistencies in data quality across these formats further complicate analysis and introduce significant noise. Ultimately, managing this diversity requires sophisticated data integration and cleaning strategies.

What are the 3 V's of big data?

Big data’s core characteristics are Volume, Velocity, and Variety. Volume refers to the sheer size of the data; Velocity highlights its speed of creation and processing; and Variety encompasses the diverse formats—structured, semi-structured, and unstructured—the data takes. Understanding these three fundamental aspects is crucial for effectively managing and leveraging big data.

What are the challenges in big data visualization?

Big data visualization faces hurdles in managing sheer volume, velocity, and variety of data, often requiring significant processing power and specialized tools. Effectively communicating complex insights from massive datasets to diverse audiences is another key challenge. Finally, ensuring data security and privacy while sharing visualizations presents significant ongoing concerns.

What are the factors affecting big data?

Big data’s effectiveness hinges on several key factors. Data volume, velocity (how fast it arrives), and variety (different formats) directly impact its usability. Furthermore, the veracity (accuracy and reliability) of the data profoundly influences the insights derived. Ultimately, successful big data relies on handling these interconnected aspects effectively.

What are the 4 V's of big data?

Big data’s “Four Vs” describe its defining characteristics: Volume refers to the sheer amount of data; Velocity highlights its rapid creation and processing speed. Variety encompasses the diverse data formats (text, images, etc.), and Veracity focuses on the trustworthiness and accuracy of the information. Understanding these aspects is key to managing and leveraging big data effectively.

What is a major challenge for storage of big data?

Big data storage faces a huge challenge in managing its sheer *volume*, velocity, and variety. This requires specialized, often costly, infrastructure capable of handling diverse data formats at incredible speeds. Finding efficient and reliable ways to store and access this data while minimizing costs is a constant struggle.

What is big data in simple words?

Big data isn’t just *lots* of data; it’s data so vast and complex that traditional tools struggle to manage it. Think of it as a firehose of information – unstructured, varied, and constantly flowing. Effectively utilizing it requires specialized techniques to reveal hidden patterns and insights. Essentially, it’s about finding meaning in the overwhelming.