As the adoption of artificial intelligence (AI) accelerates across industries, organizations increasingly need a comprehensive framework for managing trust, risk, and security. Gartner predicts that by 2026, organizations that operationalize AI transparency, trust, and security will see their AI models achieve a 50% improvement in adoption, business goals, and user acceptance. AI TRiSM (AI Trust, Risk, and Security Management) is a holistic framework that treats trustworthiness, risk management, and security as the pillars for building trust, mitigating risks, and securing AI systems.

As AI becomes more integral to decision-making across industries, the risks associated with data privacy, bias, and compliance grow with it. Without a robust framework like AI TRiSM, organizations risk not only operational failures but also significant reputational damage and legal consequences. The framework helps ensure that AI systems are not only effective but also secure, transparent, and aligned with ethical standards, so that organizations can navigate these challenges and deploy AI responsibly.

What is AI TRiSM?

Artificial Intelligence Trust, Risk, and Security Management (AI TRiSM) is a framework designed to ensure that AI systems are reliable, secure, and compliant with ethical standards. It focuses on controlling AI-specific risks, such as bias, data privacy violations, lack of transparency, and regulatory non-compliance. It also establishes procedures to build and maintain confidence in AI systems. By embedding governance, risk management, and security into the AI lifecycle, AI TRiSM helps enterprises deploy AI solutions that are efficient, dependable, and ethically sound.

In the fast-changing field of AI, AI TRiSM is especially significant. As AI systems become more common in important decision-making processes across sectors, the chances of misuse or failure rise too. AI TRiSM offers a structured way to reduce these risks and to keep AI deployments secure, accountable, and transparent. It is essential for enterprises that want to maintain regulatory compliance, build stakeholder confidence, and protect their brand as AI grows more important.

Why Integrate AI TRiSM into AI Models?

Integrating AI Trust, Risk, and Security Management (TRiSM) into AI models is crucial for effectively handling various risks associated with AI systems. Here are six compelling reasons to embed AI TRiSM from the get-go:

1. Explaining AI for Stakeholders

Many people struggle to understand how AI operates, let alone explain it. When building AI models, it’s vital to provide clear insights into how they function. This includes detailing the model’s strengths, weaknesses, likely behaviors, and any potential biases. Transparency about the datasets and methodologies used in training these models is essential to identify biases and make informed decisions.

2. Managing Generative AI Risks

Generative AI tools, such as those used to create text, images, and code, are accessible to almost anyone. They can transform business operations, but they also introduce risks that often evade traditional control measures. Managing these risks calls for new approaches, especially for cloud-based applications, so that potential threats can be mitigated quickly.

3. Data Confidentiality Concerns with Third-Party AI

When your organization uses AI tools from other providers, it also takes on the data risks associated with them. These risks can include the exposure of large datasets and the leakage of sensitive information, leading to regulatory and reputational damage. An AI TRiSM program should therefore include measures to verify how third-party AI tools protect data confidentiality.

4. Ongoing Monitoring Necessities

AI systems require constant oversight to remain compliant and ethical. Risk management should be integrated into AI operations practices such as ModelOps. This often means developing tailored solutions for each AI workflow, since off-the-shelf options may not suffice, and applying controls continuously throughout the lifecycle of each AI model.

5. Protection Against Adversarial Attacks

AI systems are increasingly targeted by adversarial attacks that can cause a range of harms, including financial losses and breaches of proprietary data. Countering them requires specialized controls to test, validate, and strengthen the resilience of AI models. These controls go further than those needed for other types of applications.

6. Compliance with Emerging Regulations

New AI regulations are emerging across various regions and will impose strict rules on how AI applications can be used and managed. These requirements go beyond existing privacy protection laws. Preparing for and adhering to them is a proactive step that every organization using AI needs to take.

The 3 Pillars of AI TRiSM

1. Trustworthiness

Trustworthy AI systems are fair, transparent, and dependable, which gives users and stakeholders confidence in them. This pillar highlights the importance of explainable AI: the judgments made by AI models should be easy to understand and justify.

- Transparency: AI decisions are made clear and understandable.

- Fairness: Ensures AI operates without bias and treats all users equally.

- Accountability: Clear lines of responsibility for AI actions.

Example: To uphold client trust, a financial institution that uses AI for loan approvals must ensure that its model is impartial and transparent, offering a clear justification for every decision.
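One common way to put a number on the fairness requirement in this example is to compare approval rates across applicant groups. The Python sketch below computes a disparate impact ratio, which is the lowest group approval rate divided by the highest, over hypothetical loan decisions. The 0.8 cut-off borrows the "four-fifths rule" from US employment-selection guidance. It is used here only as an illustrative review threshold, not as a legal standard for lending.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Approval rate per group, from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means equal rates)."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was approved, so approval rates are equal
    return min(rates.values()) / highest


# Hypothetical decisions: group A approved 60/100, group B approved 42/100
decisions = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 42 + [("B", False)] * 58
)
ratio = disparate_impact_ratio(decisions)
print(f"Disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # illustrative four-fifths threshold
    print("Flag model for fairness review")
```

With these numbers the ratio is 0.42 / 0.60 = 0.70, so the model would be flagged. A single metric like this is a screening signal. It does not by itself establish whether a model is fair.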

2. Risk Management

In AI TRiSM, risk management means identifying, evaluating, and reducing the potential hazards of applying AI. This covers both ethical and operational hazards, such as bias, privacy violations, and system malfunctions.

- Risk Assessment: Identifying potential risks before deployment.

- Mitigation Strategies: Implementing measures to reduce or eliminate risks.

- Continuous Monitoring: Ongoing evaluation to catch and address risks early.

Example: An AI-powered healthcare system must continuously monitor its handling of patient data and the quality of its recommendations. This prevents privacy violations and ensures that those recommendations do not pose health hazards.
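Risk assessment is often recorded in a risk register that scores each risk by likelihood and impact. The sketch below assumes a 1 to 5 scale for both and uses hypothetical banding thresholds. The risks listed are examples only, and each organization would calibrate its own scales and cut-offs.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int  # 1 (negligible) to 5 (severe)

    def __post_init__(self):
        for value in (self.likelihood, self.impact):
            if not 1 <= value <= 5:
                raise ValueError(f"{self.name}: scores must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def rating(score: int) -> str:
    """Hypothetical banding of a 1-25 score into high / medium / low."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


register = [
    Risk("Biased training data", likelihood=4, impact=4),
    Risk("Patient data exposed in model logs", likelihood=3, impact=5),
    Risk("Recommendation service outage", likelihood=2, impact=3),
]

# Review the highest-scoring risks first
for risk in sorted(register, key=lambda r: r.score, reverse=True):
    print(f"{risk.name}: {risk.score} ({rating(risk.score)})")
```

Multiplying likelihood by impact keeps the register easy to sort and explain. The trade-off is that a rare but catastrophic risk can score the same as a frequent, minor one, so high-impact items usually deserve a second look regardless of score.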

3. Security

The security pillar focuses on safeguarding AI systems from adversarial attacks, data breaches, and other cyberattacks. It ensures the security and resilience of AI models and the data they use.

- Data Protection: Ensuring that sensitive data is encrypted and secure.

- Model Security: Safeguarding AI models from adversarial attacks.

- Access Control: Limiting access to AI systems to authorized users only.

Example: A retail firm that uses AI to tailor customer experiences needs to secure both its AI models and its customer data to prevent unauthorized access and data breaches.

What Are the Core Components of AI TRiSM?

1. Explainability and Model Monitoring

Importance of transparency in AI models: Explainability is essential for understanding how AI models make decisions. It builds trust, enables accountability, and supports responsible decision-making. Transparent models let users see the reasoning behind AI-generated outputs, which makes unintended biases or discriminatory outcomes easier to detect and correct.

Techniques for ensuring AI decisions are understandable and justifiable:

- Feature importance analysis: Identifies the most influential features in a model’s predictions.

- Rule extraction: Derives human-readable rules from a complex model.

- LIME (Local Interpretable Model-Agnostic Explanations): Creates simplified local explanations for any model’s predictions.

- SHAP (SHapley Additive exPlanations): Attributes the contribution of each feature to a prediction.
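SHAP builds on Shapley values from cooperative game theory. The sketch below computes exact Shapley values by brute force for a single prediction of a small, hypothetical scoring function. A feature is treated as "absent" by swapping in a baseline value. This illustrates the underlying idea and is not the `shap` library, which relies on faster model-specific or sampling-based algorithms. Brute force needs on the order of 2^n model calls, so it only suits a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one prediction.

    Features outside a coalition are replaced by their baseline value, so the
    values sum to predict(x) - predict(baseline).
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical credit-scoring function with an income x history interaction
def predict(features):
    income, history, debt = features
    return 2 * income + history - 3 * debt + income * history


x = [1.0, 1.0, 0.5]
baseline = [0.0, 0.0, 0.0]
print(shapley_values(predict, x, baseline))
```

The result is approximately [2.5, 1.5, -1.5]. Each feature receives its own linear contribution, and the interaction term is split equally between income and history. The three values add up to predict(x) - predict(baseline) = 2.5.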

2. Model Operations (ModelOps)

Managing the AI lifecycle, from deployment to maintenance: ModelOps encompasses the entire lifecycle of an AI model, including development, deployment, monitoring, and maintenance. It ensures seamless model management and continuous performance.

Ensuring continuous performance monitoring and updates:

- Data drift detection: Monitors changes in data distribution over time.

- Model degradation tracking: Identifies performance degradation due to concept drift or other factors.

- Retraining and updating models: Regularly updates models with new data to maintain accuracy.
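Data drift detection often works by comparing a feature's production distribution against the distribution seen at training time. The sketch below computes the Population Stability Index (PSI), one widely used drift statistic, using equal-width bins taken from the reference sample. The sample data is hypothetical.

```python
import math


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """PSI between a reference sample and a current sample of one numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            index = int((v - lo) / width)
            counts[min(max(index, 0), bins - 1)] += 1  # clamp outliers into edge bins
        # eps keeps log() defined when a bin is empty
        return [max(c / len(values), eps) for c in counts]

    ref, cur = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


reference = [i / 100 for i in range(100)]          # e.g. training-time values
shifted = [min(v + 0.3, 0.99) for v in reference]  # hypothetical production values

print(population_stability_index(reference, reference))  # 0.0: no drift
print(population_stability_index(reference, shifted))    # large value: drift
```

A frequently quoted rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as moderate drift, and above 0.25 as significant drift worth investigating or retraining for. These thresholds are conventions, not standards.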

3. AI Application Security

Addressing vulnerabilities in AI applications: AI applications are susceptible to various vulnerabilities, such as data breaches, adversarial attacks, and model poisoning. Security measures must be implemented to protect against these threats.

Implementing security measures to prevent data breaches and adversarial attacks:

- Secure data storage and transmission: Encrypts data at rest and in transit.

- Access control and authentication: Restricts access to sensitive data and models.

- Adversarial training: Trains models to be robust against adversarial attacks.

- Regular security audits and vulnerability assessments: Identifies and addresses security weaknesses.
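Access control for AI systems usually comes down to checking, before each sensitive operation, whether the caller is allowed to perform it. A minimal role-based sketch follows. The roles, permission strings, and `deploy_model` function are hypothetical. A real deployment would source identities and roles from an identity provider rather than a hard-coded dictionary.

```python
# Hypothetical roles and permissions for a model-serving platform
ROLE_PERMISSIONS = {
    "data_scientist": {"model:predict", "model:read_metrics"},
    "ml_engineer": {"model:predict", "model:read_metrics", "model:deploy"},
    "auditor": {"model:read_metrics", "model:read_audit_log"},
}


class AccessDenied(Exception):
    """Raised when none of the caller's roles grants the requested permission."""


def is_allowed(user_roles, permission):
    # Deny by default: unknown roles grant nothing
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


def require(user_roles, permission):
    if not is_allowed(user_roles, permission):
        raise AccessDenied(f"roles {sorted(user_roles)} lack {permission!r}")


def deploy_model(user_roles, model_id):
    require(user_roles, "model:deploy")
    # ... deployment logic would go here ...
    return f"deployed {model_id}"


print(deploy_model({"ml_engineer"}, "credit-risk-v3"))
try:
    deploy_model({"data_scientist"}, "credit-risk-v3")
except AccessDenied as err:
    print(f"blocked: {err}")
```

Keeping the check in one `require` helper makes it harder to forget on a new endpoint. Denying unknown roles by default means a misconfigured role fails closed rather than open.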

4. Privacy Management

Protecting sensitive data in AI processes: AI often deals with sensitive personal data, which requires stringent privacy protection measures.

Techniques like data anonymization and encryption:

- Data anonymization: Removes or disguises personally identifiable information.

- Encryption: Transforms data into ciphertext that can only be read with the correct key, preventing unauthorized access.

- Privacy-preserving machine learning: Uses techniques such as federated learning and differential privacy so that models can learn from data without revealing information about individuals.
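As a concrete example of the anonymization techniques above, the sketch below de-identifies a hypothetical patient record in three steps. It drops direct identifiers, generalizes age into a ten-year band, and replaces the patient ID with a keyed HMAC-SHA256 pseudonym so records can still be joined. Strictly speaking this is pseudonymization rather than anonymization. Under GDPR, pseudonymized data still counts as personal data, because whoever holds the key can recompute pseudonyms for known IDs and re-link records. The field names and key handling are assumptions.

```python
import hashlib
import hmac

# Assumption: a real key would come from a secrets manager, never source code
PSEUDONYM_KEY = b"replace-with-a-secret-key"
DIRECT_IDENTIFIERS = {"name", "email", "phone", "address"}


def pseudonymize(value: str) -> str:
    """Stable keyed pseudonym: same input and key always give the same output."""
    digest = hmac.new(PSEUDONYM_KEY, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def deidentify(record: dict) -> dict:
    result = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIERS:
            continue  # drop direct identifiers entirely
        if field == "patient_id":
            result[field] = pseudonymize(str(value))  # keeps records linkable
        elif field == "age":
            low = (value // 10) * 10
            result["age_band"] = f"{low}-{low + 9}"  # generalize exact age
        else:
            result[field] = value
    return result


record = {
    "patient_id": "P-1001",
    "name": "Jane Doe",
    "email": "jane@example.org",
    "age": 47,
    "diagnosis": "J45",
}
print(deidentify(record))
```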

Upcoming Regulations Defining Compliance Controls for AI

Emerging regulations worldwide are setting the stage for robust compliance controls in AI applications. As we look to the near future, it’s clear that these guidelines will play a pivotal role in shaping how AI is developed, deployed, and managed.

Global Regulatory Initiatives

The European Union’s AI Act stands as a leading example, paving the way for comprehensive compliance standards. Similar efforts are underway in North America, China, and India, establishing frameworks to mitigate AI-related risks and ensure the responsible use of technology.

Broader Compliance Landscape

It’s essential for organizations to gear up for these imminent changes. Adapting to these regulations will often mean exceeding current compliance requirements, such as those focused on data protection and privacy, to address a wider array of potential challenges AI might pose.

These regulations will largely focus on ensuring ethical AI development, transparency in AI processes, and accountability mechanisms. As such, staying ahead of these changes will not only involve meeting the mandatory standards but also embracing a proactive approach to integrating ethical practices in AI solutions.

5 Potential Risks Generative AI Tools Introduce to Enterprises

Generative AI tools, such as large language models and image generators, introduce several potential risks to enterprises. As these technologies become integral to corporate strategies, they bring distinct challenges that require specialized attention beyond conventional controls.

1. Security Vulnerabilities

Cloud-based generative AI applications often run on external servers. This can introduce security vulnerabilities, as sensitive company data may be transmitted and processed off-premises. The risk of unauthorized access or data breaches can be heightened without robust security measures.

2. Data Privacy Concerns

AI tools generally require substantial amounts of data to function effectively. The handling of this data can lead to privacy concerns, especially if personal or confidential information is involved. Compliance with regulations, such as GDPR, becomes crucial to mitigate risks related to data privacy.
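One practical control for both of these risks is to strip obvious personal data from prompts before they leave the organization for an external generative AI service. The regular expressions below are deliberately simple, hypothetical patterns for emails, phone numbers, and card numbers. Production redaction needs broader, locale-aware detection and will still miss some cases.

```python
import re

# Order matters: card numbers are replaced before the looser phone pattern runs
PII_PATTERNS = [
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("PHONE", re.compile(r"\+?\d[\d\s().-]{7,}\d")),
]


def redact(text: str) -> str:
    """Replace matched PII with placeholder labels such as [EMAIL]."""
    for label, pattern in PII_PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = (
    "Summarize the complaint from jane.doe@example.com, "
    "phone +1 555-010-9999, card 4111 1111 1111 1111."
)
print(redact(prompt))
```

This prints `Summarize the complaint from [EMAIL], phone [PHONE], card [CARD].` Placeholder labels keep the prompt readable for the model while the sensitive values stay inside the organization.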

3. Intellectual Property Risks

Generative AI can inadvertently create or expose intellectual property issues. The technology may produce content that infringes on existing patents or copyrights, which can result in legal complications for enterprises using these AI solutions.

4. Bias and Ethical Issues

AI models are trained on vast datasets and can inherit biases present within those datasets. The deployment of these tools without addressing ethical considerations can lead to biased outputs, impacting decision-making processes and potentially harming reputations.

5. Dependence and Over-reliance

As enterprises increasingly rely on AI for strategic operations, there’s a risk of developing a dependency on these tools. Over-reliance can lead to significant challenges if the technology fails or delivers inaccurate results, potentially disrupting business continuity.

Aligning AI TRiSM with Organizational Goals

Strategic Integration

AI TRiSM plays a crucial role in aligning AI initiatives with an organization’s overall business objectives. By ensuring that AI systems are trustworthy, secure, and compliant, AI TRiSM directly supports business goals such as improving operational efficiency, enhancing customer trust, and ensuring regulatory compliance. This alignment ensures that AI technologies not only drive innovation but also contribute positively to achieving desired organizational outcomes, such as revenue growth, risk mitigation, and enhanced brand reputation.

- Supporting Business Objectives: AI TRiSM ensures that AI technologies are aligned with key business goals.

- Positive Contribution: Helps AI systems drive tangible value in line with organizational targets.

- Strategic Impact: Positions AI as a key enabler of business success, rather than a risk.

Involving Diverse Expertise

Successfully implementing AI TRiSM requires the involvement of interdisciplinary teams, drawing on the expertise of data scientists, cybersecurity experts, and legal professionals. Collaboration among these diverse teams ensures that AI systems are not only technically sound but also secure, legally compliant, and ethically aligned. By leveraging the combined knowledge of these professionals, organizations can address the multifaceted challenges associated with AI deployment, from ensuring data privacy to mitigating bias in AI decision-making.

- Interdisciplinary Teams: Collaboration among data scientists, cybersecurity experts, and legal professionals is essential.

- Comprehensive Approach: Diverse expertise ensures AI systems are robust, secure, and compliant.

- Holistic Management: Involvement of various disciplines leads to more balanced and effective AI governance.

Challenges in Implementing AI TRiSM

1. Explainability and Transparency Issues

Complexity of AI models like deep neural networks: Deep neural networks, while powerful, can be notoriously difficult to explain. Their complex architecture and non-linear operations make it challenging to understand how they arrive at their decisions.

Strategies for overcoming these challenges:

- LIME (Local Interpretable Model-Agnostic Explanations): Creates simplified local explanations for any model’s predictions.

- SHAP (SHapley Additive exPlanations): Attributes the contribution of each feature to a prediction.

- Feature importance analysis: Identifies the most influential features in a model’s predictions.

- Rule extraction: Derives human-readable rules from a complex model.
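Feature importance analysis is one of the easier strategies to apply to an opaque model, because it only needs predictions. The sketch below implements permutation importance in plain Python: it shuffles one feature column at a time and measures how much accuracy drops. The data and the stand-in `model` are hypothetical. For scikit-learn models, `sklearn.inspection.permutation_importance` provides a maintained implementation.

```python
import random


def accuracy(model, X, y):
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    base = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the label
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(base - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical data: the label depends only on feature 0; feature 1 is noise
data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [int(row[0] > 0.5) for row in X]


def model(row):
    # Stands in for a trained black-box classifier
    return int(row[0] > 0.5)


print(permutation_importance(model, X, y))
```

Feature 0 should show a large drop (around 0.5 here). Feature 1 should show a drop of zero, since the model never reads it.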

2. Adversarial Attacks

Risks posed by malicious inputs: Adversarial attacks involve introducing carefully crafted inputs to deceive an AI model, causing it to make incorrect predictions. This can lead to serious consequences in fields like autonomous vehicles and healthcare.

Solutions such as adversarial training and robust security protocols:

- Adversarial training: Trains models to be robust against adversarial attacks by exposing them to a variety of adversarial examples during training.

- Robust security protocols: Implements strong security measures to protect AI systems from unauthorized access and manipulation.
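To make the threat concrete, the sketch below applies the Fast Gradient Sign Method (FGSM), a classic way to craft adversarial inputs, to a small hypothetical logistic-regression model. For logistic regression, the gradient of the log-loss with respect to the input is (p - y) * w. FGSM therefore nudges each feature by epsilon in the sign of that gradient. Adversarial training would then add such perturbed inputs, with their correct labels, to the training data.

```python
import math


def predict_proba(w, b, x):
    """Logistic regression: probability of the positive class."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    return (value > 0) - (value < 0)


def fgsm(w, b, x, y, epsilon):
    """Fast Gradient Sign Method: move each feature by epsilon to increase the loss."""
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]  # d(log-loss)/dx for logistic regression
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical trained weights and a correctly classified input (true label 1)
w, b = [1.5, -2.0, 0.5], -0.2
x, y = [0.6, 0.1, 0.4], 1

x_adv = fgsm(w, b, x, y, epsilon=0.2)
print(f"clean:       p={predict_proba(w, b, x):.3f}")
print(f"adversarial: p={predict_proba(w, b, x_adv):.3f}")
```

Shifting each feature by only 0.2 moves the predicted probability from about 0.67 to about 0.48. That is enough to flip the classification at a 0.5 threshold.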

3. Continuous Monitoring and ModelOps

The need for ongoing monitoring to ensure AI integrity: AI models can degrade over time due to changes in data distribution or other factors. Continuous monitoring is crucial to detect and address these issues.

Tools and best practices for effective ModelOps:

- Data drift detection: Monitors changes in data distribution over time.

- Model degradation tracking: Identifies performance degradation due to concept drift or other factors.

- Retraining and updating models: Regularly updates models with new data to maintain accuracy.

- AI infrastructure management: Manages the underlying infrastructure and resources required for AI development and deployment.
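Model degradation tracking can be as simple as comparing accuracy on recently labelled predictions with the accuracy measured at validation time. The sketch below keeps a sliding window of outcomes and flags the model once windowed accuracy falls more than a tolerance below that baseline. The window size, baseline, and tolerance are hypothetical policy choices. In many applications, ground-truth labels also arrive with a delay.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent labelled predictions."""

    def __init__(self, window=500, baseline=0.90, tolerance=0.05):
        self.outcomes = deque(maxlen=window)  # oldest outcomes drop off automatically
        self.baseline = baseline  # assumed accuracy from validation
        self.tolerance = tolerance  # assumed allowed drop before alerting

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    @property
    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def degraded(self):
        # Only judge a full window, to avoid alerts based on tiny samples
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy < self.baseline - self.tolerance


monitor = AccuracyMonitor(window=100, baseline=0.90, tolerance=0.05)
for i in range(100):
    monitor.record(prediction=1, actual=1 if i < 80 else 0)  # hypothetical: 80% correct
print(monitor.accuracy, monitor.degraded())
```

A degradation alert like this would typically trigger investigation or the retraining step listed above, rather than an automatic model swap.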

4. Data Confidentiality

Risks related to third-party integrations: Third-party integrations can introduce new security risks, such as data breaches and unauthorized access.

Ensuring data protection through secure APIs and encryption:

- Secure APIs: Implements strong authentication and authorization mechanisms to protect data access.

- Encryption: Encrypts data at rest and in transit to prevent unauthorized access.

- Data anonymization: Removes or disguises personally identifiable information to protect privacy.
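Secure APIs commonly authenticate each call to a model endpoint with a signature computed from a shared secret. The sketch below shows one hypothetical scheme using Python's standard `hmac` module. The client signs the timestamp and request body. The server recomputes the signature, compares it in constant time, and rejects stale timestamps to limit replays. Signing proves who sent a request and that it was not altered, but it does not encrypt the request, so the transport should still use TLS.

```python
import hashlib
import hmac
import time

SHARED_SECRET = b"hypothetical-shared-secret"  # assumption: provisioned per client
MAX_SKEW_SECONDS = 300


def sign(body: bytes, timestamp: int, secret: bytes = SHARED_SECRET) -> str:
    message = str(timestamp).encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(body: bytes, timestamp: int, signature: str, now=None,
           secret: bytes = SHARED_SECRET) -> bool:
    now = time.time() if now is None else now
    if abs(now - timestamp) > MAX_SKEW_SECONDS:
        return False  # stale or future-dated request: helps block replays
    expected = sign(body, timestamp, secret)
    return hmac.compare_digest(expected, signature)  # constant-time comparison


ts = 1_700_000_000
body = b'{"features": [0.2, 0.7]}'
signature = sign(body, ts)
print(verify(body, ts, signature, now=ts + 10))                        # True
print(verify(b'{"features": [0.9, 0.7]}', ts, signature, now=ts + 10))  # False: tampered
print(verify(body, ts, signature, now=ts + 1000))                      # False: too old
```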

5. Regulatory Compliance

The evolving landscape of AI regulations: AI regulations are rapidly evolving, with new laws and guidelines being introduced regularly.

How organizations can stay compliant and avoid legal pitfalls:

- Stay informed about regulatory changes: Monitor relevant regulatory developments and update AI TRiSM practices accordingly.

- Conduct regular compliance assessments: Assess compliance with relevant laws and regulations.

- Seek legal advice: Consult with legal experts to ensure compliance with complex regulations.

Best Practices for Effective AI TRiSM Implementation

1. Building a Dedicated AI TRiSM Task Force

A dedicated AI TRiSM task force is essential for ensuring effective implementation and oversight of AI systems. This team should consist of experts from various domains, including:

- Data Scientists: To understand the technical aspects of AI models and their potential risks.

- Cybersecurity Experts: To identify and mitigate security vulnerabilities.

- Legal Professionals: To ensure compliance with relevant laws and regulations.

- Ethics Experts: To address ethical concerns and biases in AI systems.

- Business Analysts: To align AI initiatives with organizational goals.

The roles and responsibilities of an AI TRiSM team may include:

- Developing and implementing AI TRiSM policies and procedures.

- Assessing AI risks and vulnerabilities.

- Monitoring AI systems for compliance with ethical and legal standards.

- Providing guidance on AI development and deployment.

- Investigating and responding to AI-related incidents.

2. Continuous Learning and Adaptation

The field of AI is constantly evolving, with new technologies and techniques emerging regularly. It’s essential for organizations to stay updated on the latest developments and adapt their AI TRiSM strategies accordingly. This can be achieved through:

- Regular training and education: Providing AI TRiSM team members with ongoing training on new AI technologies and best practices.

- Research and development: Investing in research and development to explore new AI TRiSM techniques and tools.

- Collaboration with industry experts: Partnering with other organizations and experts to share knowledge and best practices.

3. Ensuring Scalable and Future-proof AI TRiSM Frameworks

AI TRiSM strategies should be designed to be scalable and adaptable to future AI developments. This means that they should be able to accommodate new AI technologies, data sources, and use cases. Some key considerations for building scalable and future-proof AI TRiSM frameworks include:

- Modular design: Breaking down AI TRiSM strategies into modular components that can be easily updated or replaced.

- Flexibility: Allowing for customization to meet the specific needs of different AI systems.

- Automation: Leveraging automation tools to streamline AI TRiSM processes and reduce manual effort.

- Continuous monitoring and evaluation: Regularly assessing the effectiveness of AI TRiSM strategies and making necessary adjustments.

Benefits of AI TRiSM Across Industries

AI TRiSM (Trust, Risk, and Security Management) is a critical framework for ensuring the ethical, responsible, and secure development and deployment of AI systems. Here are some real-world use cases where AI TRiSM is particularly important:

Healthcare

- Diagnostic Tools: AI-powered diagnostic tools can help doctors identify diseases earlier, but it’s crucial to ensure their accuracy and fairness. AI TRiSM can help mitigate biases and ensure that these tools are reliable.

- Personalized Treatment Plans: AI can be used to develop personalized treatment plans based on a patient’s individual characteristics. However, it’s important to protect patient privacy and ensure that these plans are based on accurate data.

- Drug Discovery: AI can accelerate drug discovery by analyzing vast amounts of data. AI TRiSM can help ensure that these processes are transparent and ethical, avoiding biases in research.

Finance

- Credit Scoring: AI can be used to assess creditworthiness, but it’s important to avoid biases that could discriminate against certain groups. AI TRiSM can help ensure that credit scoring models are fair and transparent.

- Fraud Detection: AI can help detect fraudulent activity, but it’s crucial to protect customer privacy and avoid false positives. AI TRiSM can help ensure that fraud detection systems are secure and ethical.

- Algorithmic Trading: AI can be used for algorithmic trading, but it’s important to manage risks and prevent unintended consequences. AI TRiSM can help ensure that algorithmic trading systems are safe and reliable.

Transportation

- Autonomous Vehicles: AI-powered autonomous vehicles have the potential to revolutionize transportation, but it’s crucial to ensure their safety and reliability. AI TRiSM can help address ethical concerns and ensure that these vehicles are safe for public use.

- Traffic Management: AI can be used to optimize traffic flow, but it’s important to protect privacy and avoid biases. AI TRiSM can help ensure that traffic management systems are fair and transparent.

Other Industries

- Customer Service: AI-powered chatbots can provide customer support, but it’s important to ensure that they are accurate and helpful. AI TRiSM can help improve the quality of customer service and protect customer privacy.

- Human Resources: AI can be used for tasks such as recruitment and employee performance evaluation, but it’s important to avoid biases and protect employee privacy. AI TRiSM can help ensure that HR practices are fair and ethical.

- Law Enforcement: AI can be used for tasks such as facial recognition and crime prediction, but it’s crucial to protect civil liberties and avoid biases. AI TRiSM can help ensure that law enforcement practices are ethical and responsible.

Case Studies Highlighting Kanerika’s AI Implementation Expertise

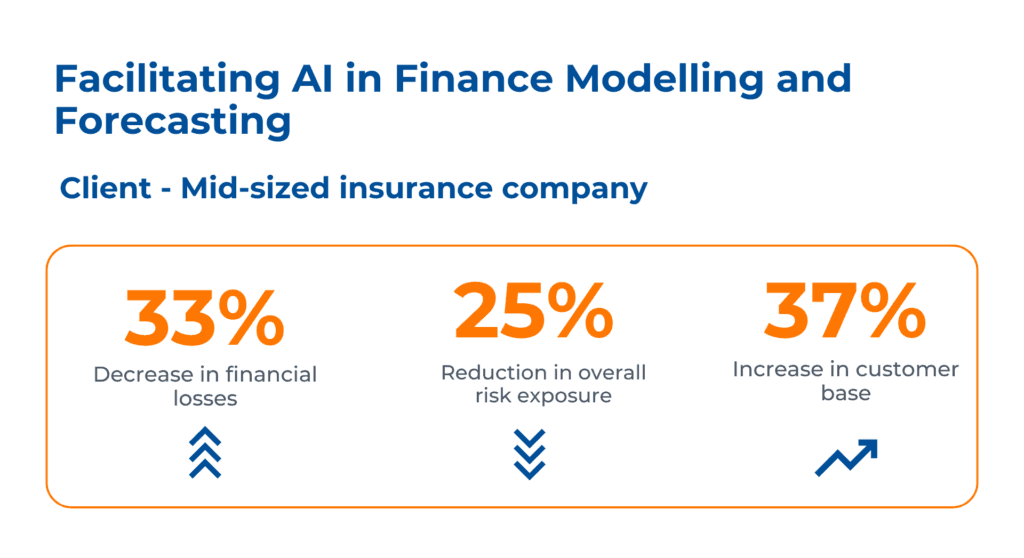

Case study: Facilitating AI in Finance Modelling and Forecasting

The client is a mid-sized insurance company operating within the USA.

The client faced challenges due to a limited ability to assess its financial health, identify weak spots, and optimize resources, which hindered its expansion potential. Vulnerability to fraud also resulted in financial losses and potential reputational damage.

Kanerika solved these challenges by:

- Leveraging AI in decision-making for in-depth financial analysis

- Implementing ML algorithms (Isolation Forest, Autoencoder) to detect fraudulent activities promptly, minimizing losses.

- Utilizing advanced financial risk assessment models to identify potential risk factors, ensuring financial stability.

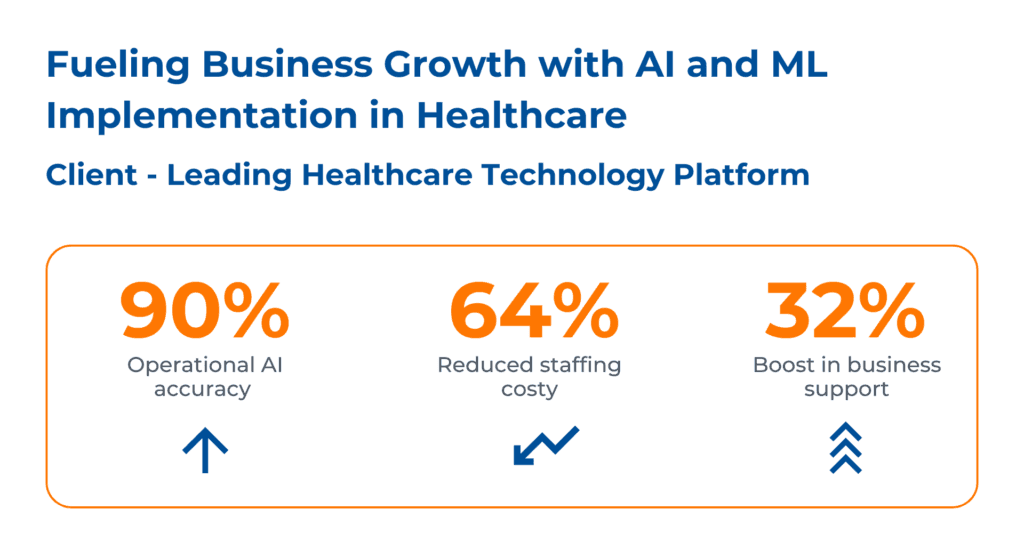

Case Study: Fueling Business Growth with AI/ML Implementation in Healthcare

The client is a technology platform specializing in healthcare workforce optimization. It faced several challenges impeding business growth and operational efficiency. Manual SOPs caused delays in talent shortlisting, while document verification errors affected service quality.

Using AI and ML, Kanerika addressed their challenges by providing the following solutions:

- Implemented AI RPA for fraud detection in the insurance claims process, reducing fraud-related financial losses

- Leveraged predictive analytics, AI, NLP, and image recognition to monitor customer behavior, enhancing customer satisfaction

- Delivered AI/ML-driven RPA solutions for fraud assessment and operational excellence, resulting in cost savings

Kanerika: The Trusted Name in Advanced AI Solutions

Kanerika is recognized as one of the leading providers of AI services, helping businesses across various sectors enhance their operations, scale efficiently, and drive sustainable growth. By leveraging cutting-edge AI tools and technologies, we deliver tailored solutions that meet the unique needs of each organization.

Understanding the growing importance of ethical AI, Kanerika ensures that all our AI implementations align with global ethical standards, promoting transparency, fairness, and accountability in every project. Our commitment to innovation and responsible AI makes us the trusted partner for businesses looking to harness the power of AI for transformation and success.

Frequently Asked Questions

What does AI TRiSM mean?

AI TRiSM stands for Artificial Intelligence Trust, Risk, and Security Management. It’s about proactively managing the ethical, legal, and operational risks inherent in using AI systems. This includes ensuring fairness, transparency, and accountability in AI’s decision-making processes. Essentially, it’s about building trust in AI by mitigating potential harms.

What are the 5 pillars of AI TRiSM?

AI TRiSM (Trust, Risk, and Security Management) isn’t built on one fixed set of “pillars.” Different sources group its principles in different ways, so it is better understood as a set of interwoven principles. Think of it as a holistic approach covering governance (establishing responsible AI use), risk assessment (identifying potential harms), security (protecting AI systems and data), transparency (explainability and accountability), and ethical considerations (aligning AI with human values). These elements work together to ensure responsible and trustworthy AI deployment.

What are the principles of AI TRiSM?

AI TRiSM (Trust, Risk, and Security Management) ensures AI systems are reliable and ethical. It prioritizes building trust through transparency and explainability, mitigating risks like bias and misuse, and securing the AI lifecycle against breaches. Essentially, it’s about responsible AI development and deployment, preventing harm and maximizing benefits. This involves proactive governance and continuous monitoring throughout the system’s lifespan.

Why is AI TRiSM a trending technology?

AI TRiSM (Trust, Risk, and Security Management) is booming because organizations increasingly need to manage the risks inherent in using AI systems. It addresses growing concerns around AI bias, explainability, and data privacy, offering frameworks and tools for responsible AI deployment. Essentially, it’s a crucial piece of the puzzle in making AI both powerful and trustworthy, and this is driving significant market demand.

What are the disadvantages of AI TRiSM?

AI TRiSM (Trust, Risk, and Security Management) offers great potential, but it’s not without drawbacks. One key disadvantage is that the automated tools used for risk assessment can themselves contain biased algorithms, leading to unfair or inaccurate risk assessments. Furthermore, over-reliance on these tools can diminish human expertise and oversight, creating vulnerabilities. Finally, the complexity of AI systems can make them difficult to audit and explain, hindering transparency and accountability.

Are AI models safe?

AI safety isn’t a simple yes or no. It depends heavily on the model’s design, training data, and intended use. Risks include bias, unintended behavior, and misuse, but ongoing research and responsible development aim to mitigate these. Ultimately, AI safety is an evolving field requiring continuous vigilance.

Why is it called AI?

“AI,” or Artificial Intelligence, is called that because it aims to mimic human intelligence in machines. It focuses on creating systems that can learn, reason, and solve problems – tasks traditionally associated with human thinking. The “artificial” part highlights that this intelligence is not biological, but created through programming and algorithms. Essentially, it’s “fake” intelligence that strives to achieve real intelligence-like results.