Is your daily newsfeed being bombarded with articles you were never interested in? Has another email been flagged as spam while a genuine phishing attempt slips through? These are just a few instances of Artificial Intelligence (AI) silently shaping our daily lives. In times when AI is making a significant impact in every aspect of life, the concept of Explainable AI gains prominence.

AI now has applications across many industries, including healthcare, banking, and transportation. As these systems grow more complex and powerful, understanding how they make decisions and generate results becomes increasingly important.

Trust is paramount in any relationship, and our interaction with AI is no different. If we don’t understand how an AI system arrives at a decision, especially one that impacts us personally, how can we genuinely trust its recommendations or actions? This is where Explainable AI (XAI) steps in, aiming to shed light on the inner workings of these powerful machines.

Table of Contents

- Understanding the Black Box Problem in AI

- What is Explainable AI?

- Why is Explainable AI Important?

- Benefits of Explainable AI

- Explainable AI Techniques

- Challenges of Explainable AI

- Future of Explainable AI

- Transforming Businesses with Innovative AI

- Outsmart Your Competitors with Kanerika’s Cutting-Edge AI Solutions

- FAQs

Understanding the Black Box Problem in AI

AI is the ability of machines to mimic human intelligence: to learn, reason, and make decisions. Within this broad field lies machine learning (ML), the real engine powering many of these experiences. Machine learning algorithms are trained on vast volumes of data, which lets them recognize patterns and predict outcomes without being explicitly programmed for each task.

These machine learning algorithms, particularly the more intricate ones, can become black boxes. They are trained on enormous volumes of data and produce outcomes based on that data, yet it is unclear how those outcomes are derived. This lack of transparency is the main cause of the “black box” problem in AI. It calls into question the reliability, fairness, and credibility of AI systems, particularly in critical applications where decisions may have a big impact on people’s lives or society at large.

What is Explainable AI?

Explainable AI, or XAI, is the ability of AI systems to offer understandable justifications for the decisions they make, so that users can follow and trust the outputs generated by machine learning models. This is essential for fostering credibility, accountability, and acceptance of AI technologies, particularly in high-stakes scenarios where end users need to understand the systems’ underlying decision-making processes.

Why is Explainable AI Important?

Explainable AI addresses ethical and legal concerns, makes machine learning systems easier to understand for a wide range of users, and builds confidence among people who are not AI specialists. Interactive explanations, such as question-and-answer formats, have shown particular promise with these non-specialist audiences.

XAI aims to develop methods that shed light on the decision-making process of AI models. These insights could be expressed in a variety of ways, like outlining the essential elements or inputs that led to a certain result, providing the reasoning behind a choice, or showcasing internal model representations.

The Need for XAI in Modern AI Applications

The increasing intricacy of AI models is a major factor that has driven the need for XAI. As AI systems become more advanced, they often rely on complex neural networks and large quantities of data to make decisions. Although this can result in good predictive performance, it can also complicate our ability to comprehend how these systems produce their outputs.

This challenge is particularly severe in essential applications where the determinations made by AI systems can have important real-world implications. In fields such as healthcare, finance, and law enforcement, ensuring that ethical principles guide AI-powered decision-making and that it is transparent and accountable becomes paramount.

For instance, in medicine, AI-powered diagnostic tools may help doctors make decisions about patients’ health conditions. Without explainability, it is hard to know why a recommendation was made, which raises doubts about whether the system can be trusted or relied upon at all. Similarly, financial institutions use AI algorithms for lending decisions and investment strategies. Without XAI, it would be difficult to know whether these decisions were biased or unfair, and therefore in conflict with regulatory frameworks or ethical standards.

Additionally, there is growing concern that AI systems used in high-stakes situations may perpetuate or exacerbate societal prejudices. However, there is hope. By showing what influences a model’s decision-making process, XAI has the potential to be a powerful tool in rooting out such biases. This supports responsible and ethically sound deployment of AI systems, with the potential to significantly reduce, if not eliminate, harmful impacts on marginalized populations.

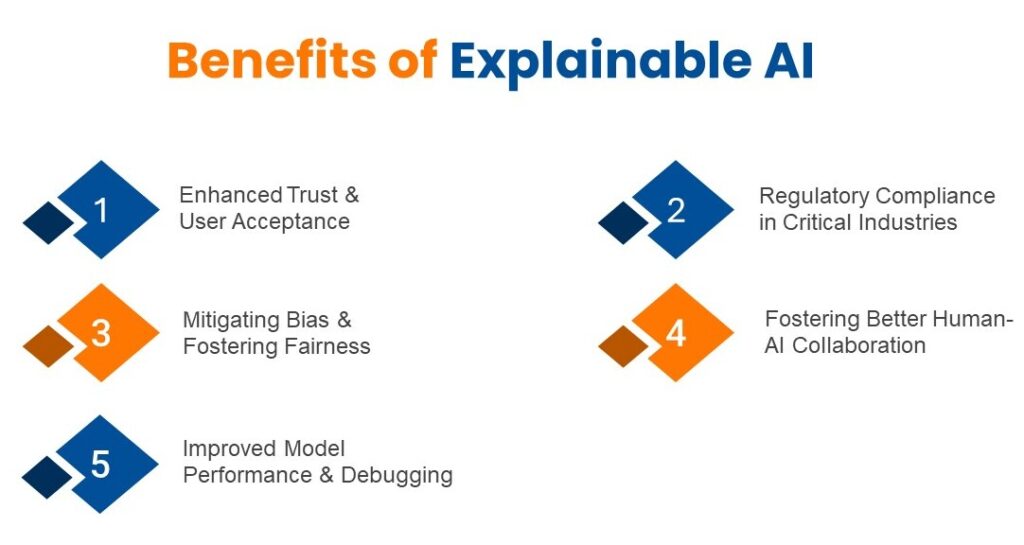

Benefits of Explainable AI

1. Enhanced Trust and User Acceptance

Without understanding how AI systems arrive at their outputs, users naturally hesitate to trust them. XAI techniques bridge this gap by providing clear explanations for recommendations, predictions, and decisions. This fosters trust and confidence, leading to wider adoption and acceptance of AI across various domains.

Imagine receiving a loan denial from an AI model. XAI could explain which factors (e.g., income-to-debt ratio, credit score) contributed most significantly to the decision, allowing you to challenge it if necessary or take steps to improve your eligibility.
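
The loan scenario above can be sketched in a few lines of Python. This is a minimal illustration only: the logistic model, its weights, the feature names, and the "typical applicant" baseline are all hypothetical and are not taken from any real lender or from the systems discussed in this article. For a linear model like this one, each feature’s contribution is simply its weight multiplied by how far the applicant is from the baseline.

```python
import math

# Hypothetical weights of a toy logistic loan model (illustrative only).
WEIGHTS = {"credit_score": 0.01, "debt_to_income": -4.0, "years_employed": 0.15}
BIAS = -5.5

# Hypothetical "typical applicant" used as the comparison point.
BASELINE = {"credit_score": 700, "debt_to_income": 0.30, "years_employed": 5}


def approval_probability(applicant):
    """Logistic model: sigmoid of a weighted sum of the features."""
    z = BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def contributions(applicant):
    """Per-feature shift in log-odds relative to the baseline applicant."""
    return {
        name: WEIGHTS[name] * (applicant[name] - BASELINE[name])
        for name in WEIGHTS
    }


applicant = {"credit_score": 620, "debt_to_income": 0.55, "years_employed": 1}
explanation = contributions(applicant)

# List features from most harmful to most helpful for this applicant.
for name, value in sorted(explanation.items(), key=lambda kv: kv[1]):
    print(f"{name}: {value:+.2f} log-odds")
print(f"approval probability: {approval_probability(applicant):.2f}")
```

An applicant reading this output can see which factor pulled the decision down the most and where improvement would matter. Real credit models are rarely this simple, which is why the dedicated techniques described later in this article exist.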

2. Mitigating Bias and Fostering Fairness

AI models are only as good as the data they’re trained on. Unfortunately, real-world data can often reflect societal biases. This is where XAI techniques come in, empowering us to identify and address such biases within the model. We can detect and mitigate bias by analyzing how features contribute to the final decision, ensuring fairer and more equitable outcomes.

For instance, XAI could reveal a loan approval model that unfairly disadvantages certain demographics based on historical biases in lending practices. This insight allows developers to adjust the model to deliver unbiased results, making the lending process more equitable for all.

3. Improved Model Performance and Debugging

Errors can occur in even the most sophisticated models. Here, XAI becomes an invaluable diagnostic tool that aids in identifying the sources of these mistakes. By analyzing the relationship between features and the model’s output, we can identify flaws in the model, such as overreliance on unimportant features, and improve its overall accuracy and performance.

For instance, XAI methods may show that an image classification model misclassifies some objects because its training dataset was too small. Equipped with this understanding, we can retrain the model on a larger dataset, resulting in more accurate classifications.

4. Regulatory Compliance in Critical Industries

Certain industries, like finance and healthcare, are subject to strict regulations regarding decision-making processes. XAI plays a vital role here by providing auditable explanations for AI-driven decisions. This allows organizations to demonstrate compliance with regulations and ensure responsible AI implementation.

In healthcare, for example, XAI could explain why a medical diagnosis system flagged a patient for further investigation. This provides transparency to doctors and patients alike, fostering trust in the AI-assisted diagnosis process.

5. Fostering Human-AI Collaboration

XAI empowers humans to work more effectively with AI systems. By understanding how AI models arrive at their outputs, human experts can guide and optimize those models. This collaborative approach can unlock the full potential of AI for addressing complex challenges. Imagine researchers using XAI to understand a climate change prediction model. Their insights can then be used to refine the model and generate more accurate predictions for climate change mitigation strategies.

Explainable AI Techniques

There are two main categories of XAI techniques:

Model-agnostic Methods

These methods are designed to work with a wide range of AI models, regardless of their underlying architecture or implementation. They aim to provide explanations independent of the specific model used, making them more versatile and applicable across different AI systems.

Local Interpretable Model-Agnostic Explanations (LIME) is a widely used model-agnostic technique. It perturbs the input data, observes how the model’s output changes, and fits a simpler, interpretable model around the prediction being explained. The result pinpoints the key features influencing that one prediction, which is particularly useful where understanding the decision-making process is crucial, such as in healthcare or finance. Depending on the data type, LIME explanations can be presented as highlighted image regions, highlighted words, or ranked lists of feature weights.
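
The core idea behind LIME can be illustrated with a small, self-contained sketch. It is a simplified, LIME-style procedure and not the LIME library itself: the `black_box` function, the Gaussian perturbations, and the kernel width are assumptions chosen for illustration. The sketch perturbs one instance, weights each perturbed sample by its proximity to that instance, and fits a weighted linear model whose slopes act as local feature importances.

```python
import math
import random


def black_box(x):
    """Stand-in for an opaque model: any function from features to a score."""
    return math.tanh(2.0 * x[0]) + 0.1 * x[1] ** 2


def solve(a, b):
    """Solve the linear system a @ x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Partial pivoting: bring the largest entry in this column to the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def lime_like_explanation(model, instance, n_samples=500, scale=0.5,
                          width=0.75, seed=0):
    """Fit a proximity-weighted linear surrogate around one instance."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        # Perturb the instance and record how the black box responds.
        sample = [v + rng.gauss(0.0, scale) for v in instance]
        dist2 = sum((s - v) ** 2 for s, v in zip(sample, instance))
        rows.append([1.0] + [s - v for s, v in zip(sample, instance)])
        targets.append(model(sample))
        weights.append(math.exp(-dist2 / width ** 2))  # closer samples count more
    k = len(rows[0])
    # Weighted least squares via the normal equations (X^T W X) beta = X^T W y.
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights))
             for j in range(k)] for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets))
            for i in range(k)]
    beta = solve(xtwx, xtwy)
    return beta[1:]  # local slope per feature; beta[0] is the intercept


coefs = lime_like_explanation(black_box, [0.0, 1.0])
print("local feature weights:", [round(c, 3) for c in coefs])
```

Around this particular instance, the first feature receives the larger weight because the toy model is steepest along that axis there. A different instance could produce a different ranking, which is what "local" means in this context.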

SHapley Additive exPlanations (SHAP) is another model-agnostic technique. It uses Shapley values from cooperative game theory to quantify how much each feature contributes to a model’s output. SHAP lets practitioners identify the features that matter most for a single prediction and, by aggregating across many instances, the features that influence predictions globally.
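
To make the game-theory idea concrete, here is a minimal sketch that computes exact Shapley values for a toy model by averaging each feature’s marginal contribution over every possible ordering of the features. The `model` function, the feature names, and the all-zero baseline (used to represent an "absent" feature) are assumptions made for illustration. This is not the SHAP library, which relies on approximations because exact enumeration grows factorially with the number of features.

```python
from itertools import permutations


def model(features):
    """Toy scoring function with an interaction term (hypothetical)."""
    return 3.0 * features["income"] + 2.0 * features["credit"] * features["tenure"]


def shapley_values(predict, instance, baseline):
    """Exact Shapley values: average marginal contribution over all orderings."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)  # start with every feature "absent"
        previous = predict(current)
        for name in order:
            current[name] = instance[name]  # reveal this feature's real value
            value = predict(current)
            totals[name] += value - previous
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}


instance = {"income": 1.0, "credit": 1.0, "tenure": 1.0}
baseline = {"income": 0.0, "credit": 0.0, "tenure": 0.0}
phi = shapley_values(model, instance, baseline)
print(phi)
print("sum of contributions:", sum(phi.values()))
```

Two properties are visible in this example. The contributions add up to the difference between the prediction for the instance and the prediction for the baseline, and the interaction between `credit` and `tenure` is split evenly between the two features.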

Model-specific Methods

Model-specific techniques offer unique benefits. They are tailored to specific types of AI models, leveraging their unique architectural or algorithmic properties to provide detailed explanations. This specificity allows for a deeper understanding of the model’s behavior, which can be particularly valuable in complex models requiring a high level of interpretability.

For example, DeepLIFT (Deep Learning Important FeaTures) is a technique designed specifically for deep neural networks. DeepLIFT compares the activation of each neuron against a “reference” activation value and attributes the difference in the model’s output back to its input features. This helps reveal which inputs actually drive a prediction and which are irrelevant, reducing the risk of misleading interpretations.
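
The reference-comparison idea can be sketched on a tiny network. The example below applies DeepLIFT’s "rescale" rule to a hand-written network with two inputs, two hidden ReLU units, and one output. The weights, the input, and the all-zero reference are hypothetical, and a real application would use a deep learning framework instead of plain Python lists.

```python
def relu(v):
    return max(0.0, v)


# Hypothetical weights for a 2-input, 2-hidden-unit, 1-output ReLU network.
W1 = [[1.0, -1.0], [0.5, 2.0]]  # W1[j][i]: weight from input i to hidden unit j
B1 = [0.0, -1.0]
W2 = [2.0, 1.0]                 # W2[j]: weight from hidden unit j to the output
B2 = 0.5


def forward(x):
    """Return hidden pre-activations, hidden activations, and the output."""
    pre = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(W1, B1)]
    act = [relu(p) for p in pre]
    out = sum(w * a for w, a in zip(W2, act)) + B2
    return pre, act, out


def deeplift_rescale(x, reference):
    """Attribute (output - reference output) to each input via the rescale rule."""
    pre, act, out = forward(x)
    pre_ref, act_ref, out_ref = forward(reference)
    contributions = [0.0] * len(x)
    for j in range(len(W2)):
        delta_pre = pre[j] - pre_ref[j]
        if abs(delta_pre) > 1e-12:
            # Rescale rule: ratio of activation change to pre-activation change.
            multiplier = (act[j] - act_ref[j]) / delta_pre
        else:
            # No change at this unit: fall back to the ReLU gradient.
            multiplier = 1.0 if pre[j] > 0 else 0.0
        for i in range(len(x)):
            contributions[i] += (
                W2[j] * multiplier * W1[j][i] * (x[i] - reference[i])
            )
    return contributions, out - out_ref


contribs, delta_out = deeplift_rescale([2.0, 1.0], [0.0, 0.0])
print("contributions:", contribs)
print("output difference from reference:", delta_out)
```

The contributions sum to the difference between the output for the input and the output for the reference. This "summation-to-delta" property is what distinguishes the approach from simply reading off gradients.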

Grad-CAM (Gradient-weighted Class Activation Mapping) is designed for Convolutional Neural Networks (CNNs) used in image recognition tasks, such as detecting objects in smartphone images. It uses the gradients of the predicted class score to highlight the regions of an input image that were most important to the model’s decision, so users can see why an image was classified the way it was.
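
The step that turns a CNN’s internal signals into a heatmap can be sketched without any deep learning framework. In the example below, the feature maps and gradients are small hypothetical arrays. In practice both would be read from the last convolutional layer of a trained network, and the resulting heatmap would be upsampled to the size of the input image. Each channel is weighted by its average gradient, the weighted channels are summed, and negative values are clipped to zero.

```python
def grad_cam(feature_maps, gradients):
    """Combine feature maps into a heatmap using gradient-derived weights.

    feature_maps[k][r][c]: activation of channel k at position (r, c).
    gradients[k][r][c]: derivative of the class score w.r.t. that activation.
    """
    heatmap = None
    for fmap, grad in zip(feature_maps, gradients):
        cells = [g for row in grad for g in row]
        alpha = sum(cells) / len(cells)  # global-average-pooled gradient
        weighted = [[alpha * a for a in row] for row in fmap]
        if heatmap is None:
            heatmap = weighted
        else:
            heatmap = [
                [h + w for h, w in zip(h_row, w_row)]
                for h_row, w_row in zip(heatmap, weighted)
            ]
    # ReLU keeps only regions that push the class score up.
    return [[max(0.0, v) for v in row] for row in heatmap]


# Two hypothetical 2x2 channels and their gradients.
feature_maps = [
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.0, 3.0], [1.0, 0.0]],
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],      # channel 0 supports the class
    [[-1.0, -1.0], [-1.0, -1.0]],  # channel 1 argues against it
]
heat = grad_cam(feature_maps, gradients)
for row in heat:
    print(row)
```

Only the positions where the supporting channel is active survive in the heatmap. The positions dominated by the opposing channel are clipped to zero.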

Challenges of Explainable AI

Despite its numerous benefits, Explainable AI (XAI) faces significant challenges in development and implementation. Here’s a closer look at some of the crucial obstacles that must be overcome in order to achieve true transparency and trustworthiness for AI:

1. AI Model Complexity

Many advanced AI models, especially those using deep learning techniques, are highly complex. Their decision-making process is characterized by intricate layers of interconnected neurons that humans cannot easily explain or understand. Think about how you would explain to someone the intuition of an experienced chess player who can make brilliant moves based on some internal understanding of the board state. Capturing these complexities in simple explanations has always been challenging for Explainable AI researchers.

2. Trade-Off Between Accuracy and Explainability

Sometimes, there may be an accuracy-explainability trade-off in an AI model. Simpler models tend to be easier to explain inherently, but they may not possess much capability in tackling complex tasks. On the other hand, very accurate models typically come up with their results through complicated computations that cannot be peeled away to reveal what went into them. Striking this fine balance between achieving high performance and providing clear explanations is one of the core challenges of Explainable AI.

3. Interpretability Gap

Explanations created by XAI techniques can be technically correct yet incomprehensible to their intended audience. Explanations based on complex statistical calculations might mean nothing to anyone without a background in data science. XAI must bridge the gap between the technical underpinnings of AI models and the needs of users with varying levels of technical expertise.

4. Bias Challenge

Just as data-driven biases may creep into AI models during training, they can also find their way into XAI explanations. Explanation techniques could inadvertently magnify certain biases, resulting in misinterpretation or unfairness. Effective Explainable AI therefore has to account for bias across the whole AI lifecycle, from data collection to explanation generation.

5. Evolving AI Landscape

The field of AI is always changing, with new models and techniques constantly being developed. Keeping Explainable AI techniques up to date with these advancements can be hard. Researchers need to develop flexible and adaptable XAI frameworks that work across a broad range of existing AI models as well as those not yet created.

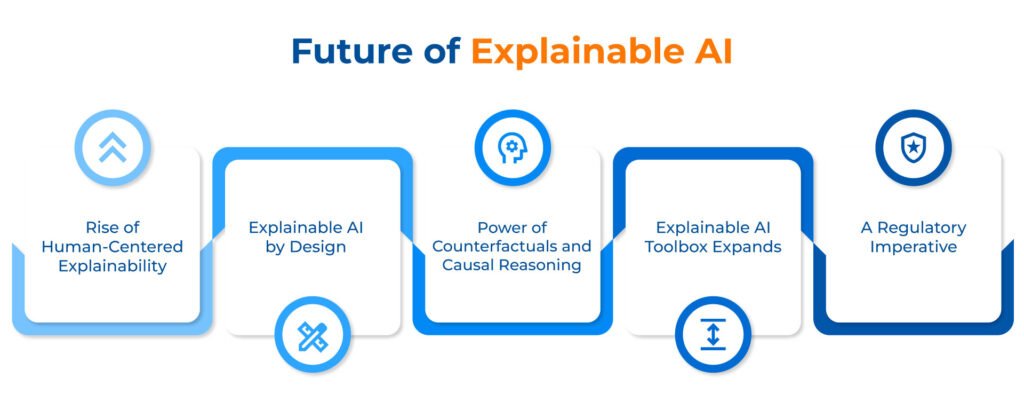

Future of Explainable AI

1. Rise of Human-Centered Explainability

The future of Explainable AI isn’t merely about technical explanations; it is about tailoring them to user-specific needs. Imagine adaptive explanations that adjust to an audience’s level of technical expertise, using interactive visualizations and non-technical vocabulary. This would make XAI accessible to more stakeholders and foster a broader understanding.

2. Explainable AI by Design (XAI-by-Design)

Instead of explaining complex models after the fact, future XAI may involve building inherently explainable models. This ‘XAI-by-Design’ approach would embed explanation techniques directly into AI model architectures, making them innately more transparent. It may entail creating new model architectures that are naturally more interpretable or incorporating interpretable components within intricate models.

3. Power of Counterfactuals and Causal Reasoning

Looking ahead, the XAI landscape will see a significant shift towards counterfactual explanations. These explanations will not only determine how changing input data would impact a model’s output but also provide insights into the causal structure underlying the model’s decisions. This advancement in causal reasoning methods will enable XAI systems to not just answer ‘what’ but also ‘why’, delving deeper into the decision-making process of the model.
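
A counterfactual explanation answers the question "what is the smallest change that would have produced a different outcome?" The sketch below shows the idea with a brute-force search over a single feature. The `approve` rule, its coefficients, and the applicant’s values are hypothetical stand-ins for a trained model, and the search covers only the "what would need to change" part, not causal reasoning.

```python
def approve(applicant):
    """Toy decision rule standing in for a trained model (hypothetical)."""
    score = 0.01 * applicant["credit_score"] - 4.0 * applicant["debt_to_income"]
    return score >= 5.0


def counterfactual(applicant, feature, step, max_steps=1000):
    """Smallest change to one feature, in multiples of step, that gets approval.

    Returns the new feature value, or None if no approval is found.
    """
    candidate = dict(applicant)
    for n in range(max_steps + 1):
        candidate[feature] = applicant[feature] + n * step
        if approve(candidate):
            return candidate[feature]
    return None


applicant = {"credit_score": 625, "debt_to_income": 0.55}
print("approved today:", approve(applicant))
print("credit score needed:", counterfactual(applicant, "credit_score", 10))
print("debt-to-income needed:", counterfactual(applicant, "debt_to_income", -0.05))
```

Each result can be read as a statement such as "the application would have been approved with a credit score of at least this value, all else being equal." Practical counterfactual methods search over several features at once and add constraints so that the suggested changes are realistic.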

4. Explainable AI Toolbox Expands

New approaches and advanced technologies in Explainable AI could also emerge in the future. These improvements might span different areas, such as model-agnostic methodologies that can work across different types of models and model-specific techniques that leverage unique architecture found in particular AI models. Additionally, integrating AI with human expertise to create explanations is a promising direction for the future.

5. A Regulatory Imperative

As artificial intelligence becomes more widely adopted, regulations mandating explainability for certain AI applications are likely to multiply. This will further incentivize the development of robust and reliable XAI techniques. Governments and industry leaders will be instrumental in setting standards and best practices for developing and implementing Explainable AI.

Transforming Businesses with Innovative AI

See how Kanerika revolutionizes the operational efficiency of a leading skincare firm with innovative AI solutions

Outsmart Your Competitors with Kanerika’s Cutting-edge AI Solutions

Looking for an artificial intelligence consultant to take your business to the next level? Look no further than Kanerika, a renowned technology consulting firm that specializes in AI, Machine Learning, and Generative AI. With a proven track record of implementing many successful AI projects for prominent clients in the US and globally, Kanerika’s expertise in AI services is unmatched. Our team’s deep understanding and innovative approach have led to impactful solutions across industries, driving efficiency, accuracy, and growth for our clients.

Our exceptional AI services have garnered recognition, earning Kanerika the prestigious title of “Top Artificial Intelligence Company” by GoodFirms. This reflects our commitment to delivering high-quality, value-driven AI solutions that exceed expectations and drive tangible business outcomes. At Kanerika, we continue to push the boundaries of AI innovation, empowering businesses to thrive in the digital age with transformative AI technologies tailored to their unique needs.

Frequently Asked Questions

What do you mean by Explainable AI?

What are the examples of Explainable AI?

What is the importance of Explainable AI?

What is the difference between generative AI and Explainable AI?

What are the different techniques in XAI?

- Model-agnostic methods: These work for any AI model, like LIME (Local Interpretable Model-agnostic Explanations) which creates a simpler model to explain a complex one's predictions.