Artificial intelligence systems are only as good as the data they’re trained on. IBM has estimated that poor data quality costs the U.S. economy up to $3.1 trillion annually, a reminder that inaccurate or incomplete information can derail even the most advanced AI initiatives. High-quality AI data collection is the foundation for building intelligent systems that are reliable, ethical, and capable of driving real business impact.

Unlike generic data gathering, AI data collection focuses on capturing structured, semi-structured, and unstructured data that is diverse, bias-controlled, and well-labeled. This ensures models can learn accurately, make fair predictions, and adapt to real-world complexity. Without it, AI systems risk failure—from biased hiring algorithms to unreliable chatbots and flawed predictions.

In this blog, we’ll explore what AI data collection is, why it’s vital for successful AI adoption, the challenges organizations face, proven best practices, compliance considerations, and emerging trends shaping the future of intelligent data pipelines.

Key Takeaways

- High-quality, ethical AI data collection is the foundation for building accurate, trustworthy, and impactful AI systems.

- Businesses should balance automation with human oversight to ensure data accuracy, reduce bias, and maintain reliability across AI pipelines.

- Early investment in governance, compliance, and security helps avoid costly regulatory risks and builds long-term trust with customers and stakeholders.

- Modern platforms like Labelbox, AWS SageMaker Ground Truth, and Great Expectations simplify data labeling, validation, and monitoring for AI projects.

- The future of AI data collection will increasingly rely on synthetic data generation, autonomous AI agents, and privacy-first frameworks to meet growing compliance and scalability needs.

What is AI Data Collection?

AI data collection is the process of gathering, organizing, and preparing structured, semi-structured, and unstructured data to train and improve artificial intelligence (AI) and machine learning (ML) models. It goes beyond simply gathering raw information — it ensures that the data is relevant, high-quality, and properly labeled, enabling algorithms to learn patterns, make accurate predictions, and deliver reliable outcomes.

Unlike traditional data gathering, which focuses mainly on storing and retrieving information, AI data collection involves curating datasets with precision and purpose. It emphasizes critical aspects such as data labeling (tagging content for model training), diversity (ensuring representation of different scenarios and demographics), and bias control to prevent unfair or skewed model outputs. This approach helps create balanced and ethical AI systems that perform well across varied real-world conditions.

AI data can come from multiple sources, including text documents, images, videos, audio files, IoT sensors, customer interactions, and even synthetic data generated to fill gaps where real-world examples are limited. By collecting and preparing data thoughtfully, businesses can ensure their AI models achieve higher accuracy, better generalization, and fairer decision-making across applications such as fraud detection, customer personalization, and predictive analytics.

The Importance of High-Quality Data for AI

When it comes to artificial intelligence, the phrase “garbage in, garbage out” couldn’t be more accurate. AI models are only as good as the data they’re trained on. If the training data is incomplete, inaccurate, biased, or poorly structured, the resulting AI system will produce flawed predictions and unreliable outcomes — no matter how advanced the algorithms are.

- Diverse & Balanced Datasets Reduce Bias

Models trained on narrow or unrepresentative data can unintentionally favor certain groups or scenarios. Diverse, balanced datasets help reduce algorithmic bias and ensure fair outcomes for all users.

- Supports Better Decision-Making

Clean and well-structured data allows AI to identify trends and insights accurately, empowering businesses to make data-driven strategic decisions.

- Enables Personalization & Automation

When data is labeled and organized effectively, AI can deliver hyper-personalized experiences, automate workflows, and reduce manual effort across industries like healthcare, retail, and finance.

A well-known example: Amazon scrapped an experimental AI recruiting tool after discovering it was biased against women, because it had been trained on historical hiring data dominated by male candidates. This shows how poor-quality and unbalanced data can create systemic bias in AI systems.

A widely cited estimate, often attributed to Gartner, is that roughly 80% of AI project time is spent on data preparation and management, which underlines how critical data quality is to successful AI outcomes.

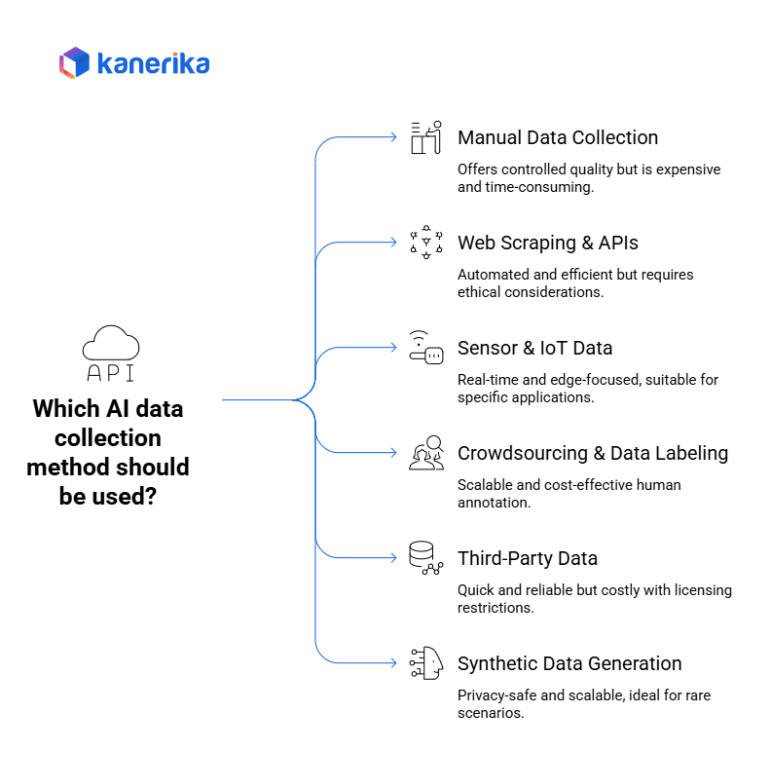

Types of AI Data Collection Methods

Effective AI model development depends on high-quality training data gathered through diverse collection methods.

1. Manual Data Collection

Human-driven approaches include surveys, structured interviews, expert annotations, and direct data entry processes where human judgment and context understanding are essential. This method proves particularly valuable when collecting nuanced information, sensitive data requiring human oversight, or specialized domain knowledge that automated systems cannot capture.

Pros: Exceptional quality control through human verification, rich contextual information, flexibility to adapt collection processes, and ability to gather complex qualitative insights that automated methods miss.

Cons: Extremely time-consuming and labor-intensive, high costs scaling with data volume, potential for human error and bias, and limited scalability for large dataset requirements.

Best Applications: Medical diagnosis annotation, legal document analysis, sentiment analysis requiring cultural context, and specialized technical documentation.

2. Web Scraping & APIs

Automated scraping extracts data from public websites, social media platforms, news sites, and online databases at scale. Modern scraping tools can handle dynamic content, navigate complex site structures, and extract structured data from unstructured sources.

API integrations provide structured, reliable data access through official channels, such as the X (formerly Twitter) API for social media data, financial APIs for market information, and government APIs for public datasets. APIs generally offer cleaner, more stable data than web scraping because the provider defines and maintains the response format.

Best Practices: Always respect robots.txt files and terms of service, implement rate limiting to avoid overwhelming servers, avoid collecting personal or sensitive information without consent, use official APIs when available, and maintain proper attribution for scraped data.

Legal Considerations: Verify compliance with website terms of service, respect copyright and intellectual property rights, and ensure GDPR compliance when collecting data from European sources.
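
The robots.txt and rate-limiting practices above can be sketched with Python's standard library. This is a minimal illustration, not a production crawler: the robots.txt content, the bot name `example-research-bot`, and the example.com URLs are all hypothetical, and the rules are parsed from a string so the sketch runs without network access.

```python
import time
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. Against a live site you would instead call
# parser.set_url("https://<site>/robots.txt") followed by parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-research-bot"  # hypothetical bot name


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules from a string."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed_urls(parser, urls, user_agent=USER_AGENT):
    """Keep only the URLs that robots.txt permits for this user agent."""
    return [u for u in urls if parser.can_fetch(user_agent, u)]


class RateLimiter:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval_s: float):
        self.min_interval_s = min_interval_s
        self._last = None  # time of the previous request, if any

    def wait(self):
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval_s - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


parser = build_parser(ROBOTS_TXT)
candidates = [
    "https://example.com/articles/1",
    "https://example.com/private/users",
]
to_fetch = allowed_urls(parser, candidates)

# Honour Crawl-delay when the site declares one; otherwise fall back to 1 second.
delay = parser.crawl_delay(USER_AGENT) or 1.0
limiter = RateLimiter(delay)

for url in to_fetch:
    limiter.wait()
    print("would fetch", url)  # replace with a real HTTP request
```

Filtering URLs before any request is made keeps disallowed paths out of the crawl queue entirely, and a single limiter object shared by all requests to one host keeps the delay consistent.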

3. Sensor & IoT Data

Real-time streaming data from Internet of Things devices, industrial sensors, and connected equipment provides continuous data flows essential for applications requiring immediate response capabilities. This method excels in autonomous vehicles collecting camera, lidar, and radar data, healthcare devices monitoring patient vitals, and smart manufacturing systems tracking production metrics.

Edge data collection processes information locally on devices before transmission, reducing bandwidth requirements and enabling faster response times. This approach proves critical for applications where latency matters, such as autonomous driving decisions or industrial safety systems.

Challenges: Managing massive data volumes, ensuring data quality from diverse sensor types, handling intermittent connectivity, and maintaining security across distributed devices.
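
To illustrate the edge-processing idea described above, here is a small sketch in which a device summarizes each window of readings locally and would transmit only the summary. The window size, the alert threshold, and the temperature values are assumptions chosen for illustration.

```python
from statistics import mean


def windows(stream, size):
    """Yield consecutive, non-overlapping windows of up to `size` readings."""
    buf = []
    for reading in stream:
        buf.append(reading)
        if len(buf) == size:
            yield buf
            buf = []
    if buf:  # emit the final partial window
        yield buf


def summarize_window(readings, threshold):
    """Reduce a window of raw readings to a compact summary for transmission."""
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        # Flag locally so the device can react without a server round trip.
        "alert": any(r > threshold for r in readings),
    }


# Hypothetical temperature readings from one sensor.
raw = [20.0, 21.0, 22.0, 95.0, 21.0, 20.0]
summaries = [summarize_window(w, threshold=90.0) for w in windows(raw, size=3)]
print(summaries)
```

Six raw readings become two summary messages here. The trade-off is that raw values are lost unless the device also keeps them for later upload.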

4. Crowdsourcing & Data Labeling Platforms

Crowdsourcing platforms like Amazon Mechanical Turk, Appen, and Toloka enable organizations to distribute data labeling tasks across global workforces. These services provide scalable human annotation for image classification, text categorization, audio transcription, and quality verification tasks.

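Cost-effectiveness: Crowdsourcing can cut per-annotation costs substantially compared to in-house teams (savings of 60-80% are commonly cited) while providing faster turnaround through parallel processing across multiple workers. Actual savings depend on task complexity and on how much quality control is needed.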

Quality Control: Implement consensus mechanisms requiring multiple annotators per task, use gold standard test questions to verify worker quality, and establish clear annotation guidelines with examples.

Ethical Considerations: Ensure fair compensation for workers, provide clear task instructions, and respect worker privacy and data security.
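
The quality-control steps above (multiple annotators per task plus gold standard questions) can be sketched in a few lines. The 60% agreement threshold, the task IDs, and the labels are assumptions for illustration. Real platforms expose their own mechanisms for this.

```python
from collections import Counter


def majority_label(labels, min_agreement=0.6):
    """Return the consensus label, or None when agreement is too low."""
    if not labels:
        return None
    label, votes = Counter(labels).most_common(1)[0]
    return label if votes / len(labels) >= min_agreement else None


def gold_accuracy(worker_answers, gold):
    """Fraction of gold questions that a worker answered correctly."""
    scored = [q for q in gold if q in worker_answers]
    if not scored:
        return 0.0
    correct = sum(worker_answers[q] == gold[q] for q in scored)
    return correct / len(scored)


# Hypothetical annotations: three workers labeled each image.
annotations = {
    "img1": ["cat", "cat", "dog"],
    "img2": ["cat", "dog", "bird"],
}
consensus = {task: majority_label(labels) for task, labels in annotations.items()}

# Hypothetical gold questions with known answers, mixed into a worker's queue.
gold = {"g1": "cat", "g2": "dog"}
worker = {"g1": "cat", "g2": "cat", "img1": "cat"}
accuracy = gold_accuracy(worker, gold)

print(consensus, accuracy)
```

Tasks that come back as `None` are candidates for an extra annotator or expert review, and workers whose gold accuracy falls below a chosen bar can be excluded or retrained.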

5. Third-Party Data Providers

Ready-to-use datasets from commercial providers like Snowflake Marketplace, AWS Data Exchange, and specialized data vendors offer immediate access to cleaned, structured, and often pre-processed data. These platforms provide industry-specific datasets, demographic information, financial data, and consumer behavior insights.

Pros: Immediate availability eliminates collection time, professional quality assurance, regular updates and maintenance, legal compliance and licensing clarity, and often includes documentation and support.

Cons: Recurring licensing costs that can be substantial, limited customization options, potential data staleness, restrictions on data usage and sharing, and reduced competitive differentiation when competitors access identical datasets.

Evaluation Criteria: Assess data freshness, coverage completeness, update frequency, licensing terms, and provider reputation before purchase.

6. Synthetic Data Generation

Artificial dataset creation uses AI algorithms to generate realistic training data that mimics real-world patterns without exposing actual sensitive information. This approach proves essential for rare scenarios like unusual medical conditions, edge cases in autonomous driving, and privacy-sensitive applications.

Tools: Synthesis AI for computer vision applications, Mostly AI for tabular data generation, NVIDIA Omniverse for simulation environments, and generative adversarial networks (GANs) for image generation.

Benefits: Strong privacy protection, since synthetic records do not correspond to real individuals (though a generator trained on real data can still leak details and should be tested for this), practically unlimited scalability without collection constraints, ability to create balanced datasets addressing class imbalance issues, and generation of rare edge cases difficult to collect naturally.

Limitations: Generated data may not fully capture real-world complexity, requires validation against actual data to ensure realism, and potential for generating unrealistic patterns if not properly configured.

Use Cases: Healthcare applications where patient privacy is paramount, autonomous vehicle training for rare accident scenarios, fraud detection with limited real fraud examples, and augmenting small datasets to improve model performance.
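
As a toy illustration of using synthetic records to address class imbalance, the sketch below creates new minority-class rows by interpolating between random pairs of existing ones. It is a simplification in the spirit of SMOTE (which interpolates toward nearest neighbors rather than random pairs), not the method used by any of the tools named above, and the fraud feature values are made up.

```python
import random


def interpolate_samples(minority, n_new, seed=0):
    """Create n_new synthetic rows on line segments between random pairs."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples to interpolate")
    rng = random.Random(seed)  # seeded for reproducibility
    synthetic = []
    for _ in range(n_new):
        a, b = rng.sample(minority, 2)
        t = rng.random()  # position along the segment from a to b
        synthetic.append([x + t * (y - x) for x, y in zip(a, b)])
    return synthetic


# Hypothetical fraud examples: [transaction_amount, transactions_last_hour]
fraud_rows = [[100.0, 1.0], [120.0, 3.0], [90.0, 2.0]]
new_rows = interpolate_samples(fraud_rows, n_new=5)
print(new_rows)
```

Every generated value stays within the range of the real samples, which is also the method's limitation: it cannot produce patterns that the original data never contained, so results still need validation against real data.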

Tools & Platforms for AI Data Collection

Selecting the right tools and platforms for AI data collection significantly impacts project success, model quality, and operational efficiency. Modern data collection ecosystems offer specialized solutions for every stage of the data pipeline, from initial collection through validation and storage.

1. Data Labeling & Annotation Tools

- Labelbox provides comprehensive annotation capabilities for computer vision, natural language processing, and audio data with collaborative workflows, quality management features, and model-assisted labeling to accelerate annotation speed. The platform supports image segmentation, object detection, text classification, and entity recognition.

- Scale AI offers high-quality human annotation services combined with automated tools, specializing in autonomous vehicle data, document processing, and complex computer vision tasks. Their managed annotation teams provide enterprise-grade quality assurance with accuracy guarantees.

- Supervisely delivers an end-to-end platform for computer vision projects, featuring advanced annotation tools, dataset management, model training capabilities, and team collaboration features. The platform excels in medical imaging, satellite imagery, and manufacturing quality inspection applications.

2. Data Integration & Pipelines

- Apache Kafka serves as the backbone for real-time data streaming and event-driven architectures, enabling high-throughput message processing and distributed data collection across multiple sources. Kafka excels in handling millions of events per second with low latency.

- Airbyte provides open-source data integration with 300+ pre-built connectors for databases, APIs, and cloud services. The platform simplifies ETL processes with no-code configuration while supporting custom connector development for unique data sources.

- Fivetran automates data pipeline creation with fully managed connectors that handle schema changes, incremental updates, and error recovery. The platform reduces engineering overhead by eliminating custom pipeline maintenance.

3. Data Quality & Validation

- Great Expectations enables automated data quality testing through expectation suites that validate data against defined rules. The platform provides comprehensive documentation, data profiling, and integration with existing data pipelines to catch quality issues early.

- Soda Data offers continuous data quality monitoring with anomaly detection, schema validation, and freshness checks. The platform uses SQL-based rules and machine learning to identify data quality degradation before it impacts model performance.

4. Web Scraping Tools

- Scrapy provides a powerful Python framework for building custom web scrapers with advanced features like concurrent requests, middleware support, and pipeline processing. The open-source tool offers maximum flexibility for complex scraping requirements.

- Octoparse delivers a no-code web scraping solution with visual workflow builders, cloud-based execution, and scheduled scraping capabilities. The platform suits business users needing quick data extraction without programming expertise.

- Bright Data (formerly Luminati) offers enterprise-grade web scraping infrastructure with proxy management, CAPTCHA solving, and legal compliance support. Their services ensure reliable, large-scale data collection from websites with anti-scraping measures.

5. Cloud Platforms

- AWS S3 with SageMaker Ground Truth combines scalable storage with machine learning-powered data labeling, reducing annotation costs through automated pre-labeling and active learning. The integrated ecosystem supports the complete ML lifecycle from data collection to model deployment.

- Google Cloud AI Data Labeling provides managed human labeling services with quality assurance, supporting image classification, object detection, video annotation, and text entity extraction. The platform integrates seamlessly with Vertex AI for model training.

- Azure AI Data Services offers comprehensive data labeling, cognitive services for automated annotation, and secure data storage with enterprise-grade compliance. The platform excels in environments already invested in Microsoft technologies.

Key Selection Considerations

- Scalability requirements vary dramatically from prototype projects handling thousands of records to production systems processing billions of data points. Evaluate whether platforms can grow with your needs without architectural changes.

- Security considerations include data encryption at rest and in transit, access control mechanisms, audit logging, and compliance certifications. Ensure platforms meet your industry-specific security requirements.

- Compliance needs differ by industry and geography. Verify tools support GDPR, HIPAA, SOC 2, or other relevant frameworks. Consider data residency requirements for international operations.

- Cost structures include subscription fees, usage-based pricing, storage costs, and hidden expenses like data egress charges. Calculate total cost of ownership including training, maintenance, and scaling expenses to make informed decisions.

Challenges in AI Data Collection

Building high-performing AI models depends on the quality, diversity, and security of the data collected. However, collecting AI-ready data comes with several challenges that organizations must address to ensure reliable and ethical outcomes.

1. Data Privacy & Compliance Risks

With strict regulations like GDPR, CCPA, and HIPAA, handling sensitive personal or health-related data requires extreme care. Collecting user data without explicit consent or scraping protected content can lead to hefty fines and reputational damage. For instance, regulators have fined companies for scraping personal data from websites without a lawful basis or user consent.

Mitigation: Use data anonymization, encryption, and clear consent management processes. Work with legal teams to ensure compliance throughout the data pipeline.
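
One way to picture the consent and anonymization steps is the sketch below, which drops records without consent, removes fields that are not needed, and replaces direct identifiers with keyed hashes. Note the assumption: keyed hashing is pseudonymization, not full anonymization, because anyone holding the key can recompute the tokens. The field names, the records, and the hard-coded key are hypothetical. A real key belongs in a secrets manager.

```python
import hashlib
import hmac

SECRET_KEY = b"replace-with-a-managed-secret"  # hypothetical; never hard-code in practice


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a stable keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_records(records, identifier_fields, drop_fields, secret_key):
    """Keep consented records only, drop unneeded fields, hash identifiers."""
    prepared = []
    for record in records:
        if not record.get("consent"):
            continue  # no consent, no collection
        clean = {}
        for field, value in record.items():
            if field in drop_fields:
                continue
            if field in identifier_fields:
                clean[field] = pseudonymize(str(value), secret_key)
            else:
                clean[field] = value
        prepared.append(clean)
    return prepared


# Hypothetical raw records.
raw_records = [
    {"email": "ana@example.com", "name": "Ana", "age": 41, "consent": True},
    {"email": "bo@example.com", "name": "Bo", "age": 29, "consent": False},
]
released = prepare_records(
    raw_records,
    identifier_fields={"email"},
    drop_fields={"name"},
    secret_key=SECRET_KEY,
)
print(released)
```

Because the hash is stable, the same person maps to the same token across datasets, which preserves joins while keeping the raw identifier out of the training pipeline.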

2. Bias & Representational Gaps

If data over-represents certain demographics, AI outputs become unfair and discriminatory. For example, facial recognition tools trained mostly on lighter skin tones have historically underperformed on darker skin tones, leading to real-world harm.

Mitigation: Curate balanced datasets, run bias audits, and adopt tools for bias detection and correction to ensure fairness across diverse user groups.
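
A basic bias audit can start with two numbers per group: its share of the dataset and its rate of positive outcomes. The sketch below computes both, plus the gap between the highest and lowest positive rate (often called a demographic parity gap). This is one of many fairness metrics, and the group names, outcome field, and records are hypothetical.

```python
from collections import Counter, defaultdict


def representation(records, group_key):
    """Share of the dataset that each group accounts for."""
    counts = Counter(r[group_key] for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def positive_rate_by_group(records, group_key, outcome_key):
    """Fraction of positive outcomes within each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for r in records:
        totals[r[group_key]] += 1
        if r[outcome_key]:
            positives[r[group_key]] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group positive rate."""
    return max(rates.values()) - min(rates.values())


# Hypothetical hiring data: group A is over-represented and favored.
records = (
    [{"group": "A", "hired": True}] * 3
    + [{"group": "A", "hired": False}] * 3
    + [{"group": "B", "hired": False}] * 2
)
shares = representation(records, "group")
rates = positive_rate_by_group(records, "group", "hired")
gap = parity_gap(rates)
print(shares, rates, gap)
```

A large gap does not prove discrimination on its own, but it tells you where to look before the data reaches a training job.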

3. Data Quality & Noise

Duplicate entries, incomplete records, mislabeled images, and irrelevant data can degrade model performance. Models trained on noisy data show poor accuracy and unpredictable behavior in production.

Mitigation: Implement robust data validation, cleaning pipelines, and use tools that flag anomalies or mislabeled records before they enter training datasets.

4. High Costs & Time Investments

Manual labeling, annotation, and cleansing can consume the bulk of an AI project’s time, by some estimates up to 80%, and a large share of its budget. Tasks like tagging medical images or transcribing audio at scale are expensive and slow.

Mitigation: Leverage semi-supervised learning, synthetic data generation, and crowdsourced labeling platforms to reduce cost and speed up data preparation.

5. Infrastructure Scalability

Handling petabytes of unstructured data (e.g., images, video, sensor data) demands scalable storage and high-speed ingestion. Without robust infrastructure, pipelines become bottlenecks.

Mitigation: Use cloud-based storage, distributed data lakes, and scalable ingestion systems like Apache Kafka or AWS Kinesis to manage massive data volumes efficiently.

| Challenge | Mitigation Strategy |
| --- | --- |
| Privacy Risks | Encryption, anonymization, user consent management |
| Bias | Diverse sampling, bias testing tools |
| Poor Quality | Automated validation & cleansing pipelines |
| High Costs | Semi-supervised learning, synthetic data |
| Scalability | Cloud storage, distributed ingestion systems |

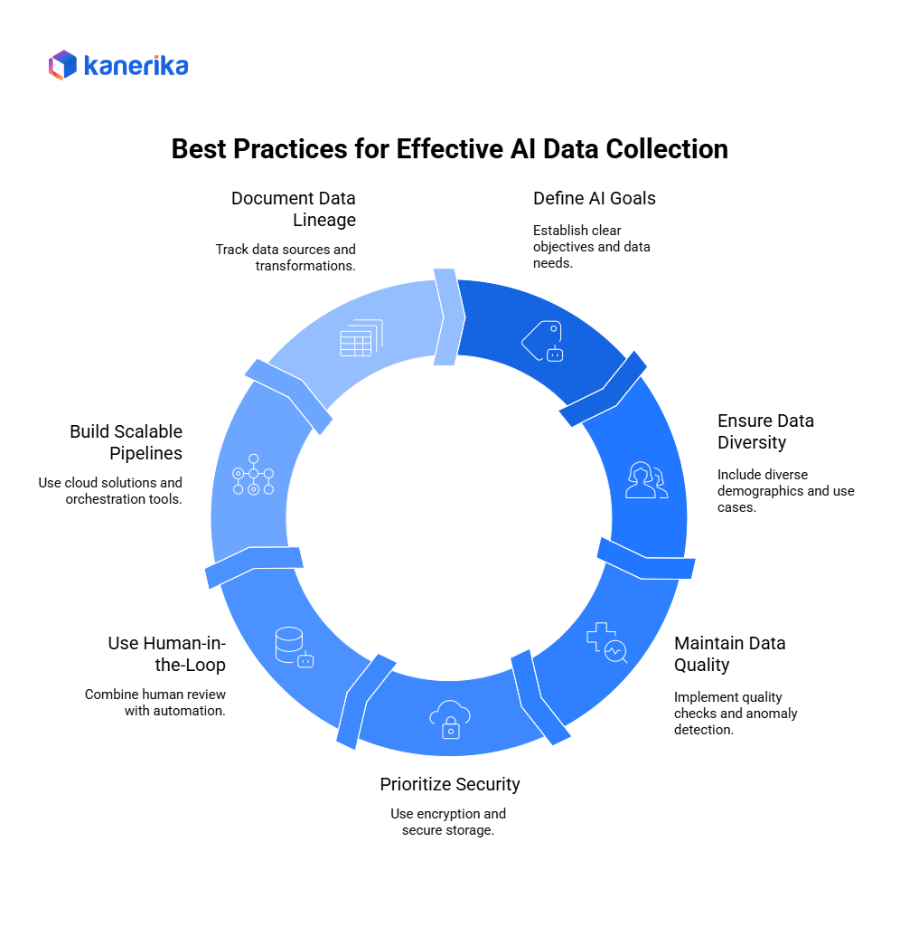

Best Practices for Effective AI Data Collection

Collecting high-quality data is the foundation of any successful AI initiative. Following proven best practices ensures accuracy, fairness, compliance, and scalability while keeping costs under control.

1. Start with Clear AI Goals

Before gathering any data, define what the AI model needs to achieve. Clarify use cases (e.g., fraud detection, image recognition), expected outputs, and the type and volume of data required. This avoids unnecessary collection and ensures the dataset is fit for purpose.

Example: A retail company building a demand forecasting model should collect transaction history, promotions, weather data, and social sentiment — not unrelated customer support tickets.

2. Ensure Data Diversity & Fairness

AI models are only as good as the variety of scenarios they see during training. Include diverse demographics, geographies, languages, and edge cases to avoid bias.

Example: A voice assistant should train on accents from different regions to serve a global user base fairly.

3. Maintain Data Quality with Automated Validation

Low-quality data leads to garbage-in, garbage-out AI models. Use automated validation and anomaly detection to spot missing values, duplicates, mislabeled samples, or outliers early.

Tip: Tools like Great Expectations or built-in data validation frameworks in cloud pipelines help maintain quality.
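
The kinds of checks such tools automate can be shown in plain Python. The sketch below is not the Great Expectations API. It is a hand-rolled illustration of three common rules (required fields, numeric ranges, duplicate keys), with hypothetical field names and rows.

```python
def validate_rows(rows, required, ranges, key):
    """Return a list of (row_index, problem) tuples for rows that break a rule."""
    problems = []
    seen_keys = set()
    for i, row in enumerate(rows):
        # Rule 1: required fields must be present and non-empty.
        for field in required:
            if row.get(field) in (None, ""):
                problems.append((i, f"missing {field}"))
        # Rule 2: numeric fields must fall inside their allowed range.
        for field, (low, high) in ranges.items():
            value = row.get(field)
            if isinstance(value, (int, float)) and not (low <= value <= high):
                problems.append((i, f"{field} out of range"))
        # Rule 3: the key field must be unique across rows.
        row_key = row.get(key)
        if row_key is not None:
            if row_key in seen_keys:
                problems.append((i, f"duplicate {key}"))
            seen_keys.add(row_key)
    return problems


# Hypothetical labeled records.
rows = [
    {"id": 1, "age": 34, "label": "ok"},
    {"id": 2, "age": -5, "label": "ok"},
    {"id": 2, "age": 40, "label": ""},
]
problems = validate_rows(
    rows,
    required=["id", "label"],
    ranges={"age": (0, 120)},
    key="id",
)
print(problems)
```

Running checks like these at ingestion, and failing the pipeline when they trip, keeps bad rows from silently reaching a training set.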

4. Prioritize Security & Compliance

Sensitive data, especially in regulated industries like healthcare or finance, must comply with GDPR, CCPA, HIPAA, and industry regulations. Use encryption at rest and in transit, anonymization, and secure role-based access to protect privacy.

Example: Healthcare providers de-identify patient data before feeding it into AI models, as one part of meeting HIPAA requirements.

5. Use Human-in-the-Loop Systems

Fully automated data pipelines can miss context or edge cases. Combining human review with AI-driven labeling ensures accuracy and relevance while maintaining scalability.

Example: For medical imaging AI, radiologists review a subset of machine-labeled scans to validate and correct annotations.
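
A common way to combine the two is to route machine labels by confidence: low-confidence predictions always go to a human, and a random sample of the confident ones is audited as well. The sketch below assumes each prediction carries a `confidence` score. The 0.9 threshold, the audit rate, and the scan IDs are illustrative.

```python
import random


def route_predictions(predictions, confidence_threshold=0.9, audit_rate=0.1, seed=0):
    """Split machine-labeled items into auto-accepted and human-review queues."""
    rng = random.Random(seed)  # seeded so the audit sample is reproducible
    auto_accepted, needs_review = [], []
    for p in predictions:
        low_confidence = p["confidence"] < confidence_threshold
        # Audit a random share of confident predictions to catch systematic errors.
        if low_confidence or rng.random() < audit_rate:
            needs_review.append(p)
        else:
            auto_accepted.append(p)
    return auto_accepted, needs_review


# Hypothetical model output for three scans.
predictions = [
    {"scan": "s1", "label": "benign", "confidence": 0.98},
    {"scan": "s2", "label": "malignant", "confidence": 0.62},
    {"scan": "s3", "label": "benign", "confidence": 0.91},
]
# audit_rate=0.0 disables random audits so the split depends on confidence only.
accepted, review = route_predictions(predictions, audit_rate=0.0)
print([p["scan"] for p in accepted], [p["scan"] for p in review])
```

Corrections made by reviewers can be fed back as new training examples, which is where the scalability benefit comes from: human effort concentrates on the cases the model finds hardest.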

6. Build Scalable Data Pipelines

Plan for growth by adopting cloud-based storage, distributed processing, and orchestration tools like Apache Airflow or AWS Glue. Scalable infrastructure supports massive data ingestion and real-time updates.

7. Document & Monitor Data Lineage

Track where each dataset originates, how it’s transformed, and how it’s used. Data lineage supports explainability, regulatory audits, and model debugging when things go wrong.

Tip: Tools like Apache Atlas or Microsoft Purview help maintain lineage transparency.
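
At its simplest, lineage tracking means recording, for every transformation, where the data came from and a fingerprint of what went in and what came out. The sketch below is a hand-rolled illustration, not how Apache Atlas or Microsoft Purview work internally. The step names, the source label `crm_export`, and the rows are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone


def fingerprint(rows) -> str:
    """Stable SHA-256 hash of a dataset's content."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class LineageStep:
    name: str
    source: str
    input_hash: str
    output_hash: str
    recorded_at: str


def run_step(name, source, rows, transform, log):
    """Apply a transformation and append a lineage record for it."""
    output = transform(rows)
    log.append(
        LineageStep(
            name=name,
            source=source,
            input_hash=fingerprint(rows),
            output_hash=fingerprint(output),
            recorded_at=datetime.now(timezone.utc).isoformat(),
        )
    )
    return output


lineage_log = []
raw = [{"id": 1, "text": " Hello "}, {"id": 2, "text": ""}]

stripped = run_step(
    "strip_whitespace", "crm_export", raw,
    lambda rows: [{**r, "text": r["text"].strip()} for r in rows],
    lineage_log,
)
cleaned = run_step(
    "drop_empty", "strip_whitespace", stripped,
    lambda rows: [r for r in rows if r["text"]],
    lineage_log,
)
print(cleaned, [step.name for step in lineage_log])
```

Because each step's input hash should equal the previous step's output hash, a break in that chain shows exactly where a dataset was altered outside the pipeline.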

Real-World Examples of AI Data Collection in Action

AI data collection is already reshaping industries by fueling smarter, more adaptive models. Here are some powerful real-world examples:

Example 1 — Tesla’s Autonomous Driving Data Collection

Tesla’s self-driving technology relies on millions of miles of real-world driving data collected through sensors, cameras, and radar installed in its global fleet. Each vehicle acts as a data-gathering node, capturing everything from lane markings and stop signs to unpredictable driver behavior and weather conditions.

This data is continuously uploaded to Tesla’s servers, where it trains and refines the company’s Full Self-Driving (FSD) algorithms. The more people drive, the smarter the system gets — a true crowdsourced fleet learning model. This approach helps Tesla roll out frequent over-the-air updates that make its autonomous features safer and more reliable.

Example 2 — Google Maps & Waze Traffic Predictions

Google Maps and Waze rely heavily on real-time data collection from billions of devices. GPS signals, speed patterns, and user-generated traffic reports are constantly ingested to predict congestion, road closures, and travel times.

This massive flow of crowdsourced location and movement data allows Google to deliver highly accurate route optimization and live traffic updates. It’s a prime example of how big data and user participation create smarter AI-powered navigation.

Example 3 — Healthcare AI (PathAI & Zebra Medical Vision)

Healthcare companies like PathAI and Zebra Medical Vision collect massive libraries of medical images — such as X-rays, CT scans, and pathology slides — which are then annotated by experts. These annotated datasets power AI models that detect diseases, recommend treatments, and reduce diagnostic errors.

By combining human expertise with machine learning, these companies improve early disease detection and patient outcomes, especially in areas like cancer screening and radiology.

Example 4 — E-commerce Personalization (Amazon)

Amazon continuously collects clickstream data, purchase history, product reviews, and browsing patterns to fuel its recommendation engine. Every search, page view, and purchase contributes to models that predict what customers want to buy next.

This real-time behavioral data collection drives personalized recommendations, dynamic pricing, and marketing campaigns — significantly boosting customer satisfaction and sales conversions.

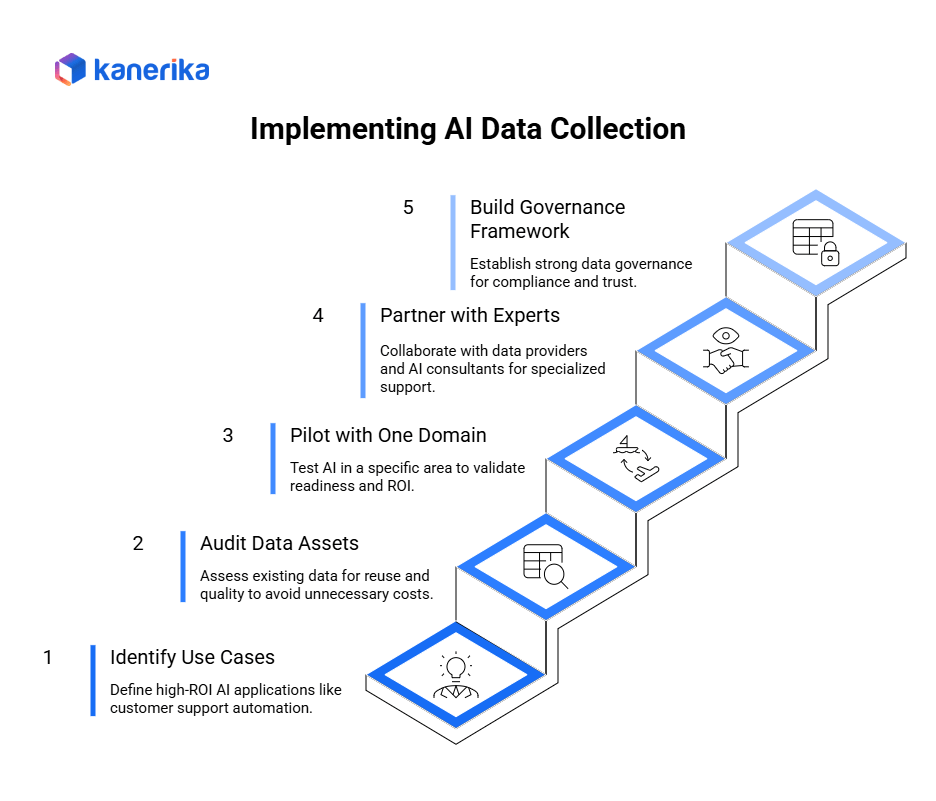

How Businesses Can Get Started with AI Data Collection

Implementing AI data collection doesn’t have to be overwhelming — a structured approach helps organizations scale confidently while staying compliant and cost-efficient.

1. Identify Key AI Use Cases with High ROI Potential

Start by defining where AI can deliver the most value — such as customer support automation, fraud detection, or predictive maintenance. Focus on problems that require data-driven insights and where automation can reduce costs or create new revenue streams.

2. Audit Existing Data Assets Before Buying or Generating New Ones

Many companies already have valuable data spread across CRMs, ERPs, support tickets, and cloud storage. Conduct a data inventory and quality check to understand what can be reused, cleaned, or enriched instead of purchasing expensive third-party datasets upfront.

3. Start Small — Pilot with One Domain

Avoid boiling the ocean. Run a proof of concept (POC) in one area, such as analyzing customer support transcripts or product feedback. This helps validate data readiness, infrastructure needs, and ROI before scaling to other business units.

4. Partner with Data Providers or AI Service Companies

Specialized data collection platforms, labeling services, and AI consulting firms can accelerate your journey. These partners offer expertise in compliance, diversity, and annotation quality, helping reduce time and risk.

5. Build a Governance-First Framework

Ensure privacy, compliance, and explainability from the start. Implement strong access controls, encryption, and clear data lineage tracking to meet regulations like GDPR or HIPAA while maintaining trust and accountability.

Elevate Your Business with Kanerika’s Cutting-Edge AI Data Analysis Solutions

When it comes to harnessing the power of AI for data analysis, Kanerika stands out as a leader in the field. With a team of seasoned experts and a deep understanding of AI technologies, we provide tailored solutions that meet the unique needs of businesses across various industries. From automated data cleaning and preprocessing to advanced machine learning models and real-time analytics, we ensure you get the most out of your data.

Partnering with us means gaining access to state-of-the-art AI tools and technologies, as well as our extensive experience in delivering successful AI projects. We work closely with our clients to understand their specific challenges and goals, developing customized solutions that drive tangible results. Whether you’re looking to improve customer segmentation, enhance predictive maintenance, or gain deeper insights into market trends, our AI solutions can help you achieve your objectives efficiently and effectively.

FAQs

1. What is AI data collection?

AI data collection is the process of gathering, labeling, and preparing structured, semi-structured, and unstructured data to train and improve machine learning models. It focuses on accuracy, diversity, and compliance to ensure reliable AI outputs.

2. How is AI data collection different from traditional data gathering?

Traditional data gathering focuses on storing and reporting data. AI data collection goes further — it requires labeling, balancing for bias, ensuring diversity, and preparing data for training predictive and generative models.

3. Why is high-quality data important for AI models?

AI models learn from data patterns. Inaccurate, biased, or incomplete data leads to poor predictions and unfair outcomes. High-quality, diverse, and well-labeled datasets improve accuracy, fairness, and decision-making.

4. What challenges do companies face in AI data collection?

Key challenges include privacy compliance (GDPR, CCPA), data bias, poor quality or noisy data, high labeling costs, and scaling infrastructure to handle large volumes of data efficiently.

5. What tools help with AI data collection?

Popular tools include Labelbox, AWS SageMaker Ground Truth, Scale AI, Great Expectations (for validation), and Apache Kafka (for real-time data streaming). These simplify labeling, monitoring, and scaling.

6. How can businesses ensure compliance in AI data collection?

Organizations should use data anonymization, secure storage, encryption, and maintain clear consent policies. Regular audits and tracking data lineage help comply with GDPR, HIPAA, and other global regulations.

7. What’s the future of AI data collection?

AI data collection is moving toward synthetic data generation, autonomous agents for automated labeling, and privacy-first frameworks. Hybrid human-in-the-loop models will remain essential for quality and governance.